Log System: ELK Stack Installation and Deployment

Posted by 探索运维


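Foreword: This article was compiled by the editors of 小常识网 (cha138.com) and covers the installation and deployment of the ELK Stack log system. We hope you find it useful.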

ELK Overview

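ELK is the acronym formed from the initials of three open-source projects, Elasticsearch, Logstash, and Kibana. Together they form a complete solution for collecting and displaying logs.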

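Its components are:

Elasticsearch: a distributed search engine that provides three core functions: collecting, analyzing, and storing data.

Logstash: a tool for collecting, parsing, and filtering logs that supports a wide range of data sources.

Kibana: a user-friendly web interface for analyzing the logs handled by Logstash and Elasticsearch, helping you aggregate, analyze, and search important log data.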

ELK Use Cases

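At large scale, typical problems include how to archive a huge volume of logs, what to do when text search is too slow, and how to query across multiple dimensions. This calls for centralized log management that gathers the logs from every server. The usual approach is a centralized log collection system that collects, manages, and provides access to the logs of all nodes in one place.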

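When operating large-scale log systems, ELK can be used for:

Troubleshooting

Monitoring and alerting

Distributed log querying and unified management

Reporting

Security information and event management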

Installation Environment

The ELK packages can be installed online with yum; first add the Elastic yum repository.

1. Download and install the GPG key

```
[root ~]# rpm --import https://packages.elastic.co/GPG-KEY-elasticsearch
```

2. Add the yum repository

```
[root ~]# cat /etc/yum.repos.d/elasticsearch.repo
[elasticsearch-7.x]
name=Elasticsearch repository for 7.x packages
baseurl=https://artifacts.elastic.co/packages/7.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md
```

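PS: Downloads from this repository are very slow from within China, so this walkthrough uses older ELK packages I had saved locally, installed from a self-built local yum repository (you can search Baidu or Google for ELK mirrors hosted in China). Because these are older releases (Elasticsearch reports version 2.3.4 below), some settings and the site plugins shown later apply to that version rather than to the 7.x repository above. The host layout is listed below; due to limited resources, the architecture is kept minimal.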

| IP (hostname) | Software |
| --- | --- |
| 10.0.0.20 (elasticsearch01) | elasticsearch |
| 10.0.0.21 (elasticsearch02) | elasticsearch |
| 10.0.0.23 (kibana01) | kibana |
| 10.0.0.22 (logstash01) | logstash |
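The Elasticsearch configuration later lists the cluster members by hostname (`elasticsearch01`, `elasticsearch02`), so each node must be able to resolve those names. A minimal sketch, assuming resolution through static `/etc/hosts` entries rather than DNS, that renders the entries for the two Elasticsearch nodes in the table:

```python
# Render /etc/hosts entries for the Elasticsearch nodes in the host table.
# Assumption: hostnames are resolved via /etc/hosts, not DNS.
ES_NODES = {
    "elasticsearch01": "10.0.0.20",
    "elasticsearch02": "10.0.0.21",
}


def hosts_lines(nodes: dict[str, str]) -> list[str]:
    """Return one 'IP<TAB>hostname' line per node, ordered by hostname."""
    return [f"{ip}\t{name}" for name, ip in sorted(nodes.items())]


if __name__ == "__main__":
    # Append the printed lines to /etc/hosts on each Elasticsearch node.
    print("\n".join(hosts_lines(ES_NODES)))
```

Kibana and Logstash in this setup reach Elasticsearch by IP address, so only the two Elasticsearch nodes need these entries.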

Elasticsearch

Cluster Installation

Elasticsearch is deployed on two hosts that form a small cluster. The installation steps are as follows.

##First Elasticsearch node

1. Install Java

```
# OpenJDK 8; the RHEL/CentOS package name java-1.8.0-openjdk is assumed
[root@elasticsearch01 ~]# yum install -y java-1.8.0-openjdk
[root@elasticsearch01 ~]# java -version
openjdk version "1.8.0_242"
OpenJDK Runtime Environment (build 1.8.0_242-b08)
OpenJDK 64-Bit Server VM (build 25.242-b08, mixed mode)
```

2. Install and configure Elasticsearch

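```
# install from the local yum repository (command assumed)
[root@elasticsearch01 ~]# yum install -y elasticsearch

# /etc/elasticsearch/elasticsearch.yml
cluster.name: my-elk
node.name: elasticsearch01
path.data: /data/elasticsearch-data
path.logs: /var/log/elasticsearch
# 2.x setting name; 5.x and later call it bootstrap.memory_lock
bootstrap.mlockall: true
network.host: 10.0.0.20
http.port: 9200
# 2.x setting (7.x uses discovery.seed_hosts); the hostnames must resolve on every node
discovery.zen.ping.unicast.hosts: ["elasticsearch01", "elasticsearch02"]

# commands assumed: the custom path.data directory must exist and belong to the elasticsearch user
[root@elasticsearch01 ~]# mkdir -p /data/elasticsearch-data
[root@elasticsearch01 ~]# chown -R elasticsearch:elasticsearch /data/elasticsearch-data
```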

3. Start

```
# service commands assumed (systemd)
[root@elasticsearch01 ~]# systemctl enable elasticsearch
[root@elasticsearch01 ~]# systemctl start elasticsearch
[root@elasticsearch01 ~]# netstat -lntp | grep 9200
tcp6 0 0 10.0.0.20:9200 :::* LISTEN 925/java
[root@elasticsearch01 ~]# curl 10.0.0.20:9200
{
  "name" : "elasticsearch01",
  "cluster_name" : "my-elk",
  "version" : {
    "number" : "2.3.4",
    "build_hash" : "e455fd0c13dceca8dbbdbb1665d068ae55dabe3f",
    "build_timestamp" : "2016-06-30T11:24:31Z",
    "build_snapshot" : false,
    "lucene_version" : "5.5.0"
  },
  "tagline" : "You Know, for Search"
}
```
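The `curl` check can also be scripted. A minimal sketch using only the Python standard library, assuming the node URLs from this article, that fetches `GET /` from a node and confirms that every node reports the same `cluster_name` (nodes with different cluster names will not join each other):

```python
import json
from urllib.request import urlopen


def fetch_root(base_url: str, timeout: float = 5.0) -> str:
    """Return the raw JSON body of GET / on one Elasticsearch node."""
    with urlopen(base_url.rstrip("/") + "/", timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def summarize_root(body: str) -> tuple[str, str, str]:
    """Extract (node name, cluster name, version number) from a GET / response."""
    info = json.loads(body)
    return info["name"], info["cluster_name"], info["version"]["number"]


def same_cluster(bodies: list[str]) -> bool:
    """True when every response reports one and the same cluster_name."""
    return len({summarize_root(body)[1] for body in bodies}) == 1
```

For example, `same_cluster([fetch_root(u) for u in ("http://10.0.0.20:9200", "http://10.0.0.21:9200")])` should return `True` once both nodes are up.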

##Second Elasticsearch node

PS: The second node is installed with exactly the same steps as the first; only the configuration file differs slightly.

```
# /etc/elasticsearch/elasticsearch.yml on elasticsearch02
cluster.name: my-elk
node.name: elasticsearch02
path.data: /data/elasticsearch-data
path.logs: /var/log/elasticsearch
bootstrap.mlockall: true
network.host: 10.0.0.21
http.port: 9200

[root@elasticsearch02 ~]# curl 10.0.0.21:9200
{
  "name" : "elasticsearch02",
  "cluster_name" : "my-elk",
  "version" : {
    "number" : "2.3.4",
    "build_hash" : "e455fd0c13dceca8dbbdbb1665d068ae55dabe3f",
    "build_timestamp" : "2016-06-30T11:24:31Z",
    "build_snapshot" : false,
    "lucene_version" : "5.5.0"
  },
  "tagline" : "You Know, for Search"
}
```
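Since the two nodes' files differ only in a few per-node values, one way to keep them consistent is to render both from a single template. A minimal sketch, assuming the 2.x setting names used in this article; note that it writes the `discovery.zen.ping.unicast.hosts` list on both nodes, which is a common choice but goes beyond the second node's file shown above:

```python
# Render elasticsearch.yml for each node of the two-node cluster.
# Only node.name and network.host vary; everything else is shared.
# Assumption: the unicast host list is emitted on BOTH nodes.


def render_node_config(node_name: str, host_ip: str) -> str:
    """Return the flat YAML text for one node, in the article's key order."""
    settings = [
        ("cluster.name", "my-elk"),
        ("node.name", node_name),
        ("path.data", "/data/elasticsearch-data"),
        ("path.logs", "/var/log/elasticsearch"),
        ("bootstrap.mlockall", "true"),
        ("network.host", host_ip),
        ("http.port", "9200"),
        ("discovery.zen.ping.unicast.hosts", '["elasticsearch01", "elasticsearch02"]'),
    ]
    return "\n".join(f"{key}: {value}" for key, value in settings) + "\n"


if __name__ == "__main__":
    for name, ip in (("elasticsearch01", "10.0.0.20"), ("elasticsearch02", "10.0.0.21")):
        print(f"# --- {name}: /etc/elasticsearch/elasticsearch.yml ---")
        print(render_node_config(name, ip))
```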

##Check the cluster health

```
[root@elasticsearch01 ~]# curl '10.0.0.20:9200/_cluster/health?pretty'
```

In a healthy two-node cluster, the response should show `"number_of_nodes" : 2` and `"status" : "green"`.
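The health check can be automated as well. A minimal sketch, assuming the cluster is reachable at `http://10.0.0.20:9200`, that reads `status` and `number_of_nodes` from `/_cluster/health` and reports anything that does not match a healthy two-node cluster:

```python
import json
from urllib.request import urlopen


def get_health(base_url: str, timeout: float = 5.0) -> dict:
    """GET /_cluster/health and return the decoded JSON response."""
    with urlopen(f"{base_url.rstrip('/')}/_cluster/health", timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def check_health(health: dict, expected_nodes: int) -> list[str]:
    """Return a list of problems; an empty list means the cluster looks healthy."""
    problems = []
    status = health.get("status")
    if status != "green":
        problems.append(f"status is {status!r}, expected 'green'")
    nodes = health.get("number_of_nodes")
    if nodes != expected_nodes:
        problems.append(f"{nodes} node(s) joined, expected {expected_nodes}")
    return problems
```

For example, `check_health(get_health("http://10.0.0.20:9200"), expected_nodes=2)` returns an empty list when all is well. A `yellow` status means all primary shards are allocated but some replicas are not, which is typical when only one node holds data.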

-----At this point the Elasticsearch cluster is installed.

##Elasticsearch plugins

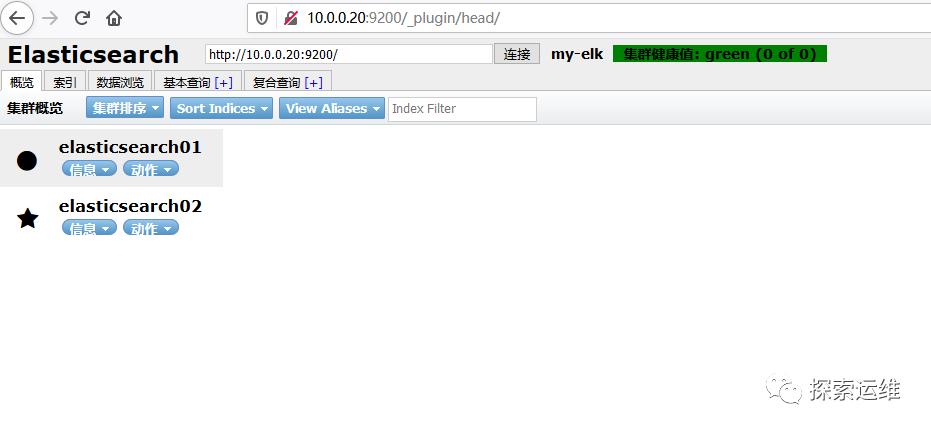

1. Cluster management plugin (head)

```
[root@elasticsearch01 ~]# cd /usr/share/elasticsearch/bin/
[root@elasticsearch01 bin]# ./plugin install ftp://10.0.0.240/elk/elasticsearch-head-master.zip
```

---Open in a browser: http://10.0.0.20:9200/_plugin/head/

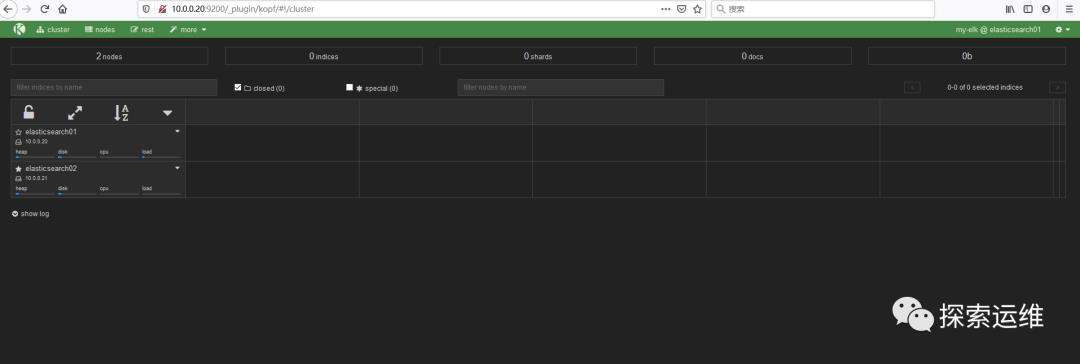

2. Install the monitoring plugin (kopf)

```
[root@elasticsearch01 bin]# ./plugin install ftp://10.0.0.240/elk/elasticsearch-kopf-master.zip
```

---Open in a browser: http://10.0.0.20:9200/_plugin/kopf

Kibana Installation

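Kibana is an open-source analytics and visualization platform designed for Elasticsearch. You can use it to search, view, and interact with data stored in Elasticsearch indices.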

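1. Install Java (optional on this host: Kibana runs on its own bundled Node.js, which is why the listening process below is `node`)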

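```
# same as on the Elasticsearch nodes (package name assumed)
[root@kibana01 ~]# yum install -y java-1.8.0-openjdk
[root@kibana01 ~]# java -version
```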

2. Install and configure Kibana

```
# install from the local yum repository (command assumed)
[root@kibana01 ~]# yum install -y kibana

# kibana.yml
server.port: 5601
server.host: 10.0.0.23
# Elasticsearch address
elasticsearch.url: http://10.0.0.20:9200
kibana.index: ".kibana"
kibana.defaultAppId: "discover"
elasticsearch.pingTimeout: 1500
elasticsearch.requestTimeout: 30000
elasticsearch.startupTimeout: 5000
```

3. Start

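```
# service commands assumed (systemd)
[root@kibana01 ~]# systemctl enable kibana
[root@kibana01 ~]# systemctl start kibana
[root@kibana01 ~]# netstat -lntp | grep 5601
tcp 0 0 10.0.0.23:5601 0.0.0.0:* LISTEN 617/node
```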
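`netstat` only shows that Kibana is listening on its own host; Kibana must also reach Elasticsearch on port 9200, and users' browsers must reach port 5601. A minimal sketch of a TCP reachability check, using the addresses from this article, meant to run from whichever machine needs that access:

```python
import socket

# Endpoints taken from this article's setup.
ENDPOINTS = [("10.0.0.20", 9200), ("10.0.0.21", 9200), ("10.0.0.23", 5601)]


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, host unreachable, or timed out
        return False
```

For example, evaluate `[(h, p, port_open(h, p)) for h, p in ENDPOINTS]` on the Kibana host and again from a workstation. A TCP connect only proves the port is reachable; the `curl` checks above confirm the service behind it actually answers.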

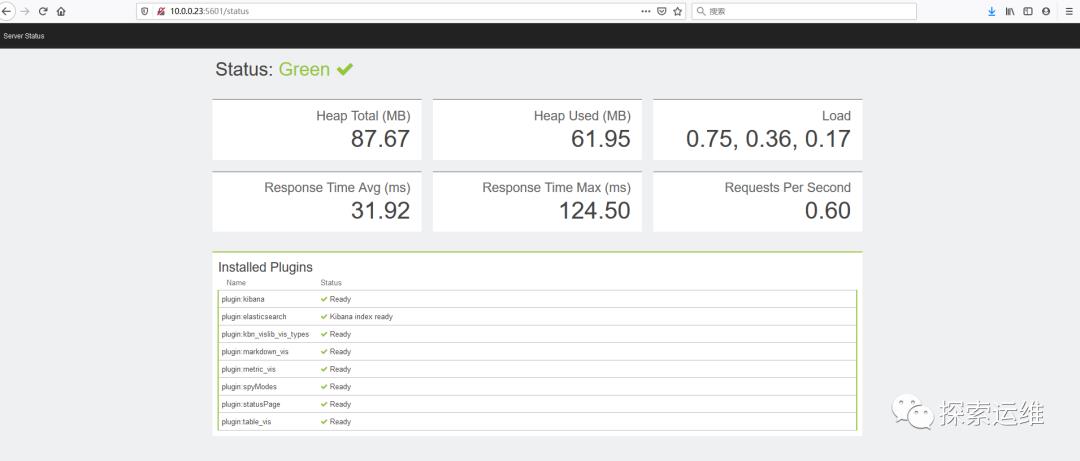

---Open in a browser: http://10.0.0.23:5601/

##When you open it in a browser, all-green status indicators mean the installation succeeded.

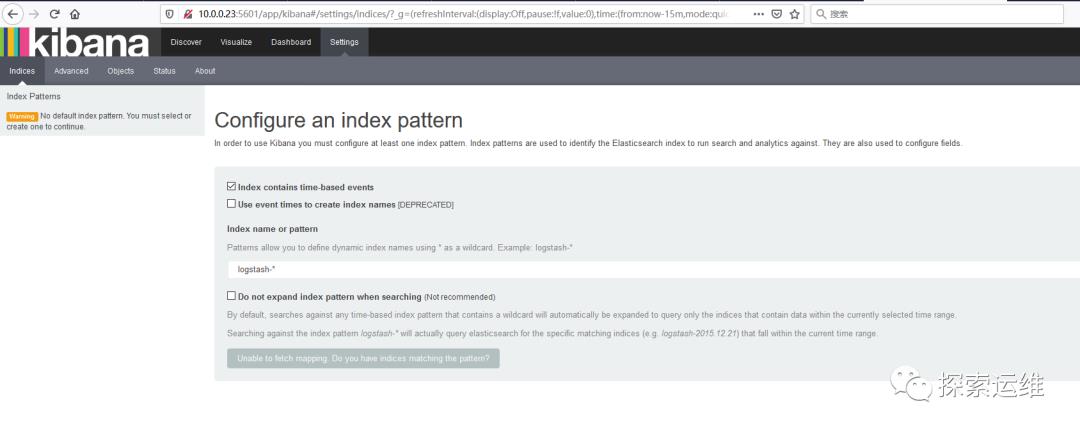

##The main page has no index information yet; it will be configured after an index is created later.

Logstash Installation

Basic flow of log processing in Logstash: input --> codec --> filter --> codec --> output

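1. input: where logs are collected from.

2. filter: processes and filters events before they are sent on.

3. output: where events are sent, for example Elasticsearch or a Redis message queue.

4. codec: encodes or decodes the data as part of an input or output, for example `json`, or `rubydebug` for readable console output.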
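To make the stages above concrete, here is a toy model of the data flow in Python. It only illustrates how an event passes through input, codec, filter, codec, and output; it is not how Logstash is implemented, and the filter and its `message_length` field are hypothetical:

```python
import json
from typing import Callable, Iterable

# Toy model: input -> codec (decode) -> filter(s) -> codec (encode) -> output.
Event = dict


def plain_decode(raw: str) -> Event:
    """Input codec: wrap a raw line in an event under 'message', as the plain codec does."""
    return {"message": raw}


def json_decode(raw: str) -> Event:
    """Input codec for JSON lines, comparable to codec => "json"."""
    return json.loads(raw)


def add_length(event: Event) -> Event:
    """Hypothetical filter that enriches the event with a new field."""
    event["message_length"] = len(event.get("message", ""))
    return event


def pretty_encode(event: Event) -> str:
    """Output codec: readable multi-line text, in the spirit of rubydebug."""
    return json.dumps(event, indent=2, sort_keys=True)


def run_pipeline(
    lines: Iterable[str],                     # input: where events come from
    decode: Callable[[str], Event],           # input codec
    filters: list[Callable[[Event], Event]],  # filter stage
    encode: Callable[[Event], str],           # output codec
    output: Callable[[str], None],            # output: where events go
) -> None:
    for line in lines:
        event = decode(line.rstrip("\n"))
        for apply_filter in filters:
            event = apply_filter(event)
        output(encode(event))
```

`run_pipeline(["hello\n"], plain_decode, [add_length], pretty_encode, print)` mirrors the stdin/rubydebug test below; real Logstash events also carry fields such as `@timestamp` and `@version`.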

1. Install Java

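```
# same as on the Elasticsearch nodes (package name assumed)
[root@logstash01 ~]# yum install -y java-1.8.0-openjdk
[root@logstash01 ~]# java -version
```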

2. Install and configure Logstash

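```
# install from the local yum repository (command assumed)
[root@logstash01 ~]# yum install -y logstash

# test pipeline: read events from standard input, print them with the rubydebug codec
input {
  stdin { }
}
output {
  stdout { codec => rubydebug }
}
```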

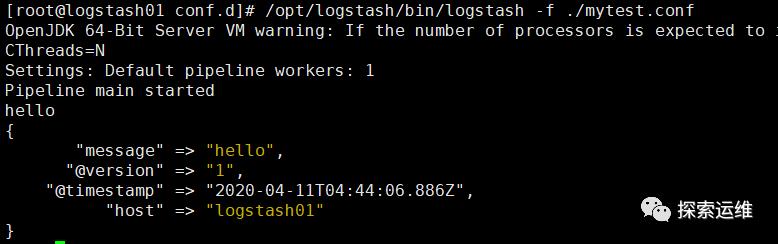

3. Start and test

Run Logstash in the foreground with this test configuration (`logstash -f <config file>`).

##Type hello, and Logstash prints the resulting event to the console.

Collecting nginx logs with Logstash

Logstash collects the nginx logs and sends them to Elasticsearch, and Kibana displays them in its web front end.

1. Install Nginx

----The installation process is shown below:

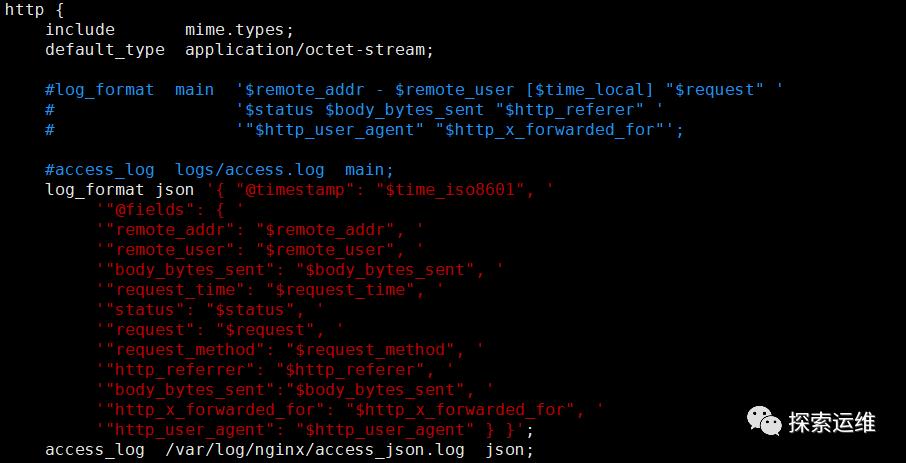

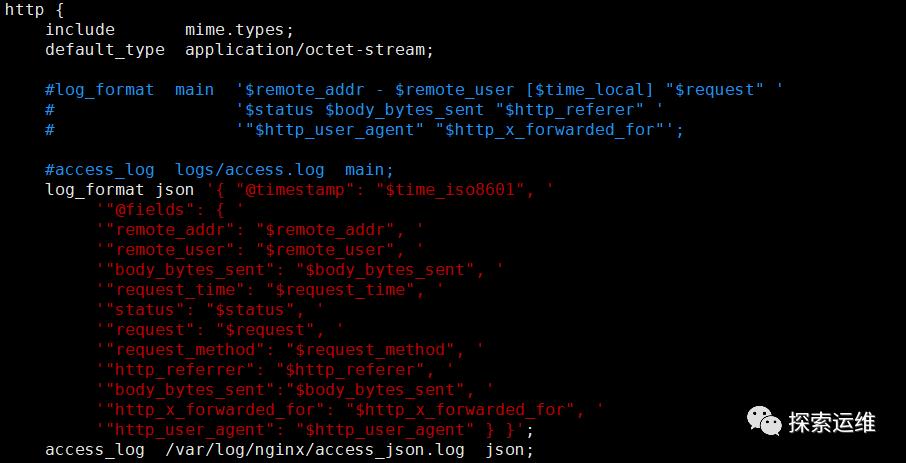

2. Configure nginx to write JSON logs

```
# in the http { } block of nginx.conf
# (nginx 1.11.8+ also accepts "log_format json escape=json ..." to escape values as JSON)
log_format json '{ "@timestamp": "$time_iso8601", '
                '"@fields": { '
                '"remote_addr": "$remote_addr", '
                '"remote_user": "$remote_user", '
                '"body_bytes_sent": "$body_bytes_sent", '
                '"request_time": "$request_time", '
                '"status": "$status", '
                '"request": "$request", '
                '"request_method": "$request_method", '
                '"http_referrer": "$http_referer", '
                '"http_x_forwarded_for": "$http_x_forwarded_for", '
                '"http_user_agent": "$http_user_agent" } }';
access_log /var/log/nginx/access_json.log json;
```
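Logstash's `json` codec expects each line of `access_json.log` to be one valid JSON object; lines that fail to parse are typically indexed with a `_jsonparsefailure` tag instead of structured fields. Two things commonly break the format: a repeated key (Python's `json.loads`, like many parsers, silently keeps the last value) and nginx's default escaping, which writes characters such as `"` as `\xXX`, a sequence JSON does not allow (nginx 1.11.8+ offers `escape=json` to avoid this). A minimal validation sketch with the Python standard library:

```python
import json


def _reject_duplicates(pairs: list[tuple[str, object]]) -> dict:
    """object_pairs_hook that raises on a repeated key instead of keeping the last value."""
    obj = {}
    for key, value in pairs:
        if key in obj:
            raise ValueError(f"duplicate key: {key}")
        obj[key] = value
    return obj


def validate_line(line: str) -> dict:
    """Strictly parse one log line; raises ValueError on bad JSON or duplicate keys."""
    return json.loads(line, object_pairs_hook=_reject_duplicates)


def bad_lines(path: str) -> list[tuple[int, str]]:
    """Return (line number, error message) for every line that fails validation."""
    problems = []
    with open(path, encoding="utf-8", errors="replace") as fh:
        for lineno, line in enumerate(fh, start=1):
            try:
                validate_line(line)
            except ValueError as exc:  # json.JSONDecodeError is a ValueError subclass
                problems.append((lineno, str(exc)))
    return problems
```

After reloading nginx and sending a few requests, `bad_lines("/var/log/nginx/access_json.log")` should return an empty list.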

3. Create the Logstash configuration file

```
input {
  file {
    type => "access_nginx"
    path => "/var/log/nginx/access_json.log"
    codec => "json"
  }
}
output {
  elasticsearch {
    hosts => ["http://10.0.0.20:9200"]
    index => "nginxlog"
  }
}
```
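The `elasticsearch` output does not write documents one at a time; it batches events and sends them through Elasticsearch's `_bulk` API. A minimal sketch of what such a request body looks like, built from already-decoded nginx events. It only illustrates the wire format and is no replacement for Logstash, which also tracks its read position in the file and retries failed batches:

```python
import json
from typing import Iterable, Optional
from urllib.request import Request, urlopen


def build_bulk_body(events: Iterable[dict], index: str, doc_type: Optional[str] = None) -> str:
    """Build a newline-delimited body for POST /_bulk: an action line, then the document."""
    lines = []
    for event in events:
        action = {"_index": index}
        if doc_type is not None:
            # Elasticsearch 2.x needs a mapping type; 7.x deprecates custom types.
            action["_type"] = doc_type
        lines.append(json.dumps({"index": action}))
        lines.append(json.dumps(event))
    # The bulk API requires the body to end with a newline.
    return "\n".join(lines) + "\n"


def send_bulk(base_url: str, body: str, timeout: float = 10.0) -> dict:
    """POST the body to /_bulk and return the decoded response."""
    req = Request(
        f"{base_url.rstrip('/')}/_bulk",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/x-ndjson"},
        method="POST",
    )
    with urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, `build_bulk_body(events, "nginxlog", "access_nginx")` produces a body comparable to what the output sends; the `_type` value here is an assumption for 2.x. The response's top-level `errors` field tells you whether any document was rejected.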

4. Start

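Start Logstash with this configuration file (`logstash -f <config file>`), then send a few requests to nginx so that new entries are written to /var/log/nginx/access_json.log; by default the file input only reads lines appended after Logstash starts.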

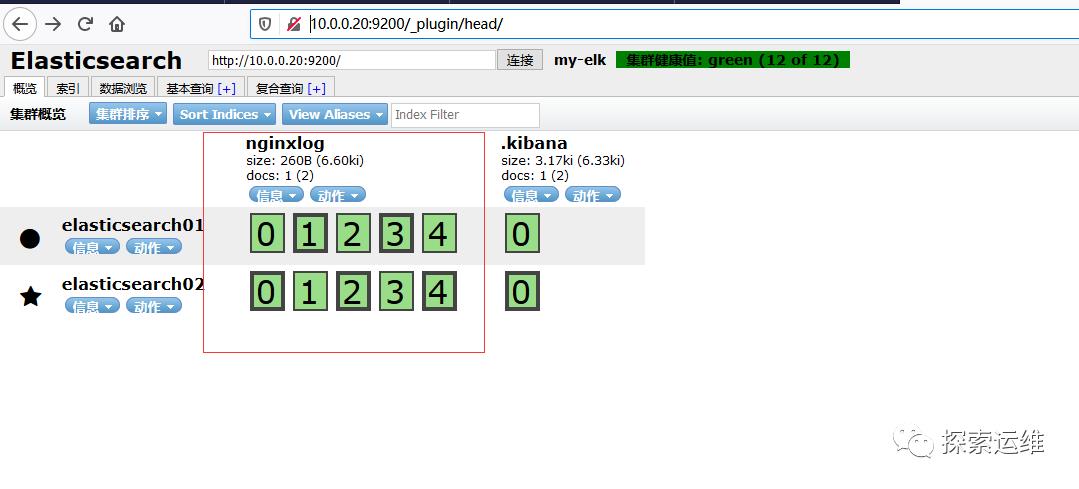

##Open the Elasticsearch head plugin to see the newly created index.

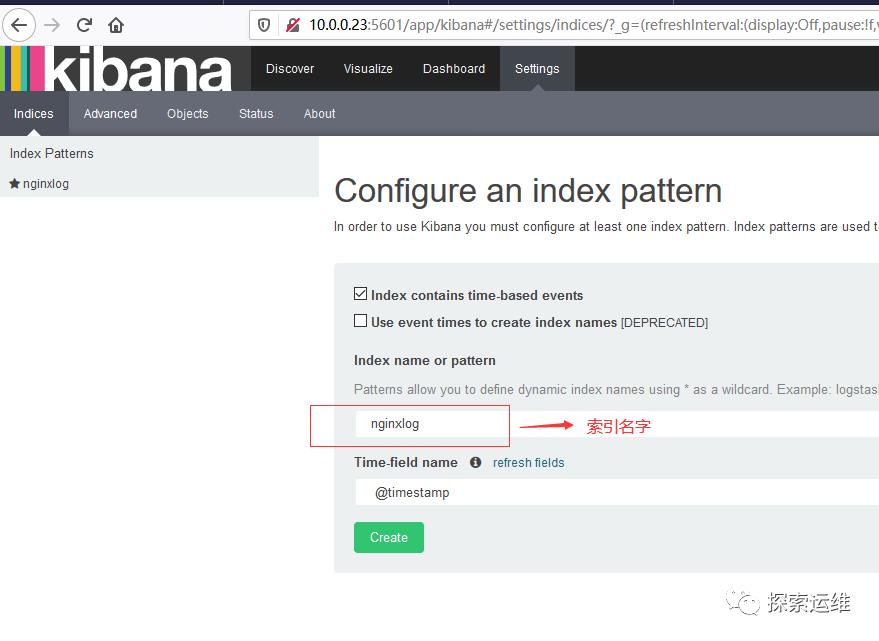

##Open Kibana and configure an index pattern for the nginxlog index.

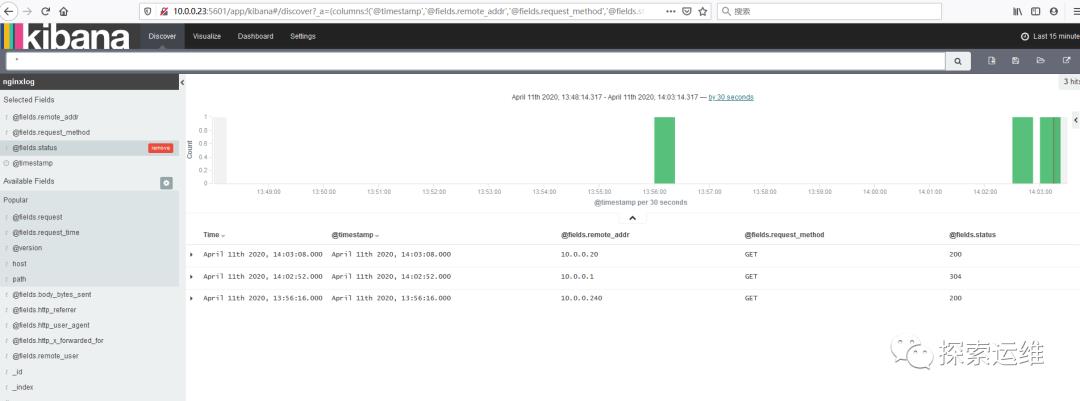

##Once configured, nginx access-log entries show up in Kibana's visualization pages, where you can search and interact with them further.
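Kibana's search bar ultimately runs queries against the `nginxlog` index, and the same questions can be asked directly through the `_search` REST API, which is handy for scripts and alerts. A minimal sketch, assuming the `@fields.status` field produced by the nginx `log_format` in this article, that finds requests with a given status code:

```python
import json
from urllib.request import Request, urlopen


def status_query(status: str, size: int = 10) -> dict:
    """Query DSL body: up to `size` documents whose @fields.status matches `status`."""
    return {"size": size, "query": {"match": {"@fields.status": status}}}


def search(base_url: str, index: str, body: dict, timeout: float = 10.0) -> dict:
    """POST a query to /<index>/_search and return the decoded response."""
    req = Request(
        f"{base_url.rstrip('/')}/{index}/_search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def hit_sources(response: dict) -> list[dict]:
    """Return the original documents (_source) from a search response."""
    return [hit["_source"] for hit in response.get("hits", {}).get("hits", [])]
```

For example, `hit_sources(search("http://10.0.0.20:9200", "nginxlog", status_query("404")))` lists up to ten logged requests that returned 404.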

If anything needs to be added or could be improved, please leave a comment so we can discuss it. Thanks!
