Flume Study Notes: A Distributed Log Collection Framework

Posted in Data Analysis



Analysis of the current business situation

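We have many servers and systems, such as network devices, operating systems, web servers, and applications, and they all produce logs and other data. How can we make use of this data? One option is to move the log data from the source systems onto a distributed storage and computing framework for processing. But how do we do that?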

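A naive approach is to copy the files with shell `cp` onto a machine in the Hadoop cluster and then run `hadoop fs -put …`. This raises a whole series of problems: fault tolerance, load balancing, high latency, compression, and so on.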

Flume moves data from side A to side B, and by writing configuration files you can cover most application scenarios.

Flume overview

Flume is a distributed, reliable, and available service for efficiently collecting, aggregating, and moving large amounts of log data.

webserver (source side) ===> flume ===> hdfs (destination)

Flume architecture and core components

Source: collects the data

Channel: aggregates (buffers) the data between source and sink

Sink: outputs the data to its destination
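To make the division of labour between the three components concrete, here is a minimal in-process sketch, assuming a Python `queue.Queue` as a stand-in for a memory channel. It is not Flume code: the function name `run_agent` and its `capacity` parameter are hypothetical, and a real Flume agent runs sources and sinks on separate threads and moves events through the channel inside transactions.

```python
import queue
from typing import Iterable, List


def run_agent(lines: Iterable[str], capacity: int = 100) -> List[bytes]:
    """Toy model of an agent: source -> channel -> sink (hypothetical helper)."""
    # queue.Queue plays the role of a memory channel with a fixed capacity.
    channel = queue.Queue(maxsize=capacity)
    delivered: List[bytes] = []

    def source(line: str) -> None:
        # The "source" turns raw input into an event body and buffers it.
        channel.put(line.encode("utf-8"))

    def sink() -> None:
        # The "sink" drains the channel and writes events to their destination
        # (a Python list here, instead of the console or HDFS).
        while not channel.empty():
            delivered.append(channel.get())

    for line in lines:
        source(line)
        # Drain when the buffer is full so the next put() never blocks.
        if channel.full():
            sink()
    sink()
    return delivered


print(run_agent(["hello", "world"]))
```

The channel is what decouples the two ends: the source can keep accepting input while the sink is slow, up to the channel's capacity.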

Flume environment deployment

Prerequisites for installing Flume (version Flume 1.7.0):

Java Runtime Environment - Java 1.7 or later

Memory - Sufficient memory for configurations used by sources, channels or sinks

Disk Space - Sufficient disk space for configurations used by channels or sinks

Directory Permissions - Read/Write permissions for directories used by agent

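Install the JDK: download it, extract it to the target directory, add it to the system environment variables in ~/.bash_profile, run `source` so the configuration takes effect, and verify with `java -version`.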

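Install Flume: download it, extract it to the target directory, add it to the system environment variables in ~/.bash_profile, run `source` so the configuration takes effect, edit the configuration file $FLUME_HOME/conf/flume-env.sh to set JAVA_HOME for Flume, and verify with `flume-ng version`.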

Flume hands-on examples

Requirement 1: collect data from a specified network port and output it to the console.

Technology choice: netcat source + memory channel + logger sink.

The key to using Flume is writing the configuration file:

Configure the source

Configure the channel

Configure the sink

Wire the three components together

a1: the agent name; r1: the source name; k1: the sink name; c1: the channel name

    # example.conf: A single-node Flume configuration
    # Name the components on this agent
    a1.sources = r1
    a1.sinks = k1
    a1.channels = c1
    # Describe/configure the source
    a1.sources.r1.type = netcat
    a1.sources.r1.bind = 192.168.169.100
    a1.sources.r1.port = 44444
    # Describe the sink
    a1.sinks.k1.type = logger
    # Use a channel which buffers events in memory
    a1.channels.c1.type = memory
    # Bind the source and sink to the channel
    a1.sources.r1.channels = c1
    a1.sinks.k1.channel = c1

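Note: a source can write to multiple channels, but a sink can read from only one channel. That is why the source property is `channels` (plural) while the sink property is `channel` (singular):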

    a1.sources.r1.channels = c1
    a1.sinks.k1.channel = c1
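The wiring rule in the note above is easy to check mechanically before starting an agent. Below is a minimal sketch, assuming the configuration only uses simple `key = value` lines and `#` comments (real Flume reads Java properties files, which also allow `:` separators and line continuations that this parser ignores). `parse_properties` and `check_wiring` are hypothetical helpers, not part of Flume.

```python
from typing import Dict, List


def parse_properties(text: str) -> Dict[str, str]:
    """Parse simple 'key = value' lines, skipping blank lines and # comments."""
    props: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def check_wiring(text: str, agent: str) -> List[str]:
    """Return a list of wiring problems for one agent (empty list = looks OK)."""
    props = parse_properties(text)
    declared = set(props.get(f"{agent}.channels", "").split())
    problems: List[str] = []
    # Sources: one or more channels (fan-out is allowed).
    for src in props.get(f"{agent}.sources", "").split():
        chans = props.get(f"{agent}.sources.{src}.channels", "").split()
        if not chans:
            problems.append(f"source {src} has no channels")
        problems += [f"source {src} uses undeclared channel {c}"
                     for c in chans if c not in declared]
    # Sinks: exactly one channel.
    for sink in props.get(f"{agent}.sinks", "").split():
        chans = props.get(f"{agent}.sinks.{sink}.channel", "").split()
        if len(chans) != 1:
            problems.append(f"sink {sink} must have exactly one channel, got {chans}")
        elif chans[0] not in declared:
            problems.append(f"sink {sink} uses undeclared channel {chans[0]}")
    return problems
```

Running `check_wiring(open("example.conf").read(), "a1")` on the configuration above should return an empty list; listing two channels on `a1.sinks.k1.channel` would be reported as a problem.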

Start the agent:

    # bin/flume-ng agent -n $agent_name -c conf -f conf/flume-conf.properties.template
    bin/flume-ng agent \
      --name a1 \
      --conf $FLUME_HOME/conf/myconf \
      --conf-file $FLUME_HOME/conf/myconf/example.conf \
      -Dflume.root.logger=INFO,console

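Set up the telnet client and server (only the telnet client is needed to connect to the netcat source; the server setup is optional):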

    rpm -qa | grep telnet
    yum list | grep telnet
    yum install -y telnet telnet-server
    # Make the telnet service start by default (optional)
    cd /etc/xinetd.d
    cp telnet telnet.bak
    vi telnet    # change the line to: disable = no
    # Start telnet and verify
    service xinetd start
    telnet localhost

Test with telnet:

    telnet 192.168.169.100 44444
    # then type each line into the telnet session:
    hello
    world
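If telnet is not available, a small Python client can play the same role. This is a sketch that assumes the netcat source's default behaviour: each newline-terminated line becomes one event, and with the default `ack-every-event` setting the source answers each line with `OK`. The helper names `encode_lines` and `send_lines` are hypothetical.

```python
import socket
from typing import Iterable, List


def encode_lines(lines: Iterable[str]) -> bytes:
    # The netcat source splits incoming bytes on newlines: one event per line.
    return b"".join(line.encode("utf-8") + b"\n" for line in lines)


def send_lines(host: str, port: int, lines: List[str], timeout: float = 5.0) -> List[str]:
    """Send lines to a netcat-style source and read one reply line per event."""
    replies: List[str] = []
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(encode_lines(lines))
        with sock.makefile("r", encoding="utf-8") as reader:
            for _ in lines:
                replies.append(reader.readline().rstrip("\n"))
    return replies


if __name__ == "__main__":
    # Assumes the agent from example.conf is running on this address.
    print(send_lines("192.168.169.100", 44444, ["hello", "world"]))
```

With the agent running, the agent console should log one event per line, just as with the telnet session.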

An Event is the basic unit of data transfer in Flume: Event = optional headers + a byte array (the body).
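The structure of an event can be sketched as a small data class. This is an illustrative stand-in, not Flume's Java `Event` interface; the class name and the `describe` method are assumptions, and `describe` only roughly imitates the logger sink's console output (headers, the body as hex bytes, then the body as text).

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Event:
    """Illustrative Flume-style event: a byte-array body plus optional headers."""
    body: bytes
    headers: Dict[str, str] = field(default_factory=dict)

    def describe(self) -> str:
        # Approximates the logger sink's output: headers, hex bytes, and text.
        hex_body = " ".join(f"{b:02X}" for b in self.body)
        text = self.body.decode("utf-8", "replace")
        return f"Event: {{ headers:{self.headers} body: {hex_body} {text} }}"


print(Event(b"hi").describe())  # Event: { headers:{} body: 68 69 hi }
```

Headers are a string-to-string map (useful for routing or timestamps), while the body is opaque bytes that Flume does not interpret.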

Requirement 2: monitor a file, collect newly appended data in real time, and output it to the console.

Agent selection: exec source + memory channel + logger sink.

    # exec-memory-logger.conf
    # Name the components on this agent
    a1.sources = r1
    a1.sinks = k1
    a1.channels = c1
    # Describe/configure the source
    a1.sources.r1.type = exec
    a1.sources.r1.command = tail -F /export/data/flume_sources/data.log
    a1.sources.r1.shell = /bin/sh -c
    # Describe the sink
    a1.sinks.k1.type = logger
    # Use a channel which buffers events in memory
    a1.channels.c1.type = memory
    # Bind the source and sink to the channel
    a1.sources.r1.channels = c1
    a1.sinks.k1.channel = c1
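The exec source simply runs `tail -F` and turns each output line into an event. The following polling sketch shows roughly what `tail -F` provides: only complete lines appended since the last read are returned, and a file that shrank (truncated or rotated) is read again from the start. It is an assumption-laden illustration, not how Flume or `tail` is implemented; `read_new_lines` is a hypothetical name.

```python
import os
import tempfile
from typing import List, Tuple


def read_new_lines(path: str, offset: int) -> Tuple[List[str], int]:
    """Return complete lines appended since `offset`, plus the new offset."""
    if not os.path.exists(path):
        # Like tail -F, tolerate a missing file and retry on the next poll.
        return [], 0
    if os.path.getsize(path) < offset:
        # The file shrank (truncated or rotated): start again from the top.
        offset = 0
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    # Only consume up to the last newline; a partial line waits for the next poll.
    end = data.rfind(b"\n") + 1
    lines = [line.decode("utf-8") for line in data[:end].splitlines()]
    return lines, offset + end


if __name__ == "__main__":
    # Demo: simulate `echo hello >> data.log; echo world >> data.log`, then poll.
    with tempfile.TemporaryDirectory() as tmp:
        log = os.path.join(tmp, "data.log")
        with open(log, "a", encoding="utf-8") as f:
            f.write("hello\nworld\n")
        new_lines, pos = read_new_lines(log, 0)
        print(new_lines, pos)  # ['hello', 'world'] 12
```

Note that the exec source itself keeps no such offset: if the agent restarts, `tail -F` starts again from its own default position, so the exec source does not guarantee that every line is delivered.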

Start the agent:

    # bin/flume-ng agent -n $agent_name -c conf -f conf/flume-conf.properties.template
    bin/flume-ng agent \
      --name a1 \
      --conf $FLUME_HOME/conf/myconf \
      --conf-file $FLUME_HOME/conf/myconf/exec-memory-logger.conf \
      -Dflume.root.logger=INFO,console

Verify:

    # run in /export/data/flume_sources, the directory tailed by the exec source
    echo hello >> data.log
    echo world >> data.log

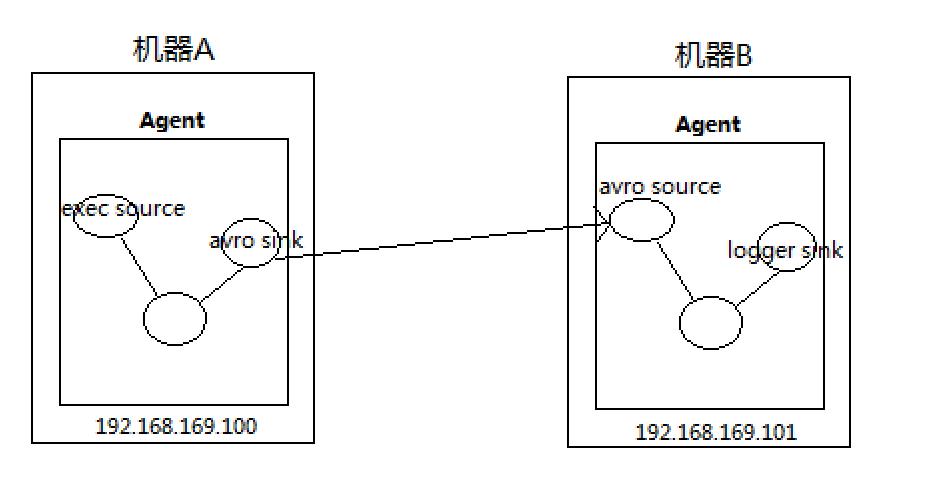

Requirement 3: collect the logs on server A and ship them to server B in real time.

The log collection process:

On machine A, monitor a file: whenever users visit the main site, user-behaviour logs are written to access.log.

An avro sink sends the newly produced log entries to the hostname and port of the corresponding avro source.

The agent that owns the avro source then outputs the logs to the console (or, in a real pipeline, to Kafka).

Technology choice:

exec-memory-avro.conf: exec source + memory channel + avro sink

avro-memory-logger.conf: avro source + memory channel + logger sink

    # exec-memory-avro.conf
    # Name the components on this agent
    exec-memory-avro.sources = exec-source
    exec-memory-avro.sinks = avro-sink
    exec-memory-avro.channels = memory-channel
    # Describe/configure the source
    exec-memory-avro.sources.exec-source.type = exec
    exec-memory-avro.sources.exec-source.command = tail -F /export/data/flume_sources/data.log
    exec-memory-avro.sources.exec-source.shell = /bin/sh -c
    # Describe the sink
    exec-memory-avro.sinks.avro-sink.type = avro
    exec-memory-avro.sinks.avro-sink.hostname = 192.168.169.100
    exec-memory-avro.sinks.avro-sink.port = 44444
    # Use a channel which buffers events in memory
    exec-memory-avro.channels.memory-channel.type = memory
    # Bind the source and sink to the channel
    exec-memory-avro.sources.exec-source.channels = memory-channel
    exec-memory-avro.sinks.avro-sink.channel = memory-channel

    # avro-memory-logger.conf
    # Name the components on this agent
    avro-memory-logger.sources = avro-source
    avro-memory-logger.sinks = logger-sink
    avro-memory-logger.channels = memory-channel
    # Describe/configure the source
    avro-memory-logger.sources.avro-source.type = avro
    avro-memory-logger.sources.avro-source.bind = 192.168.169.100
    avro-memory-logger.sources.avro-source.port = 44444
    # Describe the sink
    avro-memory-logger.sinks.logger-sink.type = logger
    # Use a channel which buffers events in memory
    avro-memory-logger.channels.memory-channel.type = memory
    # Bind the source and sink to the channel
    avro-memory-logger.sources.avro-source.channels = memory-channel
    avro-memory-logger.sinks.logger-sink.channel = memory-channel
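The two configurations only work together if the avro sink's `hostname`/`port` point at the address the avro source binds to. A minimal, purely textual cross-check is sketched below, assuming simple `key = value` lines; `parse_properties` and `avro_link_ok` are hypothetical helpers, and the check does not resolve hostnames, so a hostname and its IP address would be reported as a mismatch.

```python
from typing import Dict


def parse_properties(text: str) -> Dict[str, str]:
    """Parse simple 'key = value' lines, skipping blank lines and # comments."""
    props: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def avro_link_ok(sender_conf: str, sender: str, sink: str,
                 receiver_conf: str, receiver: str, source: str) -> bool:
    """True if the sender's avro sink targets the receiver's avro source."""
    s = parse_properties(sender_conf)
    r = parse_properties(receiver_conf)
    sink_key = f"{sender}.sinks.{sink}"
    src_key = f"{receiver}.sources.{source}"
    if s.get(f"{sink_key}.type") != "avro" or r.get(f"{src_key}.type") != "avro":
        return False
    host, port = s.get(f"{sink_key}.hostname"), s.get(f"{sink_key}.port")
    bind, listen_port = r.get(f"{src_key}.bind"), r.get(f"{src_key}.port")
    if None in (host, port, bind, listen_port) or port != listen_port:
        return False
    # A source bound to 0.0.0.0 listens on every interface; otherwise the
    # sink's hostname must match the bind address textually.
    return bind == "0.0.0.0" or host == bind
```

For example, `avro_link_ok(open("exec-memory-avro.conf").read(), "exec-memory-avro", "avro-sink", open("avro-memory-logger.conf").read(), "avro-memory-logger", "avro-source")` should be true for the two files above.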

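To verify, start the agent defined in avro-memory-logger.conf first, because its avro source listens on port 44444 of 192.168.169.100 and the avro sink of the other agent needs something to connect to. Then start the agent defined in exec-memory-avro.conf: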

    # bin/flume-ng agent -n $agent_name -c conf -f conf/flume-conf.properties.template
    bin/flume-ng agent \
      --name avro-memory-logger \
      --conf $FLUME_HOME/conf/myconf \
      --conf-file $FLUME_HOME/conf/myconf/avro-memory-logger.conf \
      -Dflume.root.logger=INFO,console

    # bin/flume-ng agent -n $agent_name -c conf -f conf/flume-conf.properties.template
    bin/flume-ng agent \
      --name exec-memory-avro \
      --conf $FLUME_HOME/conf/myconf \
      --conf-file $FLUME_HOME/conf/myconf/exec-memory-avro.conf \
      -Dflume.root.logger=INFO,console
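Because the start order matters, a launcher script can wait until the receiving agent's port accepts TCP connections before starting the sending agent. This is a hedged sketch: `wait_for_port` is a hypothetical helper, and a successful connection only proves that something is listening on the port, not that the Flume agent is fully initialized. The short-lived probe connection may also show up in the avro source's log.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.2) -> bool:
    """Return True once host:port accepts a TCP connection, False after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Open and immediately close a probe connection.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Refused or timed out: retry until the deadline passes.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


if __name__ == "__main__":
    # Wait for the avro source before launching the exec-memory-avro agent.
    print(wait_for_port("192.168.169.100", 44444, timeout=60))
```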

This article was first published on Steem. Thanks for reading; please credit the source when reposting.

https://steemit.com/@padluo

Reader discussion group on Telegram

https://t.me/sspadluo

Knowledge Planet (知识星球) discussion group
