Writing a Flutter Hand-Tracking Plugin

Posted by 郭霖

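/ Today's Tech News /

ByteDance recently announced an organizational upgrade. 张利东 (Zhang Lidong) becomes Chairman of ByteDance (China) and will coordinate the company's overall operations, including ByteDance China's strategy, commercialization, public affairs, public relations, finance, and human resources. Douyin CEO 张楠 (Zhang Nan) becomes CEO of ByteDance (China) and, as head of the China business, will coordinate product, operations, marketing, and content partnerships, covering Toutiao, Douyin, Xigua Video, search, and other businesses and products. Both report to 张一鸣 (Zhang Yiming).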

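/ About the Author /

Another busy week is over. Wishing everyone a happy weekend in advance!

This article is a submission from 诌在行, who shares a hand-gesture plugin for Flutter. I hope you find it helpful, and many thanks to the author for contributing it.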

https://github.com/zhouzaihang

/ Preface /

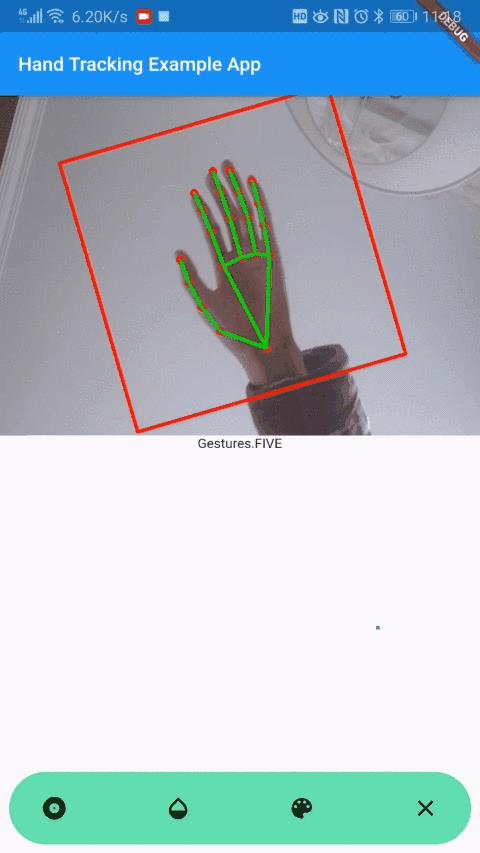

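This Flutter package uses the camera of an Android device to accurately track and recognize the motion paths and gestures of all ten fingers, and it outputs 21 landmarks per hand so that further custom gestures can be built on top.

Based on this package, you can write business logic that turns gesture information into commands in real time: one, two, three, four, five, rock, spiderman, and so on. With Flutter you can also build different effects for different gestures. It can be used for short-video and live-streaming effects, smart hardware, and similar areas, bringing a more natural and richer experience to human-computer interaction.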
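
To make the idea of turning landmarks into commands concrete, here is a minimal sketch in plain Kotlin. It assumes MediaPipe's 21-point hand layout (index 0 is the wrist, followed by four points per finger from thumb to pinky). The `Point` class, the functions `extendedFingers` and `toCommand`, the distance-based heuristic, and the finger patterns chosen for "rock" and "spiderman" are illustrative assumptions, not part of the plugin.

```kotlin
import kotlin.math.hypot

// Hypothetical stand-in for one normalized landmark (x and y in [0, 1]).
data class Point(val x: Float, val y: Float)

// Indices assume MediaPipe's hand layout: 0 = wrist, then four points per finger.
private val FINGER_TIPS = listOf(8, 12, 16, 20) // index, middle, ring, pinky tips
private val FINGER_PIPS = listOf(6, 10, 14, 18) // the matching PIP joints

private fun dist(a: Point, b: Point): Float = hypot(a.x - b.x, a.y - b.y)

/** Returns which fingers look extended, in the order thumb, index, middle, ring, pinky. */
fun extendedFingers(hand: List<Point>): List<Boolean> {
    require(hand.size == 21) { "expected 21 landmarks, got ${hand.size}" }
    val wrist = hand[0]
    // Thumb heuristic (assumption): the tip (4) is farther from the pinky base (17)
    // than the thumb's IP joint (3) is.
    val thumb = dist(hand[4], hand[17]) > dist(hand[3], hand[17])
    // Other fingers (assumption): the tip is farther from the wrist than the PIP joint.
    val others = FINGER_TIPS.zip(FINGER_PIPS).map { (tip, pip) ->
        dist(hand[tip], wrist) > dist(hand[pip], wrist)
    }
    return listOf(thumb) + others
}

/** Maps a hand to a command name; falls back to the number of extended fingers. */
fun toCommand(hand: List<Point>): String {
    val f = extendedFingers(hand)
    return when {
        f == listOf(false, true, false, false, true) -> "rock"
        f == listOf(true, true, false, false, true) -> "spiderman"
        else -> f.count { it }.toString()
    }
}
```

Counting extended fingers covers one through five. Anything more robust, such as handling hand rotation or depth, would need a better classifier than this heuristic.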

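Technologies involved

- Writing a Flutter plugin package
- Setting up a MediaPipe development environment with Docker
- Using MediaPipe in Gradle
- Running a MediaPipe graph from a Flutter app
- Embedding a native view in a Flutter page
- Using protobuf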

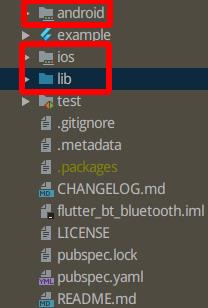

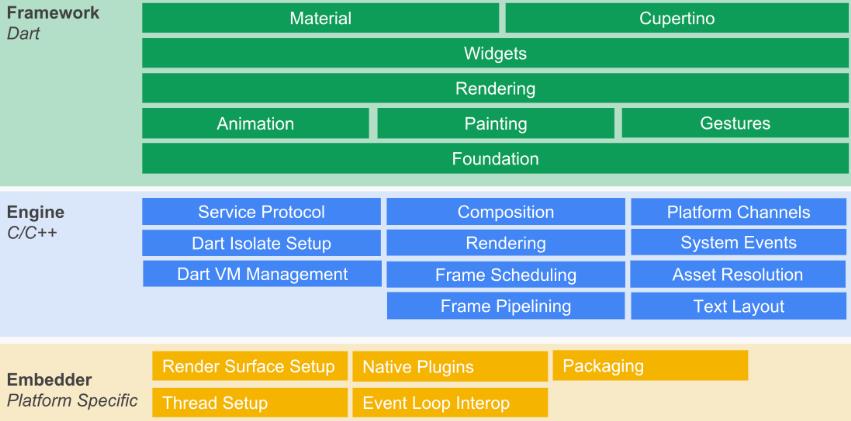

What is a Flutter package

Why a Flutter plugin package is needed

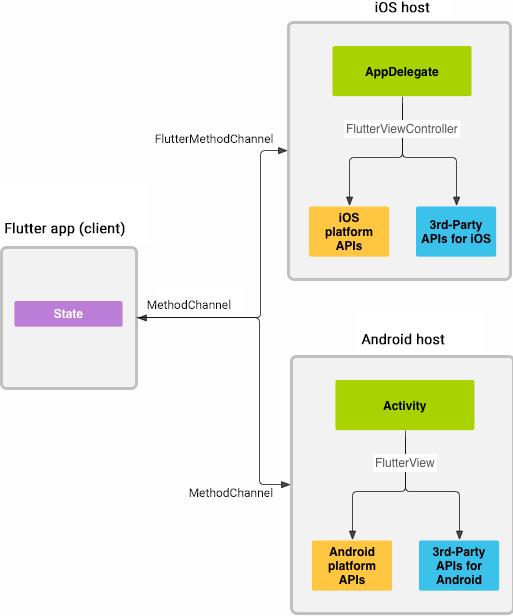

How a Flutter plugin package works

Creating a new Flutter plugin package

- Open Android Studio and click New Flutter Project
- Select the Flutter Plugin option
- Enter the project name, description, and other details

Writing the Factory class

    package xyz.zhzh.flutter_hand_tracking_plugin

    import android.content.Context
    import io.flutter.plugin.common.PluginRegistry
    import io.flutter.plugin.common.StandardMessageCodec
    import io.flutter.plugin.platform.PlatformView
    import io.flutter.plugin.platform.PlatformViewFactory

    // Creates one FlutterHandTrackingPlugin platform view per AndroidView requested from Dart.
    class HandTrackingViewFactory(private val registrar: PluginRegistry.Registrar) :
            PlatformViewFactory(StandardMessageCodec.INSTANCE) {
        override fun create(context: Context?, viewId: Int, args: Any?): PlatformView {
            return FlutterHandTrackingPlugin(registrar, viewId)
        }
    }

Writing the AndroidView class

    class FlutterHandTrackingPlugin(r: Registrar, id: Int) : PlatformView, MethodCallHandler {
        companion object {
            private const val TAG = "HandTrackingPlugin"
            private const val NAMESPACE = "plugins.zhzh.xyz/flutter_hand_tracking_plugin"

            @JvmStatic
            fun registerWith(registrar: Registrar) {
                // Register the factory under the view type that the Dart side requests.
                registrar.platformViewRegistry().registerViewFactory(
                        "$NAMESPACE/view",
                        HandTrackingViewFactory(registrar))
            }

            init { // Load all native libraries needed by the app.
                System.loadLibrary("mediapipe_jni")
                System.loadLibrary("opencv_java3")
            }
        }

        private var previewDisplayView: SurfaceView = SurfaceView(r.context())
    }

    class FlutterHandTrackingPlugin(r: Registrar, id: Int) : PlatformView, MethodCallHandler {
        // ...
        override fun getView(): SurfaceView? {
            return previewDisplayView
        }

        override fun dispose() {
            // TODO: ViewDispose()
        }
    }

Using the native view from Dart

    AndroidView(
      // Must match the view type registered on the Android side.
      viewType: '$NAMESPACE/view',
      onPlatformViewCreated: (id) => _id = id,
    )

    import 'dart:async';

    import 'package:flutter/cupertino.dart';
    import 'package:flutter/foundation.dart';
    import 'package:flutter/services.dart';
    import 'package:flutter_hand_tracking_plugin/gen/landmark.pb.dart';

    const NAMESPACE = "plugins.zhzh.xyz/flutter_hand_tracking_plugin";

    typedef void HandTrackingViewCreatedCallback(
        HandTrackingViewController controller);

    class HandTrackingView extends StatelessWidget {
      const HandTrackingView({@required this.onViewCreated})
          : assert(onViewCreated != null);

      final HandTrackingViewCreatedCallback onViewCreated;

      @override
      Widget build(BuildContext context) {
        switch (defaultTargetPlatform) {
          case TargetPlatform.android:
            return AndroidView(
              viewType: "$NAMESPACE/view",
              onPlatformViewCreated: (int id) => onViewCreated == null
                  ? null
                  : onViewCreated(HandTrackingViewController._(id)),
            );
          case TargetPlatform.fuchsia:
          case TargetPlatform.iOS:
          default:
            throw UnsupportedError(
                "Trying to use the default webview implementation for"
                " $defaultTargetPlatform but there isn't a default one");
        }
      }
    }

    class HandTrackingViewController {
      final MethodChannel _methodChannel;
      // Declared so that the initializer list below compiles.
      final EventChannel _eventChannel;

      HandTrackingViewController._(int id)
          : _methodChannel = MethodChannel("$NAMESPACE/$id"),
            _eventChannel = EventChannel("$NAMESPACE/$id/landmarks");

      Future<String> get platformVersion async =>
          await _methodChannel.invokeMethod("getPlatformVersion");
    }

Creating a mediapipe_aar() target

    load("//mediapipe/java/com/google/mediapipe:mediapipe_aar.bzl", "mediapipe_aar")

    mediapipe_aar(
        name = "hand_tracking_aar",
        calculators = ["//mediapipe/graphs/hand_tracking:mobile_calculators"],
    )

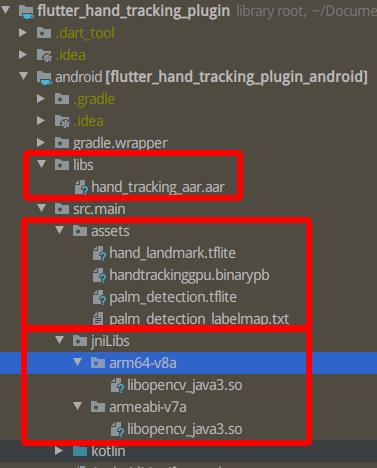

Building the aar

    bazel build -c opt --action_env=HTTP_PROXY=$HTTP_PROXY --action_env=HTTPS_PROXY=$HTTPS_PROXY --fat_apk_cpu=arm64-v8a,armeabi-v7a mediapipe/examples/android/src/java/com/google/mediapipe/apps/aar_example:hand_tracking_aar

Building the binary graph

    bazel build -c opt mediapipe/examples/android/src/java/com/google/mediapipe/apps/handtrackinggpu:binary_graph

Adding assets and the OpenCV library

    cp -R ~/Downloads/OpenCV-android-sdk/sdk/native/libs/arm* /path/to/your/plugin/android/src/main/jniLibs/

    class FlutterHandTrackingPlugin(r: Registrar, id: Int) : PlatformView, MethodCallHandler {
        companion object {
            init { // Load all native libraries needed by the app.
                System.loadLibrary("mediapipe_jni")
                System.loadLibrary("opencv_java3")
            }
        }
    }

Modifying build.gradle

    dependencies {
        implementation "org.jetbrains.kotlin:kotlin-stdlib-jdk7:$kotlin_version"
        implementation fileTree(dir: 'libs', include: ['*.aar'])
        // MediaPipe deps
        implementation 'com.google.flogger:flogger:0.3.1'
        implementation 'com.google.flogger:flogger-system-backend:0.3.1'
        implementation 'com.google.code.findbugs:jsr305:3.0.2'
        implementation 'com.google.guava:guava:27.0.1-android'
        implementation 'com.google.protobuf:protobuf-lite:3.0.1'
        // CameraX core library
        def camerax_version = "1.0.0-alpha06"
        implementation "androidx.camera:camera-core:$camerax_version"
        implementation "androidx.camera:camera-camera2:$camerax_version"
        implementation "androidx.core:core-ktx:1.2.0"
    }

Getting camera permission

    <!-- For using the camera -->
    <uses-permission android:name="android.permission.CAMERA" />
    <uses-feature android:name="android.hardware.camera" />
    <uses-feature android:name="android.hardware.camera.autofocus" />
    <!-- For MediaPipe -->
    <uses-feature
        android:glEsVersion="0x00020000"
        android:required="true" />

    defaultConfig {
        // TODO: Specify your own unique Application ID (https://developer.android.com/studio/build/application-id.html).
        applicationId "xyz.zhzh.flutter_hand_tracking_plugin_example"
        minSdkVersion 21
        targetSdkVersion 27
        versionCode flutterVersionCode.toInteger()
        versionName flutterVersionName
        testInstrumentationRunner "androidx.test.runner.AndroidJUnitRunner"
    }

    PermissionHelper.checkAndRequestCameraPermissions(activity)

    class FlutterHandTrackingPlugin(r: Registrar, id: Int) : PlatformView, MethodCallHandler {
        private val activity: Activity = r.activity()

        init {
            // ...
            r.addRequestPermissionsResultListener(CameraRequestPermissionsListener())
            PermissionHelper.checkAndRequestCameraPermissions(activity)
            if (PermissionHelper.cameraPermissionsGranted(activity)) onResume()
        }

        private inner class CameraRequestPermissionsListener :
                PluginRegistry.RequestPermissionsResultListener {
            override fun onRequestPermissionsResult(requestCode: Int,
                                                    permissions: Array<out String>?,
                                                    grantResults: IntArray?): Boolean {
                return if (requestCode != 0) false
                else {
                    for (result in grantResults!!) {
                        if (result == PERMISSION_GRANTED) onResume()
                        else Toast.makeText(activity, "Please grant camera permission", Toast.LENGTH_LONG).show()
                    }
                    true
                }
            }
        }

        private fun onResume() {
            // ...
            if (PermissionHelper.cameraPermissionsGranted(activity)) startCamera()
        }

        private fun startCamera() {}
    }

    // {@link SurfaceTexture} where the camera-preview frames can be accessed.
    private var previewFrameTexture: SurfaceTexture? = null
    // {@link SurfaceView} that displays the camera-preview frames processed by a MediaPipe graph.
    private var previewDisplayView: SurfaceView = SurfaceView(r.context())

    init {
        r.addRequestPermissionsResultListener(CameraRequestPermissionsListener())
        setupPreviewDisplayView()
        PermissionHelper.checkAndRequestCameraPermissions(activity)
        if (PermissionHelper.cameraPermissionsGranted(activity)) onResume()
    }

    private fun setupPreviewDisplayView() {
        previewDisplayView.visibility = View.GONE
        // TODO
    }

    class FlutterHandTrackingPlugin(r: Registrar, id: Int) : PlatformView, MethodCallHandler {
        // ...
        private var cameraHelper: CameraXPreviewHelper? = null
        // ...
    }

    private fun startCamera() {
        cameraHelper = CameraXPreviewHelper()
        cameraHelper!!.setOnCameraStartedListener { surfaceTexture: SurfaceTexture? ->
            previewFrameTexture = surfaceTexture
            // Make the display view visible to start showing the preview. This triggers the
            // SurfaceHolder.Callback added to (the holder of) previewDisplayView.
            previewDisplayView.visibility = View.VISIBLE
        }
        cameraHelper!!.startCamera(activity, CAMERA_FACING, /*surfaceTexture=*/null)
    }

    class FlutterHandTrackingPlugin(r: Registrar, id: Int) : PlatformView, MethodCallHandler {
        companion object {
            private val CAMERA_FACING = CameraHelper.CameraFacing.FRONT
            // ...
        }
        // ...
    }

    // Creates and manages an {@link EGLContext}.
    private var eglManager: EglManager = EglManager(null)
    // Converts the GL_TEXTURE_EXTERNAL_OES texture from Android camera into a regular texture to be
    // consumed by {@link FrameProcessor} and the underlying MediaPipe graph.
    private var converter: ExternalTextureConverter? = null

    private fun onResume() {
        converter = ExternalTextureConverter(eglManager.context)
        converter!!.setFlipY(FLIP_FRAMES_VERTICALLY)
        if (PermissionHelper.cameraPermissionsGranted(activity)) {
            startCamera()
        }
    }

    private fun setupPreviewDisplayView() {
        previewDisplayView.visibility = View.GONE
        previewDisplayView.holder.addCallback(
                object : SurfaceHolder.Callback {
                    override fun surfaceCreated(holder: SurfaceHolder) {
                        processor.videoSurfaceOutput.setSurface(holder.surface)
                    }

                    override fun surfaceChanged(holder: SurfaceHolder, format: Int, width: Int, height: Int) {
                        // (Re-)Compute the ideal size of the camera-preview display (the area that the
                        // camera-preview frames get rendered onto, potentially with scaling and rotation)
                        // based on the size of the SurfaceView that contains the display.
                        val viewSize = Size(width, height)
                        val displaySize = cameraHelper!!.computeDisplaySizeFromViewSize(viewSize)
                        val isCameraRotated = cameraHelper!!.isCameraRotated
                        // Connect the converter to the camera-preview frames as its input (via
                        // previewFrameTexture), and configure the output width and height as the computed
                        // display size.
                        converter!!.setSurfaceTextureAndAttachToGLContext(
                                previewFrameTexture,
                                if (isCameraRotated) displaySize.height else displaySize.width,
                                if (isCameraRotated) displaySize.width else displaySize.height)
                    }

                    override fun surfaceDestroyed(holder: SurfaceHolder) {
                        // TODO
                    }
                })
    }

- Compute the appropriate on-screen display size for the camera frames
- Pass previewFrameTexture and displaySize to the converter
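
The two steps above can be sketched in plain Kotlin so they run off-device. This only illustrates one way a display size could be derived, namely scaling the frame to cover the view while keeping its aspect ratio; it is an assumption for explanation and not the actual code behind `computeDisplaySizeFromViewSize`. The names `Dim`, `coverSize`, and `converterOutputSize` are hypothetical.

```kotlin
import kotlin.math.roundToInt

// Hypothetical stand-in for android.util.Size.
data class Dim(val width: Int, val height: Int)

/**
 * Scales a camera frame so that it fully covers the view while keeping the
 * frame's aspect ratio. `rotated` means the frame arrives turned by 90 or 270 degrees.
 */
fun coverSize(view: Dim, frame: Dim, rotated: Boolean): Dim {
    require(view.width > 0 && view.height > 0) { "view must be non-empty" }
    require(frame.width > 0 && frame.height > 0) { "frame must be non-empty" }
    // A rotated frame has its width and height swapped as seen on screen.
    val frameAspect = if (rotated) {
        frame.height.toFloat() / frame.width
    } else {
        frame.width.toFloat() / frame.height
    }
    val viewAspect = view.width.toFloat() / view.height
    return if (frameAspect < viewAspect) {
        // Frame is relatively narrower than the view: match widths, height overflows.
        Dim(view.width, (view.width / frameAspect).roundToInt())
    } else {
        // Frame is relatively wider than the view: match heights, width overflows.
        Dim((view.height * frameAspect).roundToInt(), view.height)
    }
}

/** Swaps width and height for a rotated camera, mirroring the two `if` arguments passed to the converter. */
fun converterOutputSize(display: Dim, rotated: Boolean): Dim =
    if (rotated) Dim(display.height, display.width) else display
```

For example, a 640x480 frame from a rotated camera shown in a 1080x1920 view would give a display size of 1440x1920 under this scheme, and the converter would then be configured with 1920x1440.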

Calling the MediaPipe graph

    // Initialize asset manager so that MediaPipe native libraries can access the app assets, e.g.,
    // binary graphs.
    AndroidAssetUtil.initializeNativeAssetManager(activity)

    init {
        setupProcess()
    }

    private fun setupProcess() {
        processor.videoSurfaceOutput.setFlipY(FLIP_FRAMES_VERTICALLY)
        // TODO
    }

    class FlutterHandTrackingPlugin(r: Registrar, id: Int) : PlatformView, MethodCallHandler {
        companion object {
            private const val BINARY_GRAPH_NAME = "handtrackinggpu.binarypb"
            private const val INPUT_VIDEO_STREAM_NAME = "input_video"
            private const val OUTPUT_VIDEO_STREAM_NAME = "output_video"
            private const val OUTPUT_HAND_PRESENCE_STREAM_NAME = "hand_presence"
            private const val OUTPUT_LANDMARKS_STREAM_NAME = "hand_landmarks"
        }
    }

    // Sends camera-preview frames into a MediaPipe graph for processing, and displays the processed
    // frames onto a {@link Surface}.
    private var processor: FrameProcessor = FrameProcessor(
            activity,
            eglManager.nativeContext,
            BINARY_GRAPH_NAME,
            INPUT_VIDEO_STREAM_NAME,
            OUTPUT_VIDEO_STREAM_NAME)

    private fun onResume() {
        converter = ExternalTextureConverter(eglManager.context)
        converter!!.setFlipY(FLIP_FRAMES_VERTICALLY)
        // Feed the converted frames into the MediaPipe graph.
        converter!!.setConsumer(processor)
        if (PermissionHelper.cameraPermissionsGranted(activity)) {
            startCamera()
        }
    }

    private fun setupPreviewDisplayView() {
        previewDisplayView.visibility = View.GONE
        previewDisplayView.holder.addCallback(
                object : SurfaceHolder.Callback {
                    override fun surfaceCreated(holder: SurfaceHolder) {
                        processor.videoSurfaceOutput.setSurface(holder.surface)
                    }

                    override fun surfaceChanged(holder: SurfaceHolder, format: Int, width: Int, height: Int) {
                        // (Re-)Compute the ideal size of the camera-preview display (the area that the
                        // camera-preview frames get rendered onto, potentially with scaling and rotation)
                        // based on the size of the SurfaceView that contains the display.
                        val viewSize = Size(width, height)
                        val displaySize = cameraHelper!!.computeDisplaySizeFromViewSize(viewSize)
                        val isCameraRotated = cameraHelper!!.isCameraRotated
                        // Connect the converter to the camera-preview frames as its input (via
                        // previewFrameTexture), and configure the output width and height as the computed
                        // display size.
                        converter!!.setSurfaceTextureAndAttachToGLContext(
                                previewFrameTexture,
                                if (isCameraRotated) displaySize.height else displaySize.width,
                                if (isCameraRotated) displaySize.width else displaySize.height)
                    }

                    override fun surfaceDestroyed(holder: SurfaceHolder) {
                        processor.videoSurfaceOutput.setSurface(null)
                    }
                })
    }

Opening an EventChannel on the Android side

    private val eventChannel: EventChannel = EventChannel(r.messenger(), "$NAMESPACE/$id/landmarks")
    private var eventSink: EventChannel.EventSink? = null

    init {
        this.eventChannel.setStreamHandler(landMarksStreamHandler())
    }

    private fun landMarksStreamHandler(): EventChannel.StreamHandler {
        return object : EventChannel.StreamHandler {
            override fun onListen(arguments: Any?, events: EventChannel.EventSink) {
                eventSink = events
                // Log.e(TAG, "Listen Event Channel")
            }

            override fun onCancel(arguments: Any?) {
                eventSink = null
            }
        }
    }

    private val uiThreadHandler: Handler = Handler(Looper.getMainLooper())

    private fun setupProcess() {
        processor.videoSurfaceOutput.setFlipY(FLIP_FRAMES_VERTICALLY)
        processor.addPacketCallback(
                OUTPUT_HAND_PRESENCE_STREAM_NAME
        ) { packet: Packet ->
            val handPresence = PacketGetter.getBool(packet)
            if (!handPresence) Log.d(TAG, "[TS:" + packet.timestamp + "] Hand presence is false, no hands detected.")
        }
        processor.addPacketCallback(
                OUTPUT_LANDMARKS_STREAM_NAME
        ) { packet: Packet ->
            val landmarksRaw = PacketGetter.getProtoBytes(packet)
            if (eventSink == null) {
                // No Dart listener is attached: parse the landmarks only to log them.
                try {
                    val landmarks = LandmarkProto.NormalizedLandmarkList.parseFrom(landmarksRaw)
                    if (landmarks == null) {
                        Log.d(TAG, "[TS:" + packet.timestamp + "] No hand landmarks.")
                        return@addPacketCallback
                    }
                    // Note: If hand_presence is false, these landmarks are useless.
                    Log.d(TAG, "[TS: ${packet.timestamp}] #Landmarks for hand: ${landmarks.landmarkCount}\n ${getLandmarksString(landmarks)}")
                } catch (e: InvalidProtocolBufferException) {
                    Log.e(TAG, "Couldn't parse landmarks. Exception received - $e")
                    return@addPacketCallback
                }
            } else {
                // Forward the raw protobuf bytes to Dart on the main thread.
                uiThreadHandler.post { eventSink?.success(landmarksRaw) }
            }
        }
    }
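
The landmark callback above forwards raw bytes only while a Dart listener is attached, and it hops to the main thread before calling the sink. The sketch below isolates that pattern in plain Kotlin so it can be run without Android. `LandmarkForwarder`, its `post` parameter (standing in for `uiThreadHandler.post`), and the `dropped` counter are hypothetical names introduced for illustration; the plugin itself logs the landmarks instead of counting them.

```kotlin
/**
 * Forwards packets to a listener if one is attached, otherwise counts them as dropped.
 * `post` schedules work on the thread the listener expects (the main thread on Android).
 */
class LandmarkForwarder(private val post: (() -> Unit) -> Unit) {
    // Set by onListen, cleared by onCancel; may be touched from different threads.
    @Volatile
    private var sink: ((ByteArray) -> Unit)? = null

    var dropped: Int = 0
        private set

    fun onListen(events: (ByteArray) -> Unit) { sink = events }

    fun onCancel() { sink = null }

    fun onPacket(raw: ByteArray) {
        if (sink == null) {
            // Nobody is listening, so there is nothing to deliver.
            dropped++
            return
        }
        // Re-read the sink inside the posted task: the listener may cancel in between.
        post { sink?.invoke(raw) }
    }
}
```

On Android the forwarder could be built as `LandmarkForwarder { task -> uiThreadHandler.post { task() } }`, while a test can pass `{ it() }` to run tasks inline.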

Receiving the eventChannel data on the Dart side

    class HandTrackingViewController {
      final MethodChannel _methodChannel;
      final EventChannel _eventChannel;

      HandTrackingViewController._(int id)
          : _methodChannel = MethodChannel("$NAMESPACE/$id"),
            _eventChannel = EventChannel("$NAMESPACE/$id/landmarks");

      Future<String> get platformVersion async =>
          await _methodChannel.invokeMethod("getPlatformVersion");

      // Decodes each raw protobuf buffer sent from Android into a NormalizedLandmarkList.
      Stream<NormalizedLandmarkList> get landMarksStream async* {
        yield* _eventChannel
            .receiveBroadcastStream()
            .map((buffer) => NormalizedLandmarkList.fromBuffer(buffer));
      }
    }

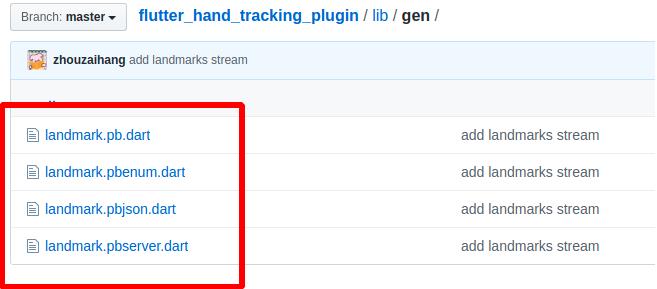

    dependencies:
      flutter:
        sdk: flutter
      protobuf: ^1.0.1

    flutter pub get
    pub global activate protoc_plugin
    protoc --dart_out=../lib/gen ./landmark.proto

https://github.com/zhouzaihang/flutter_hand_tracking_plugin
