How to Connect to Hive and Impala with the Python Impyla Client

Posted by Hadoop实操


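1. Purpose of this document

Following on from the previous chapter, this chapter explains how to use the Python Impyla client to connect to HiveServer2 and the Impala Daemon on a CDH cluster and run SQL statements.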

Overview

1. Installing the dependencies

2. Writing the code

3. Testing the code

Test environment

1. CM and CDH version 5.11.2

2. RedHat 7.2

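Prerequisites

1. The CDH cluster is up and running normally

2. Anaconda is installed and its environment variables are configured

3. pip can install Python packages

4. Python 2.6+ or 3.3+

5. The cluster is non-secure (security is not enabled)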

2. Installing the Impyla dependencies

Python packages that Impyla depends on

six

bitarray

thrift (on Python 2.x) or thriftpy (on Python 3.x)

thrift_sasl

sasl

1. First install the Python packages that Impyla depends on

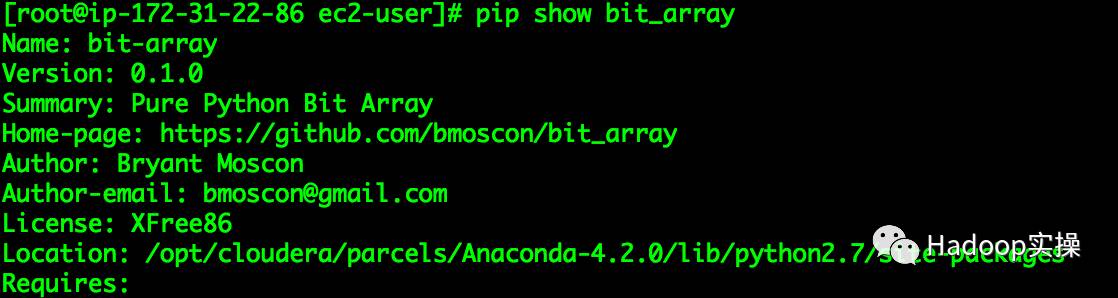

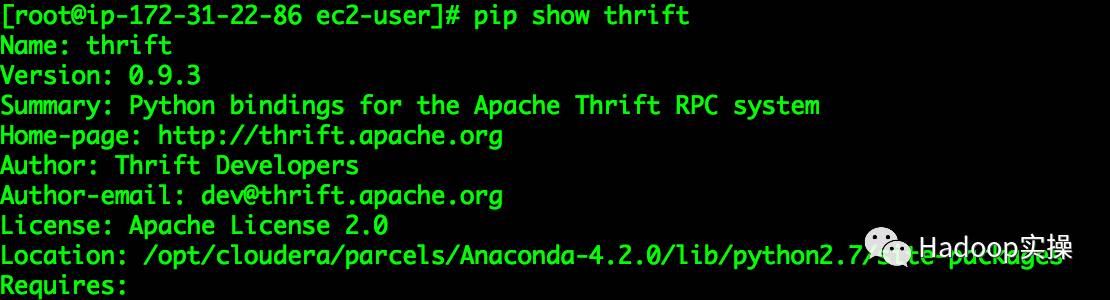

[root@ip-172-31-22-86 ~]# pip install bitarray

[root@ip-172-31-22-86 ~]# pip install thrift==0.9.3

[root@ip-172-31-22-86 ~]# pip install six

[root@ip-172-31-22-86 ~]# pip install thrift_sasl

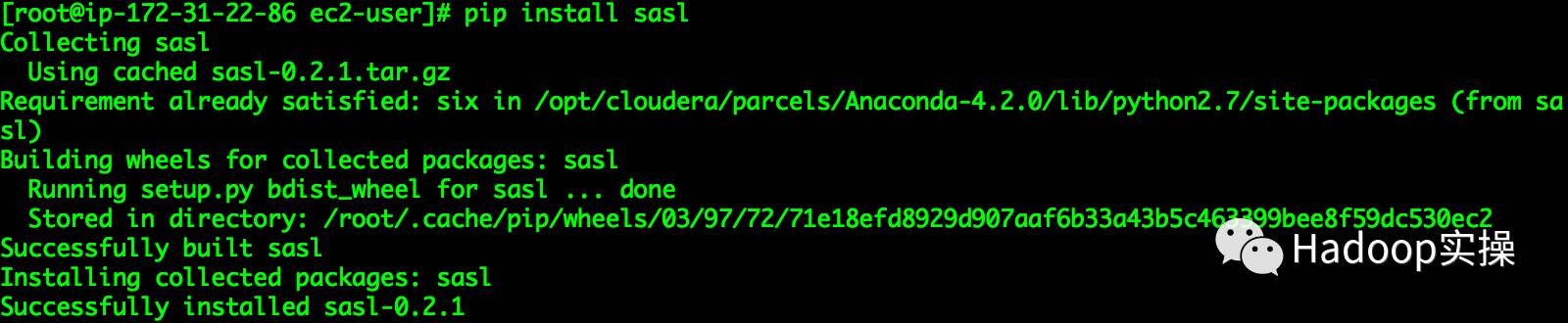

[root@ip-172-31-22-86 ~]# pip install sasl

Note: thrift must be version 0.9.3. pip installs 0.10.0 by default, so uninstall it with pip uninstall thrift and then install 0.9.3.

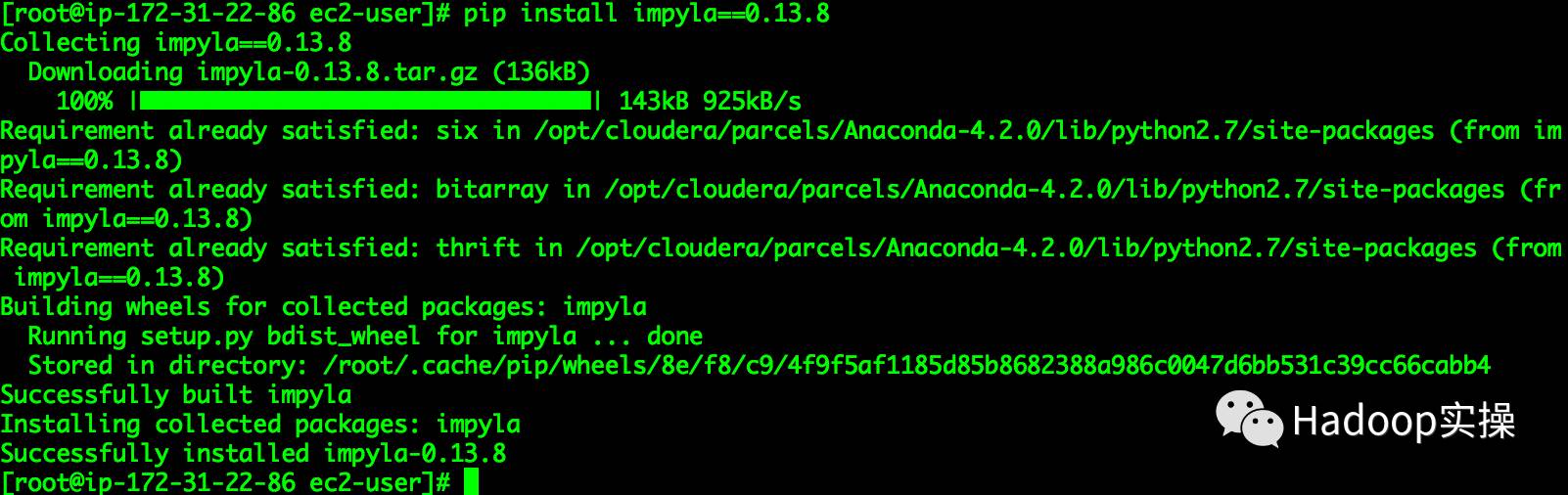

2. Install the Impyla package

Impyla version: pip installs 0.14.0 by default; uninstall it and install 0.13.8 instead.

[root@ip-172-31-22-86 ec2-user]# pip install impyla==0.13.8

Collecting impyla

Downloading impyla-0.14.0.tar.gz (151kB)

100% |████████████████████████████████| 153kB 1.0MB/s

Requirement already satisfied: six in /opt/cloudera/parcels/Anaconda-4.2.0/lib/python2.7/site-packages (from impyla)

Requirement already satisfied: bitarray in /opt/cloudera/parcels/Anaconda-4.2.0/lib/python2.7/site-packages (from impyla)

Requirement already satisfied: thrift in /opt/cloudera/parcels/Anaconda-4.2.0/lib/python2.7/site-packages (from impyla)

Building wheels for collected packages: impyla

Running setup.py bdist_wheel for impyla ... done

Stored in directory: /root/.cache/pip/wheels/96/fa/d8/40e676f3cead7ec45f20ac43eb373edc471348ac5cb485d6f5

Successfully built impyla

Installing collected packages: impyla

Successfully installed impyla-0.14.0
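Because this section pins thrift to 0.9.3 and impyla to 0.13.8, it can help to confirm which versions actually ended up installed. The following is a minimal sketch and not part of the original article: `REQUIRED`, `find_mismatches` and `installed_versions` are hypothetical names, and `installed_versions` assumes Python 3.8+ for `importlib.metadata` (on the Python 2.7 Anaconda used here, `pip freeze` shows the same information).

```python
# Version pins taken from the text of this section; adjust for your environment.
REQUIRED = {"thrift": "0.9.3", "impyla": "0.13.8"}


def find_mismatches(installed, required=REQUIRED):
    """Return {package: (installed_version_or_None, required_version)}
    for every pinned package whose installed version differs from the pin."""
    mismatches = {}
    for name, wanted in required.items():
        found = installed.get(name)
        if found != wanted:
            mismatches[name] = (found, wanted)
    return mismatches


def installed_versions(names):
    """Look up installed distribution versions (None when not installed).
    Assumption: Python 3.8+, where importlib.metadata is available."""
    from importlib import metadata
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    problems = find_mismatches(installed_versions(REQUIRED))
    for name, (found, wanted) in sorted(problems.items()):
        print("%s: installed %s, expected %s" % (name, found, wanted))
```

An empty result from `find_mismatches` means both pins are satisfied; any entry it returns names a package to uninstall and reinstall at the pinned version.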

3. Writing the Python code

Connecting to Hive from Python (HiveTest.py)

from impala.dbapi import connect

conn = connect(host='ip-172-31-21-45.ap-southeast-1.compute.internal', port=10000, database='default', auth_mechanism='PLAIN')

print(conn)

cursor = conn.cursor()

cursor.execute('show databases')

print(cursor.description)  # prints the result set's schema

results = cursor.fetchall()

print(results)

cursor.execute('SELECT * FROM test limit 10')

print(cursor.description)  # prints the result set's schema

results = cursor.fetchall()

print(results)
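The script above never closes its cursor or connection. As a hedged sketch (not the author's code), the query step can be wrapped in a small helper that works with any DB-API 2.0 connection and always closes the cursor. `run_query` and `main` are hypothetical names; the host, port and `auth_mechanism` values are the ones used in HiveTest.py.

```python
from contextlib import closing


def run_query(conn, sql):
    """Execute sql on a DB-API 2.0 connection and return (column_names, rows).
    The cursor is closed even if execute() or fetchall() raises."""
    with closing(conn.cursor()) as cursor:
        cursor.execute(sql)
        columns = [col[0] for col in cursor.description]
        rows = cursor.fetchall()
    return columns, rows


def main():
    # impyla is imported here so run_query can be reused without it installed.
    from impala.dbapi import connect

    # Connection values copied from HiveTest.py; replace the host with your own.
    conn = connect(host='ip-172-31-21-45.ap-southeast-1.compute.internal',
                   port=10000, database='default', auth_mechanism='PLAIN')
    with closing(conn):
        print(run_query(conn, 'show databases'))
        print(run_query(conn, 'SELECT * FROM test limit 10'))
```

Calling `main()` on a host that can reach HiveServer2 should print the same kind of information as HiveTest.py, with the column names already extracted from `cursor.description`.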

Connecting to Impala from Python (ImpalaTest.py)

from impala.dbapi import connect

conn = connect(host='ip-172-31-26-80.ap-southeast-1.compute.internal',port=21050)

print(conn)

cursor = conn.cursor()

cursor.execute('show databases')

print(cursor.description)  # prints the result set's schema

results = cursor.fetchall()

print(results)

cursor.execute('SELECT * FROM test limit 10')

print(cursor.description)  # prints the result set's schema

results = cursor.fetchall()

print(results)

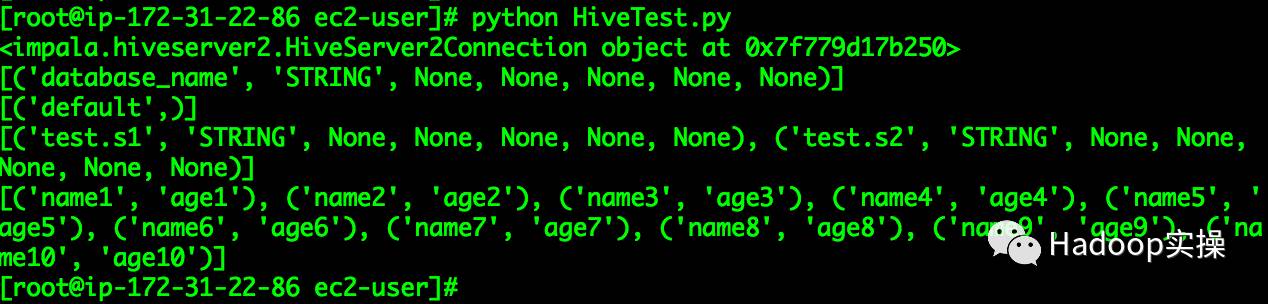
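The two scripts differ only in host, port and authentication settings, so those can be gathered in one place. The following is an illustrative sketch under the assumption of a non-secure cluster like the one described here; `DEFAULT_PORTS` and `connect_kwargs` are hypothetical names, while `host`, `port`, `database` and `auth_mechanism` are the `connect` keyword arguments already used above.

```python
# Ports used in this article: HiveServer2 on 10000, Impala Daemon on 21050.
DEFAULT_PORTS = {'hive': 10000, 'impala': 21050}


def connect_kwargs(service, host, database='default'):
    """Build keyword arguments for impala.dbapi.connect on a non-secure cluster."""
    if service not in DEFAULT_PORTS:
        raise ValueError('unknown service: %r' % (service,))
    kwargs = {'host': host, 'port': DEFAULT_PORTS[service], 'database': database}
    if service == 'hive':
        # HiveTest.py passes PLAIN for HiveServer2; ImpalaTest.py passes nothing
        # and therefore relies on impyla's default mechanism.
        kwargs['auth_mechanism'] = 'PLAIN'
    return kwargs
```

With impyla installed, a connection could then be opened as `connect(**connect_kwargs('impala', 'ip-172-31-26-80.ap-southeast-1.compute.internal'))`.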

4. Testing the code

Run the Python scripts from the shell to test them.

1. Test the Hive connection

[root@ip-172-31-22-86 ec2-user]# python HiveTest.py

<impala.hiveserver2.HiveServer2Connection object at 0x7f66eee00250>

[('database_name', 'STRING', None, None, None, None, None)]

[('default',)]

[('test.s1', 'STRING', None, None, None, None, None), ('test.s2', 'STRING', None, None, None, None, None)]

[('name1', 'age1'), ('name2', 'age2'), ('name3', 'age3'), ('name4', 'age4'), ('name5', 'age5'), ('name6', 'age6'), ('name7', 'age7'), ('name8', 'age8'), ('name9', 'age9'), ('name10', 'age10')]

[root@ip-172-31-22-86 ec2-user]#

2. Test the Impala connection

[root@ip-172-31-22-86 ec2-user]# python ImpalaTest.py

<impala.hiveserver2.HiveServer2Connection object at 0x7f7e1f2cfad0>

[('name', 'STRING', None, None, None, None, None), ('comment', 'STRING', None, None, None, None, None)]

[('_impala_builtins', 'System database for Impala builtin functions'), ('default', 'Default Hive database')]

[('s1', 'STRING', None, None, None, None, None), ('s2', 'STRING', None, None, None, None, None)]

[('name1', 'age1'), ('name2', 'age2'), ('name3', 'age3'), ('name4', 'age4'), ('name5', 'age5'), ('name6', 'age6'), ('name7', 'age7'), ('name8', 'age8'), ('name9', 'age9'), ('name10', 'age10')]

[root@ip-172-31-22-86 ec2-user]#
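In the output above, Hive reports column names with a table prefix (`test.s1`) while Impala reports bare names (`s1`). As a small sketch, the schema from `cursor.description` and the rows from `fetchall()` can be combined into dictionaries keyed by the bare column name. `rows_to_dicts` is a hypothetical helper, not part of impyla.

```python
def rows_to_dicts(description, rows):
    """Pair each row with the column names found in cursor.description.
    Any 'table.' prefix (as returned by Hive above) is stripped from the name."""
    names = [col[0].split('.')[-1] for col in description]
    return [dict(zip(names, row)) for row in rows]


# Values copied from the Hive test output above.
description = [('test.s1', 'STRING', None, None, None, None, None),
               ('test.s2', 'STRING', None, None, None, None, None)]
rows = [('name1', 'age1'), ('name2', 'age2')]
records = rows_to_dicts(description, rows)
print(records[0]['s1'])  # prints name1
```

The same call works unchanged on the Impala output, because names without a dot are left as they are.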

5. Common problems

1. Error 1

building 'sasl.saslwrapper' extension

creating build/temp.linux-x86_64-2.7

creating build/temp.linux-x86_64-2.7/sasl

gcc -pthread -fno-strict-aliasing -g -O2 -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -Isasl -I/opt/cloudera/parcels/Anaconda/include/python2.7 -c sasl/saslwrapper.cpp -o build/temp.linux-x86_64-2.7/sasl/saslwrapper.o

unable to execute 'gcc': No such file or directory

error: command 'gcc' failed with exit status 1

----------------------------------------

Command "/opt/cloudera/parcels/Anaconda/bin/python -u -c "import setuptools, tokenize;__file__='/tmp/pip-build-kD6tvP/sasl/setup.py';f=getattr(tokenize, 'open', open)(__file__);code=f.read().replace('\r\n', '\n');f.close();exec(compile(code, __file__, 'exec'))" install --record /tmp/pip-WJFNeG-record/install-record.txt --single-version-externally-managed --compile" failed with error code 1 in /tmp/pip-build-kD6tvP/sasl/

Solution:

[root@ip-172-31-22-86 ec2-user]# yum -y install gcc

[root@ip-172-31-22-86 ec2-user]# yum install gcc-c++

2. Error 2

gcc -pthread -fno-strict-aliasing -g -O2 -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -Isasl -I/opt/cloudera/parcels/Anaconda/include/python2.7 -c sasl/saslwrapper.cpp -o build/temp.linux-x86_64-2.7/sasl/saslwrapper.o

cc1plus: warning: command line option ‘-Wstrict-prototypes’ is valid for C/ObjC but not for C++ [enabled by default]

In file included from sasl/saslwrapper.cpp:254:0:

sasl/saslwrapper.h:22:23: fatal error: sasl/sasl.h: No such file or directory

#include <sasl/sasl.h>

^

compilation terminated.

error: command 'gcc' failed with exit status 1

Solution:

[root@ip-172-31-22-86 ec2-user]# yum -y install python-devel.x86_64 cyrus-sasl-devel.x86_64
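Both errors above occur while the sasl package is being compiled, so after applying the fixes it is worth checking that every dependency actually imports. The following is a minimal sketch; `MODULES` and `missing_modules` are hypothetical names, and the list uses import names (impyla is imported as `impala`).

```python
import importlib

# Import names of the packages installed in section 2.
MODULES = ['six', 'bitarray', 'thrift', 'thrift_sasl', 'sasl', 'impala']


def missing_modules(names):
    """Return the names from `names` that cannot be imported."""
    missing = []
    for name in names:
        try:
            importlib.import_module(name)
        except ImportError:
            missing.append(name)
    return missing
```

Running `print(missing_modules(MODULES))` in the Anaconda Python used for the scripts should print an empty list once all packages are installed correctly.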
