Hive数据分析实战演练

Posted 程序猿的修身养性

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了Hive数据分析实战演练相关的知识,希望对你有一定的参考价值。

1、准备工作

Hive的底层是基于MapReduce分布式计算和HDFS分布式存储,因此,在使用Hive进行数据操作前,需要先启动Hadoop。如果事先已经搭建好了伪分布式环境的Hadoop,运行命令: start-all.sh,等待Hadoop启动完成即可。

使用Hive进行数据分析操作,必然需要安装和配置Hive数据仓库工具,这里就不介绍其安装和配置了,具体内容可以参考前面相关文章。本文基于Hive的本地模式(元数据信息保存到第三方mysql数据库中)进行操作,运行命令:hive,等待Hive启动完成。如下图所示,这样便可以在Hive的shell命令行窗口中进行数据分析操作。

在正式开始操作Hive进行数据分析之前,先介绍几个Hive的基本命令。

创建数据库

create database mytest;

切换到指定数据库

use mytest;

查看指定的数据库信息

describe database mytest;

查看指定数据表的详细信息

desc formatted special1;

2、SUM、AVG、MIN、MAX函数

A、数据准备

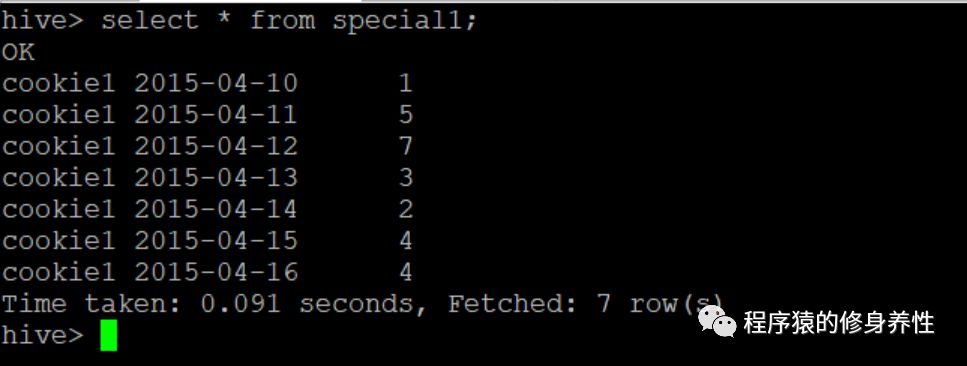

创建文件special1,往该文件中输入相应的测试数据,如下图所示:

然后,将special1文件拷贝到指定目录下,这里使用的目录是:/root/temp,然后运行如下命令创建对应的外部表:

CREATE EXTERNAL TABLE special1 (

cookieid string,createtime string,pv INT)

ROW FORMAT DELIMITED FIELDS TERMINATED BY ','

stored as textfile location '/root/temp/special1/';

最后,运行如下命令将本地文件special1中的数据导入表special1中:

load data local inpath '/root/temp/special1' into table special1;

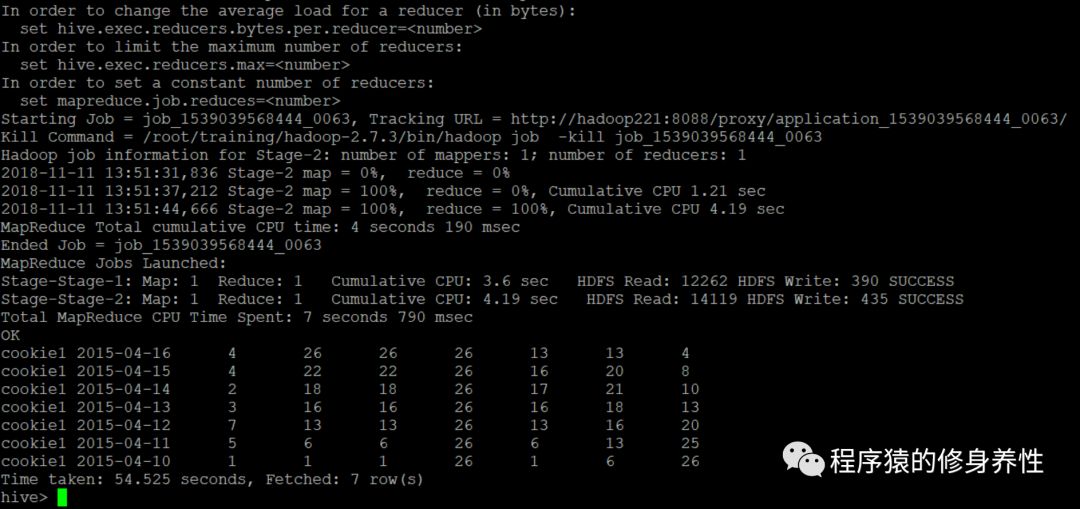

B、SUM函数使用

功能:实现分组内所有和连续累积的统计,注意,结果和ORDER BY相关,默认为升序。命令如下:

SELECT cookieid,createtime,pv,

SUM(pv) OVER(PARTITION BY cookieid ORDER BY createtime) AS pv1,--默认为从起点到当前行

SUM(pv) OVER(PARTITION BY cookieid ORDER BY createtime ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW) AS pv2,--从起点到当前行,结果同pv1

SUM(pv) OVER(PARTITION BY cookieid) AS pv3,--分组内所有行,会使得最终结果降序排列

SUM(pv) OVER(PARTITION BY cookieid ORDER BY createtime ROWS BETWEEN 3 PRECEDING AND CURRENT ROW) AS pv4,--当前行+往前3行

SUM(pv) OVER(PARTITION BY cookieid ORDER BY createtime ROWS BETWEEN 3 PRECEDING AND 1 FOLLOWING) AS pv5,--当前行+往前3行+往后1行

SUM(pv) OVER(PARTITION BY cookieid ORDER BY createtime ROWS BETWEEN CURRENT ROW AND UNBOUNDED FOLLOWING) AS pv6--当前行+往后所有行

FROM special1;

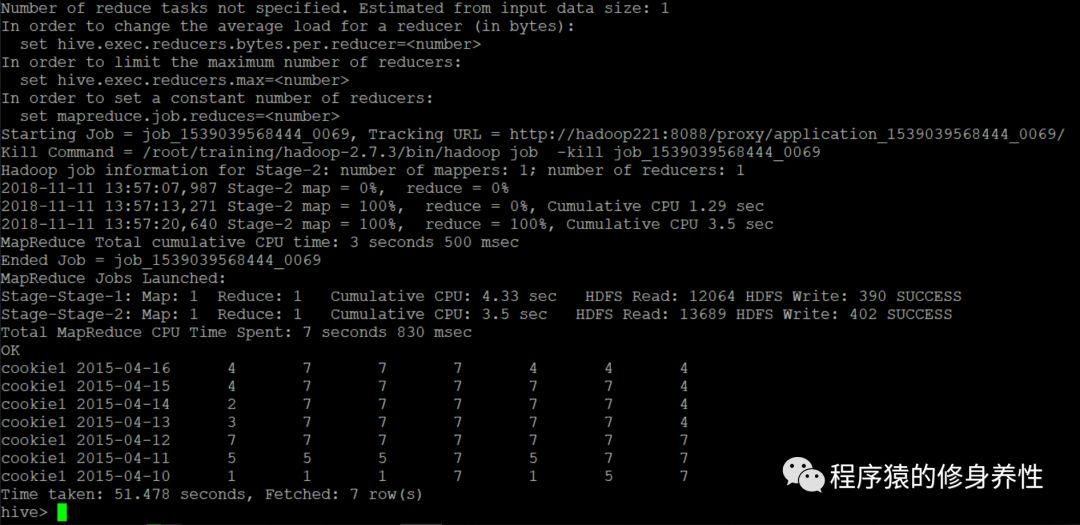

运行结果如下图所示:

解析:

pv1: 分组内从起点到当前行的pv累积,如11号的pv1等于10号的pv值加上11号的pv值, 12号的pv1等于10号的pv值加上11号的pv值加上12号的pv值;

pv2: 同pv1的计算方法;

pv3: 分组内(cookie1)所有的pv值累加;

pv4: 分组内当前行+往前3行,如11号=10号+11号,12号=10号+11号+12号,13号=10号+11号+12号+13号,14号=11号+12号+13号+14号;

pv5: 分组内当前行+往前3行+往后1行,如14号=11号+12号+13号+14号+15号=5+7+3+2+4=21;

pv6: 分组内当前行+往后所有行,如13号=13号+14号+15号+16号=3+2+4+4=13,14号=14号+15号+16号=2+4+4=10;

如果不指定ROWS BETWEEN,默认为从起点到当前行;

如果不指定ORDER BY,则将分组内所有值累加;

关键是理解ROWS BETWEEN含义,也叫做WINDOW子句:

PRECEDING:往前,FOLLOWING:往后,CURRENT ROW:当前行

UNBOUNDED:起点,UNBOUNDED PRECEDING 表示从前面的起点, UNBOUNDED FOLLOWING:表示到后面的终点

——其他AVG,MIN,MAX函数,和SUM函数的用法一样。

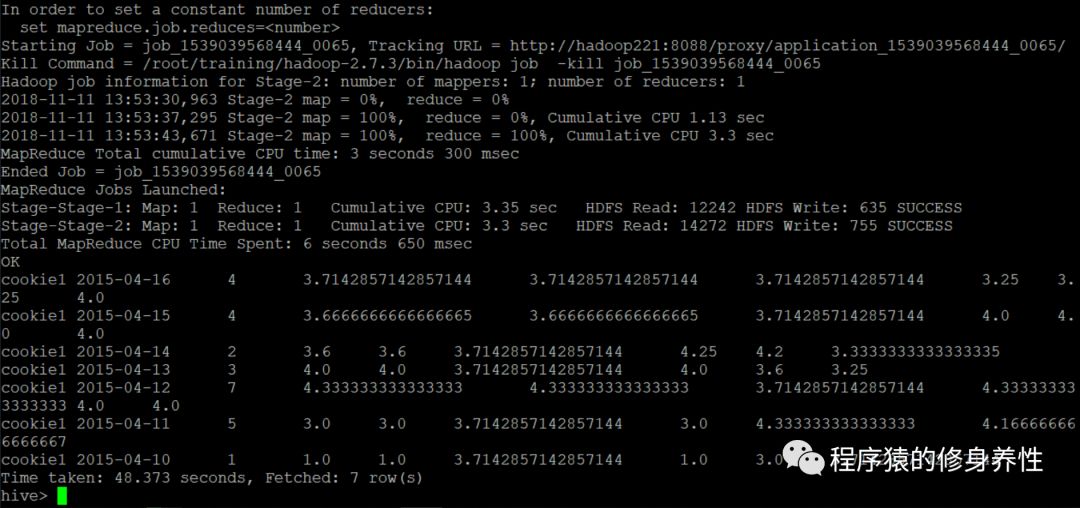

C、AVG函数使用

功能:实现求分组内指定数量行数据的平均值。命令如下:

SELECT cookieid,createtime,pv,

AVG(pv) OVER(PARTITION BY cookieid ORDER BY createtime) AS pv1,--默认为从起点到当前行

AVG(pv) OVER(PARTITION BY cookieid ORDER BY createtime ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW) AS pv2,--从起点到当前行,结果同pv1

AVG(pv) OVER(PARTITION BY cookieid) AS pv3,--分组内所有行,会使得最终结果降序排列

AVG(pv) OVER(PARTITION BY cookieid ORDER BY createtime ROWS BETWEEN 3 PRECEDING AND CURRENT ROW) AS pv4,--当前行+往前3行

AVG(pv) OVER(PARTITION BY cookieid ORDER BY createtime ROWS BETWEEN 3 PRECEDING AND 1 FOLLOWING) AS pv5,--当前行+往前3行+往后1行

AVG(pv) OVER(PARTITION BY cookieid ORDER BY createtime ROWS BETWEEN CURRENT ROW AND UNBOUNDED FOLLOWING) AS pv6--当前行+往后所有行

FROM special1;

运行结果如下图所示:

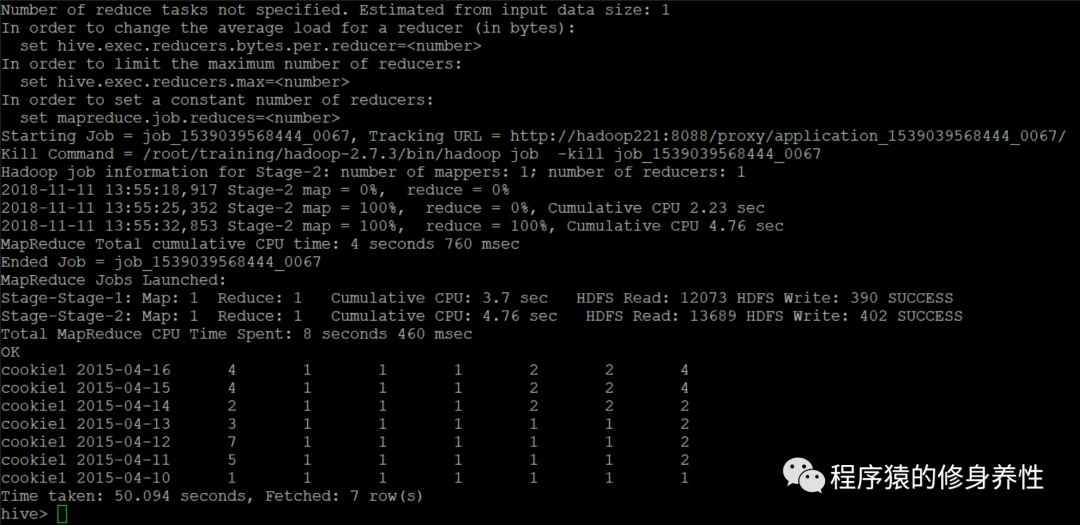

D、MIN函数使用

功能:实现求分组内指定数量行数据的最小值。命令如下:

SELECT cookieid,createtime,pv,

MIN(pv) OVER(PARTITION BY cookieid ORDER BY createtime) AS pv1,--默认为从起点到当前行

MIN(pv) OVER(PARTITION BY cookieid ORDER BY createtime ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW) AS pv2,--从起点到当前行,结果同pv1

MIN(pv) OVER(PARTITION BY cookieid) AS pv3,--分组内所有行,会使得最终结果降序排列

MIN(pv) OVER(PARTITION BY cookieid ORDER BY createtime ROWS BETWEEN 3 PRECEDING AND CURRENT ROW) AS pv4,--当前行+往前3行

MIN(pv) OVER(PARTITION BY cookieid ORDER BY createtime ROWS BETWEEN 3 PRECEDING AND 1 FOLLOWING) AS pv5,--当前行+往前3行+往后1行

MIN(pv) OVER(PARTITION BY cookieid ORDER BY createtime ROWS BETWEEN CURRENT ROW AND UNBOUNDED FOLLOWING) AS pv6--当前行+往后所有行

FROM special1;

运行结果如下图所示:

E、MAX函数使用

功能:实现求分组内指定数量行数据的最大值。命令如下:

SELECT cookieid,createtime,pv,

MAX(pv) OVER(PARTITION BY cookieid ORDER BY createtime) AS pv1,--默认为从起点到当前行

MAX(pv) OVER(PARTITION BY cookieid ORDER BY createtime ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW) AS pv2,--从起点到当前行,结果同pv1

MAX(pv) OVER(PARTITION BY cookieid) AS pv3,--分组内所有行

MAX(pv) OVER(PARTITION BY cookieid ORDER BY createtime ROWS BETWEEN 3 PRECEDING AND CURRENT ROW) AS pv4,--当前行+往前3行

MAX(pv) OVER(PARTITION BY cookieid ORDER BY createtime ROWS BETWEEN 3 PRECEDING AND 1 FOLLOWING) AS pv5,--当前行+往前3行+往后1行

MAX(pv) OVER(PARTITION BY cookieid ORDER BY createtime ROWS BETWEEN CURRENT ROW AND UNBOUNDED FOLLOWING) AS pv6--当前行+往后所有行

FROM special1;

运行结果如下图所示:

3、NTILE、ROW_NUMBER、RANK,DENSE_RANK函数

A、数据准备

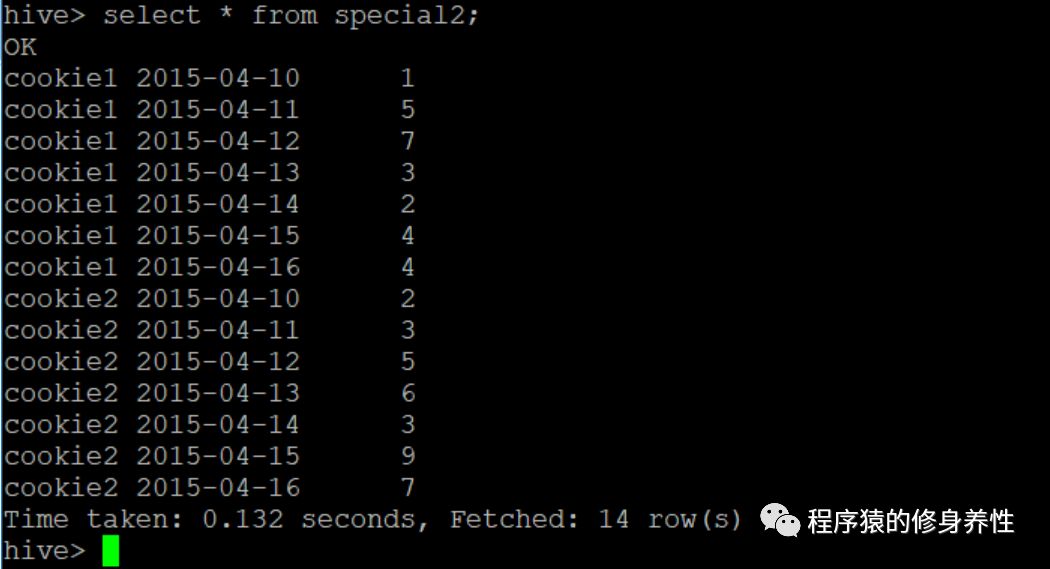

创建文件special2,往该文件中输入相应的测试数据,如下图所示:

然后,将special2文件拷贝到指定目录下,这里使用的目录是:/root/temp,然后运行如下命令创建对应的外部表:

CREATE EXTERNAL TABLE special2 (

cookieid string,createtime string,pv INT)

ROW FORMAT DELIMITED FIELDS TERMINATED BY ','

stored as textfile location '/root/temp/special2/';

最后,运行如下命令将本地文件special2中的数据导入表special2中:

load data local inpath '/root/temp/special2' into table special2;

B、NTILE函数使用

功能:NTILE(n),用于将分组数据按照顺序切分成n片,并返回当前切片值。

NTILE不支持ROWS BETWEEN,比如 NTILE(2) OVER(PARTITION BY cookieid ORDER BY createtime ROWS BETWEEN 3 PRECEDING AND CURRENT ROW)。如果切片不均匀,默认增加第一个切片的分布。命令如下:

SELECT cookieid,createtime,pv,

NTILE(2) OVER(PARTITION BY cookieid ORDER BY createtime) AS rn1,--将分组内数据分成2片

NTILE(3) OVER(PARTITION BY cookieid ORDER BY createtime) AS rn2,--将分组内数据分成3片

NTILE(4) OVER(ORDER BY createtime) AS rn3--将所有数据分成4片

FROM special2

ORDER BY cookieid,createtime;

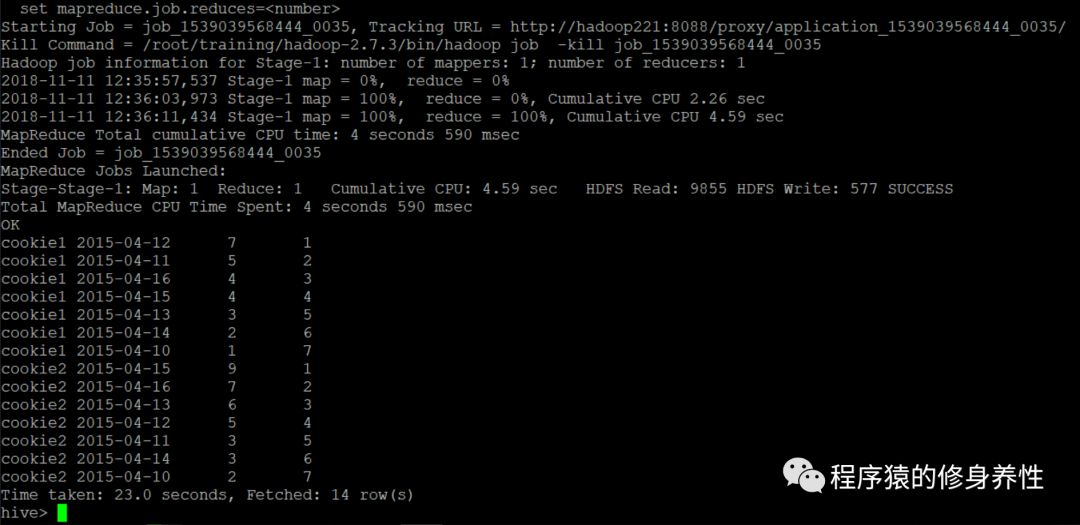

运行结果如下图所示:

再比如,统计一个cookie,pv数最多的前1/3数量的天,命令如下:

SELECT cookieid,createtime,pv,

NTILE(3) OVER(PARTITION BY cookieid ORDER BY pv DESC) AS rn

FROM special2;

运行结果如下图所示:

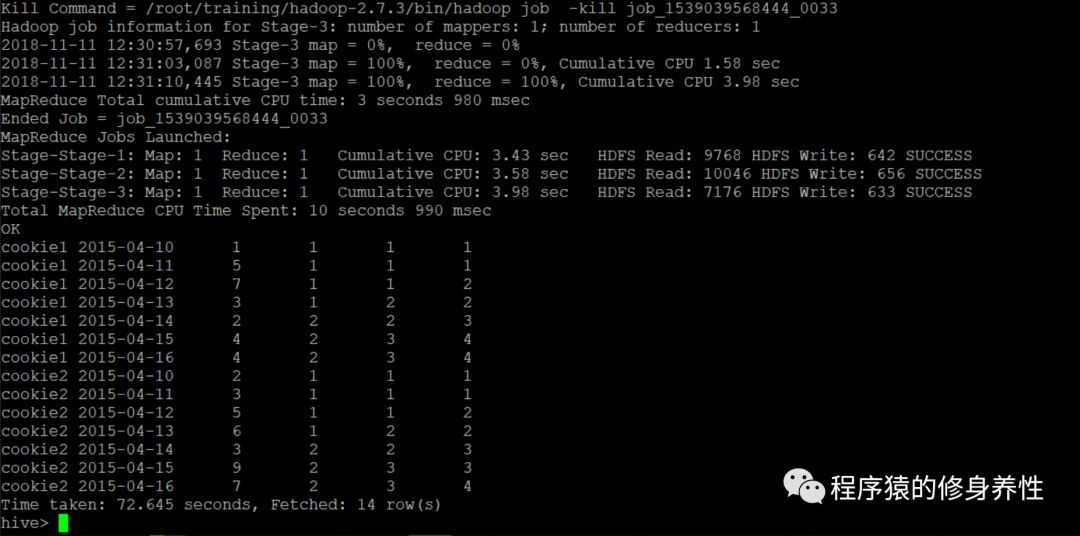

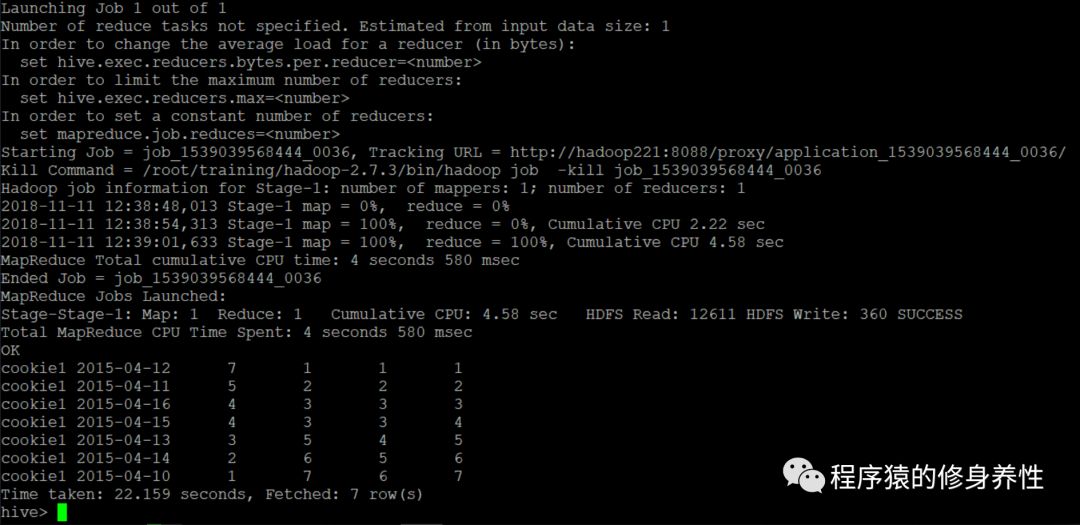

C、ROW_NUMBER函数使用

功能:从1开始,按照顺序,生成分组内记录的序列。比如,按照pv降序排列,生成分组内每天的pv名次。ROW_NUMBER()的应用场景非常多,再比如,获取分组内排序第一的记录;获取一个session中的第一条refer等。命令如下:

SELECT cookieid,createtime,pv,

ROW_NUMBER() OVER(PARTITION BY cookieid ORDER BY pv desc) AS rn

FROM special2;

运行结果如下图所示:

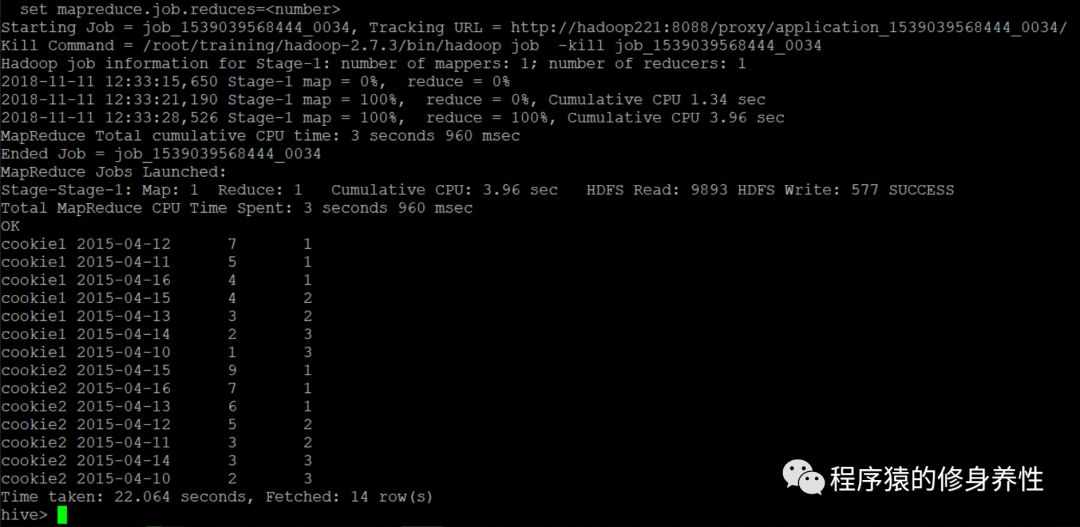

D、RANK和DENSE_RANK函数使用

功能:RANK()生成数据项在分组中的排名,排名相等会在名次中留下空缺位;DENSE_RANK()生成数据项在分组中的排名,排名相等不会在名次中留下空缺位。命令如下:

SELECT cookieid,createtime,pv,

RANK() OVER(PARTITION BY cookieid ORDER BY pv desc) AS rn1,

DENSE_RANK() OVER(PARTITION BY cookieid ORDER BY pv desc) AS rn2,

ROW_NUMBER() OVER(PARTITION BY cookieid ORDER BY pv DESC) AS rn3

FROM special2

WHERE cookieid = 'cookie1';

运行结果如下图所示:

4、CUME_DIST、PERCENT_RANK函数

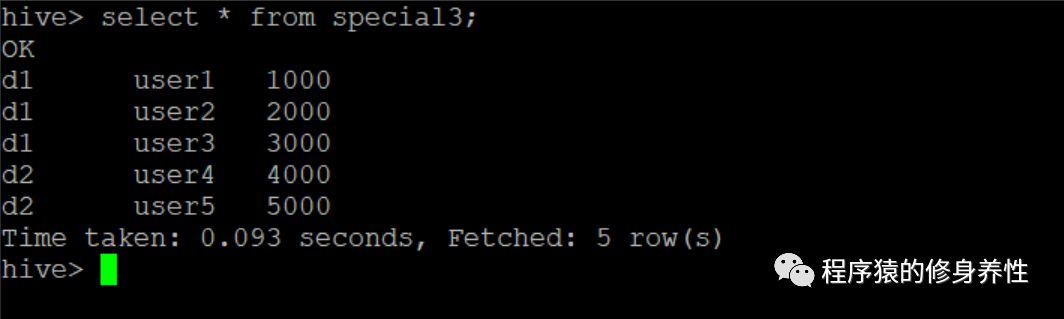

A、数据准备

创建文件special3,往该文件中输入相应的测试数据,如下图所示:

然后,将special3文件拷贝到指定目录下,这里使用的目录是:/root/temp,然后运行如下命令创建对应的外部表:

CREATE EXTERNAL TABLE special3 (

dept STRING,userid string,sal INT)

ROW FORMAT DELIMITED FIELDS TERMINATED BY ','

stored as textfile location '/root/temp/special3/';

最后,运行如下命令将本地文件special3中的数据导入表special3中:

load data local inpath '/root/temp/special3' into table special3;

B、CUME_DIST函数使用

功能:实现求小于等于当前值的行数/分组内总行数,比如,统计小于等于当前薪水的人数,所占总人数的比例。命令如下:

SELECT dept,userid,sal,

CUME_DIST() OVER(ORDER BY sal) AS rn1,

CUME_DIST() OVER(PARTITION BY dept ORDER BY sal) AS rn2

FROM special3;

运行结果如下图所示:

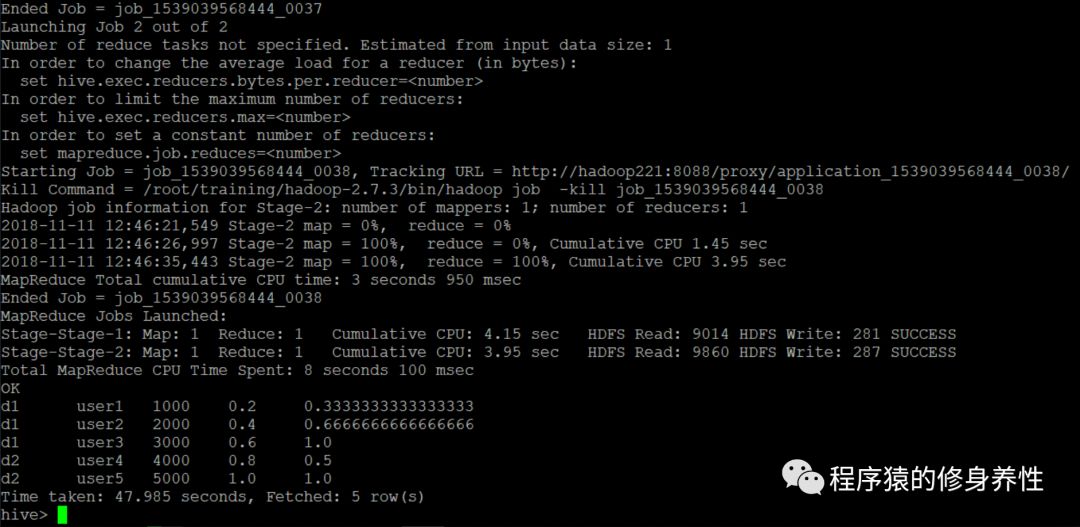

C、PERCENT_RANK函数使用

功能:实现求分组内当前行的RANK值-1/分组内总行数-1的比值,该函数的功能比较特殊,应用场景不太了解。命令如下:

SELECT dept,userid,sal,

PERCENT_RANK() OVER(ORDER BY sal) AS rn1,--分组内

RANK() OVER(ORDER BY sal) AS rn11,--分组内RANK值

SUM(1) OVER(PARTITION BY NULL) AS rn12,--分组内总行数

PERCENT_RANK() OVER(PARTITION BY dept ORDER BY sal) AS rn2

FROM special3;

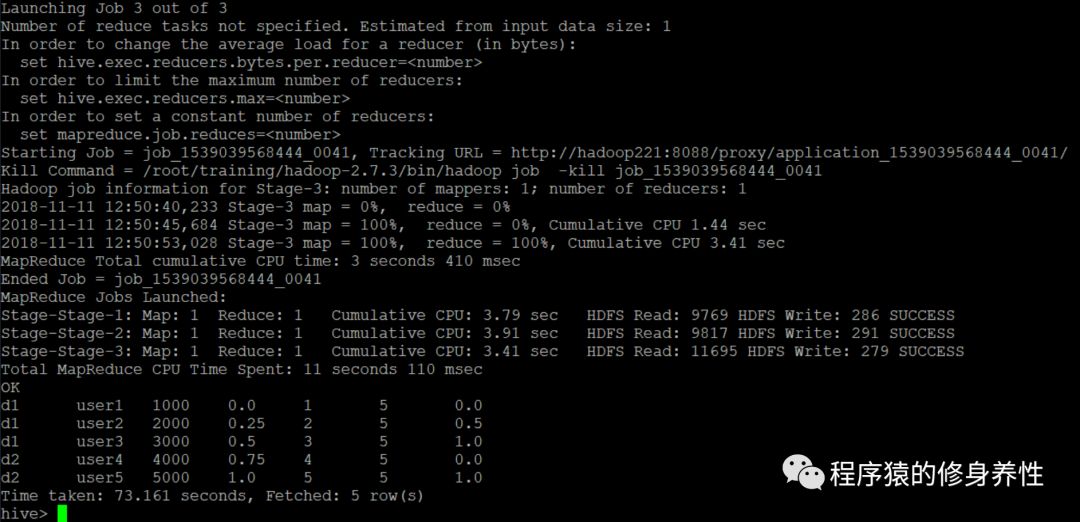

运行结果如下图所示:

5、LAG、LEAD、FIRST_VALUE、LAST_VALUE函数

A、数据准备

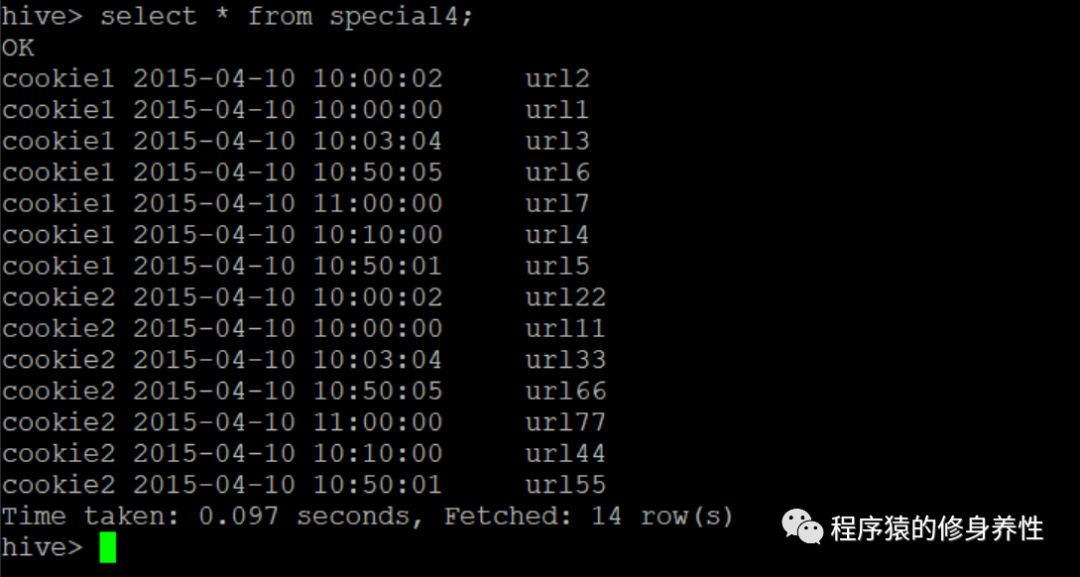

创建文件special4,往该文件中输入相应的测试数据,如下图所示:

然后,将special4文件拷贝到指定目录下,这里使用的目录是:/root/temp,然后运行如下命令创建对应的外部表:

CREATE EXTERNAL TABLE special4 (

cookieid string,createtime string,url STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY ','

stored as textfile location '/root/temp/special4/';

最后,运行如下命令将本地文件special4中的数据导入表special4中:

load data local inpath '/root/temp/special4' into table special4;

B、LAG函数使用

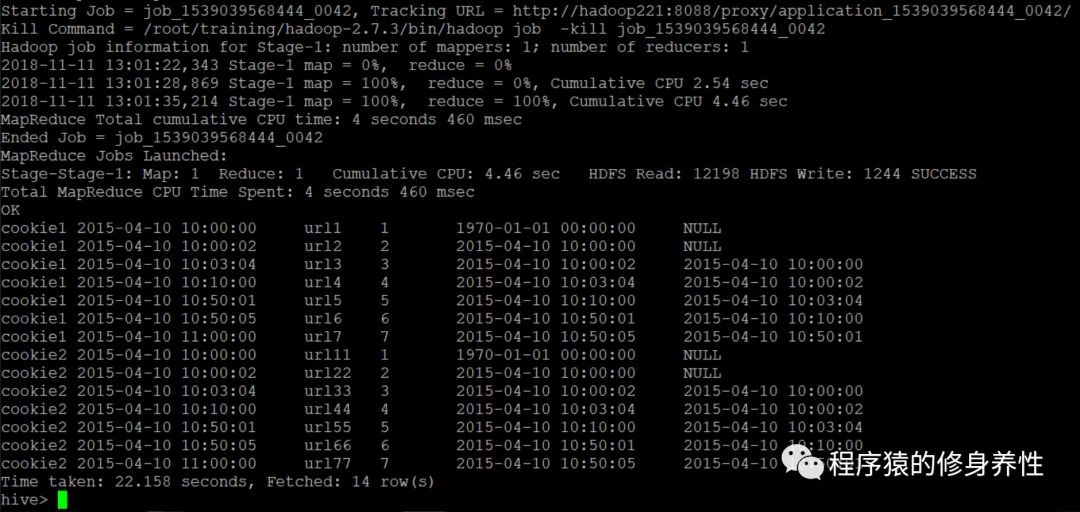

功能:LAG(col,n,DEFAULT) 用于统计窗口内往上第n行的值,第一个参数为列名,第二个参数为往上第n行(可选,默认为1),第三个参数为默认值(当往上第n行为NULL时候,取默认值,如不指定,则为NULL)。命令如下:

SELECT cookieid,createtime,url,

ROW_NUMBER() OVER(PARTITION BY cookieid ORDER BY createtime) AS rn,

LAG(createtime,1,'1970-01-01 00:00:00') OVER(PARTITION BY cookieid ORDER BY createtime) AS last_1_time,

LAG(createtime,2) OVER(PARTITION BY cookieid ORDER BY createtime) AS last_2_time

FROM special4;

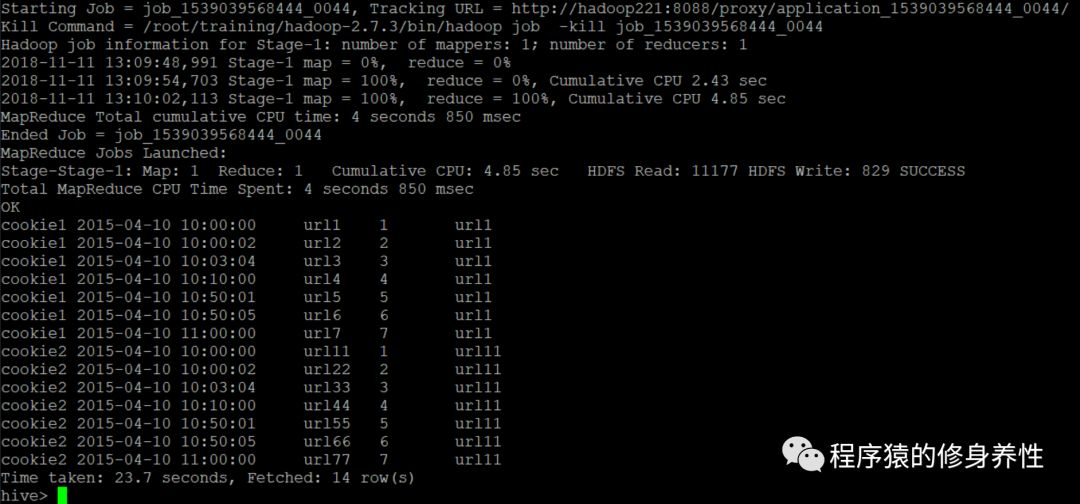

运行结果如下图所示:

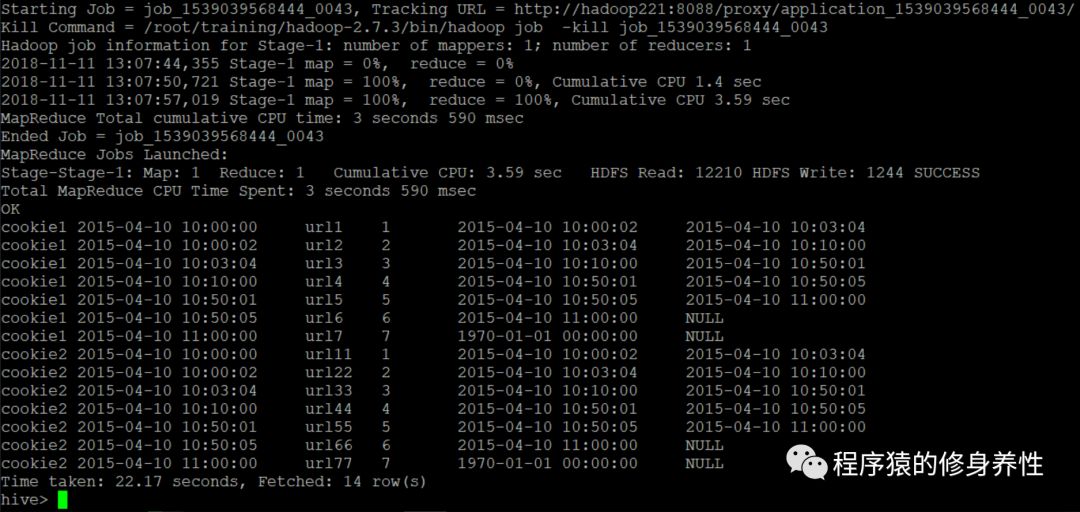

C、LEAD函数使用

功能:与LAG相反,LEAD(col,n,DEFAULT)用于统计窗口内往下第n行的值,第一个参数为列名,第二个参数为往下第n行(可选,默认为1),第三个参数为默认值(当往下第n行为NULL时候,取默认值,如不指定,则为NULL)。命令如下:

SELECT cookieid,createtime,url,

ROW_NUMBER() OVER(PARTITION BY cookieid ORDER BY createtime) AS rn,

LEAD(createtime,1,'1970-01-01 00:00:00') OVER(PARTITION BY cookieid ORDER BY createtime) AS next_1_time,

LEAD(createtime,2) OVER(PARTITION BY cookieid ORDER BY createtime) AS next_2_time

FROM special4;

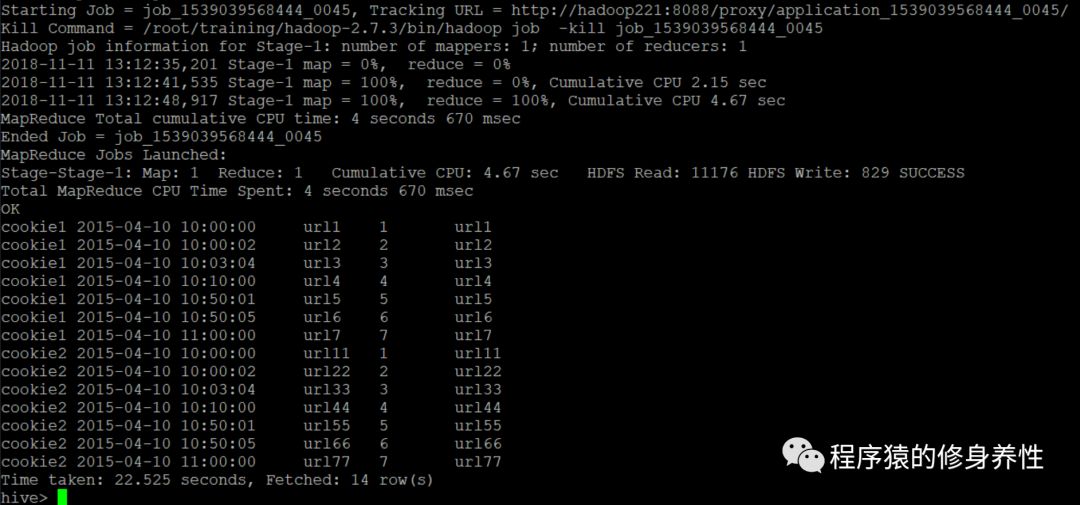

运行结果如下图所示:

D、FIRST_VALUE函数使用

功能:实现求分组内排序后,截止到当前行,第一个值。命令如下:

SELECT cookieid,createtime,url,

ROW_NUMBER() OVER(PARTITION BY cookieid ORDER BY createtime) AS rn,

FIRST_VALUE(url) OVER(PARTITION BY cookieid ORDER BY createtime) AS first1

FROM special4;

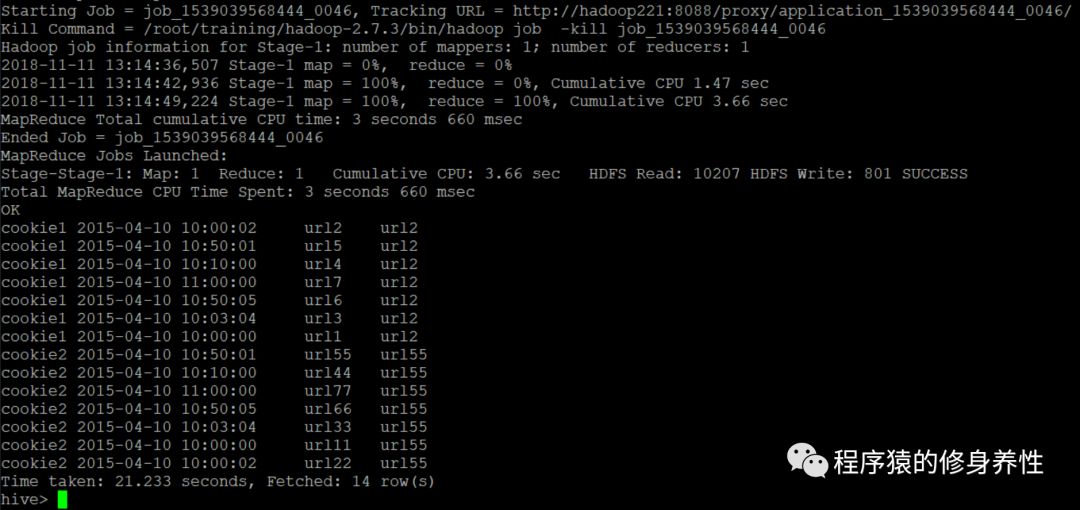

运行结果如下图所示:

E、LAST_VALUE函数使用

功能:实现求分组内排序后,截止到当前行,最后一个值。命令如下:

SELECT cookieid,createtime,url,

ROW_NUMBER() OVER(PARTITION BY cookieid ORDER BY createtime) AS rn,

LAST_VALUE(url) OVER(PARTITION BY cookieid ORDER BY createtime) AS last1

FROM special4;

运行结果如下图所示:

如果不指定ORDER BY,则默认按照记录在文件中的偏移量进行排序。命令如下:

SELECT cookieid,createtime,url,

FIRST_VALUE(url) OVER(PARTITION BY cookieid) AS first2

FROM special4;

运行结果如下图所示:

如果想要取分组内排序后最后一个值,则需要变通一下。命令如下:

SELECT cookieid,createtime,url,

ROW_NUMBER() OVER(PARTITION BY cookieid ORDER BY createtime) AS rn,

LAST_VALUE(url) OVER(PARTITION BY cookieid ORDER BY createtime) AS last1,

FIRST_VALUE(url) OVER(PARTITION BY cookieid ORDER BY createtime DESC) AS last2

FROM special4

ORDER BY cookieid,createtime;

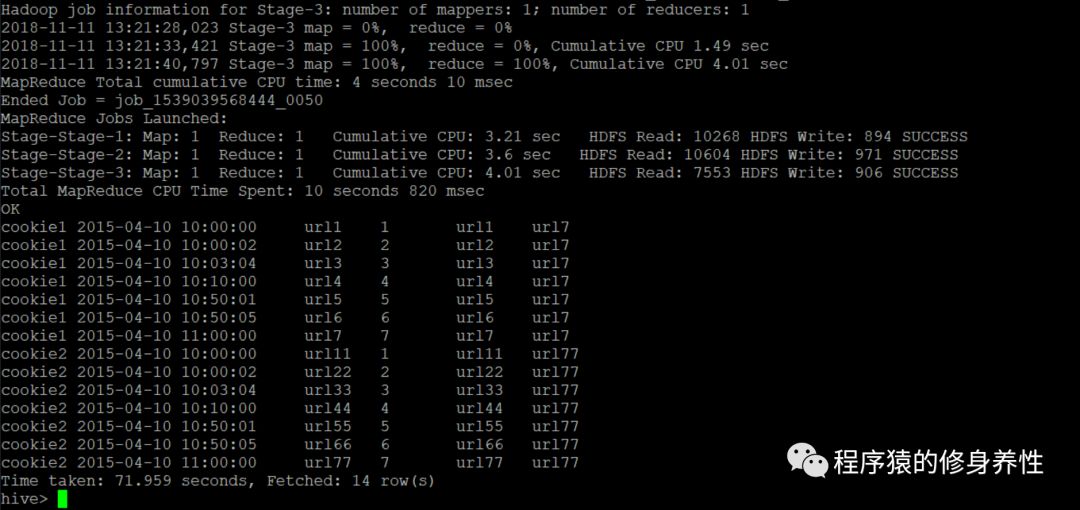

运行结果如下图所示:

6、GROUPING SETS、GROUPING__ID、CUBE、ROOUP函数

A、数据准备

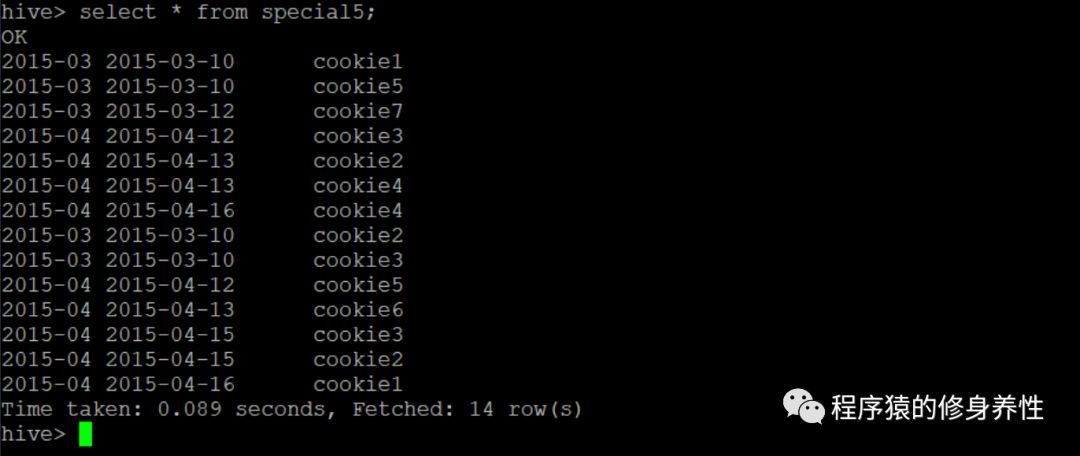

创建文件special5,往该文件中输入相应的测试数据,如下图所示:

然后,将special5文件拷贝到指定目录下,这里使用的目录是:/root/temp,然后运行如下命令创建对应的外部表:

CREATE EXTERNAL TABLE special5 (

month STRING,day STRING,cookieid STRING )

ROW FORMAT DELIMITED FIELDS TERMINATED BY ','

stored as textfile location '/root/temp/special5/';

最后,运行如下命令将本地文件special5中的数据导入表special5中:

load data local inpath '/root/temp/special5' into table special5;

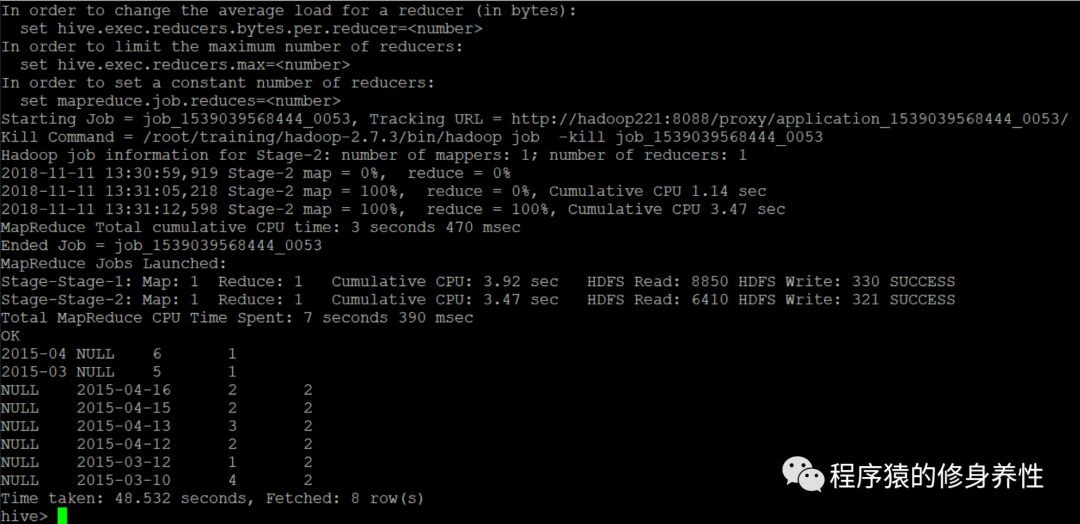

B、GROUPINT SETS函数使用

功能:在一个GROUP BY查询中,根据不同的维度组合进行聚合,等价于将不同维度的GROUP BY结果集进行UNION ALL。命令如下:

SELECT month,day,

COUNT(DISTINCT cookieid) AS uv,

GROUPING__ID

FROM special5

GROUP BY month,day

GROUPING SETS (month,day)

ORDER BY GROUPING__ID;

运行结果如下图所示:

上面的语句等价于:

SELECT month,NULL,COUNT(DISTINCT cookieid) AS uv,1 AS GROUPING__ID FROM special5 GROUP BY month

UNION ALL

SELECT NULL,day,COUNT(DISTINCT cookieid) AS uv,2 AS GROUPING__ID FROM special5 GROUP BY day

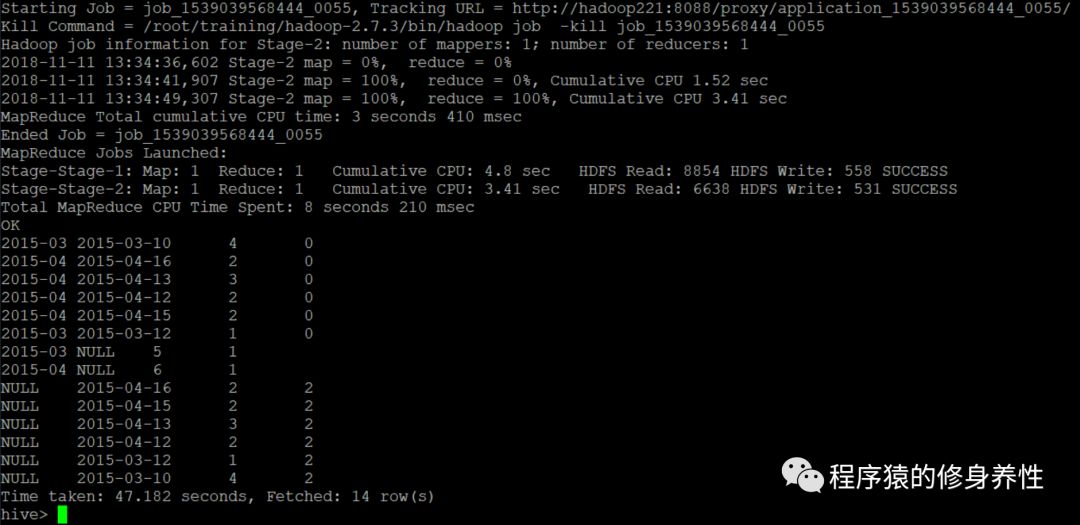

再比如下述命令:

SELECT month,day,

COUNT(DISTINCT cookieid) AS uv,

GROUPING__ID

FROM special5

GROUP BY month,day

GROUPING SETS (month,day,(month,day))

ORDER BY GROUPING__ID;

运行结果如下图所示:

上述命令等价于:

SELECT month,NULL,COUNT(DISTINCT cookieid) AS uv,1 AS GROUPING__ID FROM special5 GROUP BY month

UNION ALL

SELECT NULL,day,COUNT(DISTINCT cookieid) AS uv,2 AS GROUPING__ID FROM special5 GROUP BY day

UNION ALL

SELECT month,day,COUNT(DISTINCT cookieid) AS uv,3 AS GROUPING__ID FROM special5 GROUP BY month,day

其中的GROUPING__ID,表示结果属于哪一个分组集合。

C、CUBE函数使用

功能:根据GROUP BY的维度的所有组合进行聚合。命令如下:

SELECT month,day,

COUNT(DISTINCT cookieid) AS uv,

GROUPING__ID

FROM special5

GROUP BY month,day

WITH CUBE

ORDER BY GROUPING__ID;

运行结果如下图所示:

上述命令等价于:

SELECT NULL,NULL,COUNT(DISTINCT cookieid) AS uv,0 AS GROUPING__ID FROM special5

UNION ALL

SELECT month,NULL,COUNT(DISTINCT cookieid) AS uv,1 AS GROUPING__ID FROM special5 GROUP BY month

UNION ALL

SELECT NULL,day,COUNT(DISTINCT cookieid) AS uv,2 AS GROUPING__ID FROM special5 GROUP BY day

UNION ALL

SELECT month,day,COUNT(DISTINCT cookieid) AS uv,3 AS GROUPING__ID FROM special5 GROUP BY month,day

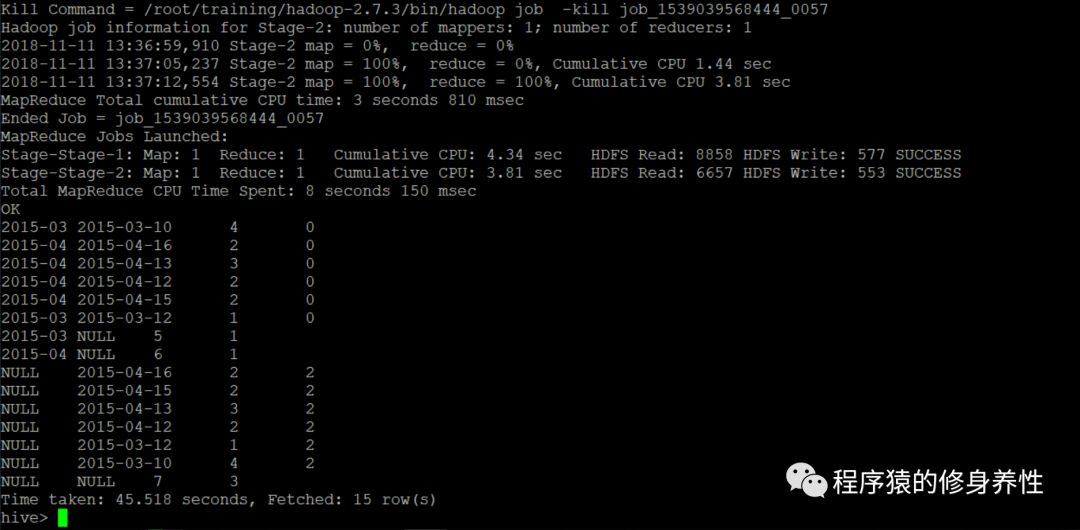

D、ROLLUP函数使用

功能:是CUBE的子集,以最左侧的维度为主,从该维度进行层级聚合。命令如下:

SELECT month,day,

COUNT(DISTINCT cookieid) AS uv,

GROUPING__ID

FROM special5

GROUP BY month,day

WITH ROLLUP

ORDER BY GROUPING__ID;

运行结果如下图所示:

还可以实现这样的上钻过程:月天的uv->月的uv->总uv,把month和day调换顺序,则以day维度进行层级聚合。命令如下:

SELECT day,month,

COUNT(DISTINCT cookieid) AS uv,

GROUPING__ID

FROM special5

GROUP BY day,month

WITH ROLLUP

ORDER BY GROUPING__ID;

运行结果如下图所示:

以上是关于Hive数据分析实战演练的主要内容,如果未能解决你的问题,请参考以下文章