Tools for Visualizing Neural Network Architectures

Posted by 自动化电气系统


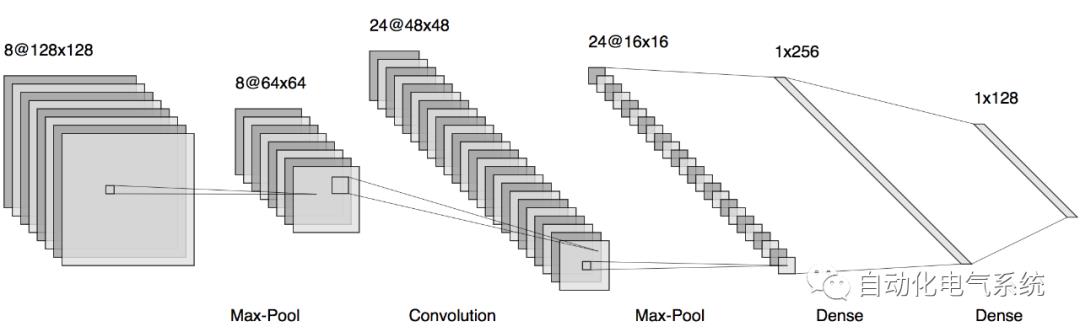

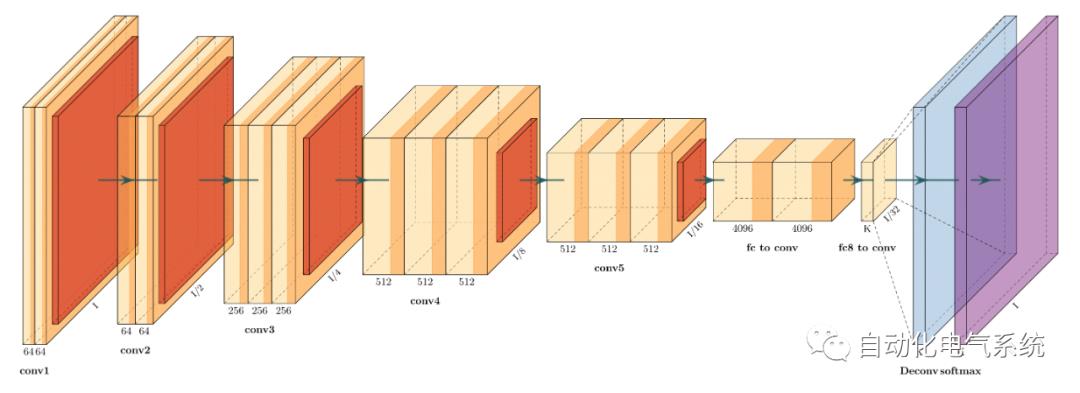

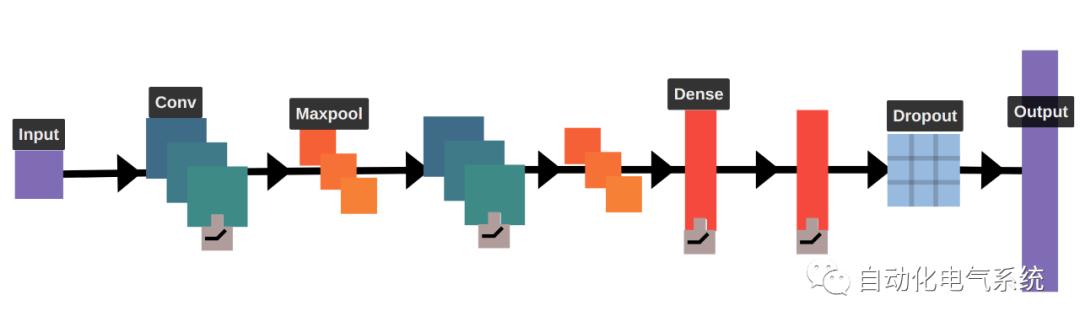

draw_convnet: a Python script for illustrating convolutional neural networks (ConvNets)

https://github.com/gwding/draw_convnet.git

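Using the code

You don't need to cite anything to use the code.

If you are not facing space constraints and it does not break the flow of the paper, you may consider adding something like "This figure is generated by adapting the code from https://github.com/gwding/draw_convnet" (maybe in a footnote).

FYI, I originally used the code to generate the ConvNet figure in the paper "Automatic moth detection from trap images for pest management".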
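ConvNet diagrams of this kind usually annotate each stage with its feature-map shape. A minimal sketch for computing such labels with the standard output-size formula floor((n + 2p - k) / s) + 1; the layer tuples and the "C@HxW" label format are assumptions for illustration, not part of draw_convnet's code.

```python
def conv_output_size(size, kernel, stride=1, pad=0):
    """Spatial output size of a convolution or pooling window (floor division)."""
    return (size + 2 * pad - kernel) // stride + 1


def feature_map_labels(in_channels, size, layers):
    """Return 'C@HxW' labels for a square input passed through a layer list.

    Each layer is a hypothetical tuple (out_channels, kernel, stride, pad);
    out_channels=None means the layer (e.g. pooling) keeps the channel count.
    """
    labels = [f"{in_channels}@{size}x{size}"]
    channels = in_channels
    for out_channels, kernel, stride, pad in layers:
        size = conv_output_size(size, kernel, stride, pad)
        channels = channels if out_channels is None else out_channels
        labels.append(f"{channels}@{size}x{size}")
    return labels


# Hypothetical ConvNet: 5x5 conv (pad 2), 2x2 max pool, 5x5 conv (pad 2), 2x2 max pool.
layers = [(32, 5, 1, 2), (None, 2, 2, 0), (64, 5, 1, 2), (None, 2, 2, 0)]
print(feature_map_labels(3, 64, layers))
# ['3@64x64', '32@64x64', '32@32x32', '64@32x32', '64@16x16']
```

The labels can then be placed next to the corresponding blocks in whatever drawing tool you use.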

NN-SVG

http://alexlenail.me/NN-SVG/LeNet.html

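PlotNeuralNet: LaTeX code for drawing neural networks for reports and presentations. Have a look at the examples to see how they are made. Also, let's consolidate any improvements you make and fix any bugs to help more people with this code.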

https://github.com/HarisIqbal88/PlotNeuralNet.git

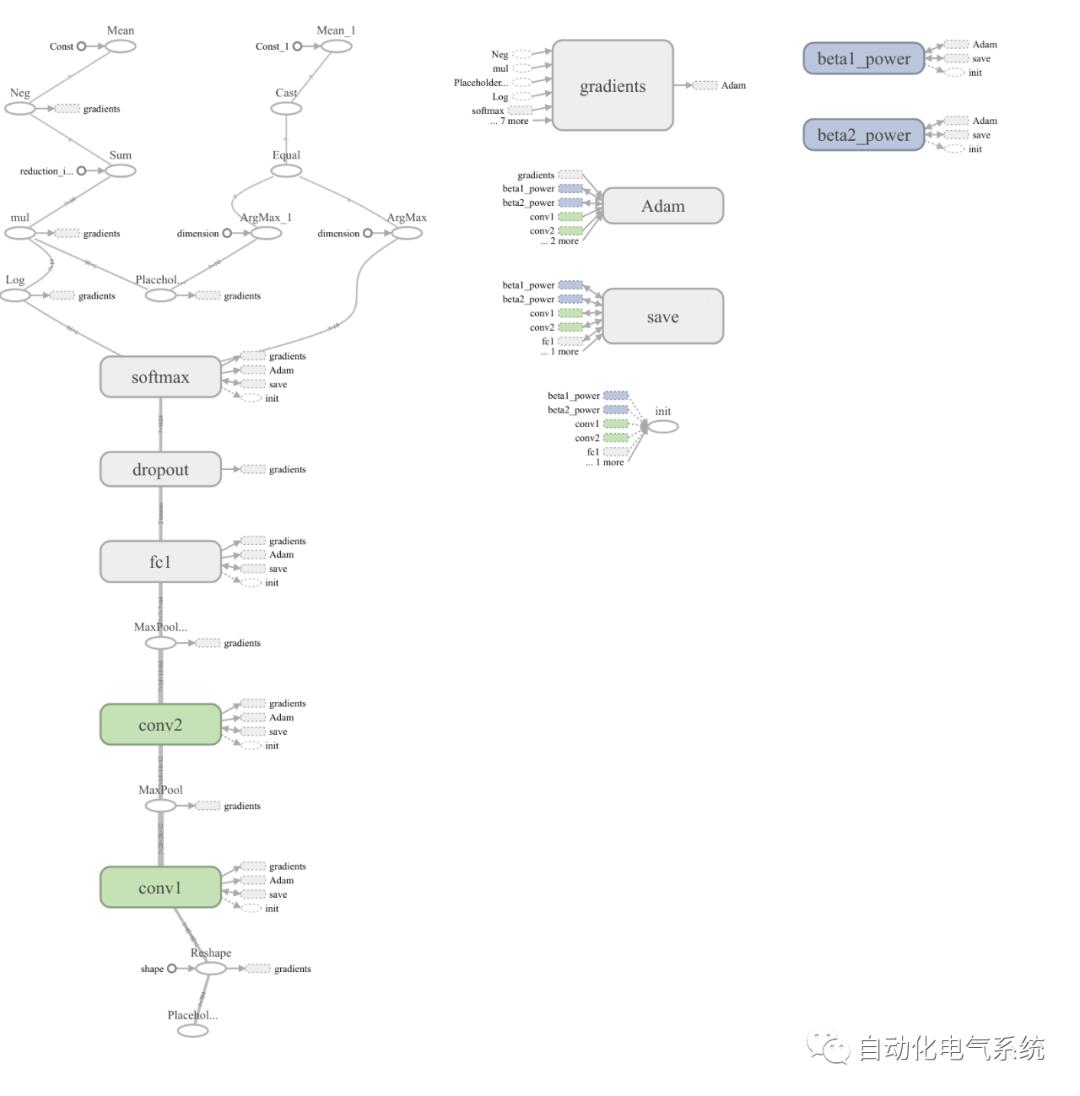
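PlotNeuralNet's approach is to generate LaTeX from Python. The same idea on a small scale, as a sketch: a hypothetical helper (not part of PlotNeuralNet, whose own API is different) that writes a standalone TikZ document for a fully connected network, given only the number of neurons per layer.

```python
def tikz_fully_connected(layer_sizes, layer_sep=2.5):
    """Return a standalone LaTeX/TikZ document for a fully connected network.

    layer_sizes gives the neuron count per layer, e.g. [4, 5, 1].
    """
    tallest = max(layer_sizes)
    lines = [
        r"\documentclass[tikz]{standalone}",
        r"\begin{document}",
        r"\begin{tikzpicture}[->, draw=black!50,",
        r"  neuron/.style={circle, fill=black!25, minimum size=17pt, inner sep=0pt}]",
    ]
    # One node per neuron; shorter layers are centred against the tallest one.
    for i, n in enumerate(layer_sizes):
        x = i * layer_sep
        for j in range(n):
            y = 0.0 - (tallest - n) / 2 - j
            lines.append(rf"  \node[neuron] (L{i}-{j}) at ({x:g}cm, {y:g}cm) {{}};")
    # Connect every neuron to every neuron of the next layer.
    for i in range(len(layer_sizes) - 1):
        for a in range(layer_sizes[i]):
            for b in range(layer_sizes[i + 1]):
                lines.append(rf"  \draw (L{i}-{a}) -- (L{i + 1}-{b});")
    lines += [r"\end{tikzpicture}", r"\end{document}"]
    return "\n".join(lines)


doc = tikz_fully_connected([4, 5, 1])
print(doc)
```

Saving the output to a .tex file and compiling it with pdflatex should give a 4-5-1 network diagram; generating the source keeps layer sizes in one place instead of hard-coding node coordinates.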

TensorBoard: TensorBoard's Graphs dashboard is a powerful tool for examining your TensorFlow model.

https://www.tensorflow.org/tensorboard/graphs

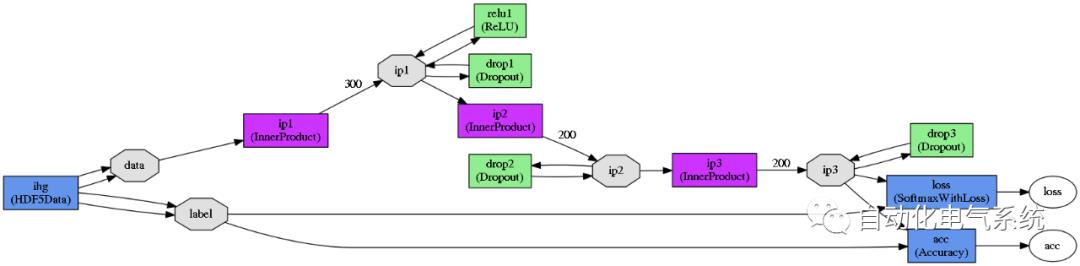

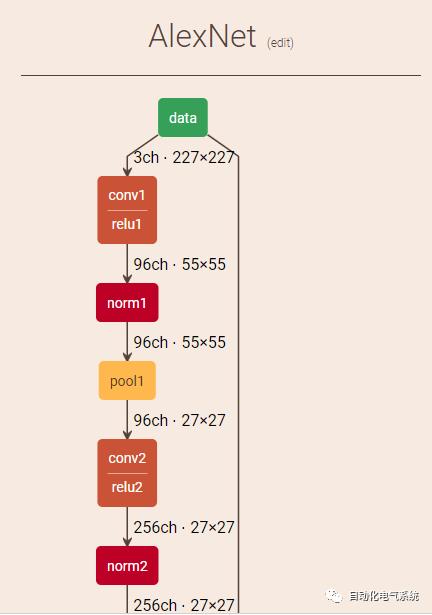

5. Caffe

https://github.com/BVLC/caffe/blob/master/python/caffe/draw.py

Using caffe/draw.py:

```python
"""
Caffe network visualization: draw the NetParameter protobuffer.


.. note::

    This requires pydot>=1.0.2, which is not included in requirements.txt since
    it requires graphviz and other prerequisites outside the scope of the
    Caffe.
"""

from caffe.proto import caffe_pb2

"""
pydot is not supported under python 3 and pydot2 doesn't work properly.
pydotplus works nicely (pip install pydotplus)
"""
try:
    # Try to load pydotplus
    import pydotplus as pydot
except ImportError:
    import pydot

# Internal layer and blob styles.
LAYER_STYLE_DEFAULT = {'shape': 'record',
                       'fillcolor': '#6495ED',
                       'style': 'filled'}
NEURON_LAYER_STYLE = {'shape': 'record',
                      'fillcolor': '#90EE90',
                      'style': 'filled'}
BLOB_STYLE = {'shape': 'octagon',
              'fillcolor': '#E0E0E0',
              'style': 'filled'}


def get_pooling_types_dict():
    """Get dictionary mapping pooling type number to type name
    """
    desc = caffe_pb2.PoolingParameter.PoolMethod.DESCRIPTOR
    d = {}
    for k, v in desc.values_by_name.items():
        d[v.number] = k
    return d


def get_edge_label(layer):
    """Define edge label based on layer type.
    """

    if layer.type == 'Data':
        edge_label = 'Batch ' + str(layer.data_param.batch_size)
    elif layer.type == 'Convolution' or layer.type == 'Deconvolution':
        edge_label = str(layer.convolution_param.num_output)
    elif layer.type == 'InnerProduct':
        edge_label = str(layer.inner_product_param.num_output)
    else:
        edge_label = '""'

    return edge_label


def get_layer_lr_mult(layer):
    """Get the learning rate multipliers.

    Get the learning rate multipliers for the given layer. Assumes a
    Convolution/Deconvolution/InnerProduct layer.

    Parameters
    ----------
    layer : caffe_pb2.LayerParameter
        A Convolution, Deconvolution, or InnerProduct layer.

    Returns
    -------
    learning_rates : tuple of floats
        the learning rate multipliers for the weights and biases.
    """
    if layer.type not in ['Convolution', 'Deconvolution', 'InnerProduct']:
        raise ValueError("%s layers do not have a "
                         "learning rate multiplier" % layer.type)

    if not hasattr(layer, 'param'):
        return (1.0, 1.0)

    params = getattr(layer, 'param')

    if len(params) == 0:
        return (1.0, 1.0)

    if len(params) == 1:
        lrm0 = getattr(params[0], 'lr_mult', 1.0)
        return (lrm0, 1.0)

    if len(params) == 2:
        lrm0, lrm1 = [getattr(p, 'lr_mult', 1.0) for p in params]
        return (lrm0, lrm1)

    raise ValueError("Could not parse the learning rate multiplier")


def get_layer_label(layer, rankdir, display_lrm=False):
    """Define node label based on layer type.

    Parameters
    ----------
    layer : caffe_pb2.LayerParameter
    rankdir : {'LR', 'TB', 'BT'}
        Direction of graph layout.
    display_lrm : boolean, optional
        If True include the learning rate multipliers in the label (default is
        False).

    Returns
    -------
    node_label : string
        A label for the current layer
    """

    if rankdir in ('TB', 'BT'):
        # If graph orientation is vertical, horizontal space is free and
        # vertical space is not; separate words with spaces
        separator = ' '
    else:
        # If graph orientation is horizontal, vertical space is free and
        # horizontal space is not; separate words with newlines
        separator = r'\n'

    # Initializes a list of descriptors that will be concatenated into the
    # `node_label`
    descriptors_list = []
    # Add the layer's name
    descriptors_list.append(layer.name)
    # Add layer's type
    if layer.type == 'Pooling':
        pooling_types_dict = get_pooling_types_dict()
        layer_type = '(%s %s)' % (layer.type,
                                  pooling_types_dict[layer.pooling_param.pool])
    else:
        layer_type = '(%s)' % layer.type
    descriptors_list.append(layer_type)

    # Describe parameters for spatial operation layers
    if layer.type in ['Convolution', 'Deconvolution', 'Pooling']:
        if layer.type == 'Pooling':
            kernel_size = layer.pooling_param.kernel_size
            stride = layer.pooling_param.stride
            padding = layer.pooling_param.pad
        else:
            kernel_size = layer.convolution_param.kernel_size[0] if \
                len(layer.convolution_param.kernel_size) else 1
            stride = layer.convolution_param.stride[0] if \
                len(layer.convolution_param.stride) else 1
            padding = layer.convolution_param.pad[0] if \
                len(layer.convolution_param.pad) else 0
        spatial_descriptor = separator.join([
            "kernel size: %d" % kernel_size,
            "stride: %d" % stride,
            "pad: %d" % padding,
        ])
        descriptors_list.append(spatial_descriptor)

    # Add LR multiplier for learning layers
    if display_lrm and layer.type in ['Convolution', 'Deconvolution', 'InnerProduct']:
        lrm0, lrm1 = get_layer_lr_mult(layer)
        if any([lrm0, lrm1]):
            lr_mult = "lr mult: %.1f, %.1f" % (lrm0, lrm1)
            descriptors_list.append(lr_mult)

    # Concatenate the descriptors into one label
    node_label = separator.join(descriptors_list)
    # Outer double quotes needed or else colon characters don't parse
    # properly
    node_label = '"%s"' % node_label
    return node_label


def choose_color_by_layertype(layertype):
    """Define colors for nodes based on the layer type.
    """
    color = '#6495ED'  # Default
    if layertype == 'Convolution' or layertype == 'Deconvolution':
        color = '#FF5050'
    elif layertype == 'Pooling':
        color = '#FF9900'
    elif layertype == 'InnerProduct':
        color = '#CC33FF'
    return color


def get_pydot_graph(caffe_net, rankdir, label_edges=True, phase=None, display_lrm=False):
    """Create a data structure which represents the `caffe_net`.

    Parameters
    ----------
    caffe_net : object
    rankdir : {'LR', 'TB', 'BT'}
        Direction of graph layout.
    label_edges : boolean, optional
        Label the edges (default is True).
    phase : {caffe_pb2.Phase.TRAIN, caffe_pb2.Phase.TEST, None} optional
        Include layers from this network phase. If None, include all layers.
        (the default is None)
    display_lrm : boolean, optional
        If True display the learning rate multipliers when relevant (default is
        False).

    Returns
    -------
    pydot graph object
    """
    pydot_graph = pydot.Dot(caffe_net.name if caffe_net.name else 'Net',
                            graph_type='digraph',
                            rankdir=rankdir)
    pydot_nodes = {}
    pydot_edges = []
    for layer in caffe_net.layer:
        if phase is not None:
            included = False
            if len(layer.include) == 0:
                included = True
            if len(layer.include) > 0 and len(layer.exclude) > 0:
                raise ValueError('layer ' + layer.name + ' has both include '
                                 'and exclude specified.')
            for layer_phase in layer.include:
                included = included or layer_phase.phase == phase
            for layer_phase in layer.exclude:
                included = included and not layer_phase.phase == phase
            if not included:
                continue
        node_label = get_layer_label(layer, rankdir, display_lrm=display_lrm)
        node_name = "%s_%s" % (layer.name, layer.type)
        if (len(layer.bottom) == 1 and len(layer.top) == 1 and
                layer.bottom[0] == layer.top[0]):
            # We have an in-place neuron layer.
            pydot_nodes[node_name] = pydot.Node(node_label,
                                                **NEURON_LAYER_STYLE)
        else:
            # Copy the default style so the module-level dict is not mutated.
            layer_style = dict(LAYER_STYLE_DEFAULT)
            layer_style['fillcolor'] = choose_color_by_layertype(layer.type)
            pydot_nodes[node_name] = pydot.Node(node_label, **layer_style)
        for bottom_blob in layer.bottom:
            pydot_nodes[bottom_blob + '_blob'] = pydot.Node('%s' % bottom_blob,
                                                            **BLOB_STYLE)
            edge_label = '""'
            pydot_edges.append({'src': bottom_blob + '_blob',
                                'dst': node_name,
                                'label': edge_label})
        for top_blob in layer.top:
            pydot_nodes[top_blob + '_blob'] = pydot.Node('%s' % (top_blob))
            if label_edges:
                edge_label = get_edge_label(layer)
            else:
                edge_label = '""'
            pydot_edges.append({'src': node_name,
                                'dst': top_blob + '_blob',
                                'label': edge_label})
    # Now, add the nodes and edges to the graph.
    for node in pydot_nodes.values():
        pydot_graph.add_node(node)
    for edge in pydot_edges:
        pydot_graph.add_edge(
            pydot.Edge(pydot_nodes[edge['src']],
                       pydot_nodes[edge['dst']],
                       label=edge['label']))

    return pydot_graph


def draw_net(caffe_net, rankdir, ext='png', phase=None, display_lrm=False):
    """Draws a caffe net and returns the image string encoded using the given
    extension.

    Parameters
    ----------
    caffe_net : a caffe.proto.caffe_pb2.NetParameter protocol buffer.
    ext : string, optional
        The image extension (the default is 'png').
    phase : {caffe_pb2.Phase.TRAIN, caffe_pb2.Phase.TEST, None} optional
        Include layers from this network phase. If None, include all layers.
        (the default is None)
    display_lrm : boolean, optional
        If True display the learning rate multipliers for the learning layers
        (default is False).

    Returns
    -------
    string :
        Postscript representation of the graph.
    """
    return get_pydot_graph(caffe_net, rankdir, phase=phase,
                           display_lrm=display_lrm).create(format=ext)


def draw_net_to_file(caffe_net, filename, rankdir='LR', phase=None, display_lrm=False):
    """Draws a caffe net, and saves it to file using the format given as the
    file extension. Use '.raw' to output raw text that you can manually feed
    to graphviz to draw graphs.

    Parameters
    ----------
    caffe_net : a caffe.proto.caffe_pb2.NetParameter protocol buffer.
    filename : string
        The path to a file where the networks visualization will be stored.
    rankdir : {'LR', 'TB', 'BT'}
        Direction of graph layout.
    phase : {caffe_pb2.Phase.TRAIN, caffe_pb2.Phase.TEST, None} optional
        Include layers from this network phase. If None, include all layers.
        (the default is None)
    display_lrm : boolean, optional
        If True display the learning rate multipliers for the learning layers
        (default is False).
    """
    ext = filename[filename.rfind('.')+1:]
    with open(filename, 'wb') as fid:
        fid.write(draw_net(caffe_net, rankdir, ext, phase, display_lrm))
```

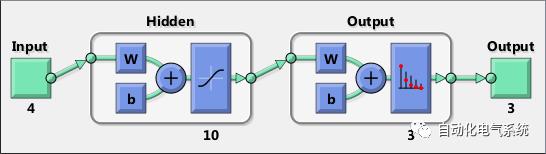
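draw.py turns each layer into a record node coloured by layer type, each blob into an octagon node, and wires bottom blob, layer, and top blob together. A dependency-free sketch of that idea which emits plain DOT text that the Graphviz dot command can render; the tuple layer format is hypothetical (the real script reads a caffe_pb2.NetParameter through pydot), and giving every blob the same octagon style is a simplification.

```python
# Hypothetical layer records: (name, type, bottoms, tops), loosely mirroring
# the fields draw.py reads from caffe_pb2.LayerParameter.
LAYERS = [
    ("conv1", "Convolution", ["data"], ["conv1"]),
    ("relu1", "ReLU", ["conv1"], ["conv1"]),  # in-place: bottom == top
    ("pool1", "Pooling", ["conv1"], ["pool1"]),
    ("fc1", "InnerProduct", ["pool1"], ["fc1"]),
]

# Same colours as choose_color_by_layertype() in draw.py.
COLORS = {"Convolution": "#FF5050", "Deconvolution": "#FF5050",
          "Pooling": "#FF9900", "InnerProduct": "#CC33FF"}


def to_dot(layers, rankdir="LR"):
    """Return Graphviz DOT text: record nodes for layers, octagons for blobs."""
    out = ["digraph Net {", f"  rankdir={rankdir};"]
    blobs = set()
    for name, ltype, bottoms, tops in layers:
        node = f"{name}_{ltype}"
        in_place = len(bottoms) == 1 and len(tops) == 1 and bottoms[0] == tops[0]
        # In-place neuron layers get the light green NEURON_LAYER_STYLE colour.
        color = "#90EE90" if in_place else COLORS.get(ltype, "#6495ED")
        out.append(f'  "{node}" [shape=record, style=filled, '
                   f'fillcolor="{color}", label="{name} ({ltype})"];')
        for b in bottoms:
            blobs.add(b)
            out.append(f'  "{b}_blob" -> "{node}";')
        for t in tops:
            blobs.add(t)
            out.append(f'  "{node}" -> "{t}_blob";')
    for b in sorted(blobs):
        out.append(f'  "{b}_blob" [shape=octagon, style=filled, '
                   f'fillcolor="#E0E0E0", label="{b}"];')
    out.append("}")
    return "\n".join(out)


dot = to_dot(LAYERS)
print(dot)
```

Writing the text to net.gv and running dot -Tpng net.gv -o net.png (file names are placeholders) should render it.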

Matlab

https://www.mathworks.com/help/deeplearning/ref/view.html

Example: this example shows how to view the diagram of a pattern recognition network.

```matlab
[x,t] = iris_dataset;
net = patternnet;
net = configure(net,x,t);
view(net)
```

7. Keras.js

https://github.com/transcranial/keras-js.git

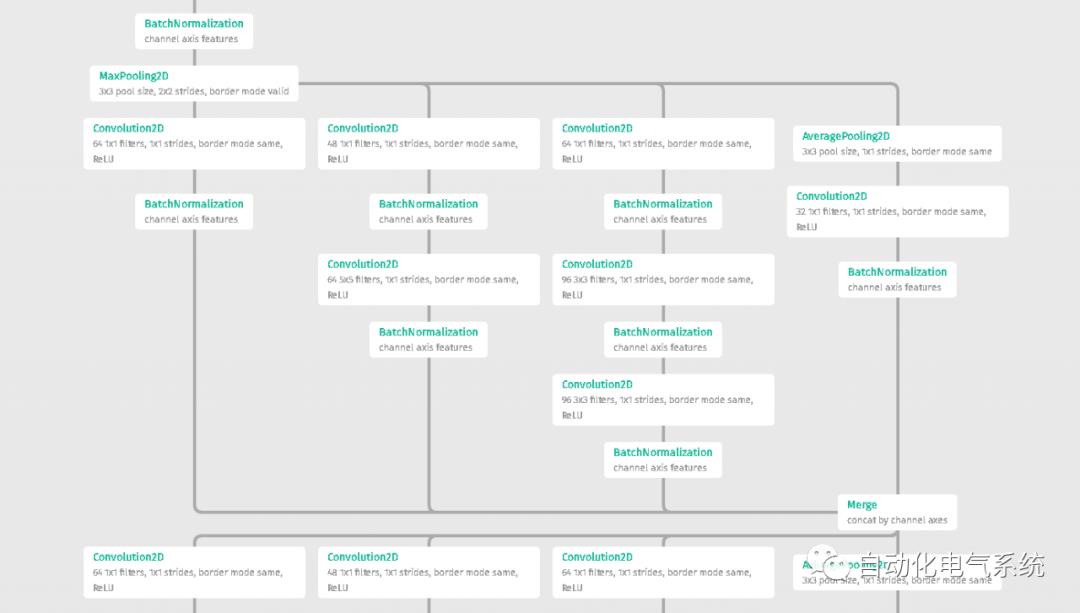

https://transcranial.github.io/keras-js/#/inception-v3

8. keras-sequential-ascii: a library for Keras for investigating the architectures and parameters of sequential models.

https://github.com/stared/keras-sequential-ascii.git

VGG 16 architecture:

```
           OPERATION          DATA DIMENSIONS   WEIGHTS(N)   WEIGHTS(%)

               Input   #####      3  224  224
          InputLayer     |    -------------------          0     0.0%
                       #####      3  224  224
       Convolution2D    \|/   -------------------       1792     0.0%
                relu   #####     64  224  224
       Convolution2D    \|/   -------------------      36928     0.0%
                relu   #####     64  224  224
        MaxPooling2D   Y max  -------------------          0     0.0%
                       #####     64  112  112
       Convolution2D    \|/   -------------------      73856     0.1%
                relu   #####    128  112  112
       Convolution2D    \|/   -------------------     147584     0.1%
                relu   #####    128  112  112
        MaxPooling2D   Y max  -------------------          0     0.0%
                       #####    128   56   56
       Convolution2D    \|/   -------------------     295168     0.2%
                relu   #####    256   56   56
       Convolution2D    \|/   -------------------     590080     0.4%
                relu   #####    256   56   56
       Convolution2D    \|/   -------------------     590080     0.4%
                relu   #####    256   56   56
        MaxPooling2D   Y max  -------------------          0     0.0%
                       #####    256   28   28
       Convolution2D    \|/   -------------------    1180160     0.9%
                relu   #####    512   28   28
       Convolution2D    \|/   -------------------    2359808     1.7%
                relu   #####    512   28   28
       Convolution2D    \|/   -------------------    2359808     1.7%
                relu   #####    512   28   28
        MaxPooling2D   Y max  -------------------          0     0.0%
                       #####    512   14   14
       Convolution2D    \|/   -------------------    2359808     1.7%
                relu   #####    512   14   14
       Convolution2D    \|/   -------------------    2359808     1.7%
                relu   #####    512   14   14
       Convolution2D    \|/   -------------------    2359808     1.7%
                relu   #####    512   14   14
        MaxPooling2D   Y max  -------------------          0     0.0%
                       #####    512    7    7
             Flatten   |||||  -------------------          0     0.0%
                       #####            25088
               Dense   XXXXX  -------------------  102764544    74.3%
                relu   #####             4096
               Dense   XXXXX  -------------------   16781312    12.1%
                relu   #####             4096
               Dense   XXXXX  -------------------    4097000     3.0%
             softmax   #####             1000
```
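The WEIGHTS(N) column can be checked by hand: a k×k convolution from C_in to C_out channels has k·k·C_in·C_out + C_out parameters, and a dense layer from n to m units has n·m + m. A sketch that re-derives the table, assuming VGG16's 3×3 "same" convolutions and 2×2, stride-2 max pooling; the compact layer spec is a hypothetical format, not keras-sequential-ascii's API.

```python
import math

# Hypothetical spec: ("conv", C_out) is a 3x3 convolution with "same" padding,
# ("pool",) a 2x2 max pool with stride 2, ("dense", units) a fully connected layer.
VGG16 = (
    [("conv", 64)] * 2 + [("pool",)]
    + [("conv", 128)] * 2 + [("pool",)]
    + [("conv", 256)] * 3 + [("pool",)]
    + [("conv", 512)] * 3 + [("pool",)]
    + [("conv", 512)] * 3 + [("pool",)]
    + [("flatten",), ("dense", 4096), ("dense", 4096), ("dense", 1000)]
)


def layer_table(spec, input_shape=(3, 224, 224)):
    """Return (kind, output_shape, n_weights) rows for a sequential spec."""
    shape, rows = input_shape, []
    for layer in spec:
        kind = layer[0]
        if kind == "conv":
            c_in, h, w = shape
            c_out = layer[1]
            n = 3 * 3 * c_in * c_out + c_out  # 3x3 kernels plus one bias per filter
            shape = (c_out, h, w)             # "same" padding keeps H and W
        elif kind == "pool":
            c, h, w = shape
            n, shape = 0, (c, h // 2, w // 2)
        elif kind == "flatten":
            n, shape = 0, (math.prod(shape),)
        elif kind == "dense":
            (n_in,) = shape
            n = n_in * layer[1] + layer[1]    # weight matrix plus bias vector
            shape = (layer[1],)
        else:
            raise ValueError(f"unknown layer kind: {kind}")
        rows.append((kind, shape, n))
    return rows


rows = layer_table(VGG16)
total = sum(n for _, _, n in rows)
for kind, shape, n in rows:
    print(f"{kind:8s} {str(shape):16s} {n:>10d} {100 * n / total:5.1f}%")
print("total:", total)  # total: 138357544
```

The total of 138,357,544 also explains the percentages: the first dense layer alone (25088 · 4096 + 4096 = 102,764,544) accounts for about 74.3% of all weights.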

9. Graphviz

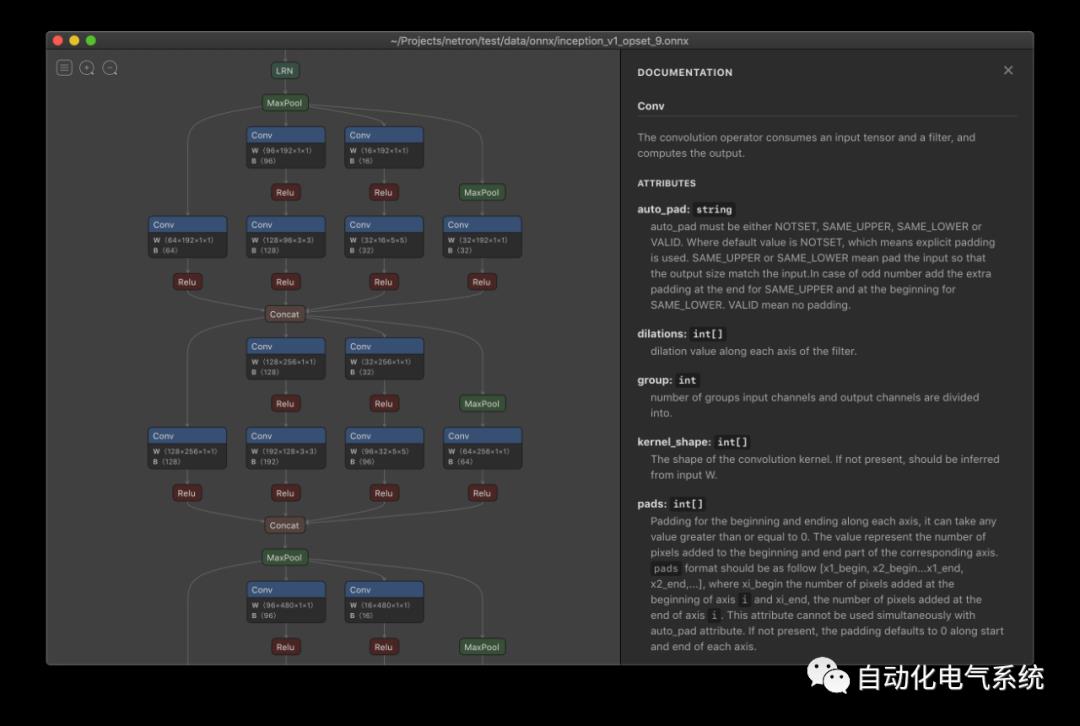

https://github.com/lutzroeder/netron.git

DotNet

https://github.com/martisak/dotnets.git
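dotnets produces Graphviz input for fully connected networks. A standalone sketch of that idea, not the dotnets script itself: each layer becomes a cluster subgraph, and every neuron is connected to every neuron of the next layer. The function name and the l{layer}_{index} node naming are assumptions made for the example.

```python
def layered_net_dot(layer_sizes, names=None):
    """Return DOT text for a fully connected net, one cluster per layer."""
    names = names or [f"Layer {i}" for i in range(len(layer_sizes))]
    out = ["digraph G {", "  rankdir=LR;", "  splines=line;",
           '  node [shape=circle, label=""];']
    for i, (n, title) in enumerate(zip(layer_sizes, names)):
        # Graphviz only draws a box around subgraphs whose name starts with "cluster".
        out.append(f"  subgraph cluster_{i} {{")
        out.append(f'    label="{title}"; color=white;')
        out.append("    " + " ".join(f"l{i}_{j};" for j in range(n)))
        out.append("  }")
    for i in range(len(layer_sizes) - 1):
        for a in range(layer_sizes[i]):
            for b in range(layer_sizes[i + 1]):
                out.append(f"  l{i}_{a} -> l{i + 1}_{b};")
    out.append("}")
    return "\n".join(out)


dot = layered_net_dot([3, 4, 2], names=["Input", "Hidden", "Output"])
print(dot)
```

Render with Graphviz, e.g. dot -Tpng net.gv -o net.png (file names are placeholders). splines=line keeps the many edges straight, which tends to read better than curved splines for dense layers.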

Graphviz : Tutorial

http://www.graphviz.org

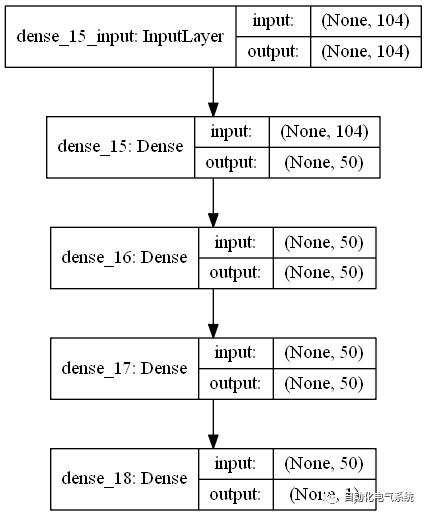

Keras Visualization - The keras.utils.vis_utils module provides utility functions to plot a Keras model (using graphviz)

https://keras.io/api/utils/model_plotting_utils/

Model plotting utilities

plot_model function

```python
tf.keras.utils.plot_model(
    model,
    to_file="model.png",
    show_shapes=False,
    show_dtype=False,
    show_layer_names=True,
    rankdir="TB",
    expand_nested=False,
    dpi=96,
)
```

Converts a Keras model to dot format and saves it to a file.

Example

```python
import tensorflow as tf

input = tf.keras.Input(shape=(100,), dtype='int32', name='input')
x = tf.keras.layers.Embedding(
    output_dim=512, input_dim=10000, input_length=100)(input)
x = tf.keras.layers.LSTM(32)(x)
x = tf.keras.layers.Dense(64, activation='relu')(x)
x = tf.keras.layers.Dense(64, activation='relu')(x)
x = tf.keras.layers.Dense(64, activation='relu')(x)
output = tf.keras.layers.Dense(1, activation='sigmoid', name='output')(x)
model = tf.keras.Model(inputs=[input], outputs=[output])
dot_img_file = '/tmp/model_1.png'
tf.keras.utils.plot_model(model, to_file=dot_img_file, show_shapes=True)
```

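Arguments

model: A Keras model instance.

to_file: File name of the plot image.

show_shapes: Whether to display shape information.

show_dtype: Whether to display layer dtypes.

show_layer_names: Whether to display layer names.

rankdir: rankdir argument passed to PyDot, a string specifying the format of the plot: "TB" creates a vertical plot; "LR" creates a horizontal plot.

expand_nested: Whether to expand nested models into clusters.

dpi: Dots per inch.

Returns

A Jupyter notebook Image object if Jupyter is installed. This enables in-line display of the model plots in notebooks.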

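model_to_dot function

```python
tf.keras.utils.model_to_dot(
    model,
    show_shapes=False,
    show_dtype=False,
    show_layer_names=True,
    rankdir="TB",
    expand_nested=False,
    dpi=96,
    subgraph=False,
)
```

Converts a Keras model to dot format.

Arguments

model: A Keras model instance.

show_shapes: Whether to display shape information.

show_dtype: Whether to display layer dtypes.

show_layer_names: Whether to display layer names.

rankdir: rankdir argument passed to PyDot, a string specifying the format of the plot: "TB" creates a vertical plot; "LR" creates a horizontal plot.

expand_nested: Whether to expand nested models into clusters.

dpi: Dots per inch.

subgraph: Whether to return a pydot.Cluster instance.

Returns

A pydot.Dot instance representing the Keras model, or a pydot.Cluster instance representing a nested model if subgraph=True.

Raises

ImportError: if graphviz or pydot are not available.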
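Because both functions raise ImportError when Graphviz or pydot is missing, it can help to check before building a large model. A minimal standard-library sketch; it assumes the Python package is importable as pydot and that the Graphviz installation puts a dot executable on PATH, which is the usual setup but not something Keras documents in exactly these terms.

```python
import importlib.util
import shutil


def plotting_dependencies():
    """Report whether the pieces plot_model/model_to_dot rely on are present.

    Assumption: pydot is the Python package in use and Graphviz provides a
    `dot` executable on PATH.
    """
    return {
        "pydot": importlib.util.find_spec("pydot") is not None,
        "graphviz_dot": shutil.which("dot") is not None,
    }


deps = plotting_dependencies()
missing = [name for name, ok in deps.items() if not ok]
if missing:
    print("Install before calling plot_model:", ", ".join(missing))
else:
    print("pydot and Graphviz found")
```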

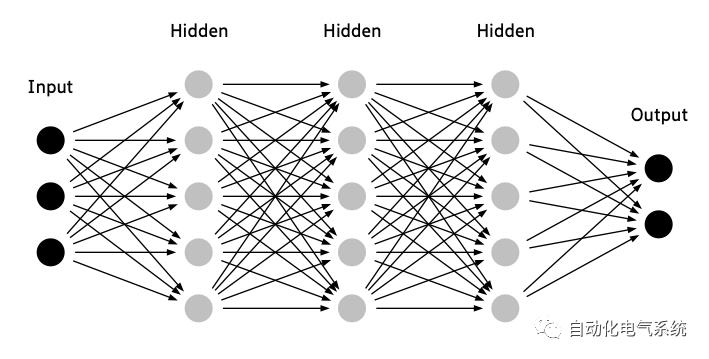

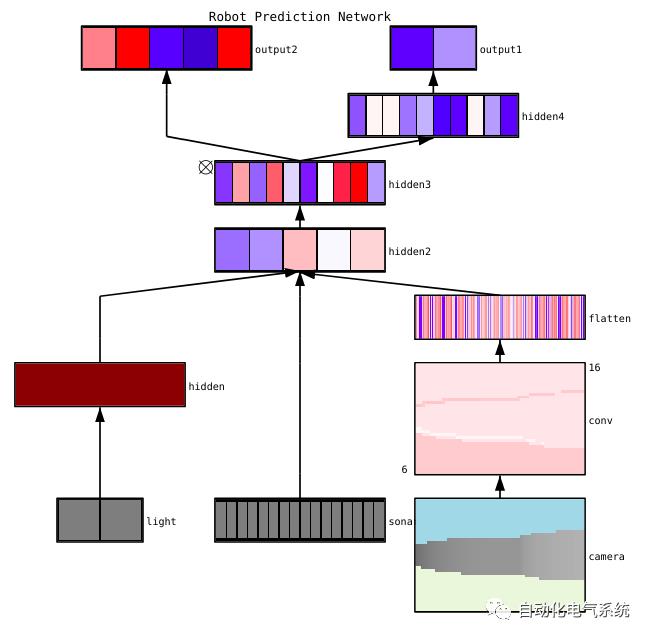

Conx - The Python package conx can visualize networks with activations with the function net.picture() to produce SVG, PNG, or PIL Images like this:

https://conx.readthedocs.io/en/latest/index.html

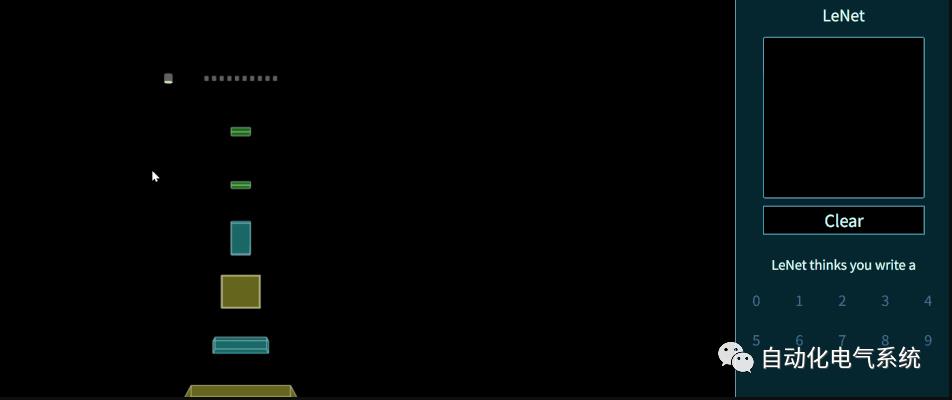

ENNUI - Working on a drag-and-drop neural network visualizer (and more). Here's an example of a visualization for a LeNet-like architecture.

https://math.mit.edu/ennui/

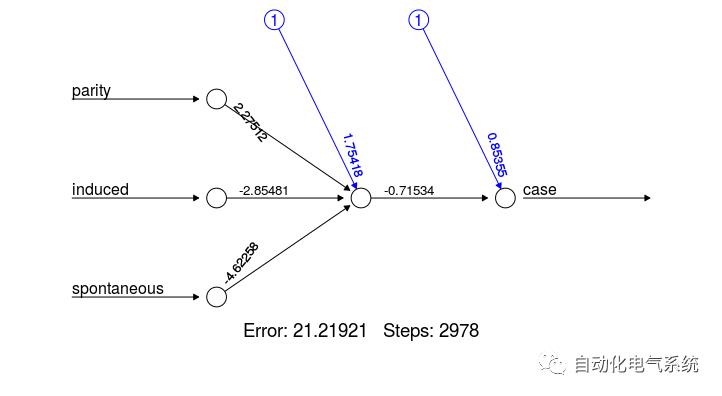

NNet - R Package - Tutorial

```r
library(neuralnet)
data(infert, package="datasets")
plot(neuralnet(case~parity+induced+spontaneous, infert))
```

https://beckmw.wordpress.com/2013/03/04/visualizing-neural-networks-from-the-nnet-package/

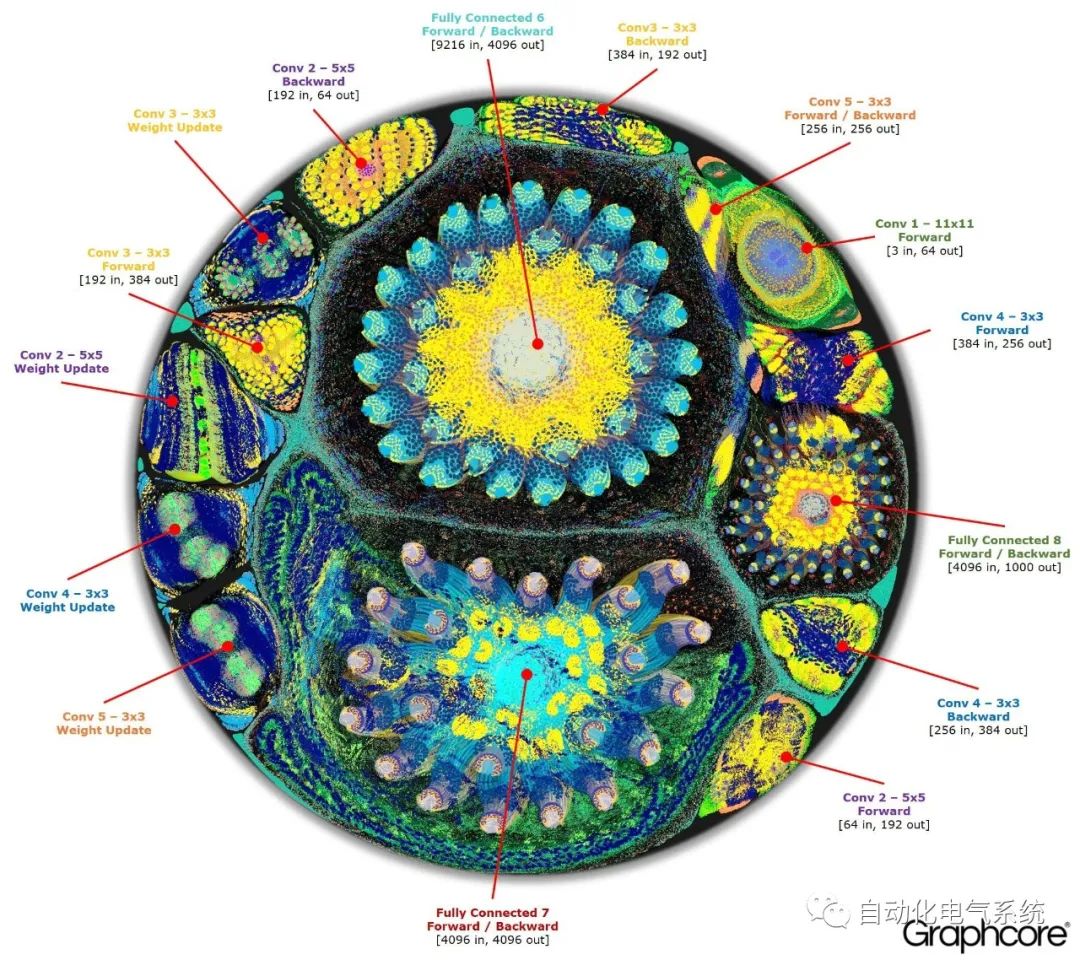

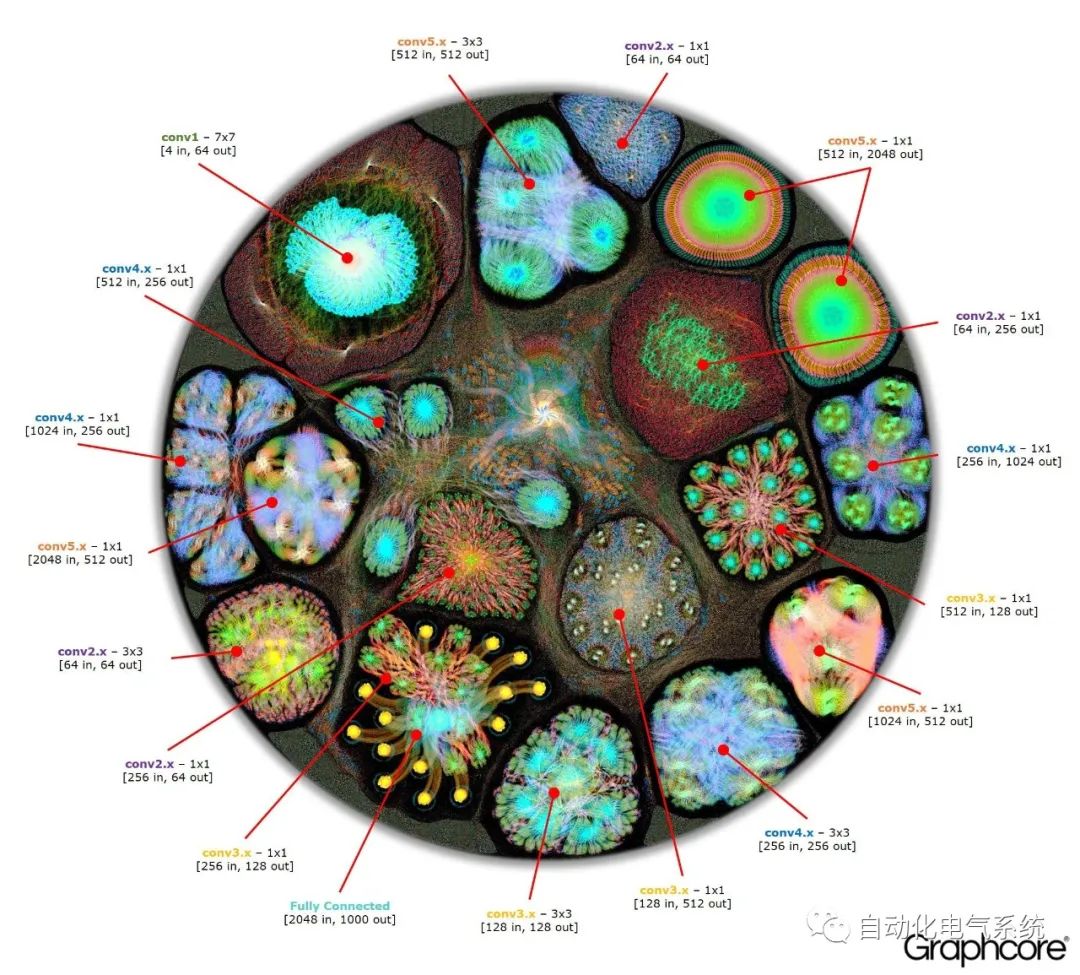

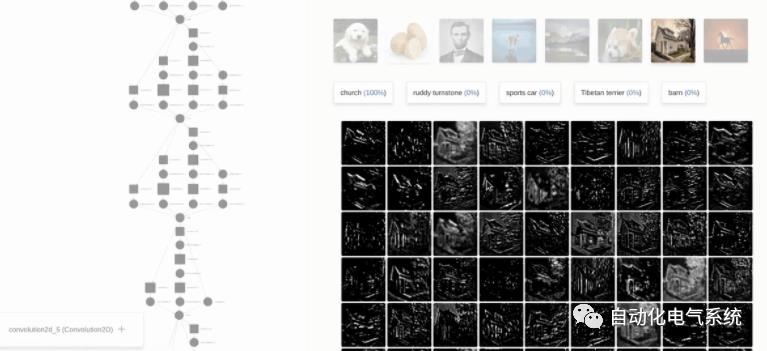

GraphCore - These approaches are more oriented towards visualizing neural network operation; however, the NN architecture is also somewhat visible in the resulting diagrams.

https://www.graphcore.ai/posts/what-does-machine-learning-look-like

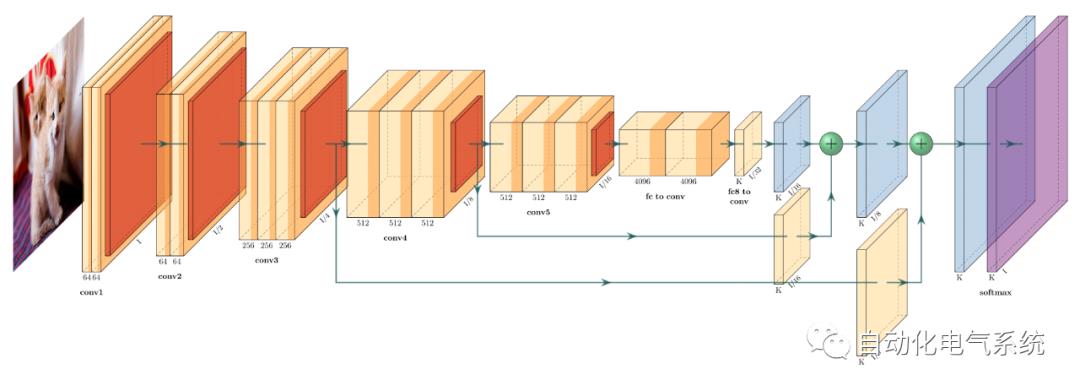

AlexNet

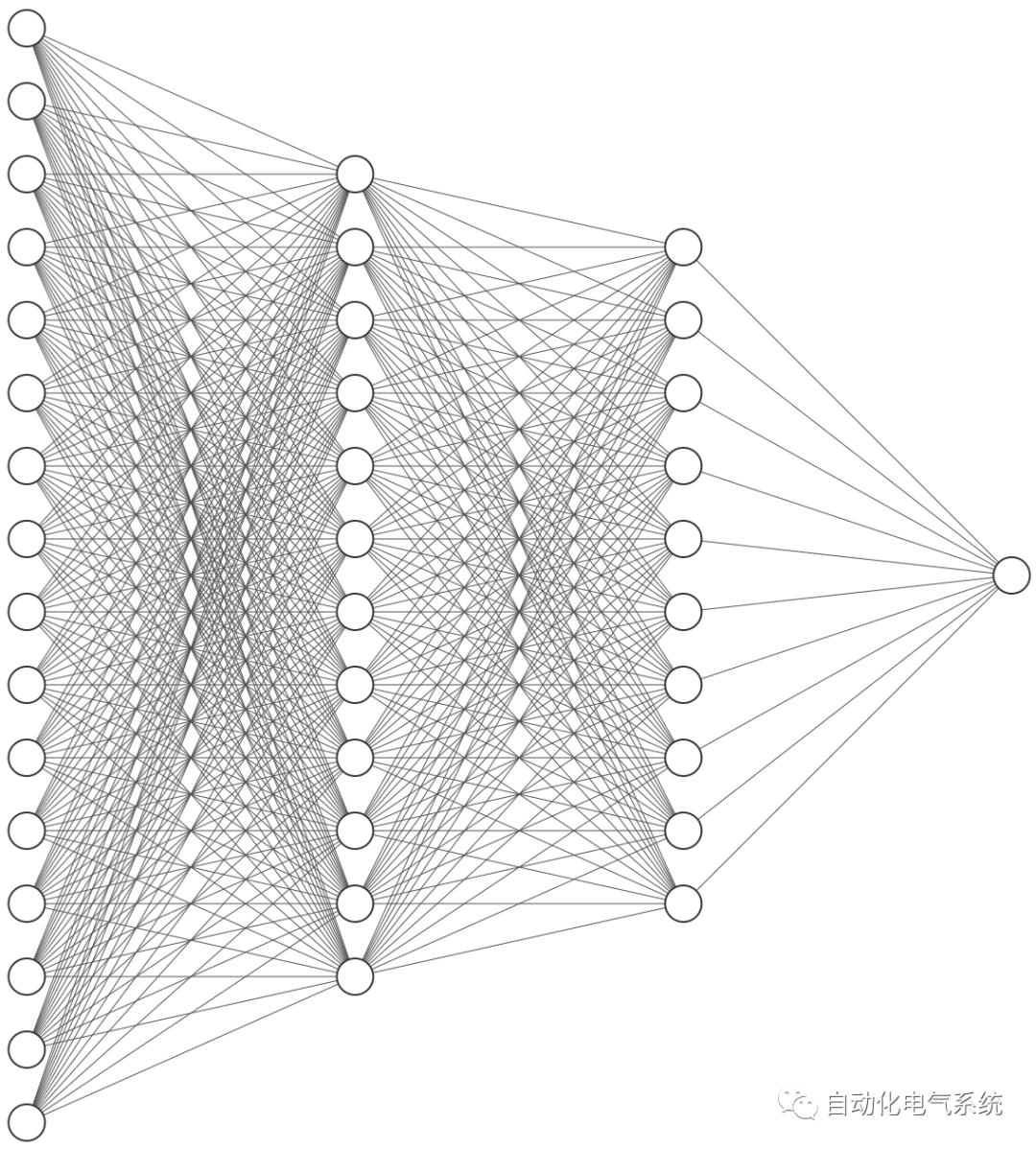

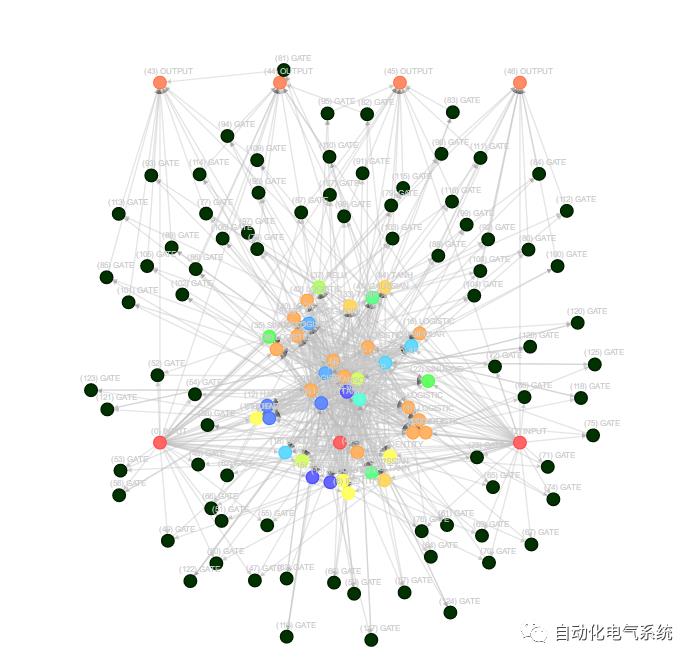

Neataptic

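Neataptic offers flexible neural networks; neurons and synapses can be removed with a single line of code. No fixed architecture is required for neural networks to function at all. This flexibility allows networks to be shaped for your dataset through neuro-evolution, which is done using multiple threads.

Web page: wagenaartje.github.io/neataptic/

Repository: github.com/wagenaartje/neataptic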

https://www.npmjs.com/package/neataptic

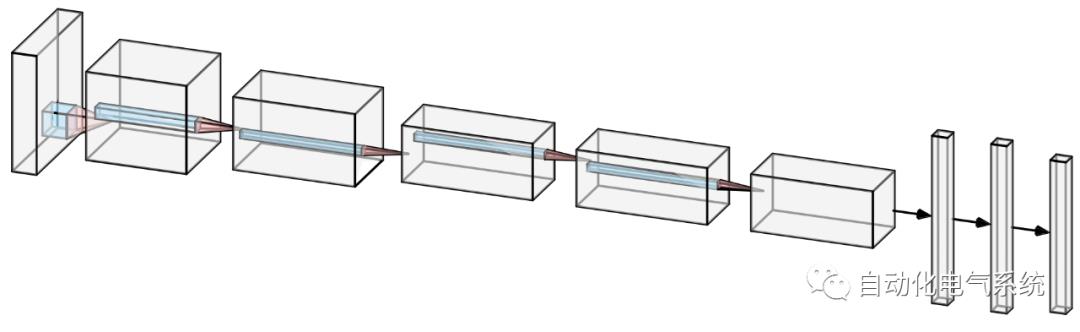

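18. TensorSpace.js

TensorSpace is a framework for building 3D visualization applications for neural networks. Developers can use the TensorSpace API to easily create visualized networks, load neural network models, and render them as interactive 3D scenes in the browser. TensorSpace lets you observe neural network models more intuitively and understand how a model arrives at its final result through operations on intermediate-layer tensors. TensorSpace supports 3D visualization of TensorFlow, Keras, and TensorFlow.js models after appropriate preprocessing.

TensorSpace use cases

TensorSpace is built on TensorFlow.js, Three.js, and Tween.js and renders neural networks in 3D. With TensorSpace you can show not only the structure of a network, but also the whole process of internal feature extraction, data interaction between intermediate layers, and the final prediction.

TensorSpace helps you observe, understand, and present neural network models developed with TensorFlow, Keras, or TensorFlow.js more intuitively. It lowers the barrier for front-end developers to build deep learning applications. We look forward to seeing more 3D visualization applications built on TensorSpace.

Interactive: use the Layer API to build interactive 3D visualization models in the browser.

Intuitive: observe and display the predictions of intermediate layers to demonstrate the model's inference process.

Integrated: supports models trained with TensorFlow, Keras, and TensorFlow.js.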

https://tensorspace.org/index_zh.html

https://github.com/tensorspace-team/tensorspace

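19. Netscope CNN Analyzer

A web-based tool for visualizing and analyzing convolutional neural network architectures (or technically, any directed acyclic graph). Currently supports Caffe's prototxt format.

Basis by ethereon. Extended for CNN analysis by dgschwend.

Gist support

If your .prototxt file is part of a GitHub Gist, you can visualize it by visiting this URL: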

http://dgschwend.github.io/netscope/#/gist/your-gist-id
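An analyzer like Netscope derives per-layer figures from the prototxt parameters. As a hedged illustration of that arithmetic (not Netscope's code; its exact counting conventions may differ), a hypothetical helper computing a Convolution layer's output shape, parameter count, and multiply-accumulate operations (MACCs), taking Caffe's num_output, kernel_size, stride, pad, and group fields as plain arguments.

```python
def conv_layer_cost(c_in, h_in, w_in, num_output, kernel,
                    stride=1, pad=0, group=1, bias=True):
    """Return (out_shape, params, maccs) for a Caffe-style Convolution layer."""
    h_out = (h_in + 2 * pad - kernel) // stride + 1
    w_out = (w_in + 2 * pad - kernel) // stride + 1
    # Each filter only sees c_in / group input channels.
    weights = num_output * (c_in // group) * kernel * kernel
    params = weights + (num_output if bias else 0)
    # Assumed convention: one multiply-accumulate per weight per output position.
    maccs = weights * h_out * w_out
    return (num_output, h_out, w_out), params, maccs


# AlexNet-style conv1: 96 filters of 11x11, stride 4, on a 3x227x227 input.
shape, params, maccs = conv_layer_cost(3, 227, 227, 96, 11, stride=4)
print(shape, params, maccs)  # (96, 55, 55) 34944 105415200
```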

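Moniel

https://github.com/mlajtos/moniel.git

Introduction

Moniel is one of many attempts at creating a notation for deep learning models that leverages graph thinking. Instead of defining computation as a list of formulae, we define the model as a declarative dataflow graph. It is not a programming language, just a convenient notation. (Which will be executable. Wanna help?)

Note: there is no proper syntax highlighting on GitHub. Use the application for the best experience.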

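Let's start with nothing, i.e. comments:

```
// This is line comment.

/*
This is block
comment.
*/
```

A node can be created by stating its type:

```
Sigmoid
```

You don't have to write the full name of a type. Use acronyms that fit you! These are all equivalent:

```
LocalResponseNormalization // canonical, but too long
LocRespNorm                // weird, but why not?
LRN                        // cryptic for beginners, enough for others
```

Nodes connect with other nodes with an arrow:

```
Sigmoid -> MaxPooling
```

There can be chains of any length:

```
LRN -> Sigm -> BatchNorm -> ReLU -> Tanh -> MP -> Conv -> BN -> ELU
```

Also, there can be multiple chains:

```
ReLU -> BN
LRN -> Conv -> MP
Sigm -> Tanh
```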
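To make the arrow semantics concrete, a toy parser that handles only comments and -> chains (no identifiers, lists, attributes, or metanodes). It assumes every bare type mention creates a new node, which is why identifiers are needed to reuse one; the numbering scheme such as Conv_1 is invented for this sketch, and this is not Moniel's actual parser.

```python
import re


def parse_chains(source):
    """Parse '->' chains into (nodes, edges); each type mention is a new node."""
    # Strip /* block */ and // line comments before splitting into lines.
    source = re.sub(r"/\*.*?\*/", "", source, flags=re.S)
    source = re.sub(r"//[^\n]*", "", source)
    nodes, edges, counts = [], [], {}
    for line in source.splitlines():
        types = [t.strip() for t in line.split("->") if t.strip()]
        previous = None
        for node_type in types:
            counts[node_type] = counts.get(node_type, 0) + 1
            node_id = f"{node_type}_{counts[node_type]}"  # fresh node per mention
            nodes.append(node_id)
            if previous is not None:
                edges.append((previous, node_id))
            previous = node_id
    return nodes, edges


program = """
// Two independent chains plus a third one
ReLU -> BN
LRN -> Conv -> MP
/* block comments are ignored too */
Sigm -> Tanh
"""
nodes, edges = parse_chains(program)
print(edges)
# [('ReLU_1', 'BN_1'), ('LRN_1', 'Conv_1'), ('Conv_1', 'MP_1'), ('Sigm_1', 'Tanh_1')]
```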

Nodes can have identifiers:

```
conv:Convolution
```

Identifiers let you refer to nodes that are used more than once:

```
// inefficient declaration of matrix-matrix multiplication
matrix1:Tensor
matrix2:Tensor
mm:MatrixMultiplication

matrix1 -> mm
matrix2 -> mm
```

However, this can be rewritten without identifiers using a list:

```
[] -> MatMul
```

Lists let you easily declare multi-connections:

```
// Maximum of 3 random numbers
[] -> Maximum
```

List-to-list connections are sometimes really handy:

```
// Range of 3 random numbers
[] -> [Max,Min] -> Sub -> Abs
```

Nodes can take named attributes that modify their behavior:

```
Fill(shape = 10x10x10, value = 1.0)
```

Attribute names can also be shortened:

```
Ones(s=10x10x10)
```

Defining large graphs without proper structuring is unmanageable. Metanodes can help:

```
layer:{
    RandomNormal(shape=784x1000) -> weights:Variable
    weights -> dp:DotProduct -> act:ReLU
}

Tensor -> layer/dp   // feed input into the DotProduct of the "layer" metanode
layer/act -> Softmax // feed output of the "layer" metanode into another node
```

Metanodes are more powerful when they define proper Input-Output boundaries:

```
layer1:{
    RandomNormal(shape=784x1000) -> weigths:Variable
    [] -> DotProduct -> ReLU -> out:Output
}

layer2:{
    RandomNormal(shape=1000x10) -> weigths:Variable
    [] -> DotProduct -> ReLU -> out:Output
}

// connect metanodes directly
layer1 -> layer2
```

Alternatively, you can use inline metanodes:

```
In -> layer:{[In,Tensor] -> Conv -> Out} -> Out
```

Or you don't need to give it a name:

```
In -> {[In,Tensor] -> Conv -> Out} -> Out
```

If metanodes have the same structure, we can create a reusable metanode and use it like a normal node:

```
+ReusableLayer(shape = 1x1){
    RandN(shape = shape) -> w:Var
    [] -> DP -> RLU -> out:Out
}

RL(s = 784x1000) -> RL(s = 1000x10)
```

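Similar projects and inspiration

Piotr Migdał - "Simple diagrams of convoluted neural networks": a great summary of visualizing ML architectures

Lobe (video) - "Build, train, and ship custom deep learning models using a simple visual interface."

Serrano - "Graph computation framework with Accelerate and Metal support."

Subgraphs - "Subgraphs is a visual IDE for developing computational graphs."

PyTorch - "Tensors and dynamic neural networks in Python with strong GPU acceleration."

Sonnet - "Sonnet is a library built on top of TensorFlow for building complex neural networks."

TensorGraph - "TensorGraph is a framework for building any imaginable models based on TensorFlow."

nngraph - "Graph computation for the nn library in Torch."

DNNGraph - "A deep neural network model generation DSL in Haskell."

NNVM - "Intermediate computational graph representation for deep learning systems."

DeepRosetta - "A universal deep learning model converter."

TensorBuilder - "A functional, fluent, immutable API based on the Builder pattern."

Keras - "Minimalist, highly modular neural networks library."

PrettyTensor - "High-level builder API."

TF-Slim - "Lightweight library for defining, training and evaluating models."

TFLearn - "Modular and transparent deep learning library."

Caffe - "Deep learning framework made with expression, speed, and modularity in mind."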

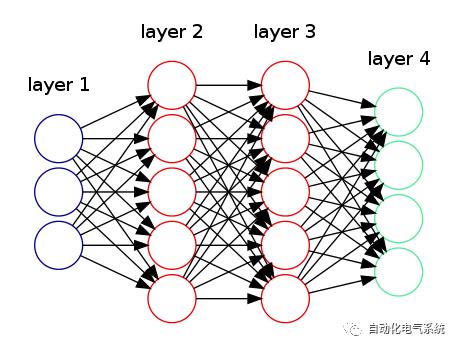

Texample

https://texample.net/tikz/examples/neural-network/

```latex
\documentclass{article}

\usepackage{tikz}
\begin{document}
\pagestyle{empty}

\def\layersep{2.5cm}

\begin{tikzpicture}[shorten >=1pt,->,draw=black!50, node distance=\layersep]
    \tikzstyle{every pin edge}=[<-,shorten <=1pt]
    \tikzstyle{neuron}=[circle,fill=black!25,minimum size=17pt,inner sep=0pt]
    \tikzstyle{input neuron}=[neuron, fill=green!50];
    \tikzstyle{output neuron}=[neuron, fill=red!50];
    \tikzstyle{hidden neuron}=[neuron, fill=blue!50];
    \tikzstyle{annot} = [text width=4em, text centered]

    % Draw the input layer nodes
    \foreach \name / \y in {1,...,4}
    % This is the same as writing \foreach \name / \y in {1/1,2/2,3/3,4/4}
        \node[input neuron, pin=left:Input \#\y] (I-\name) at (0,-\y) {};

    % Draw the hidden layer nodes
    \foreach \name / \y in {1,...,5}
        \path[yshift=0.5cm]
            node[hidden neuron] (H-\name) at (\layersep,-\y cm) {};

    % Draw the output layer node
    \node[output neuron,pin={[pin edge={->}]right:Output}, right of=H-3] (O) {};

    % Connect every node in the input layer with every node in the
    % hidden layer.
    \foreach \source in {1,...,4}
        \foreach \dest in {1,...,5}
            \path (I-\source) edge (H-\dest);

    % Connect every node in the hidden layer with the output layer
    \foreach \source in {1,...,5}
        \path (H-\source) edge (O);

    % Annotate the layers
    \node[annot,above of=H-1, node distance=1cm] (hl) {Hidden layer};
    \node[annot,left of=hl] {Input layer};
    \node[annot,right of=hl] {Output layer};
\end{tikzpicture}
% End of code
\end{document}
```

Quiver

https://github.com/keplr-io/quiver.git

References :

https://datascience.stackexchange.com/questions/12851/how-do-you-visualize-neural-network-architectures

https://datascience.stackexchange.com/questions/2670/visualizing-deep-neural-network-training
