Please stop using the HAAR cascade detector for face detection in OpenCV4.x: there is a better, more accurate method

Posted by OpenCV学堂

Introduction

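I'm writing this post because, a long time ago, I wrote several articles that involved face detection, and all of them used the HAAR cascade detector. Recently a few readers have asked me why the HAAR cascade isn't very accurate. I gave each of them the same explanation: face detection in OpenCV2.4.x and OpenCV3.0 was indeed based on HAAR, but it's 2020 now, and since the release of OpenCV4 the officially supported face detection method has switched to a deep learning approach that is both accurate and fast. Even the HAAR cascade training tools were dropped in OpenCV4. Keeping up with the times matters, and I hope this post helps clarify which face detection technology OpenCV uses today.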

The HAAR cascade detector method

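Before OpenCV3.3, this was the method OpenCV used for object detection. It demands a solid grounding in image processing from the user, with particular attention to preprocessing and postprocessing. The OpenCV function that supports it is:

void cv::CascadeClassifier::detectMultiScale(InputArray image, std::vector<Rect>& objects, double scaleFactor = 1.1, int minNeighbors = 3, int flags = 0, Size minSize = Size(), Size maxSize = Size())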

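The parameters are:

image: the input image

objects: the detected face rectangles

scaleFactor: how much the image is scaled down at each step of the multi-scale search

minNeighbors: the minimum number of neighboring rectangles a candidate needs in order to be kept

flags: a legacy flag, no longer used since OpenCV3.x

minSize: the smallest face size that can be detected

maxSize: the largest face size that can be detected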
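To get a feel for how scaleFactor, minSize and maxSize interact, here is a rough sketch in plain C++ that lists the square window sizes a multi-scale search would visit. It is only an approximation of the idea, not OpenCV's actual implementation: the function name searchWindowSizes and the baseWindow parameter (the side length of the window the cascade was trained on) are assumptions made up for this illustration.

```cpp
#include <cmath>
#include <vector>

// Hypothetical helper, not part of OpenCV: enumerates the window sizes
// (in pixels) that fall between minSize and maxSize when a base window
// is grown by scaleFactor at every step.
std::vector<int> searchWindowSizes(int baseWindow, double scaleFactor,
                                   int minSize, int maxSize) {
    std::vector<int> sizes;
    // A factor of 1.0 or less would never grow the window, so reject it.
    if (baseWindow <= 0 || scaleFactor <= 1.0) return sizes;
    for (double factor = 1.0;; factor *= scaleFactor) {
        const double scaled = baseWindow * factor;
        if (scaled > maxSize) break;            // larger than the biggest accepted face
        const int window = static_cast<int>(std::lround(scaled));
        if (window < minSize) continue;         // smaller than the smallest accepted face
        sizes.push_back(window);
    }
    return sizes;
}
```

Under these assumptions, a scaleFactor closer to 1.0 produces more window sizes (a finer but slower search), while a narrower minSize to maxSize range produces fewer.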

A simple code demo:

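    #include <opencv2/opencv.hpp>
    #include <iostream>

    using namespace cv;
    using namespace std;

    CascadeClassifier faceDetector;
    String haar_data_file = "D:/opencv-4.2.0/opencv/build/etc/haarcascades/haarcascade_frontalface_alt_tree.xml";

    int main(int argc, char** argv) {
        Mat frame, gray;
        vector<Rect> faces;
        VideoCapture capture(0);
        // Bail out early if the camera or the cascade file is unavailable.
        if (!capture.isOpened() || !faceDetector.load(haar_data_file)) {
            cout << "could not open camera or load cascade file..." << endl;
            return -1;
        }
        namedWindow("frame", WINDOW_AUTOSIZE);
        // Stop when a frame can no longer be read.
        while (capture.read(frame) && !frame.empty()) {
            // Preprocessing: grayscale conversion plus histogram equalization.
            cvtColor(frame, gray, COLOR_BGR2GRAY);
            equalizeHist(gray, gray);
            // scaleFactor = 1.2, minNeighbors = 1, faces from 30x30 up to 400x400 pixels.
            faceDetector.detectMultiScale(gray, faces, 1.2, 1, 0, Size(30, 30), Size(400, 400));
            for (size_t t = 0; t < faces.size(); t++) {
                rectangle(frame, faces[t], Scalar(0, 0, 255), 2, 8, 0);
            }
            imshow("frame", frame);
            // Exit on ESC.
            char c = (char)waitKey(10);
            if (c == 27) {
                break;
            }
        }
        waitKey(0);
        return 0;
    }

OpenCV4 DNN face detection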

The \sources\samples\dnn\face_detector directory of OpenCV contains a script named download_weights.py. Run it first to download the model files, which are:

Caffe model

res10_300x300_ssd_iter_140000_fp16.caffemodel

deploy.prototxt

TensorFlow model

opencv_face_detector_uint8.pb

opencv_face_detector.pbtxt

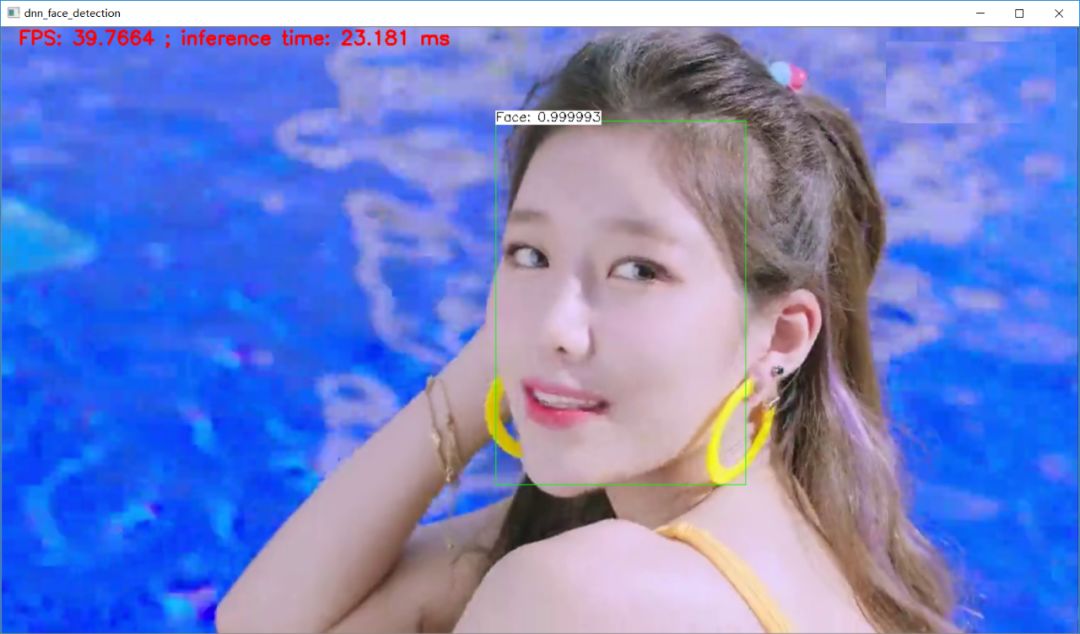

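The TensorFlow model has been quantized by the OpenCV team and is only about 2 MB, which makes it well suited to a wide range of scenarios. A sample run is shown in the figure.

Demo code: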
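Before reading the demo code, it helps to see how the detector's output is laid out. For an SSD-style detector such as this one, each detection is a row of seven floats: image id, class id, confidence, then the left, top, right and bottom coordinates normalized to the 0..1 range. The following plain C++ sketch decodes such rows without depending on OpenCV; the FaceBox struct and the decodeDetections function are hypothetical names used only for illustration, and the clamping step is an extra safety measure that the demo code itself does not perform.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical plain struct so the sketch does not depend on OpenCV types.
struct FaceBox {
    int x, y, width, height;
    float confidence;
};

// rows holds N * 7 floats laid out as
// [imageId, classId, confidence, left, top, right, bottom],
// with the four coordinates normalized to 0..1.
std::vector<FaceBox> decodeDetections(const std::vector<float>& rows,
                                      int frameWidth, int frameHeight,
                                      float threshold) {
    std::vector<FaceBox> boxes;
    for (std::size_t i = 0; i + 7 <= rows.size(); i += 7) {
        const float confidence = rows[i + 2];
        if (confidence <= threshold) continue;  // drop weak detections
        // Scale to pixels, then clamp so the box stays inside the frame.
        const int left   = std::min(std::max(static_cast<int>(rows[i + 3] * frameWidth), 0), frameWidth);
        const int top    = std::min(std::max(static_cast<int>(rows[i + 4] * frameHeight), 0), frameHeight);
        const int right  = std::min(std::max(static_cast<int>(rows[i + 5] * frameWidth), 0), frameWidth);
        const int bottom = std::min(std::max(static_cast<int>(rows[i + 6] * frameHeight), 0), frameHeight);
        if (right <= left || bottom <= top) continue;  // skip degenerate boxes
        boxes.push_back({left, top, right - left, bottom - top, confidence});
    }
    return boxes;
}
```

Clamping matters mostly when a face sits at the edge of the frame, where the raw coordinates can fall slightly outside 0..1.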

    #include <opencv2/dnn.hpp>
    #include <opencv2/opencv.hpp>
    #include <iostream>
    #include <sstream>
    #include <cstdio>
    #include <cstdlib>

    using namespace cv;
    using namespace cv::dnn;
    using namespace std;

    const size_t inWidth = 300;
    const size_t inHeight = 300;
    const double inScaleFactor = 1.0;
    const Scalar meanVal(104.0, 177.0, 123.0);
    const float confidenceThreshold = 0.7f;

    void face_detect_dnn();

    int main(int argc, char** argv)
    {
        face_detect_dnn();
        waitKey(0);
        return 0;
    }

    void face_detect_dnn() {
        // Caffe alternative (uncomment these two lines and readNetFromCaffe below):
        // String modelDesc = "D:/projects/opencv_tutorial/data/models/resnet/deploy.prototxt";
        // String modelBinary = "D:/projects/opencv_tutorial/data/models/resnet/res10_300x300_ssd_iter_140000.caffemodel";
        String modelBinary = "D:/opencv-4.2.0/opencv/sources/samples/dnn/face_detector/opencv_face_detector_uint8.pb";
        String modelDesc = "D:/opencv-4.2.0/opencv/sources/samples/dnn/face_detector/opencv_face_detector.pbtxt";

        // Initialize the network
        // dnn::Net net = readNetFromCaffe(modelDesc, modelBinary);
        dnn::Net net = readNetFromTensorflow(modelBinary, modelDesc);
        if (net.empty())
        {
            printf("could not load net...\n");
            return;
        }
        net.setPreferableBackend(DNN_BACKEND_OPENCV);
        net.setPreferableTarget(DNN_TARGET_CPU);

        // Open the camera, or a video file
        // VideoCapture capture(0);
        VideoCapture capture("D:/images/video/Boogie_Up.mp4");
        if (!capture.isOpened()) {
            printf("could not open video source...\n");
            return;
        }

        Mat frame;
        int count = 0;  // total detections above the threshold, summed over all frames
        while (capture.read(frame)) {
            int64 start = getTickCount();
            if (frame.empty())
            {
                break;
            }
            // Horizontal mirror, if needed
            // flip(frame, frame, 1);
            imshow("input", frame);
            if (frame.channels() == 4)
                cvtColor(frame, frame, COLOR_BGRA2BGR);

            // Prepare the input blob: resize to 300x300 and subtract the mean
            Mat inputBlob = blobFromImage(frame, inScaleFactor,
                Size(inWidth, inHeight), meanVal, false, false);
            net.setInput(inputBlob, "data");

            // Face detection
            Mat detection = net.forward("detection_out");
            vector<double> layersTimings;
            double freq = getTickFrequency() / 1000;
            double time = net.getPerfProfile(layersTimings) / freq;  // inference time in ms

            // Each row: [imageId, classId, confidence, left, top, right, bottom]
            Mat detectionMat(detection.size[2], detection.size[3], CV_32F, detection.ptr<float>());
            ostringstream ss;
            for (int i = 0; i < detectionMat.rows; i++)
            {
                // Confidence, between 0 and 1
                float confidence = detectionMat.at<float>(i, 2);
                if (confidence > confidenceThreshold)
                {
                    count++;
                    // Coordinates are normalized, so scale them to the frame size.
                    int xLeftBottom = static_cast<int>(detectionMat.at<float>(i, 3) * frame.cols);
                    int yLeftBottom = static_cast<int>(detectionMat.at<float>(i, 4) * frame.rows);
                    int xRightTop = static_cast<int>(detectionMat.at<float>(i, 5) * frame.cols);
                    int yRightTop = static_cast<int>(detectionMat.at<float>(i, 6) * frame.rows);
                    Rect object(xLeftBottom, yLeftBottom,
                        xRightTop - xLeftBottom,
                        yRightTop - yLeftBottom);
                    rectangle(frame, object, Scalar(0, 255, 0));

                    // Reset the stream so labels of earlier faces do not accumulate.
                    ss.str("");
                    ss << confidence;
                    String conf(ss.str());
                    String label = "Face: " + conf;
                    int baseLine = 0;
                    Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);
                    rectangle(frame, Rect(Point(xLeftBottom, yLeftBottom - labelSize.height),
                        Size(labelSize.width, labelSize.height + baseLine)),
                        Scalar(255, 255, 255), FILLED);
                    putText(frame, label, Point(xLeftBottom, yLeftBottom),
                        FONT_HERSHEY_SIMPLEX, 0.5, Scalar(0, 0, 0));
                }
            }
            float fps = getTickFrequency() / (getTickCount() - start);
            ss.str("");
            ss << "FPS: " << fps << " ; inference time: " << time << " ms";
            putText(frame, ss.str(), Point(20, 20), FONT_HERSHEY_SIMPLEX, 0.75, Scalar(0, 0, 255), 2, 8);
            imshow("dnn_face_detection", frame);
            if (waitKey(1) >= 0) break;
        }
        printf("total face: %d\n", count);
    }

Comparison and summary

On a 1080p video file, the detection speed and the total number of faces detected by the two methods compare as follows (64-bit Windows 10, CPU only):

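Face detection in OpenCV4 DNN thoroughly outperforms the HAAR cascade detector. HAAR cascade face detection is effectively obsolete, and I'd advise against spending time learning it.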
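If you want to run this kind of comparison on your own video, one possible way to collect the two numbers (speed and total face count) is sketched below in plain C++. The DetectorStats struct and the timeFrame helper are hypothetical names invented for this sketch, and the detect callable stands in for whichever detector you wrap; none of this comes from the measurements above.

```cpp
#include <chrono>
#include <cstddef>

// Hypothetical bookkeeping struct: accumulates timing and face counts
// for one detector over a whole video.
struct DetectorStats {
    std::size_t frames = 0;
    std::size_t faces = 0;
    double totalMs = 0.0;

    void addFrame(double elapsedMs, std::size_t facesInFrame) {
        ++frames;
        faces += facesInFrame;
        totalMs += elapsedMs;
    }

    // Average frames per second over everything recorded so far.
    double averageFps() const {
        return totalMs > 0.0 ? frames * 1000.0 / totalMs : 0.0;
    }
};

// Times a single call of detect, which is assumed to process one frame
// and return the number of faces it found.
template <typename DetectFn>
void timeFrame(DetectorStats& stats, DetectFn&& detect) {
    const auto start = std::chrono::steady_clock::now();
    const std::size_t found = detect();
    const auto stop = std::chrono::steady_clock::now();
    stats.addFrame(std::chrono::duration<double, std::milli>(stop - start).count(), found);
}
```

Keeping one DetectorStats per method and feeding both the same frames gives directly comparable averageFps() and faces values.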
