ELK Installation and Configuration & Logstash Plugins

Posted Memoryleak


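Introduction

In everyday life we often need to look back at things that happened earlier, or, when something goes wrong, to trace the cause and look for evidence of what happened. That inevitably means relying on records in written, visual, and other forms. In computing terms, such a record is a log.

Logs are an extremely important part of any system, and computer systems are no exception. Today's systems, however, tend to be complex: many are not located in one place and may even span national borders, and even a system in a single location has several log sources, such as the operating system, application services, and business logic. All of them generate log data continuously. By rough estimates, about 2 EB of data (on the order of 10^18 bytes) is produced worldwide every day.

With data on this scale, scattered across many locations, should we still search for important information the traditional way, logging in to one machine after another? Traditional tools and methods are clumsy and inefficient here. This led to the idea of a centralized approach that consolidates data from different sources in one place.

A complete centralized logging system needs the following main capabilities:

Collection - gathers log data from many kinds of sources

Transport - reliably ships log data to the central system

Storage - stores the log data

Analysis - supports analysis through a UI

Alerting - provides error reporting and monitoring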

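Products on the market

Many products and solutions grew out of this idea: simple ones such as Rsyslog and Syslog-ng; the commercial Splunk; and open-source options such as Facebook's Scribe, Apache Chukwa, LinkedIn's Kafka, Fluentd, and ELK.

Among these, Splunk is an excellent product, but it is commercial and expensive, which puts it out of reach for many users.

The arrival of ELK gave people another option. Of the open-source packages above, this article focuses on ELK.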

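Introduction to the ELK stack and its architecture

ELK is not a single piece of software but a complete solution. The name is an acronym of three products: Elasticsearch, Logstash, and Kibana. All three are open source, are usually used together, and one after another came under the ownership of the company Elastic (Elastic.co), hence the shorthand "ELK stack". See Figure 1.

Figure 1. The ELK stack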

Elasticsearch

Elasticsearch 是一个实时的分布式搜索和分析引擎,它可以用于全文搜索,结构化搜索以及分析。它是一个建立在全文搜索引擎 Apache Lucene 基础上的搜索引擎,使用 Java 语言编写。目前,最新的版本是 2.1.0。

主要特点

实时分析

分布式实时文件存储,并将每一个字段都编入索引

文档导向,所有的对象全部是文档

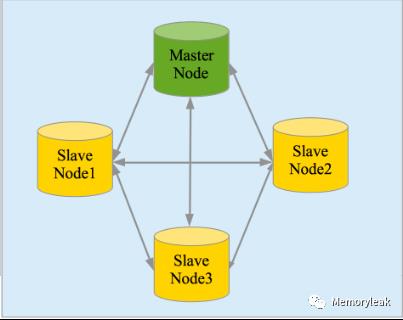

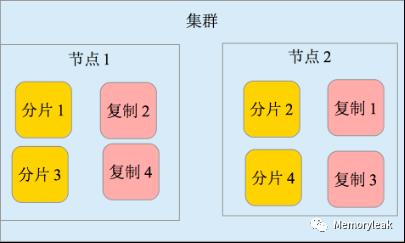

高可用性,易扩展,支持集群(Cluster)、分片和复制(Shards 和 Replicas)。见图 2 和图 3

接口友好,支持 JSON

图 2. 集群

图 3. 分片和复制
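To make the idea of shards and replicas more concrete, here is a minimal Python sketch. It is not how Elasticsearch is implemented: the CRC32 hash, the round-robin placement, and the node names are all assumptions chosen for illustration. It only shows the general principle that a document ID maps to one primary shard and that each shard's copies live on different nodes.

```python
import zlib
from typing import Dict, List


def pick_shard(doc_id: str, num_primary_shards: int) -> int:
    """Map a document ID to a primary shard number.

    Assumption: CRC32 is a stand-in hash used only for illustration.
    """
    return zlib.crc32(doc_id.encode("utf-8")) % num_primary_shards


def place_copies(num_primary_shards: int, num_replicas: int,
                 nodes: List[str]) -> Dict[int, dict]:
    """Place each shard's primary and replicas on distinct nodes (round-robin)."""
    if num_replicas + 1 > len(nodes):
        raise ValueError("need at least num_replicas + 1 nodes to keep copies apart")
    layout = {}
    for shard in range(num_primary_shards):
        # Consecutive nodes hold the copies, so no node holds two copies of a shard.
        copies = [nodes[(shard + i) % len(nodes)] for i in range(num_replicas + 1)]
        layout[shard] = {"primary": copies[0], "replicas": copies[1:]}
    return layout


if __name__ == "__main__":
    nodes = ["node-1", "node-2", "node-3"]  # hypothetical node names
    print("log-0001 goes to shard", pick_shard("log-0001", 5))
    for shard, copies in place_copies(5, 1, nodes).items():
        print(shard, copies)
```

With one replica per shard, losing any single node in this sketch still leaves one copy of every shard available, which is the point of replication.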

Logstash

Logstash 是一个具有实时渠道能力的数据收集引擎。使用 JRuby 语言编写。其作者是世界著名的运维工程师乔丹西塞 (JordanSissel)。目前最新的版本是 2.1.1。

主要特点

几乎可以访问任何数据

可以和多种外部应用结合

支持弹性扩展

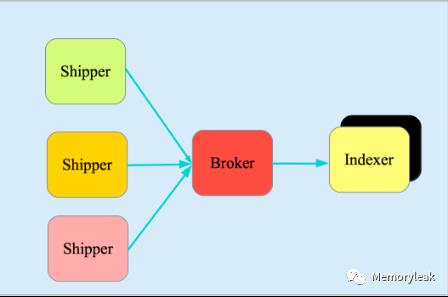

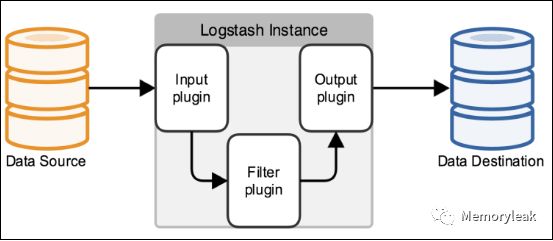

它由三个主要部分组成,见图 4:

Shipper-发送日志数据

Broker-收集数据,缺省内置 Redis

Indexer-数据写入

图 4.Logstash 基本组成

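Kibana

Kibana is a web platform, released under the Apache open-source license and written in JavaScript, that provides analytics and visualization for Elasticsearch. It can search and interact with the data in Elasticsearch indices and generate charts and tables along various dimensions. At the time of writing, the latest version is 4.3, referred to as Kibana 4.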

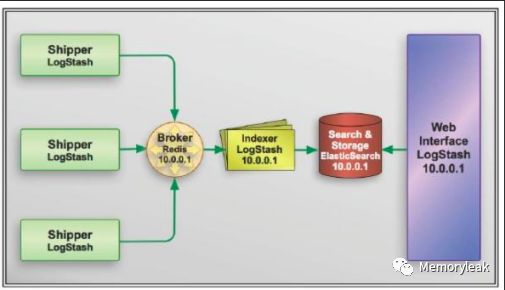

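ELK stack architecture

The complete architecture of the ELK stack is shown in Figure 5. In the basic flow, the Shipper collects data from the various sources and sends it to the Broker; the Indexer then writes the data held in the Broker into Elasticsearch; Elasticsearch indexes the data; and Kibana analyzes it and presents the results as charts.

Figure 5. ELK stack architecture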
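The Shipper, Broker, Indexer flow can be sketched in a few lines of Python. This is only a toy model under stated assumptions: an in-memory deque stands in for the Broker, a plain list stands in for Elasticsearch, and the function names and sample log lines are invented for illustration.

```python
from collections import deque
from typing import Deque, Dict, Iterable, List


def shipper(lines: Iterable[str], broker: Deque[str]) -> None:
    """Shipper: push each raw log line into the broker queue."""
    for line in lines:
        broker.append(line)


def indexer(broker: Deque[str], store: List[Dict[str, str]]) -> None:
    """Indexer: drain the broker in arrival order and write events to the store."""
    while broker:
        line = broker.popleft()
        store.append({"message": line})


def search(store: List[Dict[str, str]], keyword: str) -> List[Dict[str, str]]:
    """Very rough stand-in for a query against the stored events."""
    return [doc for doc in store if keyword in doc["message"]]


if __name__ == "__main__":
    broker: Deque[str] = deque()        # stands in for the Broker
    store: List[Dict[str, str]] = []    # stands in for Elasticsearch
    shipper(["disk error on /dev/sda", "user login ok"], broker)
    indexer(broker, store)
    print(search(store, "error"))
```

The broker decouples the two sides: the shipper can keep sending even when the indexer is temporarily slower, because events simply wait in the queue.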

The three ELK components work together and fit a wide range of use cases efficiently. The stack has been adopted by many users, including Reuters, Facebook, and Stack Overflow.

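ELK installation and configuration

This part describes how to install and configure the ELK stack.

The test platforms are:

Ubuntu 14.04

CentOS 7.1

The software versions used are:

Elasticsearch 2.1.0

Logstash 2.1.1

Kibana 4.3.0

In addition, Nginx, Logstash-forwarder, and a JDK are required.

The architecture of the test system is shown in Figure 6.

Figure 6. Test system architecture

Note that this setup uses Nginx as a reverse proxy so that users can reach Kibana from outside. Nginx can also act as a load balancer, which can help performance.

Logstash-forwarder deserves a special mention. It is a log shipping tool written in Go. Because Logstash runs on Java, and to keep network transport efficient, Logstash itself is not used on the client systems to ship data. Logstash-forwarder was formerly called Lumberjack, and it is gradually being folded into Filebeat, part of Elastic's Beats product. That is beyond the scope of this article and is not discussed further here.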

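The installation proceeds as follows:

Step 1: Install the JDK

Step 2: Install Elasticsearch

Step 3: Install Kibana

Step 4: Install Nginx

Step 5: Install Logstash

Step 6: Configure Logstash

Step 7: Install Logstash-forwarder

Step 8: Final verification

Before you install

Two 64-bit virtual machines running Ubuntu 14.04, each with 2 CPUs, 4 GB of memory, and a 30 GB disk

Two 64-bit virtual machines running CentOS 7.1, each with 2 CPUs, 4 GB of memory, and a 30 GB disk

Create a user elk and a group elk, and grant the user sudo privileges; all installation steps below are performed as this user

On CentOS, also configure the official YUM repositories so that the CentOS packages are accessible

Note: all of the following operations are performed on both platforms.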

Step 1: Install the JDK

Elasticsearch requires at least Java 7. Oracle JDK 1.8 or OpenJDK 1.8 is generally recommended; OpenJDK 1.8 is used here.

Ubuntu 14.04

Add the Java software repository

Update the system and install the JDK

Verify Java

CentOS 7.1

Configure the YUM repository by creating a repository file and adding the repository definition to it

Install the JDK

Verify Java

Step 2: Install Elasticsearch

Ubuntu 14.04

Download Elasticsearch

$ wget https://download.elasticsearch.org/elasticsearch/release/org/elasticsearch/distribution/tar/elasticsearch/2.1.0/elasticsearch-2.1.0.tar.gz

Unpack the archive

Check the resulting directory structure

Edit the configuration file

Find the # network.host line and modify it

Start Elasticsearch

Verify Elasticsearch

CentOS 7.1

The steps are identical to those for Ubuntu 14.04 above.

Step 3: Install Kibana

Ubuntu 14.04

Download Kibana

$ wget https://download.elastic.co/kibana/kibana/kibana-4.3.0-linux-x64.tar.gz

Unpack the archive

Check the resulting directory structure

Edit the configuration file

Find the # server.host line and modify it

Start Kibana

Verify Kibana

Because Kibana is bound to localhost, its web page cannot be reached directly from another machine.

Instead, check the default port 5601 with netstat, or send a request with curl.
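As an alternative to netstat or curl, the same check can be sketched in Python with the standard socket module. This is only an illustrative helper (the function name is made up here); it assumes Kibana listens on its default port 5601 on localhost, as described above, and it only confirms that something accepts TCP connections on that port.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and name resolution errors.
        return False


if __name__ == "__main__":
    # 5601 is Kibana's default port, as mentioned in the text.
    print("Kibana port open:", port_is_open("localhost", 5601))
```

A True result does not prove that the listener is Kibana; an HTTP request such as the curl check is still needed for that.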

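CentOS 7.1

The steps are identical to those for Ubuntu 14.04 above.

Step 4: Install Nginx

Nginx provides a reverse proxy service, so that requests from outside can be forwarded to the internal application.

Ubuntu 14.04

Install the package

Edit the Nginx configuration file

Find server_name and set it to the correct value, either an IP address or an FQDN.

Then add the required configuration section to the file.

Note: using the IP address is recommended.

Restart the Nginx service

Verify access

http://FQDN or http://IP

CentOS 7.1

Configure the official Nginx yum repository

Install the package

Edit the Nginx configuration file

Check whether the http block (http{...}) contains the required line

Create a configuration file for Kibana

Add the configuration section to it

Note: using the IP address is recommended.

Start the Nginx service

Verify access

http://FQDN or http://IP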

Step 5: Install Logstash

Ubuntu 14.04

Download Logstash

Unpack the archive

Check the resulting directory structure

Verify Logstash

If the expected output is displayed, Logstash is working properly. Press CTRL-D to exit.

CentOS 7.1

The steps are identical to those for Ubuntu 14.04 above.

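Step 6: Configure Logstash

Logstash must be configured to specify where to read data from and where to send it. This process is called defining a Logstash pipeline.

A pipeline normally includes the required input and output sections and an optional filter section. See Figure 7.

Figure 7. Logstash pipeline structure

A standard pipeline configuration file follows this input, filter, output structure.

Each input or output block can contain multiple sources or destinations. The filter block defines how events are processed into the format the user specifies.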
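The input, filter, output structure can be illustrated with a small Python model. This is not Logstash code or Logstash configuration syntax; it is a sketch of the concept, and the function names, the log line format, and the rule that drops DEBUG events are all assumptions made for the example.

```python
import json
import re
from typing import Callable, Dict, Iterable, Iterator, List, Optional

Event = Dict[str, str]
Filter = Callable[[Event], Optional[Event]]

# Hypothetical line format: "<LEVEL> <text>", e.g. "ERROR disk full".
LINE_PATTERN = re.compile(r"^(?P<level>[A-Z]+)\s+(?P<text>.*)$")


def input_lines(lines: Iterable[str]) -> Iterator[Event]:
    """Input stage: wrap each raw line in an event."""
    for line in lines:
        yield {"message": line.rstrip("\n")}


def parse_level(event: Event) -> Optional[Event]:
    """Filter stage: extract 'level' and 'text' fields when the line matches."""
    match = LINE_PATTERN.match(event["message"])
    if match is None:
        return event  # leave unparsed events untouched
    enriched = dict(event)
    enriched.update(match.groupdict())
    return enriched


def drop_debug(event: Event) -> Optional[Event]:
    """Filter stage: returning None drops the event."""
    return None if event.get("level") == "DEBUG" else event


def output_json(event: Event, sink: List[str]) -> None:
    """Output stage: serialize the event as JSON into the sink."""
    sink.append(json.dumps(event, sort_keys=True))


def run_pipeline(lines: Iterable[str], filters: List[Filter], sink: List[str]) -> None:
    """Run every event through the filters in order, then write it out."""
    for event in input_lines(lines):
        current: Optional[Event] = event
        for apply_filter in filters:
            current = apply_filter(current)
            if current is None:
                break
        if current is not None:
            output_json(current, sink)


if __name__ == "__main__":
    out: List[str] = []
    run_pipeline(["ERROR disk full", "hello", "DEBUG noisy"], [parse_level, drop_debug], out)
    for line in out:
        print(line)
```

The filters are optional in the same sense as in the text: passing an empty filter list still moves every event from input to output unchanged.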

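Because logstash-forwarder is used here to ship data from the client to the server as the input source, the first task is to configure an SSL certificate, which is used to verify identity between the client and the server.

Ubuntu 14.04

Configure SSL

Find the [v3_ca] section, add the required line, then save and exit.

Run the commands that generate the certificate.

The logstash-forwarder.crt file generated here is used in the next section, when installing and configuring Logstash-forwarder.

Create the Logstash pipeline configuration file

Add the pipeline definition to it

Start Logstash

CentOS 7.1

Configuring Logstash on CentOS 7.1 differs only slightly in the SSL step; everything else is the same.

Find the [v3_ca] section, add the required line, then save and exit.

The logstash-forwarder.crt file generated here is used in the next section, when installing and configuring Logstash-forwarder.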

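Step 7: Install Logstash-forwarder

Note: Logstash-forwarder is also an open-source project, renamed from the original lumberjack. At the time this article was written, it was being absorbed into Filebeat, part of Elastic's Beats product. With Filebeat the configuration differs slightly; consult the official documentation for details.

Ubuntu 14.04

Install Logstash-forwarder

Note: Logstash-forwarder is installed on a separate machine, which simulates a client shipping data to the Logstash server.

Configure the Logstash-forwarder package repository

Run the following command:

$ wget -qO - https://packages.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

Install the package

Configure SSL

Create a directory for the certificate, then copy the SSL certificate file generated on the Logstash server in step 6 into it.

Configure Logstash-forwarder

Edit the network section ("network": {)

Edit the files section ("files": [)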
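To show the general shape of the two sections mentioned above, here is a hedged Python sketch that builds a JSON document with a "network" section and a "files" section. Only those two section names come from this article. The inner keys ("servers", "ssl ca", "timeout", "paths", "fields") reflect the format as commonly documented for logstash-forwarder and should be checked against the installed version; the server address, port, certificate path, and log path are purely hypothetical.

```python
import json
from typing import Dict, List


def build_forwarder_config(server: str, ca_path: str,
                           log_paths: List[str], log_type: str) -> Dict:
    """Build a config dictionary with a "network" and a "files" section."""
    return {
        "network": {
            "servers": [server],   # assumed key: host:port of the Logstash server
            "ssl ca": ca_path,     # assumed key: certificate copied from the server
            "timeout": 15,         # assumed key: seconds before giving up on a server
        },
        "files": [
            {
                "paths": log_paths,             # assumed key: files to watch
                "fields": {"type": log_type},   # assumed key: extra fields per event
            }
        ],
    }


def render(config: Dict) -> str:
    """Serialize the configuration as indented JSON."""
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    # All values below are hypothetical examples.
    config = build_forwarder_config(
        server="192.0.2.10:5043",
        ca_path="/etc/pki/tls/certs/logstash-forwarder.crt",
        log_paths=["/var/log/syslog"],
        log_type="syslog",
    )
    print(render(config))
```

Generating the file from a script like this is optional; editing the JSON by hand, as the steps describe, gives the same result.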

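Start Logstash-forwarder

Verify Logstash-forwarder

If there are errors, check the logs under the /var/log/logstash-forwarder directory.

CentOS 7.1

Configure the Logstash-forwarder package repository

Create the repository file, add the repository definition to it, then save and exit.

Install the package

The remaining steps are identical to those on Ubuntu 14.04 above.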

步骤 8,最后验证

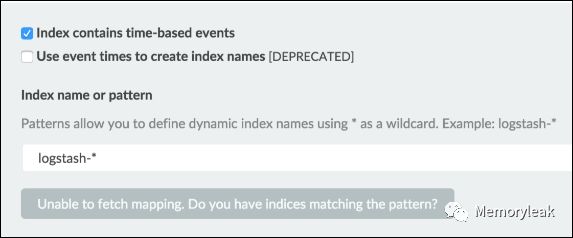

在前面安装 Kibana 的时候,曾经有过验证。不过,当时没有数据,打开 Web 页面的时候,将如下所示:

图 8. 无数据初始页面

现在,由于 logstash-forwarder 已经开始传输数据了,再次打开 Web 页面,将如下所示:

图 9. 配置索引页面

点击创建按钮(Create),在选择 Discover,可以看到如下画面:

图 10. 数据展示页面

至此,所有部件的工作都可以正常使用了。关于如何具体使用 Kibana 就不在本文中加以描述了,有兴趣的同学可以参考官网。

Input plugins

An input plugin enables a specific source of events to be read by Logstash.

The following input plugins are available below. For a list of Elastic supported plugins, please consult the Support Matrix.

| Plugin | Description | GitHub repository |
| --- | --- | --- |
| azure_event_hubs | Receives events from Azure Event Hubs | azure_event_hubs |
| beats | Receives events from the Elastic Beats framework | logstash-input-beats |
| cloudwatch | Pulls events from the Amazon Web Services CloudWatch API | logstash-input-cloudwatch |
| couchdb_changes | Streams events from CouchDB’s | logstash-input-couchdb_changes |
| dead_letter_queue | Reads events from Logstash’s dead letter queue | logstash-input-dead_letter_queue |
| elasticsearch | Reads query results from an Elasticsearch cluster | logstash-input-elasticsearch |
| exec | Captures the output of a shell command as an event | logstash-input-exec |
| file | Streams events from files | logstash-input-file |
| ganglia | Reads Ganglia packets over UDP | logstash-input-ganglia |
| gelf | Reads GELF-format messages from Graylog2 as events | logstash-input-gelf |
| generator | Generates random log events for test purposes | logstash-input-generator |
| github | Reads events from a GitHub webhook | logstash-input-github |
| google_pubsub | Consumes events from a Google Cloud PubSub service | logstash-input-google_pubsub |
| graphite | Reads metrics from the | logstash-input-graphite |
| heartbeat | Generates heartbeat events for testing | logstash-input-heartbeat |
| http | Receives events over HTTP or HTTPS | logstash-input-http |
| http_poller | Decodes the output of an HTTP API into events | logstash-input-http_poller |
| imap | Reads mail from an IMAP server | logstash-input-imap |
| irc | Reads events from an IRC server | logstash-input-irc |
| jdbc | Creates events from JDBC data | logstash-input-jdbc |
| jms | Reads events from a JMS broker | logstash-input-jms |
| jmx | Retrieves metrics from remote Java applications over JMX | logstash-input-jmx |
| kafka | Reads events from a Kafka topic | logstash-input-kafka |
| kinesis | Receives events through an AWS Kinesis stream | logstash-input-kinesis |
| log4j | Reads events over a TCP socket from a Log4j | logstash-input-log4j |
| lumberjack | Receives events using the Lumberjack protocol | logstash-input-lumberjack |
| meetup | Captures the output of command line tools as an event | logstash-input-meetup |
| pipe | Streams events from a long-running command pipe | logstash-input-pipe |
| puppet_facter | Receives facts from a Puppet server | logstash-input-puppet_facter |
| rabbitmq | Pulls events from a RabbitMQ exchange | logstash-input-rabbitmq |
| redis | Reads events from a Redis instance | logstash-input-redis |
| relp | Receives RELP events over a TCP socket | logstash-input-relp |
| rss | Captures the output of command line tools as an event | logstash-input-rss |
| s3 | Streams events from files in a S3 bucket | logstash-input-s3 |
| salesforce | Creates events based on a Salesforce SOQL query | logstash-input-salesforce |
| snmp | Polls network devices using Simple Network Management Protocol (SNMP) | logstash-input-snmp |
| snmptrap | Creates events based on SNMP trap messages | logstash-input-snmptrap |
| sqlite | Creates events based on rows in an SQLite database | logstash-input-sqlite |
| sqs | Pulls events from an Amazon Web Services Simple Queue Service queue | logstash-input-sqs |
| stdin | Reads events from standard input | logstash-input-stdin |
| stomp | Creates events received with the STOMP protocol | logstash-input-stomp |
| syslog | Reads syslog messages as events | logstash-input-syslog |
| tcp | Reads events from a TCP socket | logstash-input-tcp |
| twitter | Reads events from the Twitter Streaming API | logstash-input-twitter |
| udp | Reads events over UDP | logstash-input-udp |
| unix | Reads events over a UNIX socket | logstash-input-unix |
| varnishlog | Reads from the | logstash-input-varnishlog |
| websocket | Reads events from a websocket | logstash-input-websocket |
| wmi | Creates events based on the results of a WMI query | logstash-input-wmi |
| xmpp | Receives events over the XMPP/Jabber protocol | logstash-input-xmpp |

Filter plugins

A filter plugin performs intermediary processing on an event. Filters are often applied conditionally depending on the characteristics of the event.

The following filter plugins are available below. For a list of Elastic supported plugins, please consult the Support Matrix.

| Plugin | Description | GitHub repository |
| --- | --- | --- |
| aggregate | Aggregates information from several events originating with a single task | logstash-filter-aggregate |
| alter | Performs general alterations to fields that the | logstash-filter-alter |
| cidr | Checks IP addresses against a list of network blocks | logstash-filter-cidr |
| cipher | Applies or removes a cipher to an event | logstash-filter-cipher |
| clone | Duplicates events | logstash-filter-clone |
| csv | Parses comma-separated value data into individual fields | logstash-filter-csv |
| date | Parses dates from fields to use as the Logstash timestamp for an event | logstash-filter-date |
| de_dot | Computationally expensive filter that removes dots from a field name | logstash-filter-de_dot |
| dissect | Extracts unstructured event data into fields using delimiters | logstash-filter-dissect |
| dns | Performs a standard or reverse DNS lookup | logstash-filter-dns |
| drop | Drops all events | logstash-filter-drop |
| elapsed | Calculates the elapsed time between a pair of events | logstash-filter-elapsed |
| elasticsearch | Copies fields from previous log events in Elasticsearch to current events | logstash-filter-elasticsearch |
| environment | Stores environment variables as metadata sub-fields | logstash-filter-environment |
| extractnumbers | Extracts numbers from a string | logstash-filter-extractnumbers |
| fingerprint | Fingerprints fields by replacing values with a consistent hash | logstash-filter-fingerprint |
| geoip | Adds geographical information about an IP address | logstash-filter-geoip |
| grok | Parses unstructured event data into fields | logstash-filter-grok |
| http | Provides integration with external web services/REST APIs | logstash-filter-http |
| i18n | Removes special characters from a field | logstash-filter-i18n |
| jdbc_static | Enriches events with data pre-loaded from a remote database | logstash-filter-jdbc_static |
| jdbc_streaming | Enrich events with your database data | logstash-filter-jdbc_streaming |
| json | Parses JSON events | logstash-filter-json |
| json_encode | Serializes a field to JSON | logstash-filter-json_encode |
| kv | Parses key-value pairs | logstash-filter-kv |
| memcached | Provides integration with external data in Memcached | logstash-filter-memcached |
| metricize | Takes complex events containing a number of metrics and splits these up into multiple events, each holding a single metric | logstash-filter-metricize |
| metrics | Aggregates metrics | logstash-filter-metrics |
| mutate | Performs mutations on fields | logstash-filter-mutate |
| prune | Prunes event data based on a list of fields to blacklist or whitelist | logstash-filter-prune |
| range | Checks that specified fields stay within given size or length limits | logstash-filter-range |
| ruby | Executes arbitrary Ruby code | logstash-filter-ruby |
| sleep | Sleeps for a specified time span | logstash-filter-sleep |
| split | Splits multi-line messages into distinct events | logstash-filter-split |
| syslog_pri | Parses the | logstash-filter-syslog_pri |
| throttle | Throttles the number of events | logstash-filter-throttle |
| tld | Replaces the contents of the default message field with whatever you specify in the configuration | logstash-filter-tld |
| translate | Replaces field contents based on a hash or YAML file | logstash-filter-translate |
| truncate | Truncates fields longer than a given length | logstash-filter-truncate |
| urldecode | Decodes URL-encoded fields | logstash-filter-urldecode |
| useragent | Parses user agent strings into fields | logstash-filter-useragent |
| uuid | Adds a UUID to events | logstash-filter-uuid |
| xml | Parses XML into fields | logstash-filter-xml |

Output plugins

An output plugin sends event data to a particular destination. Outputs are the final stage in the event pipeline.

The following output plugins are available below. For a list of Elastic supported plugins, please consult the Support Matrix.

| Plugin | Description | GitHub repository |
| --- | --- | --- |
| boundary | Sends annotations to Boundary based on Logstash events | logstash-output-boundary |
| circonus | Sends annotations to Circonus based on Logstash events | logstash-output-circonus |
| cloudwatch | Aggregates and sends metric data to AWS CloudWatch | logstash-output-cloudwatch |
| csv | Writes events to disk in a delimited format | logstash-output-csv |
| datadog | Sends events to DataDogHQ based on Logstash events | logstash-output-datadog |
| datadog_metrics | Sends metrics to DataDogHQ based on Logstash events | logstash-output-datadog_metrics |
| elastic_app_search | Sends events to the Elastic App Search solution | logstash-output-elastic_app_search |
| elasticsearch | Stores logs in Elasticsearch | logstash-output-elasticsearch |
| email | Sends email to a specified address when output is received | logstash-output-email |
| exec | Runs a command for a matching event | logstash-output-exec |
| file | Writes events to files on disk | logstash-output-file |
| ganglia | Writes metrics to Ganglia’s | logstash-output-ganglia |
| gelf | Generates GELF formatted output for Graylog2 | logstash-output-gelf |
| google_bigquery | Writes events to Google BigQuery | logstash-output-google_bigquery |
| google_pubsub | Uploads log events to Google Cloud Pubsub | logstash-output-google_pubsub |
| graphite | Writes metrics to Graphite | logstash-output-graphite |
| graphtastic | Sends metric data on Windows | logstash-output-graphtastic |
| http | Sends events to a generic HTTP or HTTPS endpoint | logstash-output-http |
| influxdb | Writes metrics to InfluxDB | logstash-output-influxdb |
| irc | Writes events to IRC | logstash-output-irc |
| juggernaut | Pushes messages to the Juggernaut websockets server | logstash-output-juggernaut |
| kafka | Writes events to a Kafka topic | logstash-output-kafka |
| librato | Sends metrics, annotations, and alerts to Librato based on Logstash events | logstash-output-librato |
| loggly | Ships logs to Loggly | logstash-output-loggly |
| lumberjack | Sends events using the | logstash-output-lumberjack |
| metriccatcher | Writes metrics to MetricCatcher | logstash-output-metriccatcher |
| mongodb | Writes events to MongoDB | logstash-output-mongodb |
| nagios | Sends passive check results to Nagios | logstash-output-nagios |
| nagios_nsca | Sends passive check results to Nagios using the NSCA protocol | logstash-output-nagios_nsca |
| opentsdb | Writes metrics to OpenTSDB | logstash-output-opentsdb |
| pagerduty | Sends notifications based on preconfigured services and escalation policies | logstash-output-pagerduty |
| pipe | Pipes events to another program’s standard input | logstash-output-pipe |
| rabbitmq | Pushes events to a RabbitMQ exchange | logstash-output-rabbitmq |
| redis | Sends events to a Redis queue using the | logstash-output-redis |
| redmine | Creates tickets using the Redmine API | logstash-output-redmine |
| riak | Writes events to the Riak distributed key/value store | logstash-output-riak |
| riemann | Sends metrics to Riemann | logstash-output-riemann |
| s3 | Sends Logstash events to the Amazon Simple Storage Service | logstash-output-s3 |
| sns | Sends events to Amazon’s Simple Notification Service | logstash-output-sns |
| solr_http | Stores and indexes logs in Solr | logstash-output-solr_http |
| sqs | Pushes events to an Amazon Web Services Simple Queue Service queue | logstash-output-sqs |
| statsd | Sends metrics using the | logstash-output-statsd |
| stdout | Prints events to the standard output | logstash-output-stdout |
| stomp | Writes events using the STOMP protocol | logstash-output-stomp |
| syslog | Sends events to a | logstash-output-syslog |
| tcp | Writes events over a TCP socket | logstash-output-tcp |
| timber | Sends events to the Timber.io logging service | logstash-output-timber |
| udp | Sends events over UDP | logstash-output-udp |
| webhdfs | Sends Logstash events to HDFS using the | logstash-output-webhdfs |
| websocket | Publishes messages to a websocket | logstash-output-websocket |
| xmpp | Posts events over XMPP | logstash-output-xmpp |
| zabbix | Sends events to a Zabbix server | logstash-output-zabbix |

https://www.elastic.co/cn/products/logstash
