Quick Tip | Configuring the Environment for Spark to Scan HBase with Kerberos

Posted by 大数据技术与架构 (Big Data Technology and Architecture)



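After Kerberos is integrated, a lot of client code that uses cluster services has to be rewritten, for example Java connecting to Impala over JDBC, Java scanning HBase tables, and Java Kafka clients. Spark programs are the exception.

The following describes what needs to be prepared after Kerberos integration so that Spark programs run correctly.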

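Before starting, the reader is assumed to be familiar with the following (not covered in detail here):

- Spark programs and the spark-submit script
- CDH integrated with Kerberos and working correctly
- HBase working correctly

Spark code for reading HBase is not provided here.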

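Implementation

After Spark2 has been integrated into CDH and the installation is complete, you should see the following:

Integrating Spark with Kerberos

Restart Spark2 and deploy the client configuration

Add soft links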

```
[root@node1 spark2]# vi /etc/extra-lib/hbase/classpath.txt
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/jars/htrace-core-3.0.4.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/jars/htrace-core-3.2.0-incubating.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/jars/htrace-core4-4.0.1-incubating.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/jars/commons-pool2-2.4.3.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/jars/jedis-2.9.0.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/jars/druid-1.1.6.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-annotations-1.2.0-cdh5.11.0.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-annotations-1.2.0-cdh5.11.0-tests.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-client-1.2.0-cdh5.11.0.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-client.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-common-1.2.0-cdh5.11.0.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-common-1.2.0-cdh5.11.0-tests.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-examples-1.2.0-cdh5.11.0.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-examples.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-external-blockcache-1.2.0-cdh5.11.0.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-external-blockcache.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-hadoop2-compat-1.2.0-cdh5.11.0.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-hadoop2-compat-1.2.0-cdh5.11.0-tests.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-hadoop-compat-1.2.0-cdh5.11.0.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-hadoop-compat-1.2.0-cdh5.11.0-tests.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-it-1.2.0-cdh5.11.0.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-it-1.2.0-cdh5.11.0-tests.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-prefix-tree-1.2.0-cdh5.11.0.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-procedure-1.2.0-cdh5.11.0.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-protocol-1.2.0-cdh5.11.0.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-resource-bundle-1.2.0-cdh5.11.0.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-rest-1.2.0-cdh5.11.0.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-rsgroup-1.2.0-cdh5.11.0.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-rsgroup-1.2.0-cdh5.11.0-tests.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-server-1.2.0-cdh5.11.0.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-server-1.2.0-cdh5.11.0-tests.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-shell-1.2.0-cdh5.11.0.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-spark-1.2.0-cdh5.11.0.jar
/opt/cloudera/parcels/CDH-5.11.0-1.cdh5.11.0.p0.34/lib/hbase/hbase-thrift-1.2.0-cdh5.11.0.jar
/opt/cloudera/parcels/CDH/jars/ojdbc6.jar
/etc/extra-lib/hbase/spring/spring-beans-5.0.2.RELEASE.jar
/etc/extra-lib/hbase/spring/spring-core-5.0.2.RELEASE.jar
/etc/extra-lib/hbase/spring/spring-expression-5.0.2.RELEASE.jar
/etc/extra-lib/hbase/spring/spring-jcl-5.0.2.RELEASE.jar
:wq

# Every node must have this file
scp /etc/extra-lib/hbase/classpath.txt root@bi-slave1:/etc/extra-lib/hbase/...
```
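Because each node reads its own copy of `classpath.txt`, a jar path that does not exist on one node only breaks jobs whose containers land there. As a minimal sketch, assuming bash and one absolute jar path per line as in the file above, the hypothetical helper `check_classpath_entries` reports listed jars that are missing locally; it could be run on each node after the copy.

```shell
#!/usr/bin/env bash
# Sketch (assumption: one absolute jar path per line, as in classpath.txt).

# check_classpath_entries LIST_FILE  (hypothetical helper)
#   Prints "missing: <path>" for every listed jar that is not a regular
#   file on this node; returns 1 if any are missing, 0 otherwise.
check_classpath_entries() {
  local list_file="$1"
  local missing=0
  local jar
  # The "|| [ -n ... ]" part also handles a last line without a newline.
  while IFS= read -r jar || [ -n "$jar" ]; do
    [ -z "$jar" ] && continue   # ignore blank lines
    if [ ! -f "$jar" ]; then
      echo "missing: $jar"
      missing=1
    fi
  done < "$list_file"
  return "$missing"
}

# Usage on each node:
#   check_classpath_entries /etc/extra-lib/hbase/classpath.txt
```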

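Modify the Spark2 configuration in Cloudera Manager (CM):

"Spark 2 Service Advanced Configuration Snippet (Safety Valve) for spark2-conf/spark-env.sh"

"Spark 2 Client Advanced Configuration Snippet (Safety Valve) for spark2-conf/spark-env.sh"

Add the following line to both configuration fields:

```
export SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:$(paste -sd: "/etc/extra-lib/hbase/classpath.txt")"
```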
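In that line, `paste -s` merges all lines of `classpath.txt` into a single line and `-d:` joins them with `:`, so the jar list is appended to whatever `SPARK_DIST_CLASSPATH` already holds. The sketch below reproduces that step as a hypothetical bash function, `build_dist_classpath`; unlike the one-liner, it avoids a leading empty `:` entry when the existing value is empty.

```shell
#!/usr/bin/env bash
# Sketch of what the safety-valve line does (helper name is hypothetical).

# build_dist_classpath EXISTING LIST_FILE
#   Echoes EXISTING with the colon-joined lines of LIST_FILE appended.
build_dist_classpath() {
  local existing="$1"
  local list_file="$2"
  local joined
  # -s: join all lines of the file serially; -d: use ':' between them.
  joined="$(paste -sd: "$list_file")"
  if [ -n "$existing" ]; then
    echo "${existing}:${joined}"
  else
    echo "$joined"
  fi
}

# Equivalent use in spark-env.sh:
#   export SPARK_DIST_CLASSPATH="$(build_dist_classpath "${SPARK_DIST_CLASSPATH:-}" /etc/extra-lib/hbase/classpath.txt)"
```

Running the function by hand on a node and echoing the result is a quick way to see exactly which classpath Spark will receive.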

Restart Spark and deploy the client configuration

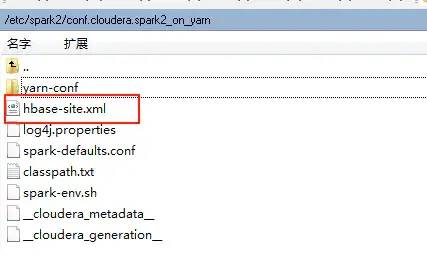

Add hbase-site.xml to $SPARK_HOME/conf

Download the HBase client configuration

Unzip it to get the hbase-site.xml file, and add it to $SPARK_HOME/conf

The same XML file is also needed in yarn-conf

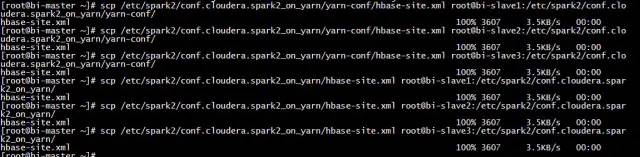
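The placement can be scripted. This is a minimal bash sketch, assuming the Spark2 conf directory is passed in and that `yarn-conf` is a subdirectory of it (check the actual layout of your parcel); `install_hbase_site` and the example paths are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: copy hbase-site.xml into the Spark conf dir and its yarn-conf
# subdirectory. Assumption: yarn-conf lives under the given conf dir.

# install_hbase_site SRC_XML SPARK_CONF_DIR  (hypothetical helper)
install_hbase_site() {
  local src="$1"
  local conf_dir="$2"
  local dest
  [ -f "$src" ] || { echo "not found: $src" >&2; return 1; }
  for dest in "$conf_dir" "$conf_dir/yarn-conf"; do
    mkdir -p "$dest"
    cp "$src" "$dest/hbase-site.xml"
  done
}

# Usage (example paths):
#   install_hbase_site ./hbase-conf/hbase-site.xml "$SPARK_HOME/conf"
```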

Copy the files to the other nodes
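A loop over the cluster's hosts avoids copying file by file. In this sketch, `distribute` and the `DRY_RUN` switch are hypothetical, and only the host `bi-slave1` comes from the original; with `DRY_RUN=1` the `scp` commands are printed instead of executed.

```shell
#!/usr/bin/env bash
# Sketch: push one file to the same directory on several hosts as root.

# distribute FILE DEST_DIR HOST...  (hypothetical helper)
distribute() {
  local file="$1" dest_dir="$2"
  shift 2
  local host
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      # Dry run: only show what would be executed.
      echo "scp $file root@$host:$dest_dir/"
    else
      scp "$file" "root@$host:$dest_dir/" || return 1
    fi
  done
}

# Usage (bi-slave2 is a hypothetical host name):
#   DRY_RUN=1 distribute /etc/extra-lib/hbase/classpath.txt /etc/extra-lib/hbase bi-slave1 bi-slave2
```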

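Testing Spark

Because the Spark program needs to read HBase tables, first grant permissions to the user; the deng_yb account is used as the example here.

Log in to the HBase shell as the hbase principal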

```
[root@node1 ~]# kinit -kt /opt/cm-5.11.0/run/cloudera-scm-agent/process/1247-hbase-HBASERESTSERVER/hbase.keytab hbase/node1@W.COM
[root@node1 ~]# klist
Ticket cache: FILE:/tmp/krb5cc_0
Default principal: hbase/node1@W.COM

Valid starting     Expires            Service principal
06/13/18 21:20:03  06/14/18 21:20:03  krbtgt/W.COM@W.COM
	renew until 06/18/18 21:20:03
[root@node1 ~]# hbase shell
.....
```

Grant permissions to the deng_yb account

Here, read/write (RW) permission is granted on U:DAY_ORG_CMP_OSI and U:DAY_ORG_PRO_CATE_SPARK:

```
hbase(main):001:0> list
TABLE
U:DAY_ORG_CMP_OSI
U:DAY_ORG_PRO_CATE_SPARK
test
test_user
user_info
5 row(s) in 0.5330 seconds

=> ["U:DAY_ORG_CMP_OSI", "U:DAY_ORG_PRO_CATE_SPARK", "test", "test_user", "user_info"]
hbase(main):002:0> grant 'deng_yb','RW','U:DAY_ORG_CMP_OSI'
0 row(s) in 0.6180 seconds

hbase(main):003:0> grant 'deng_yb','RW','U:DAY_ORG_PRO_CATE_SPARK'
0 row(s) in 0.1320 seconds
```
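When several tables need the same grant, the statements can be generated and piped into `hbase shell` from a session that holds the hbase ticket. The bash function below is a hypothetical sketch that only builds the text; it reuses the `grant 'user','RW','table'` form shown above.

```shell
#!/usr/bin/env bash
# Sketch: emit one HBase shell grant statement per table.

# hbase_grant_script USER PERMS TABLE...  (hypothetical helper)
hbase_grant_script() {
  local user="$1" perms="$2"
  shift 2
  local table
  for table in "$@"; do
    printf "grant '%s','%s','%s'\n" "$user" "$perms" "$table"
  done
}

# Usage (review the output first, then pipe it in):
#   hbase_grant_script deng_yb RW U:DAY_ORG_CMP_OSI U:DAY_ORG_PRO_CATE_SPARK | hbase shell
```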

Log in as deng_yb

```
[root@node1 ~]# kinit deng_yb
Password for deng_yb@W.COM:
[root@node1 ~]# klist
Ticket cache: FILE:/tmp/krb5cc_0
Default principal: deng_yb@W.COM

Valid starting     Expires            Service principal
06/13/18 21:24:18  06/14/18 21:24:18  krbtgt/W.COM@W.COM
	renew until 06/20/18 21:24:18
```
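Both `kinit` calls in this walkthrough use the same cache, `FILE:/tmp/krb5cc_0`, so logging in as deng_yb replaces the hbase ticket. A simple guard against submitting under the wrong identity is to check the `Default principal` line of `klist` first. The helpers below are a hypothetical bash sketch, assuming MIT-style `klist` output like the session above.

```shell
#!/usr/bin/env bash
# Sketch: confirm which principal owns the current ticket cache.

# default_principal: reads klist output on stdin, prints the principal.
default_principal() {
  sed -n 's/^Default principal: *//p'
}

# require_principal EXPECTED  (hypothetical helper)
#   Returns 1 unless the current ticket cache belongs to EXPECTED.
require_principal() {
  local actual
  actual="$(klist 2>/dev/null | default_principal)"
  if [ "$actual" != "$1" ]; then
    echo "expected a ticket for $1, found '${actual:-none}'" >&2
    return 1
  fi
}

# Usage:
#   kinit deng_yb && require_principal deng_yb@W.COM
```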

Open the HBase shell and list the tables deng_yb can access

```
hbase(main):001:0> list
TABLE
U:DAY_ORG_CMP_OSI
U:DAY_ORG_PRO_CATE_SPARK
2 row(s) in 0.5380 seconds

=> ["U:DAY_ORG_CMP_OSI", "U:DAY_ORG_PRO_CATE_SPARK"]
hbase(main):002:0>
# Permissions granted successfully
```

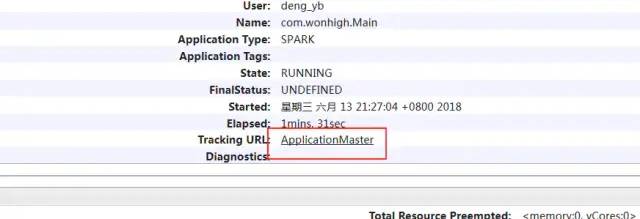

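Run the Spark program (the account running it must be able to access the Hive metastore):

```
spark2-submit --master yarn --deploy-mode cluster \
  --executor-memory 4G --total-executor-cores 4 --driver-memory 4g \
  --class com.W.Main /usr/local/W/bi-bdap-0.1.0-SNAPSHOT.jar
```

Note that `--total-executor-cores` only applies to Spark standalone and Mesos; on YARN, executor count and cores are set with `--num-executors` and `--executor-cores`.

When the job finishes, remember to check the ApplicationMaster (AM) log.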
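A small wrapper can refuse to submit when no ticket is cached. This sketch assumes MIT Kerberos, where `klist -s` exits non-zero if the credentials cache is missing or expired. `submit_job` and the `DRY_RUN` switch are hypothetical, and the executor sizing (`--num-executors 2 --executor-cores 2`, the YARN-side flags) is an assumed stand-in for the four cores in the original command.

```shell
#!/usr/bin/env bash
# Sketch: submit only when a valid Kerberos ticket is cached.

# submit_job JAR MAIN_CLASS  (hypothetical helper)
submit_job() {
  local jar="$1" main_class="$2"
  # Assumed sizing: 2 executors x 2 cores = 4 cores in total.
  set -- spark2-submit --master yarn --deploy-mode cluster \
    --driver-memory 4g --executor-memory 4G \
    --num-executors 2 --executor-cores 2 \
    --class "$main_class" "$jar"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"   # print the command instead of running it
    return 0
  fi
  klist -s || { echo "no valid Kerberos ticket; run kinit first" >&2; return 1; }
  "$@"
}

# Usage:
#   submit_job /usr/local/W/bi-bdap-0.1.0-SNAPSHOT.jar com.W.Main
```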

If the AM log looks fine, check the executor logs next.

At this point, if the logs show no exceptions, the Spark job ran successfully.
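With YARN log aggregation enabled, the AM and executor container logs of a finished application can be fetched together with `yarn logs -applicationId <appId>`. The sketch below, with hypothetical helper names, saves that output and greps it for `Exception` or `ERROR`; the pattern is a heuristic, not a complete error detector.

```shell
#!/usr/bin/env bash
# Sketch: scan aggregated YARN logs for likely errors.

# find_errors FILE: prints matching lines with line numbers;
# returns 0 if any were found (grep's exit status).
find_errors() {
  grep -nE 'Exception|ERROR' "$1"
}

# check_app_logs APP_ID  (hypothetical helper)
#   Returns 2 if logs cannot be fetched, 1 if errors are found, else 0.
check_app_logs() {
  local log_file
  log_file="$(mktemp)"
  yarn logs -applicationId "$1" > "$log_file" 2>&1 || { rm -f "$log_file"; return 2; }
  if find_errors "$log_file"; then
    echo "errors found in logs of $1" >&2
    rm -f "$log_file"
    return 1
  fi
  rm -f "$log_file"
}
```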


Troubleshooting

Some developers ran into authentication failures:

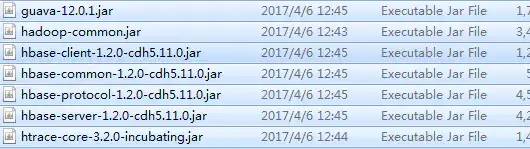

The following jars are required:

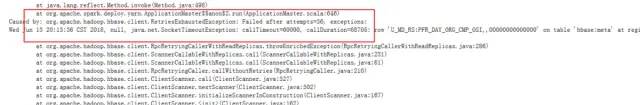

Connections to HBase keep timing out

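Conclusion

For Spark to run in the cluster, the following must hold:

1) The Spark classpath must include the HBase-related jars.
2) $SPARK_HOME/conf must contain hbase-site.xml, or the program's Configuration must …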
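The requirements can be turned into a pre-flight check run on a node before submitting. This hypothetical bash sketch only covers the hbase-site.xml branch of the second requirement, and it assumes that finding `hbase-client` in `SPARK_DIST_CLASSPATH` is a good enough proxy for the HBase jars being present.

```shell
#!/usr/bin/env bash
# Sketch: check both environment requirements on the current node.

# preflight DIST_CLASSPATH SPARK_CONF_DIR  (hypothetical helper)
#   Returns 1 and prints the reasons if a requirement is not met.
preflight() {
  local cp="$1" conf_dir="$2" ok=0
  # Assumption: an hbase-client jar stands in for "HBase jars present".
  case "$cp" in
    *hbase-client*) ;;
    *) echo "SPARK_DIST_CLASSPATH has no hbase-client jar" >&2; ok=1 ;;
  esac
  if [ ! -f "$conf_dir/hbase-site.xml" ]; then
    echo "missing $conf_dir/hbase-site.xml" >&2
    ok=1
  fi
  return "$ok"
}

# Usage:
#   preflight "$SPARK_DIST_CLASSPATH" "$SPARK_HOME/conf"
```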
