Kubernetes Networking Explained: A First Look at Container Networking

Posted by 分布式实验室 (Distributed Lab)


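Linux namespaces and what each one isolates:

| Namespace | Isolates |
|---|---|
| Mount Namespace | File system mount points |
| UTS Namespace | Hostname |
| IPC Namespace | Inter-process communication |
| PID Namespace | Process IDs |
| Network Namespace | Network stack |
| User Namespace | User and group IDs |
| Control group (Cgroup) Namespace | Cgroup root directory (since Linux 4.6) |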
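Each namespace a process belongs to shows up as a symbolic link under `/proc/<pid>/ns/`, whose target has the form `type:[inode]`; processes in the same namespace show the same inode number. The minimal Bash sketch below (the function name `show_namespaces` is hypothetical, not part of any tool) prints the type and inode of every namespace of a process. It only reads `/proc`, so it assumes Linux but needs no root for your own processes.

```bash
#!/usr/bin/env bash
# show_namespaces [PID] (hypothetical helper): list the namespaces of a process.
# Every entry in /proc/<pid>/ns is a symlink whose target looks like
# "net:[4026531992]"; the number in brackets is the namespace inode.
show_namespaces() {
  local pid=${1:-$$} entry target
  for entry in /proc/"$pid"/ns/*; do
    # Skip entries we may not read (e.g. processes owned by other users)
    target=$(readlink "$entry" 2>/dev/null) || continue
    # Print the namespace type (the file name) and the inode (digits only)
    printf '%-18s %s\n' "${entry##*/}" "${target//[^0-9]/}"
  done
}
```

Running `show_namespaces` in two shells started inside and outside a container, and comparing the `net` lines, is a quick way to see whether they share a network namespace.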

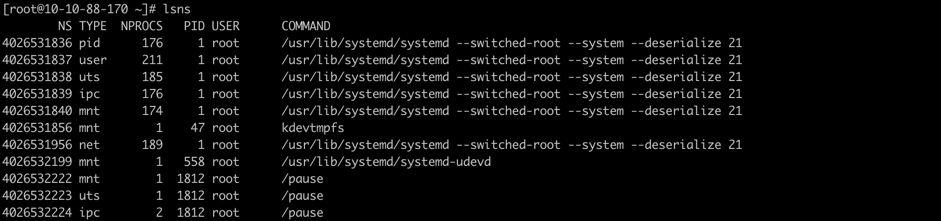

Columns in the output of `lsns`:

| Column | Meaning |
|---|---|
| NS | Namespace identifier (inode number) |
| TYPE | Kind of namespace |
| NPROCS | Number of processes in the namespace |
| PID | Lowest PID in the namespace |
| USER | User name of the owner of that PID |
| COMMAND | Command line of that PID |
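To see where the NS, NPROCS and PID columns come from, here is a rough Bash sketch (the function `list_net_namespaces` is hypothetical and is not a replacement for `lsns`) that groups processes by network-namespace inode using only `/proc`. As an unprivileged user it can only inspect processes it is allowed to read, so run it as root to cover every process.

```bash
#!/usr/bin/env bash
# list_net_namespaces (hypothetical helper): an lsns-like summary of network
# namespaces built from /proc. Output columns: NS (inode), NPROCS, lowest PID.
list_net_namespaces() {
  local link pid target
  for link in /proc/[0-9]*/ns/net; do
    pid=${link#/proc/}
    pid=${pid%%/*}
    # readlink fails for processes we may not inspect or that already exited
    target=$(readlink "$link" 2>/dev/null) || continue
    echo "${target//[^0-9]/} $pid"
  done | awk '
    # count processes per inode and remember the lowest PID seen
    { n[$1]++; if (!($1 in low) || $2 + 0 < low[$1]) low[$1] = $2 + 0 }
    END { for (ns in n) print ns, n[ns], low[ns] }
  ' | sort -n
}
```

Its output can be compared with `lsns -t net` on the same host.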

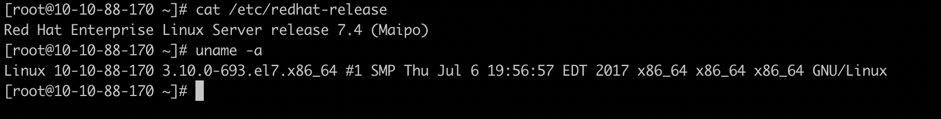

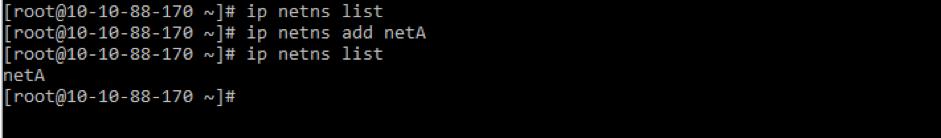

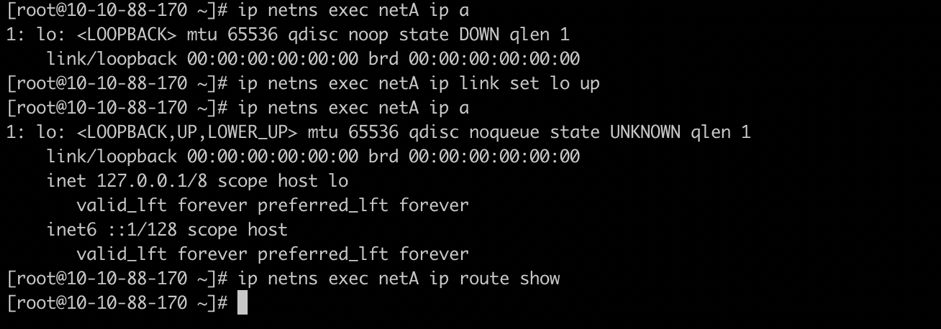

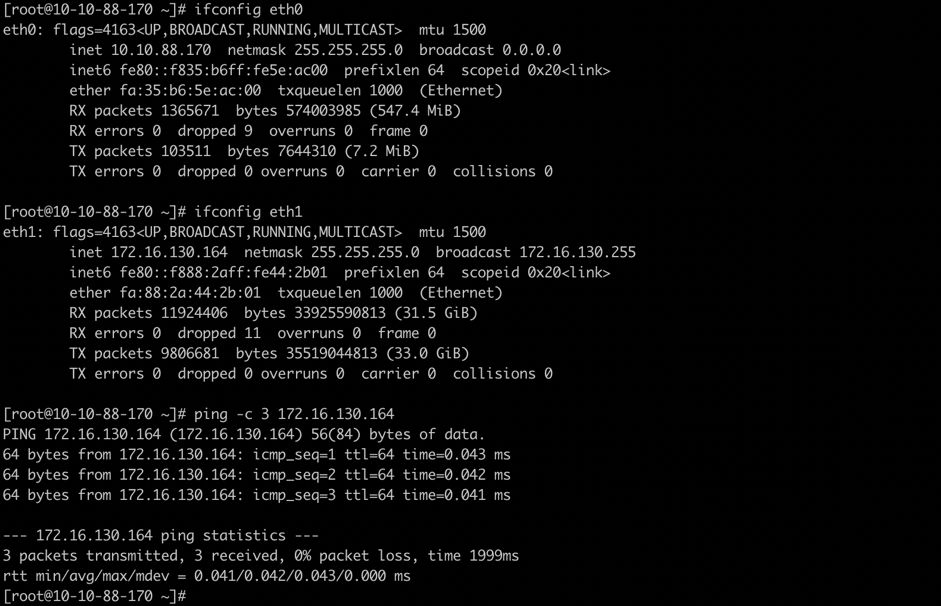

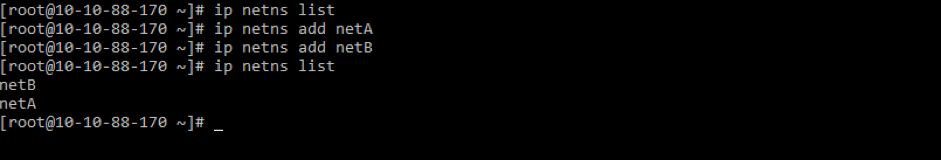

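Closely related to network namespaces is the `ip netns` command, which manages persistent (named) network namespaces: creating, deleting and configuring them. When `ip netns` creates a network namespace, it bind-mounts it onto a file under `/var/run/netns`, which keeps the namespace alive even when no process is running inside it. Docker skips this step, so anyone who has used Docker will notice that the network namespaces of containers it creates do not show up in `ip netns` on the host (it takes only a small trick to make them visible; more on that later).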

```shell
ip netns add <namespace name>              # create a network namespace
ip netns list                              # list network namespaces
ip netns delete <namespace name>           # delete a network namespace
ip netns exec <namespace name> <command>   # run a command inside a network namespace
```

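```shell
ip link set dev eth0 netns netA
```

After the move, eth0 is no longer visible on the host: a network interface can belong to only one network namespace at a time.

From inside the netA network namespace, eth1 in the root namespace cannot be pinged (network isolation).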

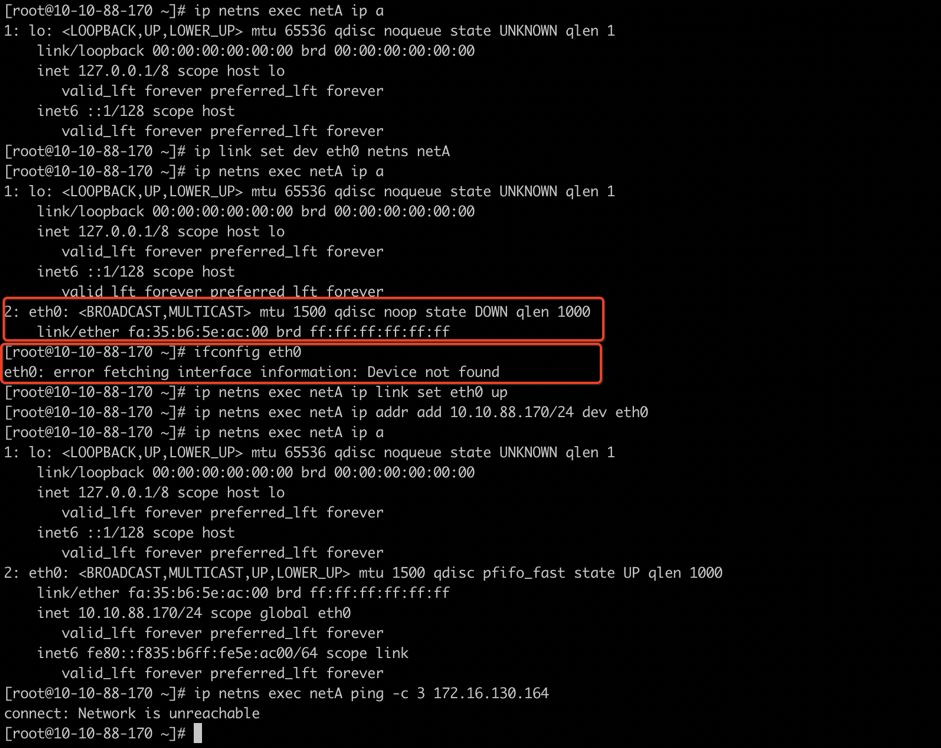

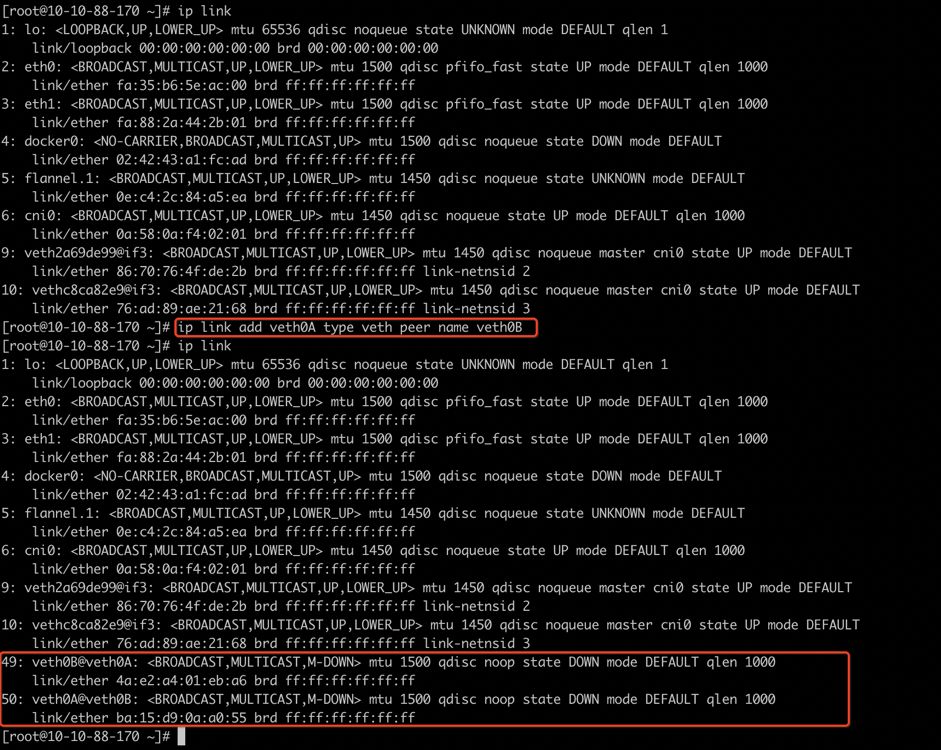

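A veth (virtual Ethernet) device can connect two different network namespaces.

veth devices are always created in pairs, which is why they are usually called a veth pair (there is no such thing as a network cable with only one end).

A packet transmitted on one end of the pair is immediately received on the other end.

`ethtool -S <veth>` reports the index of the peer interface (`peer_ifindex`), which lets you find the other end of a pair. (Keep this in mind; we will use it later.)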
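A minimal Bash sketch of that lookup, assuming `ethtool` is installed: parse `peer_ifindex` from `ethtool -S`, then map the index back to an interface name through `/sys/class/net/*/ifindex`. The function names are hypothetical, and `SYS_NET` is an assumed override that exists only so the lookup can be exercised against a scratch directory.

```bash
#!/usr/bin/env bash
# Where interface directories live; SYS_NET is a hypothetical override for testing.
SYS_NET=${SYS_NET:-/sys/class/net}

# peer_ifindex_from_stats: read `ethtool -S` output on stdin, print peer_ifindex.
peer_ifindex_from_stats() {
  awk -F': *' '$1 ~ /peer_ifindex$/ { print $2; exit }'
}

# ifname_by_index IDX: print the name of the interface (in the current
# network namespace) whose ifindex is IDX; fail if there is none.
ifname_by_index() {
  local f
  for f in "$SYS_NET"/*/ifindex; do
    [[ -r $f ]] || continue
    if [[ $(<"$f") == "$1" ]]; then
      f=${f%/ifindex}
      echo "${f##*/}"
      return 0
    fi
  done
  return 1
}

# veth_peer DEV: name of DEV's peer, provided the peer is in this namespace.
veth_peer() {
  local idx
  idx=$(ethtool -S "$1" | peer_ifindex_from_stats)
  [[ -n $idx ]] || return 1
  ifname_by_index "$idx"
}
```

While both ends are still in the root namespace, `veth_peer veth0A` should print `veth0B`. Once the ends sit in different namespaces, the index has to be resolved in the peer's namespace instead (for example by listing interfaces with `ip netns exec netB ip link`).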

```shell
ip link add <veth name> type veth peer name <veth peer name>
```

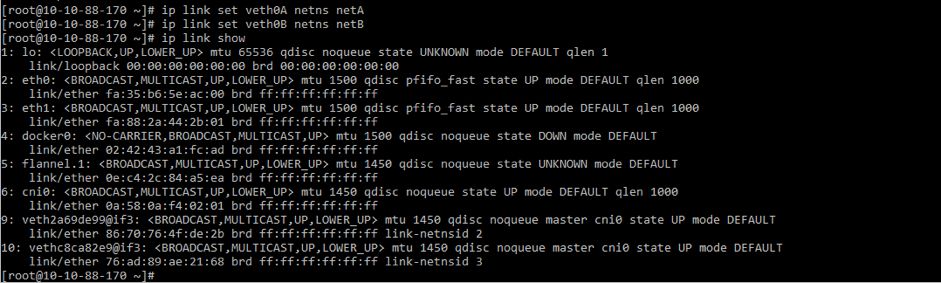

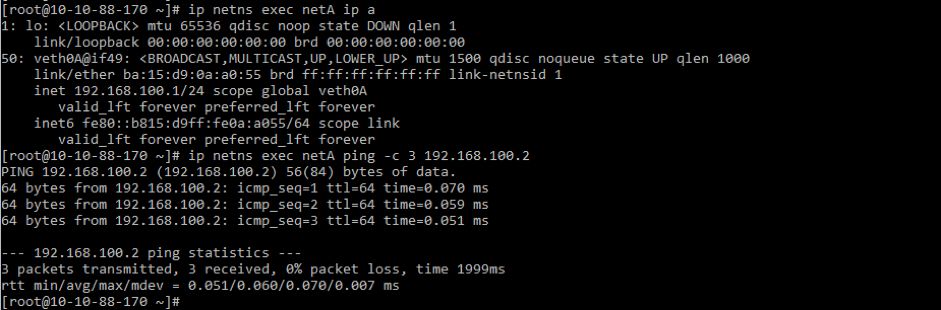

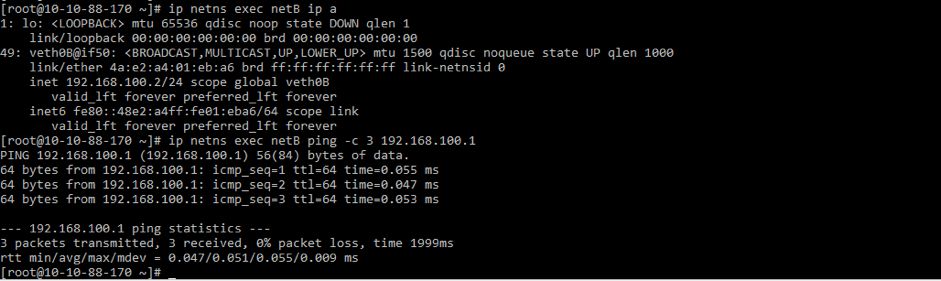

```shell
ip netns add netA
ip netns add netB
ip link set veth0A netns netA
ip link set veth0B netns netB
```

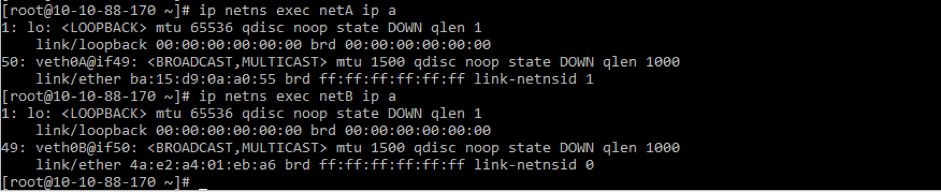

```shell
ip netns exec netA ip link set veth0A up
ip netns exec netA ip addr add 192.168.100.1/24 dev veth0A
```

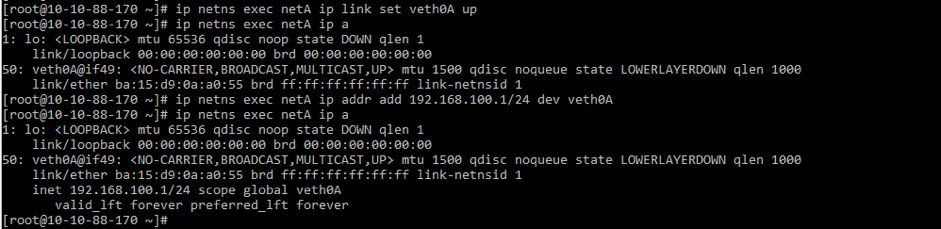

```shell
ip netns exec netB ip link set veth0B up
ip netns exec netB ip addr add 192.168.100.2/24 dev veth0B
```

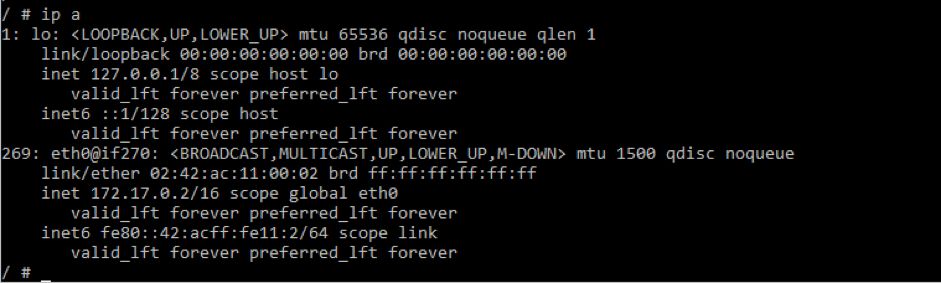

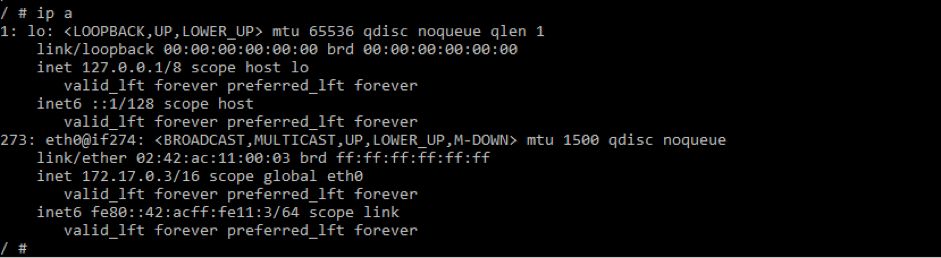
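Putting the steps above together, here is a minimal end-to-end Bash sketch, assuming root privileges and iproute2, with the namespace names, device names and addresses used in the text. The `run` wrapper and the `setup_veth_demo`/`teardown_veth_demo` names are hypothetical conveniences: with `DRY_RUN=1` the script only prints the commands, which is handy for reviewing them before touching the host.

```bash
#!/usr/bin/env bash
# run: execute a command, or just print it when DRY_RUN=1 (hypothetical helper).
run() {
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    echo "$*"
  else
    "$@"
  fi
}

# Build two namespaces connected by a veth pair, then ping across it.
setup_veth_demo() {
  run ip netns add netA
  run ip netns add netB
  # Create both ends at once: a veth device always comes as a pair
  run ip link add veth0A type veth peer name veth0B
  run ip link set veth0A netns netA
  run ip link set veth0B netns netB
  run ip netns exec netA ip addr add 192.168.100.1/24 dev veth0A
  run ip netns exec netB ip addr add 192.168.100.2/24 dev veth0B
  run ip netns exec netA ip link set veth0A up
  run ip netns exec netB ip link set veth0B up
  run ip netns exec netA ping -c 1 192.168.100.2
}

# Once a namespace is freed, the veth end inside it is destroyed,
# which also removes its peer.
teardown_veth_demo() {
  run ip netns delete netA
  run ip netns delete netB
}
```

`DRY_RUN=1 setup_veth_demo` prints the ten commands; as root, `setup_veth_demo` followed by `teardown_veth_demo` builds and removes the topology.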

```shell
docker run -it --name testA busybox sh
docker run -it --name testB busybox sh
tcpdump -n -i docker0
```
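Docker does not create an entry under `/var/run/netns` for its containers, which is why `ip netns` cannot see them. A common workaround is to look up the container's main process ID and symlink its `/proc/<pid>/ns/net` into that directory. The Bash sketch below assumes root privileges and a running Docker daemon; `link_netns` and `expose_container_netns` are hypothetical helper names, and `NETNS_DIR` is an assumed override that exists only so the helper can be tried against a scratch directory.

```bash
#!/usr/bin/env bash
# Directory scanned by `ip netns`; NETNS_DIR is a hypothetical override for testing.
NETNS_DIR=${NETNS_DIR:-/var/run/netns}

# link_netns PID NAME: make the network namespace of PID visible to `ip netns` as NAME.
link_netns() {
  local pid=$1 name=$2
  mkdir -p "$NETNS_DIR" || return 1
  # Unlike the bind mount made by `ip netns add`, this symlink
  # dangles as soon as PID exits.
  ln -sfn "/proc/$pid/ns/net" "$NETNS_DIR/$name"
}

# expose_container_netns CONTAINER: the same, for a running Docker container.
expose_container_netns() {
  local container=$1 pid
  pid=$(docker inspect -f '{{.State.Pid}}' "$container") || return 1
  link_netns "$pid" "$container"
}
```

After `expose_container_netns testA`, `ip netns list` should show `testA`, and `ip netns exec testA ip addr` shows the container's interfaces.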

```shell
[root@10-10-88-192 network]# kubectl get node --show-labels
NAME STATUS ROLES AGE VERSION LABELS
10-10-88-170 Ready <none> 47d v1.10.5-28+187e1312d40a02 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=10-10-88-170
10-10-88-192 Ready master 47d v1.10.5-28+187e1312d40a02 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=10-10-88-192,node-role.kubernetes.io/master=
10-10-88-195 Ready <none> 47d v1.10.5-28+187e1312d40a02 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=10-10-88-195
[root@10-10-88-192 network]#
[root@10-10-88-192 network]# kubectl label --overwrite node 10-10-88-170 host=node1
node "10-10-88-170" labeled
[root@10-10-88-192 network]#
[root@10-10-88-192 network]# kubectl label --overwrite node 10-10-88-195 host=node2
node "10-10-88-195" labeled
[root@10-10-88-192 network]#
[root@10-10-88-192 network]# kubectl get node --show-labels
NAME STATUS ROLES AGE VERSION LABELS
10-10-88-170 Ready <none> 47d v1.10.5-28+187e1312d40a02 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,host=node1,kubernetes.io/hostname=10-10-88-170
10-10-88-192 Ready master 47d v1.10.5-28+187e1312d40a02 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/hostname=10-10-88-192,node-role.kubernetes.io/master=
10-10-88-195 Ready <none> 47d v1.10.5-28+187e1312d40a02 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,host=node2,kubernetes.io/hostname=10-10-88-195
[root@10-10-88-192 network]#
```

[root@10-10-88-192 network]# ls

busybox1.yaml busybox2.yaml

[root@10-10-88-192 network]# cat busybox1.yaml

apiVersion: v1

kind: Pod

metadata:

name: busybox1

labels:

app: busybox1

spec:

containers:

- name: busybox1

image: busybox

command: ['sh', '-c', 'sleep 100000']

nodeSelector:

host: node1

[root@10-10-88-192 network]#

[root@10-10-88-192 network]# cat busybox2.yaml

apiVersion: v1kind: Pod

metadata:

name: busybox2

labels:

app: busybox2

spec:

containers:

- name: busybox2

image: busybox

command: ['sh', '-c', 'sleep 200000']

nodeSelector:

host: node1

[root@10-10-88-192 network]#

[root@10-10-88-192 network]#

[root@10-10-88-192 network]# kubectl create -f busybox1.yaml

pod "busybox1" created

[root@10-10-88-192 network]# kubectl create -f busybox2.yaml

pod "busybox2" created

[root@10-10-88-192 network]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

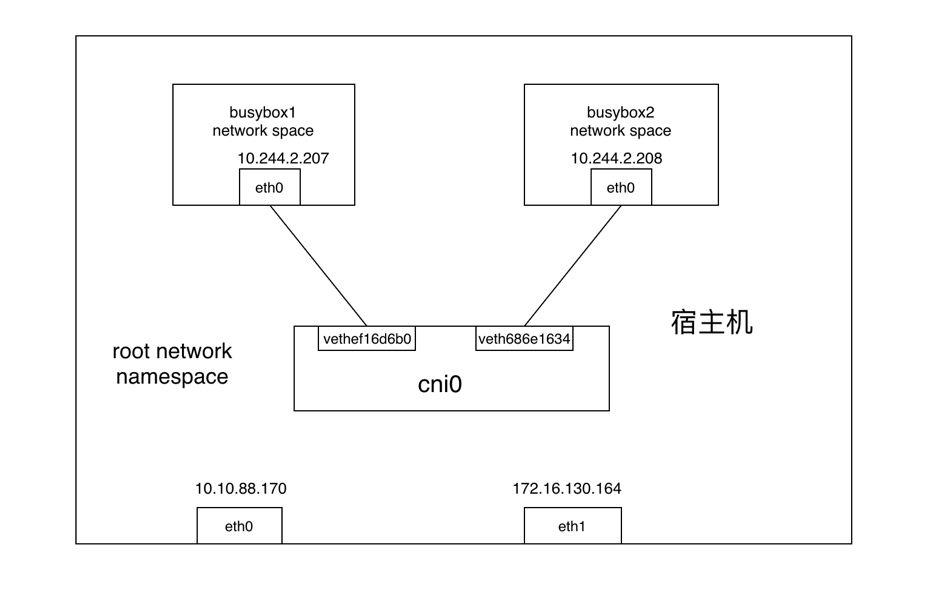

busybox1 1/1 Running 0 33s 10.244.2.207 10-10-88-170

busybox2 1/1 Running 0 20s 10.244.2.208 10-10-88-170

[root@10-10-88-192 network]#
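Both pods landed on 10-10-88-170 and got addresses from the same /24, the pod subnet that `cni0` owns on that node (10.244.2.1/24 in the node's `ip a` output). Sharing a subnet is what lets them reach each other directly at layer 2 through the bridge, without a router hop. A small Bash sketch of that check (the helpers `ip_to_int` and `same_subnet` are hypothetical; IPv4 only, with no input validation):

```bash
#!/usr/bin/env bash
# ip_to_int A.B.C.D: convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# same_subnet IP1 IP2 PREFIXLEN: succeed if both addresses share the network part.
same_subnet() {
  local mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "$2") & mask) ))
}
```

For example, `same_subnet 10.244.2.207 10.244.2.208 24 && echo "same L2 segment"` prints the message, because both addresses reduce to the network 10.244.2.0.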

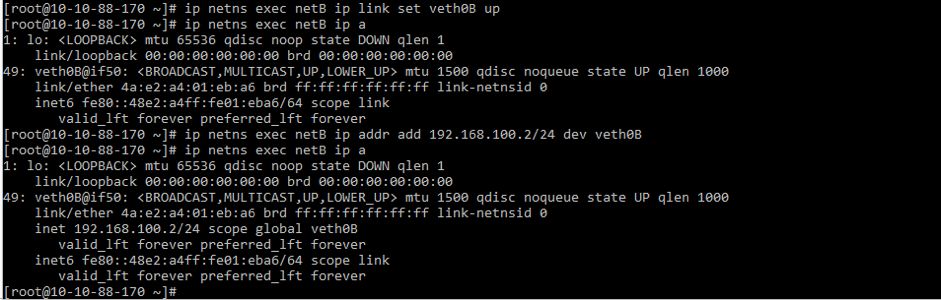

```shell
[root@10-10-88-170 ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether fa:35:b6:5e:ac:00 brd ff:ff:ff:ff:ff:ff
    inet 10.10.88.170/24 brd 10.10.88.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::f835:b6ff:fe5e:ac00/64 scope link
       valid_lft forever preferred_lft forever
3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether fa:88:2a:44:2b:01 brd ff:ff:ff:ff:ff:ff
    inet 172.16.130.164/24 brd 172.16.130.255 scope global eth1
       valid_lft forever preferred_lft forever
    inet6 fe80::f888:2aff:fe44:2b01/64 scope link
       valid_lft forever preferred_lft forever
4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN
    link/ether 02:42:43:a1:fc:ad brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 scope global docker0
       valid_lft forever preferred_lft forever
5: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN
    link/ether 0e:c4:2c:84:a5:ea brd ff:ff:ff:ff:ff:ff
    inet 10.244.2.0/32 scope global flannel.1
       valid_lft forever preferred_lft forever
    inet6 fe80::cc4:2cff:fe84:a5ea/64 scope link
       valid_lft forever preferred_lft forever
6: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP qlen 1000
    link/ether 0a:58:0a:f4:02:01 brd ff:ff:ff:ff:ff:ff
    inet 10.244.2.1/24 scope global cni0
       valid_lft forever preferred_lft forever
    inet6 fe80::f0a0:7dff:feec:3ffd/64 scope link
       valid_lft forever preferred_lft forever
9: veth2a69de99@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP
    link/ether 86:70:76:4f:de:2b brd ff:ff:ff:ff:ff:ff link-netnsid 2
    inet6 fe80::8470:76ff:fe4f:de2b/64 scope link
       valid_lft forever preferred_lft forever
10: vethc8ca82e9@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP
    link/ether 76:ad:89:ae:21:68 brd ff:ff:ff:ff:ff:ff link-netnsid 3
    inet6 fe80::74ad:89ff:feae:2168/64 scope link
       valid_lft forever preferred_lft forever
39: veth686e1634@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP
    link/ether 66:99:fe:30:d2:e1 brd ff:ff:ff:ff:ff:ff link-netnsid 4
    inet6 fe80::6499:feff:fe30:d2e1/64 scope link
       valid_lft forever preferred_lft forever
40: vethef16d6b0@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP
    link/ether c2:7f:73:93:85:fc brd ff:ff:ff:ff:ff:ff link-netnsid 5
    inet6 fe80::c07f:73ff:fe93:85fc/64 scope link
       valid_lft forever preferred_lft forever
[root@10-10-88-170 ~]#
```
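In this output a name such as `vethef16d6b0@if3` carries the peer information: `@if3` is the ifindex of the other end, and `link-netnsid 5` is the ID this namespace uses for the namespace that end lives in. Interface indexes are only unique within one network namespace, which is why all four veths can have a peer numbered 3. The awk-based sketch below (the function `list_veth_peers` is hypothetical) extracts these fields from `ip -o link`, which prints one interface per line.

```bash
#!/usr/bin/env bash
# list_veth_peers (hypothetical helper): read `ip -o link` output on stdin and
# print "ifindex name peer_ifindex netnsid" for interfaces shown as name@ifN.
list_veth_peers() {
  awk '
    $2 ~ /@if[0-9]+:$/ {
      idx = $1; sub(/:$/, "", idx)
      split($2, parts, "@if")
      name = parts[1]
      peer = parts[2]; sub(/:$/, "", peer)
      nsid = "-"
      for (i = 3; i < NF; i++) if ($i == "link-netnsid") nsid = $(i + 1)
      print idx, name, peer, nsid
    }'
}
```

On the node above, `ip -o link | list_veth_peers` should list the four cni0 ports, for example `40 vethef16d6b0 3 5`.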

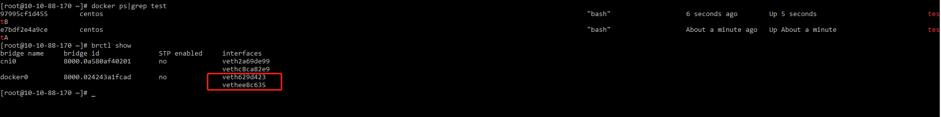

```shell
[root@10-10-88-170 ~]# brctl show
bridge name     bridge id               STP enabled     interfaces
cni0            8000.0a580af40201       no              veth2a69de99
                                                        veth686e1634
                                                        vethc8ca82e9
                                                        vethef16d6b0
docker0         8000.024243a1fcad       no
[root@10-10-88-170 ~]#
```
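`brctl show` puts additional ports of a bridge on continuation lines, which makes its output awkward to script. The awk-based sketch below (the function `bridge_ports` is hypothetical) turns it into one `bridge port` pair per line; on systems without bridge-utils, `ip link show master cni0` lists the same ports.

```bash
#!/usr/bin/env bash
# bridge_ports (hypothetical helper): read `brctl show` output on stdin and
# print one "bridge port" pair per attached interface.
bridge_ports() {
  awk '
    NR == 1 { next }                                     # skip the header line
    NF >= 3 { br = $1; if (NF >= 4) print br, $4; next } # bridge line
    NF == 1 && br != "" { print br, $1 }                 # continuation line
  '
}
```

`brctl show | bridge_ports` on the node above should print four `cni0 veth...` lines and nothing for `docker0`, which has no ports.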

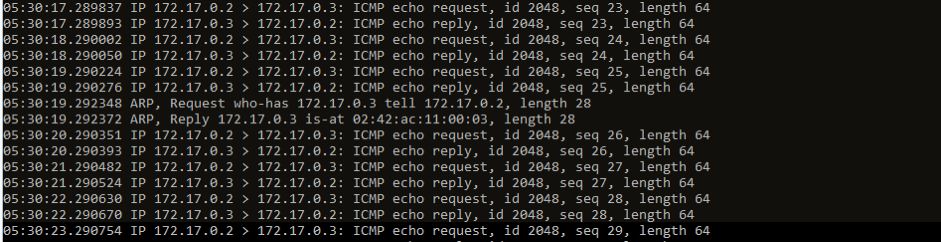

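1. busybox1 sends the packet out of its eth0, and it enters the root netns through vethef16d6b0 (a packet sent into one end of a veth pair arrives at the other end immediately).
2. The packet reaches the bridge cni0. To deliver it at layer 2, the MAC address of the destination (10.244.2.208) must be known: busybox1 first broadcasts an ARP request ("who has 10.244.2.208?"), and cni0 floods that broadcast to all of its ports.
3. busybox2 replies that it owns this IP. From the reply, cni0 learns that busybox2's MAC address is reachable through veth686e1634, so it forwards the packet to that port.
4. The packet arrives at veth686e1634 and crosses the veth pair into busybox2's netns, completing the trip from container busybox1 to container busybox2.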
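Under the hood, cni0 behaves like an ordinary learning switch: it records which port each source MAC address was seen on, forwards frames for known destinations out of that port only, and floods broadcasts (such as ARP requests) and unknown destinations to every other port. The toy Bash model below illustrates just that rule; it is a hypothetical simplification (no ageing, VLANs or STP, and the MAC addresses in the usage are made up), not how the kernel implements its forwarding database.

```bash
#!/usr/bin/env bash
# Toy model of a learning bridge such as cni0 (hypothetical, simplified).
declare -A fdb=()                      # forwarding database: MAC -> port
ports=(veth2a69de99 vethc8ca82e9 veth686e1634 vethef16d6b0)
BROADCAST=ff:ff:ff:ff:ff:ff

# bridge_rx IN_PORT SRC_MAC DST_MAC: print the port(s) the frame leaves on.
# Call it directly, not inside $(...), or the learned entry is lost with the subshell.
bridge_rx() {
  local in=$1 src=$2 dst=$3 p
  fdb[$src]=$in                        # learn: SRC is reachable via IN_PORT
  if [[ $dst != "$BROADCAST" && -n ${fdb[$dst]:-} ]]; then
    # Known unicast destination: one port (dropped if it is the ingress port)
    if [[ ${fdb[$dst]} != "$in" ]]; then
      echo "${fdb[$dst]}"
    fi
  else
    # Broadcast (e.g. an ARP request) or unknown destination: flood
    for p in "${ports[@]}"; do
      if [[ $p != "$in" ]]; then
        echo "$p"
      fi
    done
  fi
}
```

Replaying the walkthrough: the ARP request from busybox1 (`bridge_rx vethef16d6b0 02:00:00:00:00:01 ff:ff:ff:ff:ff:ff`) is flooded to the other three ports; busybox2's reply (`bridge_rx veth686e1634 02:00:00:00:00:02 02:00:00:00:00:01`) goes only to vethef16d6b0; after that, busybox1's packets to busybox2 leave only on veth686e1634. On a real host, `bridge fdb show br cni0` shows the entries the kernel has learned.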
