HDFS Shell Operations (Key Topic for Development)

Posted by 五角钱的程序员


Preface: This article was compiled by the editors of 小常识网 (cha138.com) and introduces HDFS shell operations (a key topic for development). We hope you find it useful.


1. Basic Syntax

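bin/hadoop fs <command> OR bin/hdfs dfs <command>

The two forms are interchangeable: both launchers run the same file system shell class, org.apache.hadoop.fs.FsShell.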

2. Full Command List

[hadoop@hadoop103 hadoop-2.7.2]$ bin/hadoop fs

Usage: hadoop fs [generic options]

[-appendToFile <localsrc> ... <dst>]

[-cat [-ignoreCrc] <src> ...]

[-checksum <src> ...]

[-chgrp [-R] GROUP PATH...]

[-chmod [-R] <MODE[,MODE]... | OCTALMODE> PATH...]

[-chown [-R] [OWNER][:[GROUP]] PATH...]

[-copyFromLocal [-f] [-p] [-l] <localsrc> ... <dst>]

[-copyToLocal [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-count [-q] [-h] <path> ...]

[-cp [-f] [-p | -p[topax]] <src> ... <dst>]

[-createSnapshot <snapshotDir> [<snapshotName>]]

[-deleteSnapshot <snapshotDir> <snapshotName>]

[-df [-h] [<path> ...]]

[-du [-s] [-h] <path> ...]

[-expunge]

[-find <path> ... <expression> ...]

[-get [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-getfacl [-R] <path>]

[-getfattr [-R] {-n name | -d} [-e en] <path>]

[-getmerge [-nl] <src> <localdst>]

[-help [cmd ...]]

[-ls [-d] [-h] [-R] [<path> ...]]

[-mkdir [-p] <path> ...]

[-moveFromLocal <localsrc> ... <dst>]

[-moveToLocal <src> <localdst>]

[-mv <src> ... <dst>]

[-put [-f] [-p] [-l] <localsrc> ... <dst>]

[-renameSnapshot <snapshotDir> <oldName> <newName>]

[-rm [-f] [-r|-R] [-skipTrash] <src> ...]

[-rmdir [--ignore-fail-on-non-empty] <dir> ...]

[-setfacl [-R] [{-b|-k} {-m|-x <acl_spec>} <path>]|[--set <acl_spec> <path>]]

[-setfattr {-n name [-v value] | -x name} <path>]

[-setrep [-R] [-w] <rep> <path> ...]

[-stat [format] <path> ...]

[-tail [-f] <file>]

[-test -[defsz] <path>]

[-text [-ignoreCrc] <src> ...]

[-touchz <path> ...]

[-truncate [-w] <length> <path> ...]

[-usage [cmd ...]]

Generic options supported are

-conf <configuration file> specify an application configuration file

-D <property=value> use value for given property

-fs <local|namenode:port> specify a namenode

-jt <local|resourcemanager:port> specify a ResourceManager

-files <comma separated list of files> specify comma separated files to be copied to the map reduce cluster

-libjars <comma separated list of jars> specify comma separated jar files to include in the classpath.

-archives <comma separated list of archives> specify comma separated archives to be unarchived on the compute machines.

The general command line syntax is

bin/hadoop command [genericOptions] [commandOptions]
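If you drive these commands from a script, it helps to keep the ordering from the syntax line above: launcher first, then generic options such as -D, then the command and its own options. Below is a minimal Python sketch under that assumption; `fs_argv` and `run_fs` are hypothetical helper names, `run_fs` simply shells out to whichever launcher is on PATH, and `dfs.replication=2` is only an illustrative property.

```python
import shutil
import subprocess

def fs_argv(command, *args, generic=None, use_hdfs=False):
    """Build argv for `hadoop fs` (or `hdfs dfs`) with generic options first.

    generic: optional dict of Hadoop properties, passed as `-D key=value`.
    """
    argv = ["hdfs", "dfs"] if use_hdfs else ["hadoop", "fs"]
    for key, value in (generic or {}).items():
        argv += ["-D", f"{key}={value}"]        # generic options precede the command
    argv.append("-" + command.lstrip("-"))      # e.g. "ls" -> "-ls"
    argv.extend(args)                           # command-specific options and paths
    return argv

def run_fs(command, *args, **kwargs):
    """Run the command and return its stdout; needs a Hadoop client on PATH."""
    argv = fs_argv(command, *args, **kwargs)
    if shutil.which(argv[0]) is None:
        raise FileNotFoundError(f"{argv[0]} is not on PATH")
    return subprocess.run(argv, capture_output=True, text=True, check=True).stdout

print(fs_argv("put", "./LICENSE.txt", "/chongqing/chengkou",
              generic={"dfs.replication": 2}))
```

Building the argument list separately from running it keeps the ordering testable without a cluster.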

3. Hands-On with Common Commands

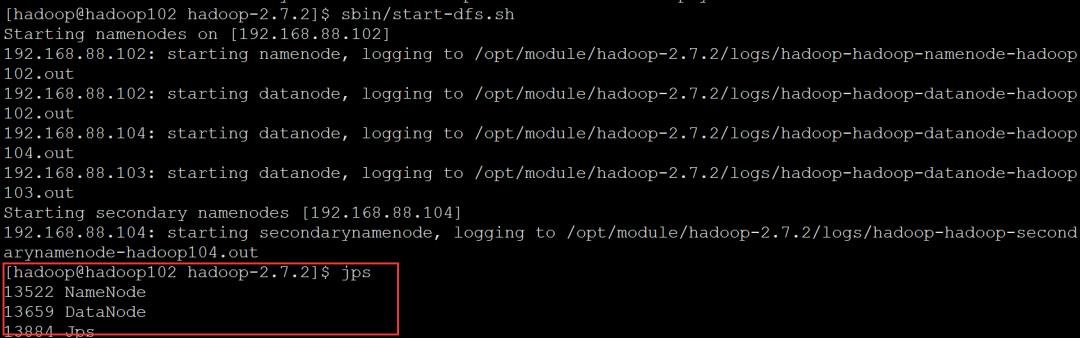

Start the cluster first.

On hadoop102 (start HDFS):

[hadoop@hadoop102 ~]$ cd /opt/module/hadoop-2.7.2/

[hadoop@hadoop102 hadoop-2.7.2]$ sbin/start-dfs.sh

On hadoop103 (start YARN):

[hadoop@hadoop103 ~]$ cd /opt/module/hadoop-2.7.2/

[hadoop@hadoop103 hadoop-2.7.2]$ sbin/start-yarn.sh

(1)-help: print the usage and options of a command

[hadoop@hadoop103 hadoop-2.7.2]$ hadoop fs -help rm

(2)-ls: display directory information

[hadoop@hadoop103 hadoop-2.7.2]$ hadoop fs -ls /

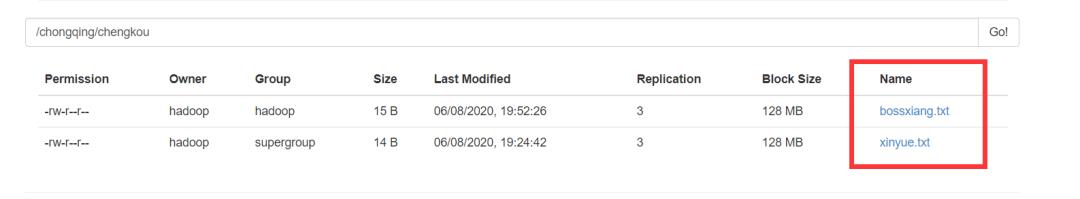

(3)-mkdir: create a directory on HDFS (-p also creates missing parent directories)

[hadoop@hadoop103 hadoop-2.7.2]$ hadoop fs -mkdir -p /chongqing/chengkou

(4)-moveFromLocal: cut a local file and paste it into HDFS

[hadoop@hadoop103 hadoop-2.7.2]$ touch bossxiang.txt

[hadoop@hadoop103 hadoop-2.7.2]$ vi bossxiang.txt

woshibossxiang

[hadoop@hadoop103 hadoop-2.7.2]$ hadoop fs -moveFromLocal ./bossxiang.txt /chongqing/chengkou

(5)-appendToFile: append a local file to the end of an existing HDFS file

[hadoop@hadoop103 hadoop-2.7.2]$ touch yuan.txt

[hadoop@hadoop103 hadoop-2.7.2]$ vi yuan.txt

wo shi chen yuan

[hadoop@hadoop103 hadoop-2.7.2]$ hadoop fs -appendToFile ./yuan.txt /chongqing/chengkou/bossxiang.txt

(6)-cat: display the contents of a file

[hadoop@hadoop103 hadoop-2.7.2]$ hadoop fs -cat /chongqing/chengkou/bossxiang.txt
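The mkdir, moveFromLocal, appendToFile and cat steps above differ mainly in what happens to the local source: moveFromLocal removes it, appendToFile leaves it in place and adds its bytes after the existing content. The Python sketch below is only a local-disk analogy, assuming an ordinary directory (`hdfs_root`) stands in for HDFS; all helper names are hypothetical.

```python
import shutil
import tempfile
from pathlib import Path

# Local-disk analogy only: `hdfs_root` is an ordinary directory standing in for HDFS.

def mkdir_p(hdfs_root: Path, hdfs_dir: str) -> Path:
    """Like `-mkdir -p`: create the directory and any missing parents."""
    target = hdfs_root / hdfs_dir.lstrip("/")
    target.mkdir(parents=True, exist_ok=True)
    return target

def move_from_local(local_file: Path, target_dir: Path) -> Path:
    """Like `-moveFromLocal`: the file ends up in the target and the local copy is gone."""
    return Path(shutil.move(str(local_file), str(target_dir / local_file.name)))

def append_to_file(local_file: Path, target_file: Path) -> None:
    """Like `-appendToFile`: local bytes are added after the existing content."""
    with target_file.open("ab") as out:
        out.write(local_file.read_bytes())

def cat_text(target_file: Path) -> str:
    """Like `-cat`: return the file content."""
    return target_file.read_text()

work = Path(tempfile.mkdtemp())
chengkou = mkdir_p(work / "hdfs", "/chongqing/chengkou")

boss = work / "bossxiang.txt"
boss.write_text("woshibossxiang\n")
remote = move_from_local(boss, chengkou)

yuan = work / "yuan.txt"
yuan.write_text("wo shi chen yuan\n")
append_to_file(yuan, remote)

print(cat_text(remote), end="")
```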

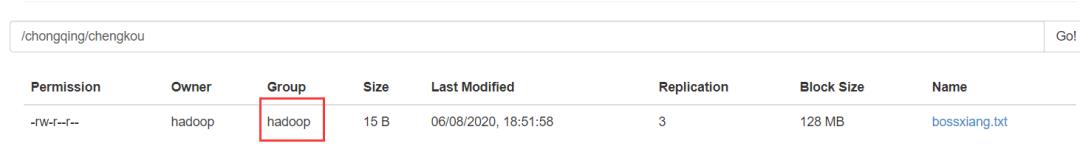

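(7)-chgrp, -chmod, -chown: used the same way as in the Linux file system, to change a file's group, permissions, and owner

[hadoop@hadoop103 hadoop-2.7.2]$ hadoop fs -chgrp hadoop /chongqing/chengkou/bossxiang.txt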
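The -chgrp command above only changes the group; its siblings -chmod and -chown follow Linux usage, and -chmod accepts either symbolic modes or an OCTALMODE (see the usage list). As a reminder of how an octal mode maps to the rwx permission string that -ls prints, here is a small Python sketch; `octal_to_rwx` is a hypothetical helper, and the path in the comment is only illustrative.

```python
def octal_to_rwx(mode: int) -> str:
    """Turn a 3-digit octal permission such as 0o755 into 'rwxr-xr-x'."""
    chars = []
    for shift in (6, 3, 0):                 # owner, group, others
        bits = (mode >> shift) & 0o7
        chars.append("r" if bits & 4 else "-")
        chars.append("w" if bits & 2 else "-")
        chars.append("x" if bits & 1 else "-")
    return "".join(chars)

# e.g. after `hadoop fs -chmod 644 /chongqing/chengkou/bossxiang.txt`,
# the permission part of the -ls line would read -rw-r--r-- ('-' marks a file)
print(octal_to_rwx(0o644))  # rw-r--r--
```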

(8)-copyFromLocal:从本地文件系统中拷贝文件到HDFS路径去

[hadoop@hadoop102 hadoop -2.7.2]$ touch xinyue.txt

[hadoop@hadoop102 hadoop -2.7.2]$ vi xinyue.txt

wo shi xinyue

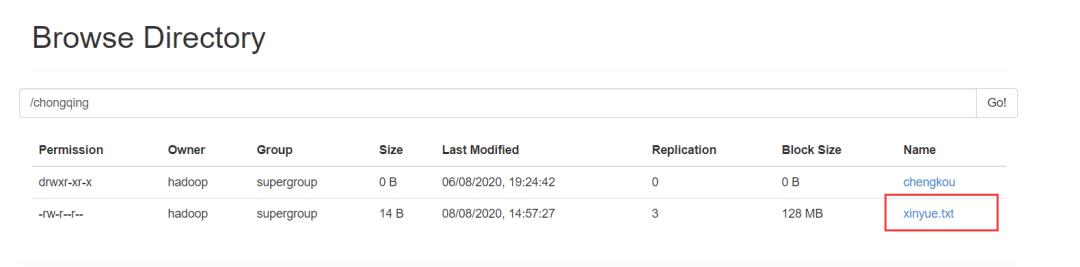

[hadoop@hadoop102 hadoop -2.7.2]$ hadoop fs -copyFromLocal ./xinyue.txt /chongqing/chengkou/

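(9)-copyToLocal: copy a file from HDFS to the local file system

[hadoop@hadoop102 hadoop-2.7.2]$ hadoop fs -copyToLocal /chongqing/chengkou/bossxiang.txt ./

(10)-cp: copy from one HDFS path to another HDFS path

[hadoop@hadoop102 hadoop-2.7.2]$ hadoop fs -cp /chongqing/chengkou/xinyue.txt /chongqing/

(11)-mv: move a file within HDFS

[hadoop@hadoop102 hadoop-2.7.2]$ hadoop fs -mv /chongqing/xinyue.txt /

(12)-get: equivalent to copyToLocal, i.e. download a file from HDFS to the local file system

[hadoop@hadoop102 hadoop-2.7.2]$ rm xinyue.txt

[hadoop@hadoop102 hadoop-2.7.2]$ hadoop fs -get /xinyue.txt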
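The local xinyue.txt was deleted before running -get. That is most likely because -get and -copyToLocal in this version refuse to overwrite an existing local file: the usage list above offers -f for -put and -copyFromLocal, but not for -get or -copyToLocal. The Python sketch below imitates that rule on the local disk; `download` is a hypothetical helper, the `hdfs` directory stands in for HDFS, and raising FileExistsError stands in for the shell's error rather than reproducing its exact message.

```python
import shutil
import tempfile
from pathlib import Path

def download(src: Path, dst: Path, overwrite: bool = False) -> Path:
    """Copy src to dst; refuse to replace an existing file unless overwrite=True."""
    if dst.is_dir():
        dst = dst / src.name                # a directory target keeps the source file name
    if dst.exists() and not overwrite:
        raise FileExistsError(f"{dst}: File exists")
    shutil.copyfile(src, dst)
    return dst

work = Path(tempfile.mkdtemp())
(work / "hdfs").mkdir()
local_dir = work / "local"
local_dir.mkdir()
remote = work / "hdfs" / "xinyue.txt"       # stands in for /xinyue.txt on HDFS
remote.write_text("wo shi xinyue\n")
(local_dir / "xinyue.txt").write_text("old local copy\n")

try:
    download(remote, local_dir)             # like -get while xinyue.txt still exists
except FileExistsError as err:
    print("refused:", err)

(local_dir / "xinyue.txt").unlink()         # like `rm xinyue.txt`
print(download(remote, local_dir).read_text(), end="")
```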

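(13)-getmerge: download several files and merge them into one, e.g. when the HDFS directory /user/atguigu/test contains multiple files: log.1, log.2, log.3, …

[hadoop@hadoop102 hadoop-2.7.2]$ hadoop fs -getmerge /chongqing/chengkou/* ./zaiyiqi.txt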

[hadoop@hadoop102 hadoop-2.7.2]$ cat zaiyiqi.txt

woshibossxiang

wo shi xinyue
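-getmerge concatenates the source files into a single local file; in the result above, bossxiang.txt's content comes before xinyue.txt's, consistent with the files being taken in name order. The Python sketch below reproduces that on the local disk, assuming name-sorted order; `getmerge` here is a hypothetical helper, and `add_newline` models the -nl flag, which adds a newline after each file.

```python
import tempfile
from pathlib import Path

def getmerge(src_dir: Path, dst: Path, add_newline: bool = False) -> None:
    """Concatenate every regular file in src_dir (sorted by name) into dst."""
    with dst.open("wb") as out:
        for part in sorted(p for p in src_dir.iterdir() if p.is_file()):
            out.write(part.read_bytes())
            if add_newline:                 # what the -nl flag adds
                out.write(b"\n")

work = Path(tempfile.mkdtemp())
chengkou = work / "chengkou"
chengkou.mkdir()
(chengkou / "xinyue.txt").write_text("wo shi xinyue\n")
(chengkou / "bossxiang.txt").write_text("woshibossxiang\n")

merged = work / "zaiyiqi.txt"
getmerge(chengkou, merged)
print(merged.read_text(), end="")
```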

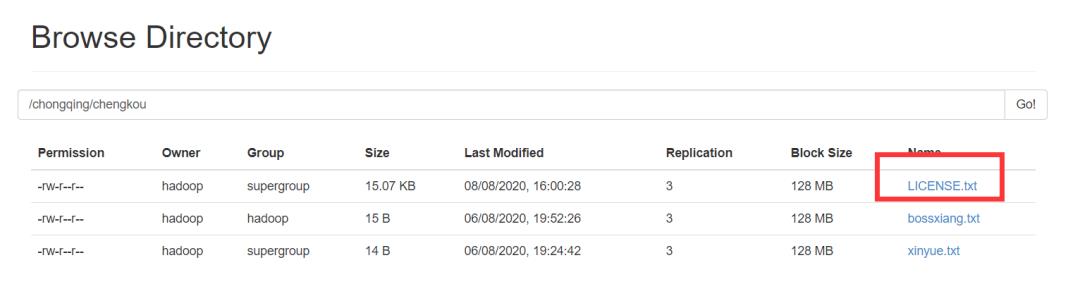

(14)-put: equivalent to copyFromLocal

[hadoop@hadoop102 hadoop-2.7.2]$ hadoop fs -put ./LICENSE.txt /chongqing/chengkou

(15)-tail: display the end of a file (its last kilobyte)

[hadoop@hadoop102 hadoop-2.7.2]$ hadoop fs -tail /chongqing/chengkou/LICENSE.txt

mentation and/or other materials provided with the

distribution.

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS

"AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT

LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR

A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT

OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL,

SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT

LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE,

DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY

THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT

(INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

You can contact the author at :

- LZ4 source repository : http://code.google.com/p/lz4/

- LZ4 public forum : https://groups.google.com/forum/#!forum/lz4c

*/
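-tail prints only the last kilobyte of a file, which is why the LICENSE.txt output above starts in the middle of a word ("mentation"). The Python sketch below applies the same rule to a local file; `tail_kb` is a hypothetical helper, and it does not model -f, which keeps following the file as it grows.

```python
import tempfile
from pathlib import Path

TAIL_BYTES = 1024  # -tail shows the last kilobyte of the file

def tail_kb(path: Path) -> bytes:
    """Return the last 1 KB of a file (the whole file if it is smaller)."""
    size = path.stat().st_size
    with path.open("rb") as f:
        f.seek(max(0, size - TAIL_BYTES))
        return f.read()

work = Path(tempfile.mkdtemp())
big = work / "big.txt"
big.write_bytes(b"x" * 3000 + b"END")
small = work / "small.txt"
small.write_bytes(b"short file\n")
print(len(tail_kb(big)), tail_kb(small))
```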

(16)-rm: delete a file or directory

[hadoop@hadoop102 hadoop-2.7.2]$ hadoop fs -rm /chongqing/chengkou/LICENSE.txt

20/08/08 04:21:42 INFO fs.TrashPolicyDefault: Namenode trash configuration: Deletion interval = 0 minutes, Emptier interval = 0 minutes.

Deleted /chongqing/chengkou/LICENSE.txt
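The INFO line reports "Deletion interval = 0 minutes", meaning the trash feature is off (fs.trash.interval is 0), so -rm deletes the file immediately. With a positive interval, -rm instead moves the path under the user's .Trash/Current directory, unless -skipTrash is given. The Python sketch below computes where the file would land, assuming the default home directory /user/<name>; `trash_destination` is a hypothetical helper, and 1440 minutes is only an example interval.

```python
from pathlib import PurePosixPath
from typing import Optional

def trash_destination(user: str, hdfs_path: str, trash_interval_minutes: int,
                      skip_trash: bool = False) -> Optional[str]:
    """Where `hadoop fs -rm` would move hdfs_path, or None if it deletes outright."""
    if skip_trash or trash_interval_minutes <= 0:
        return None                          # deleted immediately, as in the log above
    relative = PurePosixPath(hdfs_path).relative_to("/")
    trash_current = PurePosixPath("/user", user, ".Trash", "Current")  # assumed home dir
    return str(trash_current / relative)

print(trash_destination("hadoop", "/chongqing/chengkou/LICENSE.txt", 0))
print(trash_destination("hadoop", "/chongqing/chengkou/LICENSE.txt", 1440))
```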

(17)-rmdir: delete an empty directory

[hadoop@hadoop102 hadoop-2.7.2]$ hadoop fs -mkdir /test

[hadoop@hadoop102 hadoop-2.7.2]$ hadoop fs -rmdir /test

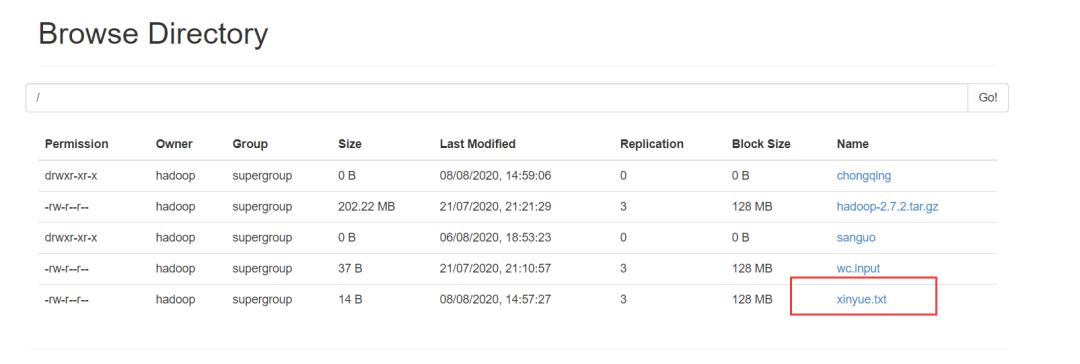

(18)-du: report the size of files and directories (-h: human-readable units, -s: a single summary line)

[hadoop@hadoop102 hadoop-2.7.2]$ hadoop fs -du /

29 /chongqing

212046774 /hadoop-2.7.2.tar.gz

13 /sanguo

37 /wc.input

14 /xinyue.txt

[hadoop@hadoop102 hadoop-2.7.2]$ hadoop fs -du -h /

29 /chongqing

202.2 M /hadoop-2.7.2.tar.gz

13 /sanguo

37 /wc.input

14 /xinyue.txt

[hadoop@hadoop102 hadoop-2.7.2]$ hadoop fs -du -h -s /

202.2 M /
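With -h, sizes are shown with binary (1024-based) prefixes and one decimal place, so 212046774 bytes becomes 202.2 M, while values under 1 KB stay as plain byte counts; -s adds everything under the path into one line. The Python sketch below approximates that formatting using the sizes from the listing above; `human_readable` is a hypothetical helper, and Java and Python may round some halfway cases differently.

```python
UNITS = ["K", "M", "G", "T", "P", "E"]

def human_readable(n: int) -> str:
    """Approximate the size strings printed by `hadoop fs -du -h`."""
    if n < 1024:
        return str(n)                        # small sizes stay as plain bytes
    exp = 1
    while exp < len(UNITS) and n >= 1024 ** (exp + 1):
        exp += 1
    base, unit = 1024 ** exp, UNITS[exp - 1]
    if n % base == 0:
        return f"{n // base} {unit}"         # exact multiples print without decimals
    text = f"{n / base:.1f}"
    if text.startswith("1024") and exp < len(UNITS):
        base, unit = base * 1024, UNITS[exp]  # e.g. 1023.96 K rounds up to "1.0 M"
        text = f"{n / base:.1f}"
    return f"{text} {unit}"

sizes = {"/chongqing": 29, "/hadoop-2.7.2.tar.gz": 212046774, "/sanguo": 13,
         "/wc.input": 37, "/xinyue.txt": 14}
for path, size in sizes.items():
    print(human_readable(size), path)
print(human_readable(sum(sizes.values())), "/")   # the -du -h -s / summary line
```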

To see how HDFS stores data on disk, go to the DataNode's block directory, delete some files from HDFS, and list the block files that remain:

[hadoop@hadoop102 hadoop-2.7.2]$ cd data/tmp/dfs/data/current/BP-1454198558-117.59.224.141-1595323236787/current/finalized/subdir0/subdir0/

[hadoop@hadoop102 subdir0]$ hadoop fs -rm -R /chongqing

[hadoop@hadoop102 subdir0]$ hadoop fs -rm /hadoop-2.7.2.tar.gz

[hadoop@hadoop102 subdir0]$ hadoop fs -rm -R /sanguo

[hadoop@hadoop102 subdir0]$ ll

total 207096

-rw-rw-r--. 1 hadoop hadoop 37 Jul 21 09:10 blk_1073741825

-rw-rw-r--. 1 hadoop hadoop 11 Jul 21 09:10 blk_1073741825_1001.meta

-rw-rw-r--. 1 hadoop hadoop 14 Aug 8 02:57 blk_1073741831

-rw-rw-r--. 1 hadoop hadoop 11 Aug 8 02:57 blk_1073741831_1011.meta

drwxr-xr-x. 9 hadoop hadoop 149 Jan 25 2016 hadoop-2.7.2

-rw-rw-r--. 1 hadoop hadoop 212046774 Jul 21 09:26 tmp.txt

[hadoop@hadoop102 subdir0]$ cat blk_1073741825

xiang

xiang

lin

lin yuan chen yuan

[hadoop@hadoop102 subdir0]$ cat blk_1073741831

wo shi xinyue

[hadoop@hadoop102 subdir0]$
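The listing shows that a DataNode keeps each block as an ordinary local file (blk_<id>) next to a small .meta checksum file. The two remaining blocks are 37 and 14 bytes, matching /wc.input and /xinyue.txt in the -du output, so a block smaller than the block size only takes its real size on disk. tmp.txt is exactly 212046774 bytes, the size of hadoop-2.7.2.tar.gz, which suggests it was rebuilt earlier by concatenating that archive's block files in order. The Python sketch below shows the splitting, assuming the Hadoop 2.x default block size of 128 MB (dfs.blocksize = 134217728) was not overridden; `block_sizes` and `split_blocks` are hypothetical helpers.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # assumed dfs.blocksize (Hadoop 2.x default)

def block_sizes(file_size: int, block_size: int = BLOCK_SIZE) -> list:
    """Sizes of the blocks HDFS would cut a file of file_size bytes into."""
    full, rest = divmod(file_size, block_size)
    return [block_size] * full + ([rest] if rest else [])

def split_blocks(data: bytes, block_size: int = BLOCK_SIZE) -> list:
    """Cut data into consecutive block-sized chunks (the last may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]

print(block_sizes(212046774))   # the tarball: one full block plus a partial one
print(block_sizes(37))          # /wc.input fits in a single, small block

# Concatenating the blocks in order rebuilds the original bytes (the tmp.txt idea).
data = b"hadoop-2.7.2.tar.gz stand-in"
assert b"".join(split_blocks(data, block_size=8)) == data
```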

(19)-setrep: set the replication factor of a file in HDFS

[hadoop@hadoop102 finalized]$ hadoop fs -setrep 2 /xinyue.txt
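-setrep changes the replication factor recorded in the NameNode's metadata. HDFS places at most one replica of a block on each DataNode, so the number of replicas that actually exist is capped by how many DataNodes are running; the missing ones are created if more DataNodes join later. The Python sketch below shows that arithmetic; `replica_status` is a hypothetical helper, and the DataNode counts are examples, not taken from this cluster.

```python
def replica_status(requested: int, datanodes: int) -> dict:
    """Replication target vs. replicas HDFS can actually place."""
    placed = min(requested, datanodes)      # at most one replica per DataNode
    return {"target": requested,            # what -setrep records for the file
            "placed": placed,
            "missing": requested - placed}  # filled in if DataNodes are added

print(replica_status(2, 3))    # e.g. -setrep 2 on a 3-DataNode cluster
print(replica_status(10, 3))   # only 3 replicas can exist until more nodes join
```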

Reference: 尚硅谷 (Atguigu) Hadoop tutorial
