大型hadoop集群中的Mysql高可用架构

Posted 学足迹

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了大型hadoop集群中的Mysql高可用架构相关的知识,希望对你有一定的参考价值。

在CDH和HDP的社区,官方文档都在讲述使用mysql主备来做元数据的高可用,但实际生产集群由于租户化,提交任务比较多,在集群mysql发生主备切换的时候经常由于mysql自身binlog异步同步的天生缺陷,导致元数据不一致、replication停止等烦人的故障,只能祭出大招——使用集群管理套件来做mysql元数据的高可用,解放双手,保护发际线……

架构:rhcs+drbd+mysql

环境:CentOS 7.6

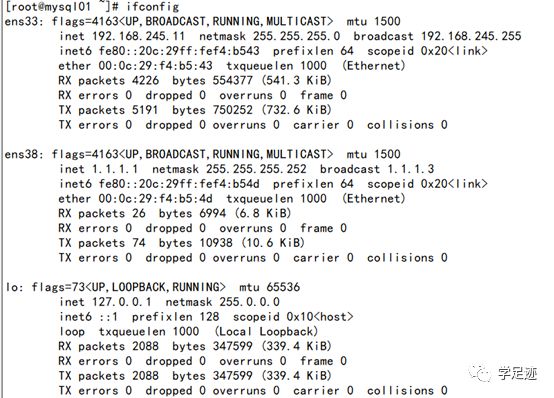

mysql01 192.168.245.11

mysql02 192.168.245.12

vip 192.168.245.100

rpm包:drbd84-utils kmod-drbd84

PS: 此架构不一一介绍了,百度谷歌一堆文章,但是大部分无法在真正的生产环境使用,原因是双机架构缺少仲裁,容易产生脑裂,为此,参考网络架构和Oracle-rac的原理,增加了心跳网络,生产环境中业务网络和心跳网络均需要做网卡聚合实现主机自身网络的高可用。

增加了心跳网卡

两台虚拟机除了系统盘,另外再挂载一块50G的数据盘,分区如下

PS:实验环境请挂1G数据盘,我机械硬盘同步了半小时……

[root@mysql01~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 50G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 49G 0 part

├─centos-root 253:0 045.1G 0 lvm /

└─centos-swap 253:1 0 3.9G 0lvm [SWAP]

sdb 8:16 0 50G 0 disk

└─sdb1 8:17 0 50G 0 part

sr0 11:0 1 4.3G 0 rom /media

分区

[root@mysql01 ~]# pvcreate /dev/sdb

WARNING: dos signature detected on /dev/sdbat offset 510. Wipe it? [y/n]: y

Wiping dos signature on /dev/sdb.

Physical volume "/dev/sdb" successfully created.

[root@mysql01 ~]# vgcreate data /dev/sdb

Volume group "data" successfully created

[root@mysql01 ~]# lvcreate --size 50000M--name mysql data

WARNING: LVM2_member signature detected on/dev/data/mysql at offset 536. Wipe it? [y/n]: y

Wiping LVM2_member signature on /dev/data/mysql.

Logical volume "mysql" created.

关闭selinux和firewalld

setenforce 0

sed -i.bak"s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

systemctl disable firewalld.service

systemctl stop firewalld.service

iptables --flush

配置hosts

tail -2 /etc/hosts

192.168.245.11 mysql01

192.168.245.12 mysql02

配置ntp同步(必须)

yum -y install ntp

/etc/ntp.conf

添加

server ntp.aliyun.com iburst minpoll 4maxpoll 10

systemctl start ntpd;systemctl enable ntpd

ssh互信

ssh-keygen -t dsa -f~/.ssh/id_dsa -N ""

ssh-copy-id mysql01

ssh-copy-id mysql02

yum安装drbd

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

rpm -Uvhhttp://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm

yum install -y kmod-drbd84 drbd84-utils

配置drbd

vim /etc/drbd.d/mysql.res

resource mysql {

#资源名称

protocol C;

#使用协议

meta-disk internal;

device /dev/drbd1;

#DRBD设备名称

syncer {

verify-alg sha1;

# 加密算法

}

#主备配置

net {

allow-two-primaries no;

after-sb-0pri discard-zero-changes;

after-sb-1pri discard-secondary;

after-sb-2pri disconnect;

rr-conflict disconnect;

}

#双主配置

net {

allow-two-primaries;

after-sb-0pri discard-zero-changes;

after-sb-1pri discard-secondary;

after-sb-2pri disconnect;

}

disk {

fencing resource-and-stonith;

}

handlers {

fence-peer"/usr/lib/drbd/crm-fence-peer.sh";

after-resync-target"/usr/lib/drbd/crm-unfence-peer.sh";

}

###双主配置结束

on mysql01 {

disk /dev/data/mysql;

#drbd1使用的磁盘分区为"mysql"

address 1.1.1.1:7789;

}

on mysql02 {

disk /dev/data/mysql;

address 1.1.1.2:7789;

}

}

分发配置文件

scp -rp /etc/drbd.d/* mysql02:/etc/drbd.d/

启动drbd

mysql01:

drbdadm create-md mysql

modprobe drbd;lsmod |grep drbd

drbdadm up mysql

drbdadm --force primary mysql

mysql02:

modprobe drbd;lsmod |grep drbd

drbdadm create-md mysql

drbdadm up mysql

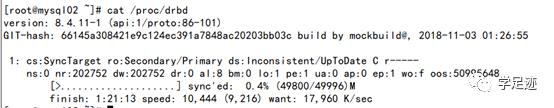

查看drbd同步状态

mysql01 挂载drbd逻辑磁盘并初始化mysql

[root@mysql01 ~]# mkfs.xfs /dev/drbd1

meta-data=/dev/drbd1 isize=512 agcount=4, agsize=3211164 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=12844655,imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=6271,version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

挂载设备

mkdir -pv /var/lib/mysql;chown -Rmysql:mysql /var/lib/mysql

mount /dev/drbd1 /var/lib/mysql

安装mysql

tar -xvfmysql-5.7.28-1.el7.x86_64.rpm-bundle.tar

yum localinstallmysql-community-{server,client,common,libs}-*

[root@mysql01 ~]# grep ^[0-Z]/etc/my.cnf

[mysqld]

datadir=/var/lib/mysql

socket=/var/lib/mysql/mysql.sock

symbolic-links=0

log-error=/var/log/mysqld.log

pid-file=/var/run/mysqld/mysqld.pid

max_connections=2000

character-set-server=utf8

default-storage-engine=INNODB

server-id=98

[mysql]

default-character-set=utf8

scp /etc/my.cnf mysql02:/etc/

systemctl disable mysqld

systemctl start mysqld

grep 'temporary password'/var/log/mysqld.log

mysql -uroot -p

ALTER USER 'root'@'localhost' IDENTIFIED BY'Root@123';

CREATE USER 'root'@'%' IDENTIFIED BY'Root@123';

GRANT ALL PRIVILEGES ON *.* TO 'root'@'%';

flush privileges;

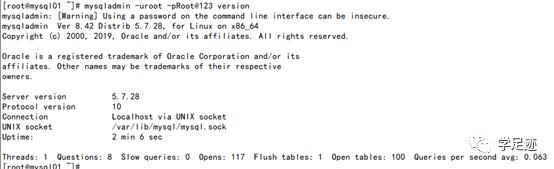

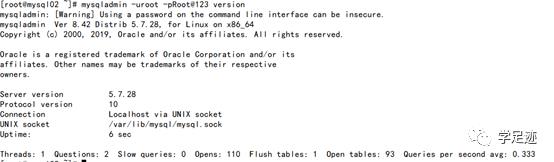

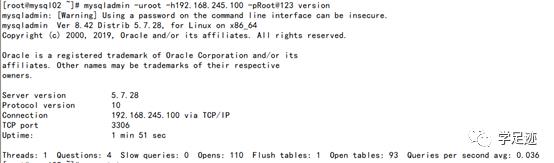

测试主节点mysql服务

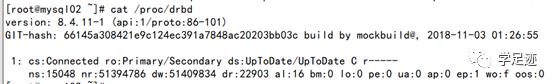

切换drbd primary到mysql02

测试mysqld服务启动是否正常

Mysql01:

systemctl stopmysqld

umount/dev/drbd1

drbdadmsecondary mysql

Mysql02:

drbdadm primarymysql

cat /proc/drbd

mount /dev/drbd1/var/lib/mysql

systemctl startmysqld

卸载分区,停止服务

systemctlstop mysqld

umount/dev/drbd1

开始配置集群管理

安装pcs

yum install -y pacemaker pcs psmiscpolicycoreutils-python

systemctl start pcsd.service

systemctl enable pcsd.service

echo redhat1 | passwd --stdin hacluster

ssh mysql02 'echo redhat1 | passwd --stdin hacluster'

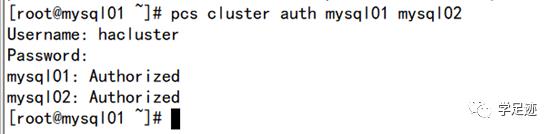

pcs cluster auth mysql01 mysql02

新建集群

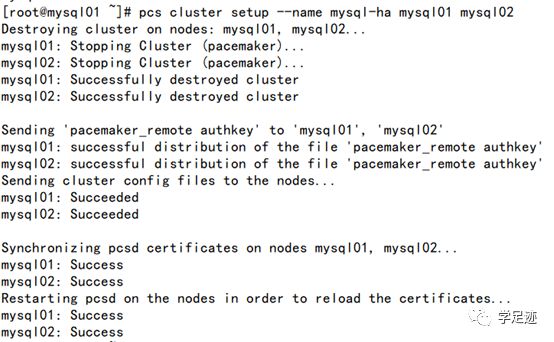

pcs cluster setup --name mysql-ha mysql01mysql02

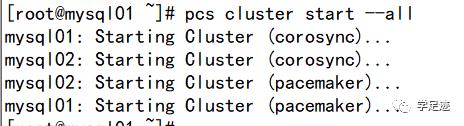

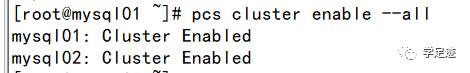

启动集群

pcs cluster start --all

pcs cluster enable --all

查看集群状态

配置mysql高可用

pcs cluster cib mysql_cfg

pcs -f mysql_cfg property setstonith-enabled=false

pcs -f mysql_cfg property setno-quorum-policy=stop

pcs -f mysql_cfg resource defaultsresource-stickiness=200

#主备配置

pcs -f mysql_cfg resource create mysql_drbdocf:linbit:drbd

drbd_resource=mysql

op monitor role=Master interval=29 timeout=20

op monitor role=Slave interval=31 timeout=20

op start timeout=120

op stop timeout=60

#双主配置--与主备选其一

pcs -f mysql_cfg resource create mysql_drbdocf:linbit:drbd

drbd_resource=mysql

op monitorinterval=29 timeout=20

pcs -f mysql_cfg resource mastermysql_primary mysql_drbd

master-max=1 master-node-max=1

clone-max=2 clone-node-max=1

notify=true

pcs -f mysql_cfg resource create mystore_fsocf:heartbeat:Filesystem

device="/dev/drbd1"

directory="/var/lib/mysql"

fstype="xfs"

pcs -f mysql_cfg constraint colocation addmystore_fs with mysql_primary INFINITY with-rsc-role=Master

pcs -f mysql_cfg constraint order promotemysql_primary then start mystore_fs

pcs -f mysql_cfg resource createmysql_database service:mysqld

op start timeout=60s

op stop timeout=60s

op monitor interval=20s timeout=30s

pcs -f mysql_cfg constraint colocation addmysql_database with mystore_fs INFINITY

pcs -f mysql_cfg constraint ordermystore_fs then mysql_database

pcs cluster cib-push mysql_cfg

vip配置

pcs cluster cib mysql_cfg

pcs -f mysql_cfg resource create mysql_VIPocf:heartbeat:IPaddr2 ip=192.168.245.100 cidr_netmask=24 op monitorinterval=20s

pcs -f mysql_cfg constraint colocation addmysql_VIP with mysql_database INFINITY

pcs -f mysql_cfg constraint ordermysql_database then mysql_VIP

pcs cluster cib-push mysql_cfg

检查配置:无输出为正常

crm_verify -L -V

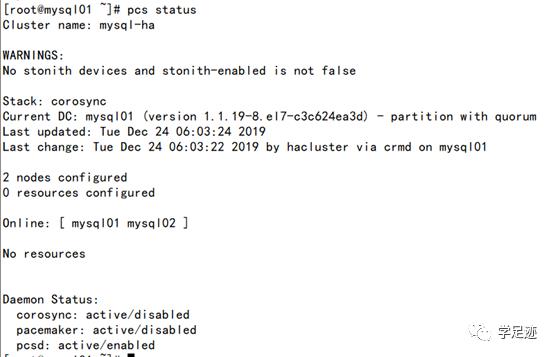

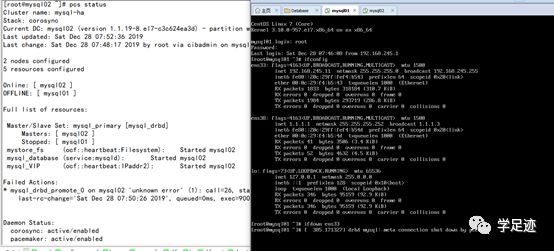

配置完成后,查看集群状态

pcs status

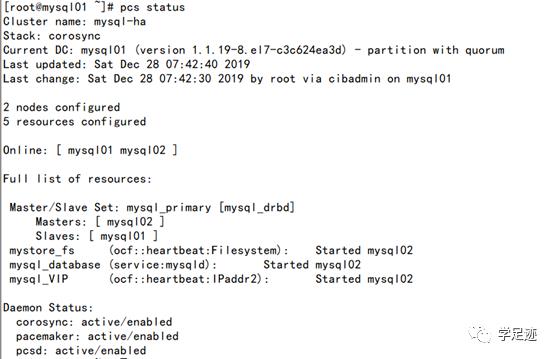

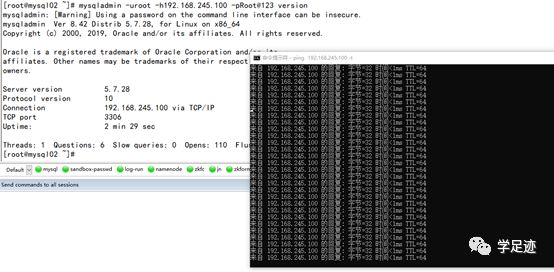

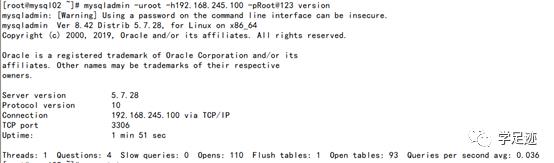

测试服务

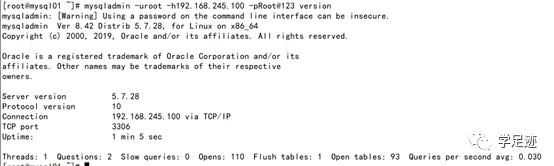

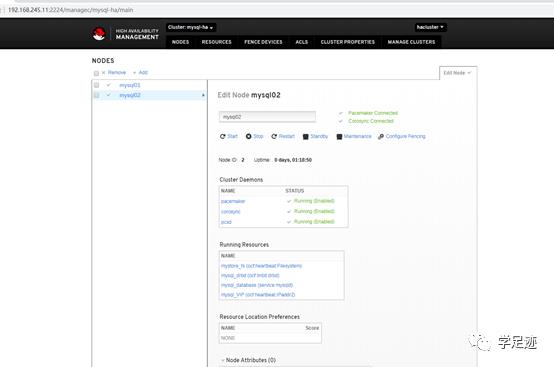

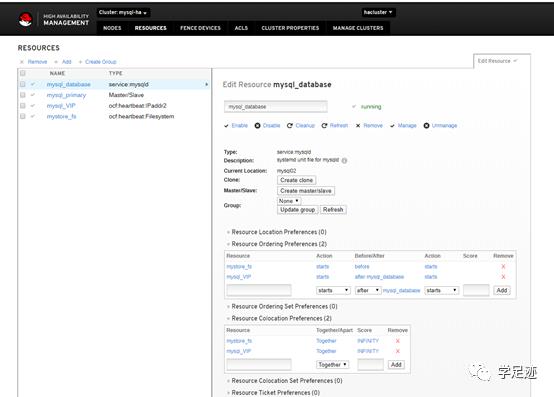

Pcs的web管理页面

故障转移测试:

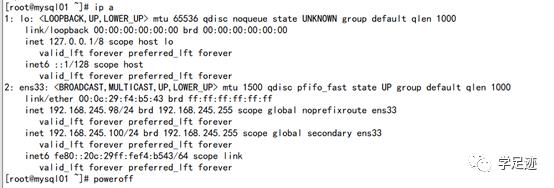

1、宕机

直接poweroff当前节点:mysql01

服务正常

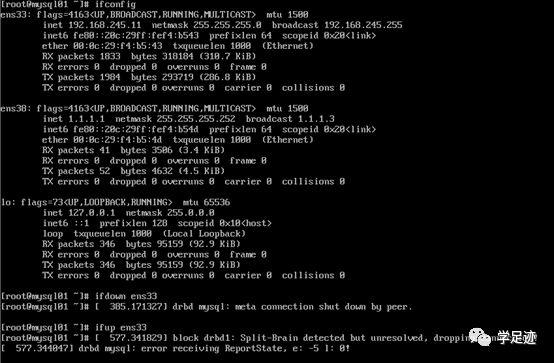

2、网络不通

虚拟机console 断开网络,来查看服务切换情况

服务正常

恢复网络

依然正常

至此,使用RHCS套件实现了mysql的故障转移集群架构,解放双手,为了防止无法恢复的脑裂情况产生形成数据错误,需要再加一个mysql异步同步的备节点并定期做0级和1级备份以防万一。

以上是关于大型hadoop集群中的Mysql高可用架构的主要内容,如果未能解决你的问题,请参考以下文章