Setting Up a Single-Node Hadoop and Spark Cluster Environment on Ubuntu

Posted by Linux公社


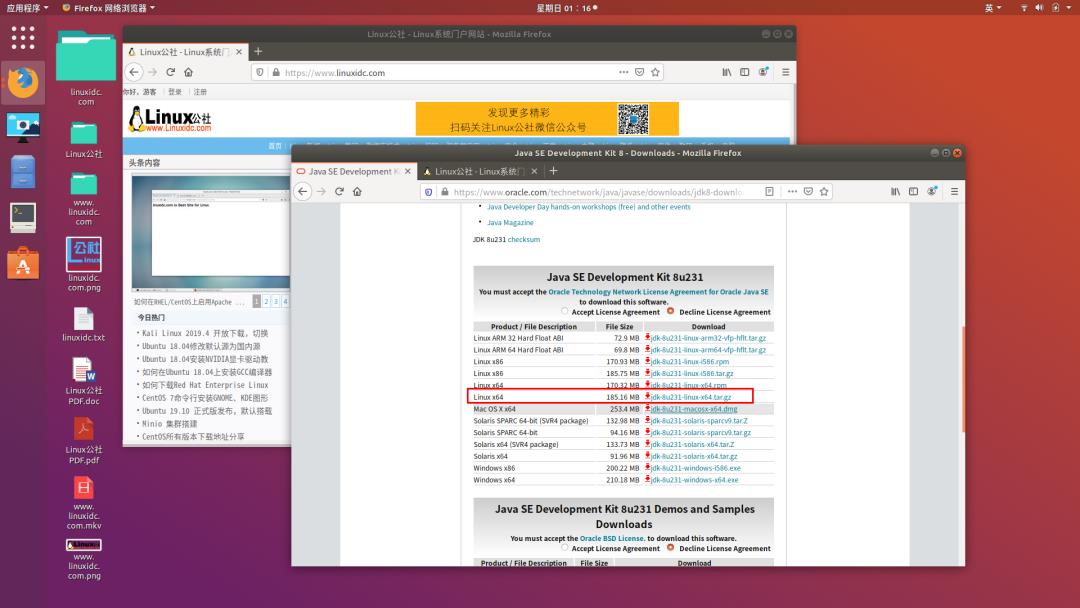

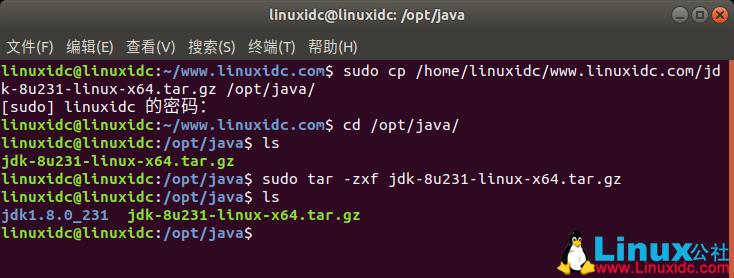

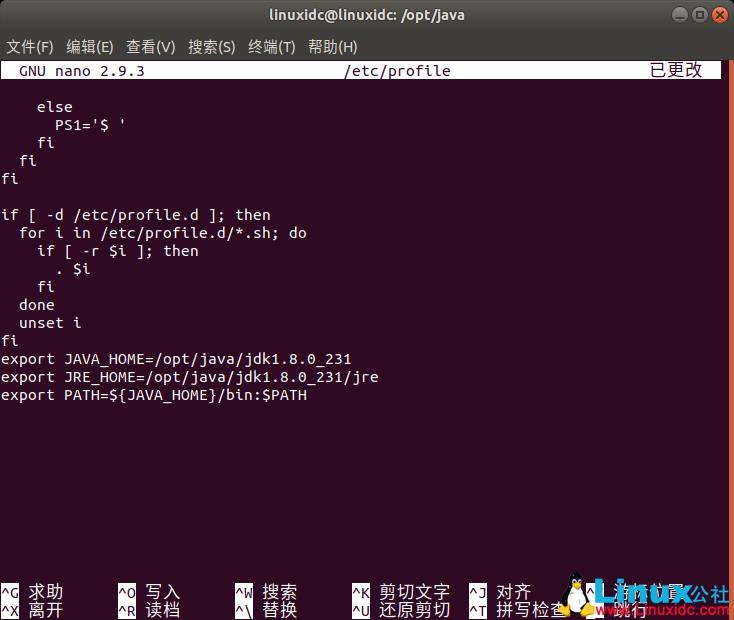

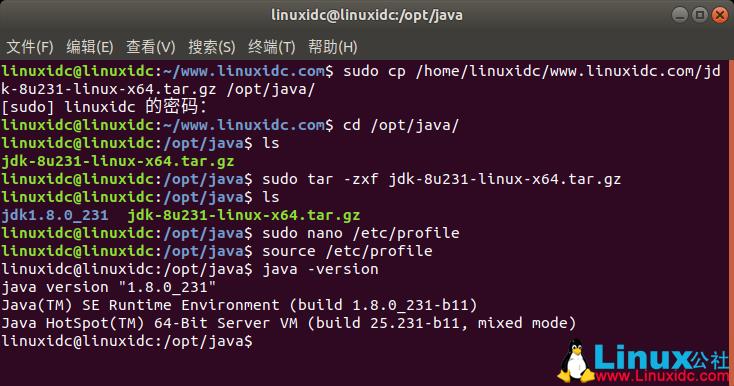

1. Installing Java JDK 8

[sudo] password for linuxidc:

linuxidc@linuxidc:~/www.linuxidc.com$ cd /opt/java/

linuxidc@linuxidc:/opt/java$ ls

jdk-8u231-linux-x64.tar.gz

linuxidc@linuxidc:/opt/java$ ls

jdk1.8.0_231 jdk-8u231-linux-x64.tar.gz
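The two `ls` listings above show `/opt/java` before and after the archive was unpacked, but the unpack command itself does not appear in the text. A minimal sketch of that step follows; the helper name `unpack_into` is hypothetical and not part of the original article.

```shell
# Hypothetical helper: unpack a .tar.gz / .tgz archive into a target directory.
# The directory is created first so the call also works on a fresh machine.
unpack_into() {
  local archive="$1" dest="$2"
  mkdir -p "$dest" && tar -xzf "$archive" -C "$dest"
}

# For the JDK archive listed above, /opt is normally root-owned, so on a real
# system the equivalent commands would be run through sudo:
#   sudo mkdir -p /opt/java
#   sudo tar -xzf /opt/java/jdk-8u231-linux-x64.tar.gz -C /opt/java
```

The same pattern would apply to the Hadoop, Scala, and Spark archives used later in this walkthrough.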

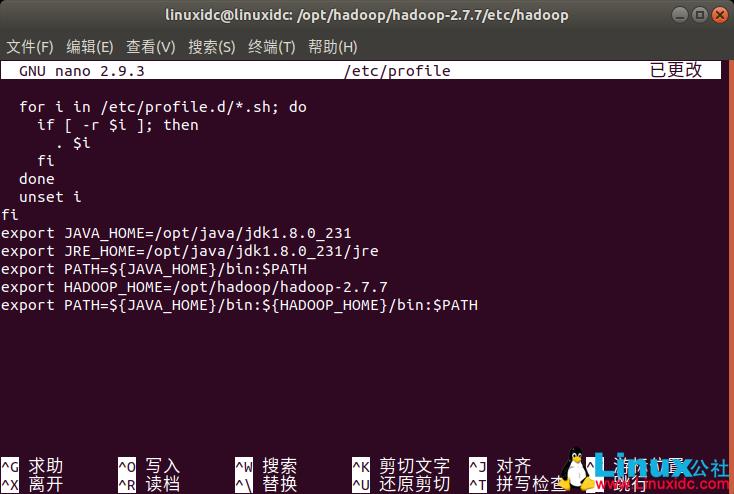

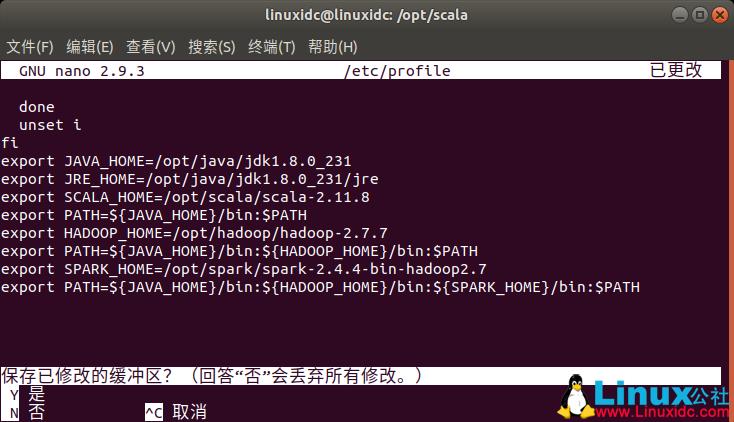

export JAVA_HOME=/opt/java/jdk1.8.0_231

export JRE_HOME=/opt/java/jdk1.8.0_231/jre

export PATH=${JAVA_HOME}/bin:$PATH

java version "1.8.0_231"

Java(TM) SE Runtime Environment (build 1.8.0_231-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.231-b11, mixed mode)

linuxidc@linuxidc:/opt/java$
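The exports above only take effect if they are stored in a file the shell reads at login. The article does not say which file it edits, so the sketch below assumes `~/.bashrc`. The helper `append_once` is hypothetical; it avoids writing the same line twice when the setup is repeated.

```shell
# Hypothetical helper: append a line to a file only if that exact line is not
# already present, so re-running the setup does not duplicate the exports.
append_once() {
  local file="$1" line="$2"
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Assumed target file: ~/.bashrc (not stated in the original).
# JAVA_HOME is inferred from the JRE_HOME path shown above.
#   append_once ~/.bashrc 'export JAVA_HOME=/opt/java/jdk1.8.0_231'
#   append_once ~/.bashrc 'export JRE_HOME=${JAVA_HOME}/jre'
#   append_once ~/.bashrc 'export PATH=${JAVA_HOME}/bin:$PATH'
#   source ~/.bashrc && java -version
```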

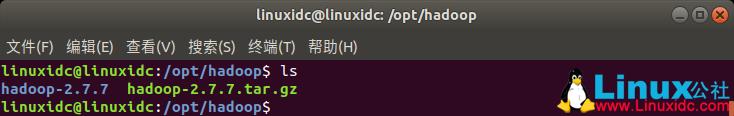

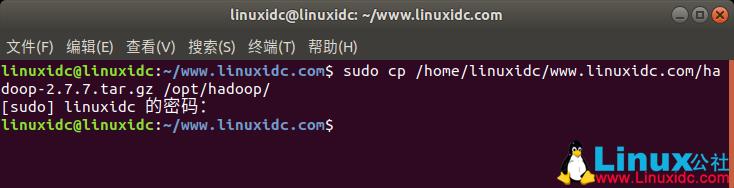

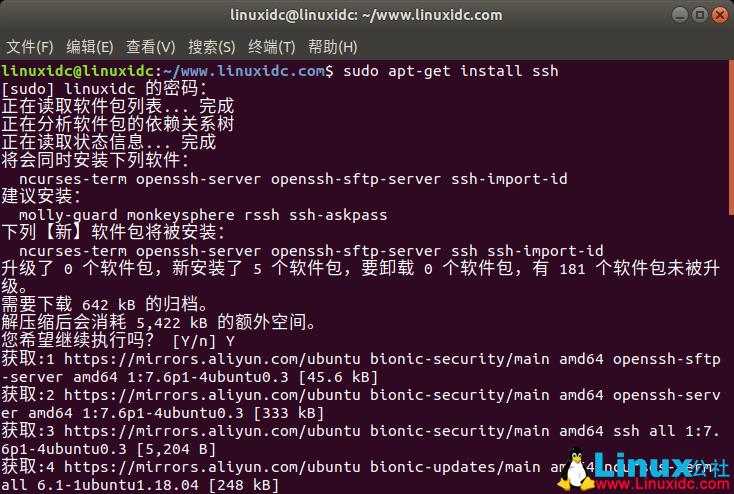

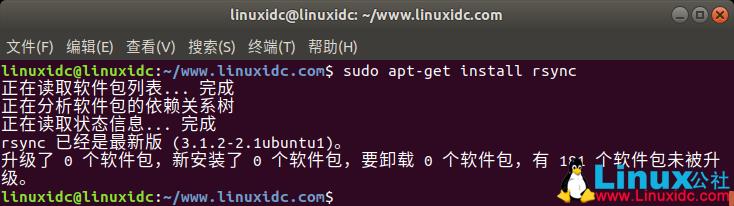

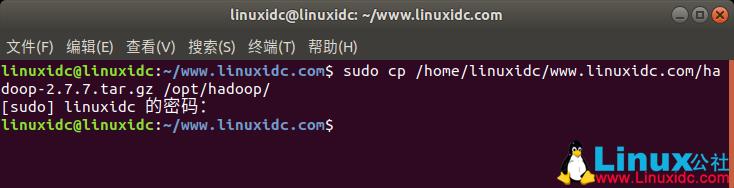

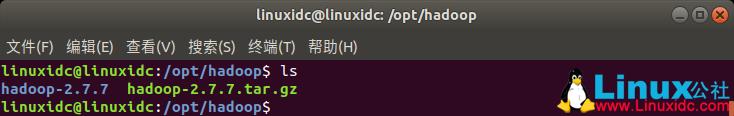

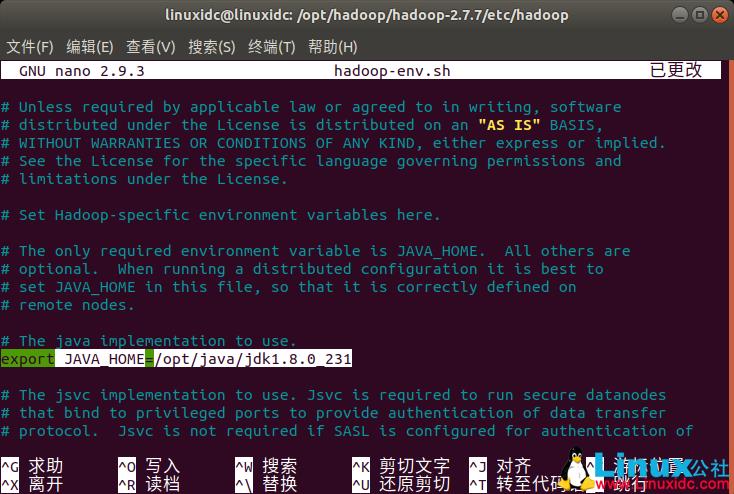

2. Installing Hadoop

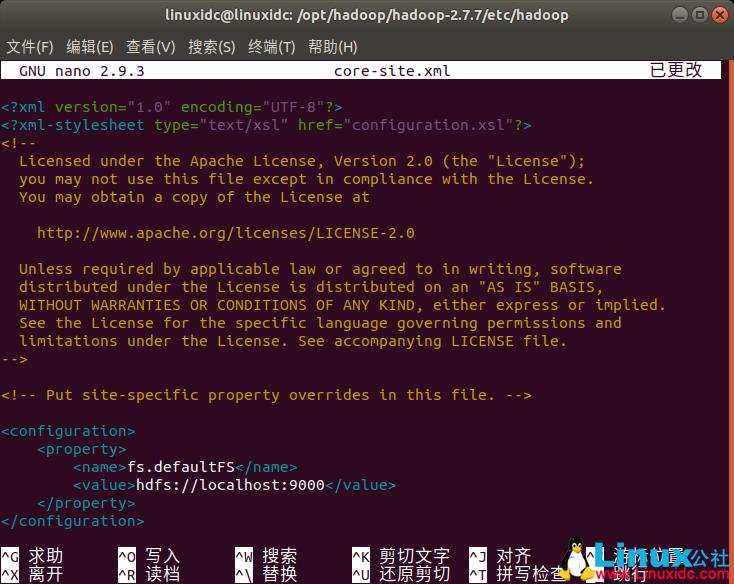

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

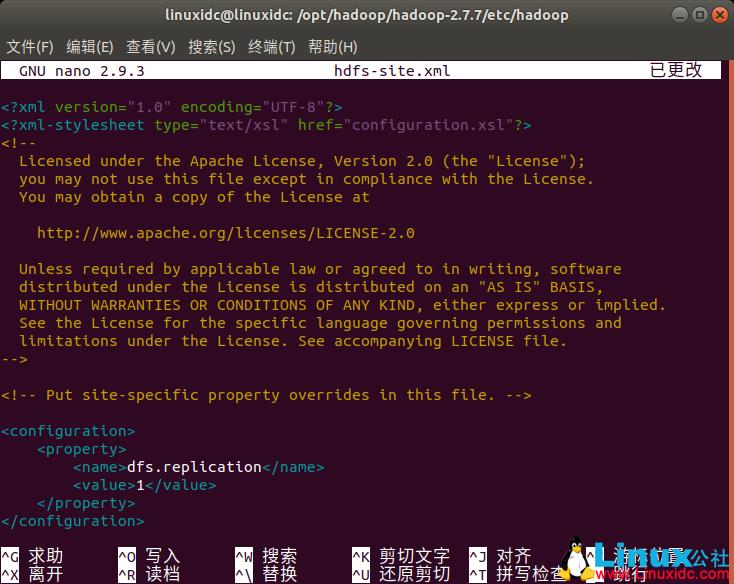

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>
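In a stock Hadoop 2.x layout, `fs.defaultFS` belongs in `core-site.xml` and `dfs.replication` in `hdfs-site.xml`, both under `etc/hadoop` in the Hadoop install directory. As a rough sketch, the two fragments above could be written out in one step. The helper name `write_single_node_conf` is hypothetical, and it overwrites any existing files, so it only suits a fresh install.

```shell
# Hypothetical helper: write the two minimal single-node config files into a
# Hadoop configuration directory. Existing files are overwritten.
write_single_node_conf() {
  local conf_dir="$1"
  mkdir -p "$conf_dir"
  cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
EOF
  cat > "$conf_dir/hdfs-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
EOF
}

# Assumed call for the install path used in this article:
#   write_single_node_conf /opt/hadoop/hadoop-2.7.7/etc/hadoop
```

A replication factor of 1 is the usual choice here because a single machine runs only one DataNode, so there is nowhere to place a second copy of each block.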

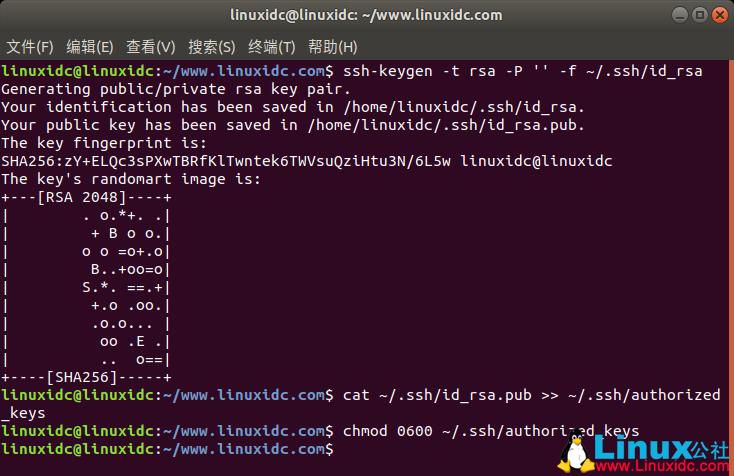

Generating public/private rsa key pair.

Your identification has been saved in /home/linuxidc/.ssh/id_rsa.

Your public key has been saved in /home/linuxidc/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:zY+ELQc3sPXwTBRfKlTwntek6TWVsuQziHtu3N/6L5w linuxidc@linuxidc

The key's randomart image is:

+---[RSA 2048]----+

| . o.*+. .|

| + B o o.|

| o o =o+.o|

| B..+oo=o|

| S.*. ==.+|

| +.o .oo.|

| .o.o... |

| oo .E .|

| .. o==|

+----[SHA256]-----+

linuxidc@linuxidc:~/www.linuxidc.com$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

linuxidc@linuxidc:~/www.linuxidc.com$ chmod 0600 ~/.ssh/authorized_keys
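Hadoop's start scripts log in to `localhost` over SSH, which is why the key above is added to `authorized_keys`. The key generation command itself is not shown in the text. The sketch below combines it with the two commands above, assuming an RSA key with an empty passphrase; the helper name `setup_local_ssh_key` is hypothetical.

```shell
# Hypothetical helper: create an RSA key with an empty passphrase (only if no
# key exists yet) and authorize it for logins to this same account.
setup_local_ssh_key() {
  local ssh_dir="${1:-$HOME/.ssh}"
  mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir"
  [ -f "$ssh_dir/id_rsa" ] || ssh-keygen -q -t rsa -N '' -f "$ssh_dir/id_rsa"
  # Append the public key only once, even if the function is run again.
  grep -qxF -- "$(cat "$ssh_dir/id_rsa.pub")" "$ssh_dir/authorized_keys" 2>/dev/null \
    || cat "$ssh_dir/id_rsa.pub" >> "$ssh_dir/authorized_keys"
  chmod 0600 "$ssh_dir/authorized_keys"
}

# Typical use, followed by a login test that should not ask for a password:
#   setup_local_ssh_key
#   ssh localhost
```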

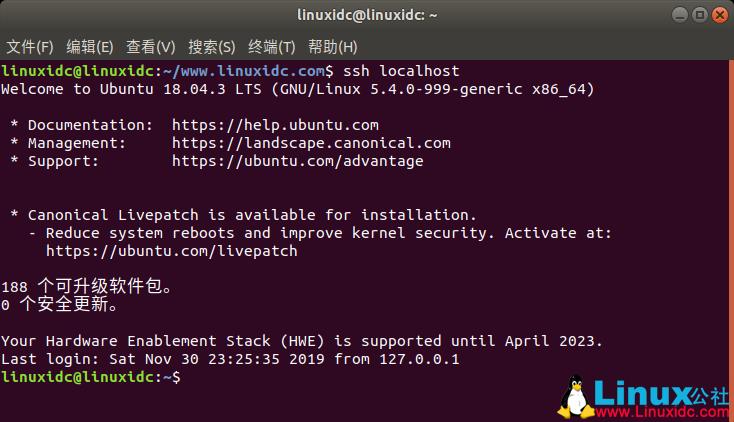

linuxidc@linuxidc:~/www.linuxidc.com$ ssh localhost

Welcome to Ubuntu 18.04.3 LTS (GNU/Linux 5.4.0-999-generic x86_64)

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

* Canonical Livepatch is available for installation.

- Reduce system reboots and improve kernel security. Activate at:

https://ubuntu.com/livepatch

0 updates are security updates.

Last login: Sat Nov 30 23:25:35 2019 from 127.0.0.1

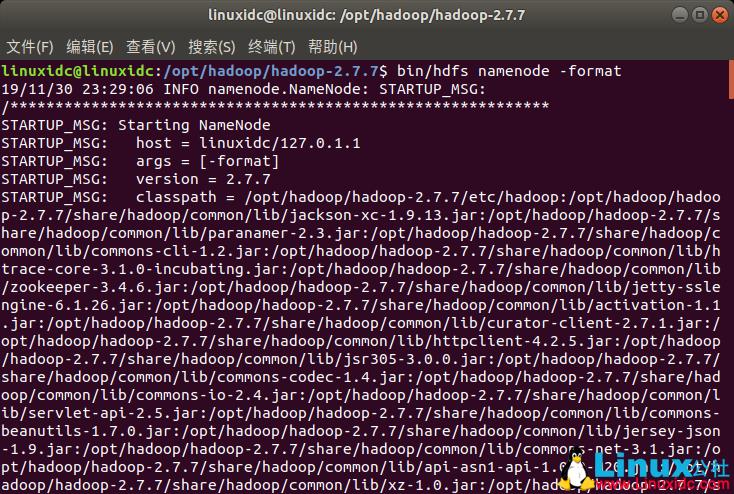

19/11/30 23:29:06 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = linuxidc/127.0.1.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.7.7

......

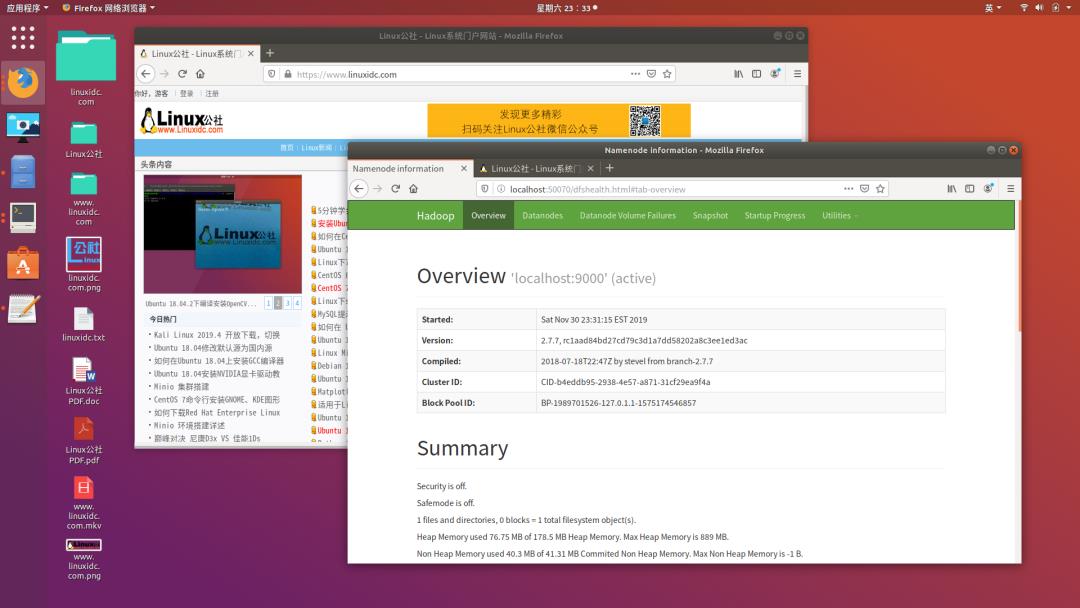

Starting namenodes on [localhost]

localhost: starting namenode, logging to /opt/hadoop/hadoop-2.7.7/logs/hadoop-linuxidc-namenode-linuxidc.out

localhost: starting datanode, logging to /opt/hadoop/hadoop-2.7.7/logs/hadoop-linuxidc-datanode-linuxidc.out

Starting secondary namenodes [0.0.0.0]

The authenticity of host '0.0.0.0 (0.0.0.0)' can't be established.

ECDSA key fingerprint is SHA256:OSXsQK3E9ReBQ8c5to2wvpcS6UGrP8tQki0IInUXcG0.

Are you sure you want to continue connecting (yes/no)? yes

0.0.0.0: Warning: Permanently added '0.0.0.0' (ECDSA) to the list of known hosts.

0.0.0.0: starting secondarynamenode, logging to /opt/hadoop/hadoop-2.7.7/logs/hadoop-linuxidc-secondarynamenode-linuxidc.out
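The log above reports three daemons being started: the NameNode, the DataNode, and the SecondaryNameNode. One way to confirm they are still running is to look for them in the output of `jps`, the JDK's process listing tool. The helper `missing_hdfs_daemons` below is a hypothetical sketch of that check, not something from the original article.

```shell
# Hypothetical helper: given the text printed by `jps`, list which of the
# expected HDFS daemons are NOT running (prints nothing when all are up).
missing_hdfs_daemons() {
  local jps_output="$1" daemon
  for daemon in NameNode DataNode SecondaryNameNode; do
    # -w matches whole words, so "NameNode" does not match "SecondaryNameNode".
    grep -qw -- "$daemon" <<< "$jps_output" || echo "$daemon"
  done
}

# On the machine where HDFS was started:
#   missing_hdfs_daemons "$(jps)"
```

If a daemon is reported missing, the `.log` file next to the `.out` path named in the startup messages is usually the first place to look.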

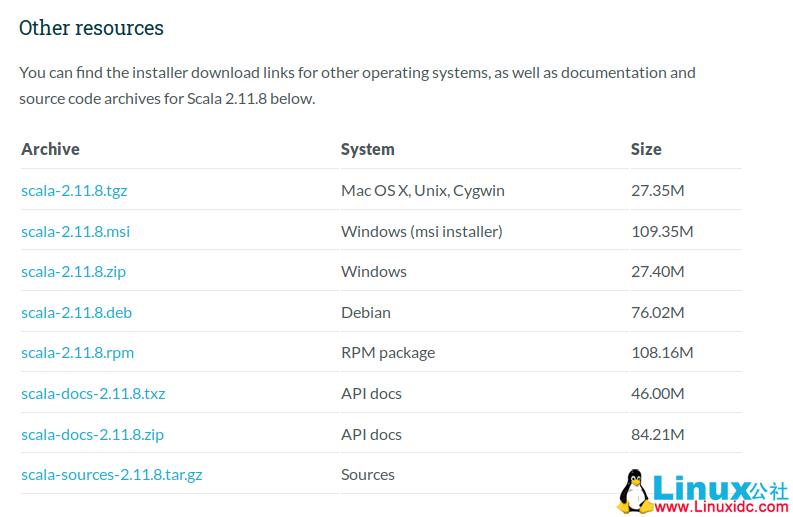

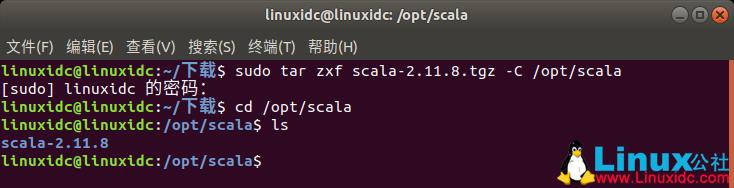

3. Installing Scala

[sudo] password for linuxidc:

linuxidc@linuxidc:~/下载$ cd /opt/scala

linuxidc@linuxidc:/opt/scala$ ls

scala-2.11.8

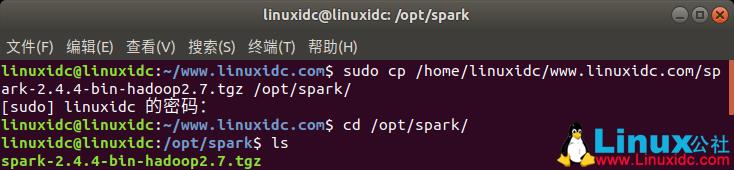

4. Installing Spark

[sudo] password for linuxidc:

linuxidc@linuxidc:~/www.linuxidc.com$ cd /opt/spark/

linuxidc@linuxidc:/opt/spark$ ls

spark-2.4.4-bin-hadoop2.7.tgz

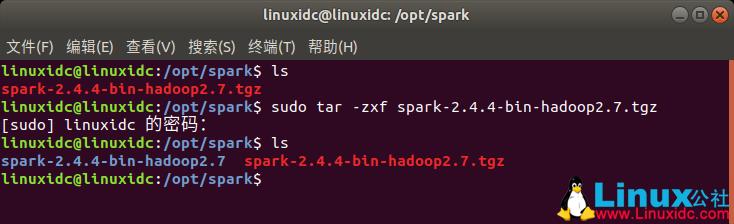

[sudo] password for linuxidc:

linuxidc@linuxidc:/opt/spark$ ls

spark-2.4.4-bin-hadoop2.7 spark-2.4.4-bin-hadoop2.7.tgz

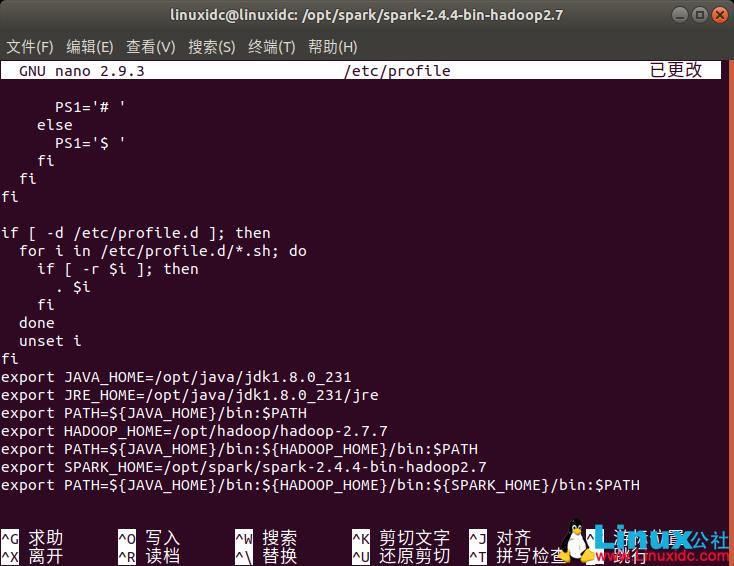

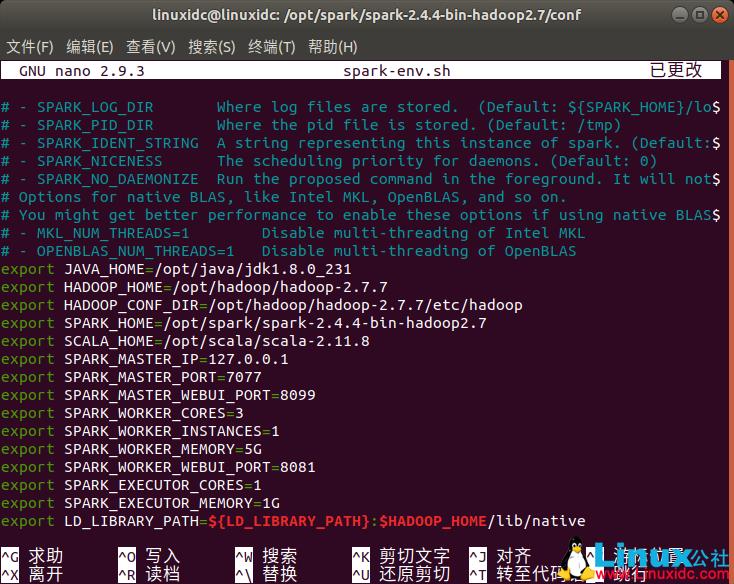

export PATH=${JAVA_HOME}/bin:${HADOOP_HOME}/bin:${SPARK_HOME}/bin:$PATH

export HADOOP_HOME=/opt/hadoop/hadoop-2.7.7

export HADOOP_CONF_DIR=/opt/hadoop/hadoop-2.7.7/etc/hadoop

export SPARK_HOME=/opt/spark/spark-2.4.4-bin-hadoop2.7

export SCALA_HOME=/opt/scala/scala-2.11.8

export SPARK_MASTER_IP=127.0.0.1

export SPARK_MASTER_PORT=7077

export SPARK_MASTER_WEBUI_PORT=8099

export SPARK_WORKER_CORES=3

export SPARK_WORKER_INSTANCES=1

export SPARK_WORKER_MEMORY=5G

export SPARK_WORKER_WEBUI_PORT=8081

export SPARK_EXECUTOR_CORES=1

export SPARK_EXECUTOR_MEMORY=1G

export LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:$HADOOP_HOME/lib/native
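With these settings, the standalone master listens on `127.0.0.1:7077` and serves its web UI on port 8099. Clients reach it through a `spark://host:port` URL built from the first two values. A small sketch of deriving that URL follows; the helper name `spark_master_url` is hypothetical, and the fallback values simply repeat the settings above.

```shell
# Hypothetical helper: build the standalone master URL from the variables set
# above. The fallbacks apply only when the variables are not set in the shell.
spark_master_url() {
  local host="${SPARK_MASTER_IP:-127.0.0.1}" port="${SPARK_MASTER_PORT:-7077}"
  echo "spark://${host}:${port}"
}

# Assumed usage with the scripts shipped in the Spark distribution:
#   "$SPARK_HOME/sbin/start-all.sh"              # start the master and the worker
#   spark-shell --master "$(spark_master_url)"   # open a shell against that master
```

Spark 2.x still honors `SPARK_MASTER_IP` but logs a deprecation warning recommending `SPARK_MASTER_HOST`, so newer setups may prefer the latter name.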
