Redis data migration and sync tool (redis-shake)

Posted by 吴码



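Introduction

Recently, the server hosting one of our self-managed production Redis instances kept raising alerts because its memory usage was high. It is a fairly low-spec machine (2 cores, 8 GB RAM), and we were already planning to retire it. Before retiring it, the existing data has to be migrated, and both the full data set and the incremental changes must be handled so that no data is lost. A quick search turned up RedisShake, a tool developed in-house at Alibaba that is said to work very well, so let's give it a try.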

Hands-on

Before doing this for real, I tried it in a test environment first to see how it behaves. The environment is:

Source: 192.168.28.142

Target: 192.168.147.128

Step 1:

Download the release tarball with wget:

wget https://github.com/alibaba/RedisShake/releases/download/release-v2.0.2-20200506/redis-shake-v2.0.2.tar.gz

步骤二:

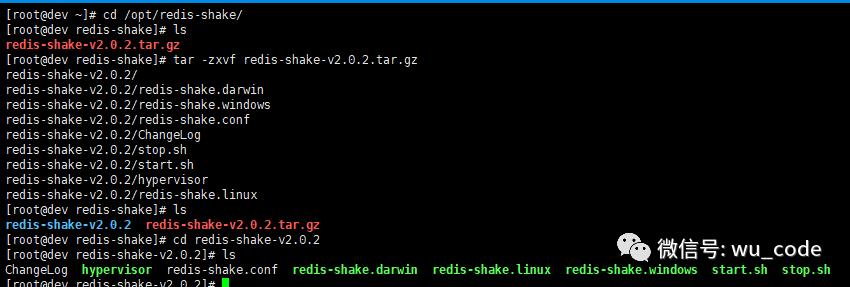

解压,进入相应目录看看有哪些东东

[root@dev ~]# cd /opt/redis-shake/
[root@dev redis-shake]# ls
redis-shake-v2.0.2.tar.gz
[root@dev redis-shake]# tar -zxvf redis-shake-v2.0.2.tar.gz
redis-shake-v2.0.2/
redis-shake-v2.0.2/redis-shake.darwin
redis-shake-v2.0.2/redis-shake.windows
redis-shake-v2.0.2/redis-shake.conf
redis-shake-v2.0.2/ChangeLog
redis-shake-v2.0.2/stop.sh
redis-shake-v2.0.2/start.sh
redis-shake-v2.0.2/hypervisor
redis-shake-v2.0.2/redis-shake.linux
[root@dev redis-shake]# ls
redis-shake-v2.0.2  redis-shake-v2.0.2.tar.gz
[root@dev redis-shake]# cd redis-shake-v2.0.2
[root@dev redis-shake-v2.0.2]# ls
ChangeLog  hypervisor  redis-shake.conf  redis-shake.darwin  redis-shake.linux  redis-shake.windows  start.sh  stop.sh

Step 3:

Edit the configuration file redis-shake.conf.

Log output:

# log file. If not set, logs are printed to stdout (e.g. /var/log/redis-shake.log)
log.file = /opt/redis-shake/redis-shake.log

Source connection settings:

# ip:port
# the source address can be the following:
# 1. single db address. for "standalone" type.
# 2. ${sentinel_master_name}:${master or slave}@sentinel single/cluster address, e.g., mymaster:master@127.0.0.1:26379;127.0.0.1:26380, or @127.0.0.1:26379;127.0.0.1:26380. for "sentinel" type.
# 3. cluster that has several db nodes split by semicolon(;). for "cluster" type. e.g., 10.1.1.1:20331;10.1.1.2:20441.
# 4. proxy address(used in "rump" mode only). for "proxy" type.
# Source Redis address. For sentinel or open-source cluster mode, the format is "master name:pull role (master or slave)@sentinel address". Other cluster
# architectures, such as codis, twemproxy, aliyun proxy, etc., require the db addresses of all masters or all slaves.
source.address = 192.168.28.142:6379
# password of db/proxy. even if type is sentinel.
source.password_raw = xxxxxxx
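The address comments above describe three main shapes: a single `ip:port`, a sentinel form with an optional `master_name:role@` prefix, and a `;`-separated node list. As a way to double-check an address before starting a sync, here is a minimal sketch that splits a string along those rules. `parse_address`, `ShakeAddress` and the `addr_type` parameter are hypothetical names for illustration; this is not redis-shake's own parser, and the proxy form is not handled.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ShakeAddress:
    """Hypothetical structured view of a redis-shake address string."""
    kind: str                              # "standalone", "sentinel" or "cluster"
    nodes: List[str] = field(default_factory=list)
    master_name: Optional[str] = None      # sentinel form only
    role: Optional[str] = None             # "master" or "slave", sentinel form only


def _check_node(node: str) -> str:
    """Require an ip:port pair with a numeric port."""
    host, sep, port = node.rpartition(":")
    if not sep or not host or not port.isdigit():
        raise ValueError(f"expected ip:port, got {node!r}")
    return node


def parse_address(address: str, addr_type: str) -> ShakeAddress:
    """Split an address according to the formats in the config comments.

    Illustrative sketch only; not redis-shake's implementation.
    """
    address = address.strip()
    if addr_type == "sentinel":
        # ${sentinel_master_name}:${master or slave}@addr1;addr2 (prefix may be empty)
        if "@" not in address:
            raise ValueError("sentinel address needs '@' before the sentinel list")
        prefix, _, hosts = address.partition("@")
        master_name, _, role = prefix.partition(":")
        nodes = [_check_node(h) for h in hosts.split(";") if h]
        return ShakeAddress("sentinel", nodes, master_name or None, role or None)
    # standalone / cluster: one or more ip:port entries separated by ';'
    nodes = [_check_node(h) for h in address.split(";") if h]
    if addr_type == "standalone" and len(nodes) != 1:
        raise ValueError("standalone type expects exactly one ip:port")
    return ShakeAddress(addr_type, nodes)
```

For the source used here, `parse_address("192.168.28.142:6379", "standalone")` yields a single node; the sentinel example from the comments yields master `mymaster`, role `master` and two sentinel nodes.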

Target settings:

# ip:port
# the target address can be the following:
# 1. single db address. for "standalone" type.
# 2. ${sentinel_master_name}:${master or slave}@sentinel single/cluster address, e.g., mymaster:master@127.0.0.1:26379;127.0.0.1:26380, or @127.0.0.1:26379;127.0.0.1:26380. for "sentinel" type.
# 3. cluster that has several db nodes split by semicolon(;). for "cluster" type.
# 4. proxy address. for "proxy" type.
target.address = 192.168.147.128:6379
# password of db/proxy. even if type is sentinel.
target.password_raw = xxxxxx
# auth type, don't modify it
target.auth_type = auth
# all the data will be written into this db. < 0 means disable.
target.db = -1
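redis-shake.conf is a flat `key = value` file with `#` comment lines, as the snippets above show. Before launching, it can help to read the file back and confirm the keys you edited are actually set. The sketch below is a hypothetical helper, not part of redis-shake; the choice of `REQUIRED_KEYS` is an assumption, so extend it with whatever your setup depends on.

```python
from typing import Dict, Iterable, List

# Hypothetical minimum set of keys to check; adjust for your own config.
REQUIRED_KEYS = ("source.address", "target.address")


def parse_shake_conf(text: str) -> Dict[str, str]:
    """Parse a redis-shake style `key = value` file into a dict (sketch).

    Only full-line comments starting with '#' are skipped; an inline '#'
    is kept, because values such as passwords may legitimately contain it.
    """
    conf: Dict[str, str] = {}
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"line {lineno}: missing '=' in {raw!r}")
        conf[key.strip()] = value.strip()   # tolerates "log.file =/opt/..."
    return conf


def missing_keys(conf: Dict[str, str],
                 required: Iterable[str] = REQUIRED_KEYS) -> List[str]:
    """Return the required keys that are absent or empty."""
    return [k for k in required if not conf.get(k)]
```

Usage: `missing_keys(parse_shake_conf(open("redis-shake.conf").read()))` returns an empty list when both addresses are filled in.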

Step 4:

Start redis-shake in sync mode:

./start.sh redis-shake.conf sync

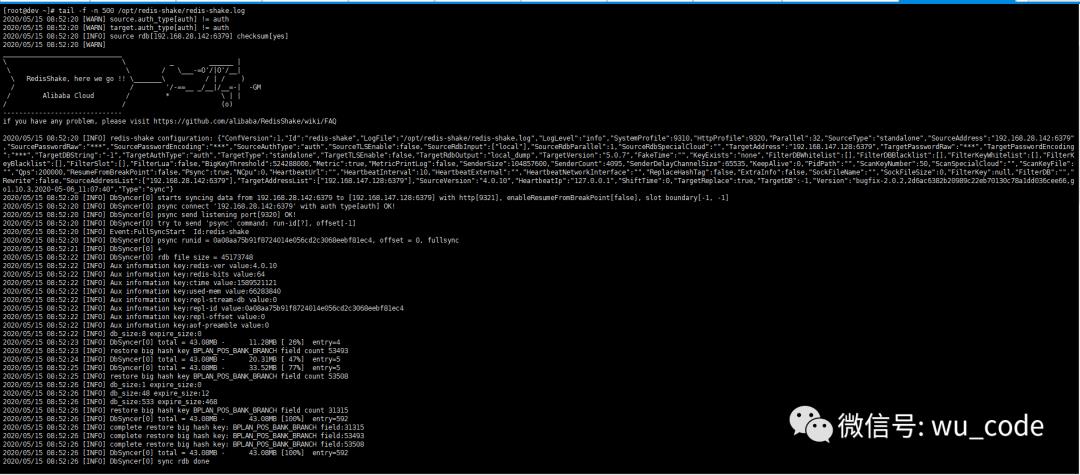

Check the log file:

2020/05/15 09:00:29 [INFO] DbSyncer[0] starts syncing data from 192.168.28.142:6379 to [192.168.147.128:6379] with http[9321], enableResumeFromBreakPoint[false], slot boundary[-1, -1]
2020/05/15 09:00:29 [INFO] DbSyncer[0] psync connect '192.168.28.142:6379' with auth type[auth] OK!
2020/05/15 09:00:29 [INFO] DbSyncer[0] psync send listening port[9320] OK!
2020/05/15 09:00:29 [INFO] DbSyncer[0] try to send 'psync' command: run-id[?], offset[-1]
2020/05/15 09:00:29 [INFO] Event:FullSyncStart Id:redis-shake
2020/05/15 09:00:29 [INFO] DbSyncer[0] psync runid = 0a08aa75b91f8724014e056cd2c3068eebf81ec4, offset = 126, fullsync
2020/05/15 09:00:30 [INFO] DbSyncer[0] +
2020/05/15 09:00:30 [INFO] DbSyncer[0] rdb file size = 45173748
2020/05/15 09:00:30 [INFO] Aux information key:redis-ver value:4.0.10
2020/05/15 09:00:30 [INFO] Aux information key:redis-bits value:64
2020/05/15 09:00:30 [INFO] Aux information key:ctime value:1589521609
2020/05/15 09:00:30 [INFO] Aux information key:used-mem value:66304824
2020/05/15 09:00:30 [INFO] Aux information key:repl-stream-db value:0
2020/05/15 09:00:30 [INFO] Aux information key:repl-id value:0a08aa75b91f8724014e056cd2c3068eebf81ec4
2020/05/15 09:00:30 [INFO] Aux information key:repl-offset value:126
2020/05/15 09:00:30 [INFO] Aux information key:aof-preamble value:0
2020/05/15 09:00:30 [INFO] db_size:8 expire_size:0
2020/05/15 09:00:31 [INFO] DbSyncer[0] total = 43.08MB - 10.87MB [ 25%] entry=0 filter=4
2020/05/15 09:00:32 [INFO] DbSyncer[0] total = 43.08MB - 21.78MB [ 50%] entry=0 filter=5
2020/05/15 09:00:33 [INFO] DbSyncer[0] total = 43.08MB - 32.64MB [ 75%] entry=0 filter=5
2020/05/15 09:00:34 [INFO] DbSyncer[0] total = 43.08MB - 42.92MB [ 99%] entry=0 filter=6
2020/05/15 09:00:34 [INFO] db_size:1 expire_size:0
2020/05/15 09:00:34 [INFO] db_size:48 expire_size:12
2020/05/15 09:00:34 [INFO] db_size:533 expire_size:468
2020/05/15 09:00:34 [INFO] DbSyncer[0] total
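During a long full sync it is handy to pull the progress figures out of the log rather than eyeballing it. The sketch below extracts the `total = ...MB - ...MB [ NN%] entry=N filter=N` lines. The regex is an assumption inferred only from the sample lines above (redis-shake v2.0.2, one log entry per line); other versions may format the line differently.

```python
import re
from typing import List, NamedTuple

# Inferred from sample lines such as:
# "... DbSyncer[0] total = 43.08MB - 10.87MB [ 25%] entry=0 filter=4"
PROGRESS_RE = re.compile(
    r"DbSyncer\[(\d+)\] total = ([\d.]+)MB - ([\d.]+)MB \[\s*(\d+)%\]"
    r"\s+entry=(\d+)\s+filter=(\d+)"
)


class Progress(NamedTuple):
    syncer: int       # DbSyncer index
    total_mb: float   # size of the RDB being transferred
    done_mb: float    # amount processed so far
    percent: int
    entry: int
    filtered: int


def parse_progress(log_text: str) -> List[Progress]:
    """Collect full-sync progress records, assuming one log entry per line."""
    records = []
    for line in log_text.splitlines():
        m = PROGRESS_RE.search(line)
        if m:
            s, total, done, pct, entry, filt = m.groups()
            records.append(Progress(int(s), float(total), float(done),
                                    int(pct), int(entry), int(filt)))
    return records
```

As a sanity check on units, the logged `rdb file size = 45173748` bytes divided by 1024 × 1024 is about 43.08, matching the `total` figure, so "MB" here appears to mean binary megabytes (MiB).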

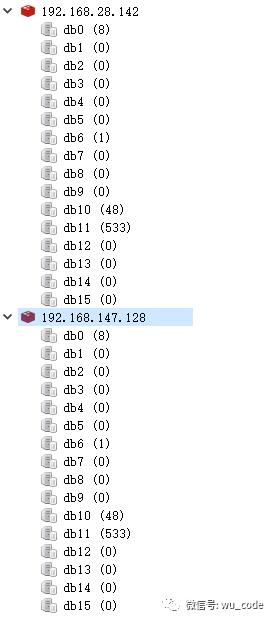

Next, check how the data was synced. As the screenshot shows, every database came across. Very nice.
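Beyond a screenshot, one way to verify the result is to run `redis-cli -h <host> INFO keyspace` against both source and target and compare the per-database key counts. The sketch below parses that output (lines like `db0:keys=8,expires=0,avg_ttl=0`) and reports databases whose counts differ. It is a hypothetical helper working on text you capture yourself; it does not connect to Redis. Counts can legitimately drift while incremental sync is still running or when keys expire, so treat a mismatch as a prompt to look closer, not as proof of data loss.

```python
from typing import Dict, Tuple


def parse_keyspace(info_text: str) -> Dict[int, int]:
    """Map db index -> key count from `INFO keyspace` output."""
    counts: Dict[int, int] = {}
    for line in info_text.splitlines():
        line = line.strip()
        # Skip the "# Keyspace" header and anything that is not a dbN line.
        if not line.startswith("db") or ":" not in line:
            continue
        name, _, fields = line.partition(":")
        stats = dict(kv.split("=", 1) for kv in fields.split(","))
        counts[int(name[2:])] = int(stats["keys"])
    return counts


def diff_keyspace(source: Dict[int, int],
                  target: Dict[int, int]) -> Dict[int, Tuple[int, int]]:
    """Return {db: (source_keys, target_keys)} for dbs whose counts differ."""
    dbs = sorted(set(source) | set(target))
    return {db: (source.get(db, 0), target.get(db, 0))
            for db in dbs if source.get(db, 0) != target.get(db, 0)}
```

An empty result from `diff_keyspace` means every database has the same number of keys on both sides.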

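But what if you only want to sync one particular database?

A quick look at the configuration file and the official documentation showed that a small adjustment is enough: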

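| Option | Description |
|---|---|
| target.db | The logical database in the target Redis that migrated data is written into. For example, to migrate all data into DB10 on the target, set this to 10. When set to -1, database numbers are preserved: DB0 on the source is migrated to DB0 on the target, DB1 to DB1, and so on. |
| filter.db.whitelist | Only the listed dbs are passed through. For example, 0;5;10 lets db0, db5 and db10 through and filters out all others. |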
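The two options in the table combine into a simple routing rule: the whitelist decides whether a source db is synced at all, and `target.db` decides where it lands. The model below is a minimal sketch of that rule as described in the table, not redis-shake's code. The function names are hypothetical, and treating an empty whitelist as "no filtering" is an assumption.

```python
from typing import Optional, Set


def parse_whitelist(value: str) -> Set[int]:
    """'0;5;10' -> {0, 5, 10}. Empty means no whitelist (assumption)."""
    return {int(x) for x in value.split(";") if x.strip()}


def route_db(src_db: int, target_db: int, whitelist: Set[int]) -> Optional[int]:
    """Return the target db for keys from `src_db`, or None if filtered out.

    Illustrative model of the documented behaviour only.
    """
    if whitelist and src_db not in whitelist:
        return None          # dropped by filter.db.whitelist
    if target_db < 0:
        return src_db        # target.db = -1: keep the same db number
    return target_db         # otherwise everything goes into target.db
```

With the settings used for a single-db sync (`target.db = 10`, `filter.db.whitelist = 10`), `route_db(10, 10, {10})` returns 10 and every other source db returns `None`.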

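For example, to sync only db 10 on the source into db 10 on the target, make the following changes to the configuration file:

target.db = 10
filter.db.whitelist = 10

Re-run the command from Step 4 and the job is done.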

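One more configuration option is worth calling out:

| Option | Description |
|---|---|
| key_exists | Whether to overwrite when the same key exists on both source and target. rewrite: the source overwrites the target. none: the process exits as soon as a conflict occurs. ignore: keep the target's key and skip the key synced from the source. This option has no effect in rump mode. |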
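To make the three `key_exists` values concrete, here is a toy model that applies one synced key to an in-memory dict standing in for the target. It only mirrors the behaviour described in the table; `apply_key` and `KeyConflictError` are hypothetical names, and details of the real tool (data types, TTLs, rump mode) are not modelled.

```python
from typing import Dict


class KeyConflictError(RuntimeError):
    """Models key_exists = none: a duplicate key stops the sync."""


def apply_key(target: Dict[str, str], key: str, value: str,
              key_exists: str) -> None:
    """Write one synced key into a dict standing in for the target (sketch)."""
    if key not in target:
        target[key] = value                # no conflict: just write it
    elif key_exists == "rewrite":
        target[key] = value                # source overwrites target
    elif key_exists == "ignore":
        pass                               # keep the target's existing value
    elif key_exists == "none":
        raise KeyConflictError(f"duplicate key {key!r}; stopping sync")
    else:
        raise ValueError(f"unknown key_exists value: {key_exists!r}")
```

In practice, `rewrite` suits a target that should end up as an exact copy of the source, while `none` is the safer choice when the target may already hold data you do not want silently replaced.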

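This walkthrough only covers syncing from a single node to a single node. For clusters and other scenarios, see the official documentation and test them yourself: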

https://github.com/alibaba/RedisShake/wiki/%E7%AC%AC%E4%B8%80%E6%AC%A1%E4%BD%BF%E7%94%A8%EF%BC%8C%E5%A6%82%E4%BD%95%E8%BF%9B%E8%A1%8C%E9%85%8D%E7%BD%AE%EF%BC%9F
