2021 Update: A Curated List of Recent Papers, Books, and Blog Resources on Interpretable Machine Learning

Posted by 深度学习与NLP (Deep Learning and NLP)


To interpret means to explain or present in understandable terms. In the context of machine learning, interpretability is the ability to explain or present a model in terms a human can understand. [Finale Doshi-Velez]

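Machine learning models are often called "black boxes": we can obtain accurate predictions from them, but we cannot clearly explain or identify the logic behind those predictions. How, then, do we extract important insights from a model? What should we keep in mind, and which features or tools do we need to implement? These are the key questions that come up whenever model interpretability is raised.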

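This post collects recent papers, books, resources, and blog posts on interpretable machine learning and shares them with anyone who may find them useful.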

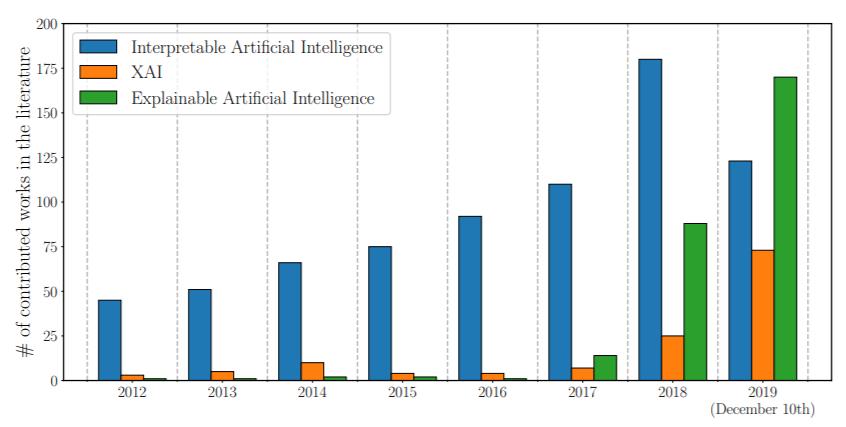

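This collection covers frontier research in explainable AI (XAI), a topic that has become popular in recent years. The figure below shows the trend in interpretable/explainable AI: publications on the topic are growing rapidly.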

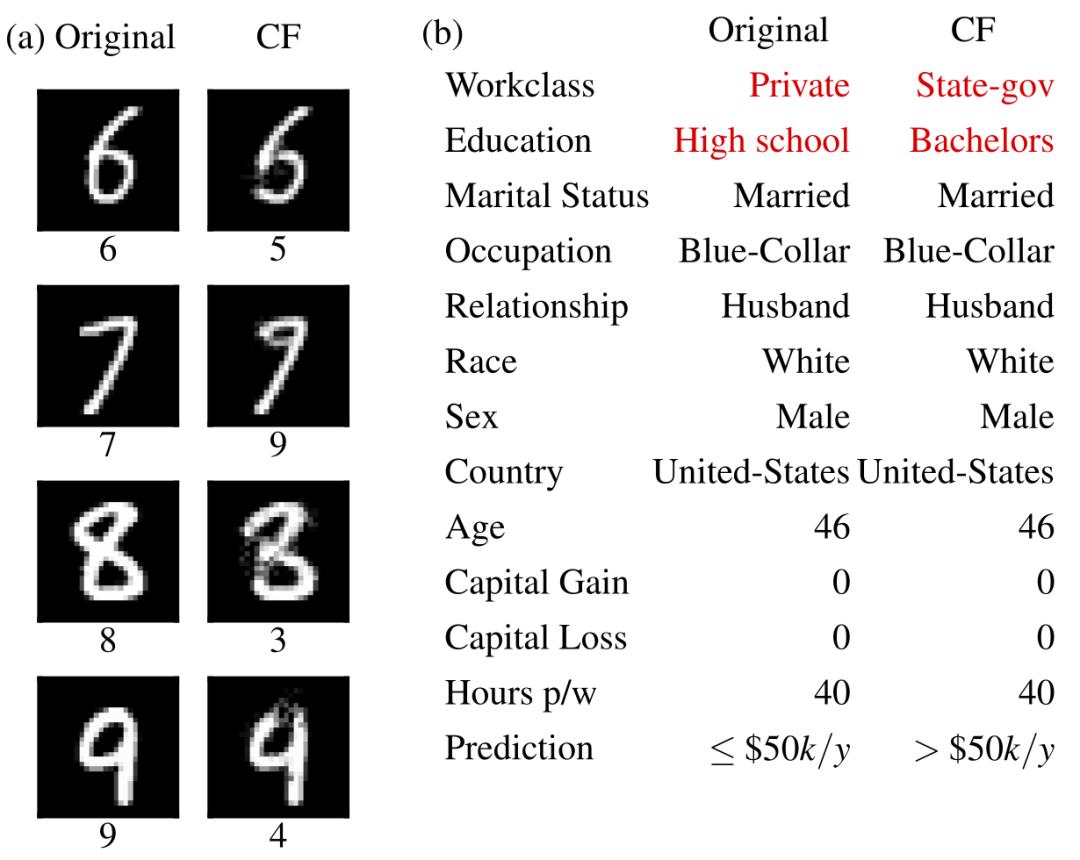

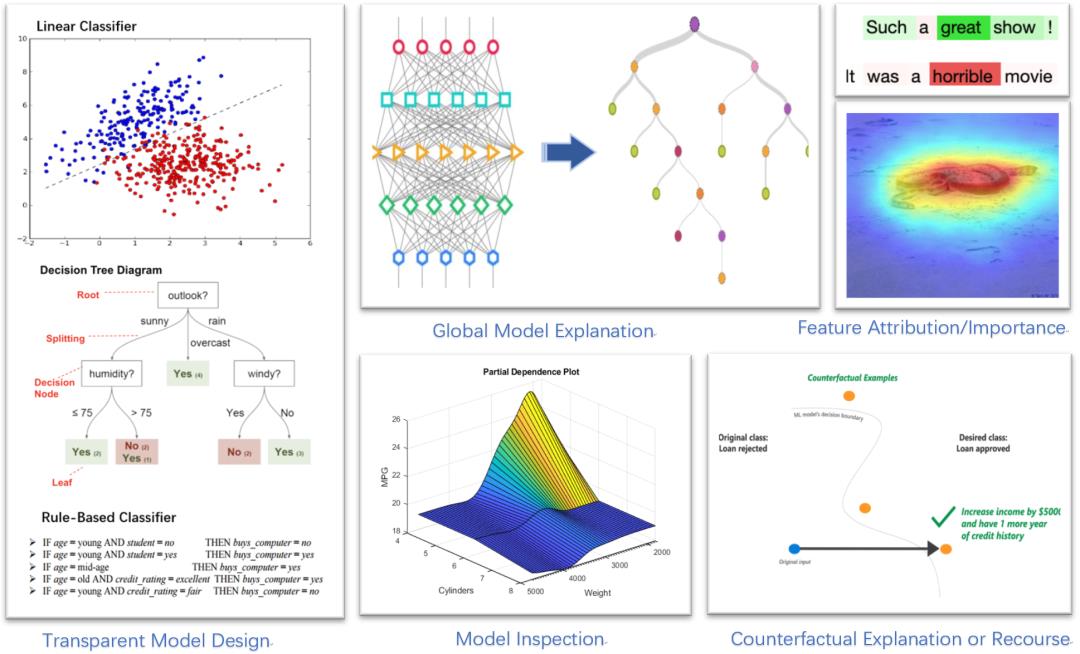

The figure illustrates several use cases of XAI. The publications listed here are grouped into categories according to this figure.

Research Papers

The elephant in the interpretability room: Why use attention as explanation when we have saliency methods, EMNLP Workshop 2020

Explainable Machine Learning in Deployment, FAT 2020

A brief survey of visualization methods for deep learning models from the perspective of Explainable AI, Information Visualization 2020

Explaining Explanations in AI, ACM FAT 2019

Machine learning interpretability: A survey on methods and metrics, Electronics, 2019

A Survey on Explainable Artificial Intelligence (XAI): Towards Medical XAI, IEEE TNNLS 2020

Interpretable machine learning: definitions, methods, and applications, Arxiv preprint 2019

Visual Analytics in Deep Learning: An Interrogative Survey for the Next Frontiers, IEEE Transactions on Visualization and Computer Graphics, 2019

Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI, Information Fusion, 2019

Evaluating Explanation Without Ground Truth in Interpretable Machine Learning, Arxiv preprint 2019

A survey of methods for explaining black box models, ACM Computing Surveys, 2018

Explaining Explanations: An Overview of Interpretability of Machine Learning, IEEE DSAA, 2018

Peeking Inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI), IEEE Access, 2018

Explainable artificial intelligence: A survey, MIPRO, 2018

How Convolutional Neural Networks See the World — A Survey of Convolutional Neural Network Visualization Methods, Mathematical Foundations of Computing 2018

Explainable Artificial Intelligence: Understanding, Visualizing and Interpreting Deep Learning Models, Arxiv 2017

Towards A Rigorous Science of Interpretable Machine Learning, Arxiv preprint 2017

Explaining Explanation, Part 1: Theoretical Foundations, IEEE Intelligent Systems 2017

Explaining Explanation, Part 2: Empirical Foundations, IEEE Intelligent Systems 2017

Explaining Explanation, Part 3: The Causal Landscape, IEEE Intelligent Systems 2017

Explaining Explanation, Part 4: A Deep Dive on Deep Nets, IEEE Intelligent Systems 2017

An accurate comparison of methods for quantifying variable importance in artificial neural networks using simulated data, Ecological Modelling 2004

Review and comparison of methods to study the contribution of variables in artificial neural network models, Ecological Modelling 2003

Books

Explainable Artificial Intelligence (xAI) Approaches and Deep Meta-Learning Models, Advances in Deep Learning Chapter 2020

Explainable AI: Interpreting, Explaining and Visualizing Deep Learning, Springer 2019

Explanation in Artificial Intelligence: Insights from the Social Sciences, 2017 arxiv preprint

Visualizations of Deep Neural Networks in Computer Vision: A Survey, Springer Transparent Data Mining for Big and Small Data 2017

Explanatory Model Analysis: Explore, Explain, and Examine Predictive Models

Interpretable Machine Learning: A Guide for Making Black Box Models Explainable

An Introduction to Machine Learning Interpretability: An Applied Perspective on Fairness, Accountability, Transparency, and Explainable AI

Open Courses

Interpretability and Explainability in Machine Learning, Harvard University

Papers

We mainly follow the taxonomy in the survey paper and divide the XAI/XML papers into several branches.

1. Transparent Model Design

2. Post-Explanation

2.1 Model Explanation (Model-level)

2.2 Model Inspection

2.3 Outcome Explanation

2.3.1 Feature Attribution/Importance (Saliency Map)

2.4 Neuron Importance

2.5 Example-based Explanations

2.5.1 Counterfactual Explanations (Recourse)

2.5.2 Influential Instances

2.5.3 Prototypes & Criticisms
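To make two of these branches more concrete, here is a minimal sketch in plain Python of feature attribution (2.3.1) and counterfactual explanation (2.5.1). It is not taken from the survey or from any library listed in this post. The `black_box` model, its three features (income, debt, age), and the helper names are hypothetical and chosen only for illustration. The attribution uses simple occlusion: each feature is reset to a baseline value and the change in the score is recorded. The counterfactual search nudges one feature in fixed steps until the score reaches a threshold.

```python
from typing import Callable, List, Optional, Sequence

Model = Callable[[Sequence[float]], float]


def black_box(x: Sequence[float]) -> float:
    """Hypothetical opaque model (assumption): a score built from three features."""
    income, debt, age = x
    return 0.5 * income - 0.25 * debt + 0.0 * age


def occlusion_attribution(model: Model, x: Sequence[float],
                          baseline: Sequence[float]) -> List[float]:
    """Attribute the score to each feature by resetting it to the baseline value."""
    full_score = model(x)
    scores = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline[i]
        # A positive value means the feature pushed the score up for this input.
        scores.append(full_score - model(occluded))
    return scores


def counterfactual(model: Model, x: Sequence[float], feature: int,
                   threshold: float, step: float,
                   max_steps: int = 1000) -> Optional[List[float]]:
    """Change one feature in increments of `step` until the score reaches `threshold`."""
    candidate = list(x)
    for _ in range(max_steps + 1):
        if model(candidate) >= threshold:
            return candidate
        candidate[feature] += step
    return None  # no counterfactual found within the step budget


x = [4.0, 8.0, 30.0]  # income, debt, age (illustrative values)
print(occlusion_attribution(black_box, x, baseline=[0.0, 0.0, 0.0]))
print(counterfactual(black_box, x, feature=1, threshold=1.0, step=-1.0))
```

For this toy input the attribution is positive for income, negative for debt, and zero for age, and the counterfactual search reports how far debt would have to fall for the score to reach the threshold. Real attribution and recourse methods handle feature interactions, plausibility constraints, and baseline choice far more carefully than this sketch does.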

Uncategorized Papers on Model/Instance Explanation

Does Explainable Artificial Intelligence Improve Human Decision-Making?, AAAI 2021

Incorporating Interpretable Output Constraints in Bayesian Neural Networks, NeurIPS 2020

Towards Interpretable Natural Language Understanding with Explanations as Latent Variables, NeurIPS 2020

Learning identifiable and interpretable latent models of high-dimensional neural activity using pi-VAE, NeurIPS 2020

Generative causal explanations of black-box classifiers, NeurIPS 2020

Learning outside the Black-Box: The pursuit of interpretable models, NeurIPS 2020

Explaining Groups of Points in Low-Dimensional Representations, ICML 2020

Explaining Knowledge Distillation by Quantifying the Knowledge, CVPR 2020

Fanoos: Multi-Resolution, Multi-Strength, Interactive Explanations for Learned Systems, IJCAI 2020

Machine Learning Explainability for External Stakeholders, IJCAI 2020

Py-CIU: A Python Library for Explaining Machine Learning Predictions Using Contextual Importance and Utility, IJCAI 2020

Interpretable Models for Understanding Immersive Simulations, IJCAI 2020

Towards Automatic Concept-based Explanations, NeurIPS 2019

Stop Explaining Black Box Machine Learning Models for High Stakes Decisions and Use Interpretable Models Instead, Nature Machine Intelligence 2019

Interpretml: A unified framework for machine learning interpretability, arxiv preprint 2019

All Models are Wrong, but Many are Useful: Learning a Variable’s Importance by Studying an Entire Class of Prediction Models Simultaneously, JMLR 2019

On the Robustness of Interpretability Methods, ICML 2018 workshop

Towards A Rigorous Science of Interpretable Machine Learning, Arxiv preprint 2017

Object Region Mining With Adversarial Erasing: A Simple Classification to Semantic Segmentation Approach, CVPR 2017

LOCO, Distribution-Free Predictive Inference For Regression, Arxiv preprint 2016

Explaining data-driven document classifications, MIS Quarterly 2014

Evaluation Methods

Evaluations and Methods for Explanation through Robustness Analysis, arxiv preprint 2020

Evaluating and Aggregating Feature-based Model Explanations, IJCAI 2020

Sanity Checks for Saliency Metrics, AAAI 2020

A benchmark for interpretability methods in deep neural networks, NeurIPS 2019

Methods for interpreting and understanding deep neural networks, Digital Signal Processing 2017

Evaluating the visualization of what a Deep Neural Network has learned, IEEE Transactions on Neural Networks and Learning Systems 2015

Open-Source Python Libraries

AIF360: https://github.com/Trusted-AI/AIF360

AIX360: https://github.com/IBM/AIX360

Anchor: https://github.com/marcotcr/anchor, scikit-learn

Alibi: https://github.com/SeldonIO/alibi

Alibi-detect: https://github.com/SeldonIO/alibi-detect

BlackBoxAuditing: https://github.com/algofairness/BlackBoxAuditing, scikit-learn

Boruta-Shap: https://github.com/Ekeany/Boruta-Shap, scikit-learn

casme: https://github.com/kondiz/casme, PyTorch

Captum: https://github.com/pytorch/captum, PyTorch

cnn-exposed: https://github.com/idealo/cnn-exposed, TensorFlow

DALEX: https://github.com/ModelOriented/DALEX

Deeplift: https://github.com/kundajelab/deeplift, TensorFlow, Keras

DeepExplain: https://github.com/marcoancona/DeepExplain, TensorFlow, Keras

Deep Visualization Toolbox: https://github.com/yosinski/deep-visualization-toolbox, Caffe

Eli5: https://github.com/TeamHG-Memex/eli5, scikit-learn, Keras, XGBoost, LightGBM, CatBoost, etc.

explainx: https://github.com/explainX/explainx, XGBoost, CatBoost

Grad-cam-Tensorflow: https://github.com/insikk/Grad-CAM-tensorflow, TensorFlow

Innvestigate: https://github.com/albermax/innvestigate, TensorFlow, Theano, CNTK, Keras

imodels: https://github.com/csinva/imodels

InterpretML: https://github.com/interpretml/interpret

interpret-community: https://github.com/interpretml/interpret-community

Integrated-Gradients: https://github.com/ankurtaly/Integrated-Gradients, TensorFlow

Keras-grad-cam: https://github.com/jacobgil/keras-grad-cam, Keras

Keras-vis: https://github.com/raghakot/keras-vis, Keras

keract: https://github.com/philipperemy/keract, Keras

Lucid: https://github.com/tensorflow/lucid, TensorFlow

LIT: https://github.com/PAIR-code/lit, TensorFlow, designed for NLP tasks

Lime: https://github.com/marcotcr/lime, nearly all Python platforms

LOFO: https://github.com/aerdem4/lofo-importance, scikit-learn

modelStudio: https://github.com/ModelOriented/modelStudio, Keras, TensorFlow, XGBoost, LightGBM, H2O

pytorch-cnn-visualizations: https://github.com/utkuozbulak/pytorch-cnn-visualizations, PyTorch

Pytorch-grad-cam: https://github.com/jacobgil/pytorch-grad-cam, PyTorch

PDPbox: https://github.com/SauceCat/PDPbox, scikit-learn

py-ciu: https://github.com/TimKam/py-ciu/

PyCEbox: https://github.com/AustinRochford/PyCEbox

path_explain: https://github.com/suinleelab/path_explain, TensorFlow

rulefit: https://github.com/christophM/rulefit

rulematrix: https://github.com/rulematrix/rule-matrix-py

Saliency: https://github.com/PAIR-code/saliency, TensorFlow

SHAP: https://github.com/slundberg/shap, nearly all Python platforms

Skater: https://github.com/oracle/Skater

TCAV: https://github.com/tensorflow/tcav, TensorFlow, scikit-learn

skope-rules: https://github.com/scikit-learn-contrib/skope-rules, scikit-learn

TensorWatch: https://github.com/microsoft/tensorwatch.git, TensorFlow

tf-explain: https://github.com/sicara/tf-explain, TensorFlow

Treeinterpreter: https://github.com/andosa/treeinterpreter, scikit-learn

WeightWatcher: https://github.com/CalculatedContent/WeightWatcher, Keras, PyTorch

What-if-tool: https://github.com/PAIR-code/what-if-tool, TensorFlow

XAI: https://github.com/EthicalML/xai, scikit-learn

Related Repositories

https://github.com/jphall663/awesome-machine-learning-interpretability

https://github.com/lopusz/awesome-interpretable-machine-learning

https://github.com/pbiecek/xai_resources

https://github.com/h2oai/mli-resources
