Data Quality Monitoring with Griffin: Docker Deployment

Posted by AresCarry


Pull the images

docker pull apachegriffin/griffin_spark2:0.3.0

docker pull apachegriffin/elasticsearch

In any directory you like, create the Docker Compose file griffin-compose-batch.yml:

griffin:
  image: apachegriffin/griffin_spark2:0.3.0 # name of the image pulled above
  hostname: griffin # hostname set inside the container
  links:
    - es # hostname of the linked container
  environment: # environment variables
    ES_HOSTNAME: es
  volumes:
    - /var/lib/mysql
  ports: # host port : port of the component inside the container
    - 32122:2122
    - 38088:8088 # yarn rm web ui
    - 33306:3306 # mysql
    - 35432:5432 # postgres
    - 38042:8042 # yarn nm web ui
    - 39083:9083 # hive-metastore
    - 38998:8998 # livy
    - 38080:8080 # griffin ui
  tty: true
  container_name: griffin # container name
es:
  image: apachegriffin/elasticsearch
  hostname: es
  ports:
    - 39200:9200
    - 39300:9300
  container_name: es

Start the containers

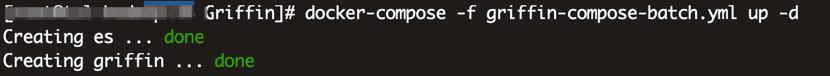

docker-compose -f griffin-compose-batch.yml up -d

After running it, you should see output showing that the containers started successfully.

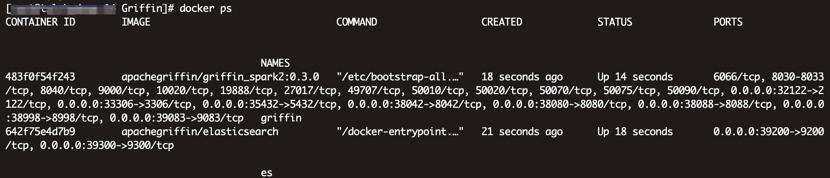

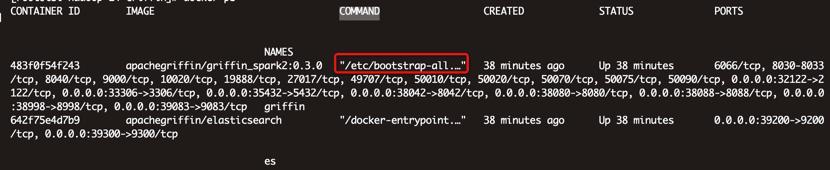

docker ps

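After running it, you should see the griffin and es containers listed.

To open a shell in the Griffin container, use docker exec -it ${your Griffin container name} bash

or docker exec -it ${the Griffin container's CONTAINER ID} bash

For example: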

docker exec -it griffin bash

This drops you into a shell inside the container.

At this point the basic Docker-based Griffin setup is complete.

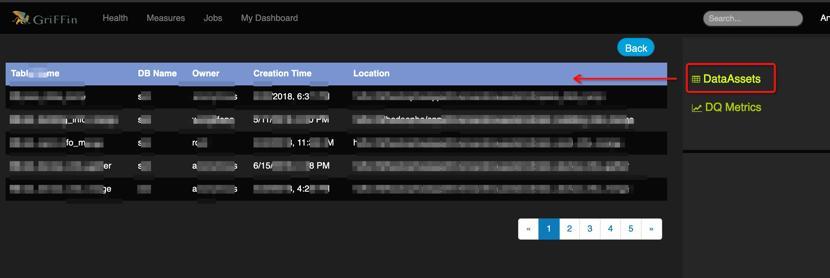

You can now open the Griffin UI in a browser by entering your server's IP and the Griffin UI port (38080 in the compose file above).

![image 5](https://image.cha138.com/20210804/1348369390eb4210896918623433c09d.jpg)
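If you are unsure which host port to type into the browser, it can be read straight from the compose file. The sketch below is one way to do that with sed. host_port_for is a hypothetical helper name, not part of Docker or Griffin, and it assumes the simple "- host:container" list form used in the file above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the host port that a compose file maps to a
# given container port. Assumes entries of the form "- 38080:8080 # comment".
# Usage: host_port_for <compose-file> <container-port>
host_port_for() {
  local file=$1 container_port=$2
  # Keep only the list item whose right-hand side is the container port,
  # and print the left-hand side (the host port).
  sed -n -E "s/^[[:space:]]*-[[:space:]]*([0-9]+):${container_port}([[:space:]].*)?\$/\1/p" "$file"
}

# Example call (run in the directory that holds the compose file):
#   host_port_for griffin-compose-batch.yml 8080
```

With the compose file from this post, that call should print 38080, so the UI address is http://<server-ip>:38080. Running docker port griffin 8080 against the live container reports the same mapping.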

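Configuring your own Hive data source or other custom components

Step 1: Inside the container, find the configuration file application.properties in the /root/service/config directory.

Step 2: Edit application.properties

Copy the file to the host, into the directory where you wrote the compose file. First leave the container: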

exit

Change to the directory that holds the compose file and run the following command to copy the file into the current directory:

docker cp griffin:/root/service/config/application.properties ./

In application.properties, change the settings for Hive and any other component you need so that they point to the IP and port you want to connect to:

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing,

# software distributed under the License is distributed on an

# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

# KIND, either express or implied. See the License for the

# specific language governing permissions and limitations

# under the License.

#

spring.datasource.url = jdbc:postgresql://griffin:5432/quartz?autoReconnect=true&useSSL=false

spring.datasource.username = griffin

spring.datasource.password = 123456

spring.jpa.generate-ddl=true

spring.datasource.driverClassName = org.postgresql.Driver

spring.jpa.show-sql = true

# Hive metastore

hive.metastore.uris = thrift://griffin:9083

hive.metastore.dbname = default

hive.hmshandler.retry.attempts = 15

hive.hmshandler.retry.interval = 2000ms

# Hive cache time

cache.evict.hive.fixedRate.in.milliseconds = 900000

# Kafka schema registry

kafka.schema.registry.url = http://griffin:8081

# Update job instance state at regular intervals

jobInstance.fixedDelay.in.milliseconds = 60000

# Expired time of job instance which is 7 days that is 604800000 milliseconds.Time unit only supports milliseconds

jobInstance.expired.milliseconds = 604800000

# schedule predicate job every 5 minutes and repeat 12 times at most

#interval time unit s:second m:minute h:hour d:day,only support these four units

predicate.job.interval = 5m

predicate.job.repeat.count = 12

# external properties directory location

external.config.location = config

# external BATCH or STREAMING env

external.env.location = config

# login strategy ("default" or "ldap")

login.strategy = default

# ldap

ldap.url = ldap://hostname:port

ldap.email = @example.com

ldap.searchBase = DC=org,DC=example

ldap.searchPattern = (sAMAccountName={0})

# hdfs

fs.defaultFS = hdfs://griffin:8020

# elasticsearch

elasticsearch.host = es

elasticsearch.port = 9200

elasticsearch.scheme = http

# elasticsearch.user = user

# elasticsearch.password = password

# livy

livy.uri=http://griffin:8998/batches

# yarn url

yarn.uri=http://griffin:8088
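Editing these keys by hand works, but the change can also be scripted so that it is repeatable. The following is a minimal sketch, assuming the file uses plain key=value lines like the listing above. set_property is a hypothetical helper, and the host names in the example calls are placeholders for your own servers.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the value of one key in a .properties file.
# Comment lines and all other keys are left untouched.
# Usage: set_property <file> <key> <value>
set_property() {
  local file=$1 key=$2 value=$3
  local tmp
  tmp=$(mktemp)
  awk -v k="$key" -v v="$value" '
    {
      # Split the line at the first "=" and compare the trimmed key name.
      pos = index($0, "=")
      name = substr($0, 1, pos - 1)
      gsub(/^[ \t]+|[ \t]+$/, "", name)
      if (pos > 0 && name == k) { print k " = " v } else { print }
    }' "$file" > "$tmp" && cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Example calls (placeholder hosts, replace with your own):
#   set_property application.properties hive.metastore.uris thrift://hive.example.internal:9083
#   set_property application.properties livy.uri http://livy.example.internal:8998/batches
```

Writing through a temporary file and then copying back keeps the original file's permissions, which matters because the file is later copied back into the container.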

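Step 3: Edit the container's startup script (the script shown in the COMMAND column of the docker ps output earlier)

Copy the bootstrap-all.sh script to the host, into the directory where you wrote the compose file: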

docker cp griffin:/etc/bootstrap-all.sh ./

Edit bootstrap-all.sh: delete the commands for every component you do not need to start inside the container, or adapt them to your own needs.

Note:

sed s/ES_HOSTNAME/$ES_HOSTNAME/ /root/service/config/application.properties.template > /root/service/config/application.properties.temp

sed s/HOSTNAME/$HOSTNAME/ /root/service/config/application.properties.temp > /root/service/config/application.properties

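These two commands must be deleted; otherwise they regenerate application.properties from its template each time the script runs and overwrite your edited file.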
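Removing the two lines can also be done non-interactively. The sketch below filters them out of the local copy of the script. drop_properties_templating is a hypothetical helper name, and the approach assumes that these two sed commands are the only lines in your copy that mention application.properties, so check the result before copying it back.

```shell
#!/usr/bin/env bash
# Hypothetical helper: remove every line that mentions application.properties
# from a local copy of bootstrap-all.sh.
# Usage: drop_properties_templating <path-to-bootstrap-all.sh>
drop_properties_templating() {
  local script=$1
  local tmp
  tmp=$(mktemp)
  # -F treats the pattern as a fixed string, -v keeps the non-matching lines.
  grep -vF 'application.properties' "$script" > "$tmp"
  cat "$tmp" > "$script"
  rm -f "$tmp"
}

# Example call (run in the directory that holds the copied script):
#   drop_properties_templating bootstrap-all.sh
#   grep -n 'application.properties' bootstrap-all.sh   # should print nothing
```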

Here is an example:

#!/bin/bash

$HADOOP_HOME/etc/hadoop/hadoop-env.sh

rm /apache/pids/*

cd $HADOOP_HOME/share/hadoop/common ; for cp in ${ACP//,/ }; do echo == $cp; curl -LO $cp ; done; cd -

find /var/lib/mysql -type f -exec touch {} \; && service mysql start

/etc/init.d/postgresql start

sed s/HOSTNAME/$HOSTNAME/ $HADOOP_HOME/etc/hadoop/core-site.xml.template > $HADOOP_HOME/etc/hadoop/core-site.xml

sed s/HOSTNAME/$HOSTNAME/ $HADOOP_HOME/etc/hadoop/yarn-site.xml.template > $HADOOP_HOME/etc/hadoop/yarn-site.xml

sed s/HOSTNAME/$HOSTNAME/ $HADOOP_HOME/etc/hadoop/mapred-site.xml.template > $HADOOP_HOME/etc/hadoop/mapred-site.xml

/etc/init.d/ssh start

sleep 5

$HADOOP_HOME/bin/hdfs dfsadmin -safemode leave

sed s/ES_HOSTNAME/$ES_HOSTNAME/ json/env.json.template > json/env.json.temp

sed s/ZK_HOSTNAME/$ZK_HOSTNAME/ json/env.json.temp > json/env.json

rm json/env.json.temp

hadoop fs -put json/env.json /griffin/json/

sed s/KAFKA_HOSTNAME/$KAFKA_HOSTNAME/ json/streaming-accu-config.json.template > json/streaming-accu-config.json

sed s/KAFKA_HOSTNAME/$KAFKA_HOSTNAME/ json/streaming-prof-config.json.template > json/streaming-prof-config.json

hadoop fs -put json/streaming-accu-config.json /griffin/json/

hadoop fs -put json/streaming-prof-config.json /griffin/json/

#data prepare

sleep 5

cd /root

#start service

sed s/ES_HOSTNAME/$ES_HOSTNAME/ /root/service/config/env_batch.json.template > /root/service/config/env_batch.json

sed s/ES_HOSTNAME/$ES_HOSTNAME/ /root/service/config/env_streaming.json.template > /root/service/config/env_streaming.json.temp

sed s/ZK_HOSTNAME/$ZK_HOSTNAME/ /root/service/config/env_streaming.json.temp > /root/service/config/env_streaming.json

rm /root/service/config/*.temp

cd /root/service

nohup java -jar -Xmx1500m -Xms1500m service.jar > service.log &

cd /root

Step 5: Replace the original files in the container with the edited application.properties and bootstrap-all.sh.

Copy each edited file into the container with the following commands:

docker cp application.properties griffin:/root/service/config/

docker cp bootstrap-all.sh griffin:/etc

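Step 6: Update the compose file griffin-compose-batch.yml to match the components you changed

I am not using the YARN, Hive, or Livy inside the container, so I deleted those port mappings; trim the list to suit your own setup.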
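As an illustration of that cleanup, the sketch below removes the mappings for chosen container ports from the compose file. drop_port_mappings is a hypothetical helper, and it assumes each mapping sits on its own "- host:container" line as in the file shown earlier. The ports in the example call are the YARN (8088, 8042), Hive metastore (9083), and Livy (8998) entries from that file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: delete the "host:container" port mappings for the
# given container ports from a compose file.
# Usage: drop_port_mappings <compose-file> <container-port>...
drop_port_mappings() {
  local file=$1
  shift
  local port tmp
  for port in "$@"; do
    tmp=$(mktemp)
    # Drop list items such as "- 38088:8088 # yarn rm web ui".
    grep -vE "^[[:space:]]*-[[:space:]]*[0-9]+:${port}([[:space:]]|\$)" "$file" > "$tmp"
    cat "$tmp" > "$file"
    rm -f "$tmp"
  done
}

# Example call (run in the directory that holds the compose file):
#   drop_port_mappings griffin-compose-batch.yml 8088 8042 9083 8998
```

Note that docker restart does not re-read the compose file; changed port mappings only take effect when the container is recreated, for example by running docker-compose -f griffin-compose-batch.yml up -d again.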

Step 7: Restart the container

docker restart griffin

You can now open the Griffin UI in a browser at your server's IP and the Griffin UI port, and the data source shown should be the Hive you configured.
