SparkSql报错——记java.io.IOException: Illegal type id 0. The valid range is 0 to -1报错

Posted 扫地增

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了SparkSql报错——记java.io.IOException: Illegal type id 0. The valid range is 0 to -1报错相关的知识,希望对你有一定的参考价值。

报错现象

21/07/03 09:44:02 WARN scheduler.TaskSetManager: Lost task 40.0 in stage 1.0 (TID 101, bj00-a-080-024-bdy.iyunxiao.com, executor 2): java.io.IOException: Illegal type id 0. The valid range is 0 to -1

at org.apache.orc.OrcUtils.isValidTypeTree(OrcUtils.java:465)

at org.apache.orc.impl.ReaderImpl.<init>(ReaderImpl.java:387)

at org.apache.orc.OrcFile.createReader(OrcFile.java:342)

at org.apache.spark.sql.execution.datasources.orc.OrcFileFormat.$anonfun$buildReaderWithPartitionValues$2(OrcFileFormat.scala:181)

at org.apache.spark.sql.execution.datasources.FileScanRDD$$anon$1.org$apache$spark$sql$execution$datasources$FileScanRDD$$anon$$readCurrentFile(FileScanRDD.scala:124)

at org.apache.spark.sql.execution.datasources.FileScanRDD$$anon$1.nextIterator(FileScanRDD.scala:177)

at org.apache.spark.sql.execution.datasources.FileScanRDD$$anon$1.hasNext(FileScanRDD.scala:101)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage1.scan_nextBatch_0$(Unknown Source)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage1.processNext(Unknown Source)

at org.apache.spark.sql.execution.BufferedRowIterator.hasNext(BufferedRowIterator.java:43)

at org.apache.spark.sql.execution.WholeStageCodegenExec$$anon$2.hasNext(WholeStageCodegenExec.scala:636)

at scala.collection.Iterator$$anon$10.hasNext(Iterator.scala:458)

at org.apache.spark.shuffle.sort.UnsafeShuffleWriter.write(UnsafeShuffleWriter.java:187)

at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:99)

at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:55)

at org.apache.spark.scheduler.Task.run(Task.scala:121)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:411)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

21/07/03 09:44:02 ERROR scheduler.TaskSetManager: Task 40 in stage 1.0 failed 4 times; aborting job

21/07/03 09:44:02 WARN scheduler.TaskSetManager: Lost task 33.0 in stage 1.0 (TID 100, bj00-a-080-024-bdy.iyunxiao.com, executor 2): TaskKilled (Stage cancelled)

21/07/03 09:44:02 ERROR datasources.FileFormatWriter: Aborting job 1ac0178a-a516-4037-b798-30e60597f24c.

org.apache.spark.SparkException: Job aborted due to stage failure: Task 40 in stage 1.0 failed 4 times, most recent failure: Lost task 40.3 in stage 1.0 (TID 107, bj00-a-080-024-bdy.iyunxiao.com, executor 2): java.io.IOException: Illegal type id 0. The valid range is 0 to -1

at org.apache.orc.OrcUtils.isValidTypeTree(OrcUtils.java:465)

at org.apache.orc.impl.ReaderImpl.<init>(ReaderImpl.java:387)

at org.apache.orc.OrcFile.createReader(OrcFile.java:342)

at org.apache.spark.sql.execution.datasources.orc.OrcFileFormat.$anonfun$buildReaderWithPartitionValues$2(OrcFileFormat.scala:181)

at org.apache.spark.sql.execution.datasources.FileScanRDD$$anon$1.org$apache$spark$sql$execution$datasources$FileScanRDD$$anon$$readCurrentFile(FileScanRDD.scala:124)

at org.apache.spark.sql.execution.datasources.FileScanRDD$$anon$1.nextIterator(FileScanRDD.scala:177)

at org.apache.spark.sql.execution.datasources.FileScanRDD$$anon$1.hasNext(FileScanRDD.scala:101)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage1.scan_nextBatch_0$(Unknown Source)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage1.processNext(Unknown Source)

at org.apache.spark.sql.execution.BufferedRowIterator.hasNext(BufferedRowIterator.java:43)

at org.apache.spark.sql.execution.WholeStageCodegenExec$$anon$2.hasNext(WholeStageCodegenExec.scala:636)

at scala.collection.Iterator$$anon$10.hasNext(Iterator.scala:458)

at org.apache.spark.shuffle.sort.UnsafeShuffleWriter.write(UnsafeShuffleWriter.java:187)

at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:99)

at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:55)

at org.apache.spark.scheduler.Task.run(Task.scala:121)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:411)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Driver stacktrace:

at org.apache.spark.scheduler.DAGScheduler.failJobAndIndependentStages(DAGScheduler.scala:1889)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$abortStage$2(DAGScheduler.scala:1877)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$abortStage$2$adapted(DAGScheduler.scala:1876)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:1876)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$handleTaskSetFailed$1(DAGScheduler.scala:926)

at org.apache.spark.scheduler.DAGScheduler.$anonfun$handleTaskSetFailed$1$adapted(DAGScheduler.scala:926)

at scala.Option.foreach(Option.scala:274)

at org.apache.spark.scheduler.DAGScheduler.handleTaskSetFailed(DAGScheduler.scala:926)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2110)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2059)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2048)

at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:49)

at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:737)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:2061)

at org.apache.spark.sql.execution.datasources.FileFormatWriter$.write(FileFormatWriter.scala:167)

at org.apache.spark.sql.hive.execution.SaveAsHiveFile.saveAsHiveFile(SaveAsHiveFile.scala:97)

at org.apache.spark.sql.hive.execution.SaveAsHiveFile.saveAsHiveFile$(SaveAsHiveFile.scala:48)

at org.apache.spark.sql.hive.execution.InsertIntoHiveTable.saveAsHiveFile(InsertIntoHiveTable.scala:66)

at org.apache.spark.sql.hive.execution.InsertIntoHiveTable.processInsert(InsertIntoHiveTable.scala:201)

at org.apache.spark.sql.hive.execution.InsertIntoHiveTable.run(InsertIntoHiveTable.scala:99)

at org.apache.spark.sql.execution.command.DataWritingCommandExec.sideEffectResult$lzycompute(commands.scala:104)

at org.apache.spark.sql.execution.command.DataWritingCommandExec.sideEffectResult(commands.scala:102)

at org.apache.spark.sql.execution.command.DataWritingCommandExec.executeCollect(commands.scala:115)

at org.apache.spark.sql.Dataset.$anonfun$logicalPlan$1(Dataset.scala:194)

at org.apache.spark.sql.Dataset.$anonfun$withAction$2(Dataset.scala:3364)

at org.apache.spark.sql.execution.SQLExecution$.$anonfun$withNewExecutionId$1(SQLExecution.scala:78)

at org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:125)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:73)

at org.apache.spark.sql.Dataset.withAction(Dataset.scala:3364)

at org.apache.spark.sql.Dataset.<init>(Dataset.scala:194)

at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:79)

at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:642)

at org.iyunxiao.bd.dw.hive.Hive2HiveServiceImpl.save(Hive2HiveServiceImpl.java:44)

at org.iyunxiao.bd.SparkSubmitter.main(SparkSubmitter.java:56)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$2.run(ApplicationMaster.scala:684)

Caused by: java.io.IOException: Illegal type id 0. The valid range is 0 to -1

at org.apache.orc.OrcUtils.isValidTypeTree(OrcUtils.java:465)

at org.apache.orc.impl.ReaderImpl.<init>(ReaderImpl.java:387)

at org.apache.orc.OrcFile.createReader(OrcFile.java:342)

at org.apache.spark.sql.execution.datasources.orc.OrcFileFormat.$anonfun$buildReaderWithPartitionValues$2(OrcFileFormat.scala:181)

at org.apache.spark.sql.execution.datasources.FileScanRDD$$anon$1.org$apache$spark$sql$execution$datasources$FileScanRDD$$anon$$readCurrentFile(FileScanRDD.scala:124)

at org.apache.spark.sql.execution.datasources.FileScanRDD$$anon$1.nextIterator(FileScanRDD.scala:177)

at org.apache.spark.sql.execution.datasources.FileScanRDD$$anon$1.hasNext(FileScanRDD.scala:101)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage1.scan_nextBatch_0$(Unknown Source)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIteratorForCodegenStage1.processNext(Unknown Source)

at org.apache.spark.sql.execution.BufferedRowIterator.hasNext(BufferedRowIterator.java:43)

at org.apache.spark.sql.execution.WholeStageCodegenExec$$anon$2.hasNext(WholeStageCodegenExec.scala:636)

at scala.collection.Iterator$$anon$10.hasNext(Iterator.scala:458)

at org.apache.spark.shuffle.sort.UnsafeShuffleWriter.write(UnsafeShuffleWriter.java:187)

at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:99)

at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:55)

at org.apache.spark.scheduler.Task.run(Task.scala:121)

at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:411)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

原因分析

看报错是由于Illegal type id 0. The valid range is 0 to -1。大概意思是id的超过了有效范围。这个时候我们去代码中排查发现数据原表中DataX抽取数据的例行任务失败,生成了脏数据分区,然后调度任务重试成功。而日志事实表在清洗时跨多个表分区,

spark-sql> dfs -du -h /bd/ods/xxx/ods_xxx_advertisement_xxx/;

....

/bd/ods/xxx/ods_xxx_advertisement_xxx/date_key=2021-07-02

/bd/ods/xxx/ods_xxx_advertisement_xxx/date_key=2021-07-02__f76d8c59_6952_46b9_a78e_667dc6ac9578

/bd/ods/xxx/ods_xxx_advertisement_xxx/date_key=2021-07-03

/bd/ods/xxx/ods_xxx_advertisement_xxx/date_key=2021-07-03__320cf8d9_7f5c_4f42_9634_68f15d94e151

为了方便大家理解我们说明任务报错的日期为2021-07-02和2021-07-03,而在任务中使用了where date_key >= date_sub(current_date,1),这就使得在查询是查询了失败任务生成的脏数据分区,而在脏数据分区中的生成数据问文件中的数据并不完整,最终造成了任务失败。

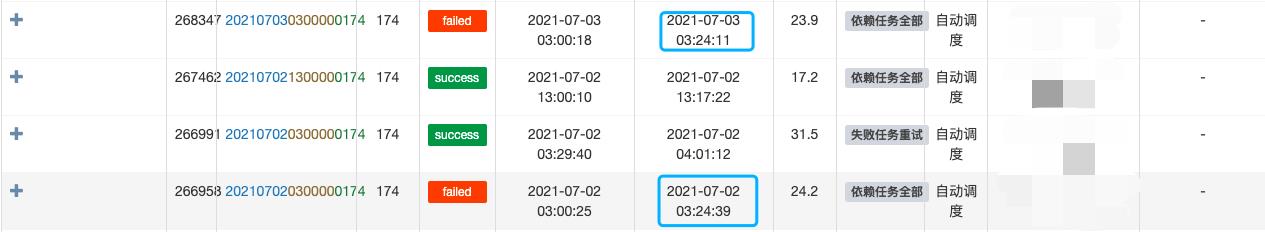

其实这个时候我们应该向下追这个问题。发现hera任务在每日3:24~3:25任务就会失败。

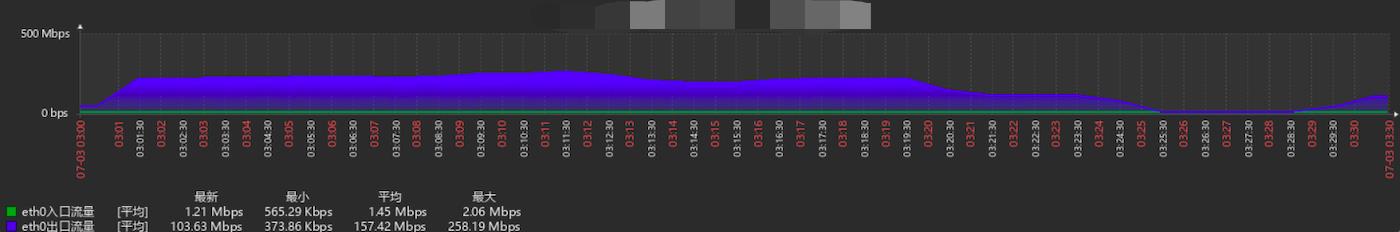

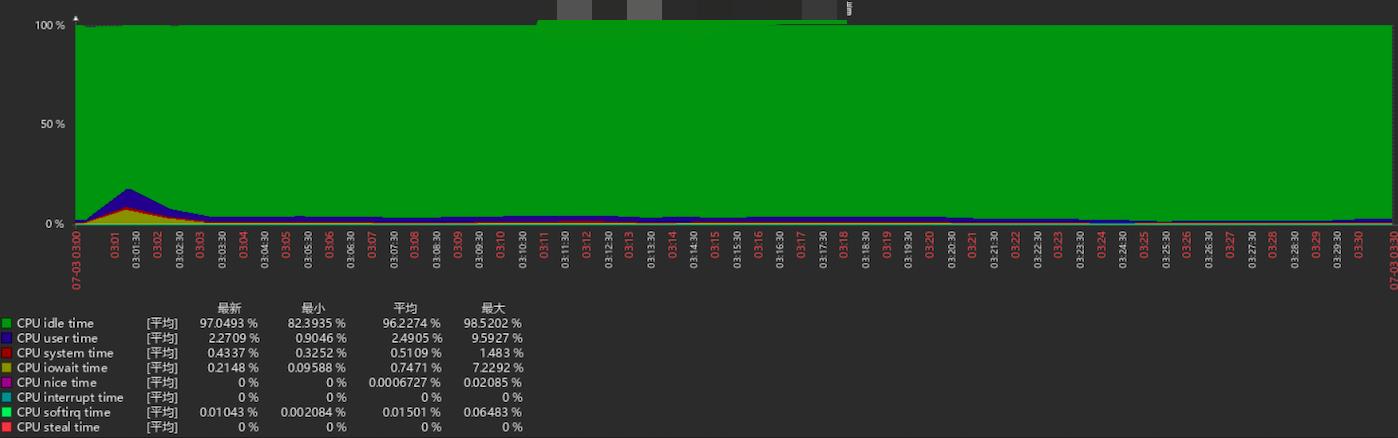

我们通过Zabbix查看源数据库服务器的网络IO和CPU进行了研究:

我们发现该服务器在3:24~3:25流量会被急速降下来,导致任务失败,运维排查服务后未找到什么解决方案,我们无奈只好调整了任务的调度时间。避开了该时间段。

解决方法

-- 1.删掉脏数据分区:

spark-sql> dfs -rm -r -skipTrash /bd/ods/xxx/ods_xxx_advertisement_xxx/date_key=2021-07-02__f76d8c59_6952_46b9_a78e_667dc6ac9578;

spark-sql> dfs -rm -r -skipTrash /bd/ods/xxx/ods_xxx_advertisement_xxx/date_key=2021-07-03__320cf8d9_7f5c_4f42_9634_68f15d94e151;

-- 2.刷新分区

msck repair table ods_xxx.ods_xxx_advertisement_xxx;

经过上述操作任务执行正常了。

以上是关于SparkSql报错——记java.io.IOException: Illegal type id 0. The valid range is 0 to -1报错的主要内容,如果未能解决你的问题,请参考以下文章