ResNet Residual Network: A Usage Example

Posted by qianbo_insist

The ResNet network

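ResNet is short for Residual Network. This family of networks is widely used for image classification and as the backbone of classic neural networks for other computer vision tasks; typical members are resnet50 and resnet101. ResNet showed that networks can be made much deeper (with more hidden layers). This post is adapted from the learnopencv tutorial TensorFlow-Fully-Convolutional-Image-Classification and uses TensorFlow 2.1 or later. The original tutorial downloads the pre-trained model at run time; here the code is changed to load the model directly from a local file.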
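To make the idea of a residual connection concrete, here is a minimal NumPy sketch. It is not taken from the tutorial: the function `residual_block` and the tiny weight matrices are hypothetical, and the block is a simplified stand-in for the convolutional blocks used later in this post. It only illustrates the pattern y = relu(F(x) + x).

```python
import numpy as np


def relu(v):
    return np.maximum(v, 0.0)


def residual_block(x, w1, w2):
    """Toy residual block: y = relu(F(x) + x), with F(x) = relu(x @ w1) @ w2."""
    fx = relu(x @ w1) @ w2   # the residual branch F(x)
    return relu(fx + x)      # add the identity shortcut, then activate


x = np.array([[1.0, 2.0, 3.0]])
zeros = np.zeros((3, 3))

# With an all-zero residual branch the block returns its input unchanged.
y = residual_block(x, zeros, zeros)
print(y)
```

When the residual branch contributes nothing, the block passes its non-negative input through unchanged. This is the intuition usually given for why stacking many such blocks does not make a network harder to train.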

Using an already-trained model

resnet50_weights_tf_dim_ordering_tf_kernels_notop.h5 can be downloaded directly from https://storage.googleapis.com/tensorflow/keras-applications/resnet/
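Because the script in this post loads the weights from a local file, it can help to check that the file is present and intact before building the model. The sketch below is an assumption-laden helper, not part of the tutorial: `md5_of_file` and `weights_ready` are hypothetical names, and it assumes that the hash listed in WEIGHTS_HASHES in utils.py is the MD5 digest of the no-top weights file.

```python
import hashlib
from pathlib import Path

BASE_WEIGHTS_PATH = "https://storage.googleapis.com/tensorflow/keras-applications/resnet/"
FILE_NAME = "resnet50_weights_tf_dim_ordering_tf_kernels_notop.h5"
# Assumption: this value (copied from WEIGHTS_HASHES in utils.py) is an MD5 digest.
EXPECTED_MD5 = "4d473c1dd8becc155b73f8504c6f6626"


def md5_of_file(path, chunk_size=1 << 20):
    """Compute the MD5 digest of a file, reading it in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def weights_ready(path=FILE_NAME, expected_md5=EXPECTED_MD5):
    """Return True if the file exists locally and its digest matches."""
    p = Path(path)
    return p.is_file() and md5_of_file(p) == expected_md5


print("Download URL:", BASE_WEIGHTS_PATH + FILE_NAME)
print("Local weights OK:", weights_ready())
```

If the check fails, download the file from the URL printed above and place it in the working directory of the script.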


Results

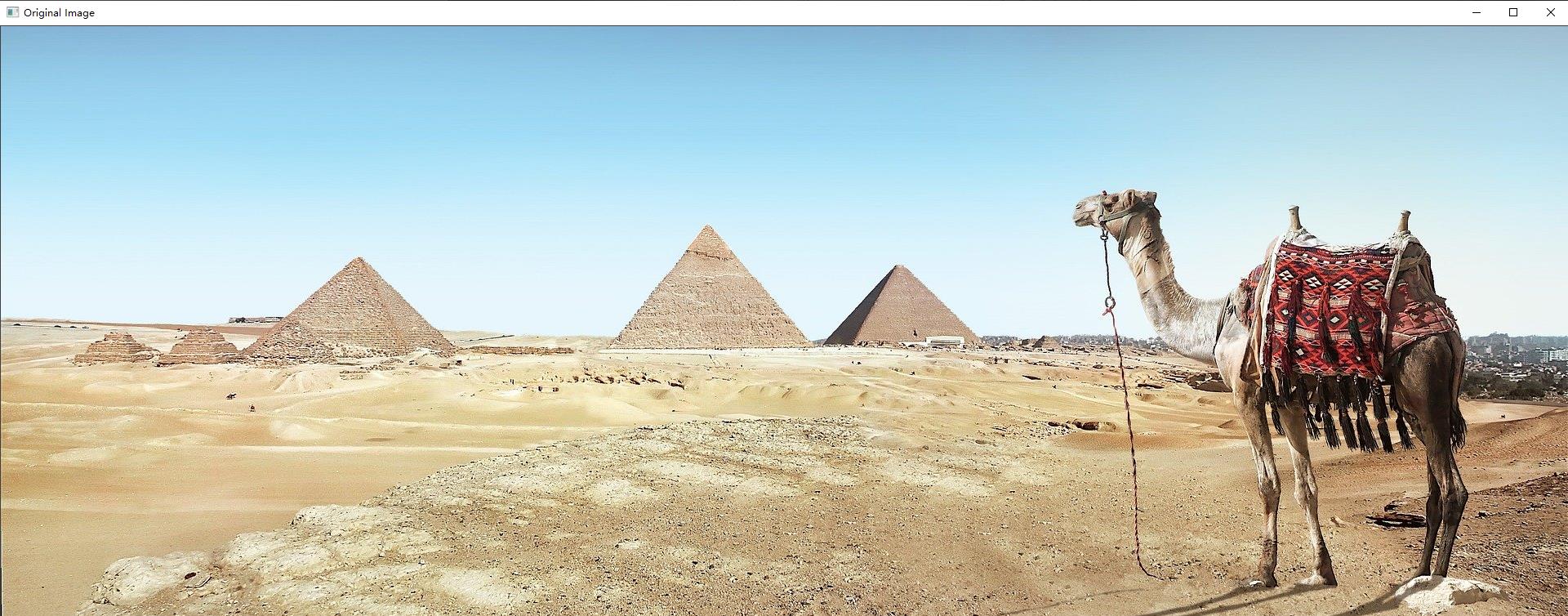

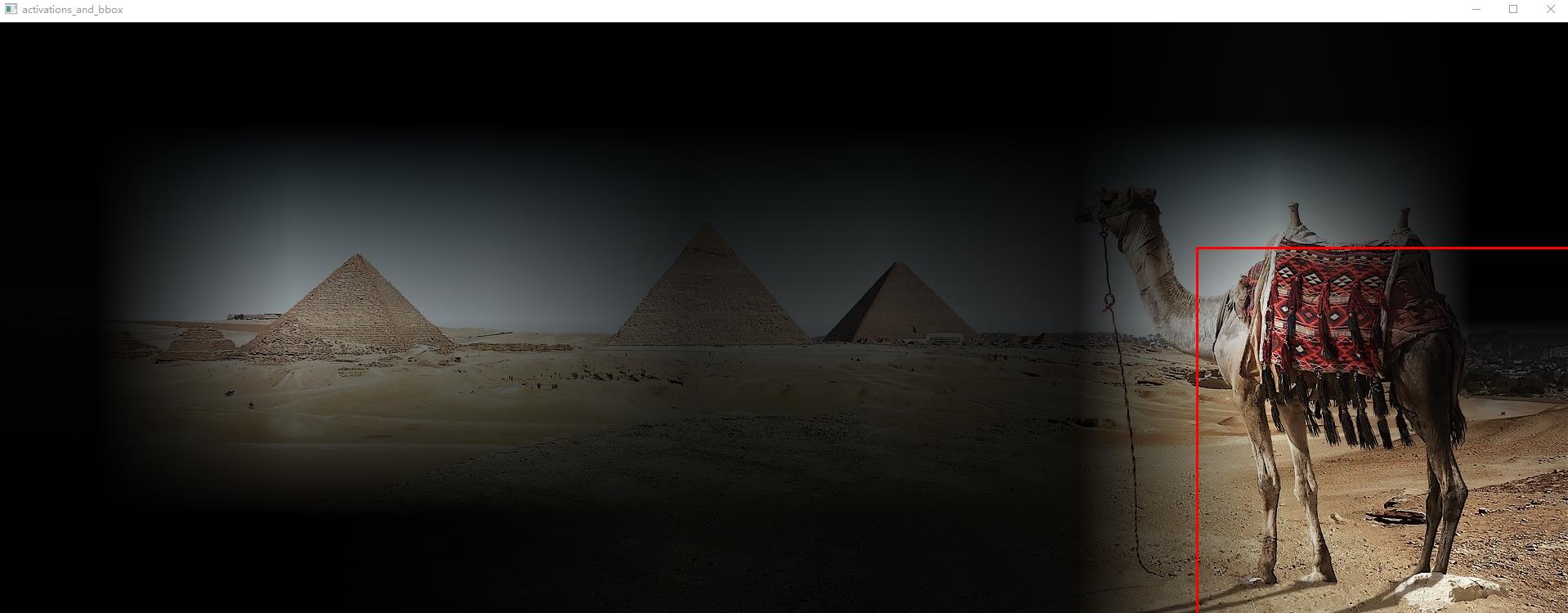

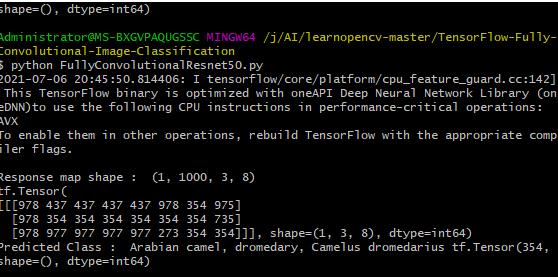

The model detects an Arabian camel (dromedary).

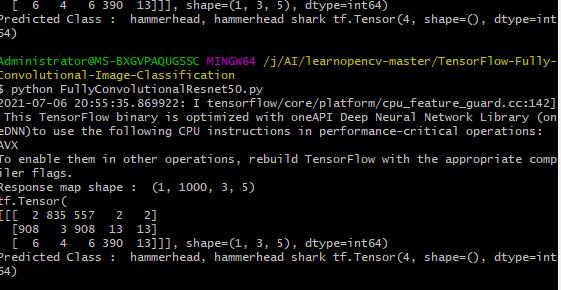

Here the model detects a tiger shark.

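Other notes

In convolution layers, padding="SAME" is the usual way to pad with zeros, but it is not always flexible enough. When you want to control the zero padding yourself, you can use tf.keras.layers.ZeroPadding2D.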
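The difference shows up in the first layer of the model in this post, which pads 3 pixels on every side and then applies a 7×7 convolution with stride 2 and no further padding. The pure-Python sketch below compares that with what TensorFlow-style SAME padding would do for a 224-pixel axis. The helper names `same_padding` and `explicit_padding_out` are hypothetical and only reproduce the padding arithmetic; they do not call TensorFlow.

```python
import math


def same_padding(size, kernel, stride):
    """Return (pad_before, pad_after, out_size) for TensorFlow-style SAME padding."""
    out_size = math.ceil(size / stride)
    total = max((out_size - 1) * stride + kernel - size, 0)
    before = total // 2          # any odd leftover pixel goes to the end
    return before, total - before, out_size


def explicit_padding_out(size, kernel, stride, pad_before, pad_after):
    """Output size of an unpadded (VALID) convolution after explicit zero padding."""
    return (size + pad_before + pad_after - kernel) // stride + 1


print(same_padding(224, 7, 2))                 # (2, 3, 112)
print(explicit_padding_out(224, 7, 2, 3, 3))   # 112
```

Both variants give 112 output positions, but SAME pads 2 pixels before and 3 after, while the explicit padding is a symmetric 3 and 3. The sampling grid is therefore shifted, which matters when loading weights that were trained with the explicit layout.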

import cv2
import numpy as np
import tensorflow as tf
from tensorflow.keras import Input
from tensorflow.keras.applications import ResNet50
from tensorflow.keras.applications.resnet import preprocess_input
from tensorflow.keras.layers import (
    Activation,
    AveragePooling2D,
    BatchNormalization,
    Conv2D,
    MaxPooling2D,
    ZeroPadding2D,
)
# private TensorFlow modules, as used by the original tutorial
from tensorflow.python.keras.engine import training
from tensorflow.python.keras.utils import data_utils

from utils import (
    BASE_WEIGHTS_PATH,
    WEIGHTS_HASHES,
    stack1,
)

# set the FC-layer weights on the final convolutional layer
def set_conv_weights(model, feature_extractor):
    # get pre-trained ResNet50 FC weights
    dense_layer_weights = feature_extractor.layers[-1].get_weights()
    weights_list = [
        # reshape the dense kernel (C, K) into a 1×1 conv kernel (1, 1, C, K)
        tf.reshape(
            dense_layer_weights[0], (1, 1, *dense_layer_weights[0].shape),
        ).numpy(),
        dense_layer_weights[1],
    ]
    model.get_layer(name="last_conv").set_weights(weights_list)
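The reshape in set_conv_weights works because a dense layer applied to every spatial position is the same computation as a 1×1 convolution. The following NumPy sketch checks this on small random arrays. The sizes (8 channels, 5 classes) are illustrative stand-ins for the real layer sizes, and the 1×1 convolution is written with `np.einsum` instead of a Keras layer.

```python
import numpy as np

rng = np.random.default_rng(0)
channels, classes = 8, 5   # small stand-ins for the real layer sizes

dense_kernel = rng.normal(size=(channels, classes))
dense_bias = rng.normal(size=(classes,))

# Same reshape as in set_conv_weights: (C, K) -> (1, 1, C, K)
conv_kernel = dense_kernel.reshape(1, 1, channels, classes)

features = rng.normal(size=(1, 3, 4, channels))   # NHWC feature map

# 1x1 convolution: contract the channel axis at every spatial position
conv_out = np.einsum("nhwc,ijck->nhwk", features, conv_kernel) + dense_bias

# Dense layer applied to the feature vector of each position
dense_out = features.reshape(-1, channels) @ dense_kernel + dense_bias
dense_out = dense_out.reshape(1, 3, 4, classes)

print(np.allclose(conv_out, dense_out))
```

This is why the classifier weights of the standard ResNet50 can be reused unchanged: the convolutional head produces one class vector per spatial position instead of one per image.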

def fully_convolutional_resnet50(
    input_shape, num_classes=1000, pretrained_resnet=True, use_bias=True,
):
    # init input layer
    img_input = Input(shape=input_shape)

    # define basic model pipeline
    x = ZeroPadding2D(padding=((3, 3), (3, 3)), name="conv1_pad")(img_input)
    x = Conv2D(64, 7, strides=2, use_bias=use_bias, name="conv1_conv")(x)
    x = BatchNormalization(axis=3, epsilon=1.001e-5, name="conv1_bn")(x)
    x = Activation("relu", name="conv1_relu")(x)

    x = ZeroPadding2D(padding=((1, 1), (1, 1)), name="pool1_pad")(x)
    x = MaxPooling2D(3, strides=2, name="pool1_pool")(x)

    # the sequence of stacked residual blocks
    x = stack1(x, 64, 3, stride1=1, name="conv2")
    x = stack1(x, 128, 4, name="conv3")
    x = stack1(x, 256, 6, name="conv4")
    x = stack1(x, 512, 3, name="conv5")

    # add avg pooling layer after feature extraction layers
    x = AveragePooling2D(pool_size=7)(x)

    # add final convolutional layer
    conv_layer_final = Conv2D(
        filters=num_classes, kernel_size=1, use_bias=use_bias, name="last_conv",
    )(x)

    # configure fully convolutional ResNet50 model (without the final layer,
    # so that it matches the layout of the "notop" weights file)
    model = training.Model(img_input, x)

    # load model weights
    if pretrained_resnet:
        model_name = "resnet50"

        # configure full file name
        file_name = model_name + "_weights_tf_dim_ordering_tf_kernels_notop.h5"

        # The original tutorial downloads the weights; that code is kept
        # here for reference but disabled.
        # file_hash = WEIGHTS_HASHES[model_name]
        # weights_path = data_utils.get_file(
        #     file_name,
        #     BASE_WEIGHTS_PATH + file_name,
        #     cache_subdir="models",
        #     file_hash=file_hash,
        # )

        # load the weights from the local file in the working directory
        model.load_weights(file_name)

    # form final model
    model = training.Model(inputs=model.input, outputs=[conv_layer_final])

    if pretrained_resnet:
        # get model with the dense layer for further FC weights extraction
        resnet50_extractor = ResNet50(
            include_top=True, weights="imagenet", classes=num_classes,
        )

        # set ResNet50 FC-layer weights to final convolutional layer
        set_conv_weights(model=model, feature_extractor=resnet50_extractor)

    return model
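Since the model has no fixed input size, the spatial size of its output depends on the image. The helper below is a rough sketch that estimates that size from the layer layout of fully_convolutional_resnet50. The function name `response_map_size` is hypothetical, and the arithmetic assumes five stride-2 stages (conv1_conv, pool1_pool, conv3, conv4, conv5) followed by average pooling with a window and stride of 7.

```python
def response_map_size(height, width, pool_size=7):
    """Estimate the (n, m) size of the response map for an input image."""

    def halve(size):
        # With the padding used in this model, each stride-2 stage maps
        # size -> (size - 1) // 2 + 1.
        return (size - 1) // 2 + 1

    def along_axis(size):
        for _ in range(5):   # conv1_conv, pool1_pool, conv3, conv4, conv5
            size = halve(size)
        if size < pool_size:
            raise ValueError("input is too small for the average-pooling window")
        # unpadded pooling whose stride equals the window size
        return (size - pool_size) // pool_size + 1

    return along_axis(height), along_axis(width)


print(response_map_size(224, 224))   # (1, 1)
print(response_map_size(448, 672))   # (2, 3)
```

A 224×224 input gives a single position, which corresponds to the ordinary classifier. Larger images give a grid of predictions, one for each pooled region of the feature map.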

if __name__ == "__main__":
    # read ImageNet class ids to a list of labels
    with open("imagenet_classes.txt") as f:
        labels = [line.strip() for line in f.readlines()]

    # read image
    original_image = cv2.imread("camel.jpg")

    # convert image to the RGB format
    image = cv2.cvtColor(original_image, cv2.COLOR_BGR2RGB)

    # pre-process image
    image = preprocess_input(image)

    # add a batch dimension; the tensor is now in NHWC layout
    image = tf.expand_dims(image, 0)

    # load modified resnet50 model with pre-trained ImageNet weights
    model = fully_convolutional_resnet50(input_shape=(image.shape[-3:]))

    # Perform inference.
    # Instead of a 1×1000 vector, the model returns a 1×n×m×1000 tensor,
    # which is transposed below to 1×1000×n×m (i.e. a probability map
    # of size n × m for each of the 1000 classes,
    # where n and m depend on the size of the image).
    preds = model.predict(image)
    preds = tf.transpose(preds, perm=[0, 3, 1, 2])
    preds = tf.nn.softmax(preds, axis=1)
    print("Response map shape : ", preds.shape)

    # find the class with the maximum score in the n × m output map
    pred = tf.math.reduce_max(preds, axis=1)
    class_idx = tf.math.argmax(preds, axis=1)
    print(class_idx)

    row_max = tf.math.reduce_max(pred, axis=1)
    row_idx = tf.math.argmax(pred, axis=1)
    col_idx = tf.math.argmax(row_max, axis=1)

    predicted_class = tf.gather_nd(
        class_idx, (0, tf.gather_nd(row_idx, (0, col_idx[0])), col_idx[0]),
    )

    # print top predicted class
    print("Predicted Class : ", labels[predicted_class], predicted_class)

    # find the n × m score map for the predicted class
    score_map = tf.expand_dims(preds[0, predicted_class, :, :], 0).numpy()
    score_map = score_map[0]

    # resize score map to the original image size
    score_map = cv2.resize(
        score_map, (original_image.shape[1], original_image.shape[0]),
    )

    # binarize score map
    _, score_map_for_contours = cv2.threshold(
        score_map, 0.65, 1, type=cv2.THRESH_BINARY,
    )
    score_map_for_contours = score_map_for_contours.astype(np.uint8).copy()

    # find the contour of the binary blob
    contours, _ = cv2.findContours(
        score_map_for_contours, mode=cv2.RETR_EXTERNAL, method=cv2.CHAIN_APPROX_SIMPLE,
    )

    # find bounding box around the object
    rect = cv2.boundingRect(contours[0])

    # apply score map as a mask to original image
    score_map = score_map - np.min(score_map[:])
    score_map = score_map / np.max(score_map[:])
    score_map = cv2.cvtColor(score_map, cv2.COLOR_GRAY2BGR)
    masked_image = (original_image * score_map).astype(np.uint8)

    # display bounding box
    cv2.rectangle(
        masked_image, rect[:2], (rect[0] + rect[2], rect[1] + rect[3]), (0, 0, 255), 2,
    )

    # display images
    cv2.imshow("Original Image", original_image)
    cv2.imshow("scaled_score_map", score_map)
    cv2.imshow("activations_and_bbox", masked_image)
    cv2.waitKey(0)
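The chain of reduce_max, argmax and gather_nd calls in the script selects the class and grid cell with the highest probability in the whole response map. As a cross-check, the same result can be obtained with a single global argmax. The NumPy sketch below is an alternative formulation, not the tutorial's code; `top_class_and_location` is a hypothetical helper and the small array stands in for preds[0].

```python
import numpy as np


def top_class_and_location(probs):
    """Return (class, row, col) of the largest entry.

    probs has shape (num_classes, n, m) and holds per-location class
    probabilities.
    """
    flat_index = np.argmax(probs)
    class_idx, row, col = np.unravel_index(flat_index, probs.shape)
    return int(class_idx), int(row), int(col)


# toy response map: 4 classes on a 2 x 3 grid
probs = np.zeros((4, 2, 3))
probs[2, 1, 0] = 0.9
probs[1, 0, 2] = 0.5

print(top_class_and_location(probs))   # (2, 1, 0)
```

The two formulations agree whenever the maximum is unique; with ties, the selected cell may differ.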

Here is utils.py:

from tensorflow.keras.layers import (
    Activation,
    Add,
    BatchNormalization,
    Conv2D,
)

# https://github.com/tensorflow/tensorflow/blob/2b96f3662bd776e277f86997659e61046b56c315/tensorflow/python/keras/applications/resnet.py#L32
BASE_WEIGHTS_PATH = (
    "https://storage.googleapis.com/tensorflow/keras-applications/resnet/"
)
WEIGHTS_HASHES = {
    "resnet50": "4d473c1dd8becc155b73f8504c6f6626",
}

# https://github.com/tensorflow/tensorflow/blob/2b96f3662bd776e277f86997659e61046b56c315/tensorflow/python/keras/applications/resnet.py#L262
def stack1(x, filters, blocks, stride1=2, name=None):
    """
    A set of stacked residual blocks.

    Arguments:
      x: input tensor.
      filters: integer, filters of the bottleneck layer in a block.
      blocks: integer, blocks in the stacked blocks.
      stride1: default 2, stride of the first layer in the first block.
      name: string, stack label.

    Returns:
      Output tensor for the stacked blocks.
    """
    x = block1(x, filters, stride=stride1, name=name + "_block1")
    for i in range(2, blocks + 1):
        x = block1(x, filters, conv_shortcut=False, name=name + "_block" + str(i))
    return x

# https://github.com/tensorflow/tensorflow/blob/2b96f3662bd776e277f86997659e61046b56c315/tensorflow/python/keras/applications/resnet.py#L217
def block1(x, filters, kernel_size=3, stride=1, conv_shortcut=True, name=None):
    """
    A residual block.

    Arguments:
      x: input tensor.
      filters: integer, filters of the bottleneck layer.
      kernel_size: default 3, kernel size of the bottleneck layer.
      stride: default 1, stride of the first layer.
      conv_shortcut: default True, use convolution shortcut if True,
        otherwise identity shortcut.
      name: string, block label.

    Returns:
      Output tensor for the residual block.
    """
    # channels_last format
    bn_axis = 3

    if conv_shortcut:
        shortcut = Conv2D(4 * filters, 1, strides=stride, name=name + "_0_conv")(x)
        shortcut = BatchNormalization(
            axis=bn_axis, epsilon=1.001e-5, name=name + "_0_bn",
        )(shortcut)
    else:
        shortcut = x

    x = Conv2D(filters, 1, strides=stride, name=name + "_1_conv")(x)
    x = BatchNormalization(axis=bn_axis, epsilon=1.001e-5, name=name + "_1_bn")(x)
    x = Activation("relu", name=name + "_1_relu")(x)

    x = Conv2D(filters, kernel_size, padding="SAME", name=name + "_2_conv")(x)
    x = BatchNormalization(axis=bn_axis, epsilon=1.001e-5, name=name + "_2_bn")(x)
    x = Activation("relu", name=name + "_2_relu")(x)

    x = Conv2D(4 * filters, 1, name=name + "_3_conv")(x)
    x = BatchNormalization(axis=bn_axis, epsilon=1.001e-5, name=name + "_3_bn")(x)

    x = Add(name=name + "_add")([shortcut, x])
    x = Activation("relu", name=name + "_out")(x)
    return x
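To see how these blocks add up to ResNet50, the sketch below tabulates the four stack1 calls made in the main script. It is plain bookkeeping rather than model code: `STACKS` and `summarize` are hypothetical names, and the "convs" column counts only the three main-path convolutions of each block, not the shortcut convolutions.

```python
# (name, filters, blocks, stride1) as passed to stack1 in the main script
STACKS = [
    ("conv2", 64, 3, 1),
    ("conv3", 128, 4, 2),
    ("conv4", 256, 6, 2),
    ("conv5", 512, 3, 2),
]


def summarize(stacks):
    """Return the output channels and main-path conv count of each stack."""
    rows = []
    for name, filters, blocks, stride1 in stacks:
        rows.append({
            "name": name,
            "out_channels": 4 * filters,   # the last conv of block1 uses 4 * filters
            "convs": 3 * blocks,           # three main-path convs per block
            "stride": stride1,
        })
    return rows


summary = summarize(STACKS)
for row in summary:
    print(row)

# conv1_conv + main-path convs + the final classification layer
total_weighted_layers = 1 + sum(row["convs"] for row in summary) + 1
print(total_weighted_layers)   # 50
```

The last stack outputs 2048 channels, which is the input width of the classification layer whose weights set_conv_weights copies into last_conv.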

Code and images
