Python 自动下载论文

Posted yangbocsu

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了Python 自动下载论文相关的知识,希望对你有一定的参考价值。

目录

0 导入相关的库

# 导入所需模块

import requests

import re

import os

from urllib.request import urlretrieve

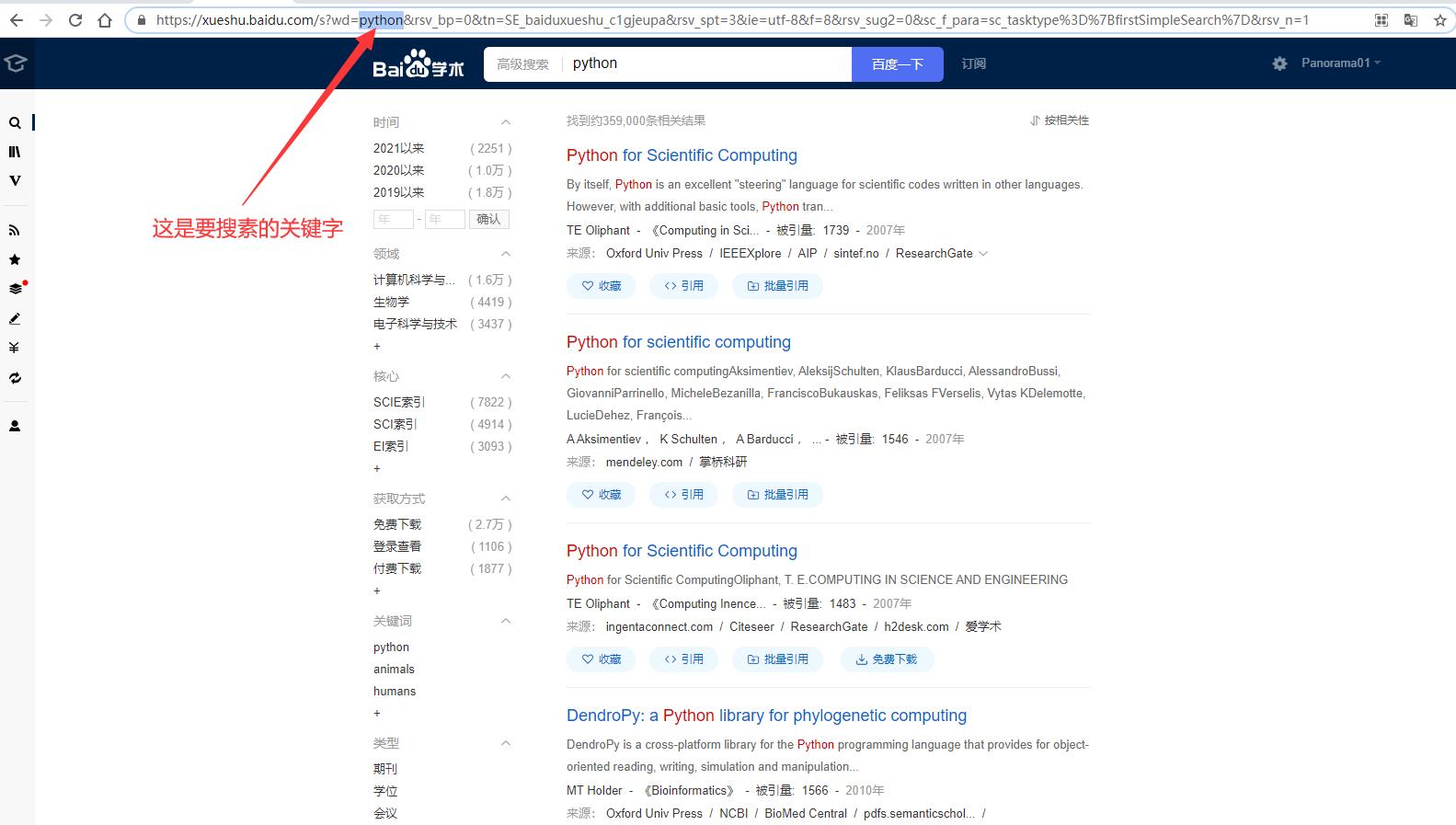

1 获取URL信息

# 获取URL信息

def get_url(key):

url = 'https://xueshu.baidu.com/s?wd=' + key + '&ie=utf-8&tn=SE_baiduxueshu_c1gjeupa&sc_from=&sc_as_para=sc_lib%3A&rsv_sug2=0'

return url

2 设置请求头

# 设置请求头 用Python 模拟浏览器的行为

headers = {

'user-agent':

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (Khtml, like Gecko) Chrome/86.0.4240.198 Safari/537.36',

'Referer': 'https://googleads.g.doubleclick.net/'

}

3 获取相关论文的DOI列表

# 获取相关论文的DOI列表

def get_paper_link(headers, key):

response = requests.get(url=get_url(key), headers=headers)

res1_data = response.text

#找论文链接

paper_link = re.findall(r'<h3 class=\\"t c_font\\">\\n +\\n +<a href=\\"(.*)\\"',

res1_data)

doi_list = [] #用一个列表接收论文的DOI

for link in paper_link:

paper_link = 'http:' + link

response2 = requests.get(url=paper_link, headers=headers)

res2_data = response2.text

#提取论文的DOI

try:

paper_doi = re.findall(r'\\'doi\\'}\\">\\n +(.*?)\\n ', res2_data)

if str(10) in paper_doi[0]:

doi_list.append(paper_doi)

except:

pass

return doi_list

4 构建sci-hub下载链接

#构建sci-hub下载链接

def doi_download(headers, key):

doi_list = get_paper_link(headers, key)

lst = []

for i in doi_list:

lst.append(list(i[0]))

for i in lst:

for j in range(8, len(i)):

if i[j] == '/':

i[j] = '%252F'

elif i[j] == '(':

i[j] = '%2528'

elif i[j] == ')':

i[j] = '%2529'

else:

i[j] = i[j].lower()

for i in range(len(lst)):

lst[i] = ''.join(lst[i])

for doi in lst:

down_link = 'https://sci.bban.top/pdf/' + doi + '.pdf'

print(down_link)

file_name = doi.split('/')[-1] + '.pdf'

try:

with open(file_name, 'wb') as f:

r = requests.get(url=down_link, headers=headers)

f.write(r.content)

print('下载完毕:' + file_name)

except:

print("该文章为空")

pass

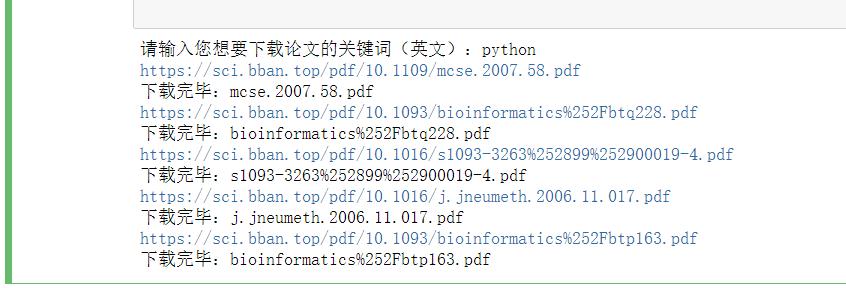

5 检索及下载

# 检索及下载

key = input("请输入您想要下载论文的关键词(英文):")

doi_download(headers, key)

6 完整代码

# 导入所需模块

import requests

import re

import os

from urllib.request import urlretrieve

# 获取URL信息

def get_url(key):

url = 'https://xueshu.baidu.com/s?wd=' + key + '&ie=utf-8&tn=SE_baiduxueshu_c1gjeupa&sc_from=&sc_as_para=sc_lib%3A&rsv_sug2=0'

return url

# 设置请求头

headers = {

'user-agent':

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.198 Safari/537.36',

'Referer': 'https://googleads.g.doubleclick.net/'

}

# 获取相关论文的DOI列表

def get_paper_link(headers, key):

response = requests.get(url=get_url(key), headers=headers)

res1_data = response.text

#找论文链接

paper_link = re.findall(r'<h3 class=\\"t c_font\\">\\n +\\n +<a href=\\"(.*)\\"',

res1_data)

doi_list = [] #用一个列表接收论文的DOI

for link in paper_link:

paper_link = 'http:' + link

response2 = requests.get(url=paper_link, headers=headers)

res2_data = response2.text

#提取论文的DOI

try:

paper_doi = re.findall(r'\\'doi\\'}\\">\\n +(.*?)\\n ', res2_data)

if str(10) in paper_doi[0]:

doi_list.append(paper_doi)

except:

pass

return doi_list

#构建sci-hub下载链接

def doi_download(headers, key):

doi_list = get_paper_link(headers, key)

lst = []

for i in doi_list:

lst.append(list(i[0]))

for i in lst:

for j in range(8, len(i)):

if i[j] == '/':

i[j] = '%252F'

elif i[j] == '(':

i[j] = '%2528'

elif i[j] == ')':

i[j] = '%2529'

else:

i[j] = i[j].lower()

for i in range(len(lst)):

lst[i] = ''.join(lst[i])

for doi in lst:

down_link = 'https://sci.bban.top/pdf/' + doi + '.pdf'

print(down_link)

file_name = doi.split('/')[-1] + '.pdf'

try:

with open(file_name, 'wb') as f:

r = requests.get(url=down_link, headers=headers)

f.write(r.content)

print('下载完毕:' + file_name)

except:

print("该文章为空")

pass

# 检索及下载

key = input("请输入您想要下载论文的关键词(英文):")

doi_download(headers, key)

以上是关于Python 自动下载论文的主要内容,如果未能解决你的问题,请参考以下文章

40行Python代码利用DOI下载英文论文(2022.3.7)

text [检查特定的数据片段]取自论文但有意思应用。 #python #pandas