OpenResty Connection Pool Not Taking Effect

Posted by sysu_lluozh



1. Problem description

We load-tested a self-built storage service based on OpenResty + Ceph with the following setup:

| Parameter | Value |
|---|---|
| Nodes | 1 |
| Concurrency | 10 * 3 |

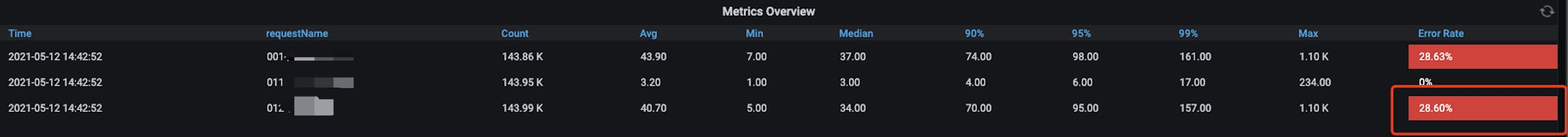

Load-test results:

| Metric | Value |
|---|---|
| QPS | 650 |
| Error rate | 25% |
| Avg. response time | 40 ms |

There is a clear error rate of about 25%. The failing responses received by the load-test client look like this:

```html
<html>
<head><title>500 Internal Server Error</title></head>
<body>
<center><h1>500 Internal Server Error</h1></center>
<hr><center>openresty/1.17.8.2</center>
</body>
</html>
```

2. Service logs

The errors logged by OpenResty:

```text
2021/05/12 17:34:45 [error] 310#310: *86725 (/usr/local/openresty/lualib/rgw-log.lua):16: trace_id: 95fd611b45173b58e8d31e30bd51324b , cannot assign requested address, client: 172.28.12.15, server: public.lluozh.com, request: "POST / HTTP/1.1", host: "store-pub.lluozh.com"
2021/05/12 17:34:45 [error] 310#310: *86725 (/usr/local/openresty/lualib/rgw-log.lua):16: trace_id: 95fd611b45173b58e8d31e30bd51324b , form upload failed!, rgw endpoint: 10.180.1.19:80 , Error: cannot assign requested address, client: 172.28.12.15, server: public.lluozh.com, request: "POST / HTTP/1.1", host: "store-pub.lluozh.com"
```

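(Getting Lua to log these errors properly in the first place took a fair amount of trial and error.)

The key message is `cannot assign requested address`: the system could not assign a local address, in practice a free local port, for the outgoing connection to Ceph.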

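3. Port usage

Since no address could be assigned, the next step is to check how ports are being used.

The error log shows the upstream `rgw endpoint: 10.180.1.19:80`, so count the connections to that endpoint:

```shell
netstat -lpna | grep 10.180.1.19:80 | wc -l
```

The count keeps rising, and the connections are all in the `TIME_WAIT` state.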
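`grep | wc -l` counts every matching line regardless of state. To see the breakdown by state directly, a small tally over the netstat output helps. The sketch below is a hypothetical helper (`count_states` is not part of any library); it assumes the Linux `netstat -na` column layout (Proto, Recv-Q, Send-Q, Local Address, Foreign Address, State), and the sample lines are made up for illustration.

```lua
-- Hypothetical helper: count TCP connections to one remote ip:port by state,
-- given lines of Linux `netstat -na` output
-- (Proto Recv-Q Send-Q Local-Address Foreign-Address State [PID/Program]).
local function count_states(lines, remote)
  local counts = {}
  for _, line in ipairs(lines) do
    local fields = {}
    for field in line:gmatch("%S+") do
      fields[#fields + 1] = field
    end
    -- Only TCP rows whose foreign address matches and that carry a state.
    if fields[1] and fields[1]:sub(1, 3) == "tcp"
        and fields[5] == remote and fields[6] then
      counts[fields[6]] = (counts[fields[6]] or 0) + 1
    end
  end
  return counts
end

-- Made-up sample lines for illustration only.
local sample = {
  "tcp        0      0 172.28.52.212:41234     10.180.1.19:80          TIME_WAIT   -",
  "tcp        0      0 172.28.52.212:41236     10.180.1.19:80          TIME_WAIT   -",
  "tcp        0      0 172.28.52.212:41238     10.180.1.19:80          ESTABLISHED 310/nginx",
  "tcp        0      0 0.0.0.0:80              0.0.0.0:*               LISTEN      310/nginx",
}

for state, n in pairs(count_states(sample, "10.180.1.19:80")) do
  print(state, n)
end
```

On a real host the lines can come from `io.popen("netstat -na")`; a steadily growing `TIME_WAIT` count with only a few `ESTABLISHED` entries is the pattern described above.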

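4. Locating the problem with a packet capture

New connections, each on a new local port, are being created constantly, which drives the port count up. To see how the connections are actually used, capture the traffic and inspect the packets of individual connections.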

4.1 使用tcpdump抓包

tcpdump -i any port 80 -A -nn -w ceph.pcpang

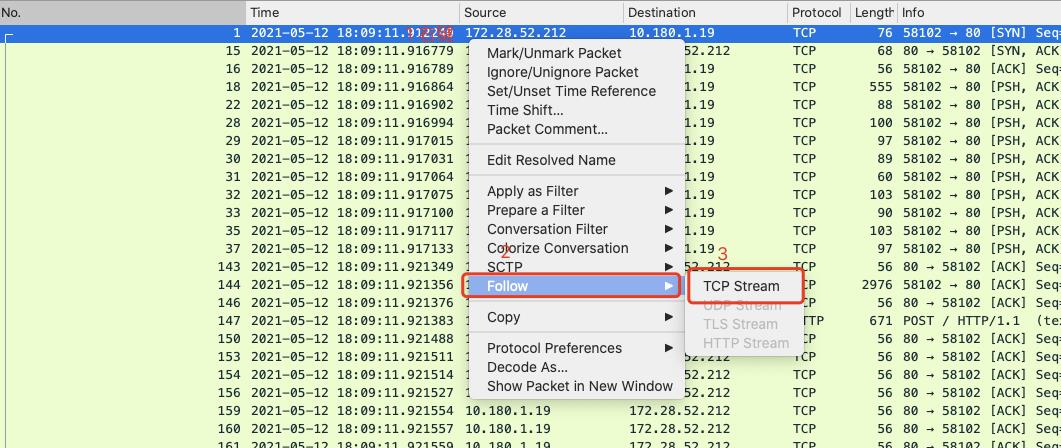

4.2 获取一个完整的TCP包

使用Wireshark打开ceph.pcpang文件后,在Wireshark中提取一个完整的TCP包

4.3 分析TCP包数据流

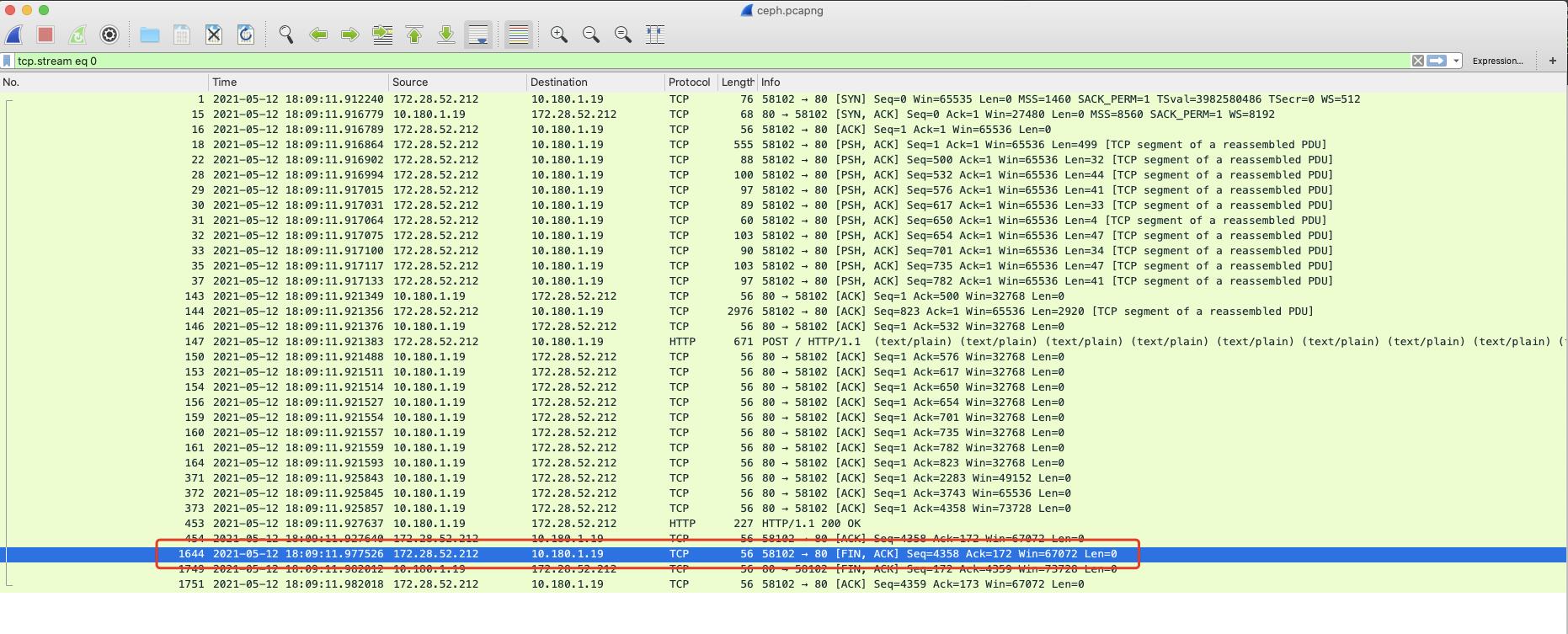

分析完整TCP包的数据

172.28.52.212为OpenResty的地址,10.180.1.19为Ceph的地址,则很明显在一次数据流传输结束后OpenResty主动发起FIn包关闭连接
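This also explains `cannot assign requested address`. While an actively closed socket sits in TIME_WAIT, its local port cannot be reused for a new connection to the same remote ip:port (unless options such as `net.ipv4.tcp_tw_reuse` are enabled). The arithmetic below is a rough sketch under assumed Linux defaults: an ephemeral port range of 32768 to 60999 (check `sysctl net.ipv4.ip_local_port_range` on the actual host) and the 60-second TIME_WAIT interval Linux uses. The function name is hypothetical.

```lua
-- Rough upper bound on *new* outbound connections per second from one local IP
-- to one fixed remote ip:port, when every connection is closed by this side
-- and therefore holds its local port in TIME_WAIT.
-- Assumptions: port range and TIME_WAIT length as passed in; tcp_tw_reuse
-- and similar kernel tuning are ignored.
local function max_new_conns_per_sec(port_low, port_high, time_wait_secs)
  local ports = port_high - port_low + 1
  return ports / time_wait_secs
end

-- Assumed Linux defaults: 32768..60999 and a 60 s TIME_WAIT.
local rate = max_new_conns_per_sec(32768, 60999, 60)
print(string.format("%.1f new connections/s", rate))  -- prints 470.5 new connections/s
```

Under these assumptions, one OpenResty host can open only about 470 new connections per second to a single Ceph endpoint before running out of ports. The test above measured 650 QPS with a 25% error rate, roughly 490 successful requests per second. That is the same order of magnitude, which is consistent with (though not proof of) port exhaustion.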

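5. Connection pool usage

Clearly, OpenResty is not reusing its connections to Ceph. For reference, this is the intended usage pattern from the lua-resty-http documentation: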

5.1 Streamed request

```lua
local httpc = require("resty.http").new()

-- First establish a connection
local ok, err = httpc:connect({
    scheme = "https",
    host = "127.0.0.1",
    port = 8080,
})
if not ok then
    ngx.log(ngx.ERR, "connection failed: ", err)
    return
end

-- Then send using `request`, supplying a path and `Host` header instead of a
-- full URI.
local res, err = httpc:request({
    path = "/helloworld",
    headers = {
        ["Host"] = "example.com",
    },
})
if not res then
    ngx.log(ngx.ERR, "request failed: ", err)
    return
end

-- At this point, the status and headers will be available to use in the `res`
-- table, but the body and any trailers will still be on the wire.

-- We can use the `body_reader` iterator, to stream the body according to our
-- desired buffer size.
local reader = res.body_reader
local buffer_size = 8192

repeat
    local buffer, err = reader(buffer_size)
    if err then
        ngx.log(ngx.ERR, err)
        break
    end

    if buffer then
        -- process
    end
until not buffer

local ok, err = httpc:set_keepalive()
if not ok then
    ngx.say("failed to set keepalive: ", err)
    return
end

-- At this point, the connection will either be safely back in the pool, or closed.
```

5.2 The request lifecycle, simplified

- `new`

```lua
-- Creates the HTTP connection object. In case of failures, returns nil and a string describing the error.
local httpc, err = http.new()  -- where http = require("resty.http")
```

- `connect`

```lua
-- Attempts to connect to the web server
local ok, err = httpc:connect(host, port)
```

- `request`

```lua
-- Sends the request; on success returns a response object (status, headers, body_reader, ...)
local res, err = httpc:request(params)
```

- `set_timeout`

```lua
-- Sets the socket timeout (in ms) for subsequent operations. See set_timeouts for a more declarative approach.
httpc:set_timeout(time)
```

- `set_keepalive`

```lua
-- Either places the current connection into the pool for future reuse, or closes the connection.
-- Calling this instead of close is "safe" in that it will conditionally close depending on the
-- type of request. Specifically, a 1.0 request without Connection: Keep-Alive will be closed,
-- as will a 1.1 request with Connection: Close.
-- In case of success, returns 1. In case of errors, returns nil, err. In the case where the
-- connection is conditionally closed as described above, returns 2 and the error string
-- "connection must be closed", so as to distinguish from unexpected errors.
local ok, err = httpc:set_keepalive(max_idle_timeout, pool_size)
```
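Because `set_keepalive` has three possible outcomes (1; 2 with "connection must be closed"; nil with an error), it helps to log which one occurred: a pool that never sees a result of 1 is a strong hint that it is not being used. The helper below is a hypothetical sketch, not part of lua-resty-http, built only on the return values described in the comments above.

```lua
-- Hypothetical helper: turn the (ok, err) pair returned by
-- httpc:set_keepalive() into a short label for logging or metrics.
--   1          -> connection went back into the pool
--   2, err     -> server required a close; socket was closed on purpose
--   nil, err   -> setkeepalive/close itself failed
local function describe_keepalive(ok, err)
  if ok == 1 then
    return "pooled"
  elseif ok == 2 then
    return "closed: " .. tostring(err)
  else
    return "error: " .. tostring(err)
  end
end

-- Usage inside an OpenResty handler (sketch):
--   local ok, err = httpc:set_keepalive(60000, 100)
--   ngx.log(ngx.INFO, "keepalive result: ", describe_keepalive(ok, err))
```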

5.3 Source code

- `new`

Getting pooled connections through something named `http.new()` seemed odd, so I looked at the `resty.http` source:

```lua
function _M.new(_)
    local sock, err = ngx_socket_tcp()
    if not sock then
        return nil, err
    end
    return setmetatable({ sock = sock, keepalive = true }, mt)
end
```

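So, despite its name, `new()` neither opens a connection nor takes one from the pool: it only wraps a fresh cosocket object from `ngx.socket.tcp()`. The pool lookup happens later, in `connect()`. According to the lua-nginx-module documentation, `tcpsock:connect` first looks in the connection pool for an idle connection created earlier to the same destination (same pool name, which defaults to `host:port`) and reuses it, opening a new connection only if none is available.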

- `set_keepalive`

`set_keepalive` returns the connection to the pool once you are done with it, so that later requests can reuse it. The `resty.http` source:

```lua
function _M.set_keepalive(self, ...)
    local sock = self.sock
    if not sock then
        return nil, "not initialized"
    end

    if self.keepalive == true then
        return sock:setkeepalive(...)
    else
        -- The server said we must close the connection, so we cannot setkeepalive.
        -- If close() succeeds we return 2 instead of 1, to differentiate between
        -- a normal setkeepalive() failure and an intentional close().
        local res, err = sock:close()
        if res then
            return 2, "connection must be closed"
        else
            return res, err
        end
    end
end
```
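To make the mechanism concrete, here is a toy, pure-Lua model of the pool. It is an illustration, not the real cosocket implementation, and `Pool` and `simulate` are hypothetical names. It follows the documented behaviour only in outline: `connect()` first looks for an idle connection under the key "host:port", `set_keepalive()` puts a connection back, and a connection that is never returned is never reused. Pool size limits, idle timeouts, and the fact that real pools are per nginx worker are ignored.

```lua
-- Toy model of a keyed connection pool (illustration only).
local Pool = {}
Pool.__index = Pool

function Pool.new()
  return setmetatable({ idle = {}, opened = 0 }, Pool)
end

-- Reuse an idle connection for host:port if one exists, else open a new one.
function Pool:connect(host, port)
  local key = host .. ":" .. port
  local list = self.idle[key]
  if list and #list > 0 then
    return table.remove(list)
  end
  self.opened = self.opened + 1
  return { key = key, id = self.opened }
end

-- Put a finished connection back so a later connect() can reuse it.
function Pool:set_keepalive(conn)
  local list = self.idle[conn.key] or {}
  self.idle[conn.key] = list
  list[#list + 1] = conn
  return 1
end

-- Run n sequential requests; `release` decides whether each calls set_keepalive.
-- Returns how many brand-new connections had to be opened.
local function simulate(n, release)
  local pool = Pool.new()
  for _ = 1, n do
    local conn = pool:connect("10.180.1.19", 80)
    if release then
      pool:set_keepalive(conn)
    end
  end
  return pool.opened
end

print(simulate(100, true))   -- 1
print(simulate(100, false))  -- 100
```

With 100 sequential requests, releasing every connection needs a single connection; skipping `set_keepalive` opens 100, which is the kind of growth seen in section 3.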

Judging from the calls and the source, this usage should reuse the pool. So what went wrong?

6. Order of operations

I came across a write-up online about pitfalls in the order of these calls:

```nginx
server {
    location /test {
        content_by_lua '
            local redis = require "resty.redis"
            local red = redis:new()

            local ok, err = red:connect("127.0.0.1", 6379)
            if not ok then
                ngx.say("failed to connect: ", err)
                return
            end

            -- red:set_keepalive(10000, 100) -- pitfall ①

            ok, err = red:set("dog", "an animal")
            if not ok then
                -- red:set_keepalive(10000, 100) -- pitfall ②
                return
            end

            -- pitfall ③
            red:set_keepalive(10000, 100)
        ';
    }
}
```

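- Pitfall ①: a connection can only go back into the pool after its data transfer has finished; the system cannot do this for you automatically.
- Pitfall ②: a connection in an unknown state must not go back into the pool, because you cannot know what errors it will trigger later.
- Pitfall ③: just kidding, this one is not a pitfall; it is the correct place.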

I checked repeatedly that `set_keepalive` is only called after the request has finished. So where was the misunderstanding?
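The three pitfalls boil down to one rule: after a successful exchange, return the connection to the pool; after a failure, close it. A minimal sketch of that rule as a helper (the name `release` is hypothetical). It works with any client object that has `set_keepalive(max_idle_timeout, pool_size)` and `close()`, which both `resty.redis` and `resty.http` provide.

```lua
-- Hypothetical helper: release a client connection according to the rule above.
-- `succeeded` is truthy when the last command/request finished cleanly.
local function release(conn, succeeded, max_idle_ms, pool_size)
  if succeeded then
    -- Data transfer is complete: safe to hand the connection back to the pool.
    return conn:set_keepalive(max_idle_ms, pool_size)
  end
  -- State unknown after a failure: close instead of pooling.
  return conn:close()
end

-- Usage inside the handler above (sketch):
--   ok, err = red:set("dog", "an animal")
--   release(red, ok, 10000, 100)
```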

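7. Business-logic analysis

Since neither the library implementation nor the basic usage is at fault, the error is probably in the calling logic. Walking through the whole call path again: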

```lua
-- (excerpt)
if ceph_endpoint then
    res, err = httpc:request(chunksize)
    if err then
        ngx_log(ngx.ERR, err)
        return {}, err
    -- For form uploads we build a custom response body instead of passing Ceph's through
    elseif req_type ~= "form_upload" then
        httpc:proxy_response(res, chunksize)
    else
        -- form_upload: returns early, so the set_keepalive call below is never reached
        return res, nil, res.body_reader(), httpc
    end

    local ok, err = httpc:set_keepalive(max_idle_timeout, pool_size)

    return res, nil
```

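These requests take the `form_upload` branch, which returns directly, so `set_keepalive` is never called. None of these connections go back into the pool, and every request opens a new connection.

In hindsight the missing `set_keepalive` is easy to spot. But this was my first time working with Lua, and this branch is only a small part of the real business code, so it took a long time to trace the problem back to it.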

8. The fix

Make sure every request path ends with `httpc:set_keepalive`. After this change, testing confirmed that the problem was resolved.
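For streaming branches like `form_upload`, the body also has to be read to the end before the connection goes back to the pool (pitfall ① in section 6). Below is a sketch of one way to do both in a single place, assuming the lua-resty-http response object exposes `body_reader` as in section 5.1. `drain_and_release` and `on_chunk` are hypothetical names, not library functions.

```lua
-- Hypothetical helper (not part of lua-resty-http): read the remaining
-- response body via res.body_reader, hand each chunk to on_chunk, then
-- release the connection exactly once.
local function drain_and_release(httpc, res, on_chunk, chunk_size, max_idle_ms, pool_size)
  local reader = res.body_reader
  if reader then
    repeat
      local chunk, err = reader(chunk_size)
      if err then
        httpc:close()  -- stream is in an unknown state: do not pool it
        return nil, err
      end
      if chunk and on_chunk then
        on_chunk(chunk)
      end
    until not chunk
  end
  -- Body fully consumed: the connection can safely go back to the pool.
  return httpc:set_keepalive(max_idle_ms, pool_size)
end
```

Funnelling every branch through one helper like this means an early `return` can no longer skip `set_keepalive` by accident.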
