How YOLOv5 Detects Video Streams: A Walkthrough of detect.py

Posted by dlage

This post belongs to the column on grain video detection with YOLOv5.

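Preface

YOLOv5 can run detection on video. Our lab provided an industrial camera for this experiment and wants to use it for real-time detection. To find out how to use that camera, I read through the YOLOv5 source code today. These are my notes.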

I. Start with the README

detect.py runs inference on a variety of sources, downloading models automatically from the latest YOLOv5 release and saving results to runs/detect.

    $ python detect.py --source 0  # webcam
                                file.jpg  # image
                                file.mp4  # video
                                path/  # directory
                                path/*.jpg  # glob
                                'https://youtu.be/NUsoVlDFqZg'  # YouTube video
                                'rtsp://example.com/media.mp4'  # RTSP, RTMP, HTTP stream

The commands above come from the tutorial in the YOLOv5 README.

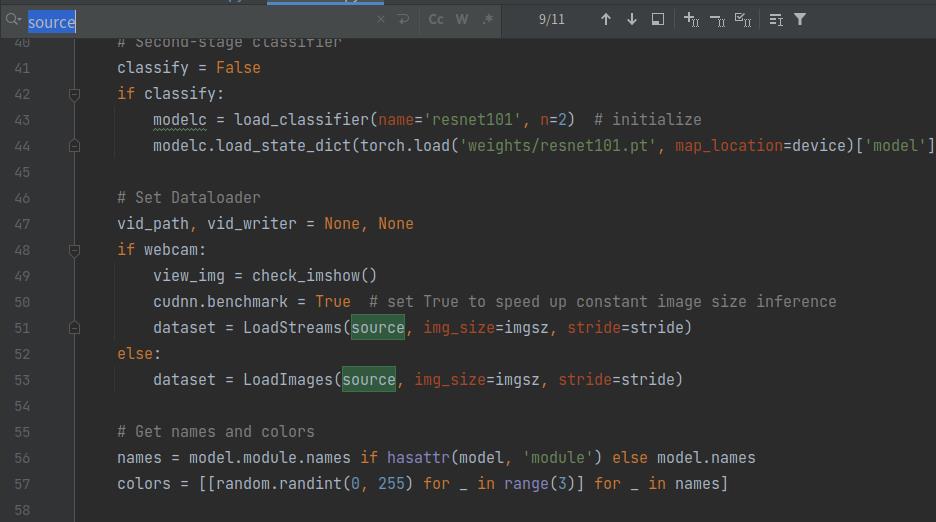

As the README shows, the camera is selected with the --source argument, so the next step is to see how detect.py uses that argument.

Evidently, for a webcam or stream source detect.py creates a LoadStreams object. LoadStreams is a class, not a function.
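The dispatch on --source can be sketched as follows. This is a minimal, self-contained approximation rather than the actual detect.py code: the helper names is_stream_source and parse_source are hypothetical, and the exact condition and default value may differ between YOLOv5 releases. The idea is that a numeric string (a camera index), a .txt file listing several streams, or a network URL is treated as a stream and handed to LoadStreams, while anything else is treated as image or video files and handed to LoadImages.

```python
import argparse

# URL schemes treated as live streams (assumed list; check your YOLOv5 version).
STREAM_PREFIXES = ('rtsp://', 'rtmp://', 'http://', 'https://')


def is_stream_source(source: str) -> bool:
    """Return True if the source should be read as a live stream.

    Hypothetical helper that approximates the check in detect.py.
    """
    return (
        source.isnumeric()              # '0', '1', ...: local camera index
        or source.endswith('.txt')      # text file listing several streams
        or source.lower().startswith(STREAM_PREFIXES)
    )


def parse_source(argv=None) -> str:
    """Parse only the --source argument (default value is an assumption)."""
    parser = argparse.ArgumentParser()
    parser.add_argument('--source', type=str, default='data/images',
                        help='file/folder, 0 for webcam')
    return parser.parse_args(argv).source


if __name__ == '__main__':
    for src in ('0', 'streams.txt', 'rtsp://example.com/media.mp4', 'file.mp4', 'path/'):
        kind = 'LoadStreams' if is_stream_source(src) else 'LoadImages'
        print(f'{src!r:35} -> {kind}')
```

Note that argparse delivers --source 0 as the string '0', which is why the check uses isnumeric() instead of comparing against an integer.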

II. The implementation of LoadStreams

    class LoadStreams:  # multiple IP or RTSP cameras
        def __init__(self, sources='streams.txt', img_size=640, stride=32):
            self.mode = 'stream'
            self.img_size = img_size
            self.stride = stride

            # If sources is a file that lists several sources, read it into a list
            if os.path.isfile(sources):
                with open(sources, 'r') as f:
                    sources = [x.strip() for x in f.read().strip().splitlines() if len(x.strip())]
            else:
                sources = [sources]

            n = len(sources)
            self.imgs = [None] * n
            self.sources = [clean_str(x) for x in sources]  # clean source names for later

            # Iterate over the sources
            for i, s in enumerate(sources):
                # Start the thread to read frames from the video stream
                print(f'{i + 1}/{n}: {s}... ', end='')
                url = eval(s) if s.isnumeric() else s
                if 'youtube.com/' in url or 'youtu.be/' in url:  # if source is YouTube video
                    check_requirements(('pafy', 'youtube_dl'))
                    import pafy
                    url = pafy.new(url).getbest(preftype="mp4").url

                # Open the source with OpenCV
                cap = cv2.VideoCapture(url)
                assert cap.isOpened(), f'Failed to open {s}'

                # Frame width and height
                w = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
                h = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
                self.fps = cap.get(cv2.CAP_PROP_FPS) % 100

                _, self.imgs[i] = cap.read()  # guarantee first frame

                # Read the video frames in a separate thread
                thread = Thread(target=self.update, args=([i, cap]), daemon=True)
                print(f' success ({w}x{h} at {self.fps:.2f} FPS).')
                thread.start()
            print('')  # newline

            # check for common shapes
            s = np.stack([letterbox(x, self.img_size, stride=self.stride)[0].shape for x in self.imgs], 0)  # shapes
            self.rect = np.unique(s, axis=0).shape[0] == 1  # rect inference if all shapes equal
            if not self.rect:
                print('WARNING: Different stream shapes detected. For optimal performance supply similarly-shaped streams.')

        def update(self, index, cap):
            # Read next stream frame in a daemon thread
            n = 0
            # Keep reading frames for as long as cap stays open
            while cap.isOpened():
                n += 1
                # _, self.imgs[index] = cap.read()
                # Grab the next frame from the video file or capture device
                cap.grab()
                if n == 4:  # read every 4th frame
                    # cap.retrieve() decodes and returns the grabbed frame
                    success, im = cap.retrieve()
                    self.imgs[index] = im if success else self.imgs[index] * 0
                    n = 0
                time.sleep(1 / self.fps)  # wait time

        # To be usable as an iterator, the class must implement the two methods below
        def __iter__(self):
            self.count = -1
            return self

        def __next__(self):
            self.count += 1
            # Copy the list of images
            img0 = self.imgs.copy()
            # Press q to quit
            if cv2.waitKey(1) == ord('q'):  # q to quit
                cv2.destroyAllWindows()
                raise StopIteration

            # Letterbox
            img = [letterbox(x, self.img_size, auto=self.rect, stride=self.stride)[0] for x in img0]

            # Stack
            img = np.stack(img, 0)

            # Convert
            img = img[:, :, :, ::-1].transpose(0, 3, 1, 2)  # BGR to RGB, to bsx3x416x416
            img = np.ascontiguousarray(img)

            return self.sources, img, img0, None

        def __len__(self):
            return 0  # 1E12 frames = 32 streams at 30 FPS for 30 years
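The reading pattern in update() can be tried out without a camera. The sketch below is only an illustration under stated assumptions: FakeCapture is a hypothetical stand-in for cv2.VideoCapture that counts how often a frame is decoded, and LatestFrameReader is a hypothetical, simplified version of the thread logic. It shows the same idea as the listing: every frame is grabbed cheaply, but only every 4th one is decoded and kept as the latest frame.

```python
import threading
import time


class FakeCapture:
    """Hypothetical stand-in for cv2.VideoCapture that counts decode calls."""

    def __init__(self, total_frames: int):
        self.total_frames = total_frames
        self.position = 0   # index of the most recently grabbed frame
        self.decoded = 0    # how many frames were actually decoded

    def isOpened(self) -> bool:
        return self.position < self.total_frames

    def grab(self) -> bool:
        # Cheap step: advance to the next frame without decoding it.
        self.position += 1
        return True

    def retrieve(self):
        # Expensive step: decode the frame that was grabbed last.
        self.decoded += 1
        return True, f'frame-{self.position}'


class LatestFrameReader:
    """Keeps only the newest decoded frame, decoding every `step`-th grab."""

    def __init__(self, cap, step: int = 4, fps: float = 1000.0):
        self.cap, self.step, self.fps = cap, step, fps
        self.latest = None
        self.thread = threading.Thread(target=self._update, daemon=True)
        self.thread.start()

    def _update(self):
        n = 0
        while self.cap.isOpened():
            n += 1
            self.cap.grab()
            if n == self.step:
                success, frame = self.cap.retrieve()
                if success:
                    self.latest = frame
                n = 0
            time.sleep(1 / self.fps)  # pace the loop like the original


cap = FakeCapture(total_frames=20)
reader = LatestFrameReader(cap)
reader.thread.join()  # the fake stream ends, so the thread finishes
print(cap.decoded, reader.latest)  # 5 frame-20
```

Because the main loop only ever sees the latest stored frame, slow inference drops frames instead of building up a backlog, which is usually what a real-time detector wants.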

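Summary

LoadStreams reads every video source through cv2.VideoCapture. An industrial camera that OpenCV cannot open this way therefore has to be accessed through a third-party toolkit, such as the camera vendor's SDK.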
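One way to connect such a toolkit is to wrap it in a class that follows the same iterator protocol as LoadStreams. The sketch below is an assumption-heavy illustration, not a working camera driver: SdkFrameSource and its grab_frame parameter are hypothetical names, and grab_frame stands in for whatever call the third-party toolkit offers to fetch one frame.

```python
from typing import Any, Callable, Iterator, Optional, Tuple


class SdkFrameSource:
    """Iterator over frames from a user-supplied grab function.

    `grab_frame` is a hypothetical callable standing in for a vendor SDK
    call; it should return one frame, or None when the camera stops.
    """

    def __init__(self, grab_frame: Callable[[], Optional[Any]],
                 name: str = 'industrial-camera'):
        self.grab_frame = grab_frame
        self.name = name
        self.mode = 'stream'  # same mode label that LoadStreams sets
        self.count = -1

    def __iter__(self) -> Iterator[Tuple[str, Any]]:
        self.count = -1
        return self

    def __next__(self) -> Tuple[str, Any]:
        frame = self.grab_frame()
        if frame is None:  # camera closed or no more frames
            raise StopIteration
        self.count += 1
        return self.name, frame


# Usage with a fake SDK that delivers three frames and then stops.
frames = iter(['frame-0', 'frame-1', 'frame-2'])
source = SdkFrameSource(lambda: next(frames, None))
received = [frame for _, frame in source]
print(received)  # ['frame-0', 'frame-1', 'frame-2']
```

To plug a class like this into detect.py, __next__ would also have to apply the same letterbox and BGR-to-RGB conversion and return the same four values as LoadStreams. That part is left out here.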
