Logging in with HttpWebRequest succeeds, but fetching the main page with WebClient always reports that the operation has timed out


This article was compiled by the editors of 小常识网 (cha138.com). It covers a simulated login with HttpWebRequest that succeeds, after which fetching the main page with WebClient keeps timing out.

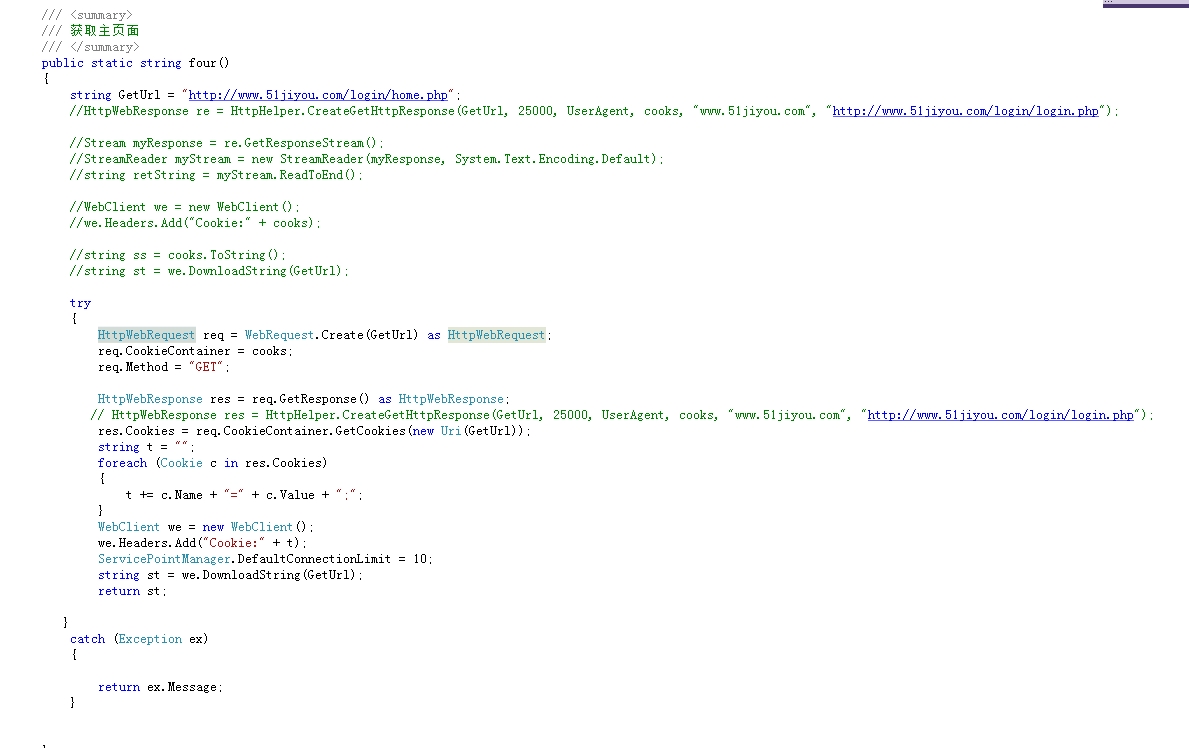

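This is my code for fetching the page after a successful login. I'm a beginner, don't know much about this, and don't know how to fix it. Waiting online for an answer.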

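(1) The way you set the Cookie is wrong. Don't put the Cookie in with setHeader; setting it that way is not the same as adding it with addCookie.

(2) Besides the Cookie, the site may require other headers that you also need to add, e.g. setHeader("Referer", "").

Make sure every required header is included.

Summary: something is missing from the request, so the site doesn't recognize you.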
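The question is about .NET, and the answer's setHeader/addCookie calls come from yet another HTTP client. The following is therefore only a Python standard-library analogue of the answer's two points:

- keep the login cookies in a cookie jar that the client manages, rather than in a hand-written header;
- add any other required headers, such as Referer, to the request itself.

The domain, URLs, cookie name and value are hypothetical placeholders, and nothing is actually sent over the network.

```python
import http.cookiejar
import urllib.request

# Hypothetical values for illustration only
LOGIN_DOMAIN = "example.com"
HOME_URL = "http://example.com/home"


def make_session_cookie(name: str, value: str, domain: str) -> http.cookiejar.Cookie:
    """Build a plain (Netscape-style) cookie, as if the login response had set it."""
    return http.cookiejar.Cookie(
        version=0, name=name, value=value,
        port=None, port_specified=False,
        domain=domain, domain_specified=False, domain_initial_dot=False,
        path="/", path_specified=True,
        secure=False, expires=None, discard=True,
        comment=None, comment_url=None, rest={},
    )


# One shared jar: the cookie processor reads from it for every request this opener sends
jar = http.cookiejar.CookieJar()
jar.set_cookie(make_session_cookie("session", "abc123", LOGIN_DOMAIN))
opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))

# Other headers the site may check go on the request itself
req = urllib.request.Request(HOME_URL, headers={
    "Referer": "http://example.com/login",
    "User-Agent": "Mozilla/5.0",
})

# Preview the Cookie header the jar would attach; opener.open(req) does this automatically
jar.add_cookie_header(req)
print(req.get_header("Cookie"))   # session=abc123
print(req.get_header("Referer"))  # http://example.com/login
```

The key design point is that the same jar (and opener) is reused for the login request and every later request. Cookies set by the login response then flow into the follow-up requests without being copied by hand.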

Scrapy simulated login


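Learning objectives:

- Apply: using the cookies parameter of a request object

- Understand: what the start_requests method does

- Apply: building and sending a POST request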

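1. Review of earlier simulated-login approaches

1.1 How does the requests module simulate a login?

- Request the page directly with the cookies attached

- Find the login URL, send a POST request, and keep the resulting cookies

1.2 How does selenium simulate a login?

- Locate the relevant input elements, type in the text, and click the login button

1.3 Simulated login in scrapy

- Attach the cookies directly

- Find the login URL, send a POST request, and keep the resulting cookies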

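2. Fetching login-protected pages in scrapy by attaching cookies directly

Use cases

- The cookie has a very long lifetime, which is common on some poorly designed sites

- All the data can be collected before the cookie expires

- Working together with another program, e.g. using selenium to obtain the cookies after logging in and save them locally, then having scrapy read those local cookies before sending requests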
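For the third use case, one possible hand-off works like this:

- the selenium script dumps the list returned by `driver.get_cookies()` (dicts with `name` and `value` keys, among others) to a JSON file;
- the spider rebuilds a `{name: value}` dict from that file before sending requests.

The file name `cookies.json`, the helper names and the cookie values below are hypothetical. The selenium output is faked so the sketch runs on its own.

```python
import json
import tempfile
from pathlib import Path


def save_cookies(cookie_list: list, path: Path) -> None:
    """Selenium side: write the list returned by driver.get_cookies() to disk."""
    path.write_text(json.dumps(cookie_list), encoding="utf-8")


def load_cookies_for_scrapy(path: Path) -> dict:
    """Scrapy side: keep only name -> value, the dict shape scrapy.Request(cookies=...) accepts."""
    cookie_list = json.loads(path.read_text(encoding="utf-8"))
    return {c["name"]: c["value"] for c in cookie_list}


# Stand-in for what driver.get_cookies() might return after logging in (made-up values)
fake_selenium_cookies = [
    {"name": "user_session", "value": "abc", "domain": "github.com", "path": "/"},
    {"name": "logged_in", "value": "yes", "domain": ".github.com", "path": "/"},
]

with tempfile.TemporaryDirectory() as tmp:
    cookie_file = Path(tmp) / "cookies.json"  # hypothetical file name
    save_cookies(fake_selenium_cookies, cookie_file)
    cookies = load_cookies_for_scrapy(cookie_file)

print(cookies)  # {'user_session': 'abc', 'logged_in': 'yes'}
```

Inside the spider's `start_requests`, the loaded dict would be passed as `cookies=load_cookies_for_scrapy(Path("cookies.json"))` when building the `scrapy.Request`.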

2.1 Implementation: override scrapy's start_requests method

In scrapy, the URLs in start_urls are processed by start_requests, which is implemented as follows:

```python
# Excerpt from Scrapy's own source (Spider.start_requests in older releases); imports are omitted
def start_requests(self):
    cls = self.__class__
    if method_is_overridden(cls, Spider, 'make_requests_from_url'):
        warnings.warn(
            "Spider.make_requests_from_url method is deprecated; it "
            "won't be called in future Scrapy releases. Please "
            "override Spider.start_requests method instead (see %s.%s)." % (
                cls.__module__, cls.__name__
            ),
        )
        for url in self.start_urls:
            yield self.make_requests_from_url(url)
    else:
        # Default behaviour: one request per start URL, exempt from duplicate filtering
        for url in self.start_urls:
            yield Request(url, dont_filter=True)
```

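Accordingly, if the URLs in start_urls can only be accessed after logging in, you need to override start_requests and add the cookies to the request there yourself.

2.2 Logging in to GitHub with cookies

Test account: noobpythoner / zhoudawei123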

```python
import re

import scrapy


class Login1Spider(scrapy.Spider):
    name = 'login1'
    allowed_domains = ['github.com']
    start_urls = ['https://github.com/NoobPythoner']  # a page that is only accessible after logging in

    def start_requests(self):  # override start_requests
        # cookies_str is captured from the browser's traffic
        cookies_str = '...'  # captured by packet sniffing

        # Convert cookies_str into cookies_dict; partition splits on the first '=' only,
        # because cookie values may themselves contain '='
        cookies_dict = {}
        for item in cookies_str.split('; '):
            key, _, value = item.partition('=')
            cookies_dict[key] = value

        yield scrapy.Request(
            self.start_urls[0],
            callback=self.parse,
            cookies=cookies_dict,
        )

    def parse(self, response):  # verify the login by matching the username with a regex
        # The regex matches the GitHub username
        result_list = re.findall(r'noobpythoner|NoobPythoner', response.body.decode())
        print(result_list)
```
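The check in `parse` only prints the regex matches. A slightly stricter sketch turns it into a boolean, assuming the username is known in advance:

- `re.escape` guards against usernames containing regex metacharacters;
- `re.IGNORECASE` replaces listing both spellings.

The function name and the sample HTML snippets are made up.

```python
import re


def page_mentions_user(html: str, username: str) -> bool:
    """Return True if the username appears anywhere in the page, ignoring case."""
    return re.search(re.escape(username), html, flags=re.IGNORECASE) is not None


logged_in_html = '<span class="user">NoobPythoner</span>'
logged_out_html = '<a href="/login">Sign in</a>'

print(page_mentions_user(logged_in_html, "noobpythoner"))   # True
print(page_mentions_user(logged_out_html, "noobpythoner"))  # False
```

In the spider this would read `if page_mentions_user(response.text, 'noobpythoner'): ...`. The check is only meaningful for a page that shows the username only to a logged-in user.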

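Notes:

- In scrapy, don't put cookies in headers: cookies set through the Cookie header are not handled by Scrapy's cookie middleware. Requests have a dedicated cookies parameter that accepts the cookies as a dict

- Configure the robots.txt setting (ROBOTSTXT_OBEY) and USER_AGENT in settings.py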
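The two settings from the note might look like this in `settings.py`. The user-agent string is only an example browser UA. Whether to ignore robots.txt is the reader's call for the site in question.

`COOKIES_ENABLED` is already True by default. It is shown only to make explicit that the cookies middleware must stay on for the `cookies=` argument to work.

```python
# settings.py (fragment)

# Do not fetch and obey robots.txt (the project template generated by `scrapy startproject` sets this to True)
ROBOTSTXT_OBEY = False

# Send a browser-like User-Agent instead of Scrapy's default one (example string)
USER_AGENT = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.131 Safari/537.36"
)

# The cookies middleware must stay enabled for Request(cookies=...) to have any effect (default: True)
COOKIES_ENABLED = True
```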

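3. Sending POST requests with scrapy.Request

We know that scrapy.Request() can send a POST request if you specify its method and body parameters; in practice, however, scrapy.FormRequest() is normally used to send POST requests.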
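It helps to see what FormRequest saves you. Given `formdata`, it does three things:

- URL-encodes the fields into the request body;
- sets the `Content-Type` header to `application/x-www-form-urlencoded`;
- switches the method to POST.

Doing the same with plain `scrapy.Request` means building those three pieces yourself. The sketch below builds them with `urllib.parse` only. The field values are placeholders, and the Scrapy calls appear only in comments.

```python
from urllib.parse import parse_qs, urlencode

# Placeholder form fields
formdata = {"login": "noobpythoner", "password": "***", "commit": "Sign in"}

# The three pieces FormRequest(url, formdata=formdata) fills in for you
method = "POST"
headers = {"Content-Type": "application/x-www-form-urlencoded"}
body = urlencode(formdata)

print(body)  # login=noobpythoner&password=%2A%2A%2A&commit=Sign+in

# Roughly equivalent manual request (requires Scrapy):
#   scrapy.Request(url, method=method, headers=headers, body=body, callback=...)
# versus the shorter form:
#   scrapy.FormRequest(url, formdata=formdata, callback=...)

# Decoding the body gives back the original fields, which is what the server sees
decoded = {key: values[0] for key, values in parse_qs(body).items()}
print(decoded == formdata)  # True
```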

3.1 Sending a POST request

Note: scrapy.FormRequest() can send both form submissions and AJAX requests; for further reading see https://www.jb51.net/article/146769.htm

3.1.1 Approach

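- Find the POST URL: capture the traffic while clicking the login button; the URL turns out to be https://github.com/session

- Find the pattern in the request body: analysing the body of the POST request shows that all of its parameters appear in the previous response

- Check whether the login succeeded: request the user's profile page and see whether it contains the username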
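The second step, reading the body parameters from the previous response, can be illustrated without Scrapy using the standard-library HTML parser:

1. Collect every named `<input>` inside the form.
2. Overwrite the credential fields with the user's values.

The sample HTML, field values and helper names are made up. For simplicity the collector takes inputs from every form on the page, so a real page with several forms would need narrowing down to the login form. Inside a spider, Scrapy's `FormRequest.from_response()` offers this kind of pre-filling of form fields.

```python
from html.parser import HTMLParser


class FormInputCollector(HTMLParser):
    """Collect name -> value for every named <input> that appears inside a <form>."""

    def __init__(self):
        super().__init__()
        self.in_form = False
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "form":
            self.in_form = True
        elif tag == "input" and self.in_form and attrs.get("name"):
            self.fields[attrs["name"]] = attrs.get("value") or ""

    def handle_endtag(self, tag):
        if tag == "form":
            self.in_form = False


def build_login_formdata(login_page_html, username, password):
    """Hidden fields from the page (e.g. a CSRF token) plus the user's credentials."""
    parser = FormInputCollector()
    parser.feed(login_page_html)
    data = dict(parser.fields)
    data.update(login=username, password=password)
    return data


sample_html = """
<form action="/session" method="post">
  <input type="hidden" name="authenticity_token" value="tok123">
  <input type="text" name="login">
  <input type="password" name="password">
  <input type="submit" name="commit" value="Sign in">
</form>
"""

print(build_login_formdata(sample_html, "noobpythoner", "***"))
# {'authenticity_token': 'tok123', 'login': 'noobpythoner', 'password': '***', 'commit': 'Sign in'}
```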

3.1.2 Implementation

```python
import re

import scrapy


class Login2Spider(scrapy.Spider):
    name = 'login2'
    allowed_domains = ['github.com']
    start_urls = ['https://github.com/login']

    def parse(self, response):
        # Read the form fields from the login page;
        # default='' avoids sending None if a field is missing from the page
        authenticity_token = response.xpath("//input[@name='authenticity_token']/@value").extract_first(default='')
        utf8 = response.xpath("//input[@name='utf8']/@value").extract_first(default='')
        commit = response.xpath("//input[@name='commit']/@value").extract_first(default='')

        # Build the POST request and hand it to the engine
        yield scrapy.FormRequest(
            "https://github.com/session",
            formdata={
                "authenticity_token": authenticity_token,
                "utf8": utf8,
                "commit": commit,
                "login": "noobpythoner",
                "password": "***",
            },
            callback=self.parse_login,
        )

    def parse_login(self, response):
        ret = re.findall(r"noobpythoner|NoobPythoner", response.text)
        print(ret)
```

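Tip

Setting COOKIES_DEBUG = True in settings.py makes Scrapy log in the terminal the cookies sent with each request and received in each response, so you can follow how cookies are passed along.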

4. Complete code

Carrying cookies:

```python
import scrapy


class Git1Spider(scrapy.Spider):
    name = 'git1'
    allowed_domains = ['github.com']
    start_urls = ['https://github.com/username']

    def start_requests(self):
        url = self.start_urls[0]
        temp = '_octo=GH1.1.578198759.1625640541; _device_id=73970f074a72a725ce83e5614a029e94; user_session=Vi81uuZiPlIf3qArUwSsgacHOhALXHsz0qyT0hmZ_vsM2Yc2; __Host-user_session_same_site=Vi81uuZiPlIf3qArUwSsgacHOhALXHsz0qyT0hmZ_vsM2Yc2; logged_in=yes; dotcom_user=ZS22YL; has_recent_activity=1; color_mode=%7B%22color_mode%22%3A%22light%22%2C%22light_theme%22%3A%7B%22name%22%3A%22light%22%2C%22color_mode%22%3A%22light%22%7D%2C%22dark_theme%22%3A%7B%22name%22%3A%22dark%22%2C%22color_mode%22%3A%22dark%22%7D%7D; tz=Asia%2FShanghai; _gh_sess=XlUioEY6UokxY%2B9s70Nux38MEhGjD0ToEd6DNogQFPeLQVQ3swInqD4p07cF2xTGT7HXEMB4F%2FIct0fpz2vrRBve4OE83Oaa1Hrxg817QdvarqZS15oTIqmAl%2BVfkRHp9%2Fv2zaWq%2Fe4vE1XyryEavzvBeNOqagA4PZGVUzFjT632R7Spcs0J5lhRXbW4Hspw--lO5I7cZqd%2Bxyv13Q--qk7MIsiu0UOti98YInJ%2BvA%3D%3D'

        # Build the cookies dict with a dict comprehension; partition splits on the first '=' only
        cookies = {key: value for key, _, value in (data.partition('=') for data in temp.split('; '))}

        # Hand the new request to the engine
        yield scrapy.Request(
            url=url,
            callback=self.parse,
            cookies=cookies,
        )

    # Parse the response and check whether the login succeeded
    # (the page title should show the username followed by "· GitHub")
    def parse(self, response):
        print(response.xpath('/html/head/title/text()').extract_first())
```
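Both cookie spiders turn a copied `Cookie` header string into a dict. Splitting on every `=` and taking `[1]` or `[-1]` goes wrong as soon as a value contains `=`, which is common with Base64-encoded values. For example, `'token=YWJjZA=='.split('=')` gives `['token', 'YWJjZA', '', '']`.

A small helper that splits on the first `=` only is safer. The helper name and the sample string are hypothetical.

```python
def cookie_header_to_dict(cookie_header: str) -> dict:
    """Convert 'a=1; b=2' (as copied from the browser) into {'a': '1', 'b': '2'}."""
    cookies = {}
    for part in cookie_header.split(";"):
        part = part.strip()
        if not part:
            continue  # tolerate a trailing ';' or doubled separators
        name, _, value = part.partition("=")  # split on the FIRST '=' only
        cookies[name] = value
    return cookies


sample = "logged_in=yes; tz=Asia%2FShanghai; token=YWJjZA==;"
print(cookie_header_to_dict(sample))
# {'logged_in': 'yes', 'tz': 'Asia%2FShanghai', 'token': 'YWJjZA=='}
```

The resulting dict can be passed straight to `scrapy.Request(..., cookies=...)`. Values are kept exactly as copied (still URL-encoded), just as the spiders above do.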

Simulated login:

```python
import scrapy


class Git2Spider(scrapy.Spider):
    name = 'git2'
    allowed_domains = ['github.com']
    start_urls = ['https://github.com/login']

    def parse(self, response):
        # Parse the POST data out of the login page response
        token = response.xpath('//input[@name="authenticity_token"]/@value').extract_first()
        post_data = {
            'commit': 'Sign in',
            'authenticity_token': token,
            'login': 'username',
            'password': 'password',
            'webauthn-support': 'supported',
            'webauthn-iuvpaa-support': 'unsupported',
            'return_to': 'https://github.com/login',
            # timestamp and timestamp_secret were copied from one captured request; they are
            # probably tied to that page load and may need to be read from the form like the token
            'timestamp': '1629970060446',
            'timestamp_secret': 'e472904d9d6e3c5512dd549e9a4b03d4d869090b895fa8f1ddfd8dde8d72c5f6',
        }
        print(post_data)

        # Send a POST request to the login URL
        yield scrapy.FormRequest(
            url='https://github.com/session',
            callback=self.after_login,
            formdata=post_data,
        )

    def after_login(self, response):
        # Request a page that requires login to check the result
        yield scrapy.Request('https://github.com/username', callback=self.check_login)

    def check_login(self, response):
        print(response.xpath('/html/head/title/text()').extract_first())
```

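5. Summary

- The URLs in start_urls are handled by start_requests; override start_requests when necessary

- Logging in by attaching cookies directly: the cookies must be passed through the cookies parameter

- Sending POST requests: scrapy.Request() with method and body works, but scrapy.FormRequest() is the usual choice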
