Setting Up a Kafka Cluster on JDK 1.8

Posted by Lossdate


Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers setting up a Kafka cluster on JDK 1.8, and we hope you find it useful.

1. Environment

- Three Linux hosts, which together form a three-node Kafka cluster:

nodeB (192.168.200.139)

nodeC (192.168.200.140)

nodeD (192.168.200.141)

- Configure /etc/hosts on every host:

192.168.200.139 nodeB

192.168.200.140 nodeC

192.168.200.141 nodeD

- Versions

jdk -> 1.8 (jdk-8u261-linux-x64.rpm)

zookeeper -> 3.4.14 (zookeeper-3.4.14.tar.gz)

kafka -> 1.0.2 built for Scala 2.12 (kafka_2.12-1.0.2.tgz)
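The hosts entries above have to be added on all three machines. A minimal bash sketch of doing that idempotently, assuming the three IP/name pairs listed above; `add_cluster_hosts` is a hypothetical helper name, and the target file is passed in so it can be tried on a scratch copy before pointing it at /etc/hosts:

```shell
#!/usr/bin/env bash
# Sketch: add the cluster's host mappings to a hosts file, skipping any
# hostname that already appears on an uncommented line.
# add_cluster_hosts is a hypothetical helper; the IP/name pairs are the
# ones from the environment list above.
add_cluster_hosts() {
  local hosts_file="$1"
  local entry ip name
  for entry in "192.168.200.139 nodeB" \
               "192.168.200.140 nodeC" \
               "192.168.200.141 nodeD"; do
    read -r ip name <<< "$entry"
    # awk exits 0 if an uncommented line lists $name as a hostname
    # (field 2 onward), 1 otherwise; a missing file also counts as "absent".
    if ! awk -v n="$name" '
           /^[[:space:]]*#/ { next }
           { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
           END { exit !found }' "$hosts_file" 2>/dev/null; then
      echo "$ip $name" >> "$hosts_file"
    fi
  done
}
```

On each node, run it as root with `add_cluster_hosts /etc/hosts`. A hostname that is already present is left alone, so running it twice creates no duplicates; note that an existing entry with the wrong IP is not corrected.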

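2. Installing JDK 1.8

- Install JDK 1.8 on nodeB, nodeC, and nodeD

# Install the JDK from the RPM
rpm -ivh jdk-8u261-linux-x64.rpm
# The default install path is /usr/java/jdk1.8.0_261-amd64

- Configure environment variables

# Configure JAVA_HOME
vim /etc/profile
# Append at the end of the file:
export JAVA_HOME=/usr/java/jdk1.8.0_261-amd64
export PATH=$PATH:$JAVA_HOME/bin

- Apply the configuration

. /etc/profile

- Verify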

java -version
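`java -version` prints its version banner on stderr rather than stdout, which is easy to miss when scripting this check across three nodes. A minimal sketch, assuming an Oracle JDK or OpenJDK banner of the form `java version "1.8.0_261"`; `java_major_minor` and `check_java_18` are hypothetical helper names:

```shell
#!/usr/bin/env bash
# Sketch: confirm that the JVM found on PATH is a 1.8 release.
# java_major_minor reads the version banner on stdin, e.g.
#   java version "1.8.0_261"   ->  1.8
java_major_minor() {
  # Print the "major.minor" part of the first quoted version string.
  sed -n 's/.*version "\([0-9]*\.[0-9]*\).*/\1/p' | head -n 1
}

check_java_18() {
  local ver
  # java -version writes to stderr, so redirect it into the pipe.
  ver=$(java -version 2>&1 | java_major_minor)
  if [ "$ver" = "1.8" ]; then
    echo "OK: Java $ver"
  else
    echo "Unexpected Java version: ${ver:-none found}" >&2
    return 1
  fi
}
```

Run `check_java_18` on each node after `. /etc/profile`; it returns non-zero if a different JVM comes first on PATH.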

3. Setting Up the ZooKeeper Cluster

Install ZooKeeper on nodeB, nodeC, and nodeD to form a three-node ZooKeeper ensemble.

- On nodeB, extract ZooKeeper to /opt

tar -zxf zookeeper-3.4.14.tar.gz -C /opt

- On nodeB, configure zoo.cfg

cd /opt/zookeeper-3.4.14/conf
cp zoo_sample.cfg zoo.cfg
vim zoo.cfg
# Set:
dataDir=/var/lossdate/zookeeper/data
# Add:
server.1=nodeB:2881:3881
server.2=nodeC:2881:3881
server.3=nodeD:2881:3881

- On nodeB, create the data directory and the myid file (the number in myid must match this host's server.N line in zoo.cfg)

mkdir -p /var/lossdate/zookeeper/data
echo 1 > /var/lossdate/zookeeper/data/myid

- On nodeB, configure environment variables

vim /etc/profile
# Append:
export ZOOKEEPER_PREFIX=/opt/zookeeper-3.4.14
export PATH=$PATH:$ZOOKEEPER_PREFIX/bin
export ZOO_LOG_DIR=/var/lossdate/zookeeper/log

- On nodeB, apply the configuration

. /etc/profile

- On nodeB, copy /opt/zookeeper-3.4.14 to nodeC and nodeD

scp -r /opt/zookeeper-3.4.14/ nodeC:/opt
scp -r /opt/zookeeper-3.4.14/ nodeD:/opt

- Configure nodeC

# Configure environment variables
vim /etc/profile
# Append at the end:
export ZOOKEEPER_PREFIX=/opt/zookeeper-3.4.14
export PATH=$PATH:$ZOOKEEPER_PREFIX/bin
export ZOO_LOG_DIR=/var/lossdate/zookeeper/log
# Apply the configuration
. /etc/profile
# Create the data directory and myid file (nodeC is server.2)
mkdir -p /var/lossdate/zookeeper/data
echo 2 > /var/lossdate/zookeeper/data/myid

- Configure nodeD

# Configure environment variables
vim /etc/profile
# Append at the end:
export ZOOKEEPER_PREFIX=/opt/zookeeper-3.4.14
export PATH=$PATH:$ZOOKEEPER_PREFIX/bin
export ZOO_LOG_DIR=/var/lossdate/zookeeper/log
# Apply the configuration
. /etc/profile
# Create the data directory and myid file (nodeD is server.3)
mkdir -p /var/lossdate/zookeeper/data
echo 3 > /var/lossdate/zookeeper/data/myid

- Start ZooKeeper

# Start ZooKeeper on nodeB, nodeC, and nodeD
zkServer.sh start
# Check ZooKeeper's status on each node
zkServer.sh status
# One node should report Mode: leader and the other two Mode: follower
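Two things are worth checking in the ZooKeeper steps above: that each node's myid matches its `server.N` line, and which node became leader. A minimal bash sketch of both, assuming the `server.N=<hostname>:2881:3881` layout used above (hostnames, not IPs) and that `nc` is installed; `myid_for_host` and `zk_mode` are hypothetical helper names. `zk_mode` parses the output of ZooKeeper's `srvr` four-letter command, which is the command `zkServer.sh status` itself relies on.

```shell
#!/usr/bin/env bash
# Sketch of two ZooKeeper helpers (hypothetical names).

# myid_for_host CFG HOST: print N from the "server.N=HOST:..." line in zoo.cfg,
# i.e. the value HOST's myid file should contain. Assumes hostnames, not IPs,
# because '.' is used as a field separator.
myid_for_host() {
  local cfg="$1" host="$2"
  # "server.1=nodeB:2881:3881" split on '.', '=' and ':' gives $2=1, $3=nodeB
  awk -F'[.=:]' -v h="$host" '$1 == "server" && $3 == h { print $2; exit }' "$cfg"
}

# zk_mode: read "srvr" output on stdin and print the Mode value
# (leader, follower or standalone).
zk_mode() {
  awk -F': ' '$1 == "Mode" { print $2 }'
}
```

For example, `myid_for_host /opt/zookeeper-3.4.14/conf/zoo.cfg nodeC` prints 2, the value written to nodeC's myid, and `for h in nodeB nodeC nodeD; do echo "$h: $(echo srvr | nc "$h" 2181 | zk_mode)"; done` should list one leader and two followers. Depending on the nc variant installed, nc may need an extra flag to close the connection after sending.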

4. Setting Up the Kafka Cluster

- On nodeB, extract Kafka to /opt

tar -zxf kafka_2.12-1.0.2.tgz -C /opt

- Configure environment variables on nodeB, nodeC, and nodeD

vim /etc/profile
# Append at the end:
export KAFKA_HOME=/opt/kafka_2.12-1.0.2
export PATH=$PATH:$KAFKA_HOME/bin

- Apply the configuration on nodeB, nodeC, and nodeD

. /etc/profile

- On nodeB, configure server.properties

vim /opt/kafka_2.12-1.0.2/config/server.properties
# Set:
broker.id=0
listeners=PLAINTEXT://:9092
advertised.listeners=PLAINTEXT://nodeB:9092
log.dirs=/var/lossdate/kafka/kafka-logs
# The /myKafka suffix is a ZooKeeper chroot: all of this cluster's znodes live under /myKafka
zookeeper.connect=nodeB:2181,nodeC:2181,nodeD:2181/myKafka
# Leave everything else at the defaults

- Copy the Kafka directory from nodeB to nodeC and nodeD

scp -r /opt/kafka_2.12-1.0.2/ nodeC:/opt
scp -r /opt/kafka_2.12-1.0.2/ nodeD:/opt

- On nodeC, configure server.properties (every broker needs a unique broker.id)

vim /opt/kafka_2.12-1.0.2/config/server.properties
# Set:
broker.id=1
listeners=PLAINTEXT://:9092
advertised.listeners=PLAINTEXT://nodeC:9092
log.dirs=/var/lossdate/kafka/kafka-logs
zookeeper.connect=nodeB:2181,nodeC:2181,nodeD:2181/myKafka
# Leave everything else at the defaults

- On nodeD, configure server.properties

vim /opt/kafka_2.12-1.0.2/config/server.properties
# Set:
broker.id=2
listeners=PLAINTEXT://:9092
advertised.listeners=PLAINTEXT://nodeD:9092
log.dirs=/var/lossdate/kafka/kafka-logs
zookeeper.connect=nodeB:2181,nodeC:2181,nodeD:2181/myKafka
# Leave everything else at the defaults

- Start Kafka on each of nodeB, nodeC, and nodeD

kafka-server-start.sh /opt/kafka_2.12-1.0.2/config/server.properties
# Or start it in the background:
kafka-server-start.sh -daemon /opt/kafka_2.12-1.0.2/config/server.properties

- Verify

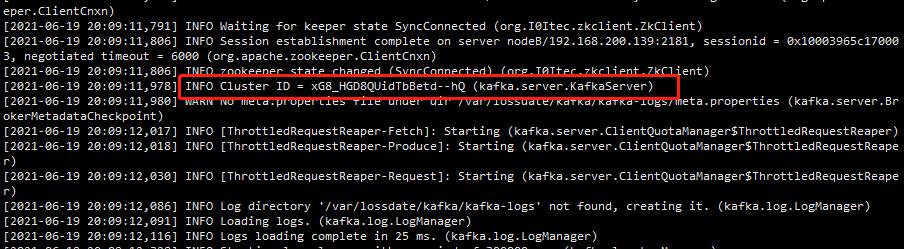

1) Check that the Cluster Id is the same on every broker

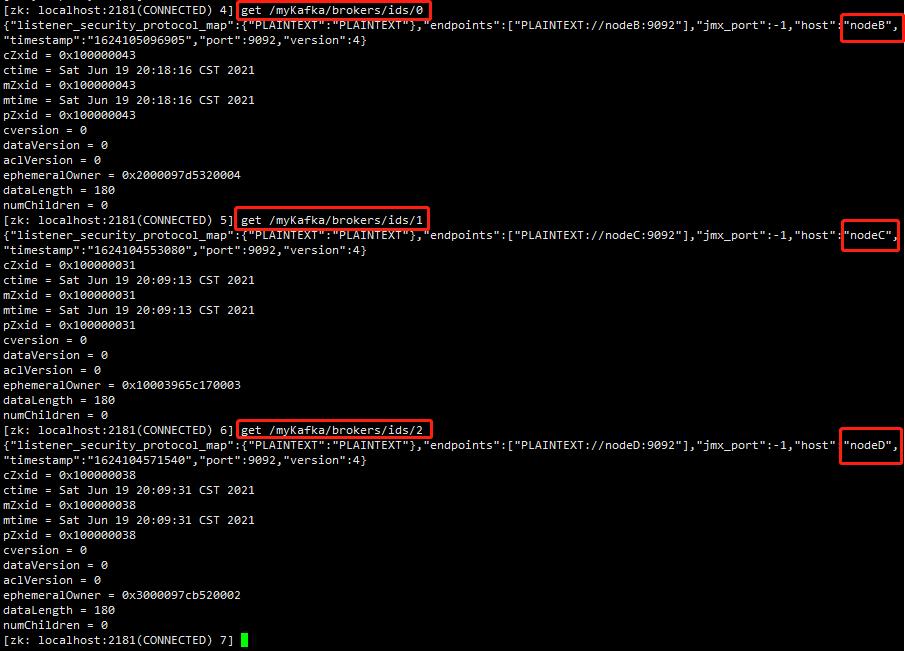

2) Check each broker's registration in ZooKeeper:

zkCli.sh
# Inside the zkCli shell:
get /myKafka/brokers/ids/0
get /myKafka/brokers/ids/1
get /myKafka/brokers/ids/2
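The three server.properties files above differ only in broker.id and advertised.listeners. A minimal bash sketch of applying those per-node values without an editor, assuming GNU sed (for `sed -i` and the `0,/re/` address) and values that contain no `|` or `&`; `set_prop` is a hypothetical helper, not a Kafka script. It replaces an existing line for the key, including a commented-out default such as `#listeners=...`, or appends the key if it is missing.

```shell
#!/usr/bin/env bash
# Sketch: set key=value in a .properties file such as server.properties.
# An existing line for the key (even a commented-out "#key=...") is
# replaced; otherwise the pair is appended. Assumes GNU sed and values
# without '|' or '&' (both are special in the sed command below).
set_prop() {
  local file="$1" key="$2" value="$3"
  local key_re
  # Escape '.' so "broker.id" only matches a literal dot.
  key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
  if grep -Eq "^[#[:space:]]*${key_re}=" "$file"; then
    # 0,/re/ limits the substitution to the first matching line;
    # '|' is the delimiter because values like PLAINTEXT://nodeB:9092 contain '/'.
    sed -i -E "0,/^[#[:space:]]*${key_re}=/s|^[#[:space:]]*${key_re}=.*|${key}=${value}|" "$file"
  else
    printf '%s=%s\n' "$key" "$value" >> "$file"
  fi
}
```

On nodeC, for example: `cfg=/opt/kafka_2.12-1.0.2/config/server.properties; set_prop "$cfg" broker.id 1; set_prop "$cfg" advertised.listeners PLAINTEXT://nodeC:9092`.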

5. Monitoring Metrics

- Enable the JMX port for Kafka on nodeB, nodeC, and nodeD

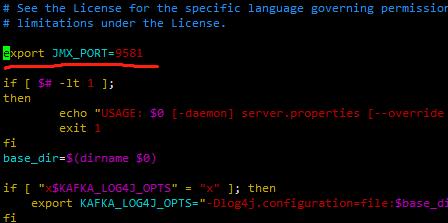

vim /opt/kafka_2.12-1.0.2/bin/kafka-server-start.sh
# Add this line before the script's final exec line (anything placed after exec never runs):
export JMX_PORT=9581

- Restart Kafka on nodeB, nodeC, and nodeD

kafka-server-stop.sh
kafka-server-start.sh /opt/kafka_2.12-1.0.2/config/server.properties

- Verify

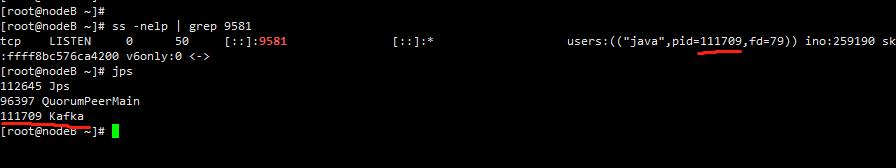

ss -nelp | grep 9581

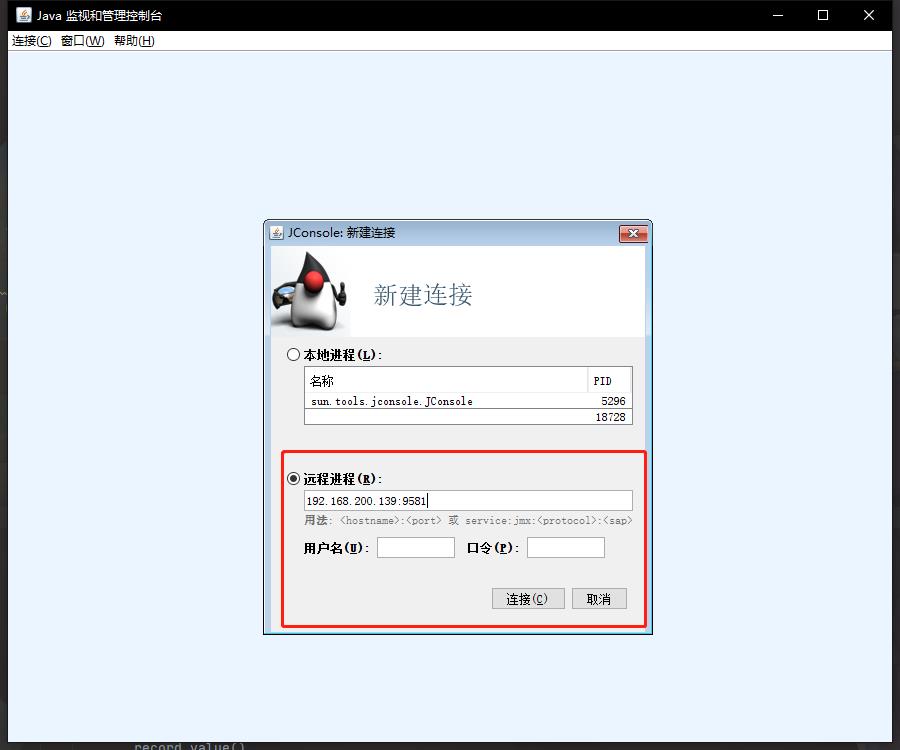

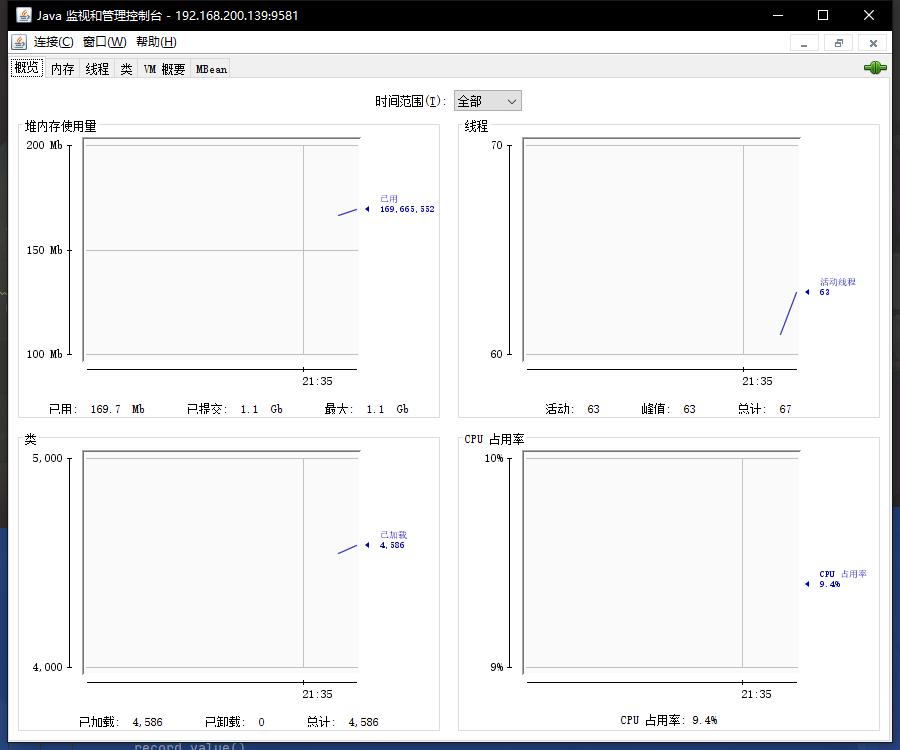

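- Connect to the JMX port with JConsole

On Windows: open cmd and run jconsole, then choose Remote Process and enter a broker's address and JMX port, e.g. 192.168.200.139:9581

No username or password has been configured, so leave both fields empty.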
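The "add `export JMX_PORT=9581`" step needs care: kafka-server-start.sh ends by `exec`-ing kafka-run-class.sh, so a line appended after that point never runs. A minimal bash sketch that inserts the export above that line instead, assuming GNU sed and that the script's final command starts with `exec ` at the beginning of a line (as in the Kafka 1.0.2 script); `enable_jmx_port` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: insert "export JMX_PORT=<port>" above the final "exec" line of
# kafka-server-start.sh. Lines appended after that exec never run, since
# exec replaces the shell with kafka-run-class.sh.
# enable_jmx_port is a hypothetical helper; assumes GNU sed (one-line "i").
enable_jmx_port() {
  local script="$1" port="${2:-9581}"
  # Idempotent: do nothing if an export is already present.
  if grep -q '^export JMX_PORT=' "$script"; then
    return 0
  fi
  if ! grep -q '^exec ' "$script"; then
    echo "no line starting with 'exec ' in $script" >&2
    return 1
  fi
  # Insert the export line before each line starting with "exec "
  # (kafka-server-start.sh has exactly one).
  sed -i "/^exec /i export JMX_PORT=${port}" "$script"
}
```

Run `enable_jmx_port /opt/kafka_2.12-1.0.2/bin/kafka-server-start.sh` on each node, then restart the broker and check the port with `ss -nelp | grep 9581`.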

6. Kafka Eagle Monitoring Tool

- Enable JMX on nodeB, nodeC, and nodeD

Skip this step if JMX was already enabled in section 5 above.

vim /opt/kafka_2.12-1.0.2/bin/kafka-server-start.sh
# Add before the script's final exec line:
export JMX_PORT=9581
# Restart Kafka on nodeB, nodeC, and nodeD
kafka-server-stop.sh
kafka-server-start.sh /opt/kafka_2.12-1.0.2/config/server.properties

- Download kafka-eagle

wget http://pkgs-linux.cvimer.com/kafka-eagle.zip

- Configure kafka-eagle

# Run this in /root so the paths match KE_HOME below
unzip kafka-eagle.zip
cd kafka-eagle/kafka-eagle-web/target
mkdir -p test
cp kafka-eagle-web-2.0.1-bin.tar.gz test/
cd test
tar xf kafka-eagle-web-2.0.1-bin.tar.gz
cd kafka-eagle-web-2.0.1

- Configure environment variables

vim /etc/profile
# Append:
export KE_HOME=/root/kafka-eagle/kafka-eagle-web/target/test/kafka-eagle-web-2.0.1
export PATH=$PATH:$KE_HOME/bin

- Apply the configuration

. /etc/profile

- Configure system-config.properties

cd $KE_HOME/conf
vim system-config.properties
# Change:
kafka.eagle.zk.cluster.alias=cluster1
cluster1.zk.list=nodeB:2181,nodeC:2181,nodeD:2181/myKafka
kafka.eagle.url=jdbc:sqlite:/root/hadoop/kafka-eagle/db/ke.db

- Create the database directory

mkdir -p /root/hadoop/kafka-eagle/db

- Configure kafka-run-class.sh

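# On nodeB, nodeC, and nodeD, edit kafka-run-class.sh
vim /opt/kafka_2.12-1.0.2/bin/kafka-run-class.sh
# Add at the top of the file (just below the #!/bin/bash line):
JMX_PORT=9581
# Note: every Kafka tool started through kafka-run-class.sh (kafka-topics.sh,
# the console producer and consumer, etc.) will then also try to bind port 9581,
# which fails while the broker on the same host already holds it.

- Start

# Start Kafka
kafka-server-stop.sh
kafka-server-start.sh -daemon /opt/kafka_2.12-1.0.2/config/server.properties
# Start Kafka Eagle
ke.sh start

- Access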

URL: http://192.168.200.139:8048

Username: admin

Password: 123456
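The kafka.eagle.url setting and the `mkdir -p` step must name the same directory, because SQLite does not create missing parent directories for ke.db. A minimal bash sketch that derives the directory from system-config.properties instead of typing it twice, assuming an SQLite URL of the form `jdbc:sqlite:/path/to/ke.db`; `ke_db_dir` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: print the directory that holds the SQLite database named by
# kafka.eagle.url in system-config.properties (hypothetical helper).
ke_db_dir() {
  local cfg="$1" url
  # Ignore commented lines; if the key appears more than once,
  # this sketch takes the last uncommented occurrence.
  url=$(grep -E '^[[:space:]]*kafka\.eagle\.url=' "$cfg" | tail -n 1 | cut -d= -f2-)
  case "$url" in
    jdbc:sqlite:*)
      # jdbc:sqlite:/root/hadoop/kafka-eagle/db/ke.db -> /root/hadoop/kafka-eagle/db
      dirname "${url#jdbc:sqlite:}"
      ;;
    *)
      echo "kafka.eagle.url is not a jdbc:sqlite URL: ${url:-<unset>}" >&2
      return 1
      ;;
  esac
}
```

With KE_HOME set as above, the directory step becomes `mkdir -p "$(ke_db_dir "$KE_HOME/conf/system-config.properties")"`.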
