100 Deep Learning Examples - Recognizing Sign Language with a Convolutional Neural Network (Inception V3) | Day 13

Posted by K同学啊


🔱 Hi everyone, I'm 👉 K同学啊. The "100 Deep Learning Examples" series is updated regularly. Likes 👍, bookmarks ⭐, and follows 👀 are welcome.

This article uses the Inception V3 model to recognize sign language. The focus is on understanding the structure of Inception V3 and how to build it.

I. Preparation

My environment:

- Language: Python 3.6.5
- Editor: Jupyter Notebook
- Deep learning framework: TensorFlow 2.4.1

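Recommended reading:

✨ This is impressive: I used it to recognize traffic signs and reached 97.9% accuracy
✨ Convolutional neural network (AlexNet) step by step - 100 Deep Learning Examples | Day 11
✨ Recurrent neural network (LSTM) for stock prediction - 100 Deep Learning Examples | Day 10
✨ 100 Deep Learning Examples - Convolutional neural network (CNN) for MNIST handwritten digit recognition | Day 1

🚀 From the column: "100 Deep Learning Examples"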

1. Configure the GPU

If you are running on a CPU, you can comment out this part of the code.

import tensorflow as tf

gpus = tf.config.list_physical_devices("GPU")

if gpus:
    tf.config.experimental.set_memory_growth(gpus[0], True)  # allocate GPU memory on demand
    tf.config.set_visible_devices([gpus[0]], "GPU")

2. Import the data

import matplotlib.pyplot as plt

# Font settings so that Chinese text renders in plots
plt.rcParams['font.sans-serif'] = ['SimHei']  # display Chinese labels correctly
plt.rcParams['axes.unicode_minus'] = False  # display the minus sign correctly

import os, PIL, pathlib

# Set random seeds so that results are as reproducible as possible
import numpy as np
np.random.seed(1)

import tensorflow as tf
tf.random.set_seed(1)

from tensorflow import keras
from tensorflow.keras import layers, models

data_dir = "D:/jupyter notebook/DL-100-days/datasets/gestures"
data_dir = pathlib.Path(data_dir)

3. Inspect the data

image_count = len(list(data_dir.glob('*/*')))

print("Total number of images:", image_count)

Total number of images: 12547
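The glob pattern `*/*` matches every file one level below the dataset root, so the same traversal can also report how many images each class folder holds. The sketch below is illustrative only: `count_images_per_class` is a hypothetical helper that is not part of the original notebook, and the demo runs on a throwaway directory with made-up file names.

```python
import pathlib
import tempfile
from collections import Counter


def count_images_per_class(data_dir):
    """Count files under data_dir/<class_name>/<file>, keyed by class name."""
    counts = Counter()
    for path in pathlib.Path(data_dir).glob("*/*"):
        if path.is_file():
            counts[path.parent.name] += 1
    return dict(sorted(counts.items()))


# Demo on a temporary directory tree (hypothetical file names).
with tempfile.TemporaryDirectory() as tmp:
    for name, n in [("a", 3), ("b", 2)]:
        class_dir = pathlib.Path(tmp, name)
        class_dir.mkdir()
        for i in range(n):
            (class_dir / f"img_{i}.jpg").touch()
    print(count_images_per_class(tmp))  # {'a': 3, 'b': 2}
```

Calling `count_images_per_class(data_dir)` on the real dataset would show whether every class really has a similar number of samples.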

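II. Data preprocessing

This article recognizes the sign-language poses for 24 English letters (the signs for the other two letters are motions rather than static poses). Each pose has more than 500 images.

1. Load the data

Use the image_dataset_from_directory method to load the data from disk into a tf.data.Dataset.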

batch_size = 8

img_height = 224

img_width = 224

If you are on TensorFlow 2.2.0, you may hit the error module 'tensorflow.keras.preprocessing' has no attribute 'image_dataset_from_directory'. Upgrading TensorFlow fixes it.
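A quick way to catch this before running the notebook is to compare the installed version against the release that introduced the function. The sketch below assumes that release is TensorFlow 2.3, which is my understanding rather than something the article states. Both helper names are hypothetical.

```python
def version_tuple(version):
    """Turn '2.4.1' or '2.3.0-rc1' into (major, minor) as integers."""
    major, minor = version.split(".")[:2]
    return int(major), int(minor)


def has_image_dataset_from_directory(version):
    # Assumption: image_dataset_from_directory first shipped in TensorFlow 2.3.
    return version_tuple(version) >= (2, 3)


print(has_image_dataset_from_directory("2.2.0"))  # False
print(has_image_dataset_from_directory("2.4.1"))  # True
```

In a notebook this would be called as `has_image_dataset_from_directory(tf.__version__)`. Comparing integer tuples instead of raw strings keeps versions such as 2.10 ordered correctly.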

"""

For a detailed introduction to image_dataset_from_directory(), see: https://mtyjkh.blog.csdn.net/article/details/117018789
"""
train_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.2,
    subset="training",
    seed=123,
    image_size=(img_height, img_width),
    batch_size=batch_size)

Found 12547 files belonging to 24 classes.

Using 10038 files for training.

val_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.2,
    subset="validation",
    seed=123,
    image_size=(img_height, img_width),
    batch_size=batch_size)

Found 12547 files belonging to 24 classes.

Using 2509 files for validation.
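The two file counts in the log follow directly from validation_split=0.2. The sketch below reproduces the arithmetic under two assumptions of mine: the validation size is the split fraction times the total with the fractional part dropped, and the last, smaller batch is kept. `split_counts` and `batches_per_epoch` are hypothetical helper names.

```python
import math


def split_counts(total, validation_split):
    """Return (training_count, validation_count) for a split fraction."""
    num_val = int(validation_split * total)  # fractional part is dropped
    return total - num_val, num_val


def batches_per_epoch(num_samples, batch_size):
    """Number of batches when the last, smaller batch is kept."""
    return math.ceil(num_samples / batch_size)


train_count, val_count = split_counts(12547, 0.2)
print(train_count, val_count)  # 10038 2509
print(batches_per_epoch(train_count, 8), batches_per_epoch(val_count, 8))  # 1255 314
```

With batch_size = 8, one epoch therefore iterates over roughly 1255 training batches, which is worth knowing when reading the training progress bar.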

class_names returns the dataset's labels. The labels correspond to the directory names in alphabetical order.

class_names = train_ds.class_names

print(class_names)

['a', 'b', 'c', 'd', 'e', 'f', 'g', 'h', 'i', 'k', 'l', 'm', 'n', 'o', 'p', 'q', 'r', 's', 't', 'u', 'v', 'w', 'x', 'y']
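Because the list skips 'j' and 'z', the integer label is not simply the letter's position in the alphabet. The sketch below rebuilds the same 24 names in plain Python and shows the mapping in both directions. `label_of` and `decode` are hypothetical names used only for illustration.

```python
import string

# The 24 static letters: the alphabet without 'j' and 'z', sorted alphabetically.
class_names = sorted(set(string.ascii_lowercase) - {"j", "z"})

# Integer label for each class name, in the same order as the list.
label_of = {name: index for index, name in enumerate(class_names)}


def decode(label):
    """Map an integer label (for example an argmax over model outputs) to its letter."""
    return class_names[label]


print(len(class_names))           # 24
print(label_of["k"], decode(23))  # 9 y
```

Since 'j' is missing, 'k' takes label 9 instead of 10. Decoding predictions through class_names avoids that off-by-one mistake.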

2. Visualize the data

plt.figure(figsize=(10, 5))  # the figure is 10 wide and 5 high

for images, labels in train_ds.take(1):
    for i in range(8):
        ax = plt.subplot(2, 4, i + 1)
        plt.imshow(images[i].numpy().astype("uint8"))
        plt.title(class_names[labels[i]])
        plt.axis("off")

plt.imshow(images[1].numpy().astype("uint8"))

3. Check the data again

for image_batch, labels_batch in train_ds:
    print(image_batch.shape)
    print(labels_batch.shape)
    break

(8, 224, 224, 3)

(8,)

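image_batch is a tensor of shape (8, 224, 224, 3): a batch of 8 images, each 224x224x3 (the last dimension is the RGB color channels). labels_batch is a tensor of shape (8,) holding the labels for those 8 images.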

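4. Configure the dataset

- shuffle(): shuffles the data. For details, see: https://zhuanlan.zhihu.com/p/42417456
- prefetch(): prefetches data to speed up training. My previous two articles explain it in detail.
- cache(): caches the dataset in memory to speed up training.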
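To give a feel for what a buffer-based shuffle does, here is a plain-Python sketch of the idea: fill a fixed-size buffer, then repeatedly emit a random element from it and refill that slot from the input. This is an illustration of the general technique, not TensorFlow's actual implementation, and `buffered_shuffle` is a hypothetical name.

```python
import random


def buffered_shuffle(items, buffer_size, seed=None):
    """Yield items in a randomized order using a fixed-size buffer."""
    if buffer_size < 1:
        raise ValueError("buffer_size must be at least 1")
    rng = random.Random(seed)
    buffer = []
    for item in items:
        if len(buffer) < buffer_size:
            buffer.append(item)  # still filling the buffer
            continue
        index = rng.randrange(buffer_size)
        yield buffer[index]      # emit a random buffered element
        buffer[index] = item     # refill the freed slot
    rng.shuffle(buffer)          # drain what is left in random order
    yield from buffer


print(list(buffered_shuffle(range(10), buffer_size=1)))  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
print(sorted(buffered_shuffle(range(10), buffer_size=4, seed=0)))  # same ten items, just reordered
```

A buffer of size 1 leaves the order unchanged, while a buffer at least as large as the dataset gives a full shuffle. As far as I understand, the dataset here is already batched, so a shuffle applied to it reorders whole batches rather than individual images.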

AUTOTUNE = tf.data.AUTOTUNE

train_ds = train_ds.cache().shuffle(1000).prefetch(buffer_size=AUTOTUNE)

val_ds = val_ds.cache().prefetch(buffer_size=AUTOTUNE)

If you get AttributeError: module 'tensorflow._api.v2.data' has no attribute 'AUTOTUNE', replace AUTOTUNE = tf.data.AUTOTUNE with AUTOTUNE = tf.data.experimental.AUTOTUNE.
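Since cache() keeps the decoded images in memory, it is worth estimating how much memory that takes before enabling it. The sketch below assumes each pixel value is stored as a 4-byte float32, which is my assumption about the loaded tensors. `cached_bytes` is a hypothetical helper.

```python
def cached_bytes(num_images, height, width, channels=3, bytes_per_value=4):
    """Approximate memory needed to hold all decoded images (assumes float32 values)."""
    return num_images * height * width * channels * bytes_per_value


train_bytes = cached_bytes(10038, 224, 224)
val_bytes = cached_bytes(2509, 224, 224)
print(f"train: {train_bytes / 2**30:.2f} GiB, val: {val_bytes / 2**30:.2f} GiB")
```

Under that assumption the training split alone needs roughly 5.6 GiB. On a machine with less free RAM, dropping cache() or passing a filename to cache to disk may be the safer choice.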

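III. Introduction to Inception V3

For an introduction to the Inception family, see: https://baike.baidu.com/item/Inception%E7%BB%93%E6%9E%84 . In my view, at this stage it is enough to walk through the model once (learn how to build it). You can come back and study it in detail later if needed.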

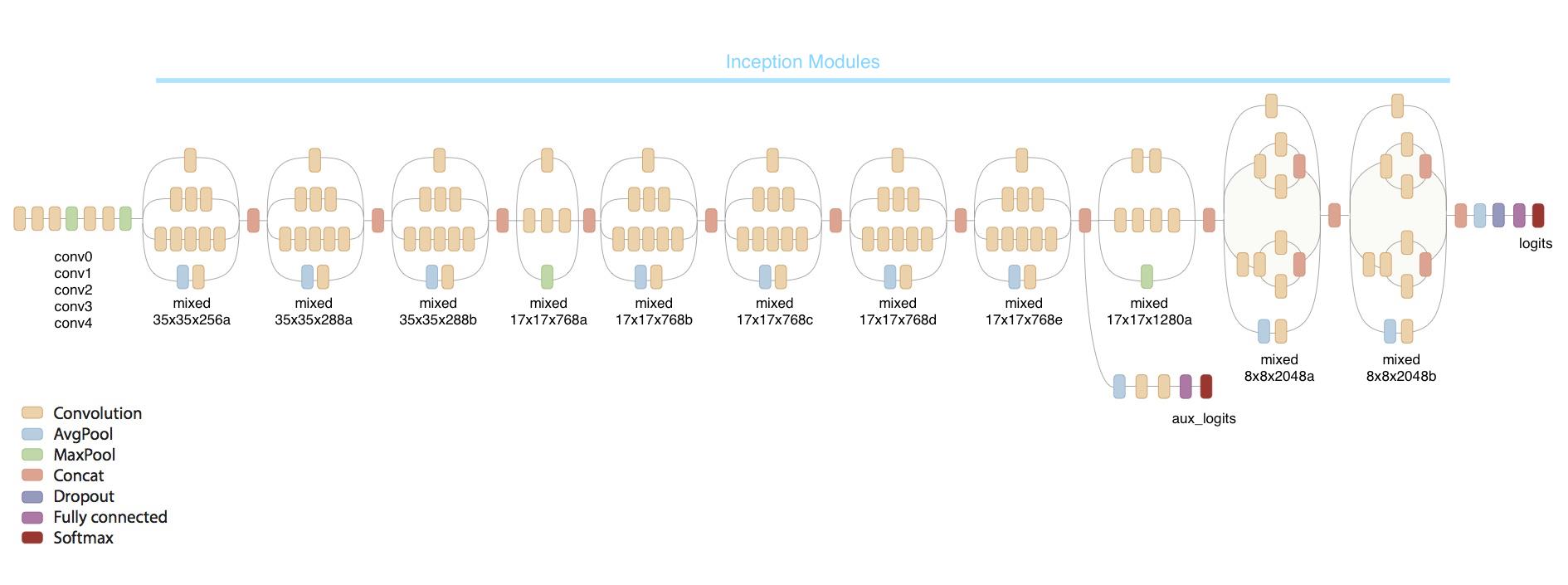

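This model is somewhat more complex than the ones I have written about before, so here is a diagram first to get an overall feel for it.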

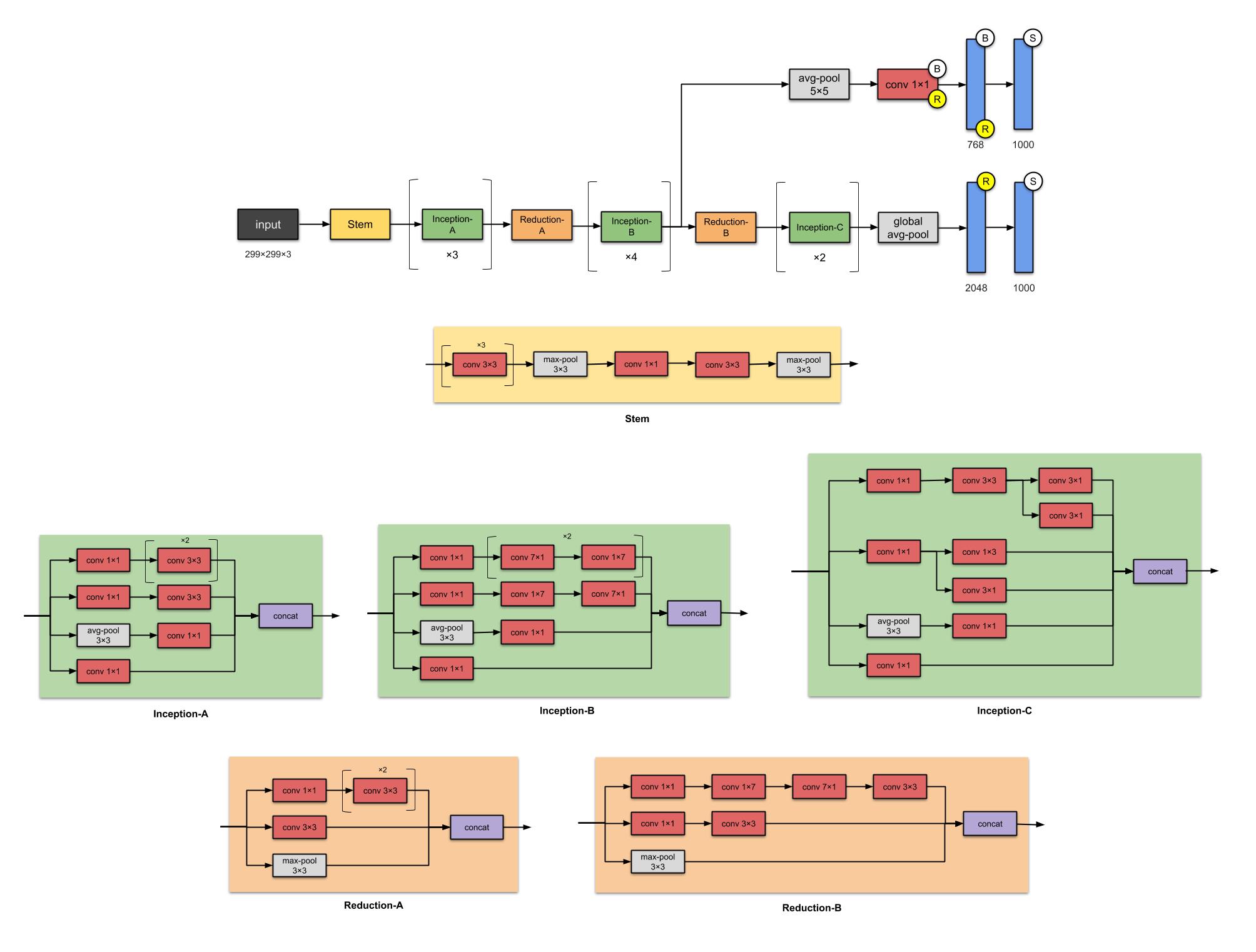

Here is another structure diagram with more detail.

If the convolution calculations above are still confusing, see my article "Convolution calculations".
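As a worked example of that calculation, the sketch below applies the usual output-size formulas to the stem of the network, using the kernel sizes, strides, and padding modes from the model code in section IV. The formulas are `(size - kernel) // stride + 1` for 'valid' padding and `ceil(size / stride)` for 'same' padding. `conv_output_size`, `STEM`, and `stem_output_size` are hypothetical names.

```python
def conv_output_size(size, kernel, stride=1, padding="valid"):
    """Spatial output size of a convolution or pooling layer along one dimension."""
    if padding == "same":
        return -(-size // stride)  # ceil(size / stride)
    return (size - kernel) // stride + 1


# (description, kernel, stride, padding) for the layers before the first Inception block.
STEM = [
    ("conv 3x3, stride 2", 3, 2, "valid"),
    ("conv 3x3", 3, 1, "valid"),
    ("conv 3x3", 3, 1, "same"),
    ("max pool 3x3, stride 2", 3, 2, "valid"),
    ("conv 1x1", 1, 1, "valid"),
    ("conv 3x3", 3, 1, "valid"),
    ("max pool 3x3, stride 2", 3, 2, "valid"),
]


def stem_output_size(input_size):
    """Feature-map width/height after the stem for a square input."""
    size = input_size
    for _description, kernel, stride, padding in STEM:
        size = conv_output_size(size, kernel, stride, padding)
    return size


print(stem_output_size(299))  # 35
print(stem_output_size(224))  # 25
```

The 35 x 35 sizes written in the comments of the model code match a 299x299 input. With the 224x224 images used in this article, the same layers yield a 25x25 feature map, and the later block sizes shrink accordingly.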

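IV. Building the Inception V3 network model

1. Build it yourself

The focus of this article is building the Inception V3 network model. You can try building Inception V3 yourself from the diagrams above. For this part I mainly followed the official implementation and pulled it out into standalone code.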
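One check that helps when wiring the blocks by hand: the channel count after each concatenate is the sum of the branch channels. The sketch below uses the branch widths from the model code in this section. `concat_channels` is a hypothetical helper.

```python
def concat_channels(*branch_channels):
    """Channels after concatenating branches along the channel axis (axis=3)."""
    return sum(branch_channels)


mixed0 = concat_channels(64, 64, 96, 32)
mixed1 = concat_channels(64, 64, 96, 64)
mixed2 = concat_channels(64, 64, 96, 64)
# The max-pooling branch of mixed3 has no convolution, so it keeps the input channels.
mixed3 = concat_channels(384, 96, mixed2)
mixed4 = concat_channels(192, 192, 192, 192)
print(mixed0, mixed1, mixed2, mixed3, mixed4)  # 256 288 288 768 768
```

These totals agree with the shape comments in the code (256, 288, 288, 768, 768), which is a quick way to confirm that no branch was left out of a concatenate call.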

#=============================================================
# Inception V3 network
#=============================================================
from tensorflow.keras.models import Model
from tensorflow.keras import layers
from tensorflow.keras.layers import Activation,Dense,Input,BatchNormalization,Conv2D,AveragePooling2D
from tensorflow.keras.layers import GlobalAveragePooling2D,MaxPooling2D

def conv2d_bn(x,filters,num_row,num_col,padding='same',strides=(1, 1),name=None):
    # Conv2D (no bias) -> BatchNormalization (no scale) -> ReLU
    if name is not None:
        bn_name = name + '_bn'
        conv_name = name + '_conv'
    else:
        bn_name = None
        conv_name = None

    x = Conv2D(filters,(num_row, num_col),strides=strides,padding=padding,use_bias=False,name=conv_name)(x)
    x = BatchNormalization(scale=False, name=bn_name)(x)
    x = Activation('relu', name=name)(x)
    return x
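To see what use_bias=False and scale=False mean for model size, the sketch below counts the weights of one conv2d_bn unit. It assumes the standard Keras bookkeeping: the convolution has kernel weights only, and batch normalization without scale keeps one trainable offset plus a moving mean and a moving variance per filter. `conv2d_bn_params` is a hypothetical helper.

```python
def conv2d_bn_params(in_channels, filters, num_row, num_col):
    """Return (conv, bn_trainable, bn_non_trainable) weight counts for one conv2d_bn unit."""
    conv = num_row * num_col * in_channels * filters  # no bias term (use_bias=False)
    bn_trainable = filters                            # beta only, since scale=False
    bn_non_trainable = 2 * filters                    # moving mean and moving variance
    return conv, bn_trainable, bn_non_trainable


# First stem layer: 3 input channels, 32 filters, 3x3 kernel.
print(conv2d_bn_params(3, 32, 3, 3))  # (864, 32, 64)

# A 1x7 convolution followed by a 7x1 convolution versus a single 7x7 one (128 channels).
factorized = conv2d_bn_params(128, 128, 1, 7)[0] + conv2d_bn_params(128, 128, 7, 1)[0]
full = conv2d_bn_params(128, 128, 7, 7)[0]
print(factorized, full)  # 229376 802816
```

The second comparison suggests why the 17x17 blocks use 1x7 and 7x1 pairs: they need far fewer convolution weights than a single 7x7 kernel with the same channel widths.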

def InceptionV3(input_shape=[224,224,3],classes=1000):
    img_input = Input(shape=input_shape)

    x = conv2d_bn(img_input, 32, 3, 3, strides=(2, 2), padding='valid')
    x = conv2d_bn(x, 32, 3, 3, padding='valid')
    x = conv2d_bn(x, 64, 3, 3)
    x = MaxPooling2D((3, 3), strides=(2, 2))(x)

    x = conv2d_bn(x, 80, 1, 1, padding='valid')
    x = conv2d_bn(x, 192, 3, 3, padding='valid')
    x = MaxPooling2D((3, 3), strides=(2, 2))(x)

    #================================#
    # Block1 35x35
    #================================#
    # Block1 part1
    # 35 x 35 x 192 -> 35 x 35 x 256
    branch1x1 = conv2d_bn(x, 64, 1, 1)

    branch5x5 = conv2d_bn(x, 48, 1, 1)
    branch5x5 = conv2d_bn(branch5x5, 64, 5, 5)

    branch3x3dbl = conv2d_bn(x, 64, 1, 1)
    branch3x3dbl = conv2d_bn(branch3x3dbl, 96, 3, 3)
    branch3x3dbl = conv2d_bn(branch3x3dbl, 96, 3, 3)

    branch_pool = AveragePooling2D((3, 3), strides=(1, 1), padding='same')(x)
    branch_pool = conv2d_bn(branch_pool, 32, 1, 1)
    x = layers.concatenate([branch1x1, branch5x5, branch3x3dbl, branch_pool],axis=3,name='mixed0')

    # Block1 part2
    # 35 x 35 x 256 -> 35 x 35 x 288
    branch1x1 = conv2d_bn(x, 64, 1, 1)

    branch5x5 = conv2d_bn(x, 48, 1, 1)
    branch5x5 = conv2d_bn(branch5x5, 64, 5, 5)

    branch3x3dbl = conv2d_bn(x, 64, 1, 1)
    branch3x3dbl = conv2d_bn(branch3x3dbl, 96, 3, 3)
    branch3x3dbl = conv2d_bn(branch3x3dbl, 96, 3, 3)

    branch_pool = AveragePooling2D((3, 3), strides=(1, 1), padding='same')(x)
    branch_pool = conv2d_bn(branch_pool, 64, 1, 1)
    x = layers.concatenate([branch1x1, branch5x5, branch3x3dbl, branch_pool],axis=3,name='mixed1')

    # Block1 part3
    # 35 x 35 x 288 -> 35 x 35 x 288
    branch1x1 = conv2d_bn(x, 64, 1, 1)

    branch5x5 = conv2d_bn(x, 48, 1, 1)
    branch5x5 = conv2d_bn(branch5x5, 64, 5, 5)

    branch3x3dbl = conv2d_bn(x, 64, 1, 1)
    branch3x3dbl = conv2d_bn(branch3x3dbl, 96, 3, 3)
    branch3x3dbl = conv2d_bn(branch3x3dbl, 96, 3, 3)

    branch_pool = AveragePooling2D((3, 3), strides=(1, 1), padding='same')(x)
    branch_pool = conv2d_bn(branch_pool, 64, 1, 1)
    x = layers.concatenate([branch1x1, branch5x5, branch3x3dbl, branch_pool],axis=3,name='mixed2')

    #================================#
    # Block2 17x17
    #================================#
    # Block2 part1
    # 35 x 35 x 288 -> 17 x 17 x 768
    branch3x3 = conv2d_bn(x, 384, 3, 3, strides=(2, 2), padding='valid')

    branch3x3dbl = conv2d_bn(x, 64, 1, 1)
    branch3x3dbl = conv2d_bn(branch3x3dbl, 96, 3, 3)
    branch3x3dbl = conv2d_bn(branch3x3dbl, 96, 3, 3, strides=(2, 2), padding='valid')

    branch_pool = MaxPooling2D((3, 3), strides=(2, 2))(x)
    x = layers.concatenate([branch3x3, branch3x3dbl, branch_pool], axis=3, name='mixed3')

    # Block2 part2
    # 17 x 17 x 768 -> 17 x 17 x 768
    branch1x1 = conv2d_bn(x, 192, 1, 1)

    branch7x7 = conv2d_bn(x, 128, 1, 1)
    branch7x7 = conv2d_bn(branch7x7, 128, 1, 7)
    branch7x7 = conv2d_bn(branch7x7, 192, 7, 1)

    branch7x7dbl = conv2d_bn(x, 128, 1, 1)
    branch7x7dbl = conv2d_bn(branch7x7dbl, 128, 7, 1)
    branch7x7dbl = conv2d_bn(branch7x7dbl, 128, 1, 7)
    branch7x7dbl = conv2d_bn(branch7x7dbl, 128, 7, 1)
    branch7x7dbl = conv2d_bn(branch7x7dbl, 192, 1, 7)

    branch_pool = AveragePooling2D((3, 3), strides=(1, 1), padding='same')(x)
    branch_pool = conv2d_bn(branch_pool, 192, 1, 1)
    x = layers.concatenate([branch1x1, branch7x7, branch7x7dbl, branch_pool],axis=3,name='mixed4')

    # Block2 part3 and part4
    # 17 x 17 x 768 -> 17 x 17 x 768 -> 17 x 17 x 768
    for i in range(2):
        branch1x1 = conv2d_bn(x, 192, 1, 1)

        branch7x7 = conv2d_bn(x, 160, 1, 1)
        branch7x7 = conv2d_bn(branch7x7, 160, 1, 7)
        branch7x7 = conv2d_bn(branch7x7, 192, 7, 1)

        branch7x7dbl = conv2d_bn(x, 160, 1, 1)
        branch7x7dbl = conv2d_bn(branch7x7dbl, 160, 7, 1)
        branch7x7dbl = conv2d_bn(branch7x7dbl, 160, 1, 7)
        branch7x7dbl = conv2d_bn(branch7x7dbl, 160, 7, 1)
        branch7x7dbl = conv2d_bn(branch7x7dbl, 192, 1, 7)

        branch_pool = AveragePooling2D((3, 3), strides=(1, 1), padding='same')(x)
        branch_pool = conv2d_bn(branch_pool, 192, 1, 1)
        x = layers.concatenate([branch1x1, branch7x7, branch7x7dbl, branch_pool],axis=3,name='mixed' + str(5 + i))

    # Block2 part5
    # 17 x 17 x 768 -> 17 x 17 x 768
    branch1x1 = conv2d_bn(x, 192, 1, 1)

    branch7x7 = conv2d_bn(x, 192, 1, 1)
    branch7x7 = conv2d_bn(branch7x7, 192, 1, 7)
    branch7x7 = conv2d_bn(branch7x7, 192, 7, 1)

    branch7x7dbl = conv2d_bn(x, 192, 1, 1)
    branch7x7dbl = conv2d_bn(branch7x7dbl, 192, 7, 1)
    branch7x7dbl = conv2d_bn(branch7x7dbl, 192, 1, 7)
    branch7x7dbl = conv2d_bn(branch7x7dbl, 192, 7, 1)
    branch7x7dbl = conv2d_bn(branch7x7dbl, 192, 1, 7)

    branch_pool = AveragePooling2D((3, 3), strides=(1, 1), padding='same')(x)
    branch_pool = conv2d_bn(branch_pool, 192, 1, 1)
    x = layers.concatenate([branch1x1, branch7x7, branch7x7dbl, branch_pool],axis=3,name='mixed7')

    #================================#
    # Block3 8x8
    #================================#
    # Block3 part1
    # 17 x 17 x 768 -> 8 x 8 x 1280
    branch3x3 = conv2d_bn(x, 192, 1, 1)
    branch3x3 = conv2d_bn(branch3x3, 320, 3
