Big Data Fundamentals in Practice from a Beginner's Perspective: Common Operations on the Distributed Database HBase

Posted by 小生凡一



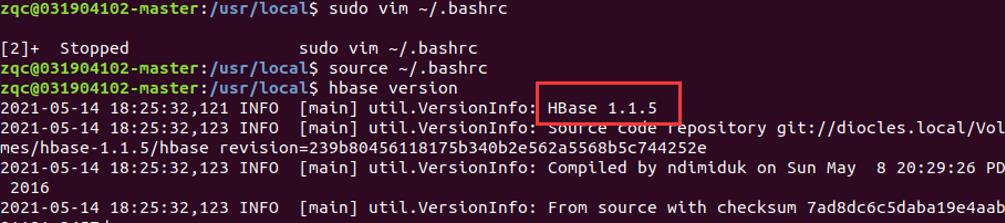

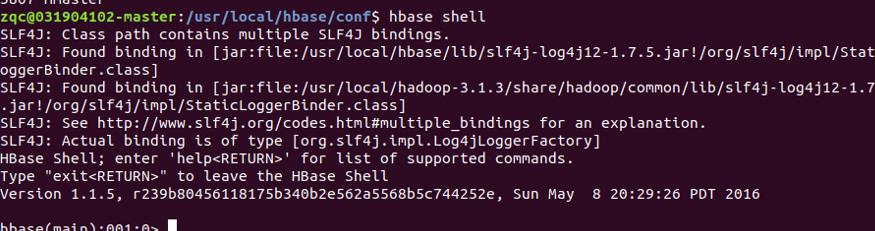

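1. Environment Configuration

⚫ Operating system: Linux (Ubuntu 18.04 recommended);

⚫ Hadoop version: 3.1.3;

⚫ JDK version: 1.8;

⚫ Java IDE: IDEA;

⚫ Hadoop configured in pseudo-distributed mode;

⚫ HBase version: 1.1.5.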

2. Procedure

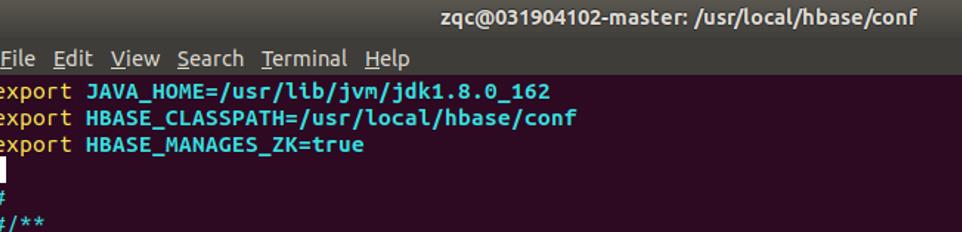

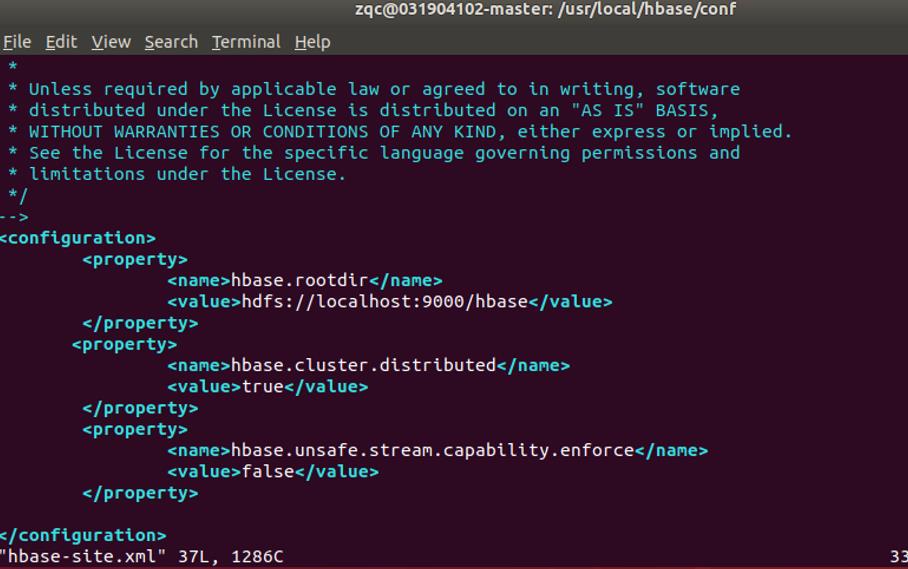

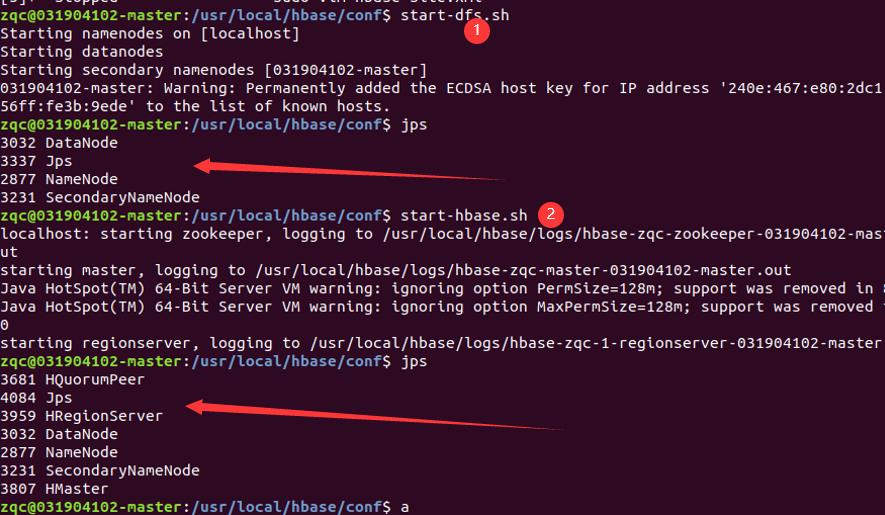

2.1 环境搭建

-

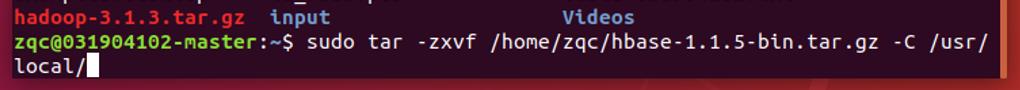

解压压缩包

-

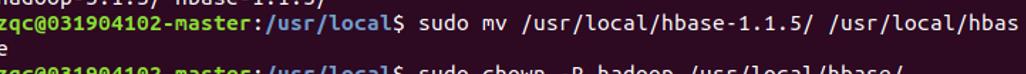

重命名并把权限赋予用户

-

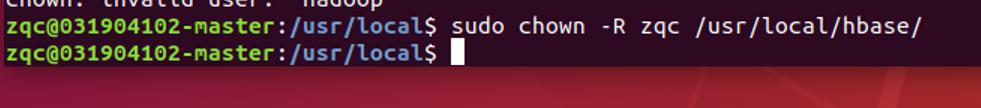

配置环境变量

-

注意一点启动完hadoop之后才能启动hbase

-

进入shell

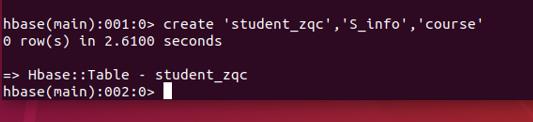

2.2 HBase Shell

Use HBase shell commands to complete the following tasks. Each screenshot must show both the command executed and its output:

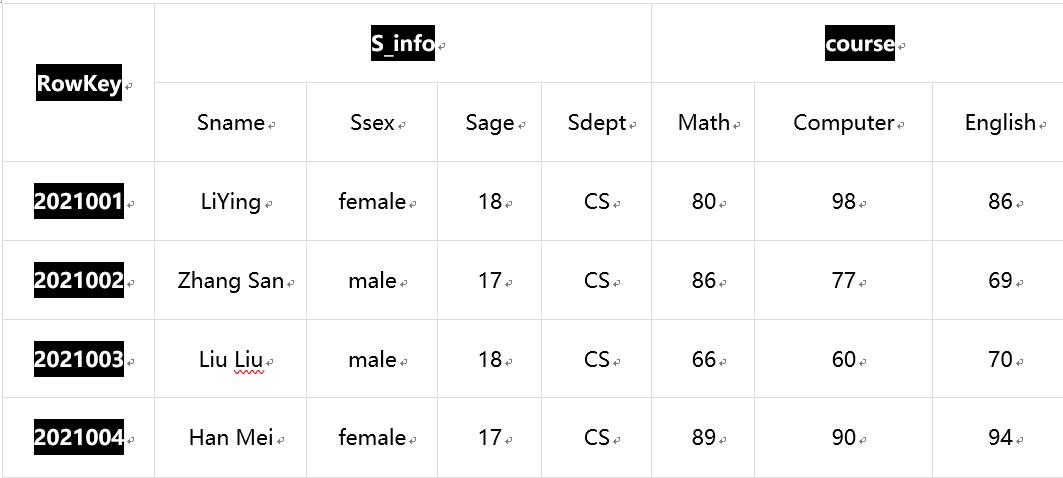

Table student_xxx:

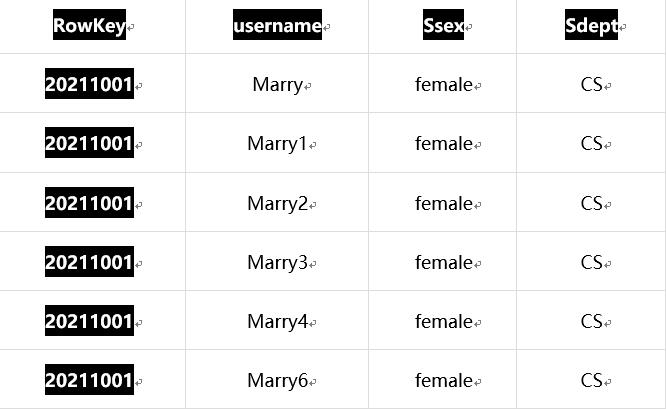

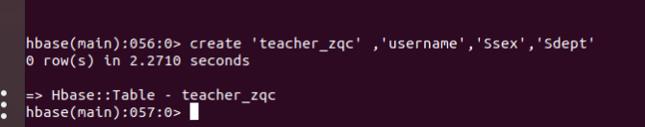

Table teacher_xxx

(1) Create the HBase tables student_xxx and teacher_xxx (each table name ends with the initials of your name);

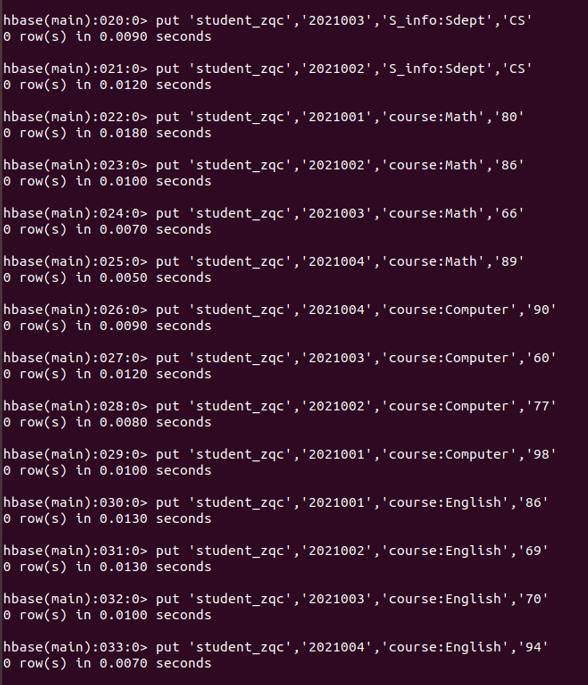

(2) Insert data into the student_xxx table;

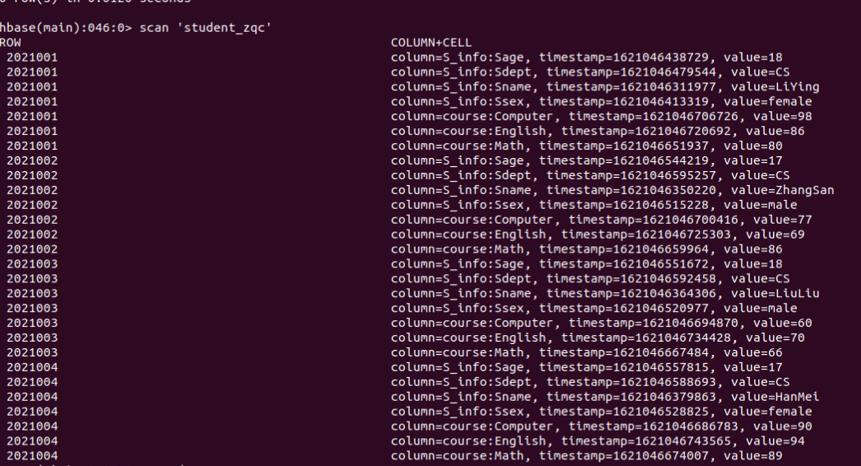

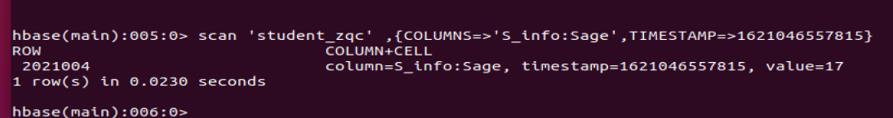

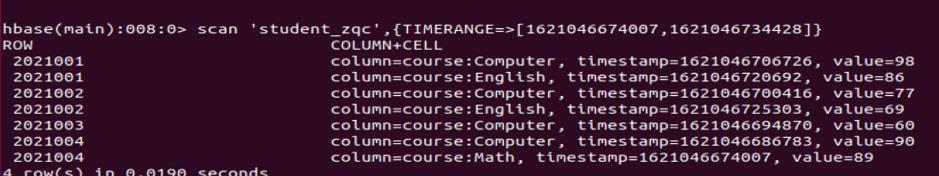

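(3) View the data in student_xxx three ways: all data, data at a specified timestamp, and data within a specified timestamp range;

All data

Specified timestamp

Specified timestamp range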

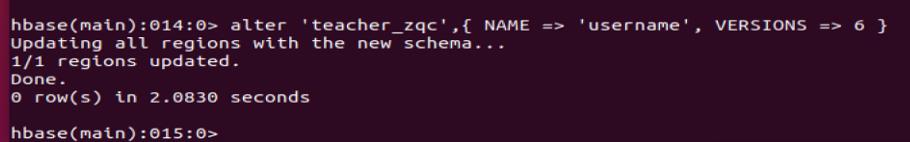

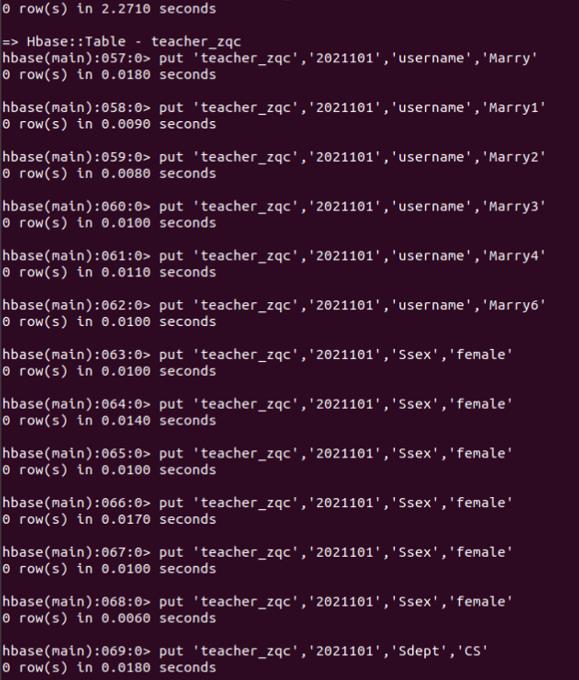
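The three queries in (3) differ only in which cell timestamps they accept. The sketch below is a hypothetical in-memory model written for illustration (the class and method names are not part of HBase). It assumes the documented behavior of HBase's `TimeRange`, where the lower bound is inclusive and the upper bound is exclusive, so a shell query such as `scan 'student_xxx', {TIMERANGE => [t1, t2]}` returns cells with `t1 <= timestamp < t2`.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical model (not the HBase API) of how a time-range query
 * selects cells: minStamp is inclusive, maxStamp is exclusive.
 */
final class TimeRangeModel {
    private TimeRangeModel() {}

    /** True if ts lies in the half-open interval [minStamp, maxStamp). */
    static boolean withinTimeRange(long ts, long minStamp, long maxStamp) {
        return ts >= minStamp && ts < maxStamp;
    }

    /** Keeps only the cell timestamps that a time-range scan would return. */
    static List<Long> filterByTimeRange(List<Long> cellTimestamps, long minStamp, long maxStamp) {
        List<Long> kept = new ArrayList<>();
        for (long ts : cellTimestamps) {
            if (withinTimeRange(ts, minStamp, maxStamp)) {
                kept.add(ts);
            }
        }
        return kept;
    }
}
```

With stored timestamps 100, 200 and 300 and the range [100, 300), only 100 and 200 are returned, so to include a particular timestamp t in a range query the upper bound has to be at least t + 1.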

(4) Change the VERSIONS setting of username in teacher_xxx to 6 or more, insert data following the teacher table below, and list all tables in HBase;

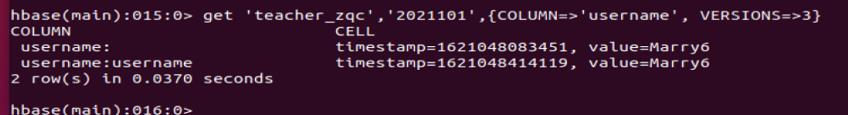
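VERSIONS is a per-column-family setting that caps how many timestamped values HBase keeps for each column of a row; in HBase 1.x the default is 1, which is why (4) raises it before older values can be queried. A command such as `alter 'teacher_xxx', NAME => 'username', VERSIONS => 6` does this, assuming `username` is a column family as the task implies. The following is a hypothetical model, not HBase code: it keeps only the newest `maxVersions` values of one column. In real HBase, surplus versions stop being returned by reads and are physically removed during compaction.

```java
import java.util.Collections;
import java.util.NavigableMap;
import java.util.TreeMap;

/**
 * Hypothetical model (not the HBase API) of one column in a family whose
 * VERSIONS setting is maxVersions: only the newest values are retained.
 */
final class VersionedColumn {
    // timestamp -> value, ordered newest first
    private final NavigableMap<Long, String> versions = new TreeMap<>(Collections.reverseOrder());
    private final int maxVersions;

    VersionedColumn(int maxVersions) {
        if (maxVersions < 1) {
            throw new IllegalArgumentException("maxVersions must be >= 1");
        }
        this.maxVersions = maxVersions;
    }

    /** Writes a value at ts, then drops the oldest versions beyond the limit. */
    void put(long ts, String value) {
        versions.put(ts, value);
        while (versions.size() > maxVersions) {
            versions.pollLastEntry(); // last entry = smallest (oldest) timestamp
        }
    }

    /** Newest value, like a plain get that returns a single version. */
    String latest() {
        return versions.isEmpty() ? null : versions.firstEntry().getValue();
    }

    /** Number of versions currently retained. */
    int size() {
        return versions.size();
    }
}
```

Writing eight values into a column limited to six versions leaves the six newest, and `latest()` returns the last one written.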

(5) View the data in teacher_xxx within a specific VERSIONS range;

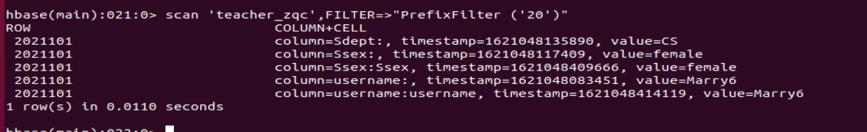
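When a get or scan asks for several versions, for example `get 'teacher_xxx', 'rowkey', {COLUMN => 'username', VERSIONS => 3}`, HBase returns up to that many versions of each column, newest first, and an optional TIMERANGE further restricts which versions are eligible. The method below is a hypothetical plain-Java sketch of that selection; the name `newestVersions` and its parameters are illustrative only.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/**
 * Hypothetical model (not the HBase API) of a read that asks for up to
 * maxVersions versions inside the time range [minStamp, maxStamp).
 */
final class VersionQuery {
    private VersionQuery() {}

    static List<Long> newestVersions(List<Long> stored, int maxVersions, long minStamp, long maxStamp) {
        List<Long> inRange = new ArrayList<>();
        for (long ts : stored) {
            if (ts >= minStamp && ts < maxStamp) {
                inRange.add(ts);
            }
        }
        // Versions come back newest first, so sort timestamps in descending order
        inRange.sort(Collections.reverseOrder());
        // Keep at most maxVersions of them
        return new ArrayList<>(inRange.subList(0, Math.min(maxVersions, inRange.size())));
    }
}
```

For stored versions 10 to 50, asking for 2 versions over the whole time range yields 50 and 40; asking for 3 within [15, 45) yields 40, 30 and 20.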

(6) Use any filter other than ValueFilter to view the values in teacher_xxx;

Rows whose row key starts with 20:

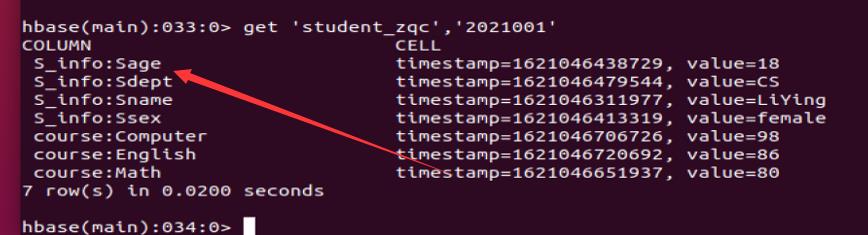

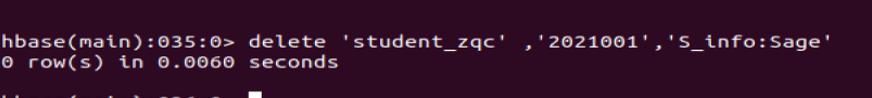

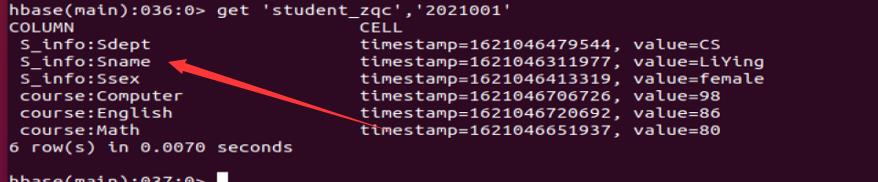
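In the shell, a prefix filter can be written as `scan 'teacher_xxx', {FILTER => "PrefixFilter('20')"}`. Without a start row, such a scan begins at the first row of the table; bounding it with the prefix as start row and the smallest key after all matching rows as stop row lets it read only the matching range. The sketch below computes that stop row in plain Java (class and method names are hypothetical). To our knowledge HBase's `Scan.setRowPrefixFilter` applies the same idea internally, but treat that as an assumption.

```java
import java.util.Arrays;

/**
 * Sketch: turn a row-key prefix into a [startRow, stopRow) range.
 * Pure Java, no HBase classes.
 */
final class PrefixRange {
    private PrefixRange() {}

    /**
     * Smallest key greater than every key starting with prefix: drop
     * trailing 0xFF bytes, then increment the last remaining byte.
     * Returns an empty array ("scan to the end") if prefix is all 0xFF.
     */
    static byte[] stopRowFor(byte[] prefix) {
        int end = prefix.length;
        while (end > 0 && prefix[end - 1] == (byte) 0xFF) {
            end--;
        }
        if (end == 0) {
            return new byte[0];
        }
        byte[] stop = Arrays.copyOf(prefix, end);
        stop[end - 1]++; // unsigned increment; cannot overflow since it was not 0xFF
        return stop;
    }

    /** Byte-wise prefix check, the test a prefix filter applies to each row key. */
    static boolean hasPrefix(byte[] row, byte[] prefix) {
        if (row.length < prefix.length) {
            return false;
        }
        for (int i = 0; i < prefix.length; i++) {
            if (row[i] != prefix[i]) {
                return false;
            }
        }
        return true;
    }
}
```

For the prefix `20` the stop row is `21`, so the range [`20`, `21`) covers exactly the keys beginning with `20`.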

(7) Delete data from an HBase table;

- View the data before deletion.
- Delete Sage.
- Confirm that Sage is gone.

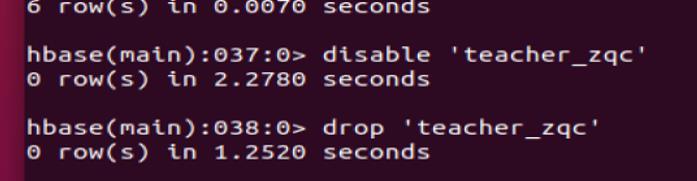
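Deleting a value such as Sage does not erase it on the spot: HBase writes a delete marker (tombstone) that hides the matching cells until a major compaction removes them. The hypothetical model below shows the two column-level marker scopes offered by HBase's Java `Delete` class: `addColumns(family, qualifier, ts)` hides every version with a timestamp at or below `ts`, while `addColumn(family, qualifier, ts)` hides only the version at exactly `ts`. The class and method names here are illustrative, not HBase API.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical model (not the HBase API) of which versions of one column
 * stay visible after a delete marker at markerTs is written.
 */
final class DeleteMarkerModel {
    private DeleteMarkerModel() {}

    /** All-versions marker (like Delete.addColumns): hides versions with ts <= markerTs. */
    static List<Long> visibleAfterColumnDelete(List<Long> versions, long markerTs) {
        List<Long> visible = new ArrayList<>();
        for (long ts : versions) {
            if (ts > markerTs) {
                visible.add(ts);
            }
        }
        return visible;
    }

    /** Single-version marker (like Delete.addColumn with a timestamp): hides only ts == markerTs. */
    static List<Long> visibleAfterVersionDelete(List<Long> versions, long markerTs) {
        List<Long> visible = new ArrayList<>();
        for (long ts : versions) {
            if (ts != markerTs) {
                visible.add(ts);
            }
        }
        return visible;
    }
}
```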

(8) Delete a table from HBase;

To delete a table through the HBase shell, first disable the table and then drop it:

disable 'tablename'

drop 'tablename'

Check whether the table still exists:

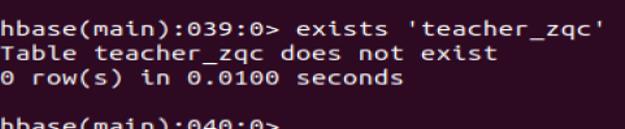

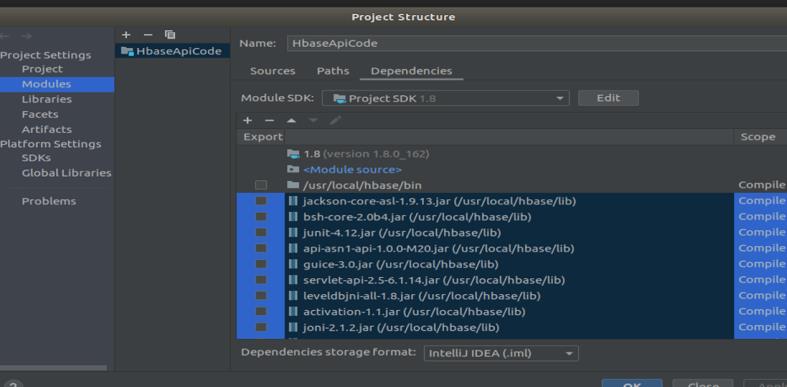

2.3 Java Api

利用Java API编程实现Hbase的相关操作,要求在实验报告中附上完整的源代码以及程序运行前后的Hbase表和数据的情况的截图:

导入所需要的jar包

callrecord_xxx表

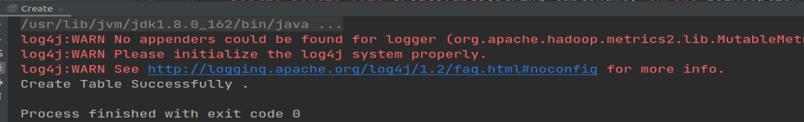

(1) 创建Hbase中的数据表callrecord_xxx(表名称以姓名拼音首字母结尾);

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.HColumnDescriptor;
import org.apache.hadoop.hbase.HTableDescriptor;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Admin;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import java.io.IOException;

public class Create {
    public static Configuration configuration;
    public static Connection connection;
    public static Admin admin;

    // Open a connection to HBase
    public static void init() {
        configuration = HBaseConfiguration.create();
        configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
        try {
            connection = ConnectionFactory.createConnection(configuration);
            admin = connection.getAdmin();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Close the connection
    public static void close() {
        try {
            if (admin != null) {
                admin.close();
            }
            if (connection != null) {
                connection.close();
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    public static void CreateTable(String tableName) throws IOException {
        TableName name = TableName.valueOf(tableName);
        if (admin.tableExists(name)) {
            System.out.println("Table Exists!!!");
        } else {
            HTableDescriptor tableDesc = new HTableDescriptor(name);
            // One column family is enough: calltime, calltype, phonebrand,
            // callplace and callsecond are column qualifiers inside "baseinfo"
            // that are created when data is written (see Insert), not families.
            tableDesc.addFamily(new HColumnDescriptor("baseinfo"));
            admin.createTable(tableDesc);
            System.out.println("Create Table Successfully .");
        }
    }

    public static void main(String[] args) {
        String tableName = "callrecord_zqc";
        try {
            init();
            CreateTable(tableName);
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            // Release the connection even if table creation fails
            close();
        }
    }
}

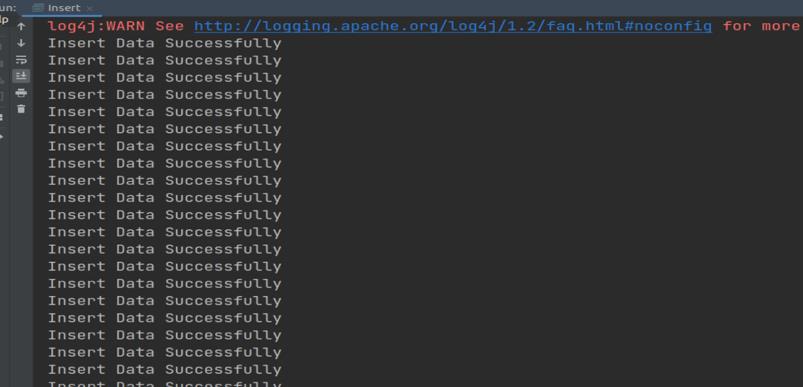
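In HBase, column families are fixed when a table is created, while column qualifiers are free-form and come into existence simply by writing to them; the shell writes the two together as `family:qualifier`. In the Insert program below, `calltime`, `calltype`, `phonebrand`, `callplace` and `callsecond` are all written as qualifiers of the single family `baseinfo`. The following hypothetical helper (not an HBase class) splits such a name at the first colon, which is unambiguous because a family name may not contain `:`.

```java
/**
 * Sketch: split an HBase-style column name "family:qualifier" at the
 * first colon. ColumnName is a hypothetical class for illustration.
 */
final class ColumnName {
    final String family;
    final String qualifier;

    private ColumnName(String family, String qualifier) {
        this.family = family;
        this.qualifier = qualifier;
    }

    static ColumnName parse(String column) {
        int colon = column.indexOf(':');
        if (colon < 0) {
            // Only a family was given, e.g. "baseinfo" (refers to the whole family)
            return new ColumnName(column, "");
        }
        // Everything after the first colon belongs to the qualifier
        return new ColumnName(column.substring(0, colon), column.substring(colon + 1));
    }
}
```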

(2) Insert the following data into the HBase table;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.*;
import org.apache.hadoop.hbase.util.Bytes;
import java.io.IOException;

public class Insert {
    public static Configuration configuration;
    public static Connection connection;
    public static Admin admin;

    // Open a connection to HBase
    public static void init() {
        configuration = HBaseConfiguration.create();
        configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
        try {
            connection = ConnectionFactory.createConnection(configuration);
            admin = connection.getAdmin();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Close the connection
    public static void close() {
        try {
            if (admin != null) {
                admin.close();
            }
            if (connection != null) {
                connection.close();
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Write one cell: row key + column family + qualifier -> value
    public static void InsertRow(String tableName, String rowKey, String colFamily, String col, String val) throws IOException {
        // try-with-resources closes the Table even if put() throws
        try (Table table = connection.getTable(TableName.valueOf(tableName))) {
            Put put = new Put(Bytes.toBytes(rowKey));
            put.addColumn(Bytes.toBytes(colFamily), Bytes.toBytes(col), Bytes.toBytes(val));
            table.put(put);
            // Report success only after the put has completed
            System.out.println("Insert Data Successfully");
        }
    }

    public static void main(String[] args) {
        String tableName = "callrecord_zqc";
        String[] RowKeys = {
            "16920210616-20210616-1", "18820210616-20210616-1",
            "16920210616-20210616-2", "16901236367-20210614-1",
            "16920210616-20210614-1", "16901236367-20210614-2",
            "16920210616-20210614-2", "17720210616-20210614-1"
        };
        String[] CallTimes = {
            "2021-06-16 14:12:16", "2021-06-16 14:13:16", "2021-06-16 14:23:16",
            "2021-06-14 09:13:16", "2021-06-14 10:23:16", "2021-06-14 11:13:16",
            "2021-06-14 12:23:16", "2021-06-14 16:23:16"
        };
        String[] CallTypes = {"call", "call", "called", "call", "called", "call", "called", "called"};
        String[] PhoneBrands = {"vivo", "Huawei", "Vivo", "Huawei", "Vivo", "Huawei", "Vivo", "Oppo"};
        String[] CallPlaces = {"Fuzhou", "Fuzhou", "Fuzhou", "Fuzhou", "Fuzhou", "Fuzhou", "Fuzhou", "Fuzhou"};
        String[] CallSeconds = {"66", "96", "136", "296", "16", "264", "616", "423"};
        try {
            init();
            // Each row gets five qualifiers in the single family "baseinfo"
            for (int i = 0; i < RowKeys.length; i++) {
                InsertRow(tableName, RowKeys[i], "baseinfo", "calltime", CallTimes[i]);
                InsertRow(tableName, RowKeys[i], "baseinfo", "calltype", CallTypes[i]);
                InsertRow(tableName, RowKeys[i], "baseinfo", "phonebrand", PhoneBrands[i]);
                InsertRow(tableName, RowKeys[i], "baseinfo", "callplace", CallPlaces[i]);
                InsertRow(tableName, RowKeys[i], "baseinfo", "callsecond", CallSeconds[i]);
            }
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            // Release the connection even if an insert fails
            close();
        }
    }
}

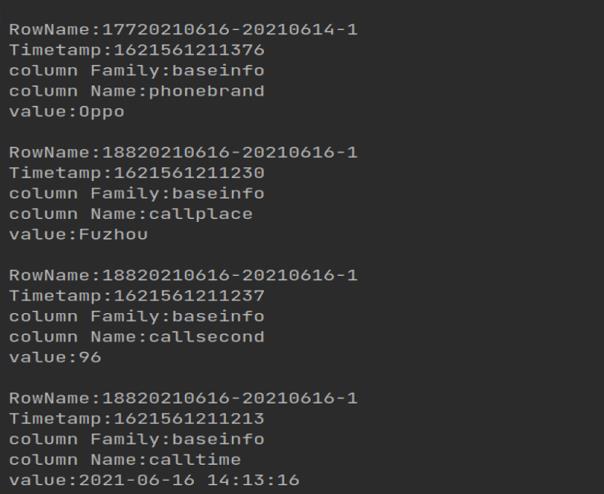
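The sample row keys appear to follow the pattern `<phone number>-<yyyyMMdd>-<sequence number>`; that reading is an assumption based on the data, not something the task states. The hypothetical helper below builds and splits keys in that format and encodes them explicitly as UTF-8: `String.getBytes()` without an argument uses the platform default charset, whereas HBase's `Bytes.toBytes(String)` always uses UTF-8.

```java
import java.nio.charset.StandardCharsets;

/**
 * Hypothetical helper: builds row keys in the assumed pattern
 * <phone>-<yyyyMMdd>-<sequence> and encodes them as UTF-8 bytes.
 */
final class CallRecordKey {
    private CallRecordKey() {}

    static String build(String phone, String yyyymmdd, int seq) {
        if (yyyymmdd.length() != 8) {
            throw new IllegalArgumentException("date must be yyyyMMdd: " + yyyymmdd);
        }
        return phone + "-" + yyyymmdd + "-" + seq;
    }

    /** Explicit UTF-8 instead of the platform default charset. */
    static byte[] toBytes(String rowKey) {
        return rowKey.getBytes(StandardCharsets.UTF_8);
    }

    /** Splits a key back into its three assumed parts: phone, date, sequence. */
    static String[] parse(String rowKey) {
        String[] parts = rowKey.split("-");
        if (parts.length != 3) {
            throw new IllegalArgumentException("unexpected row key: " + rowKey);
        }
        return parts;
    }
}
```

For example, `build("16920210616", "20210616", 1)` produces the first row key used in Insert.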

(3) Retrieve all data in an HBase table and return the query result;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.*;
import org.apache.hadoop.hbase.client.*;
import java.io.IOException;

public class List {
    public static Configuration configuration;
    public static Connection connection;
    public static Admin admin;

    // Open a connection to HBase
    public static void init() {
        configuration = HBaseConfiguration.create();
        configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
        try {
            connection = ConnectionFactory.createConnection(configuration);
            admin = connection.getAdmin();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Close the connection
    public static void close() {
        try {
            if (admin != null) {
                admin.close();
            }
            if (connection != null) {
                connection.close();
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Scan the whole table and print every cell
    public static void GetData(String tableName) throws IOException {
        // try-with-resources closes the scanner and the table when done
        try (Table table = connection.getTable(TableName.valueOf(tableName));
             ResultScanner scanner = table.getScanner(new Scan())) {
            for (Result result : scanner) {
                ShowCell(result);
            }
        }
    }

    // Print row key, timestamp, family, qualifier and value of each cell
    public static void ShowCell(Result result) {
        Cell[] cells = result.rawCells();
        for (Cell cell : cells) {
            System.out.println("RowName:" + new String(CellUtil.cloneRow(cell)) + " ");
            System.out.println("Timestamp:" + cell.getTimestamp() + " ");
            System.out.println("column Family:" + new String(CellUtil.cloneFamily(cell)) + " ");
            System.out.println("column Name:" + new String(CellUtil.cloneQualifier(cell)) + " ");
            System.out.println("value:" + new String(CellUtil.cloneValue(cell)) + " ");
            System.out.println();
        }
    }

    public static void main(String[] args) {
        String tableName = "callrecord_zqc";
        try {
            init();
            GetData(tableName);
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            // Release the connection even if the scan fails
            close();
        }
    }
}
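A full-table `Scan` does not return rows in insertion order: HBase keeps rows sorted by row key, compared byte by byte as unsigned values. The sketch below reproduces that ordering in plain Java (JDK 1.8 has no `Arrays.compareUnsigned`, so the comparison is written out); `RowKeyOrder` is a hypothetical name, not an HBase class.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/**
 * Sketch: order row keys the way HBase sorts them, as unsigned
 * lexicographic byte arrays.
 */
final class RowKeyOrder {
    private RowKeyOrder() {}

    static final Comparator<byte[]> UNSIGNED_LEXICOGRAPHIC = (a, b) -> {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int x = a[i] & 0xFF; // treat each byte as 0..255
            int y = b[i] & 0xFF;
            if (x != y) {
                return Integer.compare(x, y);
            }
        }
        // A shorter key that is a prefix of a longer one sorts first
        return Integer.compare(a.length, b.length);
    };

    /** Returns the keys in the order a full-table scan would visit them. */
    static List<String> scanOrder(List<String> rowKeys) {
        List<byte[]> raw = new ArrayList<>();
        for (String k : rowKeys) {
            raw.add(k.getBytes(StandardCharsets.UTF_8));
        }
        raw.sort(UNSIGNED_LEXICOGRAPHIC);
        List<String> out = new ArrayList<>();
        for (byte[] r : raw) {
            out.add(new String(r, StandardCharsets.UTF_8));
        }
        return out;
    }
}
```

Applied to the eight row keys inserted above, it puts the two `16901236367-...` rows first and `18820210616-20210616-1` last, which is the order in which `GetData` is expected to print them.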

(4) Delete one or more rows from an HBase table, and compare the table's data before and after the deletion;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.*;
import java.io.IOException;

public class DeleteData {
    public static Configuration configuration;
    public static Connection connection;
    public static Admin admin;

    // Open a connection to HBase
    public static void init() {
        configuration = HBaseConfiguration.create();
        configuration.set("hbase.rootdir", "hdfs://localhost:9000/hbase");
        try {
            connection = ConnectionFactory.createConnection(configuration);
            admin = connection.getAdmin();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Close the connection
    public static void close() {
        try {
            if (admin != null) {
                admin.close();