K8S: Writing the YAML Files for the K8S Dashboard (Based on the Multi-Node K8S Deployment from the Previous Chapter)

Posted by 小白的成功进阶之路


1. Order in which the YAML files are written

dashboard-rbac.yaml ----> dashboard-secret.yaml ----> dashboard-configmap.yaml ----> dashboard-controller.yaml ----> dashboard-service.yaml

# dashboard-rbac.yaml file

vim dashboard-rbac.yaml

kind: Role                                   # resource type: a namespaced role
apiVersion: rbac.authorization.k8s.io/v1     # API version (RBAC has its own versioned API group)
metadata:                                    # metadata
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard-minimal         # name of the resource to create
  namespace: kube-system
rules:                                       # permissions granted by this Role
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system                     # namespace of the ServiceAccount being bound

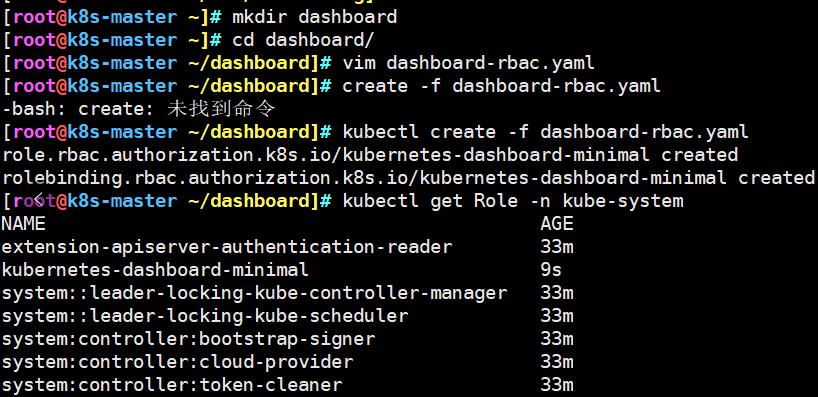

# Create the resources defined in dashboard-rbac.yaml
kubectl create -f dashboard-rbac.yaml

# Use -n to list the Roles in the kube-system namespace
kubectl get Role -n kube-system
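To make the Role's rules concrete, here is a minimal Python sketch of how a request is matched against them: a request is allowed if any rule lists its API group, resource and verb, and (when `resourceNames` is set) the object's name. This is a simplification that ignores `*` wildcards and non-resource URLs; `PolicyRule` and `allows` are hypothetical names, not part of any Kubernetes client library.

```python
# Simplified model of RBAC rule matching (no "*" wildcards, no nonResourceURLs).
from dataclasses import dataclass, field


@dataclass
class PolicyRule:
    api_groups: list
    resources: list
    verbs: list
    resource_names: list = field(default_factory=list)  # empty = any object name


# The rules of the kubernetes-dashboard-minimal Role, transcribed by hand.
DASHBOARD_MINIMAL = [
    PolicyRule([""], ["secrets"], ["get", "update", "delete"],
               ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]),
    PolicyRule([""], ["configmaps"], ["get", "update"],
               ["kubernetes-dashboard-settings"]),
    PolicyRule([""], ["services"], ["proxy"], ["heapster"]),
    PolicyRule([""], ["services/proxy"], ["get"],
               ["heapster", "http:heapster:", "https:heapster:"]),
]


def allows(rules, api_group, resource, verb, name=""):
    """True if any rule permits the request; RBAC only adds permissions."""
    for r in rules:
        if (api_group in r.api_groups
                and resource in r.resources
                and verb in r.verbs
                and (not r.resource_names or name in r.resource_names)):
            return True
    return False  # nothing matched: denied by default


print(allows(DASHBOARD_MINIMAL, "", "secrets", "get", "kubernetes-dashboard-certs"))  # True
print(allows(DASHBOARD_MINIMAL, "", "secrets", "get", "some-other-secret"))           # False
```

Note that because every rule sets `resourceNames`, a request without a specific object name (such as listing all Secrets) is not covered by this Role.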

------------------------------------------------------------------------

# dashboard-secret.yaml file

vim dashboard-secret.yaml

apiVersion: v1
kind: Secret                                 # resource type
metadata:                                    # metadata
  labels:
    k8s-app: kubernetes-dashboard
    # Allows editing resource and makes sure it is created first.
    addonmanager.kubernetes.io/mode: EnsureExists
  name: kubernetes-dashboard-certs           # resource name
  namespace: kube-system                     # namespace
type: Opaque
---                                          # "---" separates YAML documents in one file
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    # Allows editing resource and makes sure it is created first.
    addonmanager.kubernetes.io/mode: EnsureExists
  name: kubernetes-dashboard-key-holder      # holds the Dashboard's key
  namespace: kube-system
type: Opaque

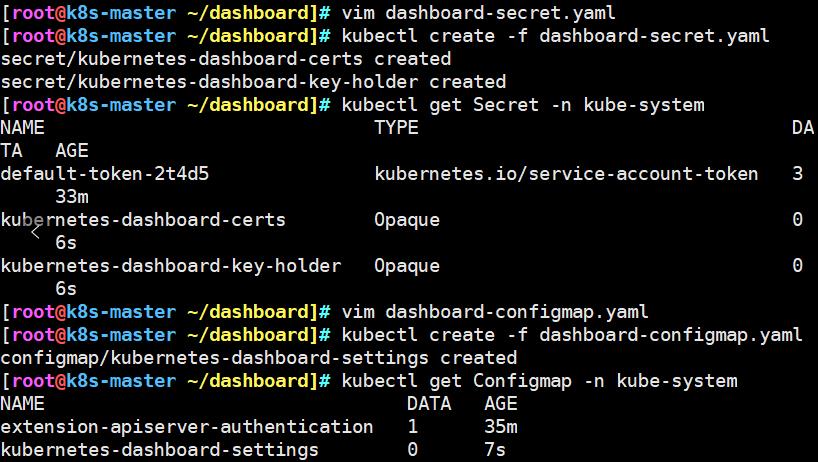

# Create the resources defined in dashboard-secret.yaml
kubectl create -f dashboard-secret.yaml

kubectl get Secret -n kube-system
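The `addonmanager.kubernetes.io/mode` label only matters if the cluster runs the Kubernetes addon manager, which a hand-built binary deployment may not. Assuming it does, the difference between `Reconcile` (used on the RBAC objects) and `EnsureExists` (used on these Secrets) can be sketched as below. `addon_manager_sync` is a hypothetical function, and the real manager works through `kubectl apply`, so this only models the outcome.

```python
# Simplified model of the two addon-manager modes used in these manifests.
from typing import Optional


def addon_manager_sync(mode: str, desired: dict, live: Optional[dict]) -> dict:
    """Return the object state the addon manager would leave in the cluster."""
    if mode == "Reconcile":
        # Periodically pushed back to what the manifest file says.
        return desired
    if mode == "EnsureExists":
        # Created (or re-created) only when missing; later edits are kept.
        return live if live is not None else desired
    raise ValueError(f"unknown addonmanager mode: {mode}")


# Hypothetical example: someone edited the object after it was created.
desired = {"type": "Opaque", "data": {}}
edited = {"type": "Opaque", "data": {"dashboard.pem": "..."}}
print(addon_manager_sync("EnsureExists", desired, edited) is edited)  # True: edits survive
```

This is why the comment says `EnsureExists` "allows editing resource": the certificate Secret can later be replaced without being reverted.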

------------------------------------------------------------------------

# dashboard-configmap.yaml (configuration) file

vim dashboard-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    # Allows editing resource and makes sure it is created first.
    addonmanager.kubernetes.io/mode: EnsureExists
  name: kubernetes-dashboard-settings
  namespace: kube-system

# Create the resource defined in dashboard-configmap.yaml
kubectl create -f dashboard-configmap.yaml

kubectl get Configmap -n kube-system

------------------------------------------------------------------------

# dashboard-controller.yaml (controller) file

vim dashboard-controller.yaml

apiVersion: v1
kind: ServiceAccount                         # the account the Dashboard Pod runs as (for API access)
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment                             # controller type
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
        seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
    spec:
      priorityClassName: system-cluster-critical
      containers:                            # container name and image
      - name: kubernetes-dashboard
        image: siriuszg/kubernetes-dashboard-amd64:v1.8.3
        resources:                           # CPU/memory limits (ceiling) and requests (reserved amount)
          limits:
            cpu: 100m
            memory: 300Mi
          requests:
            cpu: 50m
            memory: 100Mi
        ports:
        - containerPort: 8443                # port the container listens on (HTTPS)
          protocol: TCP
        args:
          # PLATFORM-SPECIFIC ARGS HERE
          - --auto-generate-certificates
        volumeMounts:                        # volumes mounted into the container
        - name: kubernetes-dashboard-certs
          mountPath: /certs
        - name: tmp-volume
          mountPath: /tmp
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"

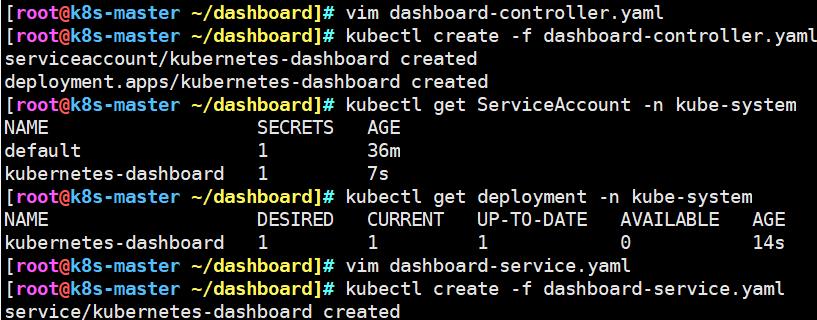

# Create the resources defined in dashboard-controller.yaml
kubectl create -f dashboard-controller.yaml

kubectl get ServiceAccount -n kube-system

kubectl get deployment -n kube-system
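Two details of the Deployment are easy to misread: the resource quantities (`m` means thousandths of a CPU core, `Mi` means mebibytes, 2^20 bytes), and the rule that `spec.selector.matchLabels` must match the labels in the Pod template. The Python sketch below illustrates both; the function names are hypothetical and only the suffixes relevant here are handled, unlike the full Kubernetes quantity format.

```python
# Sketch: interpreting the quantities in dashboard-controller.yaml and
# checking that the Deployment selector matches the Pod template labels.
BINARY_SUFFIXES = {"Ki": 1024, "Mi": 1024 ** 2, "Gi": 1024 ** 3}


def cpu_cores(q: str) -> float:
    """'100m' -> 0.1 cores (m = milli-CPU); '2' -> 2.0 cores."""
    return int(q[:-1]) / 1000 if q.endswith("m") else float(q)


def memory_bytes(q: str) -> int:
    """'300Mi' -> 314572800 bytes; a plain number is taken as bytes."""
    for suffix, factor in BINARY_SUFFIXES.items():
        if q.endswith(suffix):
            return int(q[: -len(suffix)]) * factor
    return int(q)


def selector_matches(match_labels: dict, pod_labels: dict) -> bool:
    """matchLabels semantics: every key/value pair must be on the Pod."""
    return all(pod_labels.get(k) == v for k, v in match_labels.items())


# Values from the manifest: requests must not exceed limits.
assert cpu_cores("50m") <= cpu_cores("100m")
assert memory_bytes("100Mi") <= memory_bytes("300Mi")
print(memory_bytes("300Mi"))  # 314572800
```

If the selector did not match the template labels, the API server would reject the Deployment, since the controller could never find the Pods it creates.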

------------------------------------------------------------------------

#dashboard-service.yaml 服务

vim dashboard-service.yaml

apiVersion: v1

kind: Service #控制器名称

metadata:

name: kubernetes-dashboard

namespace: kube-system

labels:

k8s-app: kubernetes-dashboard

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

spec:

type: NodePort #提供的形式(访问node节点提供出来的端口,即nodeport

selector:

k8s-app: kubernetes-dashboard

ports:

- port: 443 #内部提供

targetPort: 8443 #Pod内部端口

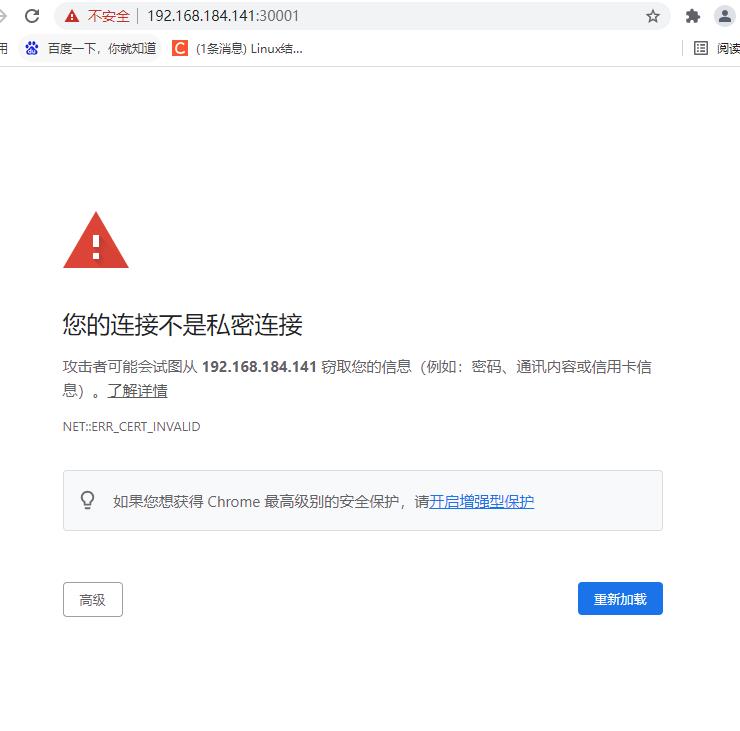

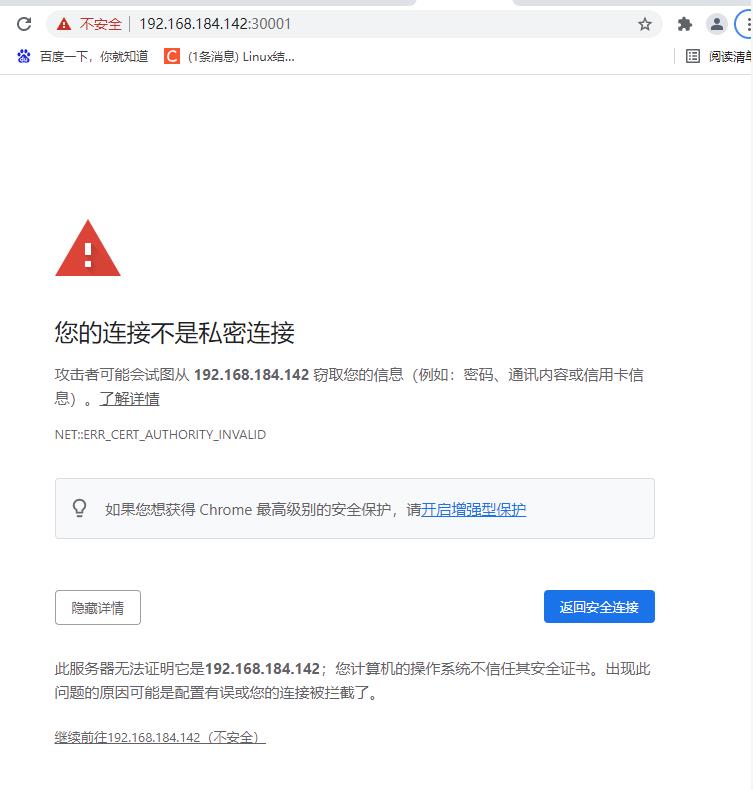

nodePort: 30001 #节点对外提供的端口(映射端口)

# Create the resource defined in dashboard-service.yaml

kubectl create -f dashboard-service.yaml

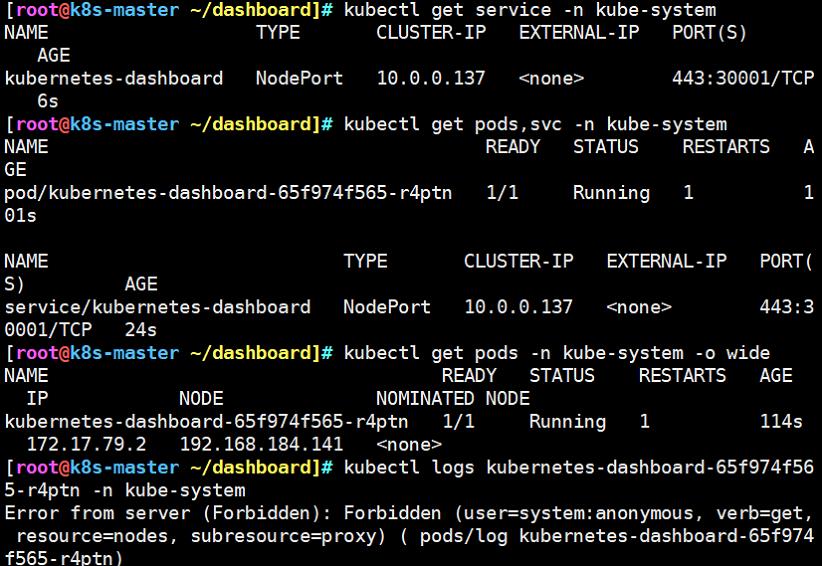

kubectl get service -n kube-system
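The three port fields chain together: the browser hits any node on `nodePort`, kube-proxy forwards to the Service `port`, which targets the container's `targetPort`. A small Python sketch of that path, assuming a hypothetical node IP of 192.168.0.10 (not from the original setup) and the default NodePort range of 30000-32767 (the range can be changed with the kube-apiserver flag `--service-node-port-range`):

```python
# Sketch of the traffic path defined by dashboard-service.yaml.
# The node IP used below is a placeholder, not taken from the original cluster.
NODEPORT_RANGE = range(30000, 32768)  # default range 30000-32767


def dashboard_path(node_ip: str, node_port: int = 30001,
                   port: int = 443, target_port: int = 8443) -> list:
    if node_port not in NODEPORT_RANGE:
        raise ValueError(f"nodePort {node_port} is outside the default range 30000-32767")
    return [
        f"https://{node_ip}:{node_port}",          # what you open in the browser
        f"Service kubernetes-dashboard:{port}",    # in-cluster Service port
        f"Pod container:{target_port}",            # Dashboard's HTTPS listener
    ]


for hop in dashboard_path("192.168.0.10"):
    print(hop)
```

Note the `https://` scheme: the Dashboard container only serves HTTPS on 8443, so plain `http://` to port 30001 will not work.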

------------------------------------------------------------------------

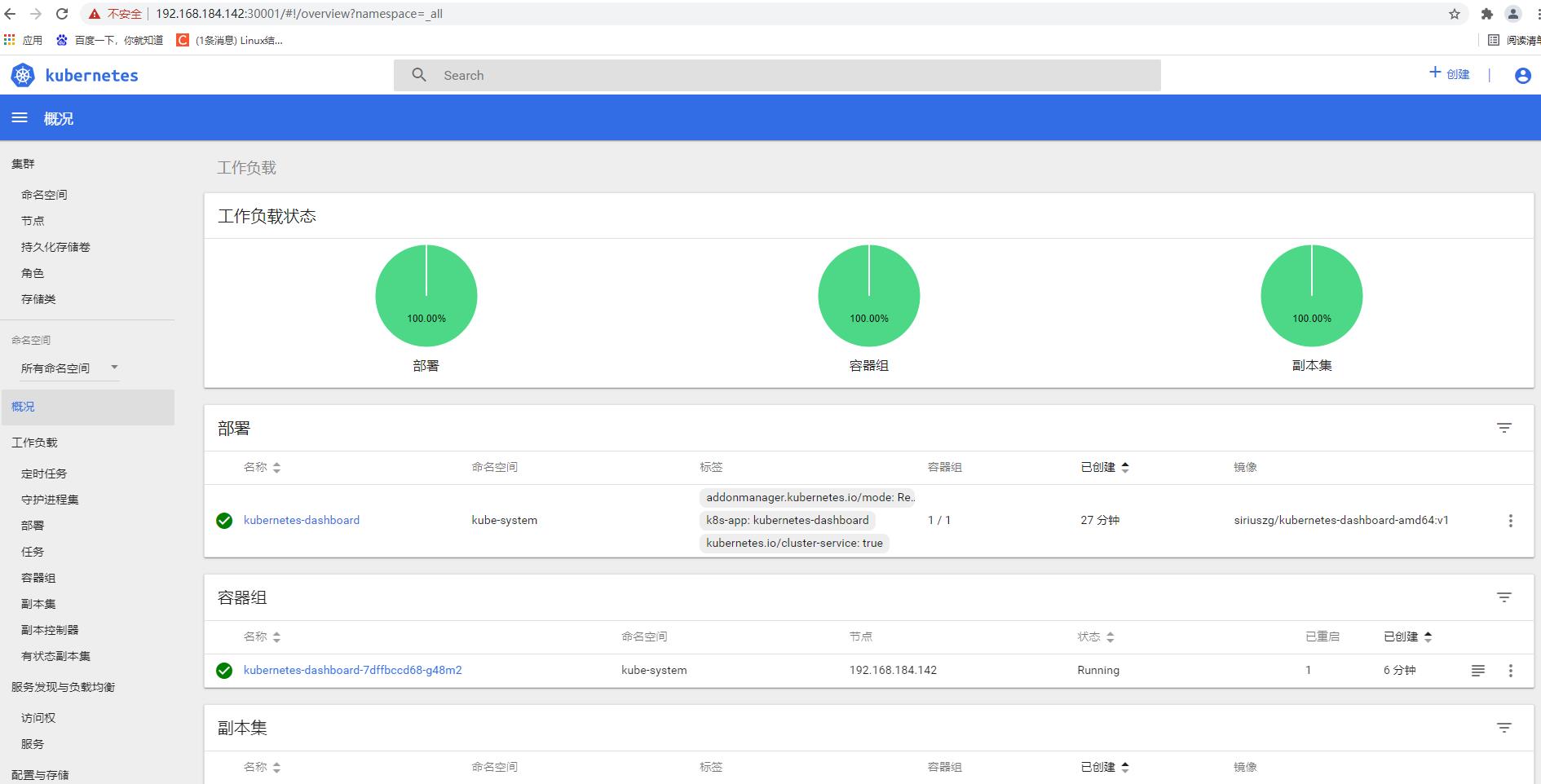

#查看pod资源

kubectl get pods,svc -n kube-system

#查看资源分配的位置

kubectl get pods -n kube-system -o wide

#查看此pod资源的日志

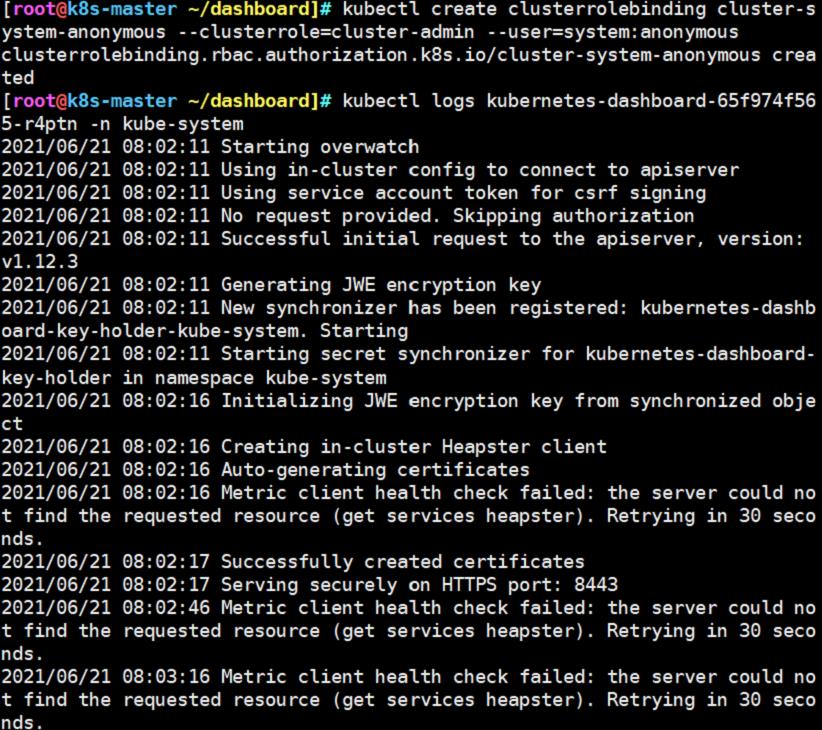

kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous

clusterrolebinding.rbac.authorization.k8s.io/cluster-system-anonymous created

kubectl logs kubernetes-dashboard-65f974f565-bh5zh -n kube-system

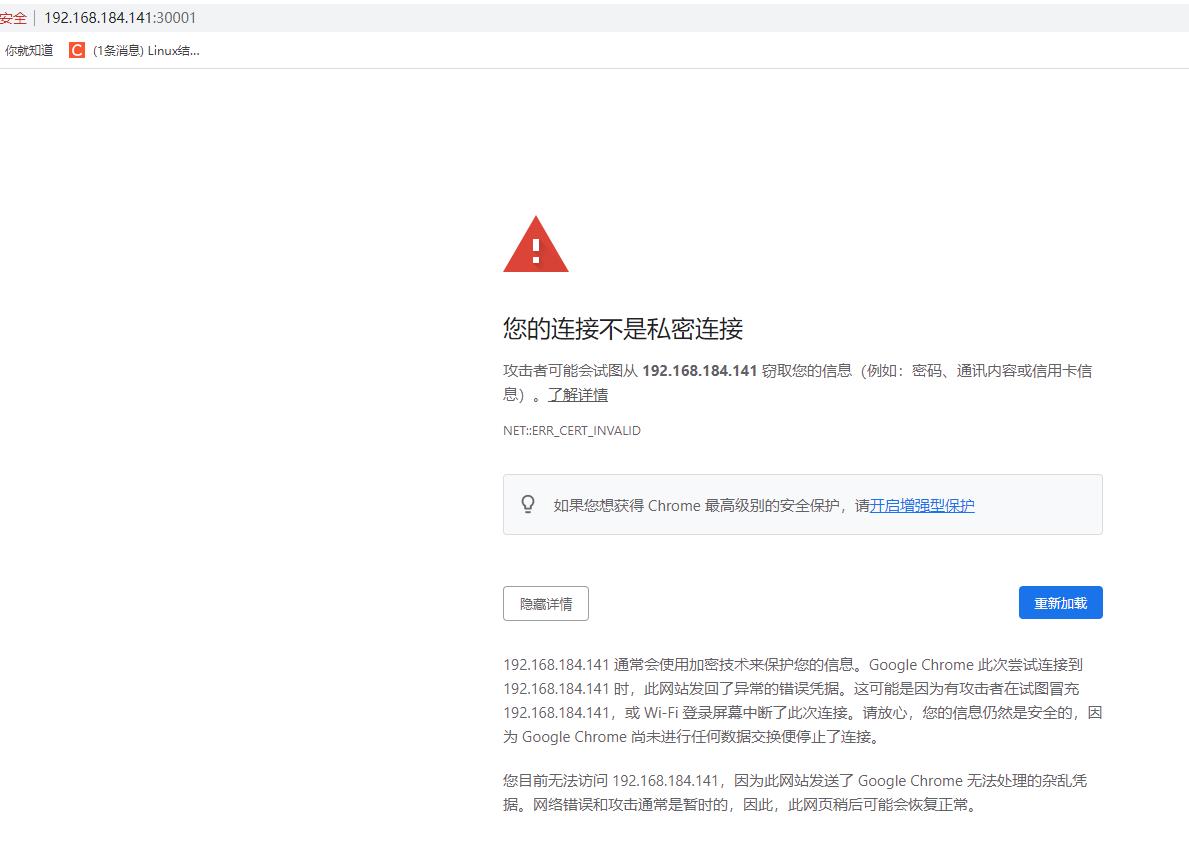

2. Self-signed certificate

vim dashboard-cert.sh

# Write the certificate signing request (CSR) definition in JSON format
cat > dashboard-csr.json <<EOF
{
    "CN": "Dashboard",
    "hosts": [],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "L": "BeiJing",
            "ST": "BeiJing"
        }
    ]
}
EOF

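# The first argument is the directory containing the cluster CA files
K8S_CA=$1

# Sign a Dashboard certificate with the cluster CA (produces dashboard.pem and dashboard-key.pem)
cfssl gencert -ca=$K8S_CA/ca.pem -ca-key=$K8S_CA/ca-key.pem -config=$K8S_CA/ca-config.json -profile=kubernetes dashboard-csr.json | cfssljson -bare dashboard

# Delete the existing certificate Secret
kubectl delete secret kubernetes-dashboard-certs -n kube-system

# Recreate the Secret from the files in the current directory
kubectl create secret generic kubernetes-dashboard-certs --from-file=./ -n kube-system

------> :wq (save and exit)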

# Generate the certificate, passing the CA directory as the argument
bash dashboard-cert.sh /root/k8s/k8s-cert/
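`kubectl create secret generic ... --from-file=./` turns every regular file in the directory into one Secret key named after the file, with the contents base64-encoded; subdirectories and symlinks are skipped. Because the Secret is mounted at `/certs`, `dashboard.pem` and `dashboard-key.pem` end up as files in that directory. The Python sketch below approximates that mapping (it skips kubectl's key-name validation; `secret_data_from_dir` is a hypothetical name). It also shows that any other files in the working directory, such as the CSR, the JSON or the script, are packed into the Secret too.

```python
# Approximation of how `--from-file=<dir>` builds a Secret's .data map.
import base64
import os


def secret_data_from_dir(path: str) -> dict:
    data = {}
    with os.scandir(path) as entries:
        for entry in sorted(entries, key=lambda e: e.name):
            # Only regular files count; directories and symlinks are ignored.
            if entry.is_file(follow_symlinks=False):
                with open(entry.path, "rb") as f:
                    data[entry.name] = base64.b64encode(f.read()).decode("ascii")
    return data
```

For example, running it on the directory that holds `dashboard.pem` and `dashboard-key.pem` would yield keys with exactly those names, which is what the `--tls-cert-file` and `--tls-key-file` arguments refer to.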

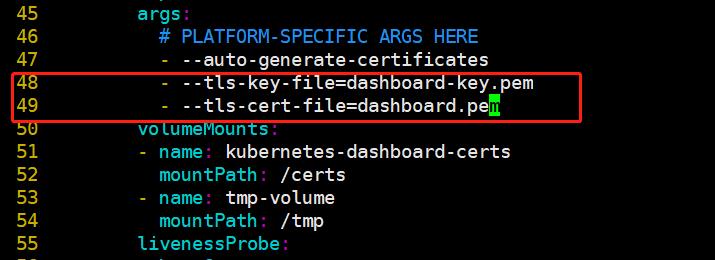

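# Edit dashboard-controller.yaml to point the Dashboard at the new certificate files, completing the self-signed setup
vim dashboard-controller.yaml

---- Add/modify the following around line 47:

        args:
          # PLATFORM-SPECIFIC ARGS HERE
          - --auto-generate-certificates
          - --tls-key-file=dashboard-key.pem   # file name inside the /certs mount of the Secret
          - --tls-cert-file=dashboard.pem      # file name inside the /certs mount of the Secret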

#重新部署

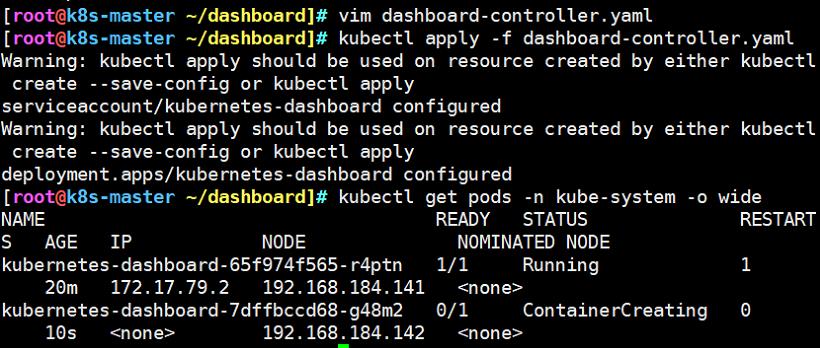

kubectl apply -f dashboard-controller.yaml

#需要注意一个问题,在重新部署的时候,资源可能会分配到其他节点,再次查看pod资源位置

kubectl get pods -n kube-system -o wide

# Create the token account: k8s-admin.yaml

vim k8s-admin.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard-admin                      # the dashboard-admin account, effectively an administrator account
  namespace: kube-system
---
kind: ClusterRoleBinding                     # binds the account to a cluster role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: dashboard-admin                      # the bound cluster role (cluster-admin) is the administrator identity
subjects:
- kind: ServiceAccount
  name: dashboard-admin
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
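The ServiceAccount subject is authenticated under the user name `system:serviceaccount:<namespace>:<name>`, and the ClusterRoleBinding attaches `cluster-admin` to that identity across all namespaces. A hedged Python sketch of that lookup follows; `service_account_user` and `bound_cluster_roles` are hypothetical helpers, and Group subjects are deliberately left out.

```python
# Sketch: mapping binding subjects to user names and collecting bound ClusterRoles.
def service_account_user(namespace: str, name: str) -> str:
    # User name under which a ServiceAccount token is authenticated.
    return f"system:serviceaccount:{namespace}:{name}"


def bound_cluster_roles(bindings: list, user: str) -> set:
    roles = set()
    for b in bindings:
        for s in b["subjects"]:
            if s["kind"] == "ServiceAccount":
                subject_user = service_account_user(s["namespace"], s["name"])
            elif s["kind"] == "User":
                subject_user = s["name"]
            else:
                continue  # Group subjects are not modelled in this sketch
            if subject_user == user and b["roleRef"]["kind"] == "ClusterRole":
                roles.add(b["roleRef"]["name"])
    return roles


# The binding from k8s-admin.yaml, transcribed by hand.
DASHBOARD_ADMIN_BINDING = {
    "subjects": [{"kind": "ServiceAccount", "name": "dashboard-admin", "namespace": "kube-system"}],
    "roleRef": {"kind": "ClusterRole", "name": "cluster-admin"},
}

print(service_account_user("kube-system", "dashboard-admin"))
# system:serviceaccount:kube-system:dashboard-admin
```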

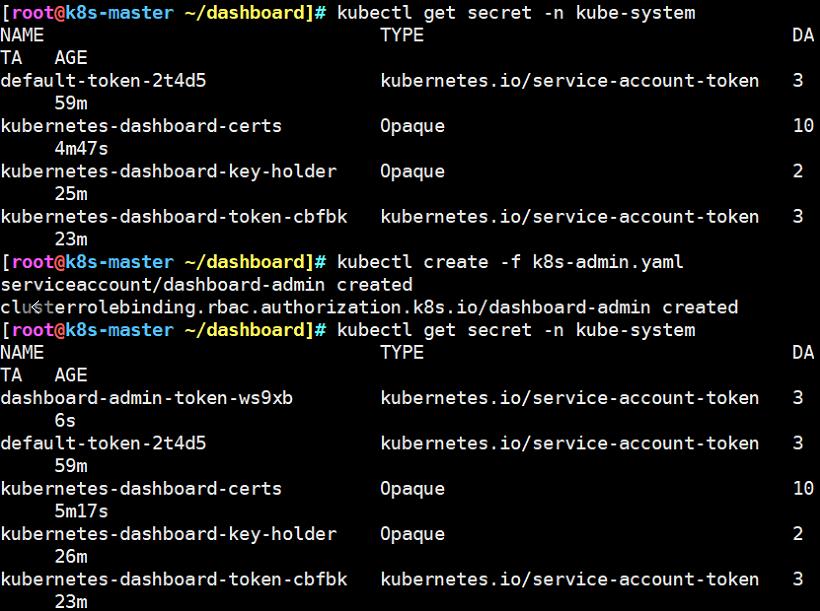

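# First list the Secrets in the kube-system namespace
kubectl get secret -n kube-system

# Create the account and binding; a token Secret for dashboard-admin is generated automatically
kubectl create -f k8s-admin.yaml

# List the Secrets again (a new dashboard-admin-token-xxxxx Secret appears)
kubectl get secret -n kube-system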

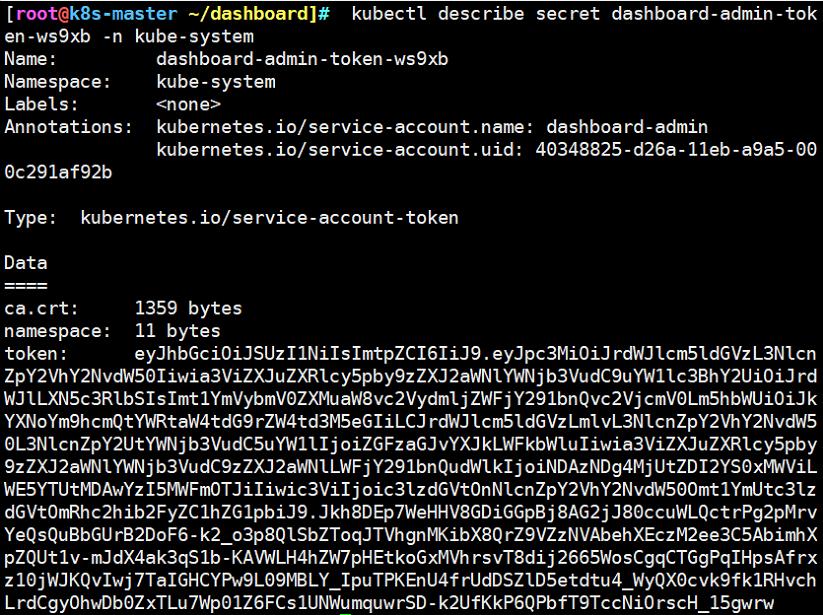

# Show the token details (the random suffix, dpjdk here, differs per cluster)
kubectl describe secret dashboard-admin-token-dpjdk -n kube-system

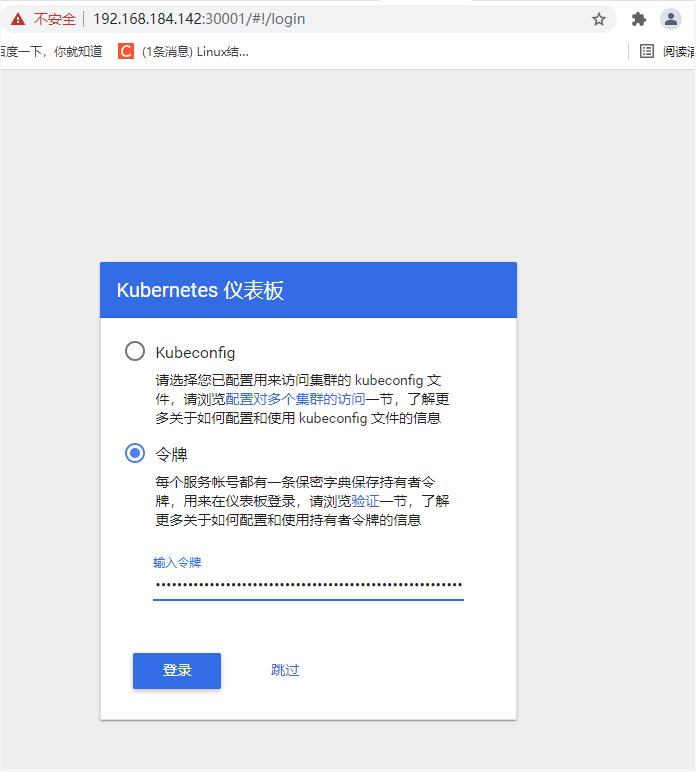

(The token is at the bottom of the output. Copy it; you need it to log in to the web console.)
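The copied token is a JWT: three base64url-encoded parts (header, payload, signature) separated by dots, with the `=` padding stripped. Decoding the middle part is a quick way to confirm which account a token belongs to; its `sub` claim should read `system:serviceaccount:kube-system:dashboard-admin`. The Python sketch below only decodes, it does not verify the signature, and `jwt_payload` is a hypothetical helper.

```python
# Sketch: decode (without verifying) the payload of a ServiceAccount JWT.
import base64
import json


def jwt_payload(token: str) -> dict:
    payload_b64 = token.split(".")[1]
    payload_b64 += "=" * (-len(payload_b64) % 4)  # restore stripped base64 padding
    return json.loads(base64.urlsafe_b64decode(payload_b64))
```

Usage: `jwt_payload("<paste token here>")["sub"]`. Treat the token like a password: anyone holding it has cluster-admin rights through the binding above.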
