MySQL进阶垫脚石:线程长时间处于killed状态怎么破?

Posted java构架师

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了MySQL进阶垫脚石:线程长时间处于killed状态怎么破?相关的知识,希望对你有一定的参考价值。

一、背景

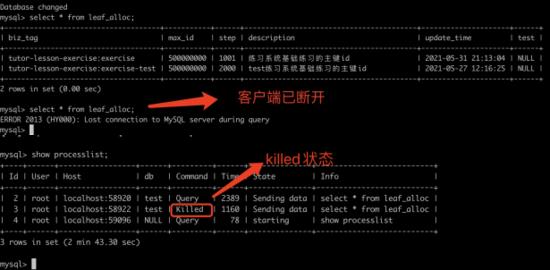

mysql中使用kill命令去杀死连接时,如果使用show processlist会发现线程会处于killed状态一段时间,而不是立即杀掉。一些情况下,killed状态可能会存在很久,甚至可能会一直存在直到发送第二次kill命令才能杀掉连接。下面从 MySQL 执行kill命令代码流程(基于5.7版本的 MySQL )简单分析下出现这种现象的原因。

二、源码分析

1、MySQL 执行流程简介

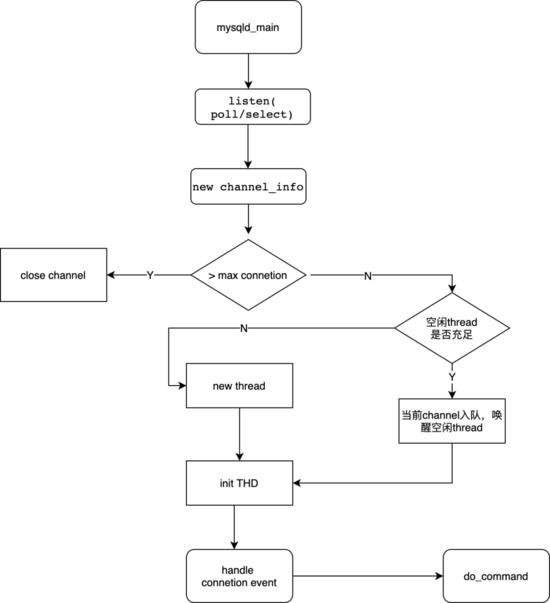

MySQL 的启动入口函数为mysqld中的main函数,主要流程会启动一个线程去listen端口,accept tcp连接,并创建一个connection并与具体的线程绑定,去处理来自客户端的消息。

执行流程:

日常执行kill流程,一般是通过mysql命令行客户端新起一个连接,通过show processlist找到需要kill掉的连接的conncetion_id,然后发送kill命令。

注:kill + 连接id 默认是kill connection,代表断开连接,如果是kill query + 连接id则只是终止本次执行的语句,连接还会继续监听来自client的命令。(具体执行区别可以参考下面KILL工作流程1中(1)、(2)部分)

2、KILL工作流程

概念:

- connection: socket连接,默认有一个max_connection,实际上可以接受max_connection + 1个连接,最后一个连接是预留给SUPER用户的。

- pthread: mysql的工作线程,每个connection建立时都会分配一个pthread,connection断开后pthread仍旧可以被其他connection复用。

- thd: 线程描述类,每个connection对应一个thd,其中包含很多连接状态的描述,其中thd->killed字段标识线程是否需要被kill。

为方便说明,假设有两个连接connection1, connection2, 对应上述流程,则是connection1在do_command或者listen socket event中时,通过connection2发送kill命令,中断connection1的执行流程。

kill connection之后,对应此连接的pthread可能会被新连接复用(具体后面会分析)。

1)执行kill命令的线程发起kill

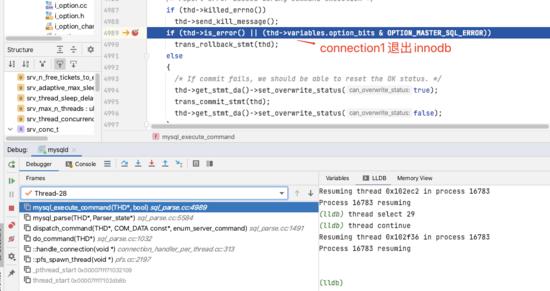

以connection2的执行流程来分析kill的执行过程,跟踪do_command之后的代码堆栈可以看到:

* frame #0: 0x00000001068a8853 mysqld`THD::awake(this=0x00007fbede88b400, state_to_set=KILL_CONNECTION) at sql_class.cc:2029:27

frame #1: 0x000000010695961f mysqld`kill_one_thread(thd=0x00007fbed6bc9c00, id=2, only_kill_query=false) at sql_parse.cc:6479:14

frame #2: 0x0000000106946529 mysqld`sql_kill(thd=0x00007fbed6bc9c00, id=2, only_kill_query=false) at sql_parse.cc:6507:16

frame #3: 0x000000010694e0fa mysqld`mysql_execute_command(thd=0x00007fbed6bc9c00, first_level=true) at sql_parse.cc:4210:5

frame #4: 0x0000000106945d62 mysqld`mysql_parse(thd=0x00007fbed6bc9c00, parser_state=0x000070000de2f340) at sql_parse.cc:5584:20

frame #5: 0x0000000106942bf0 mysqld`dispatch_command(thd=0x00007fbed6bc9c00, com_data=0x000070000de2fe78, command=COM_QUERY) at sql_parse.cc:1491:5

frame #6: 0x0000000106944e70 mysqld`do_command(thd=0x00007fbed6bc9c00) at sql_parse.cc:1032:17

frame #7: 0x0000000106ad3976 mysqld`::handle_connection(arg=0x00007fbee220b8d0) at

connection_handler_per_thread.cc:313:13

frame #8: 0x000000010749e74c mysqld`::pfs_spawn_thread(arg=0x00007fbee15dcf90) at pfs.cc:2197:3

frame #9: 0x00007fff734b6109 libsystem_pthread.dylib`_pthread_start + 148

frame #10: 0x00007fff734b1b8b libsystem_pthread.dylib`thread_start + 15

核心代码为awake函数(为方便,分为3段分析):

① 设置线程killed flag状态

if (this->m_server_idle && state_to_set == KILL_QUERY)

{ /* nothing */ }

else

{

killed= state_to_set;

}

如果当前线程处于idle状态(代表命令已执行完),而且kill级别只是终止查询,而不是kill整个连接,那么不会去设置thd -> killed状态,防止影响下一次正常的请求。

(认为需要被kill的查询已经执行结束了,不需要再做操作了)

② 关闭socket连接&中断引擎等待

if (state_to_set != THD::KILL_QUERY && state_to_set != THD::KILL_TIMEOUT)

{

if (this != current_thd)

{

shutdown_active_vio();

}

/* Send an event to the scheduler that a thread should be killed. */

if (!slave_thread)

MySQL_CALLBACK(Connection_handler_manager::event_functions, post_kill_notification, (this)); //post_kill

}

if (state_to_set != THD::NOT_KILLED)

ha_kill_connection(this);

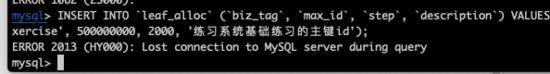

之后会首先关闭socket连接(注如果是kill query,则不会关闭连接)不再接收新的命令。客户端报下面这个错就是在这步之后:

另外会执行ha_close_connection,这里实际是将处于innodb层等待状态的线程唤醒,具体代码在ha_innodb.cc中innobase_kill_connection里会调用lock_trx_handle_wait方法。

trx: 一个mysql线程对应的innodb的事务描述类。

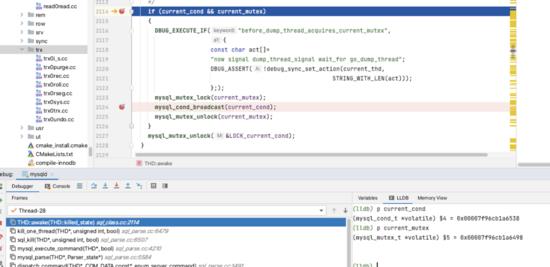

③ 通过信号量通知处于wait状态的线程

if (is_killable)

{

mysql_mutex_lock(&LOCK_current_cond);

if (current_cond && current_mutex)

{

DBUG_EXECUTE_IF("before_dump_thread_acquires_current_mutex",

{

const char act[]=

"now signal dump_thread_signal wait_for go_dump_thread";

DBUG_ASSERT(!debug_sync_set_action(current_thd,

STRING_WITH_LEN(act)));

};);

mysql_mutex_lock(current_mutex); mysql_cond_broadcast(current_cond); mysql_mutex_unlock(current_mutex);

}

mysql_mutex_unlock(&LOCK_current_cond);

这里看到除了设置connection1的thd->killed状态外,还会获取current_mutex锁,唤醒wait条件变量current_cond的线程(connection2)。注意上述②和③中唤醒的对象不同:

ha_close_connection唤醒的是本次对应的innodb事务中的锁(trx->lock.wait_lock),对应的一般是在innodb层事务中等待的某个行锁。mysql_cond_broadcast(current_cond ) 则是唤醒thd中的锁,等待锁是通过THD::enter_cond()进入(如open table时获取表锁,或者sleep等)

具体可参考下面本地debug复现部分的分析。

为什么在发送信号量之前先关闭socket连接?

不关闭socket连接,并发情况下有什么问题?代码中提及了一种case,假设connection1运行已经过了主动检查flag的点,之后connection2调用awake设置flag及发送信号量唤醒,然后connection1进入到socket read中,那么相当于这次信号量会丢失,connection1就会一直阻塞在read中,所以需要关闭socket 连接中断read。BUG#37780

相当于是通过io中断解决信号量丢失的情况。

所以如果connection1在其他阶段发生信号量丢失(如connection2先broadcast,connection1再wait),就需要发送第二次kill命令才能唤醒。

(sql_class.cc 2090,但是注意KILL_CONNECTION是不会重复进入awake的)

注: 一般出现这种情况是,connection2修改了killed状态,但是由于cpu缓存一致性等问题,connection1看不到killed状态,然后通过了主动检查点,进入了wait状态。

2)被kill线程响应kill命令

被kill线程感知(响应)kill命令主要有两种方式:

- 主动检查: c onne ction1在一些 代 码处会去主动检查killed状态 ;

- 被通过信号量唤醒: connection1在执行某些命令时(如引擎层去做一些操作),会主动去await一个condition,释放掉相应的锁,connection2执行kill命令时,会通 过锁和condition唤醒connection1,执行终止操作;

killed状态:

enum killed_state

{

NOT_KILLED=0, KILL_BAD_DATA=1,

KILL_CONNECTION=ER_SERVER_SHUTDOWN, KILL_QUERY=ER_QUERY_INTERRUPTED, KILL_TIMEOUT=ER_QUERY_TIMEOUT,

KILLED_NO_VALUE /* means neither of the states */

};

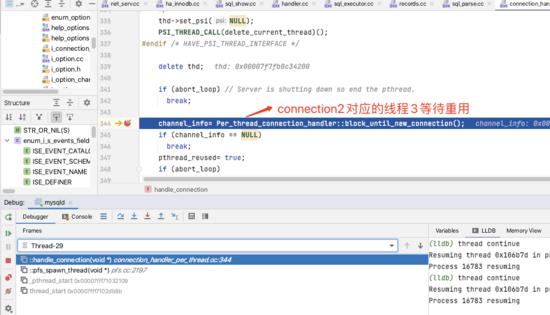

3)connection真正被kill掉

真正被kill指的是show processlist看不到这个线程的时机。mysql在新建一个connection之后,会不断的去监听连接(do_command),前面提到kill时会主动把连接的socket关闭(shutdown_active_vio)。所以真正连接终止的逻辑是在此处,判断thd_connection_alive的状态是待杀死之后,会关闭connection,并且release_resources,此时再去show processlist,则killed的线程才会消失。相应的pthread也会等待其他连接复用。

killed状态 :

if (thd_prepare_connection(thd))

handler_manager->inc_aborted_connects();

else

{

while (thd_connection_alive(thd))

{

if (do_command(thd)) break;

}

end_connection(thd);

}

close_connection(thd, 0, false, false);

thd->get_stmt_da()->reset_diagnostics_area();

thd->release_resources();

// Clean up errors now, before possibly waiting for a new connection.

#if OPENSSL_VERSION_NUMBER < 0x10100000L

ERR_remove_thread_state(0);

#endif /* OPENSSL_VERSION_NUMBER < 0x10100000L */

thd_manager->remove_thd(thd);

Connection_handler_manager::dec_connection_count();

........

channel_info= Per_thread_connection_handler::block_until_new_connection();

//等待新连接复用线程

3、被KILL线程主动检查点和唤醒机制分析

由前面kill过程分析,大致可以分为两种情况,一种是connection1代码一直在执行中(占据cpu),那么总会执行到某些地方可以检查thd->killed状态,另外一种是connection1线程wait状态,需要其他线程通过信号量唤醒connection1的线程,实现kill中断目的。具体地,这两类又可以分为下面4种情况:

1)第一类:主动检查断点

① connection2发送kill命令时,connection1已执行完命令 (主动检查)

此时connection1阻塞在socket_read上,由于前面提到connection2会去shutdown_active_vio,connection1很容易感知到,执行后续操作,如回滚等。

if (thd_prepare_connection(thd))

handler_manager->inc_aborted_connects();

else

{

while (thd_connection_alive(thd))

{

if (do_command(thd))

break;

}

end_connection(thd);

}

close_connection(thd, 0, false, false);

thd->get_stmt_da()->reset_diagnostics_area();

thd->release_resources(); //clean_up

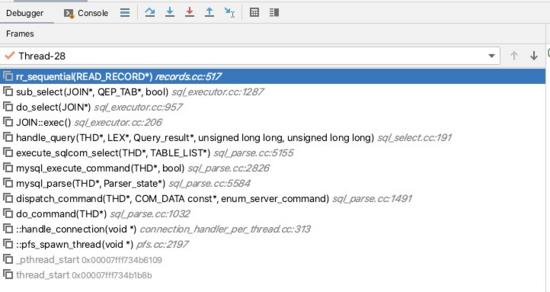

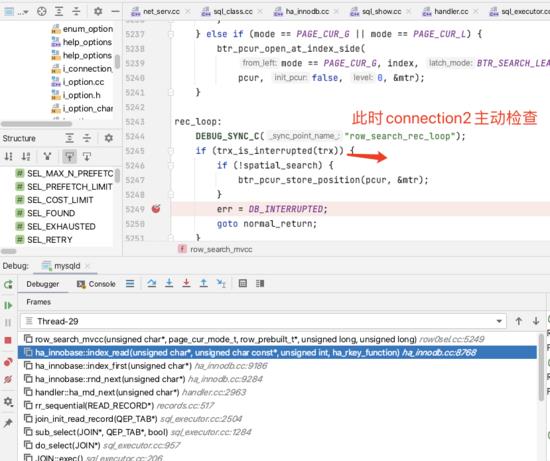

②内部innodb引擎在获取记录时,也会去判断thd->killed状态,决定是否中断操作,进行返回。

这一类检查点很多。如下面两处:

- select每行读取都会检查;

int rr_sequential(READ_RECORD *info)

{

int tmp;

while ((tmp=info->table->file->ha_rnd_next(info->record)))

{

/*

ha_rnd_next can return RECORD_DELETED for MyISAM when one thread is reading and another deleting without locks.

*/

if (info->thd->killed || (tmp != HA_ERR_RECORD_DELETED))

{

tmp= rr_handle_error(info, tmp); break;

}

}

return tmp;

}

- 内部innodb引擎在获取记录时,也会去判断thd->killed状态,决定是否中断操作,进行返回。

* frame #0: 0x0000000106629a7e mysqld`trx_is_interrupted(trx=0x00007f8266dbd8a0) at ha_innodb.cc:3234:9

frame #1: 0x000000010686d901 mysqld`row_search_mvcc(buf=", mode=PAGE_CUR_G, prebuilt=0x00007f826b81faa0,

match_mode=0, direction=0) at row0sel.cc:5245:6

frame #2: 0x0000000106636cda mysqld`ha_innobase::index_read(this=0x00007f825c8c1430, buf=", key_ptr=0x0000000000000000, key_len=0, find_flag=HA_READ_AFTER_KEY) at ha_innodb.cc:8768:10

frame #3: 0x000000010663798c mysqld`ha_innobase::index_first(this=0x00007f825c8c1430, buf=") at ha_innodb. cc:9186:14

frame #4: 0x0000000106637c2a mysqld`ha_innobase::rnd_next(this=0x00007f825c8c1430, buf=") at ha_innodb.cc: 9284:11

frame #5: 0x000000010563f7a5 mysqld`handler::ha_rnd_next(this=0x00007f825c8c1430, buf=") at handler.cc:2963:

3

frame #6: 0x0000000105d954d4 mysqld`rr_sequential(info=0x00007f826780d208) at records.cc:517:34

frame #7: 0x0000000105e85c78 mysqld`join_init_read_record(tab=0x00007f826780d1b8) at sql_executor.cc:2504:10

frame #8: 0x0000000105e82a1c mysqld`sub_select(join=0x00007f825e37a4d0, qep_tab=0x00007f826780d1b8,

end_of_records=false) at sql_executor.cc:1284:14

frame #9: 0x0000000105e7f299 mysqld`do_select(join=0x00007f825e37a4d0) at sql_executor.cc:957:12

frame #10: 0x0000000105e7ec26 mysqld`JOIN::exec(this=0x00007f825e37a4d0) at sql_executor.cc:206:10

frame #11: 0x0000000105f5fe90 mysqld`handle_query(thd=0x00007f825e35ec00, lex=0x00007f825e361058,

result=0x00007f825e379cf8, added_options=0, removed_options=0) at sql_select.cc:191:21

frame #12: 0x0000000105f006f7 mysqld`execute_sqlcom_select(thd=0x00007f825e35ec00,

all_tables=0x00007f825e3796b0) at sql_parse.cc:5155:12

frame #13: 0x0000000105ef6527 mysqld`mysql_execute_command(thd=0x00007f825e35ec00, first_level=true) at sql_parse.cc:2826:12

frame #14: 0x0000000105ef3d62 mysqld`mysql_parse(thd=0x00007f825e35ec00, parser_state=0x00007000097d5340) at sql_parse.cc:5584:20

frame #15: 0x0000000105ef0bf0 mysqld`dispatch_command(thd=0x00007f825e35ec00, com_data=0x00007000097d5e78, command=COM_QUERY) at sql_parse.cc:1491:5

frame #16: 0x0000000105ef2e70 mysqld`do_command(thd=0x00007f825e35ec00) at sql_parse.cc:1032:17

frame #17: 0x0000000106081976 mysqld`::handle_connection(arg=0x00007f82681d84f0) at

connection_handler_per_thread.cc:313:13

frame #18: 0x0000000106a4c74c mysqld`::pfs_spawn_thread(arg=0x00007f8268012fc0) at pfs.cc:2197:3

frame #19: 0x00007fff734b6109 libsystem_pthread.dylib`_pthread_start + 148

frame #20: 0x00007fff734b1b8b libsystem_pthread.dylib`thread_start + 15

2)第二类:需要其他线程通过信号量唤醒

① connection2发送kill命令时,connection1处于innodb层wait行锁状态

主要通过awake中的下面这行触发唤醒 (也可能由系统的后台线程lock_wait_timeout_thread唤醒)

/* Interrupt target waiting inside a storage engine. */

if (state_to_set != THD::NOT_KILLED)

ha_kill_connection(this);

参 考 下 面 debug 分 析 的 case3 。

② connection2发送kill命令时,connection1处于msyql层wait状态(由connection2唤醒)

主要通过下面的方法实现唤醒:

/* Broadcast a condition to kick the target if it is waiting on it. */

if (is_killable)

{

mysql_mutex_lock(&LOCK_current_cond);

if (current_cond && current_mutex)

{

DBUG_EXECUTE_IF("before_dump_thread_acquires_current_mutex",

{

const char act[]=

"now signal dump_thread_signal wait_for go_dump_thread";

DBUG_ASSERT(!debug_sync_set_action(current_thd,

STRING_WITH_LEN(act)));

};);

mysql_mutex_lock(current_mutex);

mysql_cond_broadcast(current_cond); mysql_mutex_unlock(current_mutex);

}

mysql_mutex_unlock(&LOCK_current_cond);

参考下面debug分析的case4。

三、原因总结

通过上面代码分析可以得知,kill之后会进行回滚操作(大事务)或清理临时表(比如较慢的ddl),都有可能导致长时间处于killed状态。

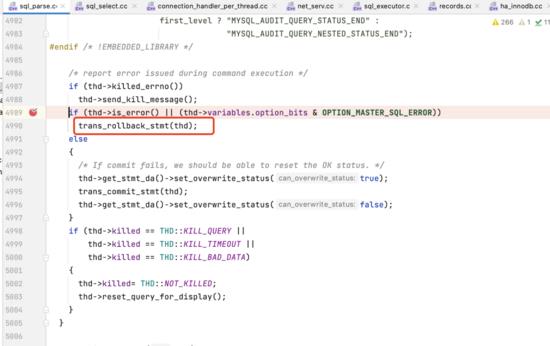

具体回滚是上面提到的handle_connection中的thd->release_resources()中执行clean_up进行回滚或者在sql_parse中trans_rollback_stmt中。

除开回滚操作的影响,如果本身mysql机器负载较高,一样会导致主动检查thd->killed会有延迟或者影响线程的唤醒调度。

四、案例复现

1、本地debug复现

1)case 1

connection1已执行完命令,connection2去kill连接1

(较为简单,略)

2)case2

connection1正在执行(如parse阶段,还没有真正到innodb层),connection2去kill连接1

(较为简单,略)

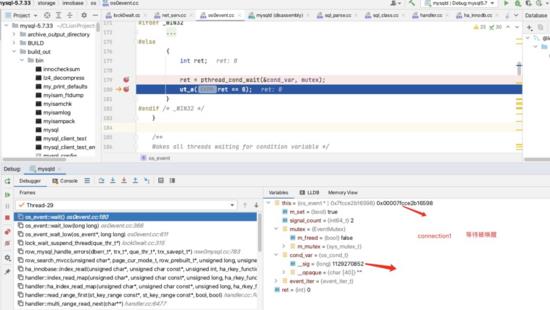

3)case3(innodb层唤醒)

新起一个session作为connection0开启事务,update某一行row1

再起一个session作为connection1 update同一行row1

* thread #29, stop reason = breakpoint 127.1

* frame #0: 0x00000001060f87cb mysqld`os_event::wait(this=0x00007fcce2b16598) at os0event.cc:180:4

frame #1: 0x00000001060f8773 mysqld`os_event::wait_low(this=0x00007fcce2b16598, reset_sig_count=1) at

os0event.cc:366:3

frame #2: 0x00000001060f91ed mysqld`os_event_wait_low(event=0x00007fcce2b16598, reset_sig_count=0) at os0event.cc:611:9

frame #3: 0x00000001060a9332 mysqld`lock_wait_suspend_thread(thr=0x00007fccd90936f0) at lock0wait.cc:315:2

frame #4: 0x00000001061b4f77 mysqld`row_mysql_handle_errors(new_err=0x0000700009f3af6c,

trx=0x00007fcce1cc0ce0, thr=0x00007fccd90936f0, savept=0x0000000000000000) at row0mysql.cc:783:3

frame #5: 0x0000000106212fa6 mysqld`row_search_mvcc(buf=", mode=PAGE_CUR_GE, prebuilt=0x00007fccd9092ea0, match_mode=1, direction=0) at row0sel.cc:6292:6

frame #6: 0x0000000105fd8cda mysqld`ha_innobase::index_read(this=0x00007fccd9091430, buf=", key_ptr="\\x1e", key_len=514, find_flag=HA_READ_KEY_EXACT) at ha_innodb.cc:8768:10

frame #7: 0x0000000104ff2c67 mysqld`handler::index_read_map(this=0x00007fccd9091430, buf=", key="\\x1e", keypart_map=1, find_flag=HA_READ_KEY_EXACT) at handler.h:2824:13

frame #8: 0x0000000104fe1f14 mysqld`handler::ha_index_read_map(this=0x00007fccd9091430, buf=", key="\\x1e", keypart_map=1, find_flag=HA_READ_KEY_EXACT) at handler.cc:3047:3

frame #9: 0x0000000104feeb62 mysqld`handler::read_range_first(this=0x00007fccd9091430, start_key=0x00007fccd9091518, end_key=0x00007fccd9091538, eq_range_arg=true, sorted=true) at handler.cc:7412:13

frame #10: 0x0000000104fec01b mysqld`handler::multi_range_read_next(this=0x00007fccd9091430, range_info=0x0000700009f3bc10) at handler.cc:6477:15

frame #11: 0x0000000104fed4ba mysqld`DsMrr_impl::dsmrr_next(this=0x00007fccd9091698, range_info=0x0000700009f3bc10) at handler.cc:6869:24

frame #12: 0x0000000105fee7f6 mysqld`ha_innobase::multi_range_read_next(this=0x00007fccd9091430, range_info=0x0000700009f3bc10) at ha_innodb.cc:20585:18

frame #13: 0x00000001056ec7c8 mysqld`QUICK_RANGE_SELECT::get_next(this=0x00007fcce2b15fa0) at opt_range.cc: 11247:21

frame #14: 0x00000001057371ad mysqld`rr_quick(info=0x0000700009f3c320) at records.cc:405:29

frame #15: 0x0000000105984e35 mysqld`mysql_update(thd=0x00007fcce585f400, fields=0x00007fcce5863618, values=0x00007fcce58643d8, limit=18446744073709551615, handle_duplicates=DUP_ERROR, found_return=0x0000700009f3cb48, updated_return=0x0000700009f3cb40) at sql_update.cc:819:14

frame #16: 0x000000010598cc87 mysqld`Sql_cmd_update::try_single_table_update(this=0x00007fcce58643c8, thd=0x00007fcce585f400, switch_to_multitable=0x0000700009f3cc07) at sql_update.cc:2927:21

frame #17: 0x000000010598d457 mysqld`Sql_cmd_update::execute(this=0x00007fcce58643c8, thd=0x00007fcce585f400) at sql_update.cc:3058:7

frame #18: 0x000000010589b475 mysqld`mysql_execute_command(thd=0x00007fcce585f400, first_level=true) at sql_parse.cc:3616:26

frame #19: 0x0000000105895d62 mysqld`mysql_parse(thd=0x00007fcce585f400, parser_state=0x0000700009f40340) at sql_parse.cc:5584:20

frame #20: 0x0000000105892bf0 mysqld`dispatch_command(thd=0x00007fcce585f400, com_data=0x0000700009f40e78, command=COM_QUERY) at sql_parse.cc:1491:5

frame #21: 0x0000000105894e70 mysqld`do_command(thd=0x00007fcce585f400) at sql_parse.cc:1032:17

frame #22: 0x0000000105a23976 mysqld`::handle_connection(arg=0x00007fcce2555370) at

connection_handler_per_thread.cc:313:13

frame #23: 0x00000001063ee74c mysqld`::pfs_spawn_thread(arg=0x00007fcce2555f80) at pfs.cc:2197:3

frame #24: 0x00007fff71032109 libsystem_pthread.dylib`_pthread_start + 148

frame #25: 0x00007fff7102db8b libsystem_pthread.dylib`thread_start + 15

可以看到connection1 在等待row1的行锁

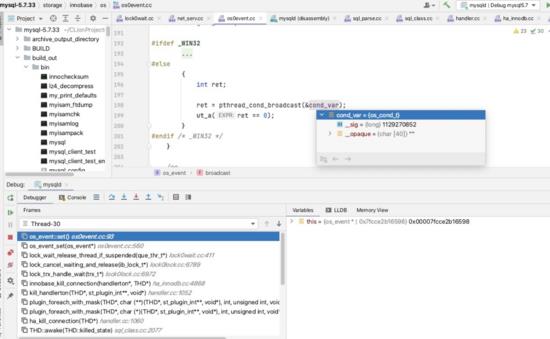

开启新的session作为connection2执行kill co nnection1的 命令,走到前面代码中分析到的ha_close_connection时,会去中断innodb行锁的等待。堆栈为

thread #30

frame #0: 0x00000001060f910e mysqld`os_event::set(this=0x00007fcce2b16598) at os0event.cc:93:2

frame #1: 0x00000001060f90b5 mysqld`os_event_set(event=0x00007fcce2b16598) at os0event.cc:560:9

frame #2: 0x00000001060aa06e mysqld`lock_wait_release_thread_if_suspended(thr=0x00007fccd90936f0) at lock0wait.cc:411:3

frame #3: 0x0000000106089a99 mysqld`lock_cancel_waiting_and_release(lock=0x00007fccd8866c18) at lock0lock. cc:6789:3

frame #4: 0x0000000106096679 mysqld`lock_trx_handle_wait(trx=0x00007fcce1cc0ce0) at lock0lock.cc:6972:3

frame #5: 0x0000000105ff93a6 mysqld`innobase_kill_connection(hton=0x00007fccd7e094d0,

thd=0x00007fcce585f400) at ha_innodb.cc:4868:3

frame #6: 0x0000000104fdcb96 mysqld`kill_handlerton(thd=0x00007fcce585f400, plugin=0x0000700009f809d8, (null)=0x0000000000000000) at handler.cc:1052:7

frame #7: 0x00000001058d659c mysqld`plugin_foreach_with_mask(thd=0x00007fcce585f400, funcs=0x0000700009f80a60, type=1, state_mask=4294967287, arg=0x0000000000000000)(THD*, st_plugin_int**, void*), int, unsigned int, void*) at sql_plugin.cc:2524:21

frame #8: 0x00000001058d66a2 mysqld`plugin_foreach_with_mask(thd=0x00007fcce585f400, func= (mysqld`kill_handlerton(THD*, st_plugin_int**, void*) at handler.cc:1046), type=1, state_mask=8, arg=0x0000000000000000)(THD*, st_plugin_int**, void*), int, unsigned int, void*) at sql_plugin.cc:2539:10

frame #9: 0x0000000104fdcb1b mysqld`ha_kill_connection(thd=0x00007fcce585f400) at handler.cc:1060:3

frame #10: 0x00000001057f8923 mysqld`THD::awake(this=0x00007fcce585f400, state_to_set=KILL_CONNECTION) at sql_class.cc:2077:5

frame #11: 0x00000001058a961f mysqld`kill_one_thread(thd=0x00007fccd8b9ea00, id=3, only_kill_query=false) at sql_parse.cc:6479:14

frame #12: 0x0000000105896529 mysqld`sql_kill(thd=0x00007fccd8b9ea00, id=3, only_kill_query=false) at sql_parse.cc:6507:16

frame #13: 0x000000010589e0fa mysqld`mysql_execute_command(thd=0x00007fccd8b9ea00, first_level=true) at sql_parse.cc:4210:5

frame #14: 0x0000000105895d62 mysqld`mysql_parse(thd=0x00007fccd8b9ea00, parser_state=0x0000700009f84340) at sql_parse.cc:5584:20

frame #15: 0x0000000105892bf0 mysqld`dispatch_command(thd=0x00007fccd8b9ea00, com_data=0x0000700009f84e78, command=COM_QUERY) at sql_parse.cc:1491:5

frame #16: 0x0000000105894e70 mysqld`do_command(thd=0x00007fccd8b9ea00) at sql_parse.cc:1032:17

frame #17: 0x0000000105a23976 mysqld`::handle_connection(arg=0x00007fcce5307d10) at

connection_handler_per_thread.cc:313:13

frame #18: 0x00000001063ee74c mysqld`::pfs_spawn_thread(arg=0x00007fcce2405030) at pfs.cc:2197:3

frame #19: 0x00007fff71032109 libsystem_pthread.dylib`_pthread_start + 148

frame #20: 0x00007fff7102db8b libsystem_pthread.dylib`thread_start + 15

注 :mysql启动时也会启动一个线程检测锁是否超时(间隔1s),也会去调用lock_cancel_waiting_and_release中断等待行锁的线程。

这里的超时机制也可以防止信号量丢失无法唤醒的问题。

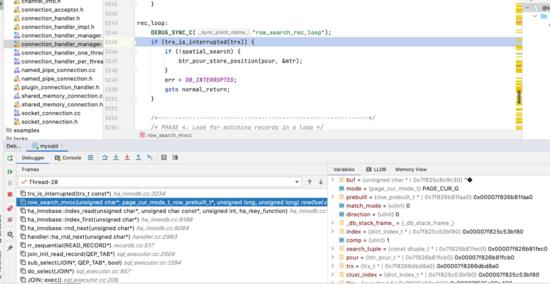

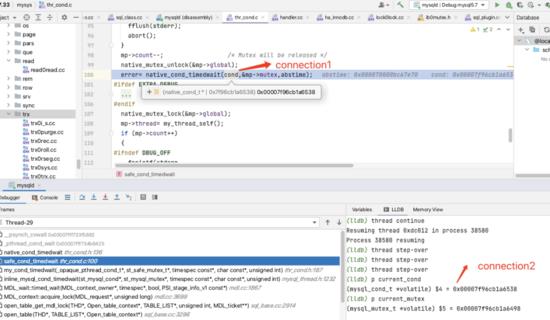

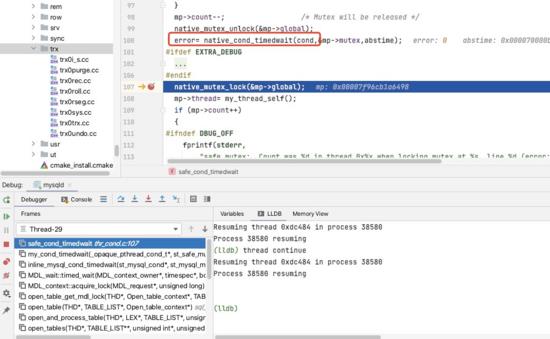

4)case4 (mysql层唤醒)

开启connection0,lock一张表。

然后开启connection1, 去update这张表,可以看到线程会阻塞在wait表锁状态。

thread #29

frame #0: 0x00007fff733f5882 libsystem_kernel.dylib` psynch_cvwait + 10

frame #1: 0x00007fff734b6425 libsystem_pthread.dylib`_pthread_cond_wait + 698

frame #2: 0x000000010ef6d495 mysqld`native_cond_timedwait(cond=0x00007f96cb1a6538,

mutex=0x00007f96cb1a64d8, abstime=0x000070000bc67e70) at thr_cond.h:136:10

frame #3: 0x000000010ef6d37a mysqld`safe_cond_timedwait(cond=0x00007f96cb1a6538, mp=0x00007f96cb1a6498, abstime=0x000070000bc67e70, file="/Users/zhaoxiaojie/CLionProjects/mysql-5.7.33/sql/mdl.cc", line=1868) at thr_cond.c:100:10

frame #4: 0x000000010ea69955 mysqld`my_cond_timedwait(cond=0x00007f96cb1a6538, mp=0x00007f96cb1a6498, abstime=0x000070000bc67e70, file="/Users/zhaoxiaojie/CLionProjects/mysql-5.7.33/sql/mdl.cc", line=1868) at thr_cond.h:187:10

frame #5: 0x000000010ea63341 mysqld`inline_mysql_cond_timedwait(that=0x00007f96cb1a6538, mutex=0x00007f96cb1a6498, abstime=0x000070000bc67e70, src_file="/Users/zhaoxiaojie/CLionProjects/mysql-5.7.33

/sql/mdl.cc", src_line=1868) at mysql_thread.h:1232:13

frame #6: 0x000000010ea631f8 mysqld`MDL_wait::timed_wait(this=0x00007f96cb1a6498, owner=0x00007f96cb1a6400, abs_timeout=0x000070000bc67e70, set_status_on_timeout=true, wait_state_name=0x00000001100b4b58) at mdl.cc:1867: 18

frame #7: 0x000000010ea660e5 mysqld`MDL_context::acquire_lock(this=0x00007f96cb1a6498, mdl_request=0x00007f96cb0e71a0, lock_wait_timeout=31536000) at mdl.cc:3699:25

frame #8: 0x000000010eb8a090 mysqld`open_table_get_mdl_lock(thd=0x00007f96cb1a6400, ot_ctx=0x000070000bc689a0, table_list=0x00007f96cb0e6e00, flags=0, mdl_ticket=0x000070000bc68318) at sql_base. cc:2914:35

frame #9: 0x000000010eb885ec mysqld`open_table(thd=0x00007f96cb1a6400, table_list=0x00007f96cb0e6e00, ot_ctx=0x000070000bc689a0) at sql_base.cc:3296:9

frame #10: 0x000000010eb8e77b mysqld`open_and_process_table(thd=0x00007f96cb1a6400, lex=0x00007f96cb1a8858, tables=0x00007f96cb0e6e00, counter=0x00007f96cb1a8918, flags=0, prelocking_strategy=0x000070000bc68ab8, has_prelocking_list=false, ot_ctx=0x000070000bc689a0) at sql_base.cc:5260:14

frame #11: 0x000000010eb8d8dc mysqld`open_tables(thd=0x00007f96cb1a6400, start=0x000070000bc68ac8, counter=0x00007f96cb1a8918, flags=0, prelocking_strategy=0x000070000bc68ab8) at sql_base.cc:5883:14

frame #12: 0x000000010eb90c6d mysqld`open_tables_for_query(thd=0x00007f96cb1a6400, tables=0x00007f96cb0e6e00, flags=0) at sql_base.cc:6660:7

frame #13: 0x000000010ed48b0c mysqld`Sql_cmd_update::try_single_table_update(this=0x00007f96cb0e6dc8, thd=0x00007f96cb1a6400, switch_to_multitable=0x000070000bc68c07) at sql_update.cc:2911:7

frame #14: 0x000000010ed49457 mysqld`Sql_cmd_update::execute(this=0x00007f96cb0e6dc8, thd=0x00007f96cb1a6400) at sql_update.cc:3058:7

frame #15: 0x000000010ec57475 mysqld`mysql_execute_command(thd=0x00007f96cb1a6400, first_level=true) at sql_parse.cc:3616:26

frame #16: 0x000000010ec51d62 mysqld`mysql_parse(thd=0x00007f96cb1a6400, parser_state=0x000070000bc6c340) at sql_parse.cc:5584:20

frame #17: 0x000000010ec4ebf0 mysqld`dispatch_command(thd=0x00007f96cb1a6400, com_data=0x000070000bc6ce78, command=COM_QUERY) at sql_parse.cc:1491:5

frame #18: 0x000000010ec50e70 mysqld`do_command(thd=0x00007f96cb1a6400) at sql_parse.cc:1032:17

frame #19: 0x000000010eddf976 mysqld`::handle_connection(arg=0x00007f96cfe2e1b0) at

connection_handler_per_thread.cc:313:13

frame #20: 0x000000010f7aa74c mysqld`::pfs_spawn_thread(arg=0x00007f96d5c1b300) at pfs.cc:2197:3

frame #21: 0x00007fff734b6109 libsystem_pthread.dylib`_pthread_start + 148

frame #22: 0x00007fff734b1b8b libsystem_pthread.dylib`thread_start + 15

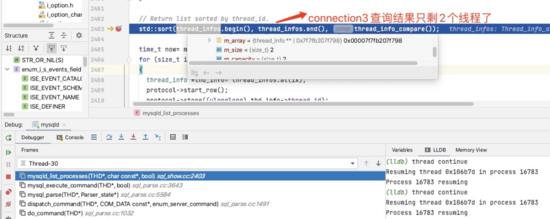

开启connection2,去kill connection1,执行到awake时,可以看到要唤醒的条件变量和 connection1在等待的是同一个对象。(0x00007f96cb1a6538)

connection2执行完broadcast之后,connection1线程即被唤醒

之后一层层返回错误,在sql_parse中会执行trans_rollback_stmt执行回滚操作。最后在handle_connection中检查到connection需要被关闭。

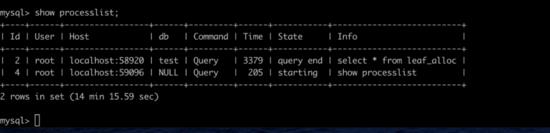

2、实际案例1

之前在工作中实际碰到过一个执行kill命令后,连接数反而持续上涨的案例,现象简单概括就是:

异常流量导致数据库实例连接数过多后,dba执行kill连接,连接数会短暂下降,之后连接数会继续上涨,继续kill之后,连接数还是会上涨。并且大量线程一直处于killed状态,看起来像是无法kill连接,只能重启解决。

1)问题

连接数为什么反而会上涨?

前面KILL工作流程1.(2)部分中提到,kill线程会在唤醒被kill线程之前先关闭连接,客户端就是这个时候报lost connection的错误。这样大量客户端在线程还未被kill结束时已经开始了重连(sdk连接池),这样造成了问题加剧。

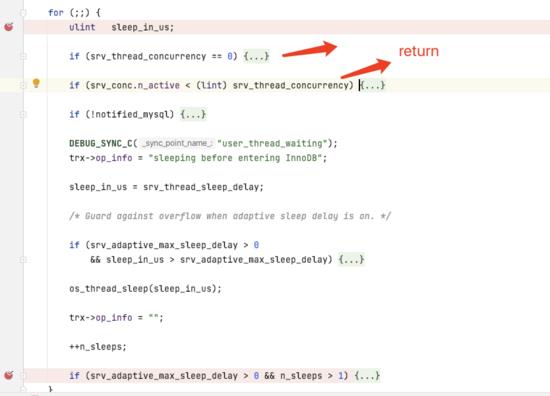

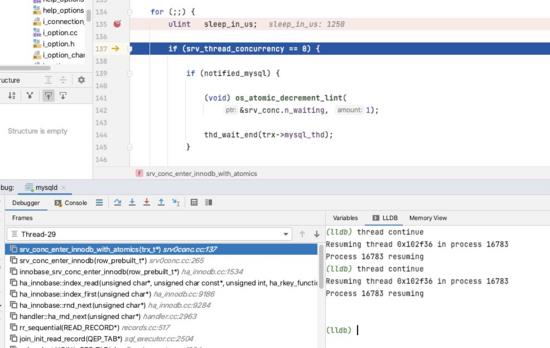

另外MySQL中innodb_thread_concurrency会限制进入innodb的线程并发度。那么当进入innodb层的线程达到阈值后,后面重建的大量连接会在 mysq l 层执行for循环判断是否可以进入innodb。但是这个过程是没有检查killed状态的,导致这些线程一直无法被kill(尽管show processlist显示为Killed)。除非innodb里一个线程退出,使得某个线程可以进入innodb,从而执行代码到主动检查处或被唤醒执行kill逻辑。

srv0conc.cc srv_conc_enter_innodb_with_atomics

注:实际 mysq l 会有相关参数控制进入innodb时的最大等待时间,为简化描述问题,暂不展开。

2)复现

将mysql innodb_thread_concurrency设置为1(默认为0代表不限制)。connection1执行select进入innodb后暂停住线程。

connection2也执行select,那么会发现connection2会卡在检查是否可以进入innodb。具体堆栈为:

* frame #0: 0x000000010c9772bd mysqld`srv_conc_enter_innodb(prebuilt=0x00007f7fb1065ca0) at srv0conc.cc:265:37

frame #1: 0x000000010c6d9498 mysqld`innobase_srv_conc_enter_innodb(prebuilt=0x00007f7fb1065ca0) at ha_innodb.cc: 1534:4

frame #2: 0x000000010c6dcc19 mysqld`ha_innobase::index_read(this=0x00007f7fb10a3630, buf=", key_ptr=0x0000000000000000, key_len=0, find_flag=HA_READ_AFTER_KEY) at ha_innodb.cc:8753:3

frame #3: 0x000000010c6dd98c mysqld`ha_innobase::index_first(this=0x00007f7fb10a3630, buf=") at ha_innodb.cc: 9186:14

frame #4: 0x000000010c6ddc2a mysqld`ha_innobase::rnd_next(this=0x00007f7fb10a3630, buf=") at ha_innodb.cc:9284: 11

frame #5: 0x000000010b6e57a5 mysqld`handler::ha_rnd_next(this=0x00007f7fb10a3630, buf=") at handler.cc:2963:3

frame #6: 0x000000010be3b4d4 mysqld`rr_sequential(info=0x00007f7fb0c1dd90) at records.cc:517:34

frame #7: 0x000000010bf2bc78 mysqld`join_init_read_record(tab=0x00007f7fb0c1dd40) at sql_executor.cc:2504:10

frame #8: 0x000000010bf28a1c mysqld`sub_select(join=0x00007f7fb206fc48, qep_tab=0x00007f7fb0c1dd40, end_of_records=false) at sql_executor.cc:1284:14

frame #9: 0x000000010bf25299 mysqld`do_select(join=0x00007f7fb206fc48) at sql_executor.cc:957:12

frame #10: 0x000000010bf24c26 mysqld`JOIN::exec(this=0x00007f7fb206fc48) at sql_executor.cc:206:10 frame #11: 0x000000010c005e90 mysqld`handle_query(thd=0x00007f7fb2065a00, lex=0x00007f7fb2067e58, result=0x00007f7fb206f2f8, added_options=0, removed_options=0) at sql_select.cc:191:21

frame #12: 0x000000010bfa66f7 mysqld`execute_sqlcom_select(thd=0x00007f7fb2065a00, all_tables=0x00007f7fb206ecb0) at sql_parse.cc:5155:12

frame #13: 0x000000010bf9c527 mysqld`mysql_execute_command(thd=0x00007f7fb2065a00, first_level=true) at sql_parse.cc:2826:12

frame #14: 0x000000010bf99d62 mysqld`mysql_parse(thd=0x00007f7fb2065a00, parser_state=0x0000700007205340) at sql_parse.cc:5584:20

frame #15: 0x000000010bf96bf0 mysqld`dispatch_command(thd=0x00007f7fb2065a00, com_data=0x0000700007205e78, command=COM_QUERY) at sql_parse.cc:1491:5

frame #16: 0x000000010bf98e70 mysqld`do_command(thd=0x00007f7fb2065a00) at sql_parse.cc:1032:17

frame #17: 0x000000010c127976 mysqld`::handle_connection(arg=0x00007f7fbcb5e650) at connection_handler_per_thread.cc:313:13

frame #18: 0x000000010caf274c mysqld`::pfs_spawn_thread(arg=0x00007f7fbcb6a5e0) at pfs.cc:2197:3

frame #19: 0x00007fff71032109 libsystem_pthread.dylib`_pthread_start + 148

frame #20: 0x00007fff7102db8b libsystem_pthread.dylib`thread_start + 15

再开启一个connection3, 去kill connection2,可以发现connection2会一直处于killed状态(客户端会断开连接)

即使connection3完成了前面提到的ha_close_connection和broadcast信号量,connection2的堆栈还是一直在上面for循环中。

直到connection1退出innodb 。

connection2进入innodb之后通过主动检查的方式执行kill逻辑。

此时show processlist显示:

好了…

以上是关于MySQL进阶垫脚石:线程长时间处于killed状态怎么破?的主要内容,如果未能解决你的问题,请参考以下文章

vb.net主线程使用BackgroundWorker进行后台长时间的检索操作的同时怎么做到前台画面的其他操作能够响应?