Transfer Learning: Quickly Implementing Text Classification

Posted by 一颗小树x


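Editor's note: This article was compiled by the editors of 小常识网 (cha138.com). It covers transfer learning for quickly implementing text classification, and we hope it is useful to you.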

Preface

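This article walks through a neural-network example: we build and train a model that performs text classification on movie reviews, labeling each review as either positive or negative. This is a binary classification problem.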

It uses the IMDB dataset from the Internet Movie Database, a binary sentiment classification dataset containing 50,000 movie reviews.

For details on the IMDB dataset, see: https://ai.stanford.edu/~amaas/data/sentiment/

Purpose

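The main purpose of this article is to familiarize you with the workflow for developing a neural network: processing the dataset, building the model, training it, and using it. More importantly, it introduces the transfer-learning workflow. Using a widely recognized network model as a pretrained model makes development more efficient.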

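Workflow

- Import the dataset

- Explore the data and preprocess it

- Build the model (assemble the neural network, compile the model)

- Train the model (feed the data into the model, evaluate accuracy, make predictions, verify the predictions)

- Use the trained model

- Improve the model: rebuild it, retrain it, and use it again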

1. Import the dataset

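The IMDB dataset is available from TensorFlow Datasets. It is a binary sentiment classification dataset of 50,000 movie reviews. Of these, 25,000 reviews are used for training and the other 25,000 for testing.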

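The 25,000 training reviews are further split into 60% for the training set and 40% for the validation set. The result is 25,000 test reviews, 15,000 training reviews, and 10,000 validation reviews.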

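```python
import tensorflow_datasets as tfds

# train[:60%] -> training set, train[60%:] -> validation set, test -> test set
train_data, validation_data, test_data = tfds.load(
    name="imdb_reviews",
    split=('train[:60%]', 'train[60%:]', 'test'),
    as_supervised=True)
```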
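As a quick sanity check, the sketch below reproduces the 60%/40% split sizes with plain integer arithmetic. It assumes the percentage divides the 25,000 reviews evenly, which it does here. TensorFlow Datasets applies its own rounding rules for other sizes, so treat this as a back-of-the-envelope check and not as the library's logic.

```python
TRAIN_TOTAL = 25_000  # reviews in the IMDB 'train' split
TEST_TOTAL = 25_000   # reviews in the IMDB 'test' split

def split_sizes(total, train_pct):
    """Return (train, validation) sizes for a 'train[:p%]' / 'train[p%:]' split.
    Assumes total * train_pct is divisible by 100 (true for 25,000 and 60%)."""
    train = total * train_pct // 100
    return train, total - train

train_n, val_n = split_sizes(TRAIN_TOTAL, 60)
print(train_n, val_n, TEST_TOTAL)  # 15000 10000 25000
```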

2. Explore the data and preprocess it

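Each sample is a sentence: a movie review together with its label. The sentences have not been preprocessed. The label is an integer, either 0 or 1, where 0 means a negative review and 1 means a positive review.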

Look at the first ten samples:

```python
train_examples_batch, train_labels_batch = next(iter(train_data.batch(10)))

# Show the first ten reviews
train_examples_batch

# Show the first ten labels
train_labels_batch
```

The first ten reviews:

array([b"This was an absolutely terrible movie. Don't be lured in by Christopher Walken or Michael Ironside. Both are great actors, but this must simply be their worst role in history. Even their great acting could not redeem this movie's ridiculous storyline. This movie is an early nineties US propaganda piece. The most pathetic scenes were those when the Columbian rebels were making their cases for revolutions. Maria Conchita Alonso appeared phony, and her pseudo-love affair with Walken was nothing but a pathetic emotional plug in a movie that was devoid of any real meaning. I am disappointed that there are movies like this, ruining actor's like Christopher Walken's good name. I could barely sit through it.",

b'I have been known to fall asleep during films, but this is usually due to a combination of things including, really tired, being warm and comfortable on the sette and having just eaten a lot. However on this occasion I fell asleep because the film was rubbish. The plot development was constant. Constantly slow and boring. Things seemed to happen, but with no explanation of what was causing them or why. I admit, I may have missed part of the film, but i watched the majority of it and everything just seemed to happen of its own accord without any real concern for anything else. I cant recommend this film at all.',

b'Mann photographs the Alberta Rocky Mountains in a superb fashion, and Jimmy Stewart and Walter Brennan give enjoyable performances as they always seem to do. <br /><br />But come on Hollywood - a Mountie telling the people of Dawson City, Yukon to elect themselves a marshal (yes a marshal!) and to enforce the law themselves, then gunfighters battling it out on the streets for control of the town? <br /><br />Nothing even remotely resembling that happened on the Canadian side of the border during the Klondike gold rush. Mr. Mann and company appear to have mistaken Dawson City for Deadwood, the Canadian North for the American Wild West.<br /><br />Canadian viewers be prepared for a Reefer Madness type of enjoyable howl with this ludicrous plot, or, to shake your head in disgust.',

b'This is the kind of film for a snowy Sunday afternoon when the rest of the world can go ahead with its own business as you descend into a big arm-chair and mellow for a couple of hours. Wonderful performances from Cher and Nicolas Cage (as always) gently row the plot along. There are no rapids to cross, no dangerous waters, just a warm and witty paddle through New York life at its best. A family film in every sense and one that deserves the praise it received.',

b'As others have mentioned, all the women that go nude in this film are mostly absolutely gorgeous. The plot very ably shows the hypocrisy of the female libido. When men are around they want to be pursued, but when no "men" are around, they become the pursuers of a 14 year old boy. And the boy becomes a man really fast (we should all be so lucky at this age!). He then gets up the courage to pursue his true love.',

b"This is a film which should be seen by anybody interested in, effected by, or suffering from an eating disorder. It is an amazingly accurate and sensitive portrayal of bulimia in a teenage girl, its causes and its symptoms. The girl is played by one of the most brilliant young actresses working in cinema today, Alison Lohman, who was later so spectacular in 'Where the Truth Lies'. I would recommend that this film be shown in all schools, as you will never see a better on this subject. Alison Lohman is absolutely outstanding, and one marvels at her ability to convey the anguish of a girl suffering from this compulsive disorder. If barometers tell us the air pressure, Alison Lohman tells us the emotional pressure with the same degree of accuracy. Her emotional range is so precise, each scene could be measured microscopically for its gradations of trauma, on a scale of rising hysteria and desperation which reaches unbearable intensity. Mare Winningham is the perfect choice to play her mother, and does so with immense sympathy and a range of emotions just as finely tuned as Lohman's. Together, they make a pair of sensitive emotional oscillators vibrating in resonance with one another. This film is really an astonishing achievement, and director Katt Shea should be proud of it. The only reason for not seeing it is if you are not interested in people. But even if you like nature films best, this is after all animal behaviour at the sharp edge. Bulimia is an extreme version of how a tormented soul can destroy her own body in a frenzy of despair. And if we don't sympathise with people suffering from the depths of despair, then we are dead inside.",

b'Okay, you have:<br /><br />Penelope Keith as Miss Herringbone-Tweed, B.B.E. (Backbone of England.) She\\'s killed off in the first scene - that\\'s right, folks; this show has no backbone!<br /><br />Peter O\\'Toole as Ol\\' Colonel Cricket from The First War and now the emblazered Lord of the Manor.<br /><br />Joanna Lumley as the ensweatered Lady of the Manor, 20 years younger than the colonel and 20 years past her own prime but still glamourous (Brit spelling, not mine) enough to have a toy-boy on the side. It\\'s alright, they have Col. Cricket\\'s full knowledge and consent (they guy even comes \\'round for Christmas!) Still, she\\'s considerate of the colonel enough to have said toy-boy her own age (what a gal!)<br /><br />David McCallum as said toy-boy, equally as pointlessly glamourous as his squeeze. Pilcher couldn\\'t come up with any cover for him within the story, so she gave him a hush-hush job at the Circus.<br /><br />and finally:<br /><br />Susan Hampshire as Miss Polonia Teacups, Venerable Headmistress of the Venerable Girls\\' Boarding-School, serving tea in her office with a dash of deep, poignant advice for life in the outside world just before graduation. Her best bit of advice: "I\\'ve only been to Nancherrow (the local Stately Home of England) once. I thought it was very beautiful but, somehow, not part of the real world." Well, we can\\'t say they didn\\'t warn us.<br /><br />Ah, Susan - time was, your character would have been running the whole show. They don\\'t write \\'em like that any more. Our loss, not yours.<br /><br />So - with a cast and setting like this, you have the re-makings of "Brideshead Revisited," right?<br /><br />Wrong! They took these 1-dimensional supporting roles because they paid so well. After all, acting is one of the oldest temp-jobs there is (YOU name another!)<br /><br />First warning sign: lots and lots of backlighting. 
They get around it by shooting outdoors - "hey, it\\'s just the sunlight!"<br /><br />Second warning sign: Leading Lady cries a lot. When not crying, her eyes are moist. That\\'s the law of romance novels: Leading Lady is "dewy-eyed."<br /><br />Henceforth, Leading Lady shall be known as L.L.<br /><br />Third warning sign: L.L. actually has stars in her eyes when she\\'s in love. Still, I\\'ll give Emily Mortimer an award just for having to act with that spotlight in her eyes (I wonder . did they use contacts?)<br /><br />And lastly, fourth warning sign: no on-screen female character is "Mrs." She\\'s either "Miss" or "Lady."<br /><br />When all was said and done, I still couldn\\'t tell you who was pursuing whom and why. I couldn\\'t even tell you what was said and done.<br /><br />To sum up: they all live through World War II without anything happening to them at all.<br /><br />OK, at the end, L.L. finds she\\'s lost her parents to the Japanese prison camps and baby sis comes home catatonic. Meanwhile (there\\'s always a "meanwhile,") some young guy L.L. had a crush on (when, I don\\'t know) comes home from some wartime tough spot and is found living on the street by Lady of the Manor (must be some street if SHE\\'s going to find him there.) Both war casualties are whisked away to recover at Nancherrow (SOMEBODY has to be "whisked away" SOMEWHERE in these romance stories!)<br /><br />Great drama.',

b'The film is based on a genuine 1950s novel.<br /><br />Journalist Colin McInnes wrote a set of three "London novels": "Absolute Beginners", "City of Spades" and "Mr Love and Justice". I have read all three. The first two are excellent. The last, perhaps an experiment that did not come off. But McInnes\\'s work is highly acclaimed; and rightly so. This musical is the novelist\\'s ultimate nightmare - to see the fruits of one\\'s mind being turned into a glitzy, badly-acted, soporific one-dimensional apology of a film that says it captures the spirit of 1950s London, and does nothing of the sort.<br /><br />Thank goodness Colin McInnes wasn\\'t alive to witness it.',

b'I really love the sexy action and sci-fi films of the sixties and its because of the actress\\'s that appeared in them. They found the sexiest women to be in these films and it didn\\'t matter if they could act (Remember "Candy"?). The reason I was disappointed by this film was because it wasn\\'t nostalgic enough. The story here has a European sci-fi film called "Dragonfly" being made and the director is fired. So the producers decide to let a young aspiring filmmaker (Jeremy Davies) to complete the picture. They\\'re is one real beautiful woman in the film who plays Dragonfly but she\\'s barely in it. Film is written and directed by Roman Coppola who uses some of his fathers exploits from his early days and puts it into the script. I wish the film could have been an homage to those early films. They could have lots of cameos by actors who appeared in them. There is one actor in this film who was popular from the sixties and its John Phillip Law (Barbarella). Gerard Depardieu, Giancarlo Giannini and Dean Stockwell appear as well. I guess I\\'m going to have to continue waiting for a director to make a good homage to the films of the sixties. If any are reading this, "Make it as sexy as you can"! I\\'ll be waiting!',

b'Sure, this one isn\\'t really a blockbuster, nor does it target such a position. "Dieter" is the first name of a quite popular German musician, who is either loved or hated for his kind of acting and thats exactly what this movie is about. It is based on the autobiography "Dieter Bohlen" wrote a few years ago but isn\\'t meant to be accurate on that. The movie is filled with some sexual offensive content (at least for American standard) which is either amusing (not for the other "actors" of course) or dumb - it depends on your individual kind of humor or on you being a "Bohlen"-Fan or not. Technically speaking there isn\\'t much to criticize. Speaking of me I find this movie to be an OK-movie.'],

dtype=object)>

The first ten labels:

<tf.Tensor: shape=(10,), dtype=int64, numpy=array([0, 0, 0, 1, 1, 1, 0, 0, 0, 0])>

The 4th, 5th, and 6th reviews are positive, and all the others are negative.
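The same reading of the labels can be checked in plain Python. The list below copies the ten label values printed above. `LABEL_NAMES` is a hypothetical helper mapping for readability and is not part of the dataset API.

```python
# The ten label values printed above (0 = negative, 1 = positive)
labels = [0, 0, 0, 1, 1, 1, 0, 0, 0, 0]
LABEL_NAMES = {0: "negative", 1: "positive"}  # hypothetical helper mapping

# 1-based positions of the positive reviews
positive_positions = [i + 1 for i, y in enumerate(labels) if y == 1]
print(positive_positions)  # [4, 5, 6]
print([LABEL_NAMES[y] for y in labels])
```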

3. Build the model

We build the neural network by stacking layers in sequence with tf.keras.Sequential(). Before building it, consider a few questions:

- How should the text be represented?

- How many layers should the model have?

- How many hidden units should each layer have?

3.1 Representing the text

One way to represent text is to convert sentences into embedding vectors. We can use a pretrained text embedding as the first layer. Here that is the pretrained text-embedding model google/tf2-preview/gnews-swivel-20dim/1 from TensorFlow Hub.

Advantages

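- No need to worry about text preprocessing, because you don't have to work out how to turn sentences into embedding vectors yourself.

- You benefit from transfer learning by using a pretrained model.

- The embedding vectors have a fixed length, which makes them easier to work with. Whatever the length of the input text, the embedding output has the shape (num_examples, embedding_dimension).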
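The toy NumPy sketch below shows why the output length does not depend on sentence length. It assumes the approach described for this TF Hub module: split the sentence on spaces, look up one vector per word, and combine the word vectors with a "sqrtn" combiner (their sum divided by the square root of the word count). The vocabulary, the random vectors, and the shared out-of-vocabulary row are all made up for illustration. They are not the real module's weights.

```python
import numpy as np

EMBED_DIM = 20  # same output dimension as gnews-swivel-20dim
rng = np.random.default_rng(0)

# Hypothetical toy vocabulary with random vectors (NOT the real TF Hub weights)
vocab = {w: i for i, w in enumerate(["this", "movie", "was", "great", "terrible"])}
table = rng.normal(size=(len(vocab) + 1, EMBED_DIM))  # extra last row: unknown words
OOV = len(vocab)

def embed_sentence(sentence):
    tokens = sentence.lower().split()            # split on whitespace
    ids = [vocab.get(t, OOV) for t in tokens]    # unknown words share one row
    if not ids:
        return np.zeros(EMBED_DIM)               # empty input -> zero vector
    vectors = table[ids]                         # shape (num_tokens, EMBED_DIM)
    return vectors.sum(axis=0) / np.sqrt(len(ids))  # sqrtn combiner

def embed_batch(sentences):
    # Every sentence becomes one EMBED_DIM vector, whatever its length
    return np.stack([embed_sentence(s) for s in sentences])

batch = ["This movie was great", "terrible", "This was a terrible terrible movie"]
print(embed_batch(batch).shape)  # (3, 20)
```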

Implementation

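```python
import tensorflow as tf
import tensorflow_hub as hub

embedding = "https://tfhub.dev/google/tf2-preview/gnews-swivel-20dim/1"
hub_layer = hub.KerasLayer(embedding, input_shape=[],
                           dtype=tf.string, trainable=True)

# Embed the first three reviews; the output has shape (3, 20)
hub_layer(train_examples_batch[:3])
```

3.2 Design the model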

Because we stack the layers in sequence, we also need to decide on the hidden layers.

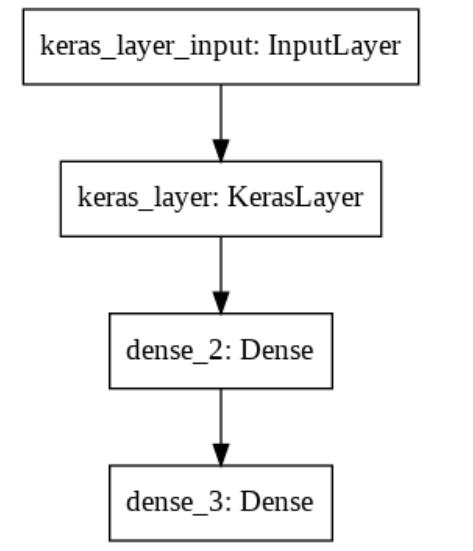

Input layer: takes the IMDB review text as input.

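First hidden layer: represents the text by converting each sentence into an embedding vector. This layer uses a saved, pretrained text-embedding model to map each sentence to an embedding vector. It splits the sentence into tokens, embeds each token, and then combines the token embeddings.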

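Second hidden layer: learns patterns from the vectors produced by the previous layer, so a fully connected Dense layer is used. The number of hidden units (nodes) depends on the output size of the first layer. As a rule of thumb, if the first layer outputs 20 values, 16 hidden units are a reasonable choice for the second layer; if it outputs 100 values, 64 hidden units could be used.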

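Output layer: performs the binary classification (is the review positive or negative?), so a single node is enough. It is a Dense layer fully connected to the second hidden layer. Applying the sigmoid function to this node's output gives a floating-point value between 0 and 1, which represents the model's confidence that the review is positive. In the code below, the final Dense(1) layer has no activation, so it outputs a raw score (a logit). The sigmoid is applied inside the loss function through from_logits=True.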
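Here is a minimal NumPy sketch of what the two Dense layers and the sigmoid compute. It assumes a batch of 20-dimensional sentence embeddings like those produced by the first layer. The weights are random placeholders, not trained values. The sketch only illustrates the shapes and the order of operations: a ReLU hidden layer, then a single raw score (logit), then the sigmoid that maps the score into (0, 1).

```python
import numpy as np

rng = np.random.default_rng(0)
batch_size, embed_dim, hidden = 4, 20, 16

x = rng.normal(size=(batch_size, embed_dim))  # stand-in for the embedding layer's output

# Hypothetical random weights; a trained model would learn these
W1, b1 = rng.normal(size=(embed_dim, hidden)), np.zeros(hidden)
W2, b2 = rng.normal(size=(hidden, 1)), np.zeros(1)

h = np.maximum(x @ W1 + b1, 0.0)        # Dense(16, activation='relu')
logits = h @ W2 + b2                    # Dense(1): no activation, raw score
probs = 1.0 / (1.0 + np.exp(-logits))   # sigmoid: confidence that the review is positive

print(logits.shape, probs.shape)  # (4, 1) (4, 1)
```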

Now build the model:

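```python
model = tf.keras.Sequential()
model.add(hub_layer)
model.add(tf.keras.layers.Dense(16, activation='relu'))
model.add(tf.keras.layers.Dense(1))  # one output unit, no activation: outputs a logit
```

Take a look at the network with tf.keras.utils.plot_model(model) (this requires pydot and graphviz to be installed):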

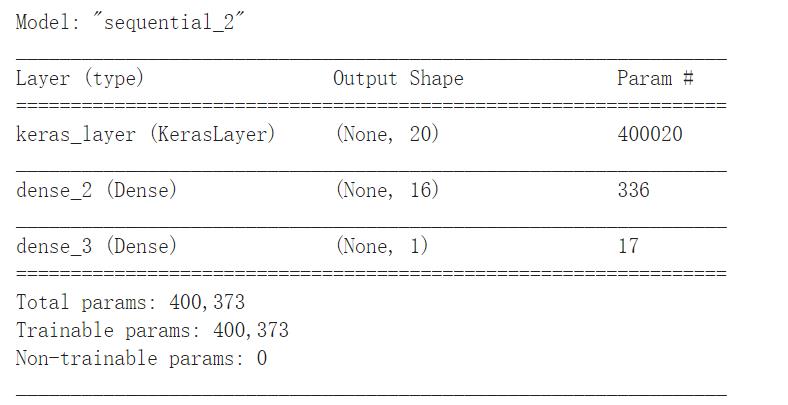

Alternatively, print a text summary with model.summary().
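You can work out the Dense layers' parameter counts in `model.summary()` by hand. Each Dense layer has an `input_dim × units` weight matrix plus one bias per unit. The helper below is a hypothetical illustration of that formula. The hub layer's own parameter count depends on the module's vocabulary size and is not computed here.

```python
def dense_params(input_dim, units):
    """Trainable parameters of a Dense layer: a weight matrix plus one bias per unit."""
    return input_dim * units + units

hidden = dense_params(20, 16)  # Dense(16) applied to the 20-dim embedding
output = dense_params(16, 1)   # Dense(1) applied to the 16 hidden units
print(hidden, output)  # 336 17
```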

3.3 Compile the model

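A model needs a loss function and an optimizer for training. This is a binary classification problem and the model outputs a single logit (a one-unit layer without an activation). We therefore use the binary cross-entropy loss with from_logits=True, which applies the sigmoid internally.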

```python
model.compile(optimizer='adam',
              loss=tf.keras.losses.BinaryCrossentropy(from_logits=True),
              metrics=['accuracy'])
```
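Because the last layer outputs a logit, `from_logits=True` lets the loss apply the sigmoid itself. The NumPy sketch below computes binary cross-entropy directly from logits, using the numerically stable form documented for `tf.nn.sigmoid_cross_entropy_with_logits`. It shows that this gives the same value as the textbook formula applied to sigmoid probabilities. The labels and logits are arbitrary example numbers.

```python
import numpy as np

def bce_from_logits(labels, logits):
    """Mean binary cross-entropy computed directly from logits (numerically stable form)."""
    z = np.asarray(logits, dtype=float)
    y = np.asarray(labels, dtype=float)
    # Equal to -[y*log(sigmoid(z)) + (1-y)*log(1-sigmoid(z))], but never overflows
    return np.mean(np.maximum(z, 0) - z * y + np.log1p(np.exp(-np.abs(z))))

def bce_from_probs(labels, probs):
    """Textbook binary cross-entropy applied to probabilities."""
    y = np.asarray(labels, dtype=float)
    p = np.asarray(probs, dtype=float)
    return np.mean(-(y * np.log(p) + (1 - y) * np.log(1 - p)))

labels = [0, 1, 1, 0]
logits = [-2.0, 3.0, 0.5, 1.0]
probs = 1 / (1 + np.exp(-np.array(logits)))
print(bce_from_logits(labels, logits), bce_from_probs(labels, probs))  # the two values agree
```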

4. Train the model

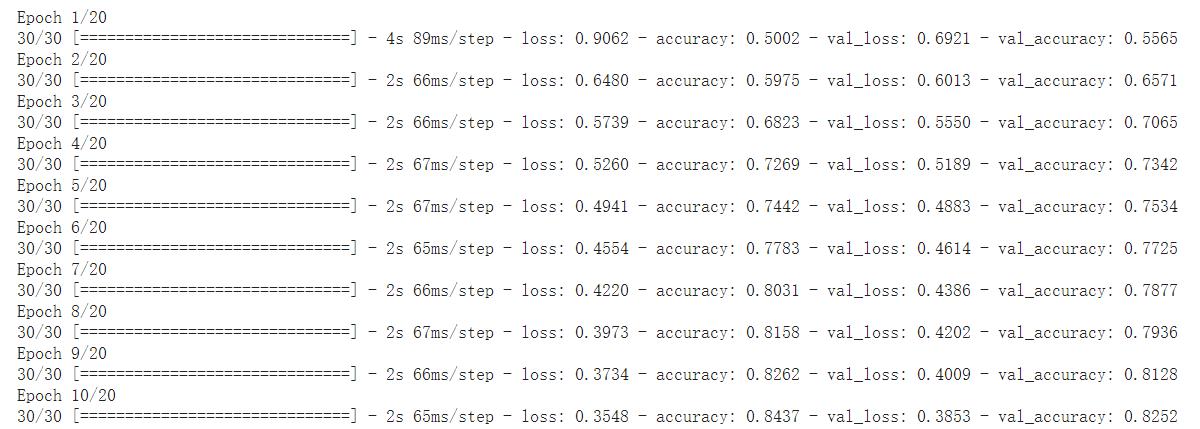

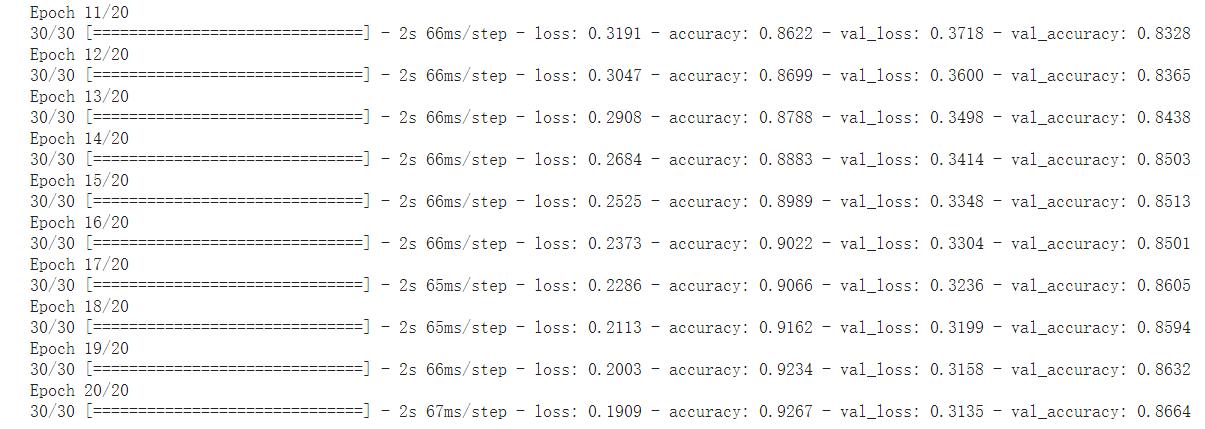

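Here we feed in the prepared training data (reviews and their labels) and use the validation data (reviews and their labels) to monitor performance during training. The model is trained for 20 epochs.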

The model is trained for 20 epochs in mini-batches of 512 samples, which means 20 passes over all samples in train_data.
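To make the batch arithmetic concrete: with 15,000 training reviews and a batch size of 512, each epoch splits into the number of steps computed below. `Dataset.batch` keeps the final, smaller batch by default (`drop_remainder=False`), which is why the count rounds up.

```python
import math

train_size = 15_000   # reviews in train[:60%]
batch_size = 512
epochs = 20

steps_per_epoch = math.ceil(train_size / batch_size)        # last batch is partial
last_batch = train_size - (steps_per_epoch - 1) * batch_size
print(steps_per_epoch, last_batch, steps_per_epoch * epochs)  # 30 152 600
```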

```python
history = model.fit(train_data.shuffle(10000).batch(512),
                    epochs=20,
                    validation_data=validation_data.batch(512),
                    verbose=1)
```

Screenshots of the training process are shown at the start of this section.

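In general, the lower the loss, the better. The loss measures the gap between the model's predictions and the true labels. Here it is the binary cross-entropy, so it reflects how far the predicted probabilities are from the actual labels.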

In general, the higher the accuracy, the better the model.
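For a single-logit output, a prediction counts as positive when the logit is above 0. This is the same as the sigmoid probability being above 0.5. The sketch below computes accuracy that way on arbitrary example numbers.

One caveat is worth checking in your own setup. With `metrics=['accuracy']`, Keras' built-in binary accuracy compares the raw model output against a threshold of 0.5. On logits, its figures may therefore differ slightly from this sketch.

```python
import numpy as np

def binary_accuracy_from_logits(labels, logits):
    """Fraction of samples whose predicted class matches the label.
    logit > 0 is equivalent to sigmoid(logit) > 0.5, i.e. a 'positive' prediction."""
    preds = (np.asarray(logits) > 0).astype(int)
    return float(np.mean(preds == np.asarray(labels)))

print(binary_accuracy_from_logits([0, 1, 1, 0], [-2.0, 3.0, -0.5, 1.0]))  # 0.5
```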

Evaluate the model

Check how well the model performs on the test set. evaluate returns two values: the loss and the accuracy.

```python
results = model.evaluate(test_data.batch(512), verbose=2)

for name, value in zip(model.metrics_names, results):
    print("%s: %.3f" % (name, value))
```

Output: loss: 0.326 accuracy: 0.857, so the model's accuracy is about 85.7%.

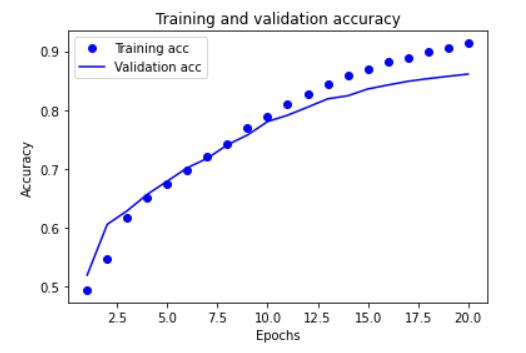

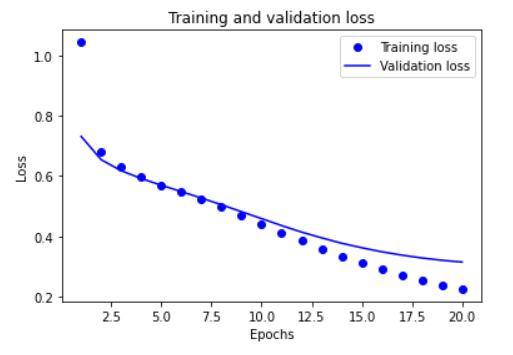

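To chart accuracy and loss over time, use the History object returned by model.fit(). Its history attribute is a dictionary that records the loss and each metric for every training epoch: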

dict_keys(['loss', 'accuracy', 'val_loss', 'val_accuracy'])
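When validation loss stops falling, or starts rising, while training loss keeps dropping, the model is starting to overfit. The small hypothetical helper below picks the epoch with the lowest validation loss from `history.history`. The numbers in the example are toy values for illustration only, not results from this article's training run.

```python
def best_epoch(history_dict, key="val_loss"):
    """Return (1-based epoch, value) for the lowest value of `key`."""
    values = history_dict[key]
    i = min(range(len(values)), key=values.__getitem__)
    return i + 1, values[i]

# Toy values for illustration only; not results from this article's training run
toy_history = {"val_loss": [0.60, 0.45, 0.38, 0.36, 0.37, 0.40]}
print(best_epoch(toy_history))  # (4, 0.36)
```

With the real run, you would call `best_epoch(history.history)`.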

Chart of loss over time:

```python
import matplotlib.pyplot as plt

history_dict = history.history
history_dict.keys()

acc = history_dict['accuracy']
val_acc = history_dict['val_accuracy']
loss = history_dict['loss']
val_loss = history_dict['val_loss']

epochs = range(1, len(acc) + 1)

# "bo" means "blue dots"
plt.plot(epochs, loss, 'bo', label='Training loss')
# "b" means "solid blue line"
plt.plot(epochs, val_loss, 'b', label='Validation loss')
plt.title('Training and validation loss')
plt.xlabel('Epochs')
plt.ylabel('Loss')
plt.legend()
plt.show()
```

Chart of accuracy over time:

```python
plt.clf()  # clear the figure
plt.plot(epochs, acc, 'bo', label='Training acc')
plt.plot(epochs, val_acc, 'b', label='Validation acc')
plt.title('Training and validation accuracy')
plt.xlabel('Epochs')
plt.ylabel('Accuracy')
plt.legend()
plt.show()
```

5. Use the model

Predictions are usually made with the model.predict() function.
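The final `Dense(1)` layer has no activation, so `model.predict` on this model returns one raw logit per review, with shape `(n, 1)`. With the trained model, a call might look like `model.predict(tf.constant(["An absolutely wonderful film."]))`. The hypothetical helper below turns such logits into probabilities and labels. The logits in the example are made up, not real model output.

```python
import numpy as np

def interpret_logits(logits, threshold=0.5):
    """Turn (n, 1) logits like those from model.predict into probabilities and labels."""
    z = np.asarray(logits, dtype=float).reshape(-1)
    probs = 1.0 / (1.0 + np.exp(-z))  # sigmoid
    labels = ["positive" if p > threshold else "negative" for p in probs]
    return probs, labels

# Made-up logits standing in for model.predict output
probs, labels = interpret_logits([[2.3], [-1.7]])
print(np.round(probs, 3), labels)
```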

6. Complete code

```python
# Import TensorFlow, TensorFlow Hub, and other dependencies
import numpy as np
import tensorflow as tf
import tensorflow_hub as hub
import tensorflow_datasets as tfds
import matplotlib.pyplot as plt

# Print versions and whether a GPU is available
print("Version: ", tf.__version__)
print("Eager mode: ", tf.executing_eagerly())
print("Hub version: ", hub.__version__)
print("GPU is", "available" if tf.config.experimental.list_physical_devices("GPU") else "NOT AVAILABLE")

# Load IMDB, a binary sentiment classification dataset of 50,000 movie reviews:
# 25,000 test reviews, 15,000 training reviews, 10,000 validation reviews
train_data, validation_data, test_data = tfds.load(
    name="imdb_reviews",
    split=('train[:60%]', 'train[60%:]', 'test'),
    as_supervised=True)

# Explore the data: each sample is an unpreprocessed review plus a label (0 = negative, 1 = positive)
train_examples_batch, train_labels_batch = next(iter(train_data.batch(10)))

# Show the first ten reviews
print(train_examples_batch)

# Show the first ten labels
print(train_labels_batch)

# Represent text as embedding vectors, using a pretrained text embedding as the first layer
embedding = "https://tfhub.dev/google/tf2-preview/gnews-swivel-20dim/1"
hub_layer = hub.KerasLayer(embedding, input_shape=[], dtype=tf.string, trainable=True)
print(hub_layer(train_examples_batch[:3]))

# Build the model
model = tf.keras.Sequential()
model.add(hub_layer)
model.add(tf.keras.layers.Dense(16, activation='relu'))
model.add(tf.keras.layers.Dense(1))  # single logit output, no activation

# Inspect the network (plot_model requires pydot and graphviz)
tf.keras.utils.plot_model(model)
model.summary()

# Compile the model; from_logits=True because the last layer outputs logits
model.compile(optimizer='adam',
              loss=tf.keras.losses.BinaryCrossentropy(from_logits=True),
              metrics=['accuracy'])

# Train the model
history = model.fit(train_data.shuffle(10000).batch(512),
                    epochs=20,
                    validation_data=validation_data.batch(512),
                    verbose=1)

# Evaluate the model on the test set
results = model.evaluate(test_data.batch(512), verbose=2)
for name, value in zip(model.metrics_names, results):
    print("%s: %.3f" % (name, value))

# model.fit() returns a History object; history.history holds per-epoch metrics
history_dict = history.history
print(history_dict.keys())

acc = history_dict['accuracy']
val_acc = history_dict['val_accuracy']
loss = history_dict['loss']
val_loss = history_dict['val_loss']

epochs = range(1, len(acc) + 1)

# Chart of loss over time
# "bo" means "blue dots"
plt.plot(epochs, loss, 'bo', label='Training loss')
# "b" means "solid blue line"
plt.plot(epochs, val_loss, 'b', label='Validation loss')
plt.title('Training and validation loss')
plt.xlabel('Epochs')
plt.ylabel('Loss')
plt.legend()
plt.show()

# Chart of accuracy over time
plt.clf()  # clear the figure
plt.plot(epochs, acc, 'bo', label='Training acc')
plt.plot(epochs, val_acc, 'b', label='Validation acc')
plt.title('Training and validation accuracy')
plt.xlabel('Epochs')
plt.ylabel('Accuracy')
plt.legend()
plt.show()
```

Notice: this article may not be reposted without permission.

About the TensorFlow Hub pretrained text-embedding model google/tf2-preview/gnews-swivel-20dim/1

This model represents text by converting sentences into embedding vectors. Three other pretrained models that do the same job can also be used:

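- google/tf2-preview/gnews-swivel-20dim-with-oov/1: like google/tf2-preview/gnews-swivel-20dim/1, but with 2.5% of the vocabulary converted to OOV buckets. This can help if the task's vocabulary does not fully overlap with the model's vocabulary.

- google/tf2-preview/nnlm-en-dim50/1: a larger model with a vocabulary of about 1M words and 50 dimensions.

- google/tf2-preview/nnlm-en-dim128/1: an even larger model with a vocabulary of about 1M words and 128 dimensions.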
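Switching to one of these modules should only require changing the `embedding` string passed to `hub.KerasLayer`. What changes downstream is the input size of the `Dense(16)` layer. The sketch below lists the four handles with their embedding dimensions and computes that layer's parameter count for each. The full URLs assume the same tfhub.dev pattern as the gnews-swivel-20dim URL used earlier in this article.

```python
# Embedding dimension of each TF Hub text-embedding module listed above
EMBEDDINGS = {
    "google/tf2-preview/gnews-swivel-20dim/1": 20,
    "google/tf2-preview/gnews-swivel-20dim-with-oov/1": 20,
    "google/tf2-preview/nnlm-en-dim50/1": 50,
    "google/tf2-preview/nnlm-en-dim128/1": 128,
}

def hub_url(handle):
    # Assumption: same tfhub.dev URL pattern as the gnews-swivel-20dim URL in this article
    return "https://tfhub.dev/" + handle

for handle, dim in EMBEDDINGS.items():
    dense16 = dim * 16 + 16  # parameters of the following Dense(16) layer
    print(hub_url(handle), dim, dense16)
```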

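Neural network concepts: [Neural Networks] Overview: artificial neural networks, convolutional neural networks, recurrent neural networks, and generative adversarial networks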

TensorFlow 2 concepts: [TensorFlow 2.x Development: Basics] Introduction, installation, and an introductory example
