Setting Up an Impala 4.0.0 Development Environment

Posted by PeersLee

Code

https://github.com/apache/impala

Build

System configuration

cat /etc/redhat-release

CentOS Linux release 7.8.2003 (Core)

Mem: 7.6G

Swap: 20.0G

CPU: 4 cores

# Create 20 GB of swap space

# Reference: https://blog.csdn.net/vincentuva/article/details/83111447

sudo mkdir /swapfile

cd /swapfile

sudo dd if=/dev/zero of=swap bs=1024 count=20000000

sudo mkswap -f swap

sudo swapon swap

Preparation
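Before moving on, it can be worth confirming that the swap space created above is actually active. The sketch below is not part of the original steps: it assumes a Linux system that exposes /proc/meminfo, and swap_total_mib is a hypothetical helper name.

```shell
# Hypothetical helper (not from the Impala tree): read /proc/meminfo-style
# text on stdin and print the SwapTotal value converted from kB to whole MiB.
swap_total_mib() {
  awk '/^SwapTotal:/ { printf "%d\n", $2 / 1024 }'
}
```

On a live system this could be run as `swap_total_mib < /proc/meminfo`; a result of 0 would suggest the swapon step did not take effect.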

sudo yum -y install lzo-devel python-devel cyrus-sasl-devel krb5-devel cmake java-1.8.0-openjdk-devel python-setuptools gcc-c++ libstdc++-devel

HDFS domain sockets:

sudo mkdir /var/lib/hadoop-hdfs/

sudo chown <user> /var/lib/hadoop-hdfs/

PostgreSQL:

sudo yum install postgresql-server postgresql-contrib

sudo postgresql-setup initdb

sudo systemctl start postgresql

sudo systemctl enable postgresql

sudo -u postgres psql -c "SELECT version();"

In /var/lib/pgsql/data/pg_hba.conf, change peer or ident to trust:
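One way to make that change without opening an editor is a sed filter over the file. The following is a minimal sketch, not the author's exact procedure: pg_hba_to_trust is a hypothetical helper, and it assumes the authentication method is the last field on each non-comment line. Keep in mind that trust lets matching connections in without a password, so it is only reasonable on a private development machine.

```shell
# Hypothetical helper: read pg_hba.conf-style text on stdin and rewrite a
# trailing "peer" or "ident" method to "trust". Comment lines are left alone.
pg_hba_to_trust() {
  sed -E '/^[[:space:]]*#/!s/([[:space:]])(peer|ident)[[:space:]]*$/\1trust/'
}

# Possible usage (assumed paths; keep a backup before overwriting):
#   sudo cp /var/lib/pgsql/data/pg_hba.conf /tmp/pg_hba.conf.bak
#   pg_hba_to_trust < /tmp/pg_hba.conf.bak | sudo tee /var/lib/pgsql/data/pg_hba.conf
#   sudo systemctl restart postgresql
```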

Settings:

sudo -u postgres psql postgres

hive-site.xml

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:postgresql://localhost:5432/HMS_xxx_ooo</value>

</property>

CREATE DATABASE "HMS_xxx_ooo";

CREATE USER hiveuser WITH PASSWORD 'password';

GRANT ALL PRIVILEGES ON DATABASE "HMS_xxx_ooo" TO hiveuser;
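The database name has to be wrapped in straight double quotes, because PostgreSQL folds unquoted identifiers to lowercase and the name would then no longer match the mixed-case one in the JDBC URL. As a rough sketch, the three statements can be generated from a single database name so the quoting stays consistent; hms_bootstrap_sql is a hypothetical helper, and the user name and password are the placeholder values from the statements above.

```shell
# Hypothetical helper: print the SQL that creates a metastore database and
# grants a user access to it. Arguments: database name, user name, password.
hms_bootstrap_sql() {
  printf 'CREATE DATABASE "%s";\n' "$1"
  printf "CREATE USER %s WITH PASSWORD '%s';\n" "$2" "$3"
  printf 'GRANT ALL PRIVILEGES ON DATABASE "%s" TO %s;\n' "$1" "$2"
}

# Possible usage: pipe the statements into psql as the postgres user.
#   hms_bootstrap_sql HMS_xxx_ooo hiveuser password | sudo -u postgres psql postgres
```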

https://www.runoob.com/linux/linux-comm-useradd.html

cd /data/impala/thirdparty/hive-1.1.0-cdh5.15.1/bin

Packaging

export JAVA_HOME=/opt/java8

export PATH=$PATH:$JAVA_HOME/bin

export M2_HOME=~/opt/apache-maven-3.6.3

export M2=$M2_HOME/bin

export PATH=$M2:$PATH

export IMPALA_HOME=`pwd`

./bin/bootstrap_system.sh

source ./bin/impala-config.sh

./buildall.sh -noclean -notests -skiptests

Testing

Start HDFS, HMS, and Impala
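The commands in this section are run from the Impala source root and rely on variables exported during the build, such as IMPALA_HOME and JAVA_HOME. A small guard like the one below can catch a fresh shell where they are missing. This is only a sketch: require_vars is a hypothetical helper, not something shipped with Impala.

```shell
# Hypothetical helper: return non-zero if any of the named environment
# variables is unset or empty, and report each missing name on stderr.
require_vars() {
  missing=0
  for name in "$@"; do
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Possible usage before starting any service:
#   require_vars IMPALA_HOME JAVA_HOME || echo "re-run: source ./bin/impala-config.sh"
```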

./testdata/bin/run-mini-dfs.sh

Stopping kudu

Stopping yarn

Stopping kms

Stopping hdfs

Starting hdfs (Web UI - http://localhost:5070)

Namenode started

Starting kms (Web UI - http://localhost:9600)

Starting yarn (Web UI - http://localhost:8088)

Starting kudu (Web UI - http://localhost:8051)

The cluster is running
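The startup log lists a Web UI address for each service, which gives a quick way to check that everything is reachable. As a sketch under the assumption that the log lines keep the exact "Starting <name> (Web UI - <url>)" shape shown above, the addresses can be pulled out with sed; web_ui_urls is a hypothetical helper name.

```shell
# Hypothetical helper: read run-mini-dfs.sh style output on stdin and print
# one "service url" pair per line for every service that reports a Web UI.
web_ui_urls() {
  sed -n -E 's/^Starting ([a-z]+) \(Web UI - (http[^)]*)\)$/\1 \2/p'
}

# Possible usage (assumes the log was saved to a file and curl is installed):
#   web_ui_urls < mini-dfs.log | while read -r name url; do
#     curl -s -o /dev/null -w "$name %{http_code}\n" "$url"
#   done
```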

jps -ml

36609 org.apache.hadoop.hdfs.server.datanode.DataNode

37169 org.apache.hadoop.crypto.key.kms.server.KMSWebServer

37281 org.apache.hadoop.yarn.server.resourcemanager.ResourceManager

36594 org.apache.hadoop.hdfs.server.datanode.DataNode

36629 org.apache.hadoop.hdfs.server.datanode.DataNode

37271 org.apache.hadoop.yarn.server.nodemanager.NodeManager

36648 org.apache.hadoop.hdfs.server.namenode.NameNode

38408 sun.tools.jps.Jps -ml

./testdata/bin/run-hive-server.sh

No handlers could be found for logger "thrift.transport.TSocket"

Waiting for the Metastore at localhost:9083...

(the line above is printed 24 times in total)

Metastore service is up at localhost:9083.

No handlers could be found for logger "thrift.transport.TSocket"

Waiting for HiveServer2 at localhost:11050...

Could not connect to any of [('::1', 11050, 0, 0), ('127.0.0.1', 11050)]

(the two lines above are printed 17 times in total)

HiveServer2 service is up at localhost:11050.
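The repeated "Waiting for ..." lines above suggest that the script polls each port until the service answers, which can take a while on a small machine. The same pattern can be reproduced by hand when checking a port later. This is a sketch of the general retry technique, not the code run-hive-server.sh actually uses: retry is a hypothetical helper, and the /dev/tcp probe in the usage comment assumes bash.

```shell
# Hypothetical helper: run a command until it succeeds.
# Arguments: maximum attempts, delay in seconds, then the command itself.
retry() {
  attempts="$1"
  delay="$2"
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    i=$((i + 1))
    # Sleep only if another attempt follows.
    if [ "$i" -le "$attempts" ]; then
      sleep "$delay"
    fi
  done
  return 1
}

# Possible usage: wait up to 60 seconds for the Metastore port to open.
#   retry 60 1 bash -c 'exec 3<>/dev/tcp/localhost/9083' 2>/dev/null
```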

#org.apache.hive.service.server.HiveServer2

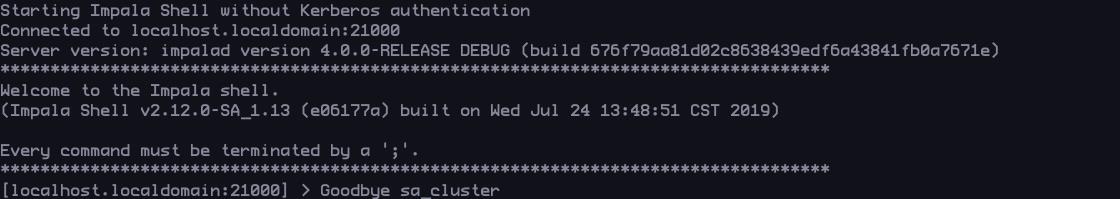

#org.apache.hadoop.hive.metastore.HiveMetaStore

${IMPALA_HOME}/bin/start-impala-cluster.py

Stop HDFS, HMS, and Impala

${IMPALA_HOME}/bin/start-impala-cluster.py --kill

./testdata/bin/killxxx
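After shutting things down, the jps -ml listing used earlier can confirm that no Hadoop or Hive daemons are left behind. The filter below is a sketch: cluster_daemons is a hypothetical helper, and it only matches the org.apache.hadoop and org.apache.hive class prefixes seen in this walkthrough, so other processes would need a separate check.

```shell
# Hypothetical helper: read `jps -ml` output on stdin and print the distinct
# main classes that belong to Hadoop or Hive daemons.
cluster_daemons() {
  awk '$2 ~ /^org\.apache\.(hadoop|hive)\./ { print $2 }' | sort -u
}

# Possible usage: empty output suggests the Java daemons have stopped.
#   jps -ml | cluster_daemons
```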
