Deploying an ELK Logging System with K8s + Docker

Posted by Java软件编程之家


Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers deploying an ELK logging system with K8s + Docker, and we hope you find it useful.

Deploying an ELK logging system with K8s + Docker: an essential skill for distributed and microservice architectures!

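Prerequisite: this guide assumes you have already installed and integrated Docker, a private Docker registry, and a basic K8s environment.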

Deploying Elasticsearch

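1. Official documentation: https://www.elastic.co/guide/en/elasticsearch/reference/7.8/docker.html

The ELK components must use matching versions; everything here uses 7.8.0. (You can also build your own image instead of using the official one.)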

2. Choose the node that will run ES, e.g. node7, and label it for ES by running:

```shell
kubectl label nodes node7 deploy.elk=true
```

3. On node7, create the bind-mount directory and grant permissions:

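```shell
mkdir -p /opt/apps-mgr/es/
# 777 is the simplest option; chown -R 1000:0 /opt/apps-mgr/es (the image's default uid:gid) is a tighter alternative
chmod -R 777 /opt/apps-mgr/es
```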

4. Tune the OS kernel settings to meet Elasticsearch's production-mode requirements:

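```shell
# Raise the open-file and process limits: append these lines to /etc/security/limits.conf
#   * - nofile 65535
#   * - nproc 4096
vi /etc/security/limits.conf
# Disable swap: comment out every line containing "swap" in /etc/fstab
# (this takes effect after a reboot; run swapoff -a to turn swap off immediately)
vi /etc/fstab
# Raise the memory-map limit: append vm.max_map_count=262144 to /etc/sysctl.conf
vi /etc/sysctl.conf
# Apply the sysctl change
sysctl -p
```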
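To confirm the host settings actually took effect before starting ES, a small pre-flight check can help. The following is a minimal Python sketch, assuming a Linux host and that it is run on the ES node (e.g. node7) from a fresh login after `sysctl -p`. The thresholds come from step 4; the function names are illustrative and not part of the original setup.

```python
"""Pre-flight check for the Elasticsearch host settings from step 4.

Assumptions: a Linux host (reads /proc), run on the ES node after `sysctl -p`
and a fresh login so that limits.conf has been applied.
"""
from __future__ import annotations

import resource
from pathlib import Path

# Minimum values copied from the tuning step above.
REQUIRED = {"vm.max_map_count": 262144, "nofile": 65535, "nproc": 4096}


def swap_active(proc_swaps: str) -> bool:
    """/proc/swaps has one header line; each further non-empty line is an active swap device."""
    rows = [line for line in proc_swaps.splitlines() if line.strip()]
    return len(rows) > 1


def find_problems(values: dict[str, int], swap_on: bool) -> list[str]:
    """Compare observed values with REQUIRED; an empty list means every check passed."""
    problems = [
        f"{name}={values[name]} is below {minimum}"
        for name, minimum in REQUIRED.items()
        if values[name] < minimum
    ]
    if swap_on:
        problems.append("swap is still enabled")
    return problems


def soft_limit(kind: int) -> int:
    """Soft rlimit of this process; 'unlimited' is mapped to a very large number."""
    soft, _hard = resource.getrlimit(kind)
    return 2**63 - 1 if soft == resource.RLIM_INFINITY else soft


def main() -> None:
    try:
        values = {
            "vm.max_map_count": int(Path("/proc/sys/vm/max_map_count").read_text()),
            "nofile": soft_limit(resource.RLIMIT_NOFILE),
            "nproc": soft_limit(resource.RLIMIT_NPROC),
        }
        swap_on = swap_active(Path("/proc/swaps").read_text())
    except OSError as exc:  # e.g. not running on Linux
        print(f"cannot read host settings: {exc}")
        return
    problems = find_problems(values, swap_on)
    print("OK" if not problems else "\n".join(problems))


if __name__ == "__main__":
    main()
```

The script checks the limits of the shell that runs it. The limits seen inside the ES container are set by the container runtime rather than limits.conf, so treat this as a host-side check only.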

5. Pull the image from the official Docker registry and push it to the internal private registry:

```shell
docker pull docker.elastic.co/elasticsearch/elasticsearch:7.8.0
docker tag docker.elastic.co/elasticsearch/elasticsearch:7.8.0 10.68.60.103:5000/elasticsearch:7.8.0
docker push 10.68.60.103:5000/elasticsearch:7.8.0
```

6. Write es-deployment.yaml with the following content:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: es-deployment
  namespace: my-namespace
  labels:
    app: es-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: es-pod
  template:
    metadata:
      labels:
        app: es-pod
    spec:
      nodeSelector:
        deploy.elk: "true"
      restartPolicy: Always
      containers:
        - name: tsale-server-container
          image: "10.68.60.103:5000/elasticsearch:7.8.0"
          ports:
            - containerPort: 9200
          env:
            - name: node.name
              value: "es01"
            - name: cluster.name
              value: "tsale-sit2-es"
            - name: discovery.seed_hosts
              value: "10.68.60.111"
            - name: cluster.initial_master_nodes
              value: "es01"
            - name: bootstrap.memory_lock
              value: "false"
            # Keep -Xms equal to -Xmx and at most ~50% of the container memory limit,
            # leaving room for off-heap memory
            - name: ES_JAVA_OPTS
              value: "-Xms512m -Xmx512m"
          resources:
            limits:
              memory: "1Gi"
              cpu: "1"
            requests:
              memory: "512Mi"
              cpu: "500m"
          volumeMounts:
            - mountPath: "/usr/share/elasticsearch/data"
              name: "es-data-volume"
            - mountPath: "/usr/share/elasticsearch/logs"
              name: "es-logs-volume"
      imagePullSecrets:
        - name: regcred
      volumes:
        - name: "es-data-volume"
          hostPath:
            path: "/opt/apps-mgr/es/data"
            type: DirectoryOrCreate
        - name: "es-logs-volume"
          hostPath:
            path: "/opt/apps-mgr/es/logs"
            type: DirectoryOrCreate
```

Note: by default the elasticsearch Docker image runs Elasticsearch as uid:gid 1000:0.
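The heap settings in ES_JAVA_OPTS have to fit inside the container's memory limit, or the pod risks being OOM-killed. Elastic's heap-size guidance recommends setting -Xms equal to -Xmx and using no more than half of the available memory for the heap. The sketch below checks a JAVA_OPTS string against a Kubernetes memory limit under those two rules. It is a minimal Python illustration: it handles integer sizes only, and the function names are hypothetical.

```python
"""Sanity-check ES_JAVA_OPTS against the container memory limit.

Rules of thumb: -Xms equal to -Xmx, and heap no larger than 50% of the
container memory limit. Only integer sizes are handled ("512m", "1g", "1Gi", "1G").
"""
from __future__ import annotations

JVM_UNITS = {"k": 2**10, "m": 2**20, "g": 2**30}  # JVM suffixes are binary
K8S_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "k": 10**3, "M": 10**6, "G": 10**9}


def parse_jvm_size(text: str) -> int:
    """'512m' -> bytes (case-insensitive suffix)."""
    text = text.strip().lower()
    if text[-1] in JVM_UNITS:
        return int(text[:-1]) * JVM_UNITS[text[-1]]
    return int(text)


def parse_k8s_quantity(text: str) -> int:
    """'1Gi' / '1G' -> bytes; two-letter binary suffixes are tried first."""
    for suffix in sorted(K8S_UNITS, key=len, reverse=True):
        if text.endswith(suffix):
            return int(text[: -len(suffix)]) * K8S_UNITS[suffix]
    return int(text)


def heap_problems(java_opts: str, memory_limit: str) -> list[str]:
    """Return human-readable problems; an empty list means the settings look sane."""
    sizes = {}
    for opt in java_opts.split():
        if opt[:4] in ("-Xms", "-Xmx"):
            sizes[opt[:4]] = parse_jvm_size(opt[4:])
    if len(sizes) < 2:
        return ["set both -Xms and -Xmx"]
    problems = []
    if sizes["-Xms"] != sizes["-Xmx"]:
        problems.append("-Xms and -Xmx differ")
    if sizes["-Xmx"] > parse_k8s_quantity(memory_limit) // 2:
        problems.append("heap is larger than 50% of the container memory limit")
    return problems
```

A combination such as `heap_problems("-Xms512m -Xmx1g", "1G")` trips both checks, while `-Xms512m -Xmx512m` with a `1Gi` limit passes.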

7. Apply the Deployment:

```shell
kubectl apply -f es-deployment.yaml
```

8. Create Services that expose the ports externally (es-service.yaml):

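```yaml
# Note: nodePort 9200/9300 lie outside the default NodePort range (30000-32767),
# so kube-apiserver's --service-node-port-range must be widened to include them.
apiVersion: v1
kind: Service
metadata:
  namespace: my-namespace
  name: es-service-9200
spec:
  type: NodePort
  selector:
    app: es-pod
  ports:
    - protocol: TCP
      port: 9200
      targetPort: 9200
      nodePort: 9200
---
apiVersion: v1
kind: Service
metadata:
  namespace: my-namespace
  name: es-service-9300
spec:
  type: NodePort
  selector:
    app: es-pod
  ports:
    - protocol: TCP
      port: 9300
      targetPort: 9300
      nodePort: 9300
```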
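By default, Kubernetes only accepts `nodePort` values in 30000-32767. That range is set by kube-apiserver's `--service-node-port-range` flag, so the ports 9200 and 9300 above (and 5601 for Kibana later) are rejected unless the cluster's range was widened. The sketch below is a small Python check for that. It assumes the default range unless you pass the range string your API server actually uses. The helper names are hypothetical.

```python
"""Check Service nodePort values against the kube-apiserver NodePort range.

Assumption: the cluster uses the default --service-node-port-range of
30000-32767 unless a different range string is passed in.
"""
from __future__ import annotations

DEFAULT_RANGE = "30000-32767"


def parse_range(spec: str) -> tuple[int, int]:
    """'30000-32767' -> (30000, 32767)."""
    low, high = (int(part) for part in spec.split("-"))
    return low, high


def out_of_range(node_ports: list[int], spec: str = DEFAULT_RANGE) -> list[int]:
    """Return the nodePorts the API server would reject for this range."""
    low, high = parse_range(spec)
    return [port for port in node_ports if not low <= port <= high]


if __name__ == "__main__":
    # nodePorts used by the ES and Kibana Services in this article
    print(out_of_range([9200, 9300, 5601]))             # [9200, 9300, 5601]
    print(out_of_range([9200, 9300, 5601], "1-65535"))  # []
```

If you cannot change the API server flags, picking nodePorts inside the default range (for example a hypothetical 30920 for ES) avoids the problem. Clients then use that port instead of 9200.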

9. Apply the Services:

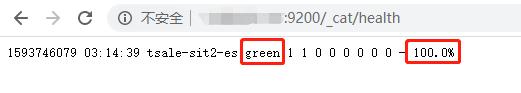

```shell
kubectl apply -f es-service.yaml
```

10. Open in a browser:

http://node7:9200/_cat/health

If the cluster health line is returned (status green or yellow), the deployment succeeded.
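The same check can be scripted, for example in a smoke test after each deployment. This is a minimal Python sketch, assuming ES is reachable at http://node7:9200 through the NodePort Service with security disabled, as in this setup. It relies on the default `_cat/health` column order (epoch, timestamp, cluster, status, ...), and the helper names are hypothetical.

```python
"""Query _cat/health and decide whether the cluster is usable.

Assumptions: ES is reachable at http://node7:9200 (NodePort Service above)
and security is disabled, as in this article's setup.
"""
from __future__ import annotations

import urllib.request

ES_URL = "http://node7:9200"  # adjust to your node name or IP


def parse_cat_health(line: str) -> dict[str, str]:
    """Default _cat/health columns start with: epoch timestamp cluster status ..."""
    fields = line.split()
    if len(fields) < 4:
        raise ValueError(f"unexpected _cat/health output: {line!r}")
    return {"cluster": fields[2], "status": fields[3]}


def is_usable(status: str) -> bool:
    # yellow: all primaries are allocated but some replicas are not,
    # which is expected on a single node when indices have replicas
    return status in ("green", "yellow")


def check(url: str = ES_URL) -> dict[str, str]:
    """Fetch and parse the health line (performs a real HTTP request)."""
    with urllib.request.urlopen(f"{url}/_cat/health", timeout=5) as resp:
        return parse_cat_health(resp.read().decode("utf-8"))
```

Call `check()` from a machine that can resolve node7 and pass the returned `status` to `is_usable()`.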

11. If startup fails, inspect the logs as follows:

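```shell
kubectl get pods -n my-namespace -o wide
# Replace the pod names below with the ones listed by the previous command
kubectl logs -f es-deployment-67f47f8d44-7cj5p -n my-namespace
kubectl describe pod es-deployment-986bc449f-gnjb4 -n my-namespace
# Or log in to node7 and inspect the log files in the bind-mounted host directory
# (then open the relevant file with less):
ls /opt/apps-mgr/es/logs/
```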
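Pod names such as es-deployment-67f47f8d44-7cj5p change on every rollout. An alternative is to select pods by the labels defined in the manifests. The following is a minimal Python sketch, assuming kubectl is on PATH and configured for the cluster. It uses only the standard `-l` (label selector), `-n`, `-o wide`, and `--tail` options; the helper names are hypothetical.

```python
"""Build (and optionally run) troubleshooting commands by label instead of
copying generated pod names.

Assumptions: kubectl is on PATH and configured for the cluster; the label and
namespace match this article's manifests (app=es-pod, my-namespace).
"""
from __future__ import annotations

import shutil
import subprocess

NAMESPACE = "my-namespace"


def troubleshooting_commands(label: str, namespace: str = NAMESPACE) -> list[list[str]]:
    """Return the get/describe/logs commands for all pods matching `label`."""
    selector = ["-l", label, "-n", namespace]
    return [
        ["kubectl", "get", "pods", "-o", "wide", *selector],
        ["kubectl", "describe", "pod", *selector],
        # --tail keeps the output short; add -f to follow the log stream
        ["kubectl", "logs", "--tail=100", *selector],
    ]


def run_all(label: str) -> None:
    """Run each command in turn, echoing it first."""
    if shutil.which("kubectl") is None:
        print("kubectl not found on PATH")
        return
    for cmd in troubleshooting_commands(label):
        print("$", " ".join(cmd))
        subprocess.run(cmd, check=False)
```

For example, `run_all("app=es-pod")` covers the ES pod, and `run_all("app=kibana-pod")` does the same for Kibana.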

Deploying Kibana

1. Official documentation: https://www.elastic.co/guide/en/kibana/7.8/docker.html#environment-variable-config

2. Pull the image from the official Docker registry and push it to the internal private registry:

```shell
docker pull docker.elastic.co/kibana/kibana:7.8.0
docker tag docker.elastic.co/kibana/kibana:7.8.0 10.68.60.103:5000/kibana:7.8.0
docker push 10.68.60.103:5000/kibana:7.8.0
```

You can also build your own image instead of using the official one.

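As with ES, choose the node that will run Kibana, e.g. node7, and make sure it carries the deploy.elk label (skip this if the node was already labeled in the ES section):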

```shell
kubectl label nodes node7 deploy.elk=true
```

3. On node7, create the bind-mount directory and grant permissions:

```shell
mkdir -p /opt/apps-mgr/kibana/
chmod -R 777 /opt/apps-mgr/kibana
```

4. Create the kibana.yml configuration file and set a few key options:

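Run vi /opt/apps-mgr/kibana/kibana.yml and add the following content. The file name must be kibana.yml, matching the hostPath of type File mounted by the Deployment, otherwise the pod cannot start:

```yaml
# Elasticsearch address (the ES NodePort above)
elasticsearch.hosts: http://10.68.60.111:9200
# Listen on all interfaces so the Service can reach Kibana
server.host: 0.0.0.0
```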

For more kibana.yml settings, see the official documentation:

https://www.elastic.co/guide/en/kibana/7.8/settings.html

5. Write kibana-deployment.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana-deployment
  namespace: my-namespace
  labels:
    app: kibana-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kibana-pod
  template:
    metadata:
      labels:
        app: kibana-pod
    spec:
      nodeSelector:
        deploy.elk: "true"
      restartPolicy: Always
      containers:
        - name: kibana-container
          image: "10.68.60.103:5000/kibana:7.8.0"
          ports:
            - containerPort: 5601
          volumeMounts:
            - mountPath: "/usr/share/kibana/config/kibana.yml"
              name: "kibana-conf-volume"
      imagePullSecrets:
        - name: regcred
      volumes:
        - name: "kibana-conf-volume"
          hostPath:
            path: "/opt/apps-mgr/kibana/kibana.yml"
            type: File
```

6. Apply the Deployment:

```shell
kubectl apply -f kibana-deployment.yaml
```

7. Check the startup status:

```shell
kubectl get pods -n my-namespace -o wide
# Replace the pod names below with the ones listed by the previous command
kubectl logs -f kibana-deployment-67f47f8d44-7cj5p -n my-namespace
kubectl describe pod kibana-deployment-986bc449f-gnjb4 -n my-namespace
```

8. Create a Service that exposes the port externally (kibana-service.yaml):

```yaml
apiVersion: v1
kind: Service
metadata:
  namespace: my-namespace
  name: kibana-service
spec:
  type: NodePort
  selector:
    app: kibana-pod
  ports:
    - protocol: TCP
      port: 5601
      targetPort: 5601
      # Also outside the default NodePort range 30000-32767
      nodePort: 5601
```

9. Apply the Service:

```shell
kubectl apply -f kibana-service.yaml
kubectl get service -n my-namespace
```

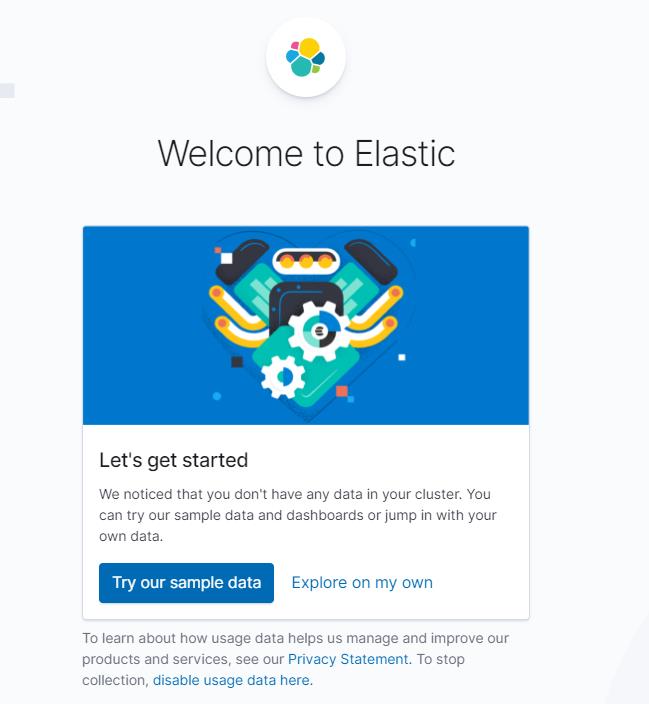

10. Open in a browser:

http://node7:5601

If the Kibana home page loads, the deployment succeeded and Kibana is ready to use.
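Besides loading the UI, Kibana's status can be read from its `/api/status` endpoint, which is handy for scripted checks. The following is a minimal Python sketch, assuming Kibana is reachable at http://node7:5601 and that the 7.x response reports the overall state under `status.overall.state`. The helper names are hypothetical.

```python
"""Read Kibana's overall state from /api/status.

Assumptions: Kibana is reachable at http://node7:5601 and returns the 7.x
response shape, with the overall state at status.overall.state.
"""
from __future__ import annotations

import json
import urllib.request

KIBANA_URL = "http://node7:5601"  # adjust to your node name or IP


def overall_state(status_doc: dict) -> str:
    """Extract status.overall.state (e.g. 'green') from a parsed /api/status response."""
    return status_doc["status"]["overall"]["state"]


def fetch_state(url: str = KIBANA_URL) -> str:
    """Perform the HTTP request and return the overall state."""
    with urllib.request.urlopen(f"{url}/api/status", timeout=10) as resp:
        return overall_state(json.load(resp))
```

A state other than green usually means Kibana cannot reach Elasticsearch yet. If so, check `elasticsearch.hosts` in kibana.yml first.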

So far we have only deployed Kibana. Once Logstash is configured, we can use the Kibana UI to set up index patterns for the corresponding ES log indices and browse the logs.

Deploying Logstash

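1. We build our own Logstash image, because the official image sets directory ownership and permissions that cause a "Read-only file system" error when configuration data is injected. Create a directory for building the Docker image: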

```shell
mkdir -p /opt/docker/build/logstash
```

2. Download the matching Logstash version:

```shell
wget https://artifacts.elastic.co/downloads/logstash/logstash-7.8.0.tar.gz
```

3. Write the Dockerfile in the same directory, with the following content:

```dockerfile
FROM 10.68.60.103:5000/jdk:v1.8.0_181_x64
LABEL maintainer="lazy"
# ADD extracts the tarball into /
ADD logstash-7.8.0.tar.gz /
RUN mkdir -p /opt/soft && \
    mv /logstash-7.8.0 /opt/soft/logstash && \
    mkdir -p /opt/soft/logstash/pipeline && \
    cp -R /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
WORKDIR /opt/soft/logstash
ENV LOGSTASH_HOME /opt/soft/logstash
ENV PATH $LOGSTASH_HOME/bin:$PATH
ENTRYPOINT [ "sh", "-c", "/opt/soft/logstash/bin/logstash" ]
```

Note: the base image in the FROM line, 10.68.60.103:5000/jdk:v1.8.0_181_x64, is a JDK 8 image we built ourselves and pushed to the internal registry.

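4. Build the image from /opt/docker/build/logstash (which contains the tarball and the Dockerfile) and push it to the private registry: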

```shell
docker build -t 10.68.60.103:5000/logstash:7.8.0 -f Dockerfile .
docker push 10.68.60.103:5000/logstash:7.8.0
```

5. Write logstash-daemonset.yaml with the following content:

```yaml
---
# ConfigMap holding the Logstash configuration files
apiVersion: v1
kind: ConfigMap
metadata:
  name: logstash-config
  namespace: my-namespace
  labels:
    app: logstash
data:
  logstash.yml: |-
    # Node description
    node.name: ${NODE_NAME}
    # Path for persistent data
    path.data: /opt/soft/logstash/data
    # Pipeline ID
    pipeline.id: ${NODE_NAME}
    # Main pipeline config directory (must exist; the Dockerfile creates it)
    path.config: /opt/soft/logstash/pipeline
    # Periodically check whether the config has changed and reload it if so
    config.reload.automatic: true
    # How often Logstash checks the config files
    config.reload.interval: 30s
    # Address the HTTP API binds to
    http.host: "0.0.0.0"
    # Port the HTTP API binds to
    http.port: 9600
    # Logstash log directory
    path.logs: /opt/soft/logstash/logs
  logstash.conf: |-
    # Input block
    input {
      # File input plugin
      file {
        # Plugin ID
        id => "admin-server"
        # Each newline-terminated line is read as one line before the codec joins them
        # Exclude files ending in .gz
        exclude => "*.gz"
        # Tail mode
        mode => tail
        # Start reading at the end of the file
        start_position => "end"
        # Source log files to collect
        path => ["/opt/apps-mgr/admin-server/logs/*.log"]
        # Add a type field to every event
        type => "admin-server"
        # Per-event codec, similar to a filter; multiline joins several lines into one event
        codec => multiline {
          # A line starting with a timestamp begins a new event
          pattern => "^%{TIMESTAMP_ISO8601}"
          # negate => true: apply the `what` policy to lines that do NOT match the pattern
          negate => true
          # previous: append such lines to the preceding event
          what => "previous"
          # In short: any line that does not start with a timestamp is appended to the previous
          # event, so a whole Java stack trace becomes a single event
        }
      }
    }
    input {
      # Same settings as above, for api-server
      file {
        id => "api-server"
        exclude => "*.gz"
        mode => tail
        start_position => "end"
        path => ["/opt/apps-mgr/api-server/logs/*.log"]
        type => "api-server"
        codec => multiline {
          pattern => "^%{TIMESTAMP_ISO8601}"
          negate => true
          what => "previous"
        }
      }
    }
    # Filter block
    filter {
      # No filtering: events pass to the output stage unchanged
    }
    # Output block
    output {
      # Send to Elasticsearch, one daily index per application
      if [type] == "admin-server" {
        elasticsearch {
          hosts => ["http://${ELASTICSEARCH_HOST}:${ELASTICSEARCH_PORT}"]
          index => "admin_server_%{+YYYY.MM.dd}"
        }
      }
      if [type] == "api-server" {
        elasticsearch {
          hosts => ["http://${ELASTICSEARCH_HOST}:${ELASTICSEARCH_PORT}"]
          index => "api_server_%{+YYYY.MM.dd}"
        }
      }
    }
---
# The DaemonSet runs one Logstash pod on each selected node
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: logstash
  namespace: my-namespace
  labels:
    app: logstash
spec:
  selector:
    matchLabels:
      app: logstash
  template:
    metadata:
      labels:
        app: logstash
    spec:
      # Run only on nodes carrying the biz_app label value
      # (the label key is missing from the source; use the key you applied with kubectl label nodes)
      nodeSelector:
        <label-key>: biz_app
      # Service account used by this DaemonSet
      serviceAccountName: logstash
      terminationGracePeriodSeconds: 30
      # Use the node's network; ClusterFirstWithHostNet keeps cluster DNS resolution
      hostNetwork: true
      dnsPolicy: ClusterFirstWithHostNet
      # Don't inject Service information as environment variables
      enableServiceLinks: false
      containers:
        - name: logstash-container
          image: 10.68.60.103:5000/logstash:7.8.0
          # Don't pull if the image already exists locally
          imagePullPolicy: IfNotPresent
          # Environment variables referenced by the config files
          env:
            - name: ELASTICSEARCH_HOST
              value: "10.68.60.111"
            - name: ELASTICSEARCH_PORT
              value: "9200"
            - name: NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
          # resources:
          #   limits:
          #     memory: 256Mi
          #   requests:
          #     cpu: 200m
          #     memory: 100Mi
          securityContext:
            # Run as root (uid 0)
            runAsUser: 0
          volumeMounts:
            - name: logstash-config-volume
              mountPath: /opt/soft/logstash/config/logstash.yml
              subPath: logstash.yml
            - name: logstash-pipeline-volume
              mountPath: /opt/soft/logstash/pipeline/logstash.conf
              subPath: logstash.conf
            - name: logstash-collect-volume
              mountPath: /opt/apps-mgr
            - name: logstash-data-volume
              mountPath: /opt/soft/logstash/data
            - name: logstash-logs-volume
              mountPath: /opt/soft/logstash/logs
      volumes:
        - name: logstash-config-volume
          configMap:
            name: logstash-config
            defaultMode: 0777
            items:
              - key: logstash.yml
                path: logstash.yml
        - name: logstash-pipeline-volume
          configMap:
            name: logstash-config
            defaultMode: 0777
            items:
              - key: logstash.conf
                path: logstash.conf
        - name: logstash-collect-volume
          hostPath:
            path: /opt/apps-mgr
            type: DirectoryOrCreate
        - name: logstash-data-volume
          hostPath:
            path: /opt/soft/logstash/data
            type: DirectoryOrCreate
        - name: logstash-logs-volume
          hostPath:
            path: /opt/soft/logstash/logs
            type: DirectoryOrCreate
---
# Bind the role to the service account
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: logstash
subjects:
  - kind: ServiceAccount
    name: logstash
    namespace: my-namespace
roleRef:
  kind: ClusterRole
  name: logstash
  apiGroup: rbac.authorization.k8s.io
---
# Cluster-scoped role
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: logstash
  labels:
    app: logstash
# Role permissions
rules:
  - apiGroups: [""] # "" indicates the core API group
    resources:
      - namespaces
      - pods
    verbs:
      - get
      - watch
      - list
---
# ServiceAccount used by the DaemonSet
apiVersion: v1
kind: ServiceAccount
metadata:
  name: logstash
  namespace: my-namespace
  labels:
    app: logstash
---
```
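The multiline codec settings (`negate => true`, `what => "previous"`) are easier to reason about with a concrete trace. The following Python sketch mimics that grouping rule offline; Logstash itself is not involved. It assumes a simplified regex in place of grok's `%{TIMESTAMP_ISO8601}` that only recognises `YYYY-MM-DD HH:MM` or `YYYY-MM-DDTHH:MM` prefixes. The function name is hypothetical.

```python
"""Simulate how the multiline codec above groups log lines into events.

Assumption: TIMESTAMP_AT_START is a simplified stand-in for grok's
%{TIMESTAMP_ISO8601}; it only recognises "YYYY-MM-DD HH:MM" / "YYYY-MM-DDTHH:MM".
"""
from __future__ import annotations

import re
from typing import Iterable

TIMESTAMP_AT_START = re.compile(r"^\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}")


def group_multiline(lines: Iterable[str]) -> list[str]:
    """Mimic pattern => '^%{TIMESTAMP_ISO8601}', negate => true, what => 'previous'."""
    events: list[str] = []
    current: list[str] = []
    for line in lines:
        # negate => true: only lines that do NOT match the pattern are subject to `what`
        if TIMESTAMP_AT_START.match(line) or not current:
            if current:
                events.append("\n".join(current))
            current = [line]  # a timestamped line starts a new event
        else:
            current.append(line)  # what => "previous": glue onto the preceding event
    if current:
        # Logstash emits this last buffered event only when the next timestamped
        # line arrives (or after auto_flush_interval, if configured)
        events.append("\n".join(current))
    return events


if __name__ == "__main__":
    sample = [
        "2020-07-01 12:00:01.000 ERROR request failed",
        "java.lang.IllegalStateException: boom",
        "\tat com.example.Foo.bar(Foo.java:10)",
        "2020-07-01 12:00:02.000 INFO  recovered",
    ]
    for event in group_multiline(sample):
        print(repr(event))
```

In tail mode this buffering means the newest stack trace can sit in Logstash until the next log line is written. The multiline codec's `auto_flush_interval` option exists to flush such pending events after a period of inactivity.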

6. Apply the configuration:

```shell
kubectl apply -f logstash-daemonset.yaml
```

Configuring Kibana

1. Log in to Kibana:

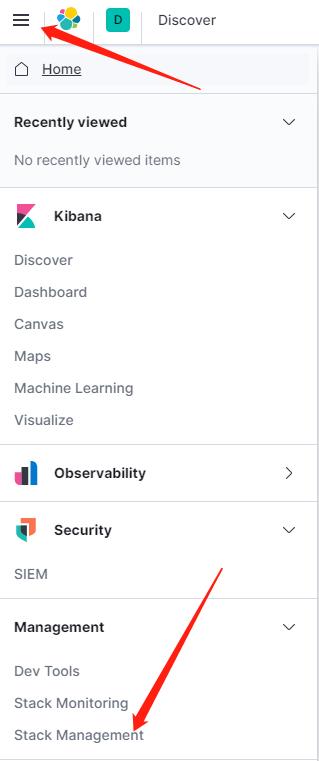

http://node7:5601/app/kibana

2. Open the management menu (Stack Management):

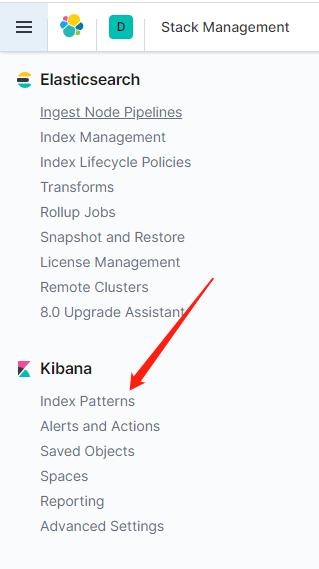

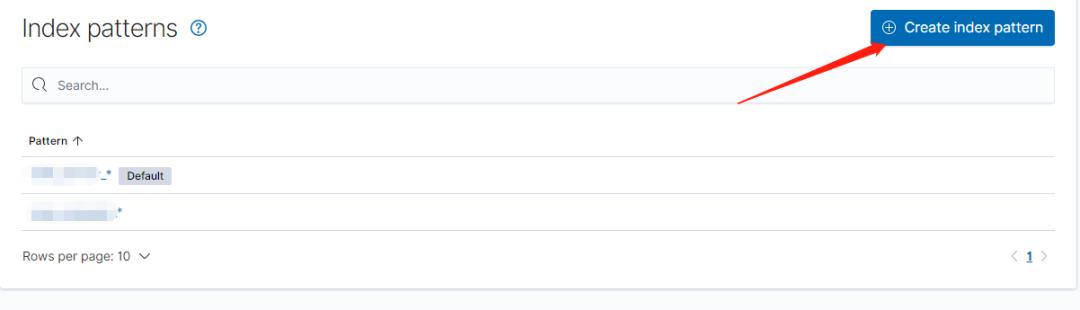

3. Click the Index Patterns menu:

4. Create an index pattern:

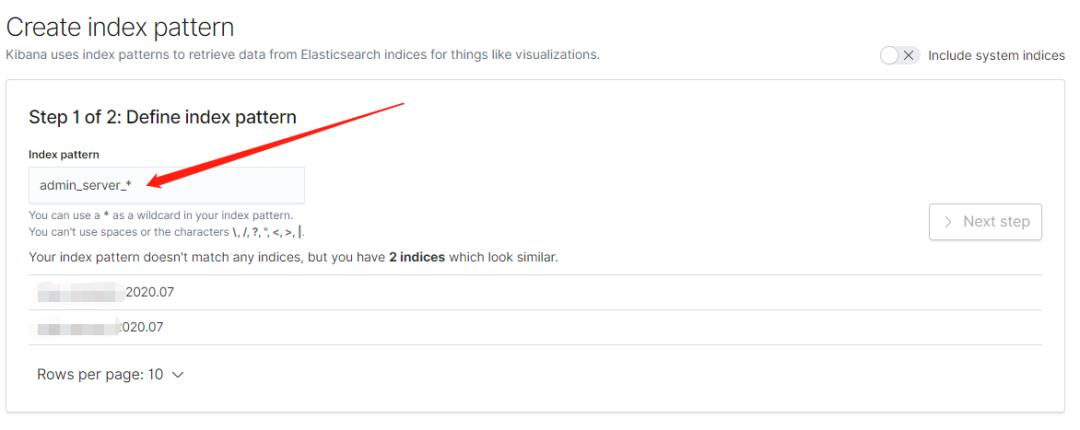

5. Enter the index prefix configured in the Logstash output section, e.g. admin_server_*

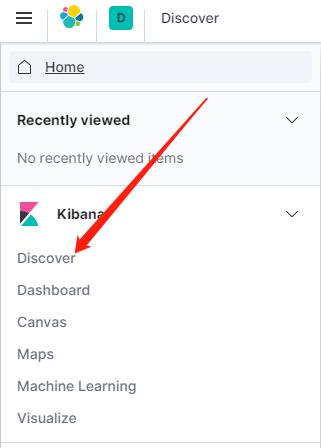
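It helps to know which concrete index names the pattern will match. Logstash renders `%{+YYYY.MM.dd}` from the event's `@timestamp` in UTC. Without a date filter, that is the time Logstash read the line, so with Asia/Shanghai (UTC+8) logs the daily index rolls over at 08:00 local time. The Python sketch below reproduces the naming from the output block and approximates Kibana's `*` wildcard with shell-style matching. The type-to-prefix map mirrors this article's config, and the function names are hypothetical.

```python
"""Reproduce the daily index names from the Logstash output block and check
them against a Kibana-style index pattern.

Assumptions: @timestamp is the ingest time; %{+YYYY.MM.dd} is rendered in UTC;
shell-style wildcards approximate Kibana index-pattern matching.
"""
from __future__ import annotations

from datetime import datetime, timedelta, timezone
from fnmatch import fnmatchcase

# type field -> index prefix, mirroring the output block of logstash.conf
INDEX_PREFIX = {"admin-server": "admin_server", "api-server": "api_server"}


def index_name(event_type: str, timestamp: datetime) -> str:
    """Daily index name for an event; the date part is taken in UTC."""
    day = timestamp.astimezone(timezone.utc).strftime("%Y.%m.%d")
    return f"{INDEX_PREFIX[event_type]}_{day}"


def matches(pattern: str, index: str) -> bool:
    """True if `index` is covered by a pattern such as 'admin_server_*'."""
    return fnmatchcase(index, pattern)


if __name__ == "__main__":
    shanghai = timezone(timedelta(hours=8))
    t = datetime(2020, 7, 2, 7, 30, tzinfo=shanghai)  # 2020-07-01 23:30 UTC
    name = index_name("admin-server", t)
    print(name)                                                    # admin_server_2020.07.01
    print(matches("admin_server_*", name))                         # True
    print(matches("admin_server_*", index_name("api-server", t)))  # False
```

Each application therefore needs its own index pattern (admin_server_*, api_server_*), unless you deliberately create a broader one.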

6. Once the index pattern is created, select it in the Discover menu to view the logs.

7. The collected log events now appear in Discover.

---------- End of article ----------
