Processing JSON Data with Spark

Posted by Shall潇


Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers processing JSON data with Spark, and we hope it is useful to you.

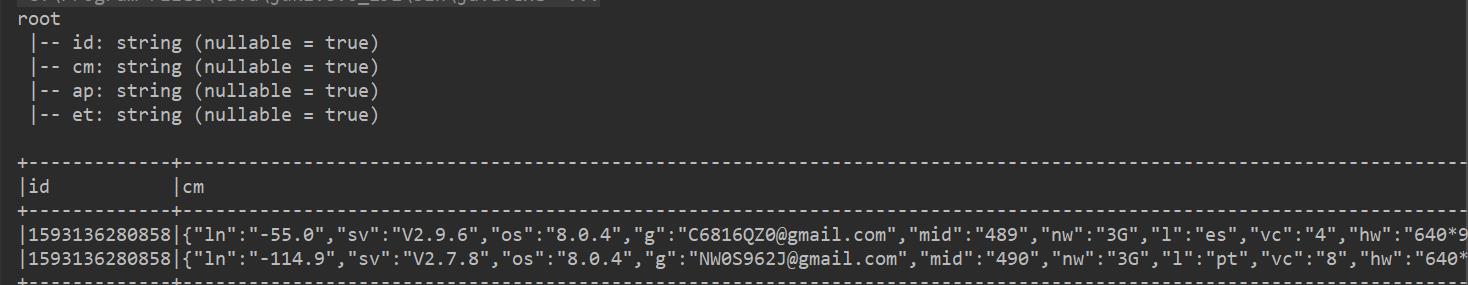

A hands-on application that builds on the previous post, "Processing JSON Data with Spark".

I. The JSON file to process

[A file like this could be data produced with fastJson that now needs to be analyzed: JSON nested inside JSON.]

op.log

```text
1593136280858|{"cm":{"ln":"-55.0","sv":"V2.9.6","os":"8.0.4","g":"C6816QZ0@gmail.com","mid":"489","nw":"3G","l":"es","vc":"4","hw":"640*960","ar":"MX","uid":"489","t":"1593123253541","la":"5.2","md":"sumsung-18","vn":"1.3.4","ba":"Sumsung","sr":"I"},"ap":"app","et":[{"ett":"1593050051366","en":"loading","kv":{"extend2":"","loading_time":"14","action":"3","extend1":"","type":"2","type1":"201","loading_way":"1"}},{"ett":"1593108791764","en":"ad","kv":{"activityId":"1","displayMills":"78522","entry":"1","action":"1","contentType":"0"}},{"ett":"1593111271266","en":"notification","kv":{"ap_time":"1593097087883","action":"1","type":"1","content":""}},{"ett":"1593066033562","en":"active_background","kv":{"active_source":"3"}},{"ett":"1593135644347","en":"comment","kv":{"p_comment_id":1,"addtime":"1593097573725","praise_count":973,"other_id":5,"comment_id":9,"reply_count":40,"userid":7,"content":"辑赤蹲慰鸽抿肘捎"}}]}
1593136280858|{"cm":{"ln":"-114.9","sv":"V2.7.8","os":"8.0.4","g":"NW0S962J@gmail.com","mid":"490","nw":"3G","l":"pt","vc":"8","hw":"640*1136","ar":"MX","uid":"490","t":"1593121224789","la":"-44.4","md":"Huawei-8","vn":"1.0.1","ba":"Huawei","sr":"O"},"ap":"app","et":[{"ett":"1593063223807","en":"loading","kv":{"extend2":"","loading_time":"0","action":"3","extend1":"","type":"1","type1":"102","loading_way":"1"}},{"ett":"1593095105466","en":"ad","kv":{"activityId":"1","displayMills":"1966","entry":"3","action":"2","contentType":"0"}},{"ett":"1593051718208","en":"notification","kv":{"ap_time":"1593095336265","action":"2","type":"3","content":""}},{"ett":"1593100021275","en":"comment","kv":{"p_comment_id":4,"addtime":"1593098946009","praise_count":220,"other_id":4,"comment_id":9,"reply_count":151,"userid":4,"content":"抄应螟皮釉倔掉汉蛋蕾街羡晶"}},{"ett":"1593105344120","en":"praise","kv":{"target_id":9,"id":7,"type":1,"add_time":"1593098545976","userid":8}}]}
```

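If you paste a record into an online JSON parser, you can see that the part after the `|` is one JSON object with three top-level keys. `cm` is an object of common fields such as `mid`, `uid`, `os` and `md`. `ap` is a plain string ("app" here). `et` is an array of event objects, each with `ett`, `en` and a `kv` object whose fields depend on the event type.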

II. Processing steps

[Note: I used version 2.4.7 for both spark-core and spark-sql. On some older versions the code below throws an error, because older versions of `from_json` did not accept an `ArrayType` schema.]
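To see what this note refers to in isolation, below is a minimal sketch. It calls `from_json` with an `ArrayType(StructType(...))` schema on one made-up record and then explodes the result. It assumes spark-sql 2.4.x is on the classpath. The object name `FromJsonArrayDemo` and the sample values are illustrative and are not part of the original code.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions._
import org.apache.spark.sql.types._

object FromJsonArrayDemo {
  // Same shape as the article's schema: kv is declared as StringType,
  // so each kv object is kept as raw JSON text instead of being parsed further
  val eventSchema: ArrayType = ArrayType(StructType(
    StructField("ett", StringType) ::
      StructField("en", StringType) ::
      StructField("kv", StringType) :: Nil))

  def explodeEvents(spark: SparkSession): DataFrame = {
    import spark.implicits._
    // One illustrative record whose "et" column holds a JSON array as a string
    val df = Seq(("1", """[{"ett":"100","en":"ad","kv":{"action":"1"}},{"ett":"200","en":"comment","kv":{"userid":7}}]"""))
      .toDF("id", "et")
    df.select($"id", explode(from_json($"et", eventSchema)).as("events")) // one row per array element
      .select($"id", $"events.ett", $"events.en", $"events.kv")
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("from_json-array-demo").getOrCreate()
    explodeEvents(spark).show(false)
    spark.stop()
  }
}
```

Declaring `kv` as `StringType` is a deliberate choice. The `kv` fields differ by event type (`loading`, `ad`, `comment`, ...), so keeping `kv` as a JSON string avoids forcing one struct onto all of them.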

1. Complete code

```scala
import org.apache.spark.sql.functions._
import org.apache.spark.sql.types._
import org.apache.spark.sql.SparkSession
import org.apache.spark.{SparkConf, SparkContext}

object Fsp {
  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setMaster("local[*]").setAppName("11")
    val sc = SparkContext.getOrCreate(conf)
    val spark = SparkSession.builder().config(conf).getOrCreate()
    import spark.implicits._

    val rdd = sc.textFile("in/op.log")

    // Skip blank lines, split each line at the first '|' only,
    // and insert the leading number into the JSON object as an "id" field
    val jsonRdd = rdd.filter(_.trim.nonEmpty)
      .map(x => x.split("\\|", 2))
      .map(x => (x(0), x(1)))
      .map(x => x._2.replaceFirst("\\{", "{\"id\":\"" + x._1 + "\","))

    /* raw data: a single string column named "value" */
    val jsonDF = jsonRdd.toDF()
    // jsonDF.printSchema()
    // jsonDF.show(false)

    /* first split out the top-level fields */
    val jsonDF1 = jsonDF.select(
      get_json_object($"value", "$.id").as("id"),
      get_json_object($"value", "$.cm").as("cm"),
      get_json_object($"value", "$.ap").as("ap"),
      get_json_object($"value", "$.et").as("et"))
    // jsonDF1.printSchema()
    // jsonDF1.show(false)

    /* then flatten cm */
    val jsonDF2 = jsonDF1.select($"id", $"ap",
      get_json_object($"cm", "$.ln").as("ln"),
      get_json_object($"cm", "$.sv").as("sv"),
      get_json_object($"cm", "$.os").as("os"),
      get_json_object($"cm", "$.g").as("g"),
      get_json_object($"cm", "$.mid").as("mid"),
      get_json_object($"cm", "$.nw").as("nw"),
      get_json_object($"cm", "$.l").as("l"),
      get_json_object($"cm", "$.vc").as("vc"),
      get_json_object($"cm", "$.hw").as("hw"),
      get_json_object($"cm", "$.ar").as("ar"),
      get_json_object($"cm", "$.uid").as("uid"),
      get_json_object($"cm", "$.t").as("t"),
      get_json_object($"cm", "$.la").as("la"),
      get_json_object($"cm", "$.md").as("md"),
      get_json_object($"cm", "$.vn").as("vn"),
      get_json_object($"cm", "$.ba").as("ba"),
      get_json_object($"cm", "$.sr").as("sr"),
      $"et" // every element of et has the same structure, so from_json handles it better
    )
    // jsonDF2.printSchema()
    // jsonDF2.show(false)

    /* parse et as an array of (ett, en, kv) structs; kv stays a JSON string */
    val jsonDF3 = jsonDF2.select($"id", $"ap", $"ln", $"sv", $"os", $"g", $"mid", $"nw", $"l", $"vc", $"hw", $"ar", $"uid", $"t", $"la", $"md", $"vn", $"ba", $"sr",
      from_json($"et", ArrayType(StructType(StructField("ett", StringType) :: StructField("en", StringType) :: StructField("kv", StringType) :: Nil))).as("events")
    )
    // jsonDF3.printSchema()
    // jsonDF3.show(false)

    /* one row per event */
    val jsonDF4 = jsonDF3.select($"id", $"ap", $"ln", $"sv", $"os", $"g", $"mid", $"nw", $"l", $"vc", $"hw", $"ar", $"uid", $"t", $"la", $"md", $"vn", $"ba", $"sr",
      explode($"events").as("events")
    )
    // jsonDF4.printSchema()
    // jsonDF4.show(false)

    /* pull the struct fields out into top-level columns */
    val jsonDF5 = jsonDF4.select($"id", $"ap", $"ln", $"sv", $"os", $"g", $"mid", $"nw", $"l", $"vc", $"hw", $"ar", $"uid", $"t", $"la", $"md", $"vn", $"ba", $"sr",
      $"events.ett", $"events.en", $"events.kv")

    jsonDF5.printSchema()
    jsonDF5.show(false)

    spark.stop()
  }
}
```
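The seventeen `get_json_object` calls on `cm` in the code above could also be generated from a list of key names. The sketch below assumes the same column names as `jsonDF1` (`id`, `cm`, `ap`, `et`). The object `CmColumnsDemo` and its method names are hypothetical, and the sample row is made up.

```scala
import org.apache.spark.sql.{Column, DataFrame, SparkSession}
import org.apache.spark.sql.functions._

object CmColumnsDemo {
  // Keys of the "cm" object, in the same order as the article's select
  val cmKeys: Seq[String] =
    Seq("ln", "sv", "os", "g", "mid", "nw", "l", "vc", "hw", "ar", "uid", "t", "la", "md", "vn", "ba", "sr")

  // One get_json_object(cm, "$.<key>") AS <key> column per key
  def cmColumns(cm: Column): Seq[Column] =
    cmKeys.map(k => get_json_object(cm, "$." + k).as(k))

  // Same result as the article's jsonDF2 step: keep id and ap, flatten cm, pass et through
  def flattenCm(jsonDF1: DataFrame): DataFrame =
    jsonDF1.select((Seq(col("id"), col("ap")) ++ cmColumns(col("cm")) :+ col("et")): _*)

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("cm-columns-demo").getOrCreate()
    import spark.implicits._
    val sample = Seq(("1593136280858", """{"mid":"489","os":"8.0.4"}""", "app", "[]"))
      .toDF("id", "cm", "ap", "et")
    flattenCm(sample).show(false) // keys missing from this short cm sample come back as null
    spark.stop()
  }
}
```

The explicit version in the article is easier to read line by line. The list-driven version keeps the key names in one place, so adding or dropping a `cm` field is a one-word change.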

2. Walkthrough of the code

Step 1: Load the data

You can also upload the file to HDFS and read it from there.

```scala
val rdd = sc.textFile("in/op.log")
```

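Step 2: Preliminary processing

Take the leading number in front of the `|` (a 13-digit value that looks like a millisecond timestamp) and insert it into the JSON object as an `id` field. Every record then becomes a single, uniform JSON object, which makes the later steps easier. The line is split only at the first `|`, and blank lines are skipped so that `x(1)` always exists.

```scala
val jsonRdd = rdd.filter(_.trim.nonEmpty)
  .map(x => x.split("\\|", 2))
  .map(x => (x(0), x(1)))
  .map(x => x._2.replaceFirst("\\{", "{\"id\":\"" + x._1 + "\","))
```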
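To check what step 2 produces without starting Spark, the same string logic can run on a single line in plain Scala. This is a minimal sketch. The helper name `addId` and the shortened sample line are illustrative assumptions.

```scala
import java.util.regex.Matcher

object AddIdDemo {
  // Hypothetical helper mirroring step 2 for a single line of op.log:
  // split at the first '|' only, then insert the leading number as an "id" field
  def addId(line: String): String = {
    val parts = line.split("\\|", 2)
    val (prefix, json) = (parts(0), parts(1))
    // replaceFirst takes a regex, so the opening brace is escaped as "\\{";
    // quoteReplacement guards against '$' or '\' in the inserted text
    json.replaceFirst("\\{", Matcher.quoteReplacement("{\"id\":\"" + prefix + "\","))
  }

  def main(args: Array[String]): Unit = {
    val line = """1593136280858|{"cm":{"mid":"489"},"ap":"app","et":[]}"""
    println(addId(line))
    // prints {"id":"1593136280858","cm":{"mid":"489"},"ap":"app","et":[]}
  }
}
```

The limit of 2 in `split` keeps any later `|` characters inside the JSON intact. Splitting without a limit would cut the JSON short.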

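Step 3: Convert to a DataFrame

Calling `toDF()` on an `RDD[String]` yields a DataFrame with a single string column named `value`. The next step reads from that column.

```scala
val jsonDF = jsonRdd.toDF()
```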

Step 4: Use get_json_object to split the JSON into its top-level parts

```scala
val jsonDF1 = jsonDF.select(
  get_json_object($"value", "$.id").as("id"),
  get_json_object($"value", "$.cm").as("cm"),
  get_json_object($"value", "$.ap").as("ap"),
  get_json_object($"value", "$.et").as("et"))
```
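The behaviour of `get_json_object` explains why step 5 can call it again on `cm`. It returns scalar values as plain strings, returns nested objects as JSON text, and returns null when the path does not exist. Below is a minimal sketch on one made-up record, assuming spark-sql 2.4.x. The object name `GetJsonObjectDemo` is hypothetical.

```scala
import org.apache.spark.sql.{Row, SparkSession}
import org.apache.spark.sql.functions._

object GetJsonObjectDemo {
  def run(spark: SparkSession): Row = {
    import spark.implicits._
    // A Seq of strings becomes a DataFrame with one column named "value", as in step 3
    val df = Seq("""{"id":"1","cm":{"mid":"489","os":"8.0.4"},"ap":"app"}""").toDF()
    df.select(
      get_json_object($"value", "$.ap").as("ap"),          // scalar: plain string
      get_json_object($"value", "$.cm").as("cm"),          // object: JSON text that can be queried again
      get_json_object(get_json_object($"value", "$.cm"), "$.mid").as("mid"),
      get_json_object($"value", "$.missing").as("missing") // no such path: null
    ).head()
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("get-json-object-demo").getOrCreate()
    println(run(spark))
    spark.stop()
  }
}
```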

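Step 5: Flatten cm

The `cm` column still holds a JSON object, so we apply `get_json_object` to it again. `et` also contains JSON, but it is an array of event objects rather than a single object. We therefore leave that column alone for now.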

val jsonDF2 = jsonDF1.select($"id", $"ap",

get_json_object($"cm", "$.ln").as("ln"),

get_json_object($"cm", "$.sv").as("sv"),

get_json_object($"cm", "$.os").as("os"),

get_json_object($"cm", "$.g").as("g"),

get_json_object($"cm", "$.mid").as("mid"),

get_json_object($"cm", "$.nw").as("nw"),

get_json_object($"cm", "$.l").as("l"),

get_json_object($"cm", "$.vc").as("vc"),

get_json_object($"cm", "$.hw").as("hw"),

get_json_object($"cm", "$.ar").as("ar"),

get_json_object($"cm", "$.uid").as("uid"),

get_json_object($"cm", "$.t").as("t"),

get_json_object($"cm", "$.la").as("la"),

get_json_object($"cm", "$.md").as("md"),

get_json_object($"cm"