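A 20,000-Character Hands-On Guide to Docker Deployment: A Complete Front End and Back End as a Master/Backup Hot-Standby High-Availability Service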

Posted by 搜云库技术团队


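Problems the system needs to solve

1. Too few physical machines
2. Physical machine resources not fully used
3. High availability of the system
4. Updates without downtime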

Preparing for deployment


System deployment design diagram

Related concepts

1 LVS

2 What Keepalived does

3 Keepalived and how it works

4 The VRRP protocol: Virtual Route

5 How VRRP works

6 Docker

7 nginx

Starting the deployment

Installing Docker

1 Remove any old Docker version (skip this step if none is installed)

sudo apt-get remove docker docker-engine docker.io containerd runc

2 Update the package index

sudo apt-get update

3 Allow apt to install packages from a repository over HTTPS

sudo apt-get -y install apt-transport-https ca-certificates curl software-properties-common

4 Add the GPG key

curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -

5 Verify the key fingerprint

sudo apt-key fingerprint 0EBFCD88

6 Add the stable repository and update the index

sudo add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

sudo apt-get update

7 List the available Docker versions

apt-cache madison docker-ce

8 Install a specific Docker version

sudo apt-get install -y docker-ce=17.12.1~ce-0~ubuntu

9 Verify that Docker was installed

docker --version

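10 Add your user to the docker group so that docker can be run without sudo

sudo gpasswd -a your_username docker # replace your_username with your own login name

11 Restart the service and refresh the docker group membership; the installation is then complete

sudo service docker restart

newgrp - docker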

Docker custom network

docker network create --subnet=172.18.0.0/24 mynet

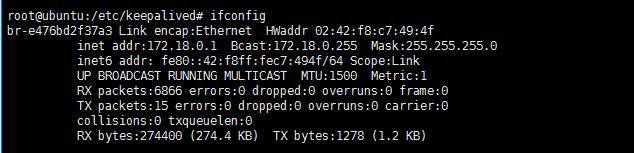

Use ifconfig to view the network we created
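Every container later in this article gets a fixed address on mynet, so it can help to confirm up front that the planned addresses fit inside 172.18.0.0/24. The following is a minimal sketch, not part of the original setup: the function name in_mynet is hypothetical, and the check only handles /24 prefixes.

```shell
#!/bin/bash
# Sketch: check that a planned static IP lies inside the /24 subnet of mynet.
# in_mynet is a hypothetical helper name; only /24 prefixes are handled.

subnet_prefix="172.18.0"   # first three octets of 172.18.0.0/24

in_mynet() {
    local ip="$1"
    local last="${ip##*.}"      # fourth octet
    local prefix="${ip%.*}"     # first three octets
    [ "$prefix" = "$subnet_prefix" ] || return 1
    # the fourth octet must be a plain number
    case "$last" in
        ''|*[!0-9]*) return 1 ;;
    esac
    # .0 is the network address and .255 the broadcast address;
    # the bridge gateway normally takes .1
    [ "$last" -ge 2 ] && [ "$last" -le 254 ]
}

# A few of the addresses used later in this article
for ip in 172.18.0.11 172.18.0.101 172.18.0.201 172.18.0.210 172.18.0.220; do
    if in_mynet "$ip"; then
        echo "$ip fits in ${subnet_prefix}.0/24"
    else
        echo "$ip is outside ${subnet_prefix}.0/24"
    fi
done
```

On a host with Docker running, docker network inspect mynet shows the subnet and gateway that were actually assigned.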

Installing Keepalived on the host

1 Install the build environment

sudo apt-get install -y gcc

sudo apt-get install -y g++

sudo apt-get install -y libssl-dev

sudo apt-get install -y daemon

sudo apt-get install -y make

sudo apt-get install -y sysv-rc-conf

2 Download and install keepalived

cd /usr/local/

wget http://www.keepalived.org/software/keepalived-1.2.18.tar.gz

tar zxvf keepalived-1.2.18.tar.gz

cd keepalived-1.2.18

./configure --prefix=/usr/local/keepalived

make && make install

3 Register keepalived as a system service

mkdir /etc/keepalived

mkdir /etc/sysconfig

cp /usr/local/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/

cp /usr/local/keepalived/etc/rc.d/init.d/keepalived /etc/init.d/

cp /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/

ln -s /usr/local/sbin/keepalived /usr/sbin/

ln -s /usr/local/keepalived/sbin/keepalived /sbin/

4 Edit the keepalived startup script (/etc/init.d/keepalived)

#!/bin/sh

#

# Startup script for the Keepalived daemon

#

# processname: keepalived

# pidfile: /var/run/keepalived.pid

# config: /etc/keepalived/keepalived.conf

# chkconfig: - 21 79

# description: Start and stop Keepalived

# Source function library

#. /etc/rc.d/init.d/functions

. /lib/lsb/init-functions

# Source configuration file (we set KEEPALIVED_OPTIONS there)

. /etc/sysconfig/keepalived

RETVAL=0

prog="keepalived"

start() {

echo -n $"Starting $prog: "

daemon keepalived start

RETVAL=$?

echo

[ $RETVAL -eq 0 ] && touch /var/lock/subsys/$prog

}

stop() {

echo -n $"Stopping $prog: "

killproc keepalived

RETVAL=$?

echo

[ $RETVAL -eq 0 ] && rm -f /var/lock/subsys/$prog

}

reload() {

echo -n $"Reloading $prog: "

killproc keepalived -1

RETVAL=$?

echo

}

# See how we were called.

case "$1" in

start)

start

;;

stop)

stop

;;

reload)

reload

;;

restart)

stop

start

;;

condrestart)

if [ -f /var/lock/subsys/$prog ]; then

stop

start

fi

;;

status)

status keepalived

RETVAL=$?

;;

*)

echo "Usage: $0 {start|stop|reload|restart|condrestart|status}"

RETVAL=1

esac

exit $RETVAL

5 Edit the keepalived configuration file

cd /etc/keepalived

cp keepalived.conf keepalived.conf.back

rm keepalived.conf

vim keepalived.conf

Add the following content

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.227.88

192.168.227.99

}

}

virtual_server 192.168.227.88 80 {

delay_loop 6

lb_algo rr

lb_kind NAT

persistence_timeout 50

protocol TCP

real_server 172.18.0.210 80 {

weight 1

}

}

virtual_server 192.168.227.99 80 {

delay_loop 6

lb_algo rr

lb_kind NAT

persistence_timeout 50

protocol TCP

real_server 172.18.0.220 80 {

weight 1

}

}

Note: interface must be set to the name of your own server's network interface; otherwise the VIPs cannot be bound.
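If you are unsure which name to put in the interface setting, one option is to read it from the default route. The sketch below is an illustration only: default_iface is a hypothetical helper, and the sample line is made up in the format printed by ip route show.

```shell
#!/bin/bash
# Sketch: extract the interface name that follows "dev" on the default route.
# default_iface is a hypothetical helper that reads `ip route` output on stdin.
default_iface() {
    awk '$1 == "default" {
        for (i = 1; i < NF; i++) if ($i == "dev") { print $(i + 1); exit }
    }'
}

# Made-up sample line in the format of `ip route show`
sample='default via 192.168.227.2 dev ens33 proto dhcp metric 100'
echo "$sample" | default_iface

# On a real host: ip route show | default_iface
```

The name it prints is the value to use for interface in vrrp_instance, provided the VIPs belong on the network that carries the default route.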

6 Start keepalived

systemctl daemon-reload

systemctl enable keepalived.service

systemctl start keepalived.service

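Run systemctl daemon-reload every time the service script changes so that systemd picks up the change; after editing keepalived.conf, restart keepalived so that the new configuration takes effect.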

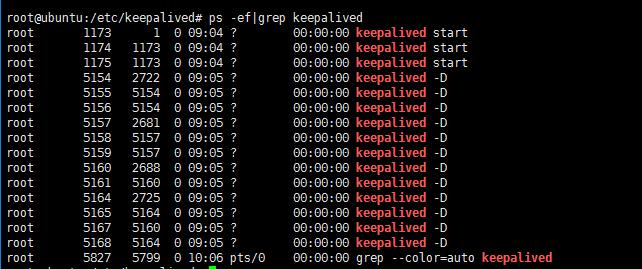

7 Check that the keepalived process exists

ps -ef|grep keepalived

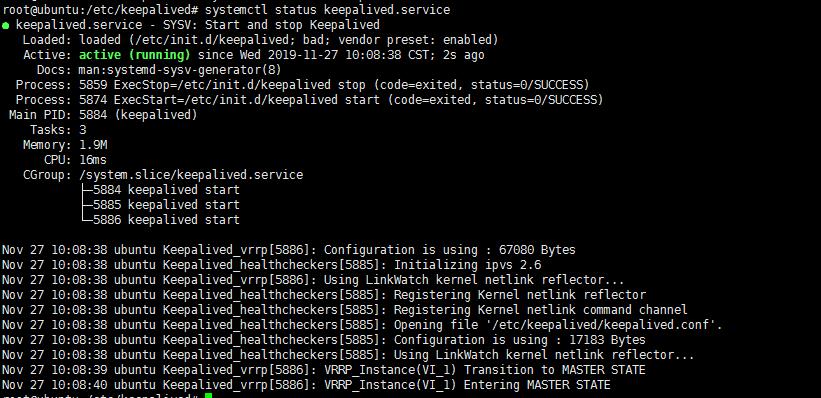

8 Check the running status of keepalived

systemctl status keepalived.service

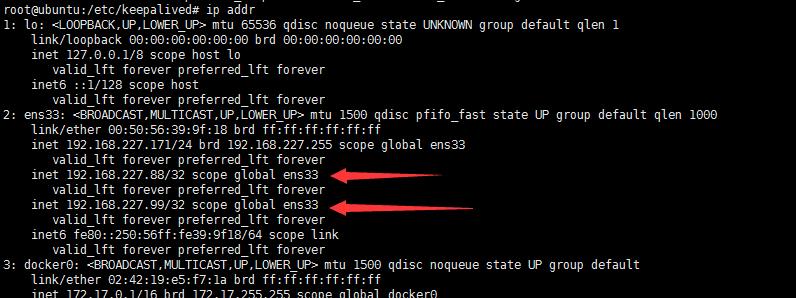

9 Check whether the virtual IPs have been bound

ip addr

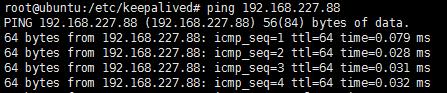

10 Ping the two IPs

Both IPs respond, so keepalived has been installed successfully.

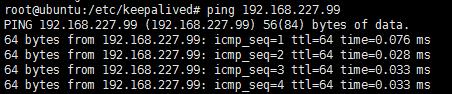
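Steps 9 and 10 can also be checked by a script instead of by eye. The following sketch assumes the output format of ip addr; has_vip is a hypothetical helper and the sample text is made up for illustration.

```shell
#!/bin/bash
# Sketch: succeed if the given IPv4 address appears as an "inet" entry in
# `ip addr` output read from stdin. has_vip is a hypothetical helper name.
has_vip() {
    local vip="$1"
    awk -v vip="$vip" '
        $1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
        END { exit found ? 0 : 1 }
    '
}

# Made-up sample in the format of `ip addr` output
sample='2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500
    inet 192.168.227.171/24 brd 192.168.227.255 scope global ens33
    inet 192.168.227.88/32 scope global ens33
    inet 192.168.227.99/32 scope global ens33'

for vip in 192.168.227.88 192.168.227.99; do
    if echo "$sample" | has_vip "$vip"; then
        echo "$vip is bound"
    else
        echo "$vip is missing"
    fi
done

# On a real host: ip addr | has_vip 192.168.227.88
```

The comparison is on the whole address, so 192.168.227.8 would not be mistaken for 192.168.227.88.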

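Front-end master/backup hot-standby system with Docker containers

Correction: the IP accessed in the diagram should be 172.18.0.210, the virtual IP created inside the container network.

Next we install the master/backup part of the front-end servers.

1 Pull the centos7 image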

docker pull centos:7

2 Create a container

docker run -it -d --name centos1 centos:7

3 Enter the centos1 container

docker exec -it centos1 bash

4 Install common tools

yum update -y

yum install -y vim

yum install -y wget

yum install -y gcc-c++

yum install -y pcre pcre-devel

yum install -y zlib zlib-devel

yum install -y openssl-devel

yum install -y popt-devel

yum install -y initscripts

yum install -y net-tools

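5 Commit the container as a new image so that later containers can be created directly from it

docker commit -a 'cfh' -m 'centos with common tools' centos1 centos_base

6 Remove the centos1 container created earlier, create a new container from the base image, and install keepalived + nginx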

docker rm -f centos1

# systemctl is needed inside the container, so /usr/sbin/init must be appended

docker run -it --name centos_temp -d --privileged centos_base /usr/sbin/init

docker exec -it centos_temp bash

7 Install nginx

# Installing nginx with yum requires the nginx repository, so install the repository first

rpm -Uvh http://nginx.org/packages/centos/7/noarch/RPMS/nginx-release-centos-7-0.el7.ngx.noarch.rpm

# Install nginx with the command below

yum install -y nginx

# Start nginx

systemctl start nginx.service

8 Install keepalived

1. Download keepalived

wget http://www.keepalived.org/software/keepalived-1.2.18.tar.gz

2. Extract the archive:

tar -zxvf keepalived-1.2.18.tar.gz -C /usr/local/

3. Install the openssl dependency

yum install -y openssl openssl-devel # a package the build requires

4. Configure the keepalived build

cd /usr/local/keepalived-1.2.18/ && ./configure --prefix=/usr/local/keepalived

5. Build and install

make && make install

9 Install keepalived as a system service

mkdir /etc/keepalived

cp /usr/local/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/

cp /usr/local/keepalived/etc/rc.d/init.d/keepalived /etc/init.d/

cp /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/

ln -s /usr/local/sbin/keepalived /usr/sbin/

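Start at boot can be enabled with: chkconfig keepalived on

The installation is now complete!

# If starting the service reports an error, run the commands below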

cd /usr/sbin/

rm -f keepalived

cp /usr/local/keepalived/sbin/keepalived /usr/sbin/

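# Start keepalived

systemctl daemon-reload # reload the service definitions

systemctl enable keepalived.service # start automatically at boot

systemctl start keepalived.service # start the service

systemctl status keepalived.service # check the service status

10 Edit the /etc/keepalived/keepalived.conf file

# Back up the configuration file

cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.backup

rm -f /etc/keepalived/keepalived.conf

vim /etc/keepalived/keepalived.conf

# The configuration file is as follows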

vrrp_script chk_nginx {

script "/etc/keepalived/nginx_check.sh"

interval 2

weight -20

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 121

mcast_src_ip 172.18.0.201

priority 100

nopreempt

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

chk_nginx

}

virtual_ipaddress {

172.18.0.210

}

}

11 Edit the nginx configuration file

vim /etc/nginx/conf.d/default.conf

upstream tomcat{

server 172.18.0.11:80;

server 172.18.0.12:80;

server 172.18.0.13:80;

}

server {

listen 80;

server_name 172.18.0.210;

#charset koi8-r;

#access_log /var/log/nginx/host.access.log main;

location / {

proxy_pass http://tomcat;

index index.html index.htm;

}

#error_page 404 /404.html;

# redirect server error pages to the static page /50x.html

#

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /usr/share/nginx/html;

}

# proxy the php scripts to Apache listening on 127.0.0.1:80

#

#location ~ .php$ {

# proxy_pass http://127.0.0.1;

#}

# pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000

#

#location ~ .php$ {

# root html;

# fastcgi_pass 127.0.0.1:9000;

# fastcgi_index index.php;

# fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;

# include fastcgi_params;

#}

# deny access to .htaccess files, if Apache's document root

# concurs with nginx's one

#

#location ~ /.ht {

# deny all;

#}

}

12 Add the heartbeat check script

vim /etc/keepalived/nginx_check.sh

# The script content is as follows

#!/bin/bash

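# count the running nginx processes
A=`ps -C nginx --no-headers | wc -l`

if [ "$A" -eq 0 ]; then

# nginx is down: try to start it (the yum package installs the binary as /usr/sbin/nginx)
/usr/sbin/nginx

sleep 2

# still down after the restart attempt: stop keepalived so the VIP moves to the other node
if [ `ps -C nginx --no-headers | wc -l` -eq 0 ]; then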

killall keepalived

fi

fi
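The script makes a three-way decision: do nothing, restart nginx, or give up the VIP. As a sketch only, the same decision can be written as a pure function that takes the two process counts as arguments, which makes it possible to try the logic without a running nginx. The name decide_action and its output strings are hypothetical.

```shell
#!/bin/bash
# Sketch of the decision made by nginx_check.sh.
#   $1 = number of nginx processes found at the first check
#   $2 = number found after the restart attempt
# decide_action and its output strings are hypothetical names.
decide_action() {
    local before="$1" after="$2"
    if [ "$before" -gt 0 ]; then
        echo "healthy"            # nginx is running, nothing to do
    elif [ "$after" -gt 0 ]; then
        echo "restarted"          # the restart attempt brought nginx back
    else
        echo "stop-keepalived"    # nginx stays down: give up the VIP
    fi
}

decide_action 3 3
decide_action 0 2
decide_action 0 0
```

Only the last case leads to killall keepalived in the real script, and that is the event that lets the other node take over the virtual IP.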

13 Make the script executable

chmod +x /etc/keepalived/nginx_check.sh

14 Enable start at boot

systemctl enable keepalived.service

# Start keepalived

systemctl daemon-reload

systemctl start keepalived.service

15 Check that the virtual IP works

ping 172.18.0.210

16 Commit the centos_temp container as a new image

docker commit -a 'cfh' -m 'centos with keepalived nginx' centos_temp centos_kn

17 Remove all containers

docker rm -f `docker ps -a -q`

18 Re-create containers from the image committed earlier

Name them centos_web_master and centos_web_slave

docker run --privileged -tid \
  --name centos_web_master --restart=always \
  --net mynet --ip 172.18.0.201 \
  centos_kn /usr/sbin/init

docker run --privileged -tid \
  --name centos_web_slave --restart=always \
  --net mynet --ip 172.18.0.202 \
  centos_kn /usr/sbin/init

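19 Edit the nginx and keepalived configuration files inside centos_web_slave

Change the following in the keepalived configuration

state BACKUP # set as the backup server (keepalived accepts MASTER or BACKUP)

mcast_src_ip 172.18.0.202 # change to this container's IP

priority 80 # lower priority than the master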
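The numbers in these two configurations interact. As a hedged arithmetic sketch, assuming keepalived's behavior that a negative vrrp_script weight is added to the priority while the tracked script is failing, the effective priorities can be worked out as follows. The function effective_priority is a hypothetical helper, not a keepalived command.

```shell
#!/bin/bash
# Sketch: effective VRRP priority under a tracked script with a negative weight.
#   $1 = configured priority, $2 = vrrp_script weight, $3 = 1 if the check is failing
# effective_priority is a hypothetical helper used only for this arithmetic.
effective_priority() {
    local priority="$1" weight="$2" failed="$3"
    if [ "$failed" -eq 1 ] && [ "$weight" -lt 0 ]; then
        echo $((priority + weight))
    else
        echo "$priority"
    fi
}

master=$(effective_priority 100 -20 1)   # master with a failing check
backup=$(effective_priority 80 -20 0)    # backup with a passing check
echo "master=$master backup=$backup"

if [ "$backup" -gt "$master" ]; then
    echo "backup outranks master"
else
    echo "backup does not outrank master"
fi
```

With priority 100, weight -20 and a backup priority of 80, a failing check only brings the master down to a tie, so the weight alone would not hand over the VIP. In this article the handover comes from nginx_check.sh stopping keepalived outright; a backup priority above 80 or a larger weight would be one way to make the weight itself decisive.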

The nginx configuration is as follows

upstream tomcat{

server 172.18.0.14:80;

server 172.18.0.15:80;

server 172.18.0.16:80;

}

server {

listen 80;

server_name 172.18.0.210;

#charset koi8-r;

#access_log /var/log/nginx/host.access.log main;

location / {

proxy_pass http://tomcat;

index index.html index.htm;

}

#error_page 404 /404.html;

# redirect server error pages to the static page /50x.html

#

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /usr/share/nginx/html;

}

# proxy the PHP scripts to Apache listening on 127.0.0.1:80

#

#location ~ .php$ {

# proxy_pass http://127.0.0.1;

#}

# pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000

#

#location ~ .php$ {

# root html;

# fastcgi_pass 127.0.0.1:9000;

# fastcgi_index index.php;

# fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;

# include fastcgi_params;

#}

# deny access to .htaccess files, if Apache's document root

# concurs with nginx's one

#

#location ~ /.ht {

# deny all;

#}

}

Restart keepalived and nginx

systemctl daemon-reload

systemctl restart keepalived.service

systemctl restart nginx.service

20 Start six front-end servers with nginx

docker pull nginx

nginx_web_1='/home/root123/cfh/nginx1'

nginx_web_2='/home/root123/cfh/nginx2'

nginx_web_3='/home/root123/cfh/nginx3'

nginx_web_4='/home/root123/cfh/nginx4'

nginx_web_5='/home/root123/cfh/nginx5'

nginx_web_6='/home/root123/cfh/nginx6'

mkdir -p ${nginx_web_1}/conf ${nginx_web_1}/conf.d ${nginx_web_1}/html ${nginx_web_1}/logs

mkdir -p ${nginx_web_2}/conf ${nginx_web_2}/conf.d ${nginx_web_2}/html ${nginx_web_2}/logs

mkdir -p ${nginx_web_3}/conf ${nginx_web_3}/conf.d ${nginx_web_3}/html ${nginx_web_3}/logs

mkdir -p ${nginx_web_4}/conf ${nginx_web_4}/conf.d ${nginx_web_4}/html ${nginx_web_4}/logs

mkdir -p ${nginx_web_5}/conf ${nginx_web_5}/conf.d ${nginx_web_5}/html ${nginx_web_5}/logs

mkdir -p ${nginx_web_6}/conf ${nginx_web_6}/conf.d ${nginx_web_6}/html ${nginx_web_6}/logs

docker run -it --name temp_nginx -d nginx

docker ps

docker cp temp_nginx:/etc/nginx/nginx.conf ${nginx_web_1}/conf

docker cp temp_nginx:/etc/nginx/conf.d/default.conf ${nginx_web_1}/conf.d/default.conf

docker cp temp_nginx:/etc/nginx/nginx.conf ${nginx_web_2}/conf

docker cp temp_nginx:/etc/nginx/conf.d/default.conf ${nginx_web_2}/conf.d/default.conf

docker cp temp_nginx:/etc/nginx/nginx.conf ${nginx_web_3}/conf

docker cp temp_nginx:/etc/nginx/conf.d/default.conf ${nginx_web_3}/conf.d/default.conf

docker cp temp_nginx:/etc/nginx/nginx.conf ${nginx_web_4}/conf

docker cp temp_nginx:/etc/nginx/conf.d/default.conf ${nginx_web_4}/conf.d/default.conf

docker cp temp_nginx:/etc/nginx/nginx.conf ${nginx_web_5}/conf

docker cp temp_nginx:/etc/nginx/conf.d/default.conf ${nginx_web_5}/conf.d/default.conf

docker cp temp_nginx:/etc/nginx/nginx.conf ${nginx_web_6}/conf

docker cp temp_nginx:/etc/nginx/conf.d/default.conf ${nginx_web_6}/conf.d/default.conf

docker rm -f temp_nginx

docker run -d --name nginx_web_1 \
  --network=mynet --ip 172.18.0.11 \
  -v /etc/localtime:/etc/localtime -e TZ=Asia/Shanghai \
  -v ${nginx_web_1}/html/:/usr/share/nginx/html \
  -v ${nginx_web_1}/conf/nginx.conf:/etc/nginx/nginx.conf \
  -v ${nginx_web_1}/conf.d/default.conf:/etc/nginx/conf.d/default.conf \
  -v ${nginx_web_1}/logs/:/var/log/nginx --privileged --restart=always nginx

docker run -d --name nginx_web_2 \
  --network=mynet --ip 172.18.0.12 \
  -v /etc/localtime:/etc/localtime -e TZ=Asia/Shanghai \
  -v ${nginx_web_2}/html/:/usr/share/nginx/html \
  -v ${nginx_web_2}/conf/nginx.conf:/etc/nginx/nginx.conf \
  -v ${nginx_web_2}/conf.d/default.conf:/etc/nginx/conf.d/default.conf \
  -v ${nginx_web_2}/logs/:/var/log/nginx --privileged --restart=always nginx

docker run -d --name nginx_web_3 \
  --network=mynet --ip 172.18.0.13 \
  -v /etc/localtime:/etc/localtime -e TZ=Asia/Shanghai \
  -v ${nginx_web_3}/html/:/usr/share/nginx/html \
  -v ${nginx_web_3}/conf/nginx.conf:/etc/nginx/nginx.conf \
  -v ${nginx_web_3}/conf.d/default.conf:/etc/nginx/conf.d/default.conf \
  -v ${nginx_web_3}/logs/:/var/log/nginx --privileged --restart=always nginx

docker run -d --name nginx_web_4 \
  --network=mynet --ip 172.18.0.14 \
  -v /etc/localtime:/etc/localtime -e TZ=Asia/Shanghai \
  -v ${nginx_web_4}/html/:/usr/share/nginx/html \
  -v ${nginx_web_4}/conf/nginx.conf:/etc/nginx/nginx.conf \
  -v ${nginx_web_4}/conf.d/default.conf:/etc/nginx/conf.d/default.conf \
  -v ${nginx_web_4}/logs/:/var/log/nginx --privileged --restart=always nginx

docker run -d --name nginx_web_5 \
  --network=mynet --ip 172.18.0.15 \
  -v /etc/localtime:/etc/localtime -e TZ=Asia/Shanghai \
  -v ${nginx_web_5}/html/:/usr/share/nginx/html \
  -v ${nginx_web_5}/conf/nginx.conf:/etc/nginx/nginx.conf \
  -v ${nginx_web_5}/conf.d/default.conf:/etc/nginx/conf.d/default.conf \
  -v ${nginx_web_5}/logs/:/var/log/nginx --privileged --restart=always nginx

docker run -d --name nginx_web_6 \
  --network=mynet --ip 172.18.0.16 \
  -v /etc/localtime:/etc/localtime -e TZ=Asia/Shanghai \
  -v ${nginx_web_6}/html/:/usr/share/nginx/html \
  -v ${nginx_web_6}/conf/nginx.conf:/etc/nginx/nginx.conf \
  -v ${nginx_web_6}/conf.d/default.conf:/etc/nginx/conf.d/default.conf \
  -v ${nginx_web_6}/logs/:/var/log/nginx --privileged --restart=always nginx

cd ${nginx_web_1}/html

cp /home/server/envconf/index.html ${nginx_web_1}/html/index.html

cd ${nginx_web_2}/html

cp /home/server/envconf/index.html ${nginx_web_2}/html/index.html

cd ${nginx_web_3}/html

cp /home/server/envconf/index.html ${nginx_web_3}/html/index.html

cd ${nginx_web_4}/html

cp /home/server/envconf/index.html ${nginx_web_4}/html/index.html

cd ${nginx_web_5}/html

cp /home/server/envconf/index.html ${nginx_web_5}/html/index.html

cd ${nginx_web_6}/html

cp /home/server/envconf/index.html ${ngi
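The six near-identical command groups in this step differ only in the container number. As a sketch of the same pattern written as a loop, the function below creates the directory trees and prints, rather than runs, the matching docker run commands. The function name plan_nginx_containers and the temporary base directory are assumptions for the demo; the article itself uses /home/root123/cfh.

```shell
#!/bin/bash
# Sketch: build the six directory trees in a loop and print (not run) the
# matching docker run commands. plan_nginx_containers is a hypothetical name.
plan_nginx_containers() {
    local base="$1" i dir
    for i in 1 2 3 4 5 6; do
        dir="$base/nginx$i"
        mkdir -p "$dir/conf" "$dir/conf.d" "$dir/html" "$dir/logs"
        # echo makes this a dry run; remove it to actually start the container
        echo docker run -d --name "nginx_web_$i" \
            --network=mynet --ip "172.18.0.1$i" \
            -v /etc/localtime:/etc/localtime -e TZ=Asia/Shanghai \
            -v "$dir/html/:/usr/share/nginx/html" \
            -v "$dir/conf/nginx.conf:/etc/nginx/nginx.conf" \
            -v "$dir/conf.d/default.conf:/etc/nginx/conf.d/default.conf" \
            -v "$dir/logs/:/var/log/nginx" --privileged --restart=always nginx
    done
}

# Throwaway directory for the demo instead of /home/root123/cfh
demo_base=$(mktemp -d)
plan_nginx_containers "$demo_base"
```

Printing the commands first makes it easy to review the IP and volume mappings before anything is started.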

/home/server/envconf/ is where I keep my own files; readers can create a directory of their own. The content of index.html is given below.

<!DOCTYPE html>

<htmllang="en"xmlns:v-on="http://www.w3.org/1999/xhtml">

<head>

<metacharset="UTF-8">

<title>主从测试</title>

</head>

<scriptsrc="https://cdn.jsdelivr.net/npm/vue"></script>

<scriptsrc="https://cdn.staticfile.org/vue-resource/1.5.1/vue-resource.min.js"></script>

<body>

<divid="app"style="height: 300px;width: 600px">

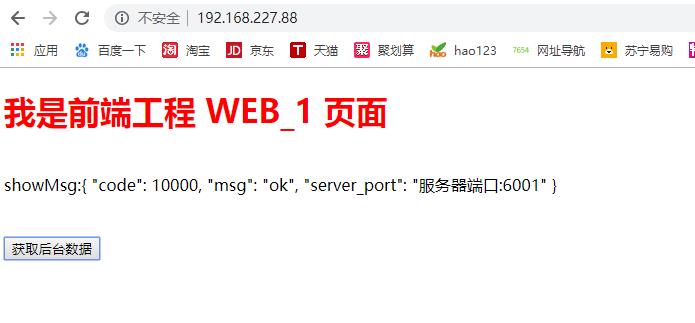

<h1style="color: red">我是前端工程 WEB 页面</h1>

<br>

showMsg:{{message}}

<br>

<br>

<br>

<buttonv-on:click="getMsg">获取后台数据</button>

</div>

</body>

</html>

<script>

var app = new Vue({

el: '#app',

data: {

message: 'Hello Vue!'

},

methods: {

getMsg: function () {

var ip="http://192.168.227.99"

var that=this;

// send a GET request to the back-end VIP

that.$http.get(ip+'/api/test').then(function(res){

that.message=res.data;

},function(){

console.log('request failed');

});

}

}

})

</script>

21 Open 192.168.227.88 in a browser and you will see the page rendered from index.html.

22 Test

Back-end master/backup hot-standby system with Docker containers

1 Create the Dockerfile

FROM openjdk:10

MAINTAINER cfh

WORKDIR /home/soft

CMD ["nohup","java","-jar","docker_server.jar"]

2 Build the image

docker build -t myopenjdk .

3 Create six back-end servers from the built image

docker volume create S1

docker volume inspect S1

docker volume create S2

docker volume inspect S2

docker volume create S3

docker volume inspect S3

docker volume create S4

docker volume inspect S4

docker volume create S5

docker volume inspect S5

docker volume create S6

docker volume inspect S6

cd /var/lib/docker/volumes/S1/_data

cp /home/server/envconf/docker_server.jar /var/lib/docker/volumes/S1/_data/docker_server.jar

cd /var/lib/docker/volumes/S2/_data

cp /home/server/envconf/docker_server.jar /var/lib/docker/volumes/S2/_data/docker_server.jar

cd /var/lib/docker/volumes/S3/_data

cp /home/server/envconf/docker_server.jar /var/lib/docker/volumes/S3/_data/docker_server.jar

cd /var/lib/docker/volumes/S4/_data

cp /home/server/envconf/docker_server.jar /var/lib/docker/volumes/S4/_data/docker_server.jar

cd /var/lib/docker/volumes/S5/_data

cp /home/server/envconf/docker_server.jar /var/lib/docker/volumes/S5/_data/docker_server.jar

cd /var/lib/docker/volumes/S6/_data

cp /home/server/envconf/docker_server.jar /var/lib/docker/volumes/S6/_data/docker_server.jar

docker run -it -d --name server_1 -v S1:/home/soft -v /etc/localtime:/etc/localtime -e TZ=Asia/Shanghai --net mynet --ip 172.18.0.101 --restart=always myopenjdk

docker run -it -d --name server_2 -v S2:/home/soft -v /etc/localtime:/etc/localtime -e TZ=Asia/Shanghai --net mynet --ip 172.18.0.102 --restart=always myopenjdk

docker run -it -d --name server_3 -v S3:/home/soft -v /etc/localtime:/etc/localtime -e TZ=Asia/Shanghai --net mynet --ip 172.18.0.103 --restart=always myopenjdk

docker run -it -d --name server_4 -v S4:/home/soft -v /etc/localtime:/etc/localtime -e TZ=Asia/Shanghai --net mynet --ip 172.18.0.104 --restart=always myopenjdk

docker run -it -d --name server_5 -v S5:/home/soft -v /etc/localtime:/etc/localtime -e TZ=Asia/Shanghai --net mynet --ip 172.18.0.105 --restart=always myopenjdk

docker run -it -d --name server_6 -v S6:/home/soft -v /etc/localtime:/etc/localtime -e TZ=Asia/Shanghai --net mynet --ip 172.18.0.106 --restar

docker_server.jar is a test program; its main code is as follows

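import org.springframework.beans.factory.annotation.Value;

import org.springframework.web.bind.annotation.CrossOrigin;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RequestMethod;

import org.springframework.web.bind.annotation.RestController;

import javax.servlet.http.HttpServletResponse;

import java.util.LinkedHashMap;

import java.util.Map;

@RestController

@RequestMapping("api")

@CrossOrigin("*")

public class TestController {

    // port this instance listens on, read from the server.port property
    @Value("${server.port}")

    public int port;

    // GET /api/test returns a small JSON object that includes the port,
    // which shows which back-end instance answered the request
    @RequestMapping(value = "/test", method = RequestMethod.GET)

    public Map<String, Object> test(HttpServletResponse response) {

        response.setHeader("Access-Control-Allow-Origin", "*");

        response.setHeader("Access-Control-Allow-Methods", "GET");

        response.setHeader("Access-Control-Allow-Headers", "token");

        Map<String, Object> objectMap = new LinkedHashMap<>();

        objectMap.put("code", 10000);

        objectMap.put("msg", "ok");

        objectMap.put("server_port", "server port: " + port);

        return objectMap;

    }

}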

4 Create the back-end master and backup containers

Master server

docker run --privileged -tid --name centos_server_master --restart=always --net mynet --ip 172.18.0.203 centos_kn /usr/sbin/init

Master server keepalived configuration

vrrp_script chk_nginx {

script "/etc/keepalived/nginx_check.sh"

interval 2

weight -20

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 110

mcast_src_ip 172.18.0.203

priority 100

nopreempt

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

chk_nginx

}

virtual_ipaddress {

172.18.0.220

}

}

Master server nginx configuration

upstream tomcat{

server 172.18.0.101:6001;

server 172.18.0.102:6002;

server 172.18.0.103:6003;

}

server {

listen 80;

server_name 172.18.0.220;

#charset koi8-r;

#access_log /var/log/nginx/host.access.log main;

location / {

proxy_pass http://tomcat;

index index.html index.htm;

}

#error_page 404 /404.html;

# redirect server error pages to the static page /50x.html

#

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /usr/share/nginx/html;

}

# proxy the PHP scripts to Apache listening on 127.0.0.1:80

#

#location ~ .php$ {

# proxy_pass http://127.0.0.1;

#}

# pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000

#

#location ~ .php$ {

# root html;

# fastcgi_pass 127.0.0.1:9000;

# fastcgi_index index.php;

# fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;

# include fastcgi_params;

#}

# deny access to .htaccess files, if Apache's document root

# concurs with nginx's one

#

#location ~ /.ht {

# deny all;

#}

}

Restart keepalived and nginx

systemctl daemon-reload

systemctl restart keepalived.service

systemctl restart nginx.service
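The upstream block for the back end follows a regular pattern: back-end server N has the address 172.18.0.10N and port 600N. As an illustrative sketch only, the block can be generated instead of typed; print_upstream is a hypothetical helper, and it only works for single-digit N as used in this article.

```shell
#!/bin/bash
# Sketch: print an nginx upstream block for back-end servers numbered
# first..last, following the pattern 172.18.0.10N:600N used in this article.
# print_upstream is a hypothetical helper; N must be a single digit.
print_upstream() {
    local first="$1" last="$2" n
    echo "upstream tomcat {"
    for n in $(seq "$first" "$last"); do
        echo "    server 172.18.0.10$n:600$n;"
    done
    echo "}"
}

print_upstream 1 3   # master: server_1 to server_3
print_upstream 4 6   # backup: server_4 to server_6
```

The output can be pasted at the top of /etc/nginx/conf.d/default.conf in the corresponding container.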

Backup server

docker run --privileged -tid --name centos_server_slave --restart=always --net mynet --ip 172.18.0.204 centos_kn /usr/sbin/init

Backup server keepalived configuration

vrrp_script chk_nginx {

script "/etc/keepalived/nginx_check.sh"

interval 2

weight -20

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 110

mcast_src_ip 172.18.0.204

priority 80

nopreempt

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

chk_nginx

}

virtual_ipaddress {

172.18.0.220

}

}

Backup server nginx configuration

upstream tomcat{

server 172.18.0.104:6004;

server 172.18.0.105:6005;

server 172.18.0.106:6006;

}

server {

listen 80;

server_name 172.18.0.220;

#charset koi8-r;

#access_log /var/log/nginx/host.access.log main;

location / {

proxy_pass http://tomcat;

index index.html index.htm;

}

#error_page 404 /404.html;

# redirect server error pages to the static page /50x.html

#

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /usr/share/nginx/html;

}

# proxy the PHP scripts to Apache listening on 127.0.0.1:80

#

#location ~ .php$ {

# proxy_pass http://127.0.0.1;

#}

# pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000

#

#location ~ .php$ {

# root html;

# fastcgi_pass 127.0.0.1:9000;

# fastcgi_index index.php;

# fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;

# include fastcgi_params;

#}

# deny access to .htaccess files, if Apache's document root

# concurs with nginx's one

#

#location ~ /.ht {

# deny all;

#}

}

Restart keepalived and nginx

systemctl daemon-reload

systemctl restart keepalived.service

systemctl restart nginx.service

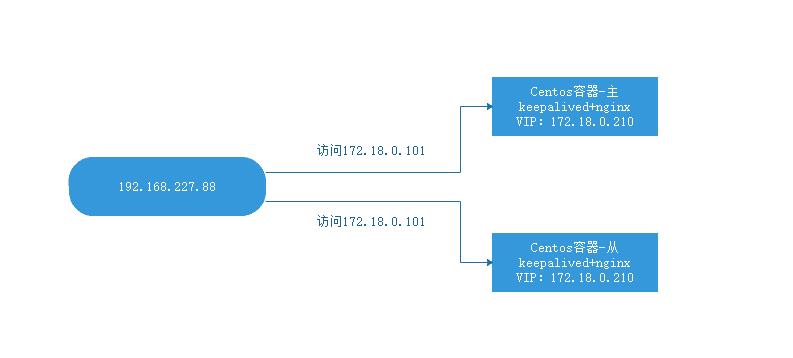

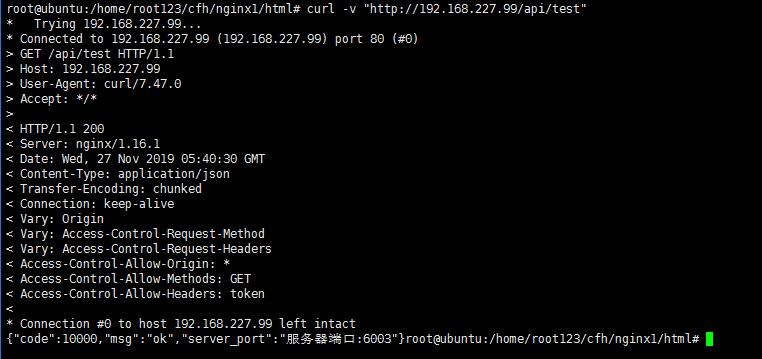

命令行验证

浏览器验证

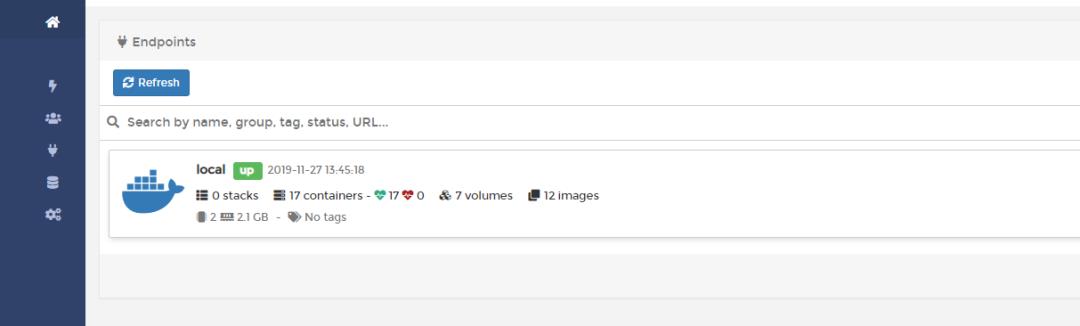

portainer安装

它是容器管理界面,可以看到容器的运行状态

docker search portainer

docker pull portainer/portainer

docker run -d -p 9000:9000 \
  --restart=always \
  -v /var/run/docker.sock:/var/run/docker.sock \
  --name prtainer-eureka \
  portainer/portainer

http://192.168.227.171:90

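On the first visit you need to set a password; the default account is admin. After the password is created, the page moves on to the next screen, where you choose to manage the local containers (Local) and confirm.

Closing remarks:

Also make sure you understand Docker's three essentials: images/containers, data volumes, and network management.

Permanent link to this article: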

https://tech.souyunku.com/?p=43138
