NAACL2021长序列自然语言处理, 250页ppt

Posted 专知

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了NAACL2021长序列自然语言处理, 250页ppt相关的知识,希望对你有一定的参考价值。

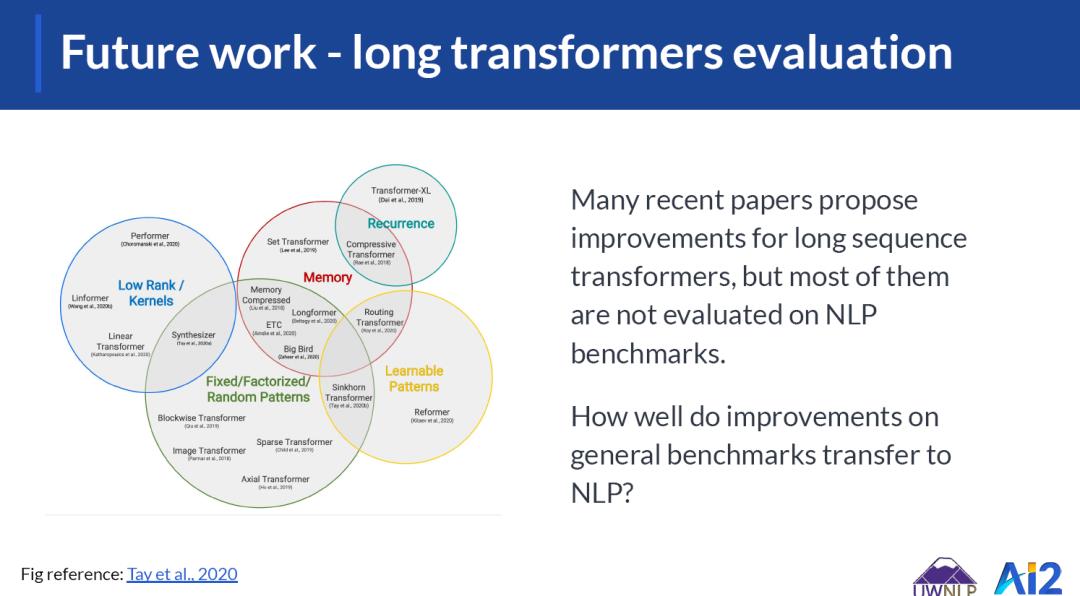

自然语言数据的一个重要子集包括跨越数千个token的文档。处理这样长的序列的能力对于许多NLP任务是至关重要的,包括文档分类、摘要、多跳和开放域问答,以及文档级或多文档关系提取和引用解析。然而,将最先进的模型扩展到较长的序列是一个挑战,因为许多模型都是为较短的序列设计的。一个值得注意的例子是Transformer模型,它在序列长度上有二次计算代价,这使得它们对于长序列任务的代价非常昂贵。这反映在许多广泛使用的模型中,如RoBERTa和BERT,其中序列长度被限制为只有512个tokens。在本教程中,我们将向感兴趣的NLP研究人员介绍最新和正在进行的文档级表示学习技术。此外,我们将讨论新的研究机会,以解决该领域现有的挑战。我们将首先概述已建立的长序列自然语言处理技术,包括层次、基于图和基于检索的方法。然后,我们将重点介绍最近的长序列转换器方法,它们如何相互比较,以及它们如何应用于NLP任务(参见Tay等人(2020)最近的综述)。我们还将讨论处理长序列的关键的各种存储器节省方法。在本教程中,我们将使用分类、问答和信息提取作为激励任务。我们还将有一个专注于总结的实际编码练习。

Part 1. Intro & Overview of tasks

Part 2. Graph based methods

Part 3. Long sequence transformers

Part 4. Pretraining and finetuning

Part 5. Use cases

Part 6. Future work & conclusion

Part 1. Intro & Overview of tasks

Andrew L. Maas, Raymond E. Daly, Peter T. Pham, Dan Huang, Andrew Y. Ng, Christopher Potts. Learning Word Vectors for Sentiment Analysis

Johannes Kiesel, Maria Mestre, Rishabh Shukla, Emmanuel Vincent, Payam Adineh, David Corney, Benno Stein, Martin Potthast. SemEval-2019 Task 4: Hyperpartisan News Detection

Zhilin Yang, Peng Qi, Saizheng Zhang, Yoshua Bengio, William W. Cohen, Ruslan Salakhutdinov, Christopher D. Manning. 2018. HotpotQA: A Dataset for Diverse, Explainable Multi-hop Question Answering

Johannes Welbl, Pontus Stenetorp, Sebastian Riedel. 2018. Constructing Datasets for Multi-hop Reading Comprehension Across Documents

Courtney Napoles, Matthew Gormley, Benjamin Van Durme. 2012. Annotated Gigaword

Arman Cohan, Franck Dernoncourt, Doo Soon Kim, Trung Bui, Seokhwan Kim, Walter Chang, Nazli Goharian. 2018. A Discourse-Aware Attention Model for Abstractive Summarization of Long Documents

Part 2. Graph based methods

Zichao Yang, Diyi Yang, Chris Dyer, Xiaodong He, Alex Smola, Eduard Hovy. 2016. Hierarchical Attention Networks for Document Classification

Sarthak Jain, Madeleine van Zuylen, Hannaneh Hajishirzi, Iz Beltagy. 2020. SciREX: A Challenge Dataset for Document-Level Information Extraction

Ming-Wei Chang, Kristina Toutanova, Kenton Lee, Jacob Devlin. 2019. Language Model Pre-training for Hierarchical Document Representation

Xingxing Zhang, Furu Wei, Ming Zhou. 2019. HIBERT: Document Level Pre-training of Hierarchical Bidirectional Transformers for Document Summarization

Kenton Lee, Luheng He, Luke Zettlemoyer. 2018. Higher-order Coreference Resolution with Coarse-to-fine Inference

David Wadden, Ulme Wennberg, Yi Luan, Hannaneh Hajishirzi. 2019. Entity, Relations, and Event Extraction with Contextualized Span Representations

Linfeng Song, Zhiguo Wang, Mo Yu, Yue Zhang, Radu Florian, Daniel Gildea. 2018. Exploring Graph-structured Passage Representation for Multi-hop Reading Comprehension with Graph Neural Networks

Yunxuan Xiao, Yanru Qu, Lin Qiu, Hao Zhou, Lei Li, Weinan Zhang, Yong Yu. 2019. Dynamically Fused Graph Network for Multi-hop Reasoning

Yuwei Fang, Siqi Sun, Zhe Gan, Rohit Pillai, Shuohang Wang, Jingjing Liu. 2020. Hierarchical Graph Network for Multi-hop Question Answering

Sewon Min, Danqi Chen, Luke Zettlemoyer, Hannaneh Hajishirzi. 2019. Knowledge-guided Text Retrieval and Reading for Open Domain Question Answering

Part 3. Long sequence transformers

Zihang Dai, Zhilin Yang, Yiming Yang, Jaime Carbonell, Quoc V. Le, Ruslan Salakhutdinov. 2019. Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context

Jack W. Rae, Anna Potapenko, Siddhant M. Jayakumar, Timothy P. Lillicrap. 2019. Compressive Transformers for Long-Range Sequence Modelling

Aurko Roy, Mohammad Saffar, Ashish Vaswani, David Grangier. 2020. Efficient Content-Based Sparse Attention with Routing Transformers

Yi Tay, Dara Bahri, Liu Yang, Donald Metzler, Da-Cheng Juan. 2020. Sparse Sinkhorn Attention

Nikita Kitaev, Łukasz Kaiser, Anselm Levskaya. 2020. Reformer: The Efficient Transformer

Rewon Child, Scott Gray, Alec Radford, Ilya Sutskever. 2019. Generating Long Sequences with Sparse Transformers

Iz Beltagy, Matthew E. Peters, Arman Cohan. 2020. Longformer: The Long-Document Transformer

Joshua Ainslie, Santiago Ontanon, Chris Alberti, Vaclav Cvicek, Zachary Fisher, Philip Pham, Anirudh Ravula, Sumit Sanghai, Qifan Wang, Li Yang. 2020. ETC: Encoding Long and Structured Inputs in Transformers

Manzil Zaheer, Guru Guruganesh, Avinava Dubey, Joshua Ainslie, Chris Alberti, Santiago Ontanon, Philip Pham, Anirudh Ravula, Qifan Wang, Li Yang, Amr Ahmed. 2020. Big Bird: Transformers for Longer Sequences

Tom B. Brown et al. 2020. Language Models are Few-Shot Learners

Scott Gray, Alec Radford and Diederik P. Kingma. 2017. GPU Kernels for Block-Sparse Weights

Angelos Katharopoulos, Apoorv Vyas, Nikolaos Pappas, François Fleuret. 2020. Transformers are RNNs: Fast Autoregressive Transformers with Linear Attention

Krzysztof Choromanski, Valerii Likhosherstov, David Dohan, Xingyou Song, Andreea Gane, Tamas Sarlos, Peter Hawkins, Jared Davis, Afroz Mohiuddin, Lukasz Kaiser, David Belanger, Lucy Colwell, Adrian Weller. 2020. Rethinking Attention with Performers

Hao Peng, Nikolaos Pappas, Dani Yogatama, Roy Schwartz, Noah A. Smith, Lingpeng Kong. 2021. Random Feature Attention

Sinong Wang, Belinda Z. Li, Madian Khabsa, Han Fang, Hao Ma. 2020. Linformer: Self-Attention with Linear Complexity

Yi Tay, Mostafa Dehghani, Samira Abnar, Yikang Shen, Dara Bahri, Philip Pham, Jinfeng Rao, Liu Yang, Sebastian Ruder, Donald Metzler. 2020. Long Range Arena: A Benchmark for Efficient Transformers

Part 4. Pretraining and finetuning

Yunyang Xiong, Zhanpeng Zeng, Rudrasis Chakraborty, Mingxing Tan, Glenn Fung, Yin Li, Vikas Singh. 2021. Nyströmformer: A Nyström-Based Algorithm for Approximating Self-Attention

Ofir Press, Noah A. Smith, Mike Lewis. 2020. Shortformer: Better Language Modeling using Shorter Inputs

Avi Caciularu, Arman Cohan, Iz Beltagy, Matthew E. Peters, Arie Cattan, Ido Dagan. 2021. Cross-Document Language Modeling

专知便捷查看

后台回复“NLPLS” 就可以获取《【NAACL2021】长序列自然语言处理, 250页ppt》专知下载链接

以上是关于NAACL2021长序列自然语言处理, 250页ppt的主要内容,如果未能解决你的问题,请参考以下文章

大会 | 自然语言处理顶会NAACL 2018最佳论文时间检验论文揭晓

自然语言处理顶级会议ACL,EMNLP,NAACL, COLING论文质量有区别吗?

聚焦机器同传前沿进展,第二届机器同传研讨会将在NAACL举办