BP neural network forward propagation and error backpropagation: Python and MATLAB implementations

Posted 星代码119


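Foreword: This article was compiled by the editors of 小常识网 (cha138.com). It covers Python and MATLAB implementations of forward propagation and error backpropagation in a BP neural network, and we hope it is of some reference value to you.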

1. Preface

This post only presents the code. For the underlying theory, see:

**TensorFlow tutorial site:** http://c.biancheng.net/view/1886.html

**BP neural networks:** https://www.cnblogs.com/duanhx/p/9655213.html

**CNN explanation:** https://www.cnblogs.com/charlotte77/p/7759802.html

2. Implementation approach

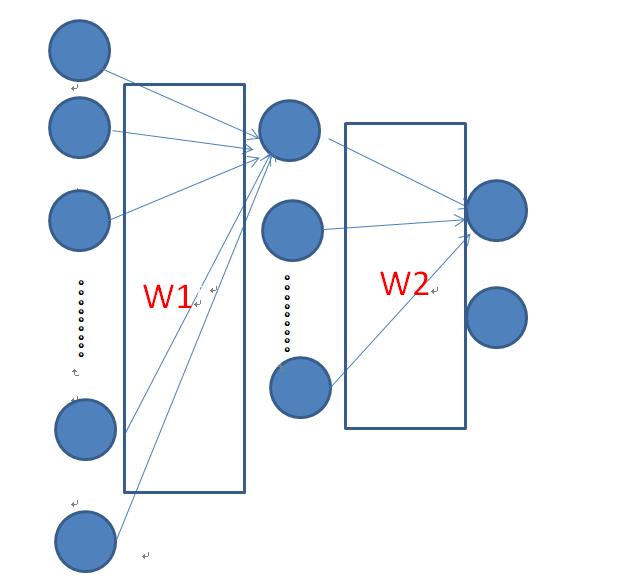

The network built here has only one hidden layer. The overall idea is outlined below.

2.1 Forward propagation

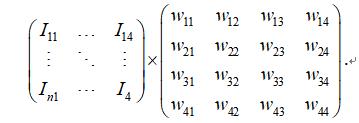

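Take a 4->3->2 network as an example: the matrix I is the input, W is the weight matrix, and their product gives the hidden-layer output matrix. The activation function is the sigmoid function.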
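As a rough illustration of the 4->3->2 example, the forward pass can be written with NumPy as two matrix products, each followed by an elementwise sigmoid. This is a sketch under assumptions: the input row `I` and the weights `W1`, `W2` are made-up values, and, like the code in this post, no bias terms are used.

```python
import numpy as np

def sigmoid(x):
    """Logistic sigmoid, applied elementwise."""
    return 1.0 / (1.0 + np.exp(-x))

def forward(I, W1, W2):
    """Forward pass of a bias-free 4->3->2 network (hypothetical helper).

    I  : (4,) input row
    W1 : (4, 3) input-to-hidden weights
    W2 : (3, 2) hidden-to-output weights
    Returns the hidden activations (3,) and the output activations (2,).
    """
    hidden = sigmoid(I @ W1)       # hidden-layer output
    output = sigmoid(hidden @ W2)  # output-layer output
    return hidden, output

# Hypothetical example values, chosen only for illustration.
I = np.array([1.0, 0.0, 1.0, 1.0])
W1 = np.full((4, 3), 0.5)
W2 = np.full((3, 2), 0.5)
hidden, output = forward(I, W1, W2)
print(hidden.shape, output.shape)  # (3,) (2,)
```

Each row of `W1` holds the weights leaving one input unit, so a single row-vector product computes all hidden units at once.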

2.2 Error backpropagation

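Error backpropagation comes down to finding one general formula for how the output error propagates back to each weight; the derivation is left to the reader. Reference: https://www.cnblogs.com/duanhx/p/9655213.html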
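For reference, here is a sketch of that derivation for the single-hidden-layer network used in this post, assuming the squared-error loss and bias-free sigmoid layers that the code uses. Here $x$ is the input row, $t$ the target row, $h$ and $o$ the hidden and output activations (all row vectors), $W^{(1)}$ and $W^{(2)}$ correspond to `matrix_input` and `matrix_mid`, $\alpha$ is the learning rate (`alphy`), and $\sigma(z) = 1/(1+e^{-z})$ with $\sigma'(z) = \sigma(z)\,(1-\sigma(z))$.

$$
h = \sigma\big(x W^{(1)}\big), \qquad o = \sigma\big(h W^{(2)}\big), \qquad E = \tfrac{1}{2}\sum_k (t_k - o_k)^2
$$

$$
\delta_k = (t_k - o_k)\, o_k (1 - o_k), \qquad
\delta^{h}_j = h_j (1 - h_j) \sum_k \delta_k W^{(2)}_{jk}
$$

$$
\frac{\partial E}{\partial W^{(2)}_{jk}} = -\delta_k\, h_j, \qquad
\frac{\partial E}{\partial W^{(1)}_{ij}} = -\delta^{h}_j\, x_i
$$

$$
W^{(2)} \leftarrow W^{(2)} + \alpha\, h^{\top} \delta, \qquad
W^{(1)} \leftarrow W^{(1)} + \alpha\, x^{\top} \delta^{h}
$$

Gradient descent subtracts $\alpha\,\partial E/\partial W$, which is why both updates add the outer products. Note that $\delta^{h}$ is computed with $W^{(2)}$ as it was before its own update.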

3. Code implementation

3.1 Python implementation

```python
"""
Created on Thu Oct 29 16:49:22 2020

@author: lenovo
"""

import numpy as np
import pandas as pd
from matplotlib import pyplot as plt


class NeuralSetting(object):
    """Bias-free BP network with one sigmoid hidden layer, trained sample by sample."""

    def __init__(self, input_num, mid_num, out_num, target_Set, input_Set):
        self.input_num = input_num      # number of input units
        self.mid_num = mid_num          # number of hidden units
        self.out_num = out_num          # number of output units
        self.traget_Set = np.asarray(target_Set, dtype=float)
        self.input_Set = np.asarray(input_Set, dtype=float)
        self.mid_out = None             # hidden-layer weighted input
        self.mid_signoid_out = None     # hidden-layer sigmoid output
        self.out = None                 # output-layer weighted input
        self.out_sigmoid = None         # output-layer sigmoid output
        self.matrix_input = None        # input -> hidden weights
        self.matrix_mid = None          # hidden -> output weights
        self.error = None
        self.alphy = 0.5                # learning rate
        self.losses = []                # squared error of every training step

    # Weight matrix initialization
    def matrix_init(self):
        # All weights start at 1, as described in section 4.1. Identical initial
        # weights keep every hidden unit identical during training; random values
        # (e.g. np.random.rand) would break this symmetry.
        self.matrix_input = np.ones((self.input_num, self.mid_num))
        self.matrix_mid = np.ones((self.mid_num, self.out_num))

    # Sigmoid function: output of a neural unit
    def sigmoid_function(self, x):
        return 1 / (1 + np.exp(-x))

    def matrix_multiply(self, x, y):
        return np.dot(x, y)

    def Bperrorfunction(self, epochs=4000):
        for _ in range(epochs):                          # number of training epochs
            for i in range(self.input_Set.shape[0]):     # one update per sample
                x = self.input_Set[i, :]
                # Hidden layer: weighted input and sigmoid output
                self.mid_out = self.matrix_multiply(x, self.matrix_input)
                self.mid_signoid_out = self.sigmoid_function(self.mid_out)
                # Output layer: weighted input and sigmoid output
                self.out = self.matrix_multiply(self.mid_signoid_out, self.matrix_mid)
                self.out_sigmoid = self.sigmoid_function(self.out)
                # Output error and squared-error loss 0.5 * sum(error**2)
                self.error = self.traget_Set[i, :] - self.out_sigmoid
                self.losses.append(0.5 * np.sum(self.error ** 2))
                # Error backpropagation: both deltas use the weights before the update
                delta_out = self.error * self.out_sigmoid * (1 - self.out_sigmoid)
                delta_mid = (self.matrix_multiply(delta_out, self.matrix_mid.T)
                             * self.mid_signoid_out * (1 - self.mid_signoid_out))
                # Weight updates (hidden -> output, then input -> hidden)
                self.matrix_mid += self.alphy * np.outer(self.mid_signoid_out, delta_out)
                self.matrix_input += self.alphy * np.outer(x, delta_mid)
        # Plot the error of every training step
        plt.scatter(range(1, len(self.losses) + 1), self.losses, color='red', marker='+')

    # Forward pass with the weights learned by backpropagation
    def test_Function(self, testVlaues):
        self.mid_out = self.matrix_multiply(testVlaues, self.matrix_input)
        self.mid_signoid_out = self.sigmoid_function(self.mid_out)
        self.out = self.matrix_multiply(self.mid_signoid_out, self.matrix_mid)
        self.out_sigmoid = self.sigmoid_function(self.out)
        return self.out_sigmoid


if __name__ == '__main__':
    dataSet = pd.read_excel(r'C:\Users\lenovo\Desktop\笔记\新建 Microsoft Excel 工作表.xlsx')
    testSet = pd.read_excel(r'C:\Users\lenovo\Desktop\笔记\predictSet.xlsx')
    data = dataSet.values.astype(float)

    # Test data: columns 0-4 are inputs, columns 5-7 are targets
    testVlaues = testSet.values.astype(float)
    traget_test = testVlaues[:, 5:8]
    input_test = testVlaues[:, 0:5]

    traget_num = data[:, 5:8]   # target values
    input_num = data[:, 0:5]    # input data

    # 5 input units, 4 hidden units, 3 output units
    neuralSetting = NeuralSetting(5, 4, 3, traget_num, input_num)
    neuralSetting.matrix_init()                              # initialize weight matrices
    neuralSetting.Bperrorfunction()                          # train with error backpropagation
    practical_out = neuralSetting.test_Function(input_test)  # check the trained network on the test set

    print(practical_out)
    print(traget_test)
    plt.show()
```

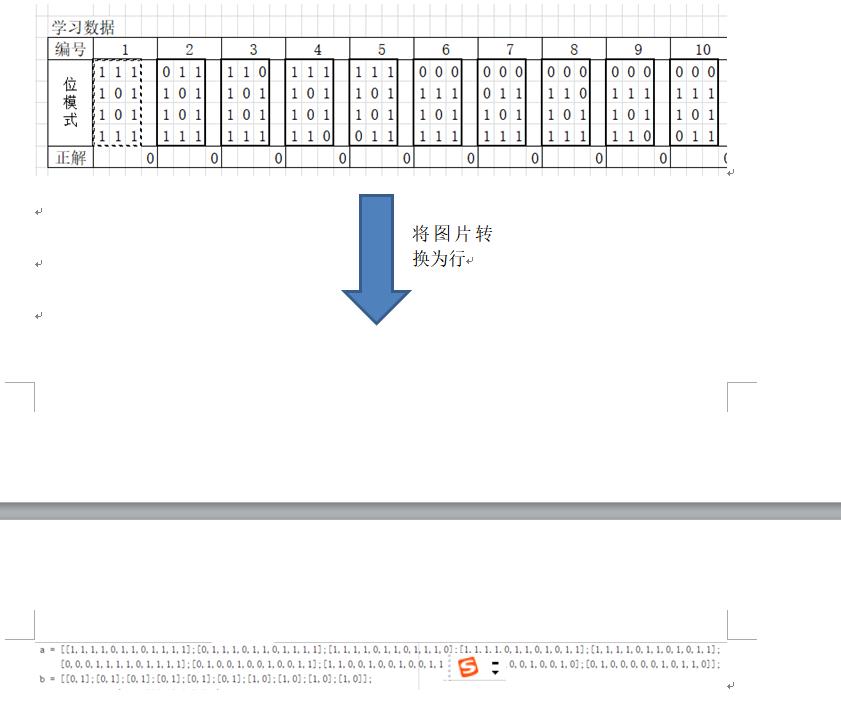

3.2 MATLAB implementation

3.2.1 Main script

```matlab
a = [[1,1,1,1,0,1,1,0,1,1,1,1];[0,1,1,1,0,1,1,0,1,1,1,1];[1,1,1,1,0,1,1,0,1,1,1,0];[1,1,1,1,0,1,1,0,1,0,1,1];[1,1,1,1,0,1,1,0,1,0,1,1];
     [0,0,0,1,1,1,1,0,1,1,1,1];[0,1,0,0,1,0,0,1,0,0,1,1];[1,1,0,0,1,0,0,1,0,0,1,1];[1,1,0,0,1,0,0,1,0,0,1,0];[0,1,0,0,0,0,0,1,0,1,1,0]];
b = [[0,1];[0,1];[0,1];[0,1];[0,1];[0,1];[1,0];[1,0];[1,0];[1,0]];
% 4000 epochs, 12 input units, 6 hidden units, 2 output units, learning rate 0.1
Bperrorfunction(a,b,4000,12,6,2,0.1);
```

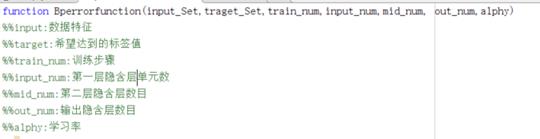

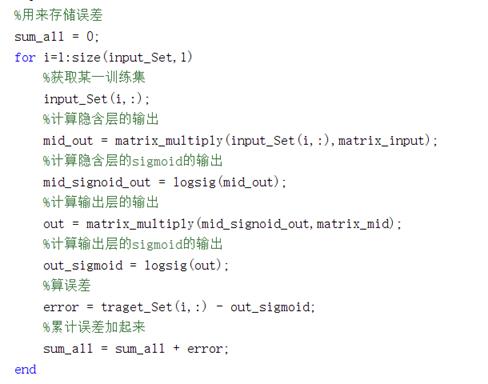

3.2.2 The Bperrorfunction function

function Bperrorfunction(input_Set,traget_Set,train_num,input_num,mid_num, out_num,alphy)

%%input:数据特征

%%target:希望达到的标签值

%%train_num:训练步骤

%%input_num:第一层隐含层单元数

%%mid_num:第二层隐含层数目

%%out_num:输出隐含层数目

%%alphy:学习率

j = train_num

matrix_input = rand( input_num, mid_num)

matrix_mid = rand( mid_num, out_num)

count_1 = 1;

while(j)

%用来存储误差

sum_all = 0;

for i=1:size(input_Set,1)

%获取某一训练集

input_Set(i,:);

%计算隐含层的输出

mid_out = matrix_multiply(input_Set(i,:),matrix_input);

%计算隐含层的sigmoid的输出

mid_signoid_out = logsig(mid_out);

%计算输出层的输出

out = matrix_multiply(mid_signoid_out,matrix_mid);

%计算输出层的sigmoid的输出

out_sigmoid = logsig(out);

%算误差

error = traget_Set(i,:) - out_sigmoid;

%累计误差加起来

sum_all = sum_all + error;

end

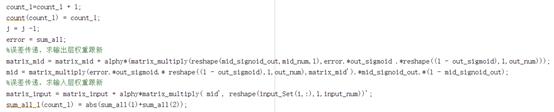

count_1=count_1 + 1;

count(count_1) = count_1;

j = j -1;

error = sum_all;

%误差传递,求输出层权重跟新

matrix_mid = matrix_mid + alphy*(matrix_multiply(reshape(mid_signoid_out,mid_num,1),error.*out_sigmoid .*reshape((1 - out_sigmoid),1,out_num)));

mid = matrix_multiply(error.*out_sigmoid.* reshape((1 - out_sigmoid),1,out_num),matrix_mid').*mid_signoid_out.*(1 - mid_signoid_out);

%误差传递,求输入层权重跟新

matrix_input = matrix_input + alphy*matrix_multiply( mid', reshape(input_Set(i,:),1,input_num))'

sum_all_1(count_1) = abs(sum_all(1)+sum_all(2));

end

plot(count,sum_all_1);

3.2.3 The matrix_multiply function

```matlab
function b = matrix_multiply(x,y)
% Returns the matrix product of x and y
b = x*y;
```

4. Results

4.1 Data conversion

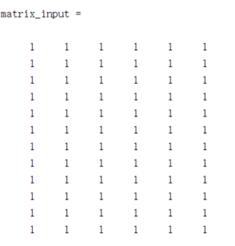

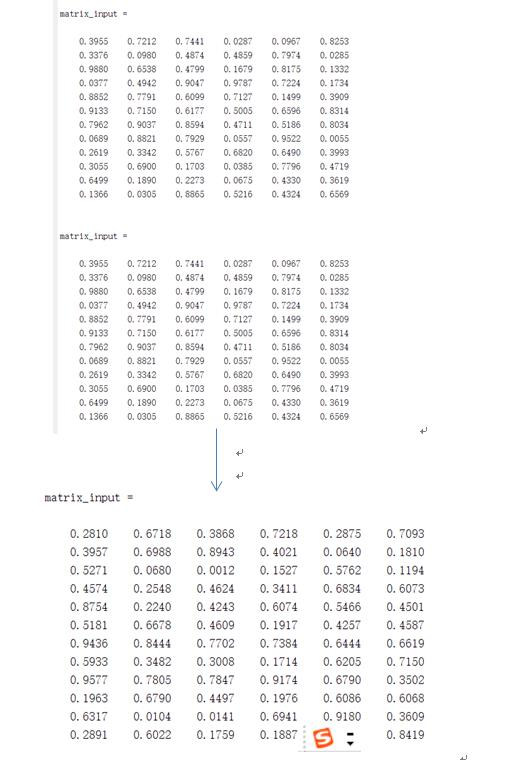

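First, the weights of each layer are given initial values; in the Python code they are all set to 1. (The MATLAB version initializes them with `rand` instead.)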
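Initializing every weight to the same value has a side effect worth checking: every hidden unit computes the same output and receives the same update, so the hidden units never become different from one another (the usual symmetry problem). The sketch below runs a few epochs of the bias-free online update from section 2.2, once from all-ones weights and once from random weights, and compares the hidden-unit weight columns. The data, learning rate, epoch count and the helper `train` are assumptions made for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def train(W1, W2, X, T, alpha=0.5, epochs=50):
    """Hypothetical helper: online backprop for a bias-free one-hidden-layer sigmoid net."""
    W1, W2 = W1.copy(), W2.copy()
    for _ in range(epochs):
        for x, t in zip(X, T):
            h = sigmoid(x @ W1)
            o = sigmoid(h @ W2)
            delta_out = (t - o) * o * (1 - o)
            delta_hid = (delta_out @ W2.T) * h * (1 - h)  # uses W2 before its update
            W2 += alpha * np.outer(h, delta_out)
            W1 += alpha * np.outer(x, delta_hid)
    return W1, W2

rng = np.random.default_rng(0)
X = rng.random((4, 5))   # hypothetical data: 4 samples x 5 features
T = rng.random((4, 3))   # hypothetical targets in (0, 1)

W1_ones, _ = train(np.ones((5, 4)), np.ones((4, 3)), X, T)
W1_rand, _ = train(rng.random((5, 4)), rng.random((4, 3)), X, T)

# Compare every hidden unit's weight column with the first one.
print(np.allclose(W1_ones, W1_ones[:, [0]]))  # True: all hidden units still identical
print(np.allclose(W1_rand, W1_rand[:, [0]]))  # False: random init breaks the symmetry
```

With all-ones initialization the 4 hidden units behave like a single unit, which is one reason to prefer small random initial weights.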


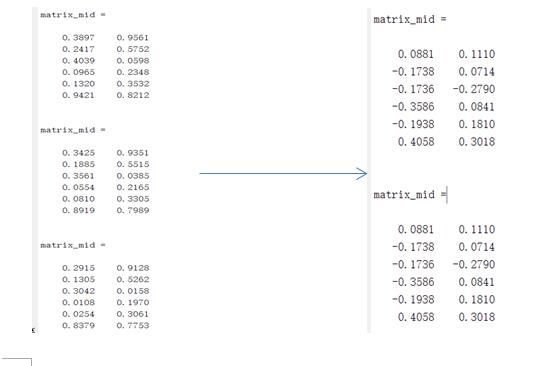

4.2 Forward propagation:

4.3 Error backpropagation
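One way to confirm the backpropagated deltas is a finite-difference gradient check. The sketch below compares the analytic gradients given by the delta formulas with central differences of the loss $E = \frac{1}{2}\sum_k (t_k - o_k)^2$. It assumes bias-free sigmoid layers as in this post; the sample, the weights and the helper names (`loss`, `backprop_grads`, `numeric_grad`) are made up for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def loss(W1, W2, x, t):
    """Squared-error loss 0.5 * sum((t - o)**2) for one sample."""
    o = sigmoid(sigmoid(x @ W1) @ W2)
    return 0.5 * np.sum((t - o) ** 2)

def backprop_grads(W1, W2, x, t):
    """Analytic gradients dE/dW1 and dE/dW2 from the delta rule."""
    h = sigmoid(x @ W1)
    o = sigmoid(h @ W2)
    delta_out = (t - o) * o * (1 - o)
    delta_hid = (delta_out @ W2.T) * h * (1 - h)
    # The update rule adds alpha * outer(...), so the gradient is the negative outer product.
    return -np.outer(x, delta_hid), -np.outer(h, delta_out)

def numeric_grad(f, W, eps=1e-6):
    """Central-difference gradient of the scalar function f() with respect to W (in place)."""
    g = np.zeros_like(W)
    for idx in np.ndindex(W.shape):
        old = W[idx]
        W[idx] = old + eps
        f_plus = f()
        W[idx] = old - eps
        f_minus = f()
        W[idx] = old                      # restore the original weight
        g[idx] = (f_plus - f_minus) / (2 * eps)
    return g

rng = np.random.default_rng(1)
x, t = rng.random(5), rng.random(3)              # hypothetical sample
W1, W2 = rng.random((5, 4)), rng.random((4, 3))  # hypothetical weights

g1, g2 = backprop_grads(W1, W2, x, t)
n1 = numeric_grad(lambda: loss(W1, W2, x, t), W1)
n2 = numeric_grad(lambda: loss(W1, W2, x, t), W2)
print(np.max(np.abs(g1 - n1)), np.max(np.abs(g2 - n2)))  # both should be very small
```

If a delta formula were wrong (for example, a missing $h_j(1-h_j)$ factor), the differences would be on the order of the gradients themselves rather than tiny.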

4.4 Weight update

Input-layer weight update
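A single online update can be sketched as follows, assuming the update rule from section 2.2 with a learning rate `alpha` (playing the role of `alphy` in the code) and hypothetical data and weights. Both deltas are computed before either weight matrix changes, and for a small enough learning rate the loss on that sample goes down. The helper names `sample_loss` and `update_step` are illustrative.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def sample_loss(W1, W2, x, t):
    """Squared-error loss of one sample."""
    o = sigmoid(sigmoid(x @ W1) @ W2)
    return 0.5 * np.sum((t - o) ** 2)

def update_step(W1, W2, x, t, alpha):
    """Return new (W1, W2) after one backpropagation step on sample (x, t)."""
    h = sigmoid(x @ W1)
    o = sigmoid(h @ W2)
    delta_out = (t - o) * o * (1 - o)
    delta_hid = (delta_out @ W2.T) * h * (1 - h)   # uses the old W2
    W2_new = W2 + alpha * np.outer(h, delta_out)   # hidden -> output weights
    W1_new = W1 + alpha * np.outer(x, delta_hid)   # input -> hidden weights
    return W1_new, W2_new

rng = np.random.default_rng(2)
x, t = rng.random(5), np.array([0.0, 1.0, 0.0])    # hypothetical sample
W1, W2 = rng.random((5, 4)), rng.random((4, 3))    # hypothetical weights

before = sample_loss(W1, W2, x, t)
W1, W2 = update_step(W1, W2, x, t, alpha=0.1)
after = sample_loss(W1, W2, x, t)
print(before, after)  # after should be smaller than before
```

`np.outer(x, delta_hid)` builds the same matrix as the transpose-and-reshape expressions in the original code, with shape (inputs, hidden units) matching the weight matrix.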

4.5 Change in the loss:
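To reproduce a loss curve without the Excel files, the sketch below trains on the 10-sample data set from the MATLAB main script (12 inputs, 6 hidden units, 2 outputs, learning rate 0.1, 4000 epochs) and records the summed squared error of each epoch. It is a NumPy translation under assumptions: random initial weights in [0, 1) with a fixed seed, bias-free sigmoid layers, and per-sample updates; the helper `train_with_loss` is hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

# Training data copied from the MATLAB main script (10 samples x 12 features, 2 outputs).
a = np.array([
    [1,1,1,1,0,1,1,0,1,1,1,1], [0,1,1,1,0,1,1,0,1,1,1,1],
    [1,1,1,1,0,1,1,0,1,1,1,0], [1,1,1,1,0,1,1,0,1,0,1,1],
    [1,1,1,1,0,1,1,0,1,0,1,1], [0,0,0,1,1,1,1,0,1,1,1,1],
    [0,1,0,0,1,0,0,1,0,0,1,1], [1,1,0,0,1,0,0,1,0,0,1,1],
    [1,1,0,0,1,0,0,1,0,0,1,0], [0,1,0,0,0,0,0,1,0,1,1,0],
], dtype=float)
b = np.array([[0, 1]] * 6 + [[1, 0]] * 4, dtype=float)

def train_with_loss(X, T, mid_num=6, alpha=0.1, epochs=4000, seed=0):
    """Online backprop; returns the summed squared error recorded in each epoch."""
    rng = np.random.default_rng(seed)
    W1 = rng.random((X.shape[1], mid_num))   # like rand(input_num, mid_num)
    W2 = rng.random((mid_num, T.shape[1]))   # like rand(mid_num, out_num)
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, t in zip(X, T):
            h = sigmoid(x @ W1)
            o = sigmoid(h @ W2)
            err = t - o
            total += 0.5 * np.sum(err ** 2)   # error before this sample's update
            delta_out = err * o * (1 - o)
            delta_hid = (delta_out @ W2.T) * h * (1 - h)
            W2 += alpha * np.outer(h, delta_out)
            W1 += alpha * np.outer(x, delta_hid)
        losses.append(total)
    return losses

losses = train_with_loss(a, b)
print(losses[0], losses[-1])
```

Passing `losses` to a plotting function such as `matplotlib.pyplot.plot` gives a per-epoch curve comparable to the MATLAB `plot(1:train_num, loss)` call; the exact values depend on the random initialization.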
