Spark 报错 Failed to delete: C:UserslvaczAppDataLocalTempspark-*

Posted 终回首

tags:

篇首语:本文由小常识网(cha138.com)小编为大家整理,主要介绍了Spark 报错 Failed to delete: C:UserslvaczAppDataLocalTempspark-*相关的知识,希望对你有一定的参考价值。

一、问题

在win10,local模式执行完spark任务后不论是否可以执行出结果,都会报错:

Failed to delete: C:\\Users\\lvacz\\AppData\\Local\\Temp\\spark-7921735f-07fa-45db-875e-5a6440eb7e79

部分日志:

21/05/26 13:01:34 WARN SparkEnv: Exception while deleting Spark temp dir: C:\\Users\\lvacz\\AppData\\Local\\Temp\\spark-7921735f-07fa-45db-875e-5a6440eb7e79\\userFiles-af608dcd-6f5c-44a3-a295-3667472e8936

java.io.IOException: Failed to delete: C:\\Users\\lvacz\\AppData\\Local\\Temp\\spark-7921735f-07fa-45db-875e-5a6440eb7e79\\userFiles-af608dcd-6f5c-44a3-a295-3667472e8936\\org.mongodb_mongo-java-driver-3.4.2.jar

at org.apache.spark.network.util.JavaUtils.deleteRecursivelyUsingJavaIO(JavaUtils.java:144)

at org.apache.spark.network.util.JavaUtils.deleteRecursively(JavaUtils.java:118)

at org.apache.spark.network.util.JavaUtils.deleteRecursivelyUsingJavaIO(JavaUtils.java:128)

at org.apache.spark.network.util.JavaUtils.deleteRecursively(JavaUtils.java:118)

at org.apache.spark.network.util.JavaUtils.deleteRecursively(JavaUtils.java:91)

at org.apache.spark.util.Utils$.deleteRecursively(Utils.scala:1062)

at org.apache.spark.SparkEnv.stop(SparkEnv.scala:103)

at org.apache.spark.SparkContext$$anonfun$stop$11.apply$mcV$sp(SparkContext.scala:1974)

at org.apache.spark.util.Utils$.tryLogNonFatalError(Utils.scala:1340)

at org.apache.spark.SparkContext.stop(SparkContext.scala:1973)

at org.apache.spark.SparkContext$$anonfun$2.apply$mcV$sp(SparkContext.scala:575)

at org.apache.spark.util.SparkShutdownHook.run(ShutdownHookManager.scala:216)

at org.apache.spark.util.SparkShutdownHookManager$$anonfun$runAll$1$$anonfun$apply$mcV$sp$1.apply$mcV$sp(ShutdownHookManager.scala:188)

at org.apache.spark.util.SparkShutdownHookManager$$anonfun$runAll$1$$anonfun$apply$mcV$sp$1.apply(ShutdownHookManager.scala:188)

at org.apache.spark.util.SparkShutdownHookManager$$anonfun$runAll$1$$anonfun$apply$mcV$sp$1.apply(ShutdownHookManager.scala:188)

at org.apache.spark.util.Utils$.logUncaughtExceptions(Utils.scala:1945)

at org.apache.spark.util.SparkShutdownHookManager$$anonfun$runAll$1.apply$mcV$sp(ShutdownHookManager.scala:188)

at org.apache.spark.util.SparkShutdownHookManager$$anonfun$runAll$1.apply(ShutdownHookManager.scala:188)

at org.apache.spark.util.SparkShutdownHookManager$$anonfun$runAll$1.apply(ShutdownHookManager.scala:188)

at scala.util.Try$.apply(Try.scala:192)

at org.apache.spark.util.SparkShutdownHookManager.runAll(ShutdownHookManager.scala:188)

at org.apache.spark.util.SparkShutdownHookManager$$anon$2.run(ShutdownHookManager.scala:178)

at org.apache.hadoop.util.ShutdownHookManager$1.run(ShutdownHookManager.java:54)

21/05/26 13:01:34 ERROR ShutdownHookManager: Exception while deleting Spark temp dir: C:\\Users\\lvacz\\AppData\\Local\\Temp\\spark-7921735f-07fa-45db-875e-5a6440eb7e79

java.io.IOException: Failed to delete: C:\\Users\\lvacz\\AppData\\Local\\Temp\\spark-7921735f-07fa-45db-875e-5a6440eb7e79\\userFiles-af608dcd-6f5c-44a3-a295-3667472e8936\\org.mongodb_mongo-java-driver-3.4.2.jar

at org.apache.spark.network.util.JavaUtils.deleteRecursivelyUsingJavaIO(JavaUtils.java:144)

at org.apache.spark.network.util.JavaUtils.deleteRecursively(JavaUtils.java:118)

at org.apache.spark.network.util.JavaUtils.deleteRecursivelyUsingJavaIO(JavaUtils.java:128)

at org.apache.spark.network.util.JavaUtils.deleteRecursively(JavaUtils.java:118)

at org.apache.spark.network.util.JavaUtils.deleteRecursivelyUsingJavaIO(JavaUtils.java:128)

at org.apache.spark.network.util.JavaUtils.deleteRecursively(JavaUtils.java:118)

at org.apache.spark.network.util.JavaUtils.deleteRecursively(JavaUtils.java:91)

at org.apache.spark.util.Utils$.deleteRecursively(Utils.scala:1062)

at org.apache.spark.util.ShutdownHookManager$$anonfun$1$$anonfun$apply$mcV$sp$3.apply(ShutdownHookManager.scala:65)

at org.apache.spark.util.ShutdownHookManager$$anonfun$1$$anonfun$apply$mcV$sp$3.apply(ShutdownHookManager.scala:62)

at scala.collection.IndexedSeqOptimized$class.foreach(IndexedSeqOptimized.scala:33)

at scala.collection.mutable.ArrayOps$ofRef.foreach(ArrayOps.scala:186)

at org.apache.spark.util.ShutdownHookManager$$anonfun$1.apply$mcV$sp(ShutdownHookManager.scala:62)

at org.apache.spark.util.SparkShutdownHook.run(ShutdownHookManager.scala:216)

at org.apache.spark.util.SparkShutdownHookManager$$anonfun$runAll$1$$anonfun$apply$mcV$sp$1.apply$mcV$sp(ShutdownHookManager.scala:188)

at org.apache.spark.util.SparkShutdownHookManager$$anonfun$runAll$1$$anonfun$apply$mcV$sp$1.apply(ShutdownHookManager.scala:188)

at org.apache.spark.util.SparkShutdownHookManager$$anonfun$runAll$1$$anonfun$apply$mcV$sp$1.apply(ShutdownHookManager.scala:188)

at org.apache.spark.util.Utils$.logUncaughtExceptions(Utils.scala:1945)

at org.apache.spark.util.SparkShutdownHookManager$$anonfun$runAll$1.apply$mcV$sp(ShutdownHookManager.scala:188)

at org.apache.spark.util.SparkShutdownHookManager$$anonfun$runAll$1.apply(ShutdownHookManager.scala:188)

at org.apache.spark.util.SparkShutdownHookManager$$anonfun$runAll$1.apply(ShutdownHookManager.scala:188)

at scala.util.Try$.apply(Try.scala:192)

at org.apache.spark.util.SparkShutdownHookManager.runAll(ShutdownHookManager.scala:188)

at org.apache.spark.util.SparkShutdownHookManager$$anon$2.run(ShutdownHookManager.scala:178)

at org.apache.hadoop.util.ShutdownHookManager$1.run(ShutdownHookManager.java:54)

21/05/26 13:01:34 ERROR ShutdownHookManager: Exception while deleting Spark temp dir: C:\\Users\\lvacz\\AppData\\Local\\Temp\\spark-7921735f-07fa-45db-875e-5a6440eb7e79\\userFiles-af608dcd-6f5c-44a3-a295-3667472e8936

java.io.IOException: Failed to delete: C:\\Users\\lvacz\\AppData\\Local\\Temp\\spark-7921735f-07fa-45db-875e-5a6440eb7e79\\userFiles-af608dcd-6f5c-44a3-a295-3667472e8936\\org.mongodb_mongo-java-driver-3.4.2.jar

at org.apache.spark.network.util.JavaUtils.deleteRecursivelyUsingJavaIO(JavaUtils.java:144)

at org.apache.spark.network.util.JavaUtils.deleteRecursively(JavaUtils.java:118)

at org.apache.spark.network.util.JavaUtils.deleteRecursivelyUsingJavaIO(JavaUtils.java:128)

at org.apache.spark.network.util.JavaUtils.deleteRecursively(JavaUtils.java:118)

at org.apache.spark.network.util.JavaUtils.deleteRecursively(JavaUtils.java:91)

at org.apache.spark.util.Utils$.deleteRecursively(Utils.scala:1062)

at org.apache.spark.util.ShutdownHookManager$$anonfun$1$$anonfun$apply$mcV$sp$3.apply(ShutdownHookManager.scala:65)

at org.apache.spark.util.ShutdownHookManager$$anonfun$1$$anonfun$apply$mcV$sp$3.apply(ShutdownHookManager.scala:62)

at scala.collection.IndexedSeqOptimized$class.foreach(IndexedSeqOptimized.scala:33)

at scala.collection.mutable.ArrayOps$ofRef.foreach(ArrayOps.scala:186)

at org.apache.spark.util.ShutdownHookManager$$anonfun$1.apply$mcV$sp(ShutdownHookManager.scala:62)

at org.apache.spark.util.SparkShutdownHook.run(ShutdownHookManager.scala:216)

at org.apache.spark.util.SparkShutdownHookManager$$anonfun$runAll$1$$anonfun$apply$mcV$sp$1.apply$mcV$sp(ShutdownHookManager.scala:188)

at org.apache.spark.util.SparkShutdownHookManager$$anonfun$runAll$1$$anonfun$apply$mcV$sp$1.apply(ShutdownHookManager.scala:188)

at org.apache.spark.util.SparkShutdownHookManager$$anonfun$runAll$1$$anonfun$apply$mcV$sp$1.apply(ShutdownHookManager.scala:188)

at org.apache.spark.util.Utils$.logUncaughtExceptions(Utils.scala:1945)

at org.apache.spark.util.SparkShutdownHookManager$$anonfun$runAll$1.apply$mcV$sp(ShutdownHookManager.scala:188)

at org.apache.spark.util.SparkShutdownHookManager$$anonfun$runAll$1.apply(ShutdownHookManager.scala:188)

at org.apache.spark.util.SparkShutdownHookManager$$anonfun$runAll$1.apply(ShutdownHookManager.scala:188)

at scala.util.Try$.apply(Try.scala:192)

at org.apache.spark.util.SparkShutdownHookManager.runAll(ShutdownHookManager.scala:188)

at org.apache.spark.util.SparkShutdownHookManager$$anon$2.run(ShutdownHookManager.scala:178)

at org.apache.hadoop.util.ShutdownHookManager$1.run(ShutdownHookManager.java:54)

二、解决

1 修改Spark日志配置

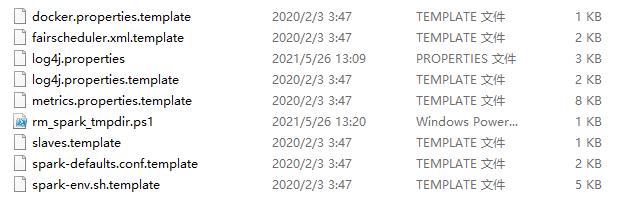

- 找到%SPARK_HOME%\\conf下的log4j.properties,如果没有log4j.properties,重命名log4j.properties.template为log4j.properties

- 用文本编辑器编辑log4j.properties

- 在文件末尾追加如下2行配置

log4j.logger.org.apache.spark.util.ShutdownHookManager=OFF

log4j.logger.org.apache.spark.SparkEnv=ERROR

再次执行就不会报错了,但存在一个问题,这个零时目录(C:\\Users\\lvacz\\AppData\\Local\\Temp)下spakr创建的以spark开头的临时文件会越来越多。

2 编写删除临时文件脚本

请注意路径里的lvacz是电脑的用户名,请替换为自己的对应路径

在%SPARK_HOME%\\conf目录创建powershell脚本,脚本名字为rm_spark_tmpdir.ps1

Remove-Item C:\\Users\\lvacz\\AppData\\Local\\Temp\\spark-* -Recurse -Force

鼠标移动到脚本文件名上 ——> 右键 ——> 点创建快捷方式

重命名创建好的快捷方式(非必须)

3 添加到开机启动

将上面创建的(如果重命名了就是重命名后的)快捷方式移动到如下路径

%APPDATA%\\Microsoft\\Windows\\Start Menu\\Programs\\Startup

以上是关于Spark 报错 Failed to delete: C:UserslvaczAppDataLocalTempspark-*的主要内容,如果未能解决你的问题,请参考以下文章