HBase Basics: Implementing DDL and DML with the Java API

Posted 杀智勇双全杀


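HBase Basics (Part 3): Implementing DDL and DML with the Java API

Overview

The previous post covered deploying an HBase cluster and trying a few commands in the HBase shell. In practice nobody inserts data into HBase by hand, one value at a time; that is far too slow to keep this giant orca fed.

Most of the time HBase is driven by a program that puts values. HBase itself is written in Java, so the Java API is naturally the most common way to call it.

Preparation

Create a Maven project

In IDEA, create a new Java project that uses Maven. This is routine, so no walkthrough here.

Start HBase

The startup order was covered in an earlier post: start HDFS, YARN and ZooKeeper first (in any order), confirm that all of them are up, and only then start HBase. Once it is running, execute: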

hbase shell

This opens a shell session, which is handy for verifying results later.

Configure Maven

Add the following to pom.xml:

<!-- <repositories>-->
<!--     <repository>-->
<!--         <id>aliyun</id>-->
<!--         <url>http://maven.aliyun.com/nexus/content/groups/public/</url>-->
<!--     </repository>-->
<!-- </repositories>-->
<properties>
    <hbase.version>2.1.2</hbase.version>
</properties>
<dependencies>
    <!-- HBase client dependency -->
    <dependency>
        <groupId>org.apache.hbase</groupId>
        <artifactId>hbase-client</artifactId>
        <version>${hbase.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hbase</groupId>
        <artifactId>hbase-server</artifactId>
        <version>${hbase.version}</version>
    </dependency>
    <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <version>4.12</version>
    </dependency>
</dependencies>

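As usual, either the Aliyun mirror (commented out above) or, ahem, a proxy will do for downloading the dependencies.

The exact minor version does not matter much for personal experiments.

Add Log4j configuration

Put a log4j.properties file in the project's resources directory; if you do not have one, create it with a plain-text editor: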

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# Define some default values that can be overridden by system properties

hbase.root.logger=INFO,console

hbase.security.logger=INFO,console

hbase.log.dir=.

hbase.log.file=hbase.log

hbase.log.level=INFO

# Define the root logger to the system property "hbase.root.logger".

log4j.rootLogger=${hbase.root.logger}

# Logging Threshold

log4j.threshold=ALL

#

# Daily Rolling File Appender

#

log4j.appender.DRFA=org.apache.log4j.DailyRollingFileAppender

log4j.appender.DRFA.File=${hbase.log.dir}/${hbase.log.file}

# Rollover at midnight

log4j.appender.DRFA.DatePattern=.yyyy-MM-dd

# 30-day backup

#log4j.appender.DRFA.MaxBackupIndex=30

log4j.appender.DRFA.layout=org.apache.log4j.PatternLayout

# Pattern format: Date LogLevel LoggerName LogMessage

log4j.appender.DRFA.layout.ConversionPattern=%d{ISO8601} %-5p [%t] %c{2}: %.1000m%n

# Rolling File Appender properties

hbase.log.maxfilesize=256MB

hbase.log.maxbackupindex=20

# Rolling File Appender

log4j.appender.RFA=org.apache.log4j.RollingFileAppender

log4j.appender.RFA.File=${hbase.log.dir}/${hbase.log.file}

log4j.appender.RFA.MaxFileSize=${hbase.log.maxfilesize}

log4j.appender.RFA.MaxBackupIndex=${hbase.log.maxbackupindex}

log4j.appender.RFA.layout=org.apache.log4j.PatternLayout

log4j.appender.RFA.layout.ConversionPattern=%d{ISO8601} %-5p [%t] %c{2}: %.1000m%n

#

# Security audit appender

#

hbase.security.log.file=SecurityAuth.audit

hbase.security.log.maxfilesize=256MB

hbase.security.log.maxbackupindex=20

log4j.appender.RFAS=org.apache.log4j.RollingFileAppender

log4j.appender.RFAS.File=${hbase.log.dir}/${hbase.security.log.file}

log4j.appender.RFAS.MaxFileSize=${hbase.security.log.maxfilesize}

log4j.appender.RFAS.MaxBackupIndex=${hbase.security.log.maxbackupindex}

log4j.appender.RFAS.layout=org.apache.log4j.PatternLayout

log4j.appender.RFAS.layout.ConversionPattern=%d{ISO8601} %p %c: %.1000m%n

log4j.category.SecurityLogger=${hbase.security.logger}

log4j.additivity.SecurityLogger=false

#log4j.logger.SecurityLogger.org.apache.hadoop.hbase.security.access.AccessController=TRACE

#log4j.logger.SecurityLogger.org.apache.hadoop.hbase.security.visibility.VisibilityController=TRACE

#

# Null Appender

#

log4j.appender.NullAppender=org.apache.log4j.varia.NullAppender

#

# console

# Add "console" to rootlogger above if you want to use this

#

log4j.appender.console=org.apache.log4j.ConsoleAppender

log4j.appender.console.target=System.err

log4j.appender.console.layout=org.apache.log4j.PatternLayout

log4j.appender.console.layout.ConversionPattern=%d{ISO8601} %-5p [%t] %c{2}: %.1000m%n

log4j.appender.asyncconsole=org.apache.hadoop.hbase.AsyncConsoleAppender

log4j.appender.asyncconsole.target=System.err

# Custom Logging levels

log4j.logger.org.apache.zookeeper=${hbase.log.level}

#log4j.logger.org.apache.hadoop.fs.FSNamesystem=DEBUG

log4j.logger.org.apache.hadoop.hbase=${hbase.log.level}

log4j.logger.org.apache.hadoop.hbase.META=${hbase.log.level}

# Make these two classes INFO-level. Make them DEBUG to see more zk debug.

log4j.logger.org.apache.hadoop.hbase.zookeeper.ZKUtil=${hbase.log.level}

log4j.logger.org.apache.hadoop.hbase.zookeeper.ZKWatcher=${hbase.log.level}

#log4j.logger.org.apache.hadoop.dfs=DEBUG

# Set this class to log INFO only otherwise its OTT

# Enable this to get detailed connection error/retry logging.

# log4j.logger.org.apache.hadoop.hbase.client.ConnectionImplementation=TRACE

# Uncomment this line to enable tracing on _every_ RPC call (this can be a lot of output)

#log4j.logger.org.apache.hadoop.ipc.HBaseServer.trace=DEBUG

# Uncomment the below if you want to remove logging of client region caching

# and scan of hbase:meta messages

# log4j.logger.org.apache.hadoop.hbase.client.ConnectionImplementation=INFO

# EventCounter

# Add "EventCounter" to rootlogger if you want to use this

# Uncomment the line below to add EventCounter information

# log4j.appender.EventCounter=org.apache.hadoop.log.metrics.EventCounter

# Prevent metrics subsystem start/stop messages (HBASE-17722)

log4j.logger.org.apache.hadoop.metrics2.impl.MetricsConfig=WARN

log4j.logger.org.apache.hadoop.metrics2.impl.MetricsSinkAdapter=WARN

log4j.logger.org.apache.hadoop.metrics2.impl.MetricsSystemImpl=WARN

This file only configures log output; the code still runs without it.

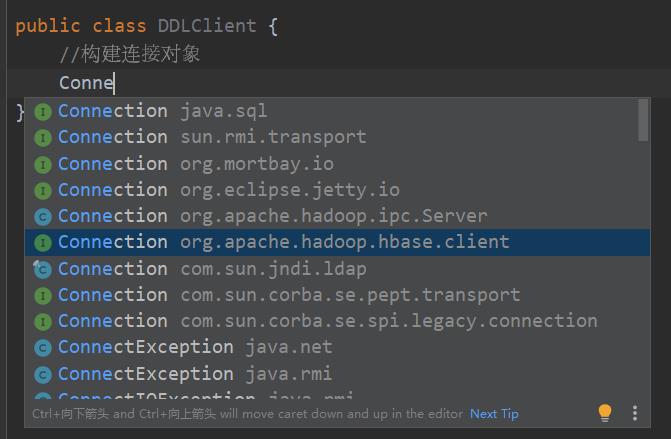

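Build the connection

As before, the first step is to build a connection. Watch the imports: Connection must be the HBase one (org.apache.hadoop.hbase.client.Connection); importing the java.sql one by mistake causes errors.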

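import java.io.IOException;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.HBaseAdmin;
import org.junit.Before;

// Connection object shared by the tests
Connection connection = null;

// Build the connection before each test
@Before
public void getConnection() throws IOException {
    // Build the configuration object
    Configuration configuration = HBaseConfiguration.create(); // returns an org.apache.hadoop.conf.Configuration
    // Every HBase client has to connect to ZooKeeper before it can reach the HBase servers
    configuration.set("hbase.zookeeper.quorum", "node1:2181,node2:2181,node3:2181");
    // Build the connection
    connection = ConnectionFactory.createConnection(configuration);
}

// DDL goes through an admin object, so obtain one first
public HBaseAdmin getHbaseAdmin() throws IOException {
    // getAdmin() returns the Admin interface; the cast matches the HBase 2.1.2 client used here
    HBaseAdmin hBaseAdmin = (HBaseAdmin) connection.getAdmin();
    return hBaseAdmin;
}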

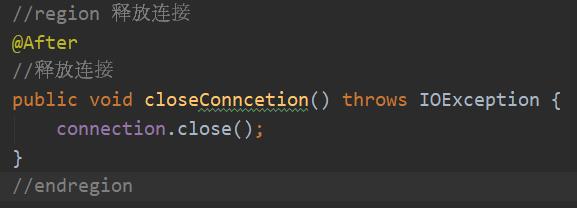
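The hbase.zookeeper.quorum value used above is a comma-separated list of host:port entries. As a rough way to sanity-check such a string before handing it to HBase, the sketch below splits it with the JDK alone. QuorumParser and its method are hypothetical helper names, not part of the HBase API, and falling back to port 2181 when an entry has no port is an assumption based on ZooKeeper's conventional client port.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper (not part of HBase): normalizes a quorum string into host:port entries. */
final class QuorumParser {
    // Assumption: entries without a port use ZooKeeper's conventional client port.
    static final int DEFAULT_PORT = 2181;

    static List<String> parse(String quorum) {
        List<String> servers = new ArrayList<>();
        for (String entry : quorum.split(",")) {
            String trimmed = entry.trim();
            if (trimmed.isEmpty()) {
                continue; // tolerate stray commas
            }
            int colon = trimmed.lastIndexOf(':');
            String host = colon < 0 ? trimmed : trimmed.substring(0, colon);
            int port = colon < 0 ? DEFAULT_PORT : Integer.parseInt(trimmed.substring(colon + 1));
            if (host.isEmpty() || port < 1 || port > 65535) {
                throw new IllegalArgumentException("Bad quorum entry: " + entry);
            }
            servers.add(host + ":" + port);
        }
        return servers;
    }
}
```

For example, QuorumParser.parse("node1, node2") yields the entries node1:2181 and node2:2181, while a malformed port raises an IllegalArgumentException.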

Release the connection

Write the cleanup method up front as well:

// Release the connection after each test
@After
public void closeConnection() throws IOException {
    connection.close();
}
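Connection, Admin and Table all implement java.io.Closeable, so an alternative to calling close() by hand (which is skipped if an earlier line throws) is to open them in a try-with-resources statement. The sketch below shows only the mechanics, using a stand-in resource; FakeResource and TryWithResourcesDemo are hypothetical names, and no HBase classes are involved.

```java
import java.util.ArrayList;
import java.util.List;

/** Stand-in for a closeable client object; hypothetical, it only records events. */
final class FakeResource implements AutoCloseable {
    private final String name;
    private final List<String> log;

    FakeResource(String name, List<String> log) {
        this.name = name;
        this.log = log;
        log.add("open " + name);
    }

    @Override
    public void close() {
        log.add("close " + name);
    }
}

final class TryWithResourcesDemo {
    /** Opens two resources, fails midway, and returns the recorded order of events. */
    static List<String> run() {
        List<String> log = new ArrayList<>();
        try (FakeResource connection = new FakeResource("connection", log);
             FakeResource admin = new FakeResource("admin", log)) {
            log.add("work");
            throw new IllegalStateException("simulated failure");
        } catch (IllegalStateException e) {
            // Both resources are already closed when control reaches this point.
            log.add("caught");
        }
        return log;
    }
}
```

The recorded order shows that resources are closed in reverse order of opening, and that this happens even though the body threw.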

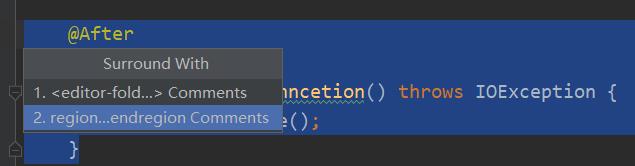

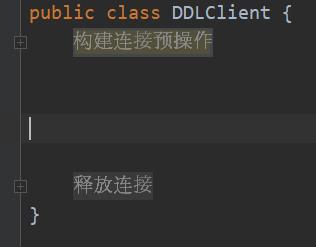

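Visual Studio can fold code sections with #region and #endregion, and today I found out by accident that IDEA can too. After pressing Ctrl+Alt+T:

It turns out that IDEA folds any code placed between //region and //endregion comments.

The unit tests that follow can go between such markers. With this boilerplate folded away, the file is much more comfortable to work in.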

DDL

Build the admin object

Already done: see getHbaseAdmin() above.

Namespace operations

List

//region List all namespaces
@Test
public void listNS() throws IOException {
    // Get the admin object
    HBaseAdmin hBaseAdmin = getHbaseAdmin();
    // List the namespaces
    NamespaceDescriptor[] namespaceDescriptors = hBaseAdmin.listNamespaceDescriptors();
    // Print each namespace
    for (NamespaceDescriptor namespaceDescriptor : namespaceDescriptors) {
        System.out.println("namespaceDescriptor.getName() = " + namespaceDescriptor.getName());
    }
    // Release the admin object
    hBaseAdmin.close();
}
//endregion

The run prints output like this:

namespaceDescriptor.getName() = default

2021-05-25 18:50:48,003 INFO [main] client.ConnectionImplementation: Closing master protocol: MasterService

namespaceDescriptor.getName() = hbase

namespaceDescriptor.getName() = test210524

2021-05-25 18:50:48,008 INFO [ReadOnlyZKClient-node1:2181,node2:2181,node3:2181@0x3c9d0b9d] zookeeper.ZooKeeper: Session: 0x379a32020f60003 closed

Process finished with exit code 0

In hbase shell:

hbase(main):001:0> list_namespace

NAMESPACE

default

hbase

test210524

3 row(s)

Took 0.6682 seconds

The two lists match, so it works.

Create

//region Create a namespace
@Test
public void createNS() throws IOException {
    // Get the admin object
    HBaseAdmin hBaseAdmin = getHbaseAdmin();
    // Build the namespace descriptor
    NamespaceDescriptor descriptor = NamespaceDescriptor
            .create("test210525") // namespace name
            .build();
    // Create the namespace
    hBaseAdmin.createNamespace(descriptor);
    // Release the admin object
    hBaseAdmin.close();
}
//endregion

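In a chained (fluent) call, the whole chain does not have to sit on one line: each .method() call can go on its own line, and each line can carry a trailing comment, because the compiler ignores comments and line breaks.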
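To see why the line breaks are harmless, here is a minimal, self-contained fluent builder. NamespaceSpec and its Builder are hypothetical stand-ins, not HBase classes; the only point is that each setter returns the builder itself, which is what allows the calls to chain.

```java
/** Hypothetical value class built through a fluent builder (not an HBase type). */
final class NamespaceSpec {
    final String name;
    final String owner;

    private NamespaceSpec(String name, String owner) {
        this.name = name;
        this.owner = owner;
    }

    static Builder create(String name) {
        return new Builder(name);
    }

    static final class Builder {
        private final String name;
        private String owner = "unknown";

        private Builder(String name) {
            this.name = name;
        }

        Builder owner(String owner) {
            this.owner = owner;
            return this; // returning this is what enables chaining
        }

        NamespaceSpec build() {
            return new NamespaceSpec(name, owner);
        }
    }

    /** One chain spread over several lines, each with a trailing comment. */
    static NamespaceSpec example() {
        return NamespaceSpec
                .create("test210525") // namespace name
                .owner("demo")        // optional attribute (illustrative only)
                .build();             // produce the immutable result
    }
}
```

Writing the same chain on a single line compiles to the same thing; the multi-line form just leaves room for the comments.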

hbase(main):005:0> list_namespace

NAMESPACE

default

hbase

test210524

test210525

4 row(s)

Took 0.0120 seconds

The namespace was created successfully.

Delete

//region Delete a namespace
@Test
public void deleteNS() throws IOException {
    // Get the admin object
    HBaseAdmin hBaseAdmin = getHbaseAdmin();
    // Delete the namespace
    hBaseAdmin.deleteNamespace("test210525");
    // Release the admin object
    hBaseAdmin.close();
}
//endregion

Check in the shell:

hbase(main):006:0> list_namespace

NAMESPACE

default

hbase

test210524

3 row(s)

Took 0.0183 seconds

The namespace has been deleted.

Table operations

Run the namespace-creation test once more to restore test210525; it is used below.

List

//region List all tables
@Test
public void listTable() throws IOException {
    HBaseAdmin hBaseAdmin = getHbaseAdmin();
    // List the tables
    //List<TableDescriptor> tableDescriptors = hBaseAdmin.listTableDescriptors();
    HTableDescriptor[] tableDescriptors = hBaseAdmin.listTableDescriptorsByNamespace("test210524");
    // Iterate and print
    for (TableDescriptor tableDescriptor : tableDescriptors) {
        System.out.println("tableDescriptor.getTableName().getNameAsString() = "
                + tableDescriptor.getTableName().getNameAsString());
    }
    hBaseAdmin.close();
}
//endregion

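It then occurred to me that no table had ever been created in the default namespace. To make testing easier, switch to a different method for now and target the namespace created yesterday.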

hbase(main):009:0> list_namespace_tables 'test210524'

TABLE

t1

1 row(s)

Took 0.0427 seconds

=> ["t1"]

tableDescriptor.getTableName().getNameAsString() = test210524:t1

2021-05-25 20:13:17,599 INFO [main] client.ConnectionImplementation: Closing master protocol: MasterService

2021-05-25 20:13:17,603 INFO [ReadOnlyZKClient-node1:2181,node2:2181,node3:2181@0x2f112965] zookeeper.ZooKeeper: Session: 0x379a32020f60005 closed

2021-05-25 20:13:17,605 INFO [ReadOnlyZKClient-node1:2181,node2:2181,node3:2181@0x2f112965-EventThread] zookeeper.ClientCnxn: EventThread shut down for session: 0x379a32020f60005

Process finished with exit code 0

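The results match. Note that this method is deprecated (although it still works); the commented-out listTableDescriptors() call works as well: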

tableDescriptor.getTableName().getNameAsString() = test210524:t1

2021-05-25 20:21:17,426 INFO [main] client.ConnectionImplementation: Closing master protocol: MasterService

2021-05-25 20:21:17,430 INFO [ReadOnlyZKClient-node1:2181,node2:2181,node3:2181@0x3c9d0b9d] zookeeper.ZooKeeper: Session: 0x179a32021190006 closed

Process finished with exit code 0
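Both runs print test210524:t1, that is, the table name qualified by its namespace. The sketch below mimics that naming convention with plain string handling. QualifiedName is a hypothetical helper (HBase's own TableName class does the real parsing), and it assumes that a name without a colon belongs to the default namespace and is printed without a prefix.

```java
/** Hypothetical helper (not HBase's TableName): splits "namespace:table" names. */
final class QualifiedName {
    // Assumption: unqualified names live in the "default" namespace.
    static final String DEFAULT_NAMESPACE = "default";

    final String namespace;
    final String table;

    private QualifiedName(String namespace, String table) {
        this.namespace = namespace;
        this.table = table;
    }

    static QualifiedName parse(String fullName) {
        int colon = fullName.indexOf(':');
        String ns = colon < 0 ? DEFAULT_NAMESPACE : fullName.substring(0, colon);
        String tbl = fullName.substring(colon + 1); // whole string when there is no colon
        if (ns.isEmpty() || tbl.isEmpty() || tbl.indexOf(':') >= 0) {
            throw new IllegalArgumentException("Bad table name: " + fullName);
        }
        return new QualifiedName(ns, tbl);
    }

    /** Renders the name, omitting the prefix for the default namespace. */
    String asString() {
        return DEFAULT_NAMESPACE.equals(namespace) ? table : namespace + ":" + table;
    }
}
```

With this sketch, parsing "test210524:t1" gives namespace test210524 and table t1, while parsing "t1" alone gives namespace default.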

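Create and delete

Before creating a table, check whether one with that name already exists: creating a table whose name is already taken fails with a TableExistsException. To avoid that, delete the existing table first, and a table has to be disabled before it can be deleted. It takes a few steps to get right.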

//region Create and delete a table
// If a table with the same name exists, disable and delete it before creating
@Test
public void createTable() throws IOException {
    HBaseAdmin hBaseAdmin = getHbaseAdmin();
    // Name of the table to operate on
    TableName tableName = TableName.valueOf("test210525:testTable1");
    // Check whether it already exists
    if (hBaseAdmin.tableExists(tableName)) {
        System.out.println("已经存在同名表"); // "a table with the same name already exists"
        // Disable it
        hBaseAdmin.disableTable(tableName);
        System.out.println("已经禁用该同名表"); // "the existing table has been disabled"
        // Delete it
        hBaseAdmin.deleteTable(tableName);
        System.out.println("已经删除该同名表"); // "the existing table has been deleted"
    }
    // Build the column family descriptors
    ColumnFamilyDescriptor cf1 = ColumnFamilyDescriptorBuilder
            .newBuilder(Bytes.toBytes("cf1")) // column family name
            .setMaxVersions(3)                // maximum number of versions
            .build();
    ColumnFamilyDescriptor cf2 = ColumnFamilyDescriptorBuilder
            .newBuilder(Bytes.toBytes("cf2")) // column family name
            .build();
    // Build the table descriptor
    TableDescriptor descriptor = TableDescriptorBuilder
            .newBuilder(tableName)  // name of the table to create
            .setColumnFamily(cf1)   // first column family
            .setColumnFamily(cf2)   // second column family
            .build();
    // Create the table
    hBaseAdmin.createTable(descriptor);
    hBaseAdmin.close();
}
//endregion

First run:

2021-05-25 20:45:06,916 INFO [ReadOnlyZKClient-node1:2181,node2:2181,node3:2181@0x3c9d0b9d-EventThread] zookeeper.ClientCnxn: EventThread shut down for session: 0x379a32020f60006

Process finished with exit code 0

hbase(main):010:0> list_namespace_tables 'test210525'

TABLE

testTable1

1 row(s)

Took 0.0257 seconds

=> ["testTable1"]

Second run:

已经存在同名表

2021-05-25 20:49:06,609 INFO [main] client.HBaseAdmin: Started disable of test210525:testTable1

2021-05-25 20:49:07,088 INFO [main] client.HBaseAdmin: Operation: DISABLE, Table Name: test210525:testTable1, procId: 14 completed

已经禁用该同名表

2021-05-25 20:49:07,541 INFO [main] client.HBaseAdmin: Operation: DELETE, Table Name: test210525:testTable1, procId: 16 completed

已经删除该同名表
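The three messages in that log follow the exists, disable, delete ordering that the test enforces. The toy model below reproduces the rule in memory so the control flow can be followed without a cluster. TableRegistry is hypothetical and greatly simplified; it is not how the HBase master tracks table state.

```java
import java.util.HashMap;
import java.util.Map;

/** Toy in-memory model (hypothetical) of the "disable before delete" rule. */
final class TableRegistry {
    // table name -> whether the table is currently enabled
    private final Map<String, Boolean> enabledByTable = new HashMap<>();

    boolean exists(String table) {
        return enabledByTable.containsKey(table);
    }

    void create(String table) {
        if (exists(table)) {
            throw new IllegalStateException("Table already exists: " + table);
        }
        enabledByTable.put(table, Boolean.TRUE); // new tables start out enabled
    }

    void disable(String table) {
        requireExists(table);
        enabledByTable.put(table, Boolean.FALSE);
    }

    void delete(String table) {
        requireExists(table);
        if (enabledByTable.get(table)) {
            throw new IllegalStateException("Disable the table before deleting it: " + table);
        }
        enabledByTable.remove(table);
    }

    /** Same flow as the test above; returns true if an existing table was replaced. */
    boolean createOrReplace(String table) {
        boolean replaced = exists(table);
        if (replaced) {
            disable(table);
            delete(table);
        }
        create(table);
        return replaced;
    }

    private void requireExists(String table) {
        if (!exists(table)) {
            throw new IllegalStateException("No such table: " + table);
        }
    }
}
```

Calling createOrReplace twice with the same name returns false the first time and true the second, mirroring the first and second runs shown above.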

DML

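DML follows much the same pattern, so: talk is cheap, show me the code.

Build the table object

Write this part in a separate class, and copy the connection setup and cleanup methods over as they are.

All DML operations go through a Table object, so obtain one first: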

// All DML operations go through a Table object
public Table getHbaseTable() throws IOException {
    // Get the table object
    Table table = connection.getTable(TableName.valueOf("test210525:testTable1"));
    return table;
}

put

First check the table's current contents:

hbase(main):012:0> scan 'test210525:testTable1'

ROW COLUMN+CELL

0 row(s)

Took 0.1825 seconds

Run:

//region put
@Test
public void putData() throws IOException {
    // Get the table object
    Table table = getHbaseTable();
    // Build the Put object for one row key
    Put put = new Put(Bytes.toBytes("20210525_001"));
    // Specify column family, column qualifier and value
    put.addColumn(Bytes.toBytes("cf1"), Bytes.toBytes("name"), Bytes.toBytes("张三"));
    //put.addColumn(Bytes.toBytes("cf1"), Bytes.toBytes("name"), Bytes.toBytes("李四"));
    //put.addColumn(Bytes.toBytes("cf1"), Bytes.toBytes("name"), Bytes.toBytes("王五"));
    put.addColumn(Bytes.toBytes("cf2"), Bytes.toBytes("age"), Bytes.toBytes("20"));
    //put.addColumn(Bytes.toBytes("cf2"), Bytes.toBytes("age"), Bytes.toBytes("25"));
    // Execute the put
    table.put(put);
    // Release the table object
    table.close();
}
//endregion

Check again:

hbase(main):013:0> scan 'test210525:testTable1'

ROW COLUMN+CELL

20210525_001 column=cf1:name, timestamp=1621948744065, value=\xE5\xBC\xA0\xE4\xB8\x89

20210525_001 column=cf2:age, timestamp=1621948744065, value=20

1 row(s)

Took 0.0373 seconds
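The value of cf1:name looks garbled only because hbase shell prints non-ASCII bytes as hexadecimal escapes; the bytes themselves are the UTF-8 encoding of the string that was put. The sketch below decodes such an escaped value with the JDK alone. ShellValueDecoder is a hypothetical helper, and it assumes the value was written as UTF-8 text, which is what Bytes.toBytes(String) produces.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper: turns shell-style \xNN escapes back into a UTF-8 string. */
final class ShellValueDecoder {
    static String decode(String escaped) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        int i = 0;
        while (i < escaped.length()) {
            char c = escaped.charAt(i);
            // A hex escape is a backslash, an 'x', and two hex digits.
            boolean isHexEscape = c == '\\'
                    && i + 3 < escaped.length()
                    && escaped.charAt(i + 1) == 'x';
            if (isHexEscape) {
                bytes.write(Integer.parseInt(escaped.substring(i + 2, i + 4), 16));
                i += 4;
            } else {
                bytes.write(c); // assumption: unescaped characters are plain ASCII
                i++;
            }
        }
        return new String(bytes.toByteArray(), StandardCharsets.UTF_8);
    }
}
```

Feeding it the cf1:name value from the scan returns the original two-character name, and the cf2:age value "20" passes through unchanged because it is already ASCII.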

Since the maximum number of versions for column family cf1 was set to 3 earlier, change the value to "李四" and run the test again.
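What a maximum of 3 versions means can be illustrated with a toy in-memory cell that keeps only the newest three values by timestamp. VersionedCell is hypothetical and leaves out everything HBase actually does with versions (flushes, compactions, TTL); it only demonstrates the retention rule.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.TreeMap;

/** Toy model (hypothetical) of one cell that retains at most maxVersions values. */
final class VersionedCell {
    private final int maxVersions;
    // Sorted newest-first, so the first entry is the latest version.
    private final TreeMap<Long, String> versions = new TreeMap<>(Comparator.reverseOrder());

    VersionedCell(int maxVersions) {
        if (maxVersions < 1) {
            throw new IllegalArgumentException("maxVersions must be at least 1");
        }
        this.maxVersions = maxVersions;
    }

    void put(long timestamp, String value) {
        versions.put(timestamp, value);
        while (versions.size() > maxVersions) {
            versions.pollLastEntry(); // last entry holds the oldest timestamp
        }
    }

    /** Returns the newest value, or null when nothing has been written. */
    String latest() {
        return versions.isEmpty() ? null : versions.firstEntry().getValue();
    }

    /** Returns the retained values, newest first. */
    List<String> allVersions() {
        return new ArrayList<>(versions.values());
    }
}
```

After four puts into a cell created with a limit of 3, only the three newest values remain and latest() returns the fourth. On the real table, a shell command such as scan 'test210525:testTable1', {VERSIONS => 3} should show the retained versions of each cell.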
