Extra 3: Affective Computing and Emotion Recognition

Posted by oldmao_2001



I am revisiting NLP and recording some topics as "extras". This section implements a simple affective computing and emotion recognition model.


Example code

Dataset

The ISEAR dataset is used here; a download link can be found by searching on GitHub.

```text
joy,"On days when I feel close to my partner and other friends.
When I feel at peace with myself and also experience a close
contact with people whom I regard greatly.",
fear,"Every time I imagine that someone I love or I could contact a
serious illness, even death.",
anger,"When I had been obviously unjustly treated and had no possibility
of elucidating this.",
sadness,"When I think about the short time that we live and relate it to
the periods of my life when I think that I did not use this
short time.",
disgust,"At a gathering I found myself involuntarily sitting next to two
people who expressed opinions that I considered very low and
discriminating.",
shame,"When I realized that I was directing the feelings of discontent
with myself at my partner and this way was trying to put the blame
on him instead of sorting out my own feeliings.",
guilt,"I feel guilty when when I realize that I consider material things
more important than caring for my relatives. I feel very
self-centered.",
joy,"After my girlfriend had taken her exam we went to her parent's
place.",
fear,"When, for the first time I realized the meaning of death.",
```

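The first column is the emotion label and the second column is the text. The sample covers the seven ISEAR emotions: joy, fear, anger, sadness, disgust, shame and guilt.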

Code

Reading the data

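```python
import pandas as pd
import numpy as np

# Standard way to read a CSV. The file has no header row, so the columns are numbered 0, 1, ...
# Note: ISEAR.csv must be in the same folder as this script (or pass its full path).
data = pd.read_csv('ISEAR.csv', header=None)

data.head()  # show the first five rows
```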
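To see what `header=None` does with this layout, the sketch below parses two of the sample rows shown above from an in-memory string instead of `ISEAR.csv`. It assumes the real file uses straight double quotes (the curly quotes in the sample are likely a rendering artifact), so a quoted field may span several lines. Because each sample row ends with a trailing comma, pandas also creates an empty column 2; the rest of the code only uses columns 0 and 1.

```python
import io

import pandas as pd

# Two rows copied from the sample above (assumption: straight double quotes in the real file)
csv_text = '''joy,"On days when I feel close to my partner and other friends.
When I feel at peace with myself and also experience a close
contact with people whom I regard greatly.",
fear,"When, for the first time I realized the meaning of death.",
'''

# header=None: no header row, so columns are numbered 0 (label), 1 (text), 2 (empty, from the trailing comma)
data = pd.read_csv(io.StringIO(csv_text), header=None)
print(data[0].tolist())  # ['joy', 'fear']
print(data.iloc[1, 1])   # the comma inside the quotes is kept as part of the text
```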

Splitting into training and test data

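```python
from sklearn.model_selection import train_test_split

labels = data[0].values.tolist()  # first column: emotion labels
sents = data[1].values.tolist()   # second column: sentences

# Hold out 20% of the data as the test set.
# random_state fixes the random seed so the split is identical on every run.
# Any integer (including 0) is reproducible; only omitting it (None) gives a different split each time.
X_train, X_test, y_train, y_test = train_test_split(sents, labels, test_size=0.2, random_state=5)
```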
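A quick check of the `random_state` behavior described in the comments: the sketch uses ten hypothetical placeholder sentences instead of the ISEAR data and shows that repeating a call with the same seed (5, or even 0) yields exactly the same split.

```python
from sklearn.model_selection import train_test_split

# Hypothetical stand-ins for sents/labels, for illustration only
sents = [f"sentence {i}" for i in range(10)]
labels = ["joy", "fear"] * 5

# Same seed -> identical split on every call
a = train_test_split(sents, labels, test_size=0.2, random_state=5)
b = train_test_split(sents, labels, test_size=0.2, random_state=5)
print(a == b)  # True

# random_state=0 is also a fixed seed, so it is just as reproducible
c = train_test_split(sents, labels, test_size=0.2, random_state=0)
d = train_test_split(sents, labels, test_size=0.2, random_state=0)
print(c == d)  # True

X_train, X_test, y_train, y_test = a
print(len(X_train), len(X_test))  # 8 2
```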

Feature extraction: scikit-learn provides bag-of-words models, and here TF-IDF features are extracted.

$tf(t,d)$ is the term frequency: the number of times word $t$ occurs in document $d$. The larger $tf(t,d)$ is, the more often $t$ occurs in $d$.

$df(d,t)$ is the total number of documents (here, sentences) that contain word $t$, and $n_d$ is the total number of documents.

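$idf(t)$ refines the raw frequency $tf(t,d)$, under which a word counts as more important the more often it occurs in a document or sentence. Besides how often a word occurs in the text itself, it also considers how often the word occurs across texts in general: a word that shows up in almost every text provides little information for classification (the more documents it appears in, the less important it is). Typical examples are pronouns and function words, such as the Chinese particles "的", "地" and "得".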

The smoothed formula for $idf(t)$ is:

$$idf(t)=\log\frac{n_d+1}{df(d,t)+1}$$
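As a small worked example, suppose (hypothetically) there are $n_d = 4$ sentences and $\log$ is the natural logarithm. A word that occurs in only one sentence gets a positive weight, while a word that occurs in all four sentences gets a weight of zero:

$$idf(t_{\text{rare}})=\log\frac{4+1}{1+1}=\log 2.5\approx 0.916,\qquad idf(t_{\text{common}})=\log\frac{4+1}{4+1}=\log 1=0$$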

Finally, the two are combined:

$$tfidf(t,d)=tf(t,d)\times idf(t)$$

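```python
from sklearn.feature_extraction.text import TfidfVectorizer

vectorizer = TfidfVectorizer()
# X_train starts as a list of sentences. fit_transform learns the vocabulary and idf weights and returns
# a sparse matrix of shape (number of sentences x vocabulary size) holding the tf-idf values.
X_train = vectorizer.fit_transform(X_train)
# Use transform (not fit_transform) on the test set: only the training data is used for fitting.
X_test = vectorizer.transform(X_test)
```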

Other features could also be used here, such as part-of-speech tags or n-grams.
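For the n-gram option, `TfidfVectorizer` accepts an `ngram_range` parameter, so `TfidfVectorizer(ngram_range=(1, 2))` could stand in for the plain `TfidfVectorizer()` above to add word bigrams. The sketch below fits it on one hypothetical sentence to show the resulting vocabulary; note that the single-character word "I" is dropped by the default tokenizer. Part-of-speech features would need an external tagger and are not shown.

```python
from sklearn.feature_extraction.text import TfidfVectorizer

# Hypothetical example sentence
sents = ["I feel close to my partner"]

vec = TfidfVectorizer(ngram_range=(1, 2))  # unigrams and bigrams
vec.fit(sents)
print(sorted(vec.vocabulary_))
# ['close', 'close to', 'feel', 'feel close', 'my', 'my partner', 'partner', 'to', 'to my']
```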

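With the features extracted, the model can be trained. Logistic regression is used here; for its parameters, see the official documentation: https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.LogisticRegression.html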

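```python
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV

# Candidate values of the regularization parameter C, compared by cross-validation
parameters = {'C': [0.00001, 0.0001, 0.001, 0.005, 0.01, 0.05, 0.1, 0.5, 1, 2, 5, 10]}

lr = LogisticRegression()  # create the model (default C=1.0)
print(lr.fit(X_train, y_train).score(X_test, y_test))  # train, then report test-set accuracy

clf = GridSearchCV(lr, parameters, cv=10)  # cv = cross-validation with 10 folds
clf.fit(X_train, y_train)
print(clf.score(X_test, y_test))  # test accuracy of the model refit with the best C

print(clf.best_params_)  # best hyperparameter found by cross-validation; in the author's run, C was 2
```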
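To make the grid search less of a black box, the sketch below does by hand what `GridSearchCV` does for each candidate `C`: compute the mean accuracy over k folds with `cross_val_score`, then pick the best value. It uses synthetic data from `make_classification` (an assumption standing in for the tf-idf features) and a shorter grid with 5 folds to keep it quick.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, cross_val_score

# Synthetic 3-class data standing in for the tf-idf features (not the ISEAR data)
X, y = make_classification(n_samples=200, n_features=20, n_informative=5,
                           n_classes=3, random_state=0)
C_values = [0.01, 0.1, 1, 10]

# For each candidate C: mean accuracy over 5 cross-validation folds
manual_scores = [cross_val_score(LogisticRegression(C=C), X, y, cv=5).mean() for C in C_values]
best_C = C_values[int(np.argmax(manual_scores))]
print(best_C)

# GridSearchCV evaluates the same folds, then refits on all the data with the best C
clf = GridSearchCV(LogisticRegression(), {"C": C_values}, cv=5).fit(X, y)
print(clf.best_params_)
```

The per-candidate mean scores are also available afterwards in `clf.cv_results_["mean_test_score"]`, which is handy for checking whether the best `C` sits at the edge of the grid.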

Printing the confusion matrix of the predictions

from sklearn.metrics import confusion_matrix

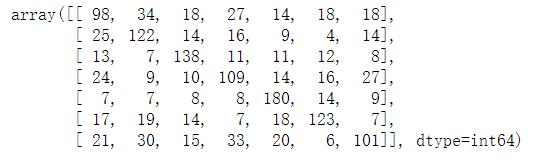

confusion_matrix(y_test, clf.predict(X_test))

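The confusion matrix has size (number of classes) × (number of classes). In scikit-learn, each row corresponds to a true class and each column to a predicted class, with the classes in sorted order unless `labels` is given. Diagonal entries count the samples of each class that were classified correctly; an off-diagonal entry counts the samples of the row's true class that were misclassified as the column's class.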
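Reading the matrix on a tiny hypothetical example: the sketch builds a confusion matrix for three emotions with made-up true and predicted labels, then derives per-class recall (diagonal divided by row sum) and overall accuracy (trace divided by total).

```python
import numpy as np
from sklearn.metrics import confusion_matrix

# Hypothetical true and predicted labels
y_true = ["joy", "fear", "joy", "anger", "fear", "joy"]
y_pred = ["joy", "joy", "joy", "anger", "fear", "fear"]

labels = ["anger", "fear", "joy"]  # same as scikit-learn's default sorted order
cm = confusion_matrix(y_true, y_pred, labels=labels)
print(cm)
# [[1 0 0]
#  [0 1 1]
#  [0 1 2]]

per_class = cm.diagonal() / cm.sum(axis=1)  # recall per class: correct / all samples of that class
accuracy = cm.trace() / cm.sum()            # overall accuracy: all correct / all samples
print(dict(zip(labels, per_class)), accuracy)
```

For the trained model, passing `labels=clf.classes_` to `confusion_matrix` makes the row and column order explicit.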
