Deep Learning Series 15: TensorRT Basics

Posted IE06


Preface: This article was compiled by the editors of 小常识网 (cha138.com) and introduces the basics of TensorRT.

Official TensorRT repository: https://github.com/NVIDIA/TensorRT (fetch it with git clone).

1. Converting ONNX to TensorRT

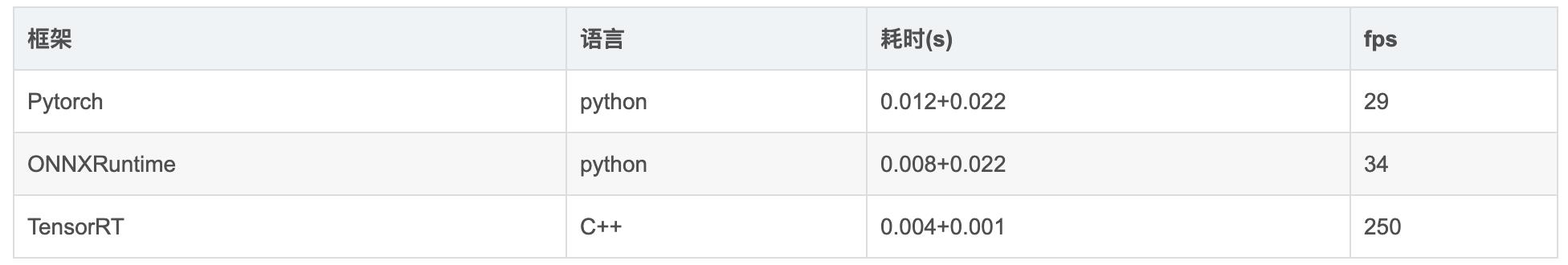

首先放一张对比图:

In the official TensorRT package, locate the trtexec executable (it ships in the package's bin directory) and run the following command:

trtexec --onnx=onnx-modifier/result.onnx --batch=1 --saveEngine=onnx-modifier/result.trt --workspace=8196

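This produces the serialized TensorRT engine (result.trt). --workspace sets the builder workspace size in MiB. --batch only applies to implicit-batch models such as UFF or Caffe; ONNX models are parsed with an explicit batch dimension. Newer TensorRT releases deprecate both flags, and the workspace limit is set with --memPoolSize=workspace:<MiB> instead. An engine is tied to the GPU model and the TensorRT version it was built with, so rebuild it when either changes.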
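When the conversion is part of a larger pipeline, it can help to assemble the trtexec call from Python. The following is a minimal sketch. build_trtexec_cmd and run_trtexec are hypothetical helper names. The flags used are --onnx, --saveEngine, --fp16 and --memPoolSize=workspace:<MiB>, and the last one assumes a newer TensorRT release (8.4 or later). --batch is left out because it does not apply to ONNX models.

```python
import shlex
import subprocess


def build_trtexec_cmd(onnx_path, engine_path, workspace_mib=None, fp16=False,
                      trtexec="trtexec"):
    """Assemble a trtexec argument list for an ONNX -> engine conversion (hypothetical helper)."""
    cmd = [trtexec, f"--onnx={onnx_path}", f"--saveEngine={engine_path}"]
    if workspace_mib is not None:
        # Assumed TensorRT 8.4+ spelling of the older --workspace=<MiB> flag
        cmd.append(f"--memPoolSize=workspace:{workspace_mib}")
    if fp16:
        # Let the builder pick FP16 kernels in addition to FP32 ones
        cmd.append("--fp16")
    return cmd


def run_trtexec(cmd):
    """Run trtexec; raises subprocess.CalledProcessError on a non-zero exit code."""
    print("running:", shlex.join(cmd))
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Same paths and workspace value as the command above; only prints, does not run
    cmd = build_trtexec_cmd("onnx-modifier/result.onnx",
                            "onnx-modifier/result.trt", workspace_mib=8196)
    print(shlex.join(cmd))
```

Keeping command construction separate from execution makes the argument list easy to inspect or log before the (slow) engine build actually starts.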

2. Inference with ONNX Runtime

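import cv2
import numpy as np
import onnxruntime as rt

# Load the ONNX model (path elided in the original); GPU builds of onnxruntime
# require the providers argument to be given explicitly
sess = rt.InferenceSession("/....onnx", providers=rt.get_available_providers())
input_name = sess.get_inputs()[0].name

# cv2.resize takes (width, height): the result is 384 rows x 672 columns, BGR, HWC
img = cv2.resize(cv2.imread("....png"), (672, 384)).astype(np.float32)

# HWC -> CHW, then add a batch dimension: shape (1, 3, 384, 672)
X = np.array([np.transpose(img, (2, 0, 1))])

# None = return all outputs; pred_onnx is a list of numpy arrays
pred_onnx = sess.run(None, {input_name: X})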
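The preprocessing in the snippet above (HWC to CHW, then add a batch axis) can be moved into a small helper. That way the same input can be fed to both ONNX Runtime and TensorRT. Here is a minimal NumPy-only sketch. to_nchw_batch is a hypothetical name. The bgr_to_rgb and scale options are assumptions that default to off, because the article feeds raw BGR pixel values. Whether a model needs RGB order or scaling depends on how it was trained.

```python
import numpy as np


def to_nchw_batch(img_hwc, bgr_to_rgb=False, scale=None):
    """Convert an H x W x C image (e.g. from cv2.imread) to a contiguous 1 x C x H x W float32 batch."""
    x = img_hwc.astype(np.float32)
    if bgr_to_rgb:
        # OpenCV stores channels as BGR; reverse the channel axis if the model expects RGB
        x = x[..., ::-1]
    if scale is not None:
        # e.g. scale=1/255 maps [0, 255] to [0, 1]; np.float32 keeps the dtype float32
        x = x * np.float32(scale)
    x = np.transpose(x, (2, 0, 1))               # HWC -> CHW
    return np.ascontiguousarray(x[np.newaxis])   # add batch axis -> NCHW, contiguous


if __name__ == "__main__":
    # cv2.resize(img, (672, 384)) yields an image 384 rows high and 672 columns wide
    fake = np.zeros((384, 672, 3), dtype=np.uint8)
    print(to_nchw_batch(fake).shape)  # (1, 3, 384, 672)
```

Returning a C-contiguous array matters mainly for the TensorRT path, where the host buffer is copied to the GPU as raw bytes.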

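3. Inference with TensorRT

The code below uses PyCUDA together with the binding-based Python API of TensorRT 8.x (get_binding_index, set_binding_shape, binding_is_input, and so on). These binding calls are deprecated since TensorRT 8.5 and removed in TensorRT 10, which addresses tensors by name instead.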

import os

import cv2
import numpy as np
import pycuda.autoinit  # creates a CUDA context on import
import pycuda.driver as cuda
import tensorrt as trt


class TensorRTInference(object):
    def __init__(self, engine_file_path, input_shape):
        self.engine_file_path = engine_file_path
        self.shape = input_shape
        self.engine = self.load_engine()

    def load_engine(self):
        # Deserialize the engine file produced by trtexec
        assert os.path.exists(self.engine_file_path)
        with open(self.engine_file_path, 'rb') as f, trt.Runtime(trt.Logger()) as runtime:
            engine_data = f.read()
            engine = runtime.deserialize_cuda_engine(engine_data)
        return engine

    def infer_once(self, img):
        engine = self.engine
        if len(img.shape) == 4:
            b, c, h, w = img.shape
        elif len(img.shape) == 3:
            c, h, w = img.shape
            b = 1
        else:
            raise ValueError('expected a CHW or NCHW array, got shape %s' % (img.shape,))
        with engine.create_execution_context() as context:
            # Assumes the ONNX input tensor is named 'input'
            context.set_binding_shape(engine.get_binding_index('input'), (b, c, h, w))
            bindings = []
            # Assumes exactly one input binding and one output binding
            for binding in engine:
                binding_idx = engine.get_binding_index(binding)
                size = trt.volume(context.get_binding_shape(binding_idx))
                dtype = trt.nptype(engine.get_binding_dtype(binding))
                if engine.binding_is_input(binding):
                    # Contiguous host copy in the dtype the engine expects
                    input_buffer = np.ascontiguousarray(img, dtype=dtype)
                    input_memory = cuda.mem_alloc(input_buffer.nbytes)
                    bindings.append(int(input_memory))
                else:
                    # Page-locked host buffer plus a matching device buffer for the output
                    output_buffer = cuda.pagelocked_empty(size, dtype)
                    output_memory = cuda.mem_alloc(output_buffer.nbytes)
                    bindings.append(int(output_memory))
            stream = cuda.Stream()
            # Copy input to GPU, run the engine, copy output back, then wait for the stream
            cuda.memcpy_htod_async(input_memory, input_buffer, stream)
            # ONNX engines use an explicit batch dimension, which requires execute_async_v2
            context.execute_async_v2(bindings=bindings, stream_handle=stream.handle)
            cuda.memcpy_dtoh_async(output_buffer, output_memory, stream)
            stream.synchronize()
            # res = np.reshape(output_buffer, (2, h, w))
            return output_buffer
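The class above sizes each buffer as trt.volume(shape) elements of the binding's dtype, and it returns the output as a flat 1-D array. The sketch below reproduces that arithmetic in plain NumPy. It also shows how the commented-out reshape to (2, h, w) could be turned into a per-pixel label map. volume, buffer_nbytes and flat_to_mask are hypothetical helpers. Reading the output as two-class segmentation scores is an assumption based only on that commented line.

```python
import numpy as np


def volume(shape):
    """Element count of a fully specified shape (the product trt.volume computes)."""
    n = 1
    for d in shape:
        n *= d
    return n


def buffer_nbytes(shape, dtype=np.float32):
    """Bytes needed for one host or device buffer holding a tensor of this shape."""
    return volume(shape) * np.dtype(dtype).itemsize


def flat_to_mask(flat, num_classes, h, w):
    """Hypothetical post-processing: reshape a flat (1, C, H, W) output and take the per-pixel argmax."""
    scores = np.reshape(flat, (num_classes, h, w))
    return np.argmax(scores, axis=0)


if __name__ == "__main__":
    print(volume((1, 3, 224, 224)))         # 150528
    print(buffer_nbytes((1, 3, 224, 224)))  # 602112
```

A float32 input of shape (1, 3, 224, 224) therefore needs 602112 bytes on both the host and the device side.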

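INPUT_SHAPE = (224, 224)  # (width, height) for cv2.resize; must match the engine's input size
engine_file_path = '***.trt'
img_path = 's1.png'

img = cv2.resize(cv2.imread(img_path), INPUT_SHAPE)  # HWC, BGR, uint8
img = np.transpose(img, (2, 0, 1)).astype(np.float32)  # CHW, float32

# The constructor already deserializes the engine, so no extra load_engine() call is needed
trt_infer = TensorRTInference(engine_file_path, INPUT_SHAPE)
output = trt_infer.infer_once(img)  # flat 1-D array holding the output tensor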
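When the same model is run through both ONNX Runtime and TensorRT, it is worth checking that the two agree numerically before comparing speed. Below is a minimal sketch, assuming both runs receive the identical preprocessed input. compare_outputs and its default tolerances are hypothetical. FP16 engines usually need looser tolerances than FP32 ones.

```python
import numpy as np


def compare_outputs(ref, test, rtol=1e-3, atol=1e-3):
    """Compare a reference output (e.g. ONNX Runtime) with a TensorRT output.

    Both arrays are flattened first, since the TensorRT host buffer is 1-D.
    Returns (all_close, max_abs_diff).
    """
    a = np.asarray(ref, dtype=np.float32).ravel()
    b = np.asarray(test, dtype=np.float32).ravel()
    if a.size != b.size:
        raise ValueError(f"size mismatch: {a.size} vs {b.size}")
    max_abs = float(np.max(np.abs(a - b))) if a.size else 0.0
    return bool(np.allclose(a, b, rtol=rtol, atol=atol)), max_abs


if __name__ == "__main__":
    ort_out = np.array([[1.0, 2.0], [3.0, 4.0]], dtype=np.float32)
    trt_out = np.array([1.0, 2.0005, 3.0, 4.0], dtype=np.float32)
    print(compare_outputs(ort_out, trt_out))
```

For example, compare_outputs(pred_onnx[0], trt_infer.infer_once(img)) would compare the first ONNX Runtime output with the TensorRT buffer. This only makes sense when both were produced from the same image at the same input size.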
