Audio/Video Series: A Summary of Basic OpenSL ES Usage

Posted narkang


Preface: this article was compiled by the editors of cha138.com. It covers the basics of using OpenSL ES, as part of an audio/video series, and we hope you find it useful.

1. Preliminaries

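OpenSL ES stands for Open Sound Library for Embedded Systems, a standard for audio acceleration on embedded systems. It is a royalty-free, cross-platform, hardware-accelerated audio API that is carefully optimized for embedded systems. It gives native applications on embedded mobile multimedia devices a standardized, high-performance, low-latency way to implement audio features. It also lets software and hardware audio performance be deployed directly across platforms, which lowers the implementation effort.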

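On Android, the High Level Audio Libs are the Java-layer audio input/output APIs (such as `MediaPlayer` and `AudioTrack`), which are high-level APIs. OpenSL ES, by comparison, is a lower-level C API. This post records how to use it.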

2. Adding OpenSL ES to a project

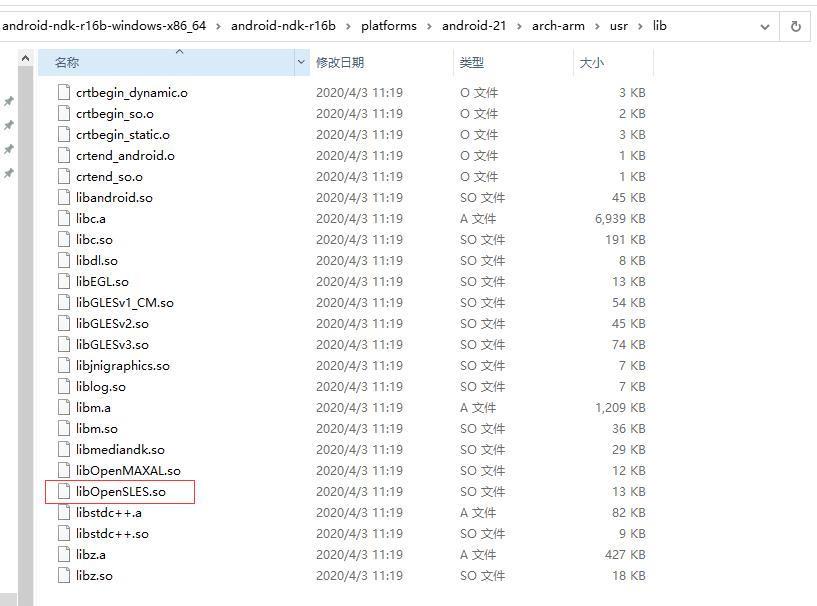

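The Android OpenSL ES library is located in the NDK's `platforms` folder, under the directory for the corresponding Android platform level and CPU architecture, for example: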

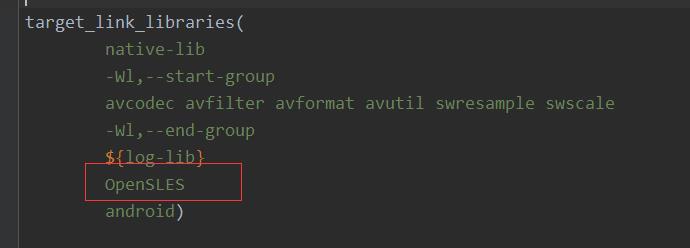

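Then link it in CMakeLists.txt by adding `OpenSLES` to the `target_link_libraries` of your native library, and include `<SLES/OpenSLES.h>` and `<SLES/OpenSLES_Android.h>` in the C/C++ sources that use it.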

3. Development workflow

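3.1 Developing with OpenSL ES mainly involves the following six steps:

1. Create the engine object

2. Set up the output mix

3. Create the player (or recorder)

4. Set up the buffer queue and the callback function

5. Set the play state

6. Kick off the callback function

Steps 4 and 6 are only needed when playing raw audio data such as PCM through a buffer queue.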

4. Playing a PCM file

4.1 Create the engine and the output mix

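```cpp
#include <SLES/OpenSLES.h>
#include <SLES/OpenSLES_Android.h>

// In the full example (Section 5) these are members of the Audio class
SLObjectItf engineObject = nullptr;  // engine object, accessed through the generic SLObjectItf interface
SLEngineItf engineEngine = nullptr;  // the engine's SLEngineItf interface, used to create other objects
SLObjectItf outputMixObject = nullptr;                            // output mix object
SLEnvironmentalReverbItf outputMixEnvironmentalReverb = nullptr;  // optional reverb interface of the output mix
// Reverb preset (the one used by the NDK native-audio sample)
const SLEnvironmentalReverbSettings reverbSettings = SL_I3DL2_ENVIRONMENT_PRESET_STONECORRIDOR;

void createEngine() {
    SLresult result;
    // Create the engine object
    result = slCreateEngine(&engineObject, 0, nullptr, 0, nullptr, nullptr);
    if (result != SL_RESULT_SUCCESS) return;
    // Realize it synchronously (SL_BOOLEAN_FALSE = not asynchronous)
    result = (*engineObject)->Realize(engineObject, SL_BOOLEAN_FALSE);
    if (result != SL_RESULT_SUCCESS) return;
    // Get the SLEngineItf interface from the realized engine object
    result = (*engineObject)->GetInterface(engineObject, SL_IID_ENGINE, &engineEngine);
    (void)result;
}

// The statements below run inside the initialization function (initOpenSLES in Section 5)

// Step 1: create the engine
createEngine();

// Step 2: create the output mix; environmental reverb is requested but not required
SLresult result;
const SLInterfaceID mids[1] = {SL_IID_ENVIRONMENTALREVERB};
const SLboolean mreq[1] = {SL_BOOLEAN_FALSE};
result = (*engineEngine)->CreateOutputMix(engineEngine, &outputMixObject, 1, mids, mreq);
(void)result;
result = (*outputMixObject)->Realize(outputMixObject, SL_BOOLEAN_FALSE);
(void)result;
// Getting the reverb interface may fail on some devices; reverb is then simply skipped
result = (*outputMixObject)->GetInterface(outputMixObject, SL_IID_ENVIRONMENTALREVERB,
                                          &outputMixEnvironmentalReverb);
if (SL_RESULT_SUCCESS == result) {
    result = (*outputMixEnvironmentalReverb)->SetEnvironmentalReverbProperties(
            outputMixEnvironmentalReverb, &reverbSettings);
    (void)result;
}

// Use the output mix as the data sink (audio output) of the player
SLDataLocator_OutputMix outputMix = {SL_DATALOCATOR_OUTPUTMIX, outputMixObject};
SLDataSink audioSnk = {&outputMix, nullptr};
```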

4.2 Configure the PCM format (sample rate, bit depth, etc.) and create the player

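```cpp
// pcmPlayerObject (SLObjectItf) and pcmPlayerPlay (SLPlayItf) are members of the Audio class

// Step 3: describe the PCM data source
// Data locator: an Android simple buffer queue holding up to 2 buffers
SLDataLocator_AndroidSimpleBufferQueue android_queue = {SL_DATALOCATOR_ANDROIDSIMPLEBUFFERQUEUE, 2};
SLDataFormat_PCM pcm = {
        SL_DATAFORMAT_PCM,                               // play PCM data
        2,                                               // 2 channels (stereo)
        SL_SAMPLINGRATE_44_1,                            // 44.1 kHz (OpenSL ES expresses rates in milliHertz)
        SL_PCMSAMPLEFORMAT_FIXED_16,                     // bits per sample: 16
        SL_PCMSAMPLEFORMAT_FIXED_16,                     // container size: same as bits per sample
        SL_SPEAKER_FRONT_LEFT | SL_SPEAKER_FRONT_RIGHT,  // channel mask: front left + front right
        SL_BYTEORDER_LITTLEENDIAN                        // byte order: little-endian
};
SLDataSource slDataSource = {&android_queue, &pcm};

// Interfaces requested on the player; all three are marked as required
const SLInterfaceID ids[3] = {SL_IID_BUFFERQUEUE, SL_IID_EFFECTSEND, SL_IID_VOLUME};
const SLboolean req[3] = {SL_BOOLEAN_TRUE, SL_BOOLEAN_TRUE, SL_BOOLEAN_TRUE};
// audioSnk is the output-mix sink created in 4.1
result = (*engineEngine)->CreateAudioPlayer(engineEngine, &pcmPlayerObject, &slDataSource, &audioSnk, 3, ids, req);

// Realize (initialize) the player
(*pcmPlayerObject)->Realize(pcmPlayerObject, SL_BOOLEAN_FALSE);
// Get the play interface, used later to start playback
(*pcmPlayerObject)->GetInterface(pcmPlayerObject, SL_IID_PLAY, &pcmPlayerPlay);
```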
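The `SLDataFormat_PCM` fields above determine how many bytes one second of playback consumes. The small sketch below works this out for the 44.1 kHz, 16-bit stereo format. The struct `PcmFormat` and its helper functions are hypothetical illustrations and are not part of OpenSL ES. The sketch also reflects that OpenSL ES sample-rate constants are in milliHertz (`SL_SAMPLINGRATE_44_1` is 44,100,000), so a plain 44100 must not be written into that field.

```cpp
#include <cstdint>

// Hypothetical mirror of the SLDataFormat_PCM fields that determine data size
struct PcmFormat {
    uint32_t channels;       // numChannels
    uint32_t sampleRateHz;   // in Hz; OpenSL ES's samplesPerSec expects this * 1000
    uint32_t bitsPerSample;  // bitsPerSample (containerSize assumed equal)
};

// One frame = one sample for every channel
constexpr uint32_t bytesPerFrame(const PcmFormat &f) {
    return f.channels * (f.bitsPerSample / 8);
}

// Bytes the player consumes per second of audio
constexpr uint32_t bytesPerSecond(const PcmFormat &f) {
    return f.sampleRateHz * bytesPerFrame(f);
}

// Bytes in a buffer holding `ms` milliseconds of audio (whole frames only)
constexpr uint32_t bytesForMillis(const PcmFormat &f, uint32_t ms) {
    return f.sampleRateHz * ms / 1000 * bytesPerFrame(f);
}

// OpenSL ES expresses samplesPerSec in milliHertz
constexpr uint32_t toMilliHz(uint32_t hz) {
    return hz * 1000;
}
```

For the format above this gives 4 bytes per frame and 176,400 bytes per second. That matches the sample's constructor, which allocates `sample_rate * 2 * 2` bytes: enough for one second of 16-bit stereo audio.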

4.3 Set up the buffer queue and the callback function

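```cpp
// Inside Audio::initOpenSLES(); pcmBufferQueue (SLAndroidSimpleBufferQueueItf) is an Audio member
// Get the buffer-queue interface
(*pcmPlayerObject)->GetInterface(pcmPlayerObject, SL_IID_BUFFERQUEUE, &pcmBufferQueue);
// Register the callback. The last argument is handed back to the callback as `context`,
// so pass the Audio instance; with NULL the callback below would never enqueue anything
(*pcmBufferQueue)->RegisterCallback(pcmBufferQueue, pcmBufferCallBack, this);

// The callback, defined at file scope before initOpenSLES().
// OpenSL ES calls it each time a queued buffer has finished playing (the speaker
// needs more data); it writes the next chunk of PCM into the queue
void pcmBufferCallBack(SLAndroidSimpleBufferQueueItf bf, void *context) {
    Audio *wlAudio = (Audio *) context;
    if (wlAudio != nullptr) {
        // Decode and convert the next chunk into wlAudio->buffer
        int buffersize = wlAudio->resampleAudio();
        if (buffersize > 0) {
            (*wlAudio->pcmBufferQueue)->Enqueue(wlAudio->pcmBufferQueue, (char *) wlAudio->buffer, buffersize);
        }
    }
}
```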

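4.4 Set the play state and start the callback manually

The buffer queue only calls back after a buffer has finished playing, so an empty queue never starts on its own. Set the player to the playing state, then call the callback once by hand to enqueue the first buffer. From then on, OpenSL ES invokes it automatically.

```cpp
// Inside Audio::initOpenSLES(), after registering the callback
// Start playback
(*pcmPlayerPlay)->SetPlayState(pcmPlayerPlay, SL_PLAYSTATE_PLAYING);
// Prime the queue; pass the Audio instance, just as with RegisterCallback
pcmBufferCallBack(pcmBufferQueue, this);
```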
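The callback-driven flow in 4.3 and 4.4 can be hard to picture. Below is a host-side simulation, not OpenSL ES code. `FakeBufferQueue`, `Producer` and `onBufferDone` are hypothetical stand-ins. The fake queue mimics one behavior of the Android simple buffer queue: it invokes the registered callback each time a queued buffer finishes playing. The simulation shows two things. The first call must be made by hand, because an empty queue never calls back. The chain also stops once the producer (standing in for `resampleAudio`) returns 0 bytes.

```cpp
#include <cstddef>
#include <deque>
#include <vector>

// Hypothetical stand-in for SLAndroidSimpleBufferQueueItf (simulation only)
class FakeBufferQueue {
public:
    using Callback = void (*)(FakeBufferQueue *queue, void *context);

    void registerCallback(Callback cb, void *ctx) {
        callback_ = cb;
        context_ = ctx;
    }

    // Like Enqueue: queue `size` bytes for playback
    void enqueue(std::size_t size) { pending_.push_back(size); }

    // Simulate the device playing until the queue runs dry;
    // returns the total number of bytes "played"
    std::size_t playUntilEmpty() {
        std::size_t played = 0;
        while (!pending_.empty()) {
            played += pending_.front();
            pending_.pop_front();
            // A buffer finished playing: ask the app for more data
            if (callback_ != nullptr) callback_(this, context_);
        }
        return played;
    }

private:
    std::deque<std::size_t> pending_;
    Callback callback_ = nullptr;
    void *context_ = nullptr;
};

// Hypothetical producer standing in for Audio::resampleAudio():
// hands out pre-decoded chunk sizes, then 0 once the stream has ended
struct Producer {
    std::vector<std::size_t> chunks;
    std::size_t next = 0;
    int callbacks = 0;
    std::size_t produce() { return next < chunks.size() ? chunks[next++] : 0; }
};

// Same shape as pcmBufferCallBack: enqueue only when there is data
void onBufferDone(FakeBufferQueue *queue, void *context) {
    auto *producer = static_cast<Producer *>(context);
    if (producer == nullptr) return;
    ++producer->callbacks;
    std::size_t size = producer->produce();
    if (size > 0) queue->enqueue(size);
}
```

If nothing is enqueued first, `playUntilEmpty()` returns immediately without a single callback. After one manual `onBufferDone(&queue, &producer)` call, all chunks are played back to back, and the last callback, receiving 0 bytes, ends the chain.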

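4.5 Audio resampling

The audio that FFmpeg decodes from a network stream is generally not in the format configured for OpenSL ES (interleaved signed 16-bit stereo). Each decoded frame therefore has to be converted with libswresample before it is enqueued; see `resampleAudio` in the sample code below. In that sample, the output sample rate equals the input rate, so only the sample format and channel layout are converted. The player is instead configured with the source rate through `getCurrentSampleRateForOpensles`. Note that the sample uses the older FFmpeg channel-layout API (`AVFrame::channels`, `AVFrame::channel_layout`, `swr_alloc_set_opts`). FFmpeg 5.1 deprecated this API in favor of `AVChannelLayout` and `swr_alloc_set_opts2`, and FFmpeg 7.0 removed it. The sample also receives only one frame per packet, which assumes each packet decodes to a single frame.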
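Here is an illustration of what that conversion does in a common case. FFmpeg's AAC decoder typically outputs planar float (`AV_SAMPLE_FMT_FLTP`), with one array per channel, while the player expects interleaved signed 16-bit samples. The function below is a simplified stand-in for the format conversion only, with no rate change and no dithering. It is not libswresample's implementation, and `planarFloatToS16Interleaved` is a hypothetical name. Its returned byte count uses the same formula as `data_size` in `resampleAudio`: samples × channels × bytes per sample.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Convert planar float samples (one vector per channel, nominal range [-1, 1],
// all channels the same length) to interleaved signed 16-bit PCM: L0 R0 L1 R1 ...
// Returns the number of bytes written: nbSamples * channels * sizeof(int16_t)
std::size_t planarFloatToS16Interleaved(const std::vector<std::vector<float>> &planes,
                                        std::vector<int16_t> &out) {
    const std::size_t channels = planes.size();
    const std::size_t nbSamples = channels ? planes[0].size() : 0;
    out.resize(nbSamples * channels);
    for (std::size_t i = 0; i < nbSamples; ++i) {
        for (std::size_t ch = 0; ch < channels; ++ch) {
            // Clip to [-1, 1], then scale to the int16 range
            float s = std::max(-1.0f, std::min(1.0f, planes[ch][i]));
            out[i * channels + ch] = static_cast<int16_t>(std::lround(s * 32767.0f));
        }
    }
    return nbSamples * channels * sizeof(int16_t);
}
```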

5. Sample code

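The OpenSL ES part is largely boilerplate: once written, it rarely needs to change. The full `Audio` class follows. Its header `Audio.h` is not shown. It presumably pulls in the OpenSL ES, FFmpeg and pthread headers, and it declares the members used below: the OpenSL ES objects and interfaces, `buffer`, `queue`, `avCodecContext`, `reverbSettings`, and so on.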

```cpp
#include "Audio.h"

Audio::Audio(Playstatus *playstatus, int sample_rate) {
    this->playstatus = playstatus;
    this->sample_rate = sample_rate;
    queue = new Queue(playstatus);
    // Output buffer: one second of 16-bit (2 bytes) stereo (2 channels) PCM
    buffer = (uint8_t *) av_malloc(sample_rate * 2 * 2);
}

Audio::~Audio() {
}

// Thread entry point: initializes OpenSL ES, which then drives playback through its callback
void *decodPlay(void *data) {
    Audio *wlAudio = (Audio *) data;
    wlAudio->initOpenSLES();
    return nullptr;
}

void Audio::play() {
    pthread_create(&thread_play, nullptr, decodPlay, this);
}

// Resampling: decode one packet and convert the resulting frame to interleaved
// signed 16-bit stereo in `buffer`. Returns the number of bytes written
// (0 if playback is exiting)
int Audio::resampleAudio() {
    data_size = 0;  // so that exiting without new data makes the callback stop enqueuing
    while (playstatus != nullptr && !playstatus->exit) {
        avPacket = av_packet_alloc();
        // Take the next compressed packet from the queue
        if (queue->getAvpacket(avPacket) != 0) {
            av_packet_free(&avPacket);  // frees the packet and sets avPacket to NULL
            continue;
        }
        // Send it to the decoder
        ret = avcodec_send_packet(avCodecContext, avPacket);
        if (ret != 0) {
            av_packet_free(&avPacket);
            continue;
        }
        avFrame = av_frame_alloc();
        ret = avcodec_receive_frame(avCodecContext, avFrame);
        if (ret == 0) {
            // Fill in whichever of channels / channel_layout the decoder left unset
            if (avFrame->channels && avFrame->channel_layout == 0) {
                avFrame->channel_layout = av_get_default_channel_layout(avFrame->channels);
            } else if (avFrame->channels == 0 && avFrame->channel_layout > 0) {
                avFrame->channels = av_get_channel_layout_nb_channels(avFrame->channel_layout);
            }
            // Output: stereo, signed 16-bit, same rate; input: the frame's own layout, format and rate
            SwrContext *swr_ctx = swr_alloc_set_opts(
                    nullptr,
                    AV_CH_LAYOUT_STEREO,
                    AV_SAMPLE_FMT_S16,
                    avFrame->sample_rate,
                    avFrame->channel_layout,
                    (AVSampleFormat) avFrame->format,
                    avFrame->sample_rate,
                    0, nullptr);
            if (!swr_ctx || swr_init(swr_ctx) < 0) {
                av_packet_free(&avPacket);
                av_frame_free(&avFrame);  // frees the frame and sets avFrame to NULL
                swr_free(&swr_ctx);
                continue;
            }
            // nb = number of samples per channel actually written to buffer
            int nb = swr_convert(
                    swr_ctx,
                    &buffer,
                    avFrame->nb_samples,
                    (const uint8_t **) avFrame->data,
                    avFrame->nb_samples);
            int out_channels = av_get_channel_layout_nb_channels(AV_CH_LAYOUT_STEREO);
            data_size = nb * out_channels * av_get_bytes_per_sample(AV_SAMPLE_FMT_S16);
            if (LOG_DEBUG) {
                LOGE("data_size is %d", data_size);
            }
            av_packet_free(&avPacket);
            av_frame_free(&avFrame);
            swr_free(&swr_ctx);
            break;
        } else {
            av_packet_free(&avPacket);
            av_frame_free(&avFrame);
            continue;
        }
    }
    return data_size;
}

// Called each time a queued buffer has finished playing (the speaker needs more data);
// writes the next chunk of PCM into the OpenSL ES buffer queue
void pcmBufferCallBack(SLAndroidSimpleBufferQueueItf bf, void *context) {
    Audio *wlAudio = (Audio *) context;
    if (wlAudio != nullptr) {
        int buffersize = wlAudio->resampleAudio();
        if (buffersize > 0) {
            (*wlAudio->pcmBufferQueue)->Enqueue(wlAudio->pcmBufferQueue, (char *) wlAudio->buffer, buffersize);
        }
    }
}

void Audio::initOpenSLES() {
    // Step 1: create the engine object and get its SLEngineItf interface
    SLresult result;
    result = slCreateEngine(&engineObject, 0, nullptr, 0, nullptr, nullptr);
    result = (*engineObject)->Realize(engineObject, SL_BOOLEAN_FALSE);
    result = (*engineObject)->GetInterface(engineObject, SL_IID_ENGINE, &engineEngine);

    // Step 2: use the engine interface to create the output mix (reverb is optional)
    const SLInterfaceID mids[1] = {SL_IID_ENVIRONMENTALREVERB};
    const SLboolean mreq[1] = {SL_BOOLEAN_FALSE};
    result = (*engineEngine)->CreateOutputMix(engineEngine, &outputMixObject, 1, mids, mreq);
    (void)result;
    result = (*outputMixObject)->Realize(outputMixObject, SL_BOOLEAN_FALSE);
    (void)result;
    result = (*outputMixObject)->GetInterface(outputMixObject, SL_IID_ENVIRONMENTALREVERB,
                                              &outputMixEnvironmentalReverb);
    if (SL_RESULT_SUCCESS == result) {
        result = (*outputMixEnvironmentalReverb)->SetEnvironmentalReverbProperties(
                outputMixEnvironmentalReverb, &reverbSettings);
        (void)result;
    }
    SLDataLocator_OutputMix outputMix = {SL_DATALOCATOR_OUTPUTMIX, outputMixObject};
    SLDataSink audioSnk = {&outputMix, nullptr};

    // Step 3: configure the PCM format
    SLDataLocator_AndroidSimpleBufferQueue android_queue = {SL_DATALOCATOR_ANDROIDSIMPLEBUFFERQUEUE, 2};
    SLDataFormat_PCM pcm = {
            SL_DATAFORMAT_PCM,                                                     // play PCM data
            2,                                                                     // 2 channels (stereo)
            static_cast<SLuint32>(getCurrentSampleRateForOpensles(sample_rate)),  // source rate as an SL_SAMPLINGRATE_* value
            SL_PCMSAMPLEFORMAT_FIXED_16,                                           // bits per sample: 16
            SL_PCMSAMPLEFORMAT_FIXED_16,                                           // container size: same as bits per sample
            SL_SPEAKER_FRONT_LEFT | SL_SPEAKER_FRONT_RIGHT,                        // stereo (front left + front right)
            SL_BYTEORDER_LITTLEENDIAN                                              // byte order: little-endian
    };
    SLDataSource slDataSource = {&android_queue, &pcm};

    const SLInterfaceID ids[1] = {SL_IID_BUFFERQUEUE};
    const SLboolean req[1] = {SL_BOOLEAN_TRUE};
    (*engineEngine)->CreateAudioPlayer(engineEngine, &pcmPlayerObject, &slDataSource, &audioSnk, 1, ids, req);
    // Realize (initialize) the player
    (*pcmPlayerObject)->Realize(pcmPlayerObject, SL_BOOLEAN_FALSE);
    // Get the play interface
    (*pcmPlayerObject)->GetInterface(pcmPlayerObject, SL_IID_PLAY, &pcmPlayerPlay);
    // Get the buffer-queue interface
    (*pcmPlayerObject)->GetInterface(pcmPlayerObject, SL_IID_BUFFERQUEUE, &pcmBufferQueue);
    // Register the buffer-queue callback, passing this Audio instance as its context
    (*pcmBufferQueue)->RegisterCallback(pcmBufferQueue, pcmBufferCallBack, this);
    // Set the play state to playing
    (*pcmPlayerPlay)->SetPlayState(pcmPlayerPlay, SL_PLAYSTATE_PLAYING);
    // Call the callback once by hand to enqueue the first buffer
    pcmBufferCallBack(pcmBufferQueue, this);
}

// Map a sample rate in Hz to the matching SL_SAMPLINGRATE_* constant (milliHertz);
// unsupported rates fall back to 44.1 kHz
int Audio::getCurrentSampleRateForOpensles(int sample_rate) {
    int rate = 0;
    switch (sample_rate) {
        case 8000:
            rate = SL_SAMPLINGRATE_8;
            break;
        case 11025:
            rate = SL_SAMPLINGRATE_11_025;
            break;
        case 12000:
            rate = SL_SAMPLINGRATE_12;
            break;
        case 16000:
            rate = SL_SAMPLINGRATE_16;
            break;
        case 22050:
            rate = SL_SAMPLINGRATE_22_05;
            break;
        case 24000:
            rate = SL_SAMPLINGRATE_24;
            break;
        case 32000:
            rate = SL_SAMPLINGRATE_32;
            break;
        case 44100:
            rate = SL_SAMPLINGRATE_44_1;
            break;
        case 48000:
            rate = SL_SAMPLINGRATE_48;
            break;
        case 64000:
            rate = SL_SAMPLINGRATE_64;
            break;
        case 88200:
            rate = SL_SAMPLINGRATE_88_2;
            break;
        case 96000:
            rate = SL_SAMPLINGRATE_96;
            break;
        case 192000:
            rate = SL_SAMPLINGRATE_192;
            break;
        default:
            rate = SL_SAMPLINGRATE_44_1;
    }
    return rate;
}
```
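The `switch` above can also be written as a lookup table. This sketch relies on the fact that each `SL_SAMPLINGRATE_*` constant equals the rate in Hz multiplied by 1000, because OpenSL ES uses milliHertz. It therefore returns plain integers instead of the macros and builds without the NDK headers. `toOpenSlesRate` and `kSupportedRatesHz` are hypothetical names. Like the original, the sketch falls back to 44.1 kHz for unsupported rates. That fallback only plays at the right speed if the PCM really is 44.1 kHz, and `resampleAudio` keeps the source rate.

```cpp
#include <array>
#include <cstdint>

// Sample rates (Hz) that have an SL_SAMPLINGRATE_* constant
constexpr std::array<uint32_t, 13> kSupportedRatesHz = {
    8000, 11025, 12000, 16000, 22050, 24000, 32000,
    44100, 48000, 64000, 88200, 96000, 192000};

// Returns the value OpenSL ES expects in SLDataFormat_PCM::samplesPerSec (milliHertz),
// falling back to 44.1 kHz like getCurrentSampleRateForOpensles
uint32_t toOpenSlesRate(uint32_t sampleRateHz) {
    for (uint32_t hz : kSupportedRatesHz) {
        if (hz == sampleRateHz) return hz * 1000;
    }
    return 44100u * 1000;
}
```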
