MySQL MHA Cluster Installation


I. Host planning

| IP | Hostname | Master/Slave | Manager Node/Data Node |
| --- | --- | --- | --- |
| 10.22.83.42 | node1 | Master | Data Node |
| 10.22.83.26 | node2 | Slave | Data Node |
| 10.22.83.28 | node3 | Slave | Data Node and Manager Node |

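MHA (Master High Availability) provides switchover and failover for MySQL master-slave topologies. It can typically complete a failover within about 30 seconds and, during the failover, preserves data consistency as far as possible.

The data nodes are the MySQL servers in the master-slave topology: at least one master and two slaves, using either asynchronous or semi-synchronous replication. Every data node needs the mha4mysql-node package. The manager node controls master switching and needs the mha4mysql-manager package. The manager can run on a data node, in which case that host needs both mha4mysql-manager and mha4mysql-node.

II. Build a one-master, two-slave MySQL topology with enhanced semi-synchronous replication

(1) Configure the master and the two slave nodes

0. Configure /etc/hosts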

cat /etc/hosts

10.22.83.42 node1

10.22.83.26 node2

10.22.83.28 node3

1. Remove the MariaDB libraries bundled with the system, then unpack and install the MySQL packages

rpm -qa|grep mariadb

rpm -e mariadb-libs-5.5.56-2.el7.x86_64 --nodeps

cd /soft

tar -xvf mysql-5.7.30-1.el7.x86_64.rpm-bundle.tar

rpm -ivh mysql-community-common-5.7.30-1.el7.x86_64.rpm

rpm -ivh mysql-community-libs-5.7.30-1.el7.x86_64.rpm

rpm -ivh mysql-community-client-5.7.30-1.el7.x86_64.rpm

rpm -ivh mysql-community-devel-5.7.30-1.el7.x86_64.rpm

rpm -ivh mysql-community-libs-compat-5.7.30-1.el7.x86_64.rpm

rpm -ivh mysql-community-server-5.7.30-1.el7.x86_64.rpm

2. Create the MySQL directories

mkdir /data1

cd /data1

mkdir binlog

mkdir data

mkdir log

mkdir relaylog

chown -R mysql:mysql /data1
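The directory steps above can be wrapped in a small idempotent helper so that they can be repeated safely on each node. This is only a sketch: `make_mysql_dirs` is a hypothetical name, and the base directory defaults to the `/data1` path used in this guide.

```shell
#!/usr/bin/env bash
# Create the MySQL directory layout used in this guide under a base directory.
# make_mysql_dirs is a hypothetical helper name, not part of MySQL.
make_mysql_dirs() {
    local base="${1:-/data1}"
    local sub
    for sub in binlog data log relaylog; do
        # -p makes the call safe to repeat if a directory already exists.
        mkdir -p "$base/$sub" || return 1
    done
}

# Usage (as root on each node), followed by the ownership change:
#   make_mysql_dirs /data1 && chown -R mysql:mysql /data1
```

The ownership change is left outside the function because it requires the mysql user created by the server package.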

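3. Edit the configuration file (the master and slaves differ only in the per-node values flagged in the comments below, server_id and auto_increment_offset, which were highlighted in red in the original post; everything else is identical)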

cat /etc/my.cnf

[client]

socket=/var/lib/mysql/mysql.sock

[mysqld]

# Load the semi-synchronous replication master and slave plugins

plugin-load-add=semisync_master.so

plugin-load-add=semisync_slave.so

socket=/var/lib/mysql/mysql.sock

symbolic-links=0

log-error=/data1/log/mysqld.log

pid-file=/var/run/mysqld/mysqld.pid

slow_query_log_file=/data1/log/slow.log

slow_query_log=1

long_query_time=0.3

# server_id is 1, 2 and 3 on the three nodes respectively

server_id=1

# Enable GTID mode

gtid_mode=ON

enforce_gtid_consistency=ON

master_info_repository=TABLE

relay_log_info_repository=TABLE

relay_log_recovery=1

binlog_checksum=NONE

log_slave_updates=ON

log_bin=/data1/binlog/binlog

relay_log=/data1/relaylog/relaylog

binlog_format=ROW

transaction_write_set_extraction=XXHASH64

datadir=/data1/data

slave_parallel_type=LOGICAL_CLOCK

slave_preserve_commit_order=1

slave_parallel_workers =4

innodb_file_per_table

sync_binlog = 1

binlog-group-commit-sync-delay=20

binlog_group_commit_sync_no_delay_count=5

innodb_lock_wait_timeout = 50

innodb_rollback_on_timeout = ON

innodb_io_capacity = 5000

innodb_io_capacity_max=15000

innodb_thread_concurrency = 0

innodb_sync_spin_loops = 200

innodb_spin_wait_delay = 6

innodb_status_file = 1

innodb_purge_threads=4

innodb_undo_log_truncate=1

innodb_max_undo_log_size=4G

innodb_use_native_aio = 1

innodb_autoinc_lock_mode = 2

log_slow_admin_statements=1

expire_logs_days=7

character-set-server=utf8mb4

collation-server= utf8mb4_bin

skip-name-resolve

lower_case_table_names

skip-external-locking

max_allowed_packet = 1024M

table_open_cache = 4000

table_open_cache_instances=16

max_connections = 4000

query_cache_size = 0

query_cache_type = 0

tmp_table_size = 1024M

max_heap_table_size = 1024M

innodb_log_files_in_group = 3

innodb_log_file_size = 1024M

innodb_flush_method= O_DIRECT

log_timestamps=SYSTEM

# auto_increment_offset on each of the three nodes can be set to the same value as its server_id

auto_increment_offset=1

auto_increment_increment=6

explicit_defaults_for_timestamp

log_bin_trust_function_creators = 1

transaction-isolation = READ-COMMITTED

innodb_buffer_pool_instances=8

innodb_write_io_threads=4

innodb_read_io_threads=4

innodb_buffer_pool_size=20G

innodb_flush_log_at_trx_commit=1

# Make slaves write binlog events after applying the relay log

log_slave_updates=1

# Do not purge relay logs automatically

relay_log_purge=0

# Enhanced semi-synchronous replication parameters

rpl_semi_sync_master_wait_point= AFTER_SYNC

rpl_semi_sync_master_enabled=1

rpl_semi_sync_master_timeout=1000

rpl_semi_sync_slave_enabled=1
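According to the comments in the file above, only server_id and auto_increment_offset change from node to node. A minimal sketch for printing those per-node lines follows; `node_overrides` is a hypothetical helper, and it assumes the convention suggested above that auto_increment_offset simply follows server_id.

```shell
#!/usr/bin/env bash
# Print the my.cnf lines that differ per node in this guide.
# node_overrides is a hypothetical helper; the argument is the node number (1, 2 or 3).
node_overrides() {
    local n="$1"
    case "$n" in
        1|2|3) ;;
        *) echo "node number must be 1, 2 or 3" >&2; return 1 ;;
    esac
    printf 'server_id=%s\n' "$n"
    # Assumption from the guide: auto_increment_offset can match server_id.
    printf 'auto_increment_offset=%s\n' "$n"
}

# Usage: node_overrides 2   (then merge the two lines into /etc/my.cnf on node2)
```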

4. Start the MySQL service on the master and slave nodes

systemctl start mysqld

5. Change the root password (run on the master and on every slave)

SET SQL_LOG_BIN=0;

alter user root@'localhost' identified by 'R00t_123';

SET SQL_LOG_BIN=1;

6. Create the root@% and rpl_user@% users (run on the master and on every slave)

SET SQL_LOG_BIN=0;

CREATE USER rpl_user@'%' IDENTIFIED BY 'R00t_123';

GRANT REPLICATION SLAVE ON *.* TO rpl_user@'%';

FLUSH PRIVILEGES;

CREATE USER root@'%' IDENTIFIED BY 'R00t_123';

GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' WITH GRANT OPTION ;

GRANT PROXY ON ''@'' TO 'root'@'%' WITH GRANT OPTION;

SET SQL_LOG_BIN=1;

7. Configure the replication channel on all slave nodes

change master to master_host='node1',master_port=3306,master_user='rpl_user',master_password='R00t_123',MASTER_AUTO_POSITION=1;

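Because GTID is enabled, setting MASTER_AUTO_POSITION=1 lets replication find its starting position automatically from the GTID sets instead of a binlog file name and offset.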
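The same statement is needed again for every manual switch later in this guide, only with a different master host. A hedged sketch of a generator for it follows; `change_master_sql` and the `REPL_PASSWORD` environment variable are assumptions made for illustration, and the password is not escaped, so it must not contain a single quote.

```shell
#!/usr/bin/env bash
# Print a GTID-based CHANGE MASTER TO statement for the given master host.
# change_master_sql is a hypothetical helper; the password is read from the
# REPL_PASSWORD variable (an assumption) rather than hard-coded here.
change_master_sql() {
    local host="$1" port="${2:-3306}" user="${3:-rpl_user}"
    if [ -z "${REPL_PASSWORD:-}" ]; then
        echo "set REPL_PASSWORD first" >&2
        return 1
    fi
    printf "CHANGE MASTER TO MASTER_HOST='%s', MASTER_PORT=%s, MASTER_USER='%s', MASTER_PASSWORD='%s', MASTER_AUTO_POSITION=1;\n" \
        "$host" "$port" "$user" "$REPL_PASSWORD"
}

# Usage: REPL_PASSWORD=... change_master_sql node1
```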

8. Create a test database on the master

create database test;

use test;

create table test (id int primary key);

insert into test values(1);

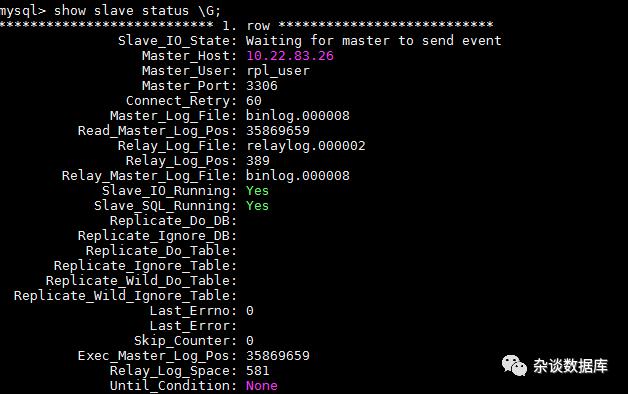

9. Start replication on the slaves and check its status

START SLAVE;

SHOW SLAVE STATUS\G
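The two fields that matter most in that output are Slave_IO_Running and Slave_SQL_Running, which should both be Yes. As a rough sketch, the check can be scripted by piping the output through a filter; `repl_threads_ok` is a hypothetical helper and it only looks at those two fields.

```shell
#!/usr/bin/env bash
# Read `SHOW SLAVE STATUS\G` output on stdin and succeed only when both
# replication threads report "Yes". repl_threads_ok is a hypothetical helper.
repl_threads_ok() {
    awk -F': *' '
        { gsub(/^[ \t]+/, "", $1) }               # strip the left padding of the field name
        $1 == "Slave_IO_Running"  { io  = $2 }
        $1 == "Slave_SQL_Running" { sql = $2 }
        END { exit !(io == "Yes" && sql == "Yes") }
    '
}

# Usage: mysql -uroot -p -e 'SHOW SLAVE STATUS\G' | repl_threads_ok && echo "replication running"
```

A full health check would also look at fields such as Seconds_Behind_Master and Last_Error, which this sketch ignores.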

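10. Enable super_read_only on the slaves

set global super_read_only=1;

With this set, the slave rejects client writes, including those from users with the SUPER privilege.

11. Test a manual master/slave switch

On slave node2 (the new master):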

stop slave;

set global read_only=0;

On the old master, node1:

set global super_read_only=1;

change master to master_host='10.22.83.26',master_port=3306,master_user='rpl_user',master_password='R00t_123',MASTER_AUTO_POSITION=1;

start slave;

show slave status\G

On slave node3:

show slave status\G

stop slave;

change master to master_host='10.22.83.26',master_port=3306,master_user='rpl_user',master_password='R00t_123',MASTER_AUTO_POSITION=1;

start slave;

show slave status\G

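12. Switch the master back to node1 (repeat step 11 with the roles of node1 and node2 reversed)

III. Deploy the Data Node and Manager Node packages

1. Install the dependency packages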

yum -y install perl-DBD-MySQL

yum -y install perl-ExtUtils-MakeMaker

yum -y install perl-ExtUtils-CBuilder

yum -y install perl-CPAN

yum -y install perl-Config-Tiny

yum -y install perl-DBI*

yum -y install perl-Log*

yum -y install perl-Param*

yum -y install perl-Mail*

yum -y install perl-Class*

yum -y install perl-Sys*

If a package is not available through yum, download the RPM (for example via http://rpmfind.net/linux/rpm2html/search.php) and install it manually:

rpm -ivh perl-DBD-MySQL-4.023-5.el7.x86_64.rpm

rpm -ivh perl-Config-Tiny-2.14-7.el7.noarch.rpm

rpm -ivh perl-Log-Dispatch-2.41-1.el7.1.noarch.rpm

rpm -ivh perl-MIME-Types-1.38-2.el7.noarch.rpm

rpm -ivh perl-MIME-Lite-3.030-1.el7.noarch.rpm

rpm -ivh perl-Mail-Sender-0.8.23-1.el7.noarch.rpm

rpm -ivh perl-Parallel-ForkManager-1.18-2.el7.noarch.rpm

rpm -ivh perl-Mail-Sendmail-0.79-21.el7.noarch.rpm

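2. Install the data node package on node1, node2 and node3 (the RPM was supplied as an attachment to the original post)

rpm -ivh mha4mysql-node-0.57-0.el7.noarch.rpm

3. Install the manager node package on node3 (also supplied as an attachment)

rpm -ivh mha4mysql-manager-0.57-0.el7.noarch.rpm

Verify the manager version: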

masterha_manager -v

root@node3[/soft]$ masterha_manager -v

masterha_manager version 0.57.

IV. Configure the manager node

0. Set up passwordless SSH for root between the three nodes

Run on all three nodes:

ssh-keygen -t rsa

ssh-copy-id -i ~/.ssh/id_rsa.pub root@node1

ssh-copy-id -i ~/.ssh/id_rsa.pub root@node2

ssh-copy-id -i ~/.ssh/id_rsa.pub root@node3

1. Create the configuration directories

mkdir -p /etc/mha

cd /etc/mha

mkdir conf

mkdir log

mkdir scripts

2. Create the configuration file /etc/mha/conf/mha.cnf

root@node3[/etc/mha/conf]$ cat mha.cnf

[server default]

manager_log=/etc/mha/log/manager.log

manager_workdir=/etc/mha/log

# failover and switchover scripts

master_ip_failover_script=/etc/mha/scripts/master_ip_failover

master_ip_online_change_script=/etc/mha/scripts/master_ip_online_change

password=R00t_123

# Interval, in seconds, between health-check pings to the master

ping_interval=5

repl_password=R00t_123

repl_user=rpl_user

ssh_user=root

user=root

# masters

[server1]

hostname=10.22.83.42

port=3306

# candidate_master=1 means this node is preferred when a new master is chosen

candidate_master=1

master_binlog_dir=/data1/binlog

remote_workdir=/etc/mha/log

[server2]

hostname=10.22.83.26

port=3306

candidate_master=1

check_repl_delay=0

master_binlog_dir=/data1/binlog

remote_workdir=/etc/mha/log

[server3]

hostname=10.22.83.28

port=3306
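Before running the MHA checks it can help to confirm which hosts the file actually declares, especially since a later failover rewrites it. The following is a sketch under the assumption that the file uses the `key=value` layout shown above, with no spaces around `=`; `mha_list_servers` is a hypothetical helper, not an MHA command.

```shell
#!/usr/bin/env bash
# Print "section hostname" for every [serverN] block in an MHA config file.
# mha_list_servers is a hypothetical helper, not an MHA command.
mha_list_servers() {
    awk '
        # Remember the current section name, without the brackets.
        /^\[.*\]$/ { section = substr($0, 2, length($0) - 2); next }
        # Only report hostname lines inside [server1], [server2], ...
        section ~ /^server[0-9]+$/ && /^hostname=/ {
            sub(/^hostname=/, "")
            print section, $0
        }
    ' "$1"
}

# Usage: mha_list_servers /etc/mha/conf/mha.cnf
```

The `[server default]` section is skipped deliberately, because its name does not match `server` followed by digits.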

3. Create the failover and switchover scripts

root@node3[/etc/mha/scripts]$ cat master_ip_failover

#!/usr/bin/env perl

use strict;

use warnings FATAL => 'all';

use Getopt::Long;

my (

$command, $ssh_user, $orig_master_host, $orig_master_ip,

$orig_master_port, $new_master_host, $new_master_ip, $new_master_port

);

my $vip = '10.22.83.55'; # floating (virtual) IP

my $key = "1";

my $ssh_start_vip = "/sbin/ifconfig eth0:$key $vip";

my $ssh_stop_vip = "/sbin/ifconfig eth0:$key down";

GetOptions(

'command=s' => \$command,

'ssh_user=s' => \$ssh_user,

'orig_master_host=s' => \$orig_master_host,

'orig_master_ip=s' => \$orig_master_ip,

'orig_master_port=i' => \$orig_master_port,

'new_master_host=s' => \$new_master_host,

'new_master_ip=s' => \$new_master_ip,

'new_master_port=i' => \$new_master_port,

);

exit &main();

sub main {

print "\n\nIN SCRIPT TEST====$ssh_stop_vip==$ssh_start_vip===\n\n";

if ( $command eq "stop" || $command eq "stopssh" ) {

# $orig_master_host, $orig_master_ip, $orig_master_port are passed.

# If you manage master ip address at global catalog database,

# invalidate orig_master_ip here.

my $exit_code = 1;

eval {

print "Disabling the VIP on old master: $orig_master_host \n";

&stop_vip();

$exit_code = 0;

};

if ($@) {

warn "Got Error: $@\n";

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "start" ) {

# all arguments are passed.

# If you manage master ip address at global catalog database,

# activate new_master_ip here.

# You can also grant write access (create user, set read_only=0, etc) here.

my $exit_code = 10;

eval {

print "Enabling the VIP - $vip on the new master - $new_master_host \n";

&start_vip();

$exit_code = 0;

};

if ($@) {

warn $@;

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "status" ) {

print "Checking the Status of the script.. OK \n";

`ssh $ssh_user\@$orig_master_host \" $ssh_start_vip \"`;

exit 0;

}

else {

&usage();

exit 1;

}

}

# A simple system call that enable the VIP on the new master

sub start_vip() {

`ssh $ssh_user\@$new_master_host \" $ssh_start_vip \"`;

}

# A simple system call that disable the VIP on the old_master

sub stop_vip() {

`ssh $ssh_user\@$orig_master_host \" $ssh_stop_vip \"`;

}

sub usage {

print "Usage: master_ip_failover --command=start|stop|stopssh|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --new_master_host=host --new_master_ip=ip --new_master_port=port\n";

}

root@node3[/etc/mha/scripts]$ cat master_ip_online_change

#!/usr/bin/env perl

## Note: This is a sample script and is not complete. Modify the script based on your environment.

use strict;

use warnings FATAL => 'all';

use Getopt::Long;

use MHA::DBHelper;

use MHA::NodeUtil;


use Time::HiRes qw(sleep gettimeofday tv_interval);

use Data::Dumper;

my $_tstart;

my $_running_interval = 0.1;

my (

$command, $orig_master_host, $orig_master_ip,

$orig_master_port, $orig_master_user,

$new_master_host, $new_master_ip, $new_master_port,

$new_master_user,

);

my $vip = '10.22.83.55'; # Virtual IP

my $key = "1";

my $ssh_start_vip = "/sbin/ifconfig eth0:$key $vip";

my $ssh_stop_vip = "/sbin/ifconfig eth0:$key down";

my $ssh_user = "root";

my $new_master_password = "R00t_123";

my $orig_master_password = "R00t_123";

GetOptions(

'command=s' =>\$command,

#'ssh_user=s' => \$ssh_user,

'orig_master_host=s' =>\$orig_master_host,

'orig_master_ip=s' =>\$orig_master_ip,

'orig_master_port=i' =>\$orig_master_port,

'orig_master_user=s' =>\$orig_master_user,

#'orig_master_password=s' => \$orig_master_password,

'new_master_host=s' =>\$new_master_host,

'new_master_ip=s' =>\$new_master_ip,

'new_master_port=i' =>\$new_master_port,

'new_master_user=s' =>\$new_master_user,

#'new_master_password=s' =>\$new_master_password,

);

exit &main();

sub current_time_us {

my ($sec, $microsec ) = gettimeofday();

my $curdate = localtime($sec);

return $curdate . " " . sprintf( "%06d", $microsec);

}

sub sleep_until {

my $elapsed = tv_interval($_tstart);

if ($_running_interval > $elapsed ) {

sleep( $_running_interval - $elapsed );

}

}

sub get_threads_util {

my $dbh = shift;

my $my_connection_id = shift;

my $running_time_threshold = shift;

my $type = shift;

$running_time_threshold = 0 unless ($running_time_threshold);

$type = 0 unless ($type);

my @threads;

my $sth = $dbh->prepare("SHOW PROCESSLIST");

$sth->execute();

while ( my $ref = $sth->fetchrow_hashref() ) {

my $id = $ref->{Id};

my $user = $ref->{User};

my $host = $ref->{Host};

my $command = $ref->{Command};

my $state = $ref->{State};

my $query_time = $ref->{Time};

my $info = $ref->{Info};

$info =~ s/^\s*(.*?)\s*$/$1/ if defined($info);

next if ( $my_connection_id == $id );

next if ( defined($query_time) && $query_time <$running_time_threshold );

next if ( defined($command) && $command eq "Binlog Dump" );

next if ( defined($user) && $user eq "system user" );

next

if ( defined($command)

&& $command eq "Sleep"

&& defined($query_time)

&& $query_time >= 1 );

if( $type >= 1 ) {

next if ( defined($command) && $command eq "Sleep" );

next if ( defined($command) && $command eq "Connect" );

}

if( $type >= 2 ) {

next if ( defined($info) && $info =~ m/^select/i );

next if ( defined($info) && $info =~ m/^show/i );

}

push @threads, $ref;

}

return @threads;

}

sub main {

if ($command eq "stop" ) {

##Gracefully killing connections on the current master

#1. Set read_only= 1 on the new master

#2. DROP USER so that no app user can establish new connections

#3. Set read_only= 1 on the current master

#4. Kill current queries

#* Any database access failure will result in script die.

my $exit_code = 1;

eval {

## Setting read_only=1 on the new master (to avoid accident)

my $new_master_handler = new MHA::DBHelper();

# args: hostname, port, user, password, raise_error(die_on_error)_or_not

$new_master_handler->connect( $new_master_ip, $new_master_port,

$new_master_user, $new_master_password, 1 );

print current_time_us() . " Set read_only on the new master..";

$new_master_handler->enable_read_only();

if ( $new_master_handler->is_read_only() ) {

print "ok.\n";

}

else {

die "Failed!\n";

}

$new_master_handler->disconnect();

# Connecting to the orig master, die if any database error happens

my $orig_master_handler = new MHA::DBHelper();

$orig_master_handler->connect( $orig_master_ip, $orig_master_port,

$orig_master_user, $orig_master_password, 1 );

## Drop application user so that nobody can connect. Disabling per-session binlog beforehand

#$orig_master_handler->disable_log_bin_local();

#print current_time_us() . " Dropping app user on the orig master..\n";

#FIXME_xxx_drop_app_user($orig_master_handler);

## Waiting for N * 100 milliseconds so that current connections can exit

my $time_until_read_only = 15;

$_tstart = [gettimeofday];

my @threads = get_threads_util( $orig_master_handler->{dbh},

$orig_master_handler->{connection_id} );

while ( $time_until_read_only > 0 && $#threads >= 0 ) {

if ( $time_until_read_only % 5 == 0 ) {

printf "%s Waiting all running %d threads are disconnected.. (max %d milliseconds)\n",

current_time_us(), $#threads + 1, $time_until_read_only * 100;

if ( $#threads < 5 ) {

print Data::Dumper->new( [$_] )->Indent(0)->Terse(1)->Dump ."\n"

foreach (@threads);

}

}

sleep_until();

$_tstart = [gettimeofday];

$time_until_read_only--;

@threads = get_threads_util( $orig_master_handler->{dbh},

$orig_master_handler->{connection_id} );

}

## Setting read_only=1 on the current master so that nobody (except SUPER) can write

print current_time_us() . " Set read_only=1 on the orig master..";

$orig_master_handler->enable_read_only();

if ( $orig_master_handler->is_read_only() ) {

print "ok.\n";

}

else {

die "Failed!\n";

}

## Waiting for M * 100 milliseconds so that current update queries can complete

my $time_until_kill_threads = 5;

@threads = get_threads_util( $orig_master_handler->{dbh},

$orig_master_handler->{connection_id} );

while ( $time_until_kill_threads > 0 && $#threads >= 0 ) {

if ( $time_until_kill_threads % 5 == 0 ) {

printf "%s Waiting all running %d queries are disconnected.. (max %d milliseconds)\n",

current_time_us(), $#threads + 1, $time_until_kill_threads * 100;

if ( $#threads < 5 ) {

print Data::Dumper->new( [$_] )->Indent(0)->Terse(1)->Dump ."\n"

foreach (@threads);

}

}

sleep_until();

$_tstart = [gettimeofday];

$time_until_kill_threads--;

@threads = get_threads_util( $orig_master_handler->{dbh},

$orig_master_handler->{connection_id} );

}

print "Disabling the VIP on old master: $orig_master_host \n";

&stop_vip();

## Terminating all threads

print current_time_us() . " Killing all application threads..\n";

$orig_master_handler->kill_threads(@threads) if ( $#threads >= 0);

print current_time_us() . " done.\n";

#$orig_master_handler->enable_log_bin_local();

$orig_master_handler->disconnect();

## After finishing the script, MHA executes FLUSH TABLES WITH READ LOCK

$exit_code = 0;

};

if($@) {

warn "Got Error: $@\n";

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "start" ) {

##Activating master ip on the new master

#1. Create app user with write privileges

#2. Moving backup script if needed

#3. Register new master's ip to the catalog database

# We don't return an error even though activating updatable accounts/ip failed, so that we don't interrupt slaves' recovery.

# If exit code is 0 or 10, MHA does not abort

my $exit_code = 10;

eval {

my $new_master_handler = new MHA::DBHelper();

# args: hostname, port, user, password, raise_error_or_not

$new_master_handler->connect( $new_master_ip, $new_master_port,

$new_master_user, $new_master_password, 1 );

## Set read_only=0 on the new master

#$new_master_handler->disable_log_bin_local();

print current_time_us() . " Set read_only=0 on the new master.\n";

$new_master_handler->disable_read_only();

## Creating an app user on the new master

#print current_time_us() . " Creating app user on the new master..\n";

#FIXME_xxx_create_app_user($new_master_handler);

#$new_master_handler->enable_log_bin_local();

$new_master_handler->disconnect();

## Update master ip on the catalog database, etc

print "Enabling the VIP - $vip on the new master - $new_master_host \n";

&start_vip();

$exit_code = 0;

};

if($@) {

warn "Got Error: $@\n";

exit $exit_code;

}

exit $exit_code;

}

elsif ( $command eq "status" ) {

#do nothing

exit 0;

}

else{

&usage();

exit 1;

}

}

# A simple system call that enables the VIP on the new master

sub start_vip() {

`ssh $ssh_user\@$new_master_host \" $ssh_start_vip \"`;

}

# A simple system call that disables the VIP on the old master

sub stop_vip() {

`ssh $ssh_user\@$orig_master_host \" $ssh_stop_vip \"`;

}

sub usage {

print "Usage: master_ip_online_change --command=start|stop|status --orig_master_host=host --orig_master_ip=ip --orig_master_port=port --new_master_host=host --new_master_ip=ip --new_master_port=port\n";

die;

}

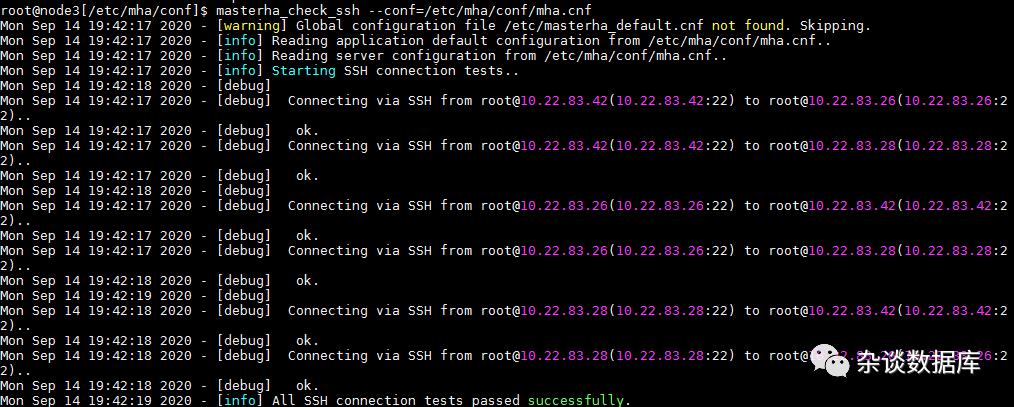

4. 检查MHA配置

(1)ssh免密检查

masterha_check_ssh --conf=/etc/mha/conf/mha.cnf

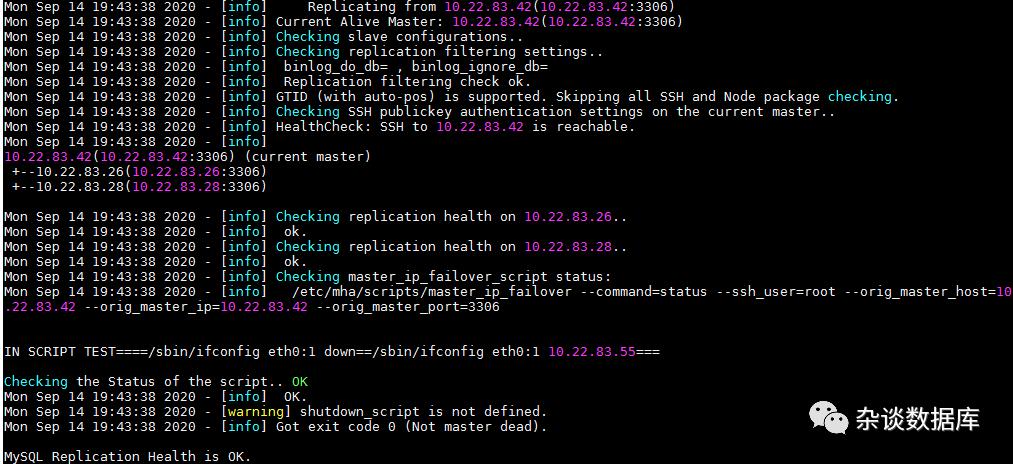

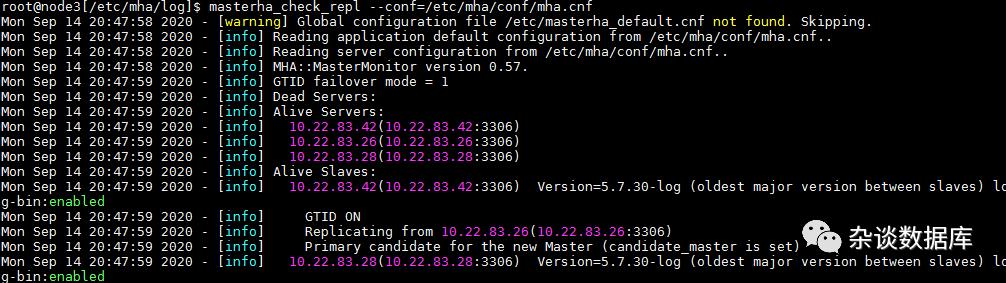

(2)各节点复制情况检查

masterha_check_repl --conf=/etc/mha/conf/mha.cnf

(3)检查manager的状态

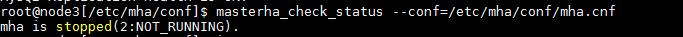

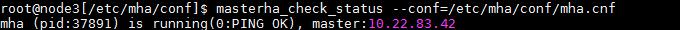

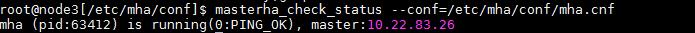

masterha_check_status --conf=/etc/mha/conf/mha.cnf

5. Start the manager

nohup masterha_manager --conf=/etc/mha/conf/mha.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /etc/mha/log/manager.log 2>&1 &

6. Check the log

vi /etc/mha/log/manager.log

The VIP should now be up on node1.

7. Check the manager status again

masterha_check_status --conf=/etc/mha/conf/mha.cnf

V. Master failover test

1. Stop the mysqld process on the master, node1

systemctl stop mysqld

2. Check manager.log

Mon Sep 14 20:09:04 2020 - [warning] Got error on MySQL connect: 2003 (Can't connect to MySQL server on '10.22.83.42' (111))

Mon Sep 14 20:09:04 2020 - [warning] Connection failed 4 time(s)..

Mon Sep 14 20:09:04 2020 - [warning] Master is not reachable from health checker!

Mon Sep 14 20:09:04 2020 - [warning] Master 10.22.83.42(10.22.83.42:3306) is not reachable!

Mon Sep 14 20:09:04 2020 - [warning] SSH is reachable.

Mon Sep 14 20:09:04 2020 - [info] Connecting to a master server failed. Reading configuration file /etc/masterha_default.cnf and /etc/mha/conf/mha.cnf again, and trying to connect to all servers to check server status..

Mon Sep 14 20:09:04 2020 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Mon Sep 14 20:09:04 2020 - [info] Reading application default configuration from /etc/mha/conf/mha.cnf..

Mon Sep 14 20:09:04 2020 - [info] Reading server configuration from /etc/mha/conf/mha.cnf..

Mon Sep 14 20:09:05 2020 - [info] GTID failover mode = 1

Mon Sep 14 20:09:05 2020 - [info] Dead Servers:

Mon Sep 14 20:09:05 2020 - [info] 10.22.83.42(10.22.83.42:3306)

Mon Sep 14 20:09:05 2020 - [info] Alive Servers:

Mon Sep 14 20:09:05 2020 - [info] 10.22.83.26(10.22.83.26:3306)

Mon Sep 14 20:09:05 2020 - [info] 10.22.83.28(10.22.83.28:3306)

Mon Sep 14 20:09:05 2020 - [info] Alive Slaves:

Mon Sep 14 20:09:05 2020 - [info] 10.22.83.26(10.22.83.26:3306) Version=5.7.30-log (oldest major version between slaves) log-bin:enabled

Mon Sep 14 20:09:05 2020 - [info] GTID ON

Mon Sep 14 20:09:05 2020 - [info] Replicating from 10.22.83.42(10.22.83.42:3306)

Mon Sep 14 20:09:05 2020 - [info] Primary candidate for the new Master (candidate_master is set)

Mon Sep 14 20:09:05 2020 - [info] 10.22.83.28(10.22.83.28:3306) Version=5.7.30-log (oldest major version between slaves) log-bin:enabled

Mon Sep 14 20:09:05 2020 - [info] GTID ON

Mon Sep 14 20:09:05 2020 - [info] Replicating from 10.22.83.42(10.22.83.42:3306)

Mon Sep 14 20:09:05 2020 - [info] Checking slave configurations..

Mon Sep 14 20:09:05 2020 - [info] Checking replication filtering settings..

Mon Sep 14 20:09:05 2020 - [info] Replication filtering check ok.

Mon Sep 14 20:09:05 2020 - [info] Master is down!

Mon Sep 14 20:09:05 2020 - [info] Terminating monitoring script.

Mon Sep 14 20:09:05 2020 - [info] Got exit code 20 (Master dead).

Mon Sep 14 20:09:05 2020 - [info] MHA::MasterFailover version 0.57.

Mon Sep 14 20:09:05 2020 - [info] Starting master failover.

Mon Sep 14 20:09:05 2020 - [info]

Mon Sep 14 20:09:05 2020 - [info] * Phase 1: Configuration Check Phase..

Mon Sep 14 20:09:05 2020 - [info]

Mon Sep 14 20:09:06 2020 - [info] GTID failover mode = 1

Mon Sep 14 20:09:06 2020 - [info] Dead Servers:

Mon Sep 14 20:09:06 2020 - [info] 10.22.83.42(10.22.83.42:3306)

Mon Sep 14 20:09:06 2020 - [info] Checking master reachability via MySQL(double check)...

Mon Sep 14 20:09:06 2020 - [info] ok.

Mon Sep 14 20:09:06 2020 - [info] Alive Servers:

Mon Sep 14 20:09:06 2020 - [info] 10.22.83.26(10.22.83.26:3306)

Mon Sep 14 20:09:06 2020 - [info] 10.22.83.28(10.22.83.28:3306)

Mon Sep 14 20:09:06 2020 - [info] Alive Slaves:

Mon Sep 14 20:09:06 2020 - [info] 10.22.83.26(10.22.83.26:3306) Version=5.7.30-log (oldest major version between slaves) log-bin:enabled

Mon Sep 14 20:09:06 2020 - [info] GTID ON

Mon Sep 14 20:09:06 2020 - [info] Replicating from 10.22.83.42(10.22.83.42:3306)

Mon Sep 14 20:09:06 2020 - [info] Primary candidate for the new Master (candidate_master is set)

Mon Sep 14 20:09:06 2020 - [info] 10.22.83.28(10.22.83.28:3306) Version=5.7.30-log (oldest major version between slaves) log-bin:enabled

Mon Sep 14 20:09:06 2020 - [info] GTID ON

Mon Sep 14 20:09:06 2020 - [info] Replicating from 10.22.83.42(10.22.83.42:3306)

Mon Sep 14 20:09:06 2020 - [info] Starting GTID based failover.

Mon Sep 14 20:09:06 2020 - [info]

Mon Sep 14 20:09:06 2020 - [info] ** Phase 1: Configuration Check Phase completed.

Mon Sep 14 20:09:06 2020 - [info]

Mon Sep 14 20:09:06 2020 - [info] * Phase 2: Dead Master Shutdown Phase..

Mon Sep 14 20:09:06 2020 - [info]

Mon Sep 14 20:09:06 2020 - [info] Forcing shutdown so that applications never connect to the current master..

Mon Sep 14 20:09:06 2020 - [info] Executing master IP deactivation script:

Mon Sep 14 20:09:06 2020 - [info] /etc/mha/scripts/master_ip_failover --orig_master_host=10.22.83.42 --orig_master_ip=10.22.83.42 --orig_master_port=3306 --command=stopssh --ssh_user=root

IN SCRIPT TEST====/sbin/ifconfig eth0:1 down==/sbin/ifconfig eth0:1 10.22.83.55===

Disabling the VIP on old master: 10.22.83.42

Mon Sep 14 20:09:07 2020 - [info] done.

Mon Sep 14 20:09:07 2020 - [warning] shutdown_script is not set. Skipping explicit shutting down of the dead master.

Mon Sep 14 20:09:07 2020 - [info] * Phase 2: Dead Master Shutdown Phase completed.

Mon Sep 14 20:09:07 2020 - [info]

Mon Sep 14 20:09:07 2020 - [info] * Phase 3: Master Recovery Phase..

Mon Sep 14 20:09:07 2020 - [info]

Mon Sep 14 20:09:07 2020 - [info] * Phase 3.1: Getting Latest Slaves Phase..

Mon Sep 14 20:09:07 2020 - [info]

Mon Sep 14 20:09:07 2020 - [info] The latest binary log file/position on all slaves is binlog.000007:36391394

Mon Sep 14 20:09:07 2020 - [info] Retrieved Gtid Set: ea1a905f-f1cd-11ea-b73a-fa163e402af4:18-50201

Mon Sep 14 20:09:07 2020 - [info] Latest slaves (Slaves that received relay log files to the latest):

Mon Sep 14 20:09:07 2020 - [info] 10.22.83.26(10.22.83.26:3306) Version=5.7.30-log (oldest major version between slaves) log-bin:enabled

Mon Sep 14 20:09:07 2020 - [info] GTID ON

Mon Sep 14 20:09:07 2020 - [info] Replicating from 10.22.83.42(10.22.83.42:3306)

Mon Sep 14 20:09:07 2020 - [info] Primary candidate for the new Master (candidate_master is set)

Mon Sep 14 20:09:07 2020 - [info] 10.22.83.28(10.22.83.28:3306) Version=5.7.30-log (oldest major version between slaves) log-bin:enabled

Mon Sep 14 20:09:07 2020 - [info] GTID ON

Mon Sep 14 20:09:07 2020 - [info] Replicating from 10.22.83.42(10.22.83.42:3306)

Mon Sep 14 20:09:07 2020 - [info] The oldest binary log file/position on all slaves is binlog.000007:36391394

Mon Sep 14 20:09:07 2020 - [info] Retrieved Gtid Set: ea1a905f-f1cd-11ea-b73a-fa163e402af4:18-50201

Mon Sep 14 20:09:07 2020 - [info] Oldest slaves:

Mon Sep 14 20:09:07 2020 - [info] 10.22.83.26(10.22.83.26:3306) Version=5.7.30-log (oldest major version between slaves) log-bin:enabled

Mon Sep 14 20:09:07 2020 - [info] GTID ON

Mon Sep 14 20:09:07 2020 - [info] Replicating from 10.22.83.42(10.22.83.42:3306)

Mon Sep 14 20:09:07 2020 - [info] Primary candidate for the new Master (candidate_master is set)

Mon Sep 14 20:09:07 2020 - [info] 10.22.83.28(10.22.83.28:3306) Version=5.7.30-log (oldest major version between slaves) log-bin:enabled

Mon Sep 14 20:09:07 2020 - [info] GTID ON

Mon Sep 14 20:09:07 2020 - [info] Replicating from 10.22.83.42(10.22.83.42:3306)

Mon Sep 14 20:09:07 2020 - [info]

Mon Sep 14 20:09:07 2020 - [info] * Phase 3.3: Determining New Master Phase..

Mon Sep 14 20:09:07 2020 - [info]

Mon Sep 14 20:09:07 2020 - [info] Searching new master from slaves..

Mon Sep 14 20:09:07 2020 - [info] Candidate masters from the configuration file:

Mon Sep 14 20:09:07 2020 - [info] 10.22.83.26(10.22.83.26:3306) Version=5.7.30-log (oldest major version between slaves) log-bin:enabled

Mon Sep 14 20:09:07 2020 - [info] GTID ON

Mon Sep 14 20:09:07 2020 - [info] Replicating from 10.22.83.42(10.22.83.42:3306)

Mon Sep 14 20:09:07 2020 - [info] Primary candidate for the new Master (candidate_master is set)

Mon Sep 14 20:09:07 2020 - [info] Non-candidate masters:

Mon Sep 14 20:09:07 2020 - [info] Searching from candidate_master slaves which have received the latest relay log events..

Mon Sep 14 20:09:07 2020 - [info] New master is 10.22.83.26(10.22.83.26:3306)

Mon Sep 14 20:09:07 2020 - [info] Starting master failover..

Mon Sep 14 20:09:07 2020 - [info]

From:

10.22.83.42(10.22.83.42:3306) (current master)

+--10.22.83.26(10.22.83.26:3306)

+--10.22.83.28(10.22.83.28:3306)

To:

10.22.83.26(10.22.83.26:3306) (new master)

+--10.22.83.28(10.22.83.28:3306)

Mon Sep 14 20:09:07 2020 - [info]

Mon Sep 14 20:09:07 2020 - [info] * Phase 3.3: New Master Recovery Phase..

Mon Sep 14 20:09:07 2020 - [info]

Mon Sep 14 20:09:07 2020 - [info] Waiting all logs to be applied..

Mon Sep 14 20:09:07 2020 - [info] done.

Mon Sep 14 20:09:07 2020 - [info] Getting new master's binlog name and position..

Mon Sep 14 20:09:07 2020 - [info] binlog.000008:35869659

Mon Sep 14 20:09:07 2020 - [info] All other slaves should start replication from here. Statement should be: CHANGE MASTER TO MASTER_HOST='10.22.83.26', MASTER_PORT=3306, MASTER_AUTO_POSITION=1, MASTER_USER='rpl_user', MASTER_PASSWORD='xxx';

Mon Sep 14 20:09:07 2020 - [info] Master Recovery succeeded. File:Pos:Exec_Gtid_Set: binlog.000008, 35869659, 705c2fa8-f1ce-11ea-8748-fa163e59c975:1-3,

ea1a905f-f1cd-11ea-b73a-fa163e402af4:1-50201,

f7152681-f1cd-11ea-979f-fa163e4b3bee:1

Mon Sep 14 20:09:07 2020 - [info] Executing master IP activate script:

Mon Sep 14 20:09:07 2020 - [info] /etc/mha/scripts/master_ip_failover --command=start --ssh_user=root --orig_master_host=10.22.83.42 --orig_master_ip=10.22.83.42 --orig_master_port=3306 --new_master_host=10.22.83.26 --new_master_ip=10.22.83.26 --new_master_port=3306 --new_master_user='root' --new_master_password=xxx

Unknown option: new_master_user

Unknown option: new_master_password

IN SCRIPT TEST====/sbin/ifconfig eth0:1 down==/sbin/ifconfig eth0:1 10.22.83.55===

Enabling the VIP - 10.22.83.55 on the new master - 10.22.83.26

Mon Sep 14 20:09:07 2020 - [info] OK.

Mon Sep 14 20:09:07 2020 - [info] Setting read_only=0 on 10.22.83.26(10.22.83.26:3306)..

Mon Sep 14 20:09:07 2020 - [info] ok.

Mon Sep 14 20:09:07 2020 - [info] ** Finished master recovery successfully.

Mon Sep 14 20:09:07 2020 - [info] * Phase 3: Master Recovery Phase completed.

Mon Sep 14 20:09:07 2020 - [info]

Mon Sep 14 20:09:07 2020 - [info] * Phase 4: Slaves Recovery Phase..

Mon Sep 14 20:09:07 2020 - [info]

Mon Sep 14 20:09:07 2020 - [info]

Mon Sep 14 20:09:07 2020 - [info] * Phase 4.1: Starting Slaves in parallel..

Mon Sep 14 20:09:07 2020 - [info]

Mon Sep 14 20:09:07 2020 - [info] -- Slave recovery on host 10.22.83.28(10.22.83.28:3306) started, pid: 48569. Check tmp log /etc/mha/log/10.22.83.28_3306_20200914200905.log if it takes time..

Mon Sep 14 20:09:08 2020 - [info]

Mon Sep 14 20:09:08 2020 - [info] Log messages from 10.22.83.28 ...

Mon Sep 14 20:09:08 2020 - [info]

Mon Sep 14 20:09:07 2020 - [info] Resetting slave 10.22.83.28(10.22.83.28:3306) and starting replication from the new master 10.22.83.26(10.22.83.26:3306)..

Mon Sep 14 20:09:07 2020 - [info] Executed CHANGE MASTER.

Mon Sep 14 20:09:07 2020 - [info] Slave started.

Mon Sep 14 20:09:07 2020 - [info] gtid_wait(705c2fa8-f1ce-11ea-8748-fa163e59c975:1-3,

ea1a905f-f1cd-11ea-b73a-fa163e402af4:1-50201,

f7152681-f1cd-11ea-979f-fa163e4b3bee:1) completed on 10.22.83.28(10.22.83.28:3306). Executed 0 events.

Mon Sep 14 20:09:08 2020 - [info] End of log messages from 10.22.83.28.

Mon Sep 14 20:09:08 2020 - [info] -- Slave on host 10.22.83.28(10.22.83.28:3306) started.

Mon Sep 14 20:09:08 2020 - [info] All new slave servers recovered successfully.

Mon Sep 14 20:09:08 2020 - [info]

Mon Sep 14 20:09:08 2020 - [info] * Phase 5: New master cleanup phase..

Mon Sep 14 20:09:08 2020 - [info]

Mon Sep 14 20:09:08 2020 - [info] Resetting slave info on the new master..

Mon Sep 14 20:09:08 2020 - [info] 10.22.83.26: Resetting slave info succeeded.

Mon Sep 14 20:09:08 2020 - [info] Master failover to 10.22.83.26(10.22.83.26:3306) completed successfully.

Mon Sep 14 20:09:08 2020 - [info] Deleted server1 entry from /etc/mha/conf/mha.cnf .

Mon Sep 14 20:09:08 2020 - [info]

----- Failover Report -----

mha: MySQL Master failover 10.22.83.42(10.22.83.42:3306) to 10.22.83.26(10.22.83.26:3306) succeeded

Master 10.22.83.42(10.22.83.42:3306) is down!

Check MHA Manager logs at node3:/etc/mha/log/manager.log for details.

Started automated(non-interactive) failover.

Invalidated master IP address on 10.22.83.42(10.22.83.42:3306)

Selected 10.22.83.26(10.22.83.26:3306) as a new master.

10.22.83.26(10.22.83.26:3306): OK: Applying all logs succeeded.

10.22.83.26(10.22.83.26:3306): OK: Activated master IP address.

10.22.83.28(10.22.83.28:3306): OK: Slave started, replicating from 10.22.83.26(10.22.83.26:3306)

10.22.83.26(10.22.83.26:3306): Resetting slave info succeeded.

Master failover to 10.22.83.26(10.22.83.26:3306) completed successfully.
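In a log this long, the line that matters most for follow-up work is the one naming the new master. A small sketch for pulling it out follows; `new_master_from_log` is a hypothetical helper, and it assumes the "New master is host(host:port)" wording seen in the log above.

```shell
#!/usr/bin/env bash
# Print the host chosen as new master in the most recent failover recorded in
# an MHA manager log. new_master_from_log is a hypothetical helper.
new_master_from_log() {
    # Keep only the host part before the "(" of lines like
    #   ... [info] New master is 10.22.83.26(10.22.83.26:3306)
    # and report the last such line in the file.
    sed -n 's/.* New master is \([^(]*\)(.*/\1/p' "$1" | tail -n 1
}

# Usage: new_master_from_log /etc/mha/log/manager.log
```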

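3. Check the mha.cnf file

The original master's [server1] section has been removed from mha.cnf, because the manager was started with --remove_dead_master_conf.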

root@node3[/etc/mha/conf]$ cat mha.cnf

[server default]

manager_log=/etc/mha/log/manager.log

manager_workdir=/etc/mha/log

master_ip_failover_script=/etc/mha/scripts/master_ip_failover

master_ip_online_change_script=/etc/mha/scripts/master_ip_online_change

password=R00t_123

ping_interval=5

repl_password=R00t_123

repl_user=rpl_user

ssh_user=root

user=root

[server2]

candidate_master=1

check_repl_delay=0

hostname=10.22.83.26

master_binlog_dir=/data1/binlog

port=3306

remote_workdir=/etc/mha/log

[server3]

hostname=10.22.83.28

port=3306

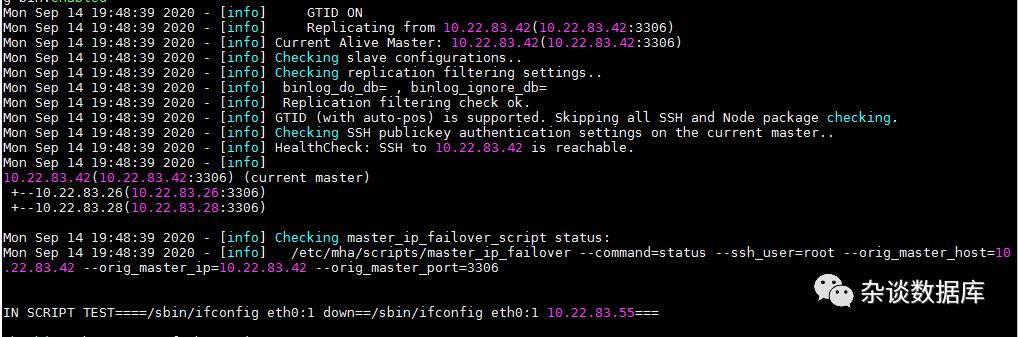

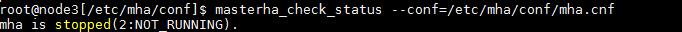

4. Check the current MHA status

masterha_check_status --conf=/etc/mha/conf/mha.cnf

MHA has stopped: masterha_manager exits once a failover has completed.

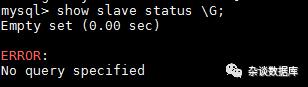

5. Check the slave status on the new master, node2

The replication settings on the new master have been cleared (RESET SLAVE).

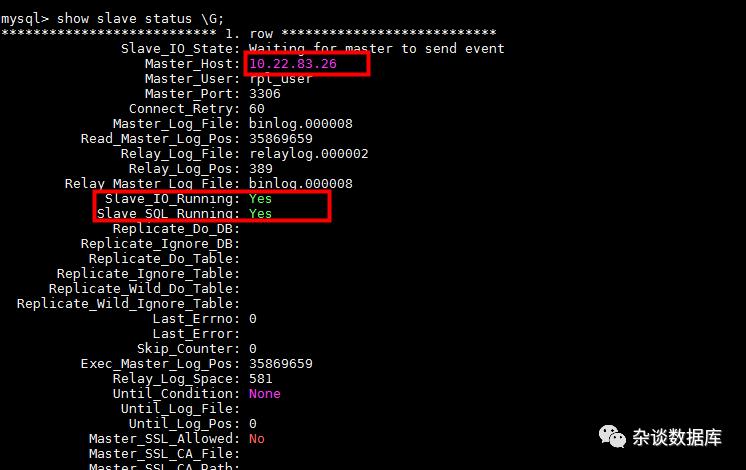

6. Check the slave status on node3

Its replication channel now points to node2.

7. Restore MHA

Restart MySQL on the original master (node1):

systemctl start mysqld

Point its replication channel at the new master:

change master to master_host='10.22.83.26',master_port=3306,master_user='rpl_user',master_password='R00t_123',MASTER_AUTO_POSITION=1;

Enable super_read_only:

set global super_read_only=1;

Start replication:

start slave;

Check the replication status (for example with show slave status\G).

Add the recovered node back to mha.cnf:

[server1]

hostname=10.22.83.42

port=3306

# candidate_master=1 means this node is preferred when a new master is chosen

candidate_master=1

master_binlog_dir=/data1/binlog

remote_workdir=/etc/mha/log
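Because every failover started with --remove_dead_master_conf deletes the dead master's section, this re-adding step recurs. As a sketch, it can be made repeatable with a helper that appends the section only when it is missing; `mha_add_server` is a hypothetical function, and the option values simply mirror the [server1] block above.

```shell
#!/usr/bin/env bash
# Append a [serverN] block to an MHA config file unless that section already exists.
# mha_add_server is a hypothetical helper; the option values mirror this guide.
mha_add_server() {
    local cnf="$1" section="$2" host="$3"
    # -x matches the whole line, -F treats the brackets literally.
    if grep -qxF "[$section]" "$cnf"; then
        echo "[$section] already present in $cnf" >&2
        return 0
    fi
    printf '\n[%s]\nhostname=%s\nport=3306\ncandidate_master=1\nmaster_binlog_dir=/data1/binlog\nremote_workdir=/etc/mha/log\n' \
        "$section" "$host" >> "$cnf"
}

# Usage: mha_add_server /etc/mha/conf/mha.cnf server1 10.22.83.42
```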

Check replication:

masterha_check_repl --conf=/etc/mha/conf/mha.cnf

Start MHA:

nohup masterha_manager --conf=/etc/mha/conf/mha.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /etc/mha/log/manager.log 2>&1 &

Check the MHA status:

masterha_check_status --conf=/etc/mha/conf/mha.cnf

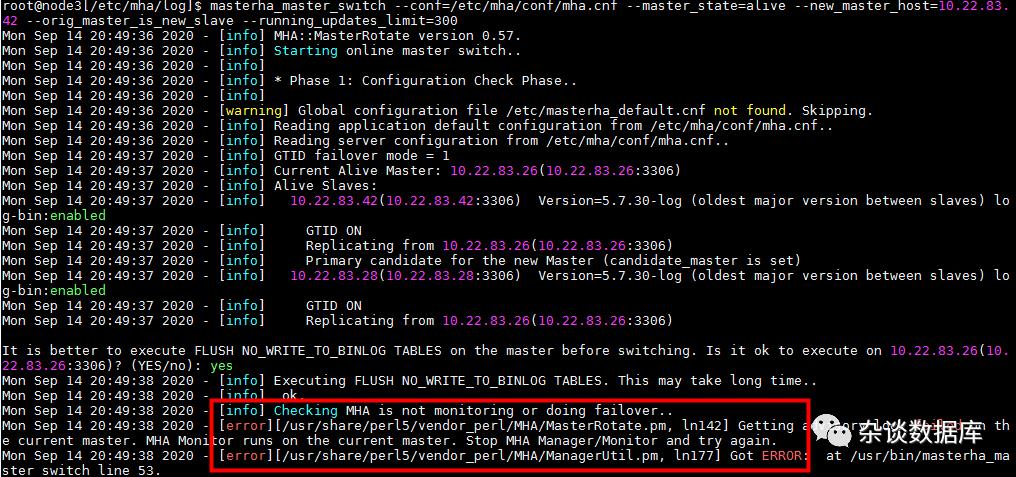

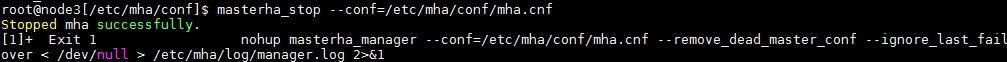

VI. Switchover test

1. Stop the running MHA manager first; otherwise the switchover fails with an error

masterha_stop --conf=/etc/mha/conf/mha.cnf

2. Check the MHA status

masterha_check_status --conf=/etc/mha/conf/mha.cnf

The status should be NOT_RUNNING.

3. Switch over to the original master, node1

masterha_master_switch --conf=/etc/mha/conf/mha.cnf --master_state=alive --new_master_host=10.22.83.42 --orig_master_is_new_slave --running_updates_limit=300

4. Check the VIP, read_only and the slave status

The VIP has moved to node1, and read_only and the slave status are as expected.

5. Start MHA and check its status

nohup masterha_manager --conf=/etc/mha/conf/mha.cnf --remove_dead_master_conf --ignore_last_failover < /dev/null > /etc/mha/log/manager.log 2>&1 &

masterha_check_status --conf=/etc/mha/conf/mha.cnf
