Posted: 大数据挖掘DT数据分析 (Big Data Mining DT Data Analysis)


This article was compiled by the editors of 小常识网 (cha138.com).


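Many developers give newcomers this advice: if you want to get started with machine learning, you must first understand how a few key algorithms work, and only then start practicing. I disagree.

I believe practice matters more than theory. The first thing a beginner should do is understand the workflow of the whole model: roughly how the data flows, which key nodes it passes through, and where the final result comes out. Then start practicing right away and build your own machine learning model. The inner workings of the algorithms and functions can be studied in more depth later, through practice, once you understand the overall process.

In this article, we will use TensorFlow to implement a text classification model based on a deep neural network (DNN). I hope it helps beginners.

Here is the tutorial itself: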

About TensorFlow

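TensorFlow is an open-source machine learning framework from Google. Its name describes its basic working principle: tensors (multidimensional arrays) flow in one direction between the nodes of a graph, from input to output.

In TensorFlow, every computation can be represented as a dataflow graph. Every dataflow graph has the following two important elements:

● a set of tf.Operation objects, which represent the computations themselves;

● a set of tf.Tensor objects, which represent the data the computations operate on.

The figure below uses a simple example to illustrate how a dataflow graph runs.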

![Dataflow graph example: x and y feed an add operation](https://image.cha138.com/20210424/7991c7eabd3f4d7bb1c0aa270809ebd5.jpg)

Suppose x = [1,3,6] and y = [1,1,1] in the figure. Because tf.Tensor objects represent the data being computed on, in TensorFlow we first define two constant tf.Tensor objects to hold the data. Then we use a tf.Operation to define the addition in the graph. The code is as follows:

```python
import tensorflow as tf

x = tf.constant([1, 3, 6])
y = tf.constant([1, 1, 1])
# tf.add creates an add Operation in the graph and returns its output tensor
op = tf.add(x, y)
```

We have now defined the two important elements of a dataflow graph, tf.Operation and tf.Tensor. So how do we build the graph itself? The code is as follows:

```python
import tensorflow as tf

my_graph = tf.Graph()

# Ops created inside this block are added to my_graph instead of the global default graph
with my_graph.as_default():
    x = tf.constant([1, 3, 6])
    y = tf.constant([1, 1, 1])
    op = tf.add(x, y)
```

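We have now finished defining the dataflow graph. In TensorFlow, a graph must be defined before any computation can run (that is, before data can be driven along the graph from node to node). TensorFlow further requires that this computation be managed through a tf.Session, so we also need to define a tf.Session object, that is, a session.

In TensorFlow, a tf.Session encapsulates the environment in which tf.Operation objects are executed and tf.Tensor objects are evaluated. So when defining a tf.Session object, we also pass in the corresponding dataflow graph (through the graph parameter). The code for this example is as follows: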

```python
import tensorflow as tf

my_graph = tf.Graph()
# Entering the session's `with` block also makes my_graph the default graph,
# so the ops below are added to my_graph
with tf.Session(graph=my_graph) as sess:
    x = tf.constant([1, 3, 6])
    y = tf.constant([1, 1, 1])
    op = tf.add(x, y)
```

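Once the tf.Session is defined, we can execute the dataflow graph with the tf.Session.run() method. run() receives the tf.Operation to execute through its fetches parameter, brings in all the tf.Tensor objects related to it, and recursively executes every operation that the requested one depends on. In this example the operation being executed is the addition. The code is as follows: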

```python
import tensorflow as tf

my_graph = tf.Graph()
with tf.Session(graph=my_graph) as sess:
    x = tf.constant([1, 3, 6])
    y = tf.constant([1, 1, 1])
    op = tf.add(x, y)
    result = sess.run(fetches=op)  # evaluates op and everything it depends on
    print(result)

# Output:
# [2 4 7]
```

As you can see, the result of the computation is [2 4 7].
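The recursive evaluation that run() performs can be illustrated without TensorFlow. The following is a toy, pure-Python sketch, not TensorFlow's implementation: the Constant and Add classes and the run() function are hypothetical names that only mimic the idea that evaluating a node first evaluates every node it depends on, with each node computed once per call.

```python
class Constant:
    """Source node holding a fixed value (plays the role of tf.constant)."""

    def __init__(self, value):
        self.value = list(value)
        self.inputs = []  # a constant depends on nothing

    def compute(self, input_values):
        return self.value


class Add:
    """Operation node: element-wise sum of two input nodes (plays the role of tf.add)."""

    def __init__(self, x, y):
        self.inputs = [x, y]

    def compute(self, input_values):
        a, b = input_values
        return [p + q for p, q in zip(a, b)]


def run(node, cache=None):
    """Evaluate `node`, recursively evaluating its dependencies first (each node once)."""
    if cache is None:
        cache = {}
    if node not in cache:
        input_values = [run(dep, cache) for dep in node.inputs]
        cache[node] = node.compute(input_values)
    return cache[node]


x = Constant([1, 3, 6])
y = Constant([1, 1, 1])
op = Add(x, y)
result = run(op)
print(result)  # [2, 4, 7]
```

The cache dictionary stands in for the fact that a node shared by several operations only needs to be computed once per run; nothing is computed until run() is called on a node.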

About the Prediction Model

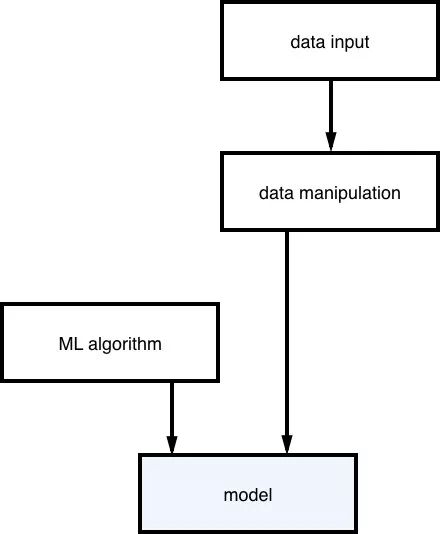

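Now that we understand the basic principles of TensorFlow, the next task is building a prediction model. Simply put, a machine learning algorithm plus data equals a prediction model. The process of building a prediction model is illustrated in the accompanying figure.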

About Neural Networks

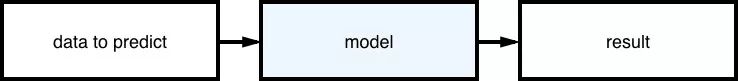

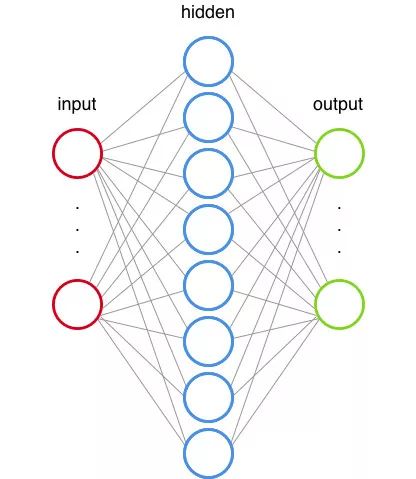

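In essence, a neural network is a kind of computational model (here, a computational model means a way of describing a system using mathematical language and concepts). Moreover, this kind of model can learn and be trained automatically, without having to be explicitly programmed.

The most primitive and most basic neural network model is the perceptron. For a detailed introduction to the perceptron, see this blog post: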

http://t.cn/R5MphRp
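The perceptron mentioned above fits in a few lines. This is a minimal pure-Python sketch (not code from the linked post) of the classic perceptron learning rule with a step activation, trained on logical AND; the learning rate of 1 and the limit of 20 epochs are arbitrary choices for the illustration.

```python
def predict(weights, bias, x):
    """Step activation: 1 if the weighted sum plus the bias is positive, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, labels, learning_rate=1, epochs=20):
    """Perceptron learning rule: shift the weights toward each misclassified sample."""
    weights = [0] * len(samples[0])
    bias = 0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
            bias += learning_rate * error
    return weights, bias


# Logical AND is linearly separable, so a single perceptron can learn it.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]
weights, bias = train_perceptron(samples, labels)
print([predict(weights, bias, x) for x in samples])  # [0, 0, 0, 1]
```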

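Because neural network models were proposed as an imitation of how the human brain's nervous system is organized, their structure resembles that of the brain's neural networks.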

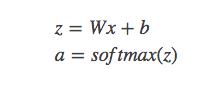

Here x is the value of a neuron, W is the weight, b is the bias, softmax() is the activation function, and a is the output value.

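In effect, the activation function determines the final output of each node and, at the same time, introduces nonlinearity into the model. To use a desk lamp as an analogy, the activation function acts like the switch. In practice there are many different activation functions to choose from, depending on the application; here the hidden layers use the ReLU function.

The figure also shows a second hidden layer. It works in essentially the same way as the first; the only difference is that its input is the output of the first layer, whereas the first layer's input is the raw data.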
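The computation each hidden layer performs, and the way the second layer consumes the first layer's output, can be sketched in plain Python. The weights and biases below are made-up numbers chosen only so the arithmetic is easy to follow; a real network learns them during training.

```python
def relu(values):
    """ReLU activation: negative values become 0, positive values pass through unchanged."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """Fully connected layer: inputs (length n) times weights (n x m) plus biases (length m)."""
    return [
        sum(x * weights[i][j] for i, x in enumerate(inputs)) + biases[j]
        for j in range(len(biases))
    ]


x = [1.0, 0.0, 2.0]  # raw input, e.g. word counts over a 3-word vocabulary
W1 = [[0.5, -1.0], [0.25, 0.75], [-0.25, 0.5]]  # 3 inputs -> 2 units in hidden layer 1
b1 = [0.5, -0.5]
W2 = [[2.0], [-1.0]]  # 2 units -> 1 unit in hidden layer 2
b2 = [0.25]

layer_1 = relu(dense(x, W1, b1))        # first hidden layer: its input is the raw data
layer_2 = relu(dense(layer_1, W2, b2))  # second hidden layer: its input is layer_1's output
print(layer_1, layer_2)  # [0.5, 0.0] [1.25]
```

The second unit of layer_1 has a pre-activation value of -0.5, so ReLU switches it off, which is the "switch" behaviour described above.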

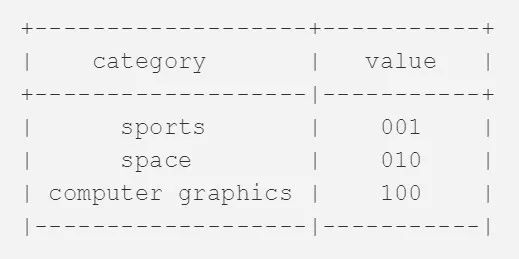

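Finally, there is the output layer. In this example the results are classified using one-hot encoding, an encoding in which exactly one element of each vector is 1 and all the others are 0. For example, if we want to classify text into three categories (sports, space, and computer graphics), the encoding is: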

![Encoding of the three categories](https://image.cha138.com/20210424/a2789350446a40bdbe638b213faa0b16.jpg)

As you can see, these three decimal values add up to exactly 1.
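Values that all lie between 0 and 1 and add up to exactly 1 are what the softmax function, named above as the activation function, produces. A minimal pure-Python sketch, with made-up input scores:

```python
import math


def softmax(logits):
    """Map raw scores to probabilities that are positive and sum to 1."""
    m = max(logits)  # subtracting the max avoids overflow and does not change the result
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])  # made-up scores for three classes
print([round(p, 3) for p in probs])  # [0.659, 0.242, 0.099]
print(round(sum(probs), 10))  # 1.0
```

The largest score gets the largest probability, so the predicted class is the index of the maximum; during training these probabilities are compared with the one-hot target vector.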

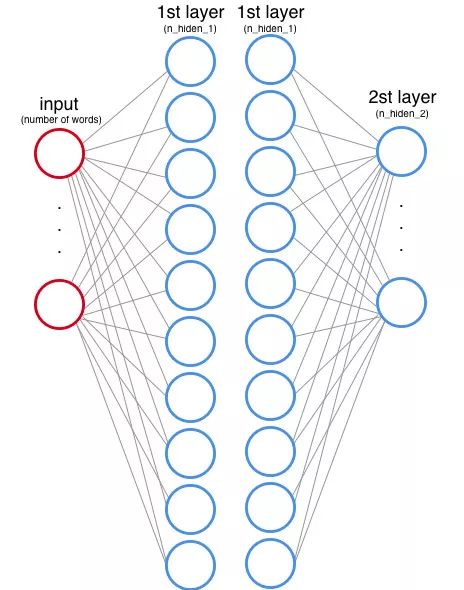

We have now laid out the dataflow graph of this neural network. Here is the actual code:

```python
import tensorflow as tf

# Network Parameters
n_hidden_1 = 10  # 1st layer number of features
n_hidden_2 = 5  # 2nd layer number of features
n_input = total_words  # Words in vocab (total_words comes from the data-processing step)
n_classes = 3  # Categories: graphics, space and baseball


def multilayer_perceptron(input_tensor, weights, biases):
    # Hidden layer with RELU activation
    layer_1_multiplication = tf.matmul(input_tensor, weights['h1'])
    layer_1_addition = tf.add(layer_1_multiplication, biases['b1'])
    layer_1_activation = tf.nn.relu(layer_1_addition)

    # Hidden layer with RELU activation
    layer_2_multiplication = tf.matmul(layer_1_activation, weights['h2'])
    layer_2_addition = tf.add(layer_2_multiplication, biases['b2'])
    layer_2_activation = tf.nn.relu(layer_2_addition)

    # Output layer with linear activation (raw logits; softmax is applied inside the loss)
    out_layer_multiplication = tf.matmul(layer_2_activation, weights['out'])
    out_layer_addition = out_layer_multiplication + biases['out']

    return out_layer_addition
```

Training the Neural Network

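As mentioned earlier, a very important part of training a model is adjusting the node weights. In this section we show how to do this in TensorFlow.

In TensorFlow, node weights and biases are stored as variables, that is, tf.Variable objects. Variables keep their values across calls to run() on the dataflow graph, which is what allows training to update them step by step. In typical machine learning settings, the initial values of the weights and biases are drawn from a normal distribution. The code is as follows: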

```python
import tensorflow as tf

# Each weight matrix maps one layer to the next; tf.random_normal samples N(0, 1) by default
weights = {
    'h1': tf.Variable(tf.random_normal([n_input, n_hidden_1])),
    'h2': tf.Variable(tf.random_normal([n_hidden_1, n_hidden_2])),
    'out': tf.Variable(tf.random_normal([n_hidden_2, n_classes]))
}
biases = {
    'b1': tf.Variable(tf.random_normal([n_hidden_1])),
    'b2': tf.Variable(tf.random_normal([n_hidden_2])),
    'out': tf.Variable(tf.random_normal([n_classes]))
}
```
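To see what the shapes in these dictionaries mean, here is a pure-Python sketch that builds the same structure from standard normal samples (mean 0, standard deviation 1, which are also tf.random_normal's defaults) and counts the trainable parameters. The vocabulary size of 1000 is a hypothetical value; in the article n_input is total_words, which depends on the data.

```python
import math
import random


def init_params(n_input, n_hidden_1=10, n_hidden_2=5, n_classes=3, seed=0):
    """Weight matrices and bias vectors filled with N(0, 1) samples, keyed like the dicts above."""
    rng = random.Random(seed)

    def normal(*shape):
        if len(shape) == 1:
            return [rng.gauss(0.0, 1.0) for _ in range(shape[0])]
        return [normal(*shape[1:]) for _ in range(shape[0])]

    weights = {
        'h1': normal(n_input, n_hidden_1),
        'h2': normal(n_hidden_1, n_hidden_2),
        'out': normal(n_hidden_2, n_classes),
    }
    biases = {'b1': normal(n_hidden_1), 'b2': normal(n_hidden_2), 'out': normal(n_classes)}
    return weights, biases


def shape_of(nested):
    """Shape of a nested list, e.g. a list of 2 lists of 3 numbers -> (2, 3)."""
    shape = []
    while isinstance(nested, list):
        shape.append(len(nested))
        nested = nested[0]
    return tuple(shape)


weights, biases = init_params(n_input=1000)  # 1000: hypothetical vocabulary size
for name, w in weights.items():
    print(name, shape_of(w))  # h1 (1000, 10), then h2 (10, 5), then out (5, 3)
n_params = sum(math.prod(shape_of(p)) for p in list(weights.values()) + list(biases.values()))
print(n_params)  # 10083
```

With these layer sizes almost all parameters sit in h1 (1000 x 10 = 10000), so the vocabulary size dominates the model's size.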

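Running the neural network with these initial values produces an actual output z, while the desired output is expected. What we need to do is compute the error between the two and minimize it by adjusting parameters such as the weights. There are many ways to compute the error; because we are dealing with a classification problem, we use the cross-entropy error.

In TensorFlow, we can compute the cross-entropy error by calling tf.nn.softmax_cross_entropy_with_logits(). Because we chose Softmax as the activation function, the loss function carries the softmax_ prefix: it applies softmax to the raw outputs (logits) itself before computing the cross-entropy. The code is below (we also call tf.reduce_mean() to compute the average error):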

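```python
import tensorflow as tf

# Construct model
# input_tensor and output_tensor are the placeholders defined later in this article
prediction = multilayer_perceptron(input_tensor, weights, biases)

# Define loss: softmax cross-entropy per example, then the mean over the batch
entropy_loss = tf.nn.softmax_cross_entropy_with_logits(logits=prediction, labels=output_tensor)
loss = tf.reduce_mean(entropy_loss)
```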
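The computation behind tf.nn.softmax_cross_entropy_with_logits() followed by tf.reduce_mean() can be written out by hand. This pure-Python sketch is for illustration only (it is not TensorFlow's implementation), and the logits are made-up numbers: a prediction that favours the right class gives a small loss, one that favours a wrong class gives a large loss.

```python
import math


def softmax_cross_entropy(logits, labels):
    """Cross-entropy between a label distribution and softmax(logits) for one example."""
    m = max(logits)  # shift by the max so exp() cannot overflow
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    # log(softmax(z)[k]) = z[k] - log(sum_j exp(z[j]))
    return -sum(y * (z - log_sum_exp) for y, z in zip(labels, logits))


def mean_loss(batch_logits, batch_labels):
    """Average the per-example losses over the batch (the role of tf.reduce_mean here)."""
    losses = [softmax_cross_entropy(z, y) for z, y in zip(batch_logits, batch_labels)]
    return sum(losses) / len(losses)


batch_logits = [[2.0, 1.0, 0.1], [2.0, 1.0, 0.1]]
batch_labels = [[1, 0, 0], [0, 0, 1]]  # first label matches the highest score, second does not
print(round(softmax_cross_entropy(batch_logits[0], batch_labels[0]), 3))  # 0.417
print(round(softmax_cross_entropy(batch_logits[1], batch_labels[1]), 3))  # 2.317
print(round(mean_loss(batch_logits, batch_labels), 3))  # 1.367
```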

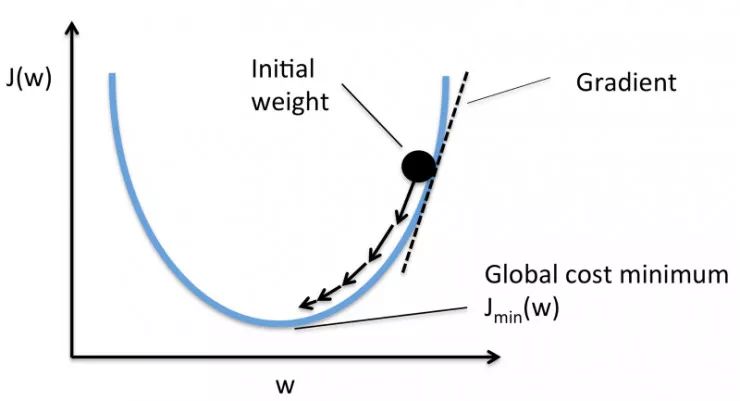

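With the error computed, the next task is to minimize it. Here we use the most common method, stochastic gradient descent; its basic idea is illustrated in the accompanying figure.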
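The update rule behind gradient descent fits in a few lines. This pure-Python sketch minimizes a made-up one-parameter loss, L(w) = (w - 3)², using its exact gradient; stochastic gradient descent applies the same update but estimates the gradient from one mini-batch of training data at a time. The learning rate and step count are arbitrary.

```python
def gradient_descent(grad, w0, learning_rate=0.1, steps=100):
    """Repeat the update w <- w - learning_rate * grad(w), starting from w0."""
    w = w0
    for _ in range(steps):
        w = w - learning_rate * grad(w)
    return w


# Made-up loss L(w) = (w - 3)**2, whose gradient is dL/dw = 2 * (w - 3); the minimum is at w = 3.
w_final = gradient_descent(lambda w: 2 * (w - 3), w0=0.0)
print(round(w_final, 4))  # 3.0
```

Each step here shrinks the distance to the minimum by a factor of 0.8; a learning rate that is too large (above 1.0 for this loss) would make the updates overshoot and diverge instead.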

Data Processing

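In this example, the raw data consists of many English text snippets. To feed this data into the model, we need to preprocess it. This involves two parts:

● encoding each word (giving it an index);

● creating a tensor representation of each text snippet, in which 1 means a given word appears and 0 means it does not (the code below actually counts occurrences, so a word that appears twice gets a 2).

The implementation is as follows: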

```python
import numpy as np  # numpy is a package for scientific computing
from collections import Counter

vocab = Counter()

text = "Hi from Brazil"

# Get all words (lowercased, so "Hi" and "hi" map to the same entry)
for word in text.split():
    vocab[word.lower()] += 1


# Convert words to indexes
def get_word_2_index(vocab):
    word2index = {}
    for i, word in enumerate(vocab):
        word2index[word] = i
    return word2index


# Now we have an index
word2index = get_word_2_index(vocab)

total_words = len(vocab)

# This is how we create a numpy array (our matrix)
matrix = np.zeros(total_words, dtype=float)

# Now we fill the values
for word in text.split():
    matrix[word2index[word.lower()]] += 1

print(matrix)
# [1. 1. 1.]
```

From the code above, when the input text is "Hi from Brazil", the output matrix is [1. 1. 1.]. What happens when the input text is just "Hi"? The code and result are as follows:

```python
matrix = np.zeros(total_words, dtype=float)

text = "Hi"
for word in text.split():
    matrix[word2index[word.lower()]] += 1

print(matrix)
# [1. 0. 0.]
```

As you can see, the output this time is [1. 0. 0.].

Similarly, we can encode the category information, except that here we use one-hot encoding:

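```python
import numpy as np

category = 1  # integer label of one text (example value), e.g. an entry of newsgroups_train.target

y = np.zeros(3, dtype=float)
if category == 0:
    y[0] = 1.  # [1. 0. 0.]
elif category == 1:
    y[1] = 1.  # [0. 1. 0.]
else:
    y[2] = 1.  # [0. 0. 1.]
```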
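The training code later calls get_batch(), which this article does not show. As an illustration only, here is a hypothetical pure-Python version that combines the two encodings above: each text becomes a word-count vector and each integer category becomes a one-hot vector. Its signature differs from the article's get_batch(newsgroups_train, i, batch_size): it takes the texts, labels and word2index mapping explicitly (with the real data these could be newsgroups_train.data, newsgroups_train.target and a vocabulary built from the training texts). It also skips words missing from the vocabulary, which matters because the test set can contain words never seen in training.

```python
def text_to_vector(text, word2index):
    """Word-count vector over the vocabulary; words missing from word2index are skipped."""
    vector = [0.0] * len(word2index)
    for word in text.split():
        index = word2index.get(word.lower())
        if index is not None:
            vector[index] += 1
    return vector


def label_to_one_hot(category, n_classes=3):
    """One-hot vector: 1.0 at position `category`, 0.0 everywhere else."""
    one_hot = [0.0] * n_classes
    one_hot[category] = 1.0
    return one_hot


def get_batch(texts, categories, i, batch_size, word2index, n_classes=3):
    """Hypothetical helper: the i-th batch of (input vectors, one-hot label vectors)."""
    start, end = i * batch_size, (i + 1) * batch_size
    batch_x = [text_to_vector(t, word2index) for t in texts[start:end]]
    batch_y = [label_to_one_hot(c, n_classes) for c in categories[start:end]]
    return batch_x, batch_y


word2index = {"hi": 0, "from": 1, "brazil": 2}  # toy vocabulary from the example above
texts = ["Hi from Brazil", "Hi", "Brazil Brazil"]
categories = [0, 2, 1]
batch_x, batch_y = get_batch(texts, categories, 0, 2, word2index)
print(batch_x)  # [[1.0, 1.0, 1.0], [1.0, 0.0, 0.0]]
print(batch_y)  # [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
```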

Running the Model and Making Predictions

We now have a basic understanding of TensorFlow, the neural network model, model training, and data preprocessing. Next we show how to apply all of this to real data.

http://t.cn/zY6ssrE

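First, to load the datasets we use the scikit-learn library, another open-source Python library, mainly used for machine-learning-related data processing tasks. In this example we use only three of the dataset's categories: comp.graphics, sci.space and rec.sport.baseball.

The data is split into two subsets: a training set and a test set. A word of advice: it is best not to look at the test set in advance, because doing so would influence our choice of model parameters and thus hurt the model's ability to generalize to other, unseen data.

The code to load the data is as follows: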

```python
from sklearn.datasets import fetch_20newsgroups

categories = ["comp.graphics", "sci.space", "rec.sport.baseball"]

# Downloads the 20 Newsgroups data on first use and keeps only the three categories above
newsgroups_train = fetch_20newsgroups(subset='train', categories=categories)
newsgroups_test = fetch_20newsgroups(subset='test', categories=categories)
```

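In neural network terminology, one epoch is one complete cycle of a forward pass plus a backward pass over all the training data. The forward pass computes the actual outputs from the current weights; the backward pass uses the error to adjust the weights. Let's take a closer look at tf.Session.run(). Its full signature is:

```python
tf.Session.run(fetches, feed_dict=None, options=None, run_metadata=None)
```

When we introduced this function at the start of the article, we passed only the addition operation through fetches, but run() can also take several operations at once. In the training step on real data we pass two: one computes the loss (the cross-entropy error defined above), and the other is the optimizer (adaptive moment estimation, i.e. Adam), which updates the weights.

Another important parameter of run() is feed_dict, through which we pass in the input data the model processes at each step. To feed data this way, we must first define tf.placeholder objects.

According to the official documentation, a placeholder is just an empty shell that stands for data to be fed into the model later: it needs no initialization and holds no real data. The placeholders for this example are defined as follows: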

```python
import tensorflow as tf

n_input = total_words  # Words in vocab
n_classes = 3  # Categories: graphics, sci.space and baseball

# None leaves the batch dimension open, so batches of any size can be fed later
input_tensor = tf.placeholder(tf.float32, [None, n_input], name="input")
output_tensor = tf.placeholder(tf.float32, [None, n_classes], name="output")
```

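Before actually training the model, we also need to split the data into batches, where a batch is the amount of data processed in one computation step.

This is where defining tf.placeholder pays off: the None in the placeholder's shape makes the batch dimension variable, so the actual batch size can be decided when the data is fed in. Here we feed larger batches during training and may use a different size during testing, so a variable batch size is needed. During training, we fetch the actual text data for each step with a get_batch() function (its implementation is not shown in this article). The training code is as follows: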

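```python
import tensorflow as tf
# Assumed defined earlier: loss, input_tensor, output_tensor, newsgroups_train,
# batch_size and get_batch() (batch_size and get_batch() are not shown in the article).
# Adam optimizer as described above; the learning rate is left at its default here.
optimizer = tf.train.AdamOptimizer().minimize(loss)
init = tf.global_variables_initializer()
training_epochs = 10

# Launch the graph
with tf.Session() as sess:
    sess.run(init)  # inits the variables (normal distribution, remember?)
    # Training cycle
    for epoch in range(training_epochs):
        avg_cost = 0.
        total_batch = int(len(newsgroups_train.data) / batch_size)
        # Loop over all batches
        for i in range(total_batch):
            batch_x, batch_y = get_batch(newsgroups_train, i, batch_size)
            # Run optimization op (backprop) and cost op (to get loss value)
            c, _ = sess.run([loss, optimizer],
                            feed_dict={input_tensor: batch_x, output_tensor: batch_y})
            avg_cost += c / total_batch  # average loss over the epoch
        print("Epoch:", '%04d' % (epoch + 1), "loss=", "{:.9f}".format(avg_cost))
    print("Optimization Finished!")
    # The test code below must run inside this same session
```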
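The loop above computes total_batch = int(len(newsgroups_train.data) / batch_size), which rounds down. So when the number of training texts is not a multiple of batch_size, the last few texts are never used; if get_batch() slices the data in order, as its arguments suggest, they are the same texts in every epoch. A small pure-Python sketch of the batch boundaries this produces (10 samples and a batch size of 4 are arbitrary example values):

```python
def batch_ranges(n_samples, batch_size):
    """(start, end) index pairs of the full batches used in one epoch."""
    total_batch = int(n_samples / batch_size)  # rounds down, as in the training loop
    return [(i * batch_size, (i + 1) * batch_size) for i in range(total_batch)]


print(batch_ranges(10, 4))  # [(0, 4), (4, 8)]: samples 8 and 9 are never used
```

Shuffling the data each epoch, or adding a final smaller batch, avoids always dropping the same samples; thanks to the None batch dimension, the placeholders accept a batch of any size.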

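We have now trained the model on real data; next comes evaluating it on the test data. As in the training step, we define graph elements of both kinds, operations and data. To compute the model's accuracy, and because the labels are one-hot encoded, we take the index of the correct output and the index of the predicted output, check whether they are equal, and average these comparisons over the whole test set. The code and results are as follows: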

```python
    # (Continues inside the `with tf.Session() as sess:` block above:
    # accuracy.eval() needs the trained session as the default session.)

    # Test model
    index_prediction = tf.argmax(prediction, 1)
    index_correct = tf.argmax(output_tensor, 1)
    correct_prediction = tf.equal(index_prediction, index_correct)

    # Calculate accuracy
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))
    total_test_data = len(newsgroups_test.target)
    batch_x_test, batch_y_test = get_batch(newsgroups_test, 0, total_test_data)
    print("Accuracy:", accuracy.eval({input_tensor: batch_x_test, output_tensor: batch_y_test}))
```

```
Epoch: 0001 loss= 1133.908114347
Epoch: 0002 loss= 329.093700409
Epoch: 0003 loss= 111.876660109
Epoch: 0004 loss= 72.552971845
Epoch: 0005 loss= 16.673050320
Epoch: 0006 loss= 16.481995190
Epoch: 0007 loss= 4.848220565
Epoch: 0008 loss= 0.759822878
Epoch: 0009 loss= 0.000000000
Epoch: 0010 loss= 0.079848485
Optimization Finished!
Accuracy: 0.75
```
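The accuracy computed above is simply the fraction of test texts whose predicted class index (the argmax of the network's output) equals the true class index (the position of the 1 in the one-hot label). A pure-Python sketch with made-up outputs:

```python
def argmax(values):
    """Index of the largest value (the first one if there is a tie)."""
    return max(range(len(values)), key=lambda k: values[k])


def accuracy(outputs, one_hot_labels):
    """Fraction of examples whose predicted index equals the index of the 1 in the label."""
    correct = [argmax(out) == argmax(label) for out, label in zip(outputs, one_hot_labels)]
    return sum(correct) / len(correct)


outputs = [[2.0, 0.1, -1.0], [0.3, 0.2, 0.9], [0.0, 1.5, 1.2], [0.4, 0.1, 0.2], [0.1, 0.2, 0.3]]
labels = [[1, 0, 0], [0, 0, 1], [0, 0, 1], [1, 0, 0], [0, 1, 0]]
print(accuracy(outputs, labels))  # 0.6 (3 of 5 correct)
```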

In the end, our model reaches a prediction accuracy of 75%, which is quite good for a beginner. With that, we have implemented a text classification task with a neural network model in TensorFlow.
