Keepalived high availability for HAProxy with URL-based static/dynamic content separation

Posted by 马哥Linux运维


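Key requirements:

(1) Separate static and dynamic content for Discuz! X, with both the static and the dynamic tier load-balanced;

(2) as a further test, add a varnish cache between haproxy and the backend hosts;

(3) provide a topology design;

(4) haproxy requirements:

(a) enable stats;

(b) custom error pages for 403, 502 and 503;

(c) choose a suitable scheduling algorithm for each backend group;

(d) keep proper logs;

(e) make haproxy highly available with keepalived.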

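Topology

Two keepalived nodes in a dual-master model make the two haproxy hosts highly available; the two VIPs are 10.1.253.11 and 10.1.253.12.

The haproxy hosts accept requests, separate requests for image resources from dynamic ones, and dispatch them to the single varnish cache host and the two httpd hosts respectively.

The varnish cache host caches the user-uploaded static images served by the backend nginx servers and load-balances across the two nginx hosts.

The nginx hosts serve the image resources and export NFS to the websrv hosts, where the share is mounted as the Discuz! X attachment directory.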

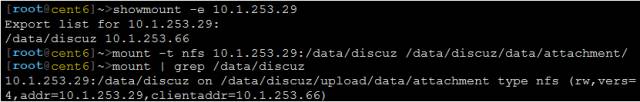

Configuring NFS on the nginx server

Install NFS

```
yum install nfs-utils
```

Configure the NFS export

/etc/exports

```
/data/discuz 10.1.253.66(rw,no_root_squash) 10.1.253.67(rw,no_root_squash)
```
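Each /etc/exports entry is the exported path followed by one or more `client(options)` pairs, with no space between a client and its parenthesized options. As a rough illustration, the hypothetical helper `parse_exports_line` below splits the line above into that structure; it only handles this simple form (no comments, quoted paths, continuations or option-less clients) and is not how nfs-utils itself parses the file.

```python
import re

# Matches "client(opt,opt,...)" with no space before the parenthesis.
CLIENT_RE = re.compile(r"(\S+?)\(([^)]*)\)")


def parse_exports_line(line: str) -> tuple[str, dict[str, list[str]]]:
    """Split one simple /etc/exports line into (path, {client: [options]})."""
    path, _, rest = line.strip().partition(" ")
    clients = {host: opts.split(",") for host, opts in CLIENT_RE.findall(rest)}
    return path, clients


if __name__ == "__main__":
    path, clients = parse_exports_line(
        "/data/discuz 10.1.253.66(rw,no_root_squash) 10.1.253.67(rw,no_root_squash)"
    )
    print(path)
    for host, opts in clients.items():
        print(host, opts)
```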

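Create the apache user and grant it access

```
# UID/GID 48 match the apache account created by the httpd package on the web hosts;
# -s needs a shell argument, so give it one explicitly
groupadd -g 48 apache
useradd -r -u 48 -g 48 -s /sbin/nologin apache
setfacl -m u:apache:rwx /data/discuz
```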

Start NFS

```
systemctl start nfs.service
```

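Web server (websrvs) configuration

Install the AMP stack and Discuz! X

Key steps:

```
yum install httpd mysql php php-mysql php-xcache
# The host part contains wildcards, so the account name must be quoted
mysql -uroot -p -e 'CREATE DATABASE ultrax; GRANT ALL ON ultrax.* TO "ultraxuser"@"10.1.%.%" IDENTIFIED BY "ultraxpass"; FLUSH PRIVILEGES;'
```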
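The host part of the account, `10.1.%.%`, is a MySQL wildcard pattern (`%` matches any run of characters, `_` exactly one), which is why it has to be quoted. The hypothetical helper below is a simplified model of that matching, useful for checking which web-server addresses the grant covers; it ignores hostname resolution, netmask notation and the ordering MySQL applies when several accounts match.

```python
import re


def mysql_host_matches(pattern: str, client_ip: str) -> bool:
    """Simplified model of MySQL account host matching: '%' matches any run of
    characters, '_' exactly one character, everything else literally."""
    regex = "".join(
        ".*" if ch == "%" else "." if ch == "_" else re.escape(ch) for ch in pattern
    )
    return re.fullmatch(regex, client_ip) is not None


if __name__ == "__main__":
    for ip in ("10.1.253.66", "10.1.253.67", "10.2.0.5"):
        print(ip, mysql_host_matches("10.1.%.%", ip))
```

Both web servers (10.1.253.66 and 10.1.253.67) match the pattern, while an address such as 10.10.1.1 does not, because the literal dot after `10.1` must appear right there.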

Mount the NFS export on the path where user-uploaded attachments are stored

Start MySQL and httpd, then test access

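nginx host configuration

nginx serves the user-uploaded static images; the virtual host's root only needs to point at the NFS-exported directory.

To map the resource path in a URL to the matching file under the virtual host's root, the request URL has to be rewritten or redirected. The rewrite can be done on the front-end haproxy host, on varnish or on nginx; all that matters is that the new URL maps to the resource's path on the nginx host. There is no need to rewrite the same URL on haproxy, varnish and nginx alike, and given the number of backend hosts, rewriting once in haproxy or varnish seems the better choice.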

Install nginx

Configure the virtual host

```
server {
    listen 82;
    server_name localhost;

    location / {
        root /data/discuz;
        index index.html index.htm;
    }

    # Images: strip everything up to and including the last "forum/" before looking under root
    location ~* \.(jpg|jpeg|gif|png)$ {
        root /data/discuz;
        rewrite ^/.*forum/(.*)$ /$1 break;
    }
}
```
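To see where a request ends up on disk, here is a rough Python model of the two location blocks above, assuming standard nginx behavior: the regex location is matched case-insensitively against the URI without its query string, `rewrite ... break` replaces the URI, and `root` is prepended to the result. `nginx_file_for` is a hypothetical helper for illustration, not part of nginx.

```python
import re

ROOT = "/data/discuz"  # the root used by both location blocks
IMAGE_LOCATION = re.compile(r"\.(jpg|jpeg|gif|png)$", re.IGNORECASE)  # location ~*
FORUM_REWRITE = re.compile(r"^/.*forum/(.*)$")  # rewrite ^/.*forum/(.*)$ /$1 break;


def nginx_file_for(uri: str) -> str:
    """Return the file nginx would look for under ROOT (simplified model)."""
    path = uri.split("?", 1)[0]  # location matching ignores the query string
    if IMAGE_LOCATION.search(path):
        path = FORUM_REWRITE.sub(r"/\1", path)  # unchanged if "forum/" is absent
    return ROOT + path  # root is simply prepended to the (rewritten) URI


if __name__ == "__main__":
    print(nginx_file_for("/data/attachment/forum/201611/12/174905kkys2e2wgmv25ywe.jpg"))
```

For /data/attachment/forum/201611/12/174905kkys2e2wgmv25ywe.jpg it returns /data/discuz/201611/12/174905kkys2e2wgmv25ywe.jpg, i.e. the files must sit directly under the exported directory for this rewrite to find them.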

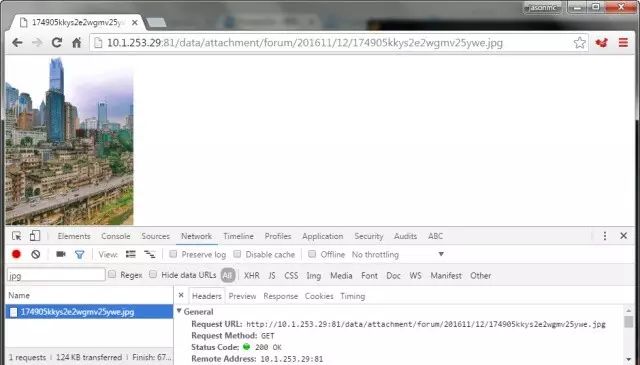

Start nginx and test access

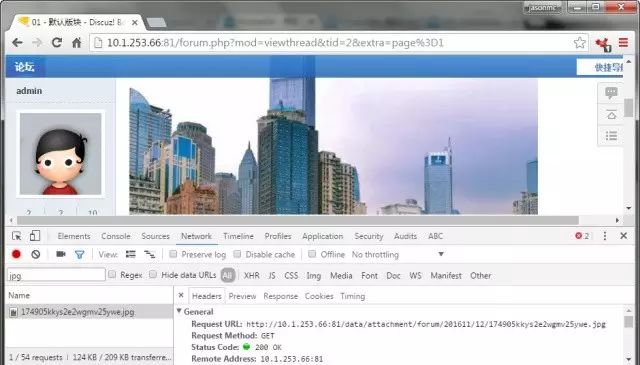

Original URL path of a resource

Replace the host part of that URL with the nginx host and request it directly

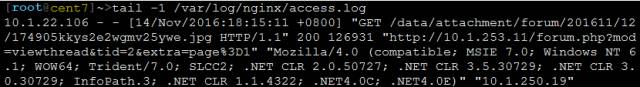

nginx access log output

```
10.1.250.19 - - [13/Nov/2016:9:01:53 +0800] "GET /data/attachment/forum/201611/12/174905kkys2e2wgmv25ywe.jpg HTTP/1.1" 200 126931 "-" "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.82 Safari/537.36" "-"
```
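For requirement (d), it helps to know what each field means. These lines appear to follow the `main` log_format from nginx's sample nginx.conf (`$remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent" "$http_x_forwarded_for"`). The minimal parser below assumes exactly that format; the last field is where the original client address shows up when a request arrives through a proxy that sets X-Forwarded-For.

```python
import re

# Assumed format: nginx's sample "main" log_format.
LOG_RE = re.compile(
    r'(?P<remote_addr>\S+) - (?P<remote_user>\S+) \[(?P<time_local>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<body_bytes_sent>\d+) '
    r'"(?P<http_referer>[^"]*)" "(?P<http_user_agent>[^"]*)" '
    r'"(?P<http_x_forwarded_for>[^"]*)"'
)


def parse_line(line: str) -> dict[str, str]:
    """Return the named fields of one access-log line."""
    m = LOG_RE.match(line)
    if m is None:
        raise ValueError("line does not match the assumed 'main' log format")
    return m.groupdict()
```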

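Varnish cache server

The varnish server caches responses, load-balances across the nginx servers and checks the health of the nginx service.

Install varnish

The EPEL yum repository must be configured before installing.

```
yum install varnish
```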

Configure the cache service

Configure varnish runtime parameters

/etc/varnish/varnish.params

```
VARNISH_LISTEN_PORT=80
…
VARNISH_STORAGE="malloc,128M"
```

Configure the varnish caching service (VCL)

As mentioned earlier, URL rewriting can be done on the varnish server; with many backend nginx hosts, rewriting in varnish is more convenient.

In varnish, the URL is rewritten with the regsub function.

To avoid confusion with the rewrite on the nginx side, comment out the rewrite in the nginx virtual host configuration.

/etc/varnish/default.vcl

```
vcl 4.0;
import directors;
# …

backend nginx1 {
    .host = "10.1.253.29";
    .port = "81";
    .probe = ok;
}

backend nginx2 {
    .host = "10.1.253.29";
    .port = "82";
    # …
}

sub vcl_init {
    new RR = directors.round_robin();
    RR.add_backend(nginx1);
    RR.add_backend(nginx2);
}

sub vcl_recv {
    set req.backend_hint = RR.backend();
    # Image requests: drop everything up to and including the last "attachment/"
    if (req.url ~ "(?i)\.(jpg|jpeg|gif|png)$") {
        set req.url = regsub(req.url, "/.*attachment/(.*)", "/\1");
    }
    # …
}

# …

sub vcl_backend_response {
    # Happens after we have read the response headers from the backend.
    #
    # Here you clean the response headers, removing silly Set-Cookie headers
    # and other mistakes your backend does.
}

sub vcl_deliver {
    # …
}
```
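The `vcl_init` block above builds a round-robin director over nginx1 and nginx2. As a conceptual sketch only (not Varnish's implementation), the Python model below shows the intended behavior: requests alternate between backends, and a backend whose health probe has marked it sick is skipped. The `healthy` flag is a stand-in for the probe result; the probe definition itself is in the elided part of the VCL.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Backend:
    name: str
    healthy: bool = True  # stands in for the result of the backend's .probe


class RoundRobinDirector:
    """Simplified model: hand out backends in turn, skipping sick ones."""

    def __init__(self) -> None:
        self._backends: list[Backend] = []
        self._next = 0

    def add_backend(self, backend: Backend) -> None:
        self._backends.append(backend)

    def backend(self) -> Optional[Backend]:
        # Try each backend at most once per call, continuing after the last pick.
        for _ in range(len(self._backends)):
            candidate = self._backends[self._next % len(self._backends)]
            self._next += 1
            if candidate.healthy:
                return candidate
        return None  # no healthy backend left


if __name__ == "__main__":
    nginx1, nginx2 = Backend("nginx1"), Backend("nginx2")
    rr = RoundRobinDirector()
    rr.add_backend(nginx1)
    rr.add_backend(nginx2)
    print([rr.backend().name for _ in range(4)])  # alternates nginx1, nginx2
    nginx2.healthy = False
    print([rr.backend().name for _ in range(3)])  # only nginx1 while nginx2 is sick
```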

Start varnish and test

```
systemctl start varnish
```

- Request the resource's URL through the varnish server

- Access log on the nginx side:

```
10.1.253.29 - - [13/Nov/2016:22:21:43 +0800] "GET /forum/201611/12/174905kkys2e2wgmv25ywe.jpg HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/52.0.2743.82 Safari/537.36" "10.1.250.19"
```

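The result: for image URLs, everything up to and including `attachment/` is stripped, so whatever path precedes the resource in the URL, the request is rewritten to the same resource under the custom path, provided the file exists there.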
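The rewrite is easy to try offline. VCL's `regsub()` replaces only the first match (`regsuball()` replaces all), which corresponds to Python's `re.sub(..., count=1)`; Varnish uses PCRE, and for these particular patterns Python's `re` behaves the same. `varnish_rewrite` is a hypothetical helper that mirrors the `vcl_recv` logic above.

```python
import re

IMAGE_RE = re.compile(r"(?i)\.(jpg|jpeg|gif|png)$")  # req.url ~ "(?i)\.(jpg|jpeg|gif|png)$"
ATTACHMENT_RE = re.compile(r"/.*attachment/(.*)")    # regsub pattern from vcl_recv


def varnish_rewrite(url: str) -> str:
    """Mirror of the vcl_recv rewrite: only image URLs are touched."""
    if IMAGE_RE.search(url):
        return ATTACHMENT_RE.sub(r"/\1", url, count=1)  # regsub = first match only
    return url


if __name__ == "__main__":
    print(varnish_rewrite("/data/attachment/forum/201611/12/174905kkys2e2wgmv25ywe.jpg"))
```

Note that `req.url` includes the query string, so with the `$` anchor an image URL carrying `?v=1` is left unchanged.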

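HAProxy host configuration

Installation

```
yum install haproxy
```

Configuration file

Path: /etc/haproxy/haproxy.cfg

The main work is defining the frontend and the backends; the frontend matches the URI with ACLs:

url_static_beg matches the leading path of the URI, and url_static_end matches its suffix (file extension).

Only requests that satisfy both conditions are sent to the static backend group; all other URLs go to the default dynamic group.

In addition, 403, 502 and 503 errors are answered with redirects to custom error pages on another host, and the haproxy stats page is enabled.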

Frontend configuration

```
frontend main *:80
    acl url_static_beg path_beg -i /data/attachment
    acl url_static_end path_end -i .jpg .gif .png .css .js

    use_backend static if url_static_beg url_static_end

    default_backend dynamic

    errorloc 503 http://10.1.253.29:82/errorpage/503sorry.html
    errorloc 403 http://10.1.253.29:82/errorpage/403sorry.html
    errorloc 502 http://10.1.253.29:82/errorpage/502sorry.html
```
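The routing decision above can be modeled in a few lines. In HAProxy, several values on one `acl` line are ORed, several ACLs on one `use_backend` line are ANDed, `-i` makes matching case-insensitive, and the `path` fetch stops before the query string. The hypothetical `choose_backend` below is a simplified model of that logic, not HAProxy code. One thing it makes visible: `.jpeg` is not in the suffix list, so such URLs go to the dynamic group even though the varnish and nginx rules accept jpeg.

```python
STATIC_PREFIXES = ("/data/attachment",)                   # url_static_beg values
STATIC_SUFFIXES = (".jpg", ".gif", ".png", ".css", ".js")  # url_static_end values


def choose_backend(uri: str) -> str:
    """Simplified model of the frontend's use_backend / default_backend rules."""
    path = uri.split("?", 1)[0].lower()  # "path" excludes the query string; -i ignores case
    url_static_beg = path.startswith(STATIC_PREFIXES)  # values on one acl line are ORed
    url_static_end = path.endswith(STATIC_SUFFIXES)
    if url_static_beg and url_static_end:  # ACLs on one use_backend line are ANDed
        return "static"
    return "dynamic"


if __name__ == "__main__":
    for uri in ("/data/attachment/forum/201611/12/174905kkys2e2wgmv25ywe.jpg", "/forum.php"):
        print(uri, choose_backend(uri))
```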

Backend configuration

```
backend dynamic
    balance roundrobin
    # …
    server web1 10.1.253.66:81 check cookie amp1
    server web2 10.1.253.66:82 check cookie amp2

backend static
    balance roundrobin
    server ngx1 10.1.253.29:81 check
    server ngx2 10.1.253.29:82 check
```
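The `cookie amp1` / `cookie amp2` options on the dynamic servers give each web server a persistence cookie value, presumably so that a Discuz! X session keeps hitting the same httpd host. The backend's `cookie` directive, which names the cookie and sets its mode, is in the elided part of the listing, so the sketch below assumes insert mode and uses a hypothetical cookie name `SERVERID`. It is a conceptual model of cookie persistence on top of round robin, not HAProxy's logic, and it ignores health checks and redispatching.

```python
import itertools

COOKIE_NAME = "SERVERID"                     # hypothetical; the real name is elided above
SERVERS = {"amp1": "web1", "amp2": "web2"}  # cookie value -> server, from the server lines


class DynamicBackend:
    """Model of 'balance roundrobin' plus cookie-based persistence."""

    def __init__(self) -> None:
        self._rr = itertools.cycle(SERVERS)  # cycles over the cookie values in order

    def route(self, cookies: dict[str, str]) -> tuple[str, dict[str, str]]:
        """Return (server name, cookies to set on the response)."""
        value = cookies.get(COOKIE_NAME)
        if value in SERVERS:
            return SERVERS[value], {}  # sticky: reuse the server named by the cookie
        value = next(self._rr)  # no or unknown cookie: plain round robin
        return SERVERS[value], {COOKIE_NAME: value}


if __name__ == "__main__":
    backend = DynamicBackend()
    server, set_cookies = backend.route({})
    print(server, set_cookies)
    print(backend.route(set_cookies))  # the follow-up request sticks to the same server
```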

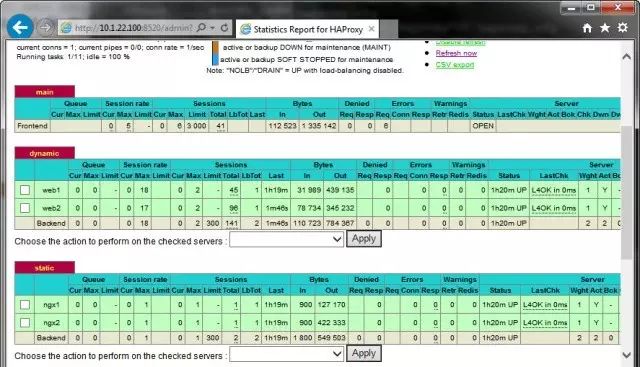

Stats page configuration

```
listen stats
    bind :
    stats enable
    stats uri /admin?stats
    # …
    stats refresh 10s
    stats admin if TRUE
    stats hide-version
```

Test results

High availability for haproxy with keepalived

Installation

```
yum install keepalived
```

Configure the keepalived hosts in dual-master mode

/etc/keepalived/keepalived.conf

```
global_defs {
    notification_email {
        root@localhost
    }
    notification_email_from keepalived@jasonmc.com
    smtp_server localhost
    smtp_connect_timeout 30
    router_id node1
    vrrp_mcast_group4 224.22.29.1
}

# Fails while /etc/keepalived/down exists; failure lowers priority by 5
vrrp_script chk_down {
    script "[[ -f /etc/keepalived/down ]] && exit 1 || exit 0"
    interval 1
    weight -5
}

# Fails when no haproxy process is running; failure lowers priority by 5
vrrp_script chk_haproxy {
    script "killall -0 haproxy && exit 0 || exit 1"
    interval 1
    weight -5
}

vrrp_instance VI_1 {
    state MASTER
    interface eno16777736
    virtual_router_id 10
    priority 96
    advert_int 10
    authentication {
        auth_type PASS
        auth_pass 1a7b2ce6
    }
    virtual_ipaddress {
        10.1.253.11 dev eno16777736
    }
    track_script {
        chk_down
        chk_haproxy
    }
}

vrrp_instance VI_2 {
    state BACKUP
    interface eno16777736
    virtual_router_id 11
    priority 100
    advert_int 11
    authentication {
        # …
    }
```
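The two `vrrp_script` blocks decide failover through their weights: with a negative weight, keepalived subtracts |weight| from the instance priority while the script fails, and the node with the highest effective priority becomes MASTER (on a tie, VRRP prefers the higher primary IP address). The sketch below models that arithmetic. haproxy2's VI_1 configuration is not shown in the article, so its priority of 95 and both node IPs in the usage example are hypothetical.

```python
import ipaddress

WEIGHTS = {"chk_down": -5, "chk_haproxy": -5}  # weights of the vrrp_script blocks above


def effective_priority(base: int, script_ok: dict[str, bool]) -> int:
    """Apply track_script weights: a negative weight is subtracted while the
    script fails, a positive weight is added while it succeeds."""
    prio = base
    for name, weight in WEIGHTS.items():
        ok = script_ok[name]
        if (weight < 0 and not ok) or (weight > 0 and ok):
            prio += weight
    return prio


def elect_master(nodes: dict[str, tuple[int, str]]) -> str:
    """Highest effective priority wins; ties go to the higher primary IP.
    nodes maps name -> (effective priority, primary IP)."""
    return max(nodes, key=lambda n: (nodes[n][0], ipaddress.ip_address(nodes[n][1])))


if __name__ == "__main__":
    # Hypothetical: haproxy2 runs VI_1 as BACKUP with priority 95; IPs are made up.
    healthy = {"chk_down": True, "chk_haproxy": True}
    down_file = {"chk_down": False, "chk_haproxy": True}
    for label, state in (("normal", healthy), ("down file present", down_file)):
        p1 = effective_priority(96, state)
        winner = elect_master({"haproxy1": (p1, "10.1.253.21"), "haproxy2": (95, "10.1.253.22")})
        print(label, p1, winner)
```

The design point this illustrates: a failing check only moves the VIP if |weight| is large enough to push the master's priority below the backup's, so the weights and the priority gap between the two nodes have to be chosen together.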

Start keepalived and test

```
systemctl start keepalived
```

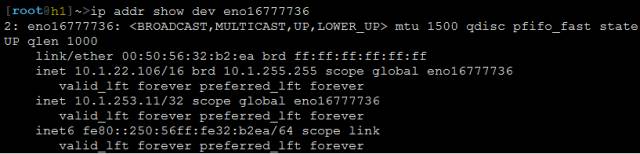

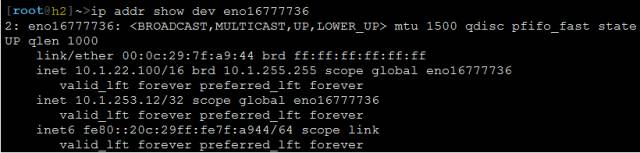

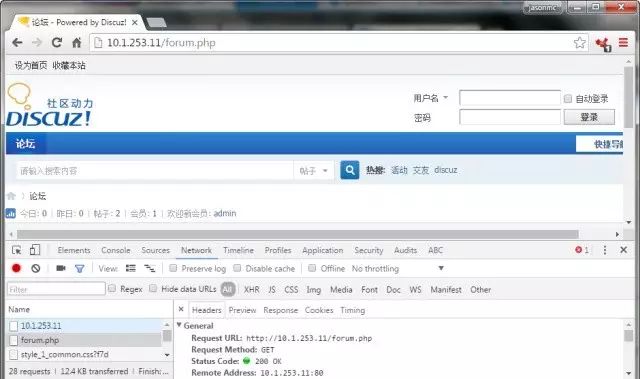

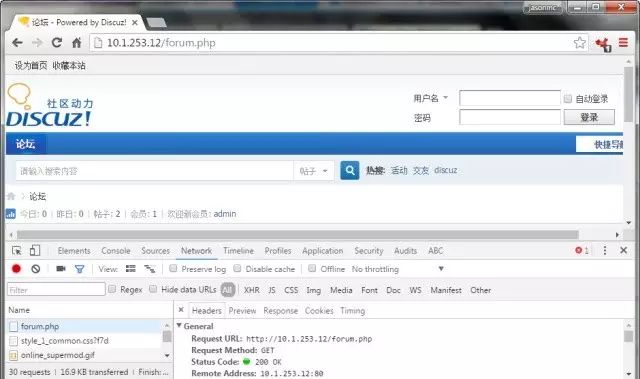

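With both haproxy1 and haproxy2 online:

- haproxy1 holds VIP1 10.1.253.11

- haproxy2 holds VIP2 10.1.253.12

Taking haproxy1 offline

On haproxy1 (the VI_1 master), create a file named down in /etc/keepalived/. keepalived's track_script detects the file through chk_down and lowers the node's priority, taking it offline for VI_1.

- keepalived log on haproxy1: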

```
Nov 14 13:18:55 h1 Keepalived_vrrp[54901]: VRRP_Script(chk_down) failed
Nov 14 13:19:01 h1 Keepalived_vrrp[54901]: VRRP_Instance(VI_1) Received higher prio advert
Nov 14 13:19:01 h1 Keepalived_vrrp[54901]: VRRP_Instance(VI_1) Entering BACKUP STATE
Nov 14 13:19:01 h1 Keepalived_vrrp[54901]: VRRP_Instance(VI_1) removing protocol VIPs.
Nov 14 13:19:01 h1 Keepalived_healthcheckers[54900]: Netlink reflector reports IP 10.1.253.11 removed
```

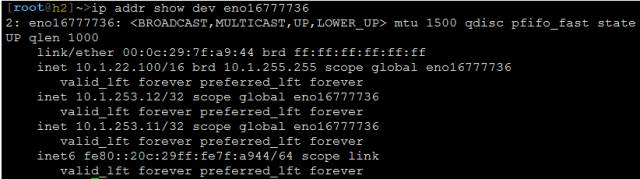

Because haproxy1 now advertises a lower VI_1 priority than haproxy2, haproxy2 forces a new election and becomes the MASTER for VI_1.

- keepalived log on haproxy2:

```
Nov 14 13:19:01 h1 Keepalived_vrrp[58092]: VRRP_Instance(VI_1) forcing a new MASTER election
Nov 14 13:19:01 h1 Keepalived_vrrp[58092]: VRRP_Instance(VI_1) forcing a new MASTER election
Nov 14 13:19:11 h1 Keepalived_vrrp[58092]: VRRP_Instance(VI_1) Transition to MASTER STATE
Nov 14 13:19:21 h1 Keepalived_vrrp[58092]: VRRP_Instance(VI_1) Entering MASTER STATE
Nov 14 13:19:21 h1 Keepalived_vrrp[58092]: VRRP_Instance(VI_1) setting protocol VIPs.
Nov 14 13:19:21 h1 Keepalived_vrrp[58092]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eno16777736 for 10.1.253.11
Nov 14 13:19:21 h1 Keepalived_healthcheckers[58091]: Netlink reflector reports IP 10.1.253.11 added
Nov 14 13:19:26 h1 Keepalived_vrrp[58092]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eno16777736 for 10.1.253.11
```

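- haproxy2 now holds both VIP1 and VIP2

Bringing haproxy1 back online

On haproxy1 (VI_1), remove the down file from /etc/keepalived/. Once track_script no longer finds the file, chk_down succeeds again, haproxy1's priority is restored and it reclaims VI_1.

- keepalived log on haproxy1: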

```
Nov 14 13:58:02 h1 Keepalived_vrrp[67748]: VRRP_Script(chk_down) succeeded
Nov 14 13:58:12 h1 Keepalived_vrrp[67748]: VRRP_Instance(VI_1) Transition to MASTER STATE
Nov 14 13:58:22 h1 Keepalived_vrrp[67748]: VRRP_Instance(VI_1) Entering MASTER STATE
Nov 14 13:58:22 h1 Keepalived_vrrp[67748]: VRRP_Instance(VI_1) setting protocol VIPs.
Nov 14 13:58:22 h1 Keepalived_vrrp[67748]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eno16777736 for 10.1.253.11
Nov 14 13:58:22 h1 Keepalived_healthcheckers[67747]: Netlink reflector reports IP 10.1.253.11 added
Nov 14 13:58:27 h1 Keepalived_vrrp[67748]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eno16777736 for 10.1.253.11
```

- keepalived log on haproxy2:

```
Nov 14 13:58:12 h1 Keepalived_vrrp[58092]: VRRP_Instance(VI_1) Received higher prio advert
Nov 14 13:58:12 h1 Keepalived_vrrp[58092]: VRRP_Instance(VI_1) Entering BACKUP STATE
Nov 14 13:58:12 h1 Keepalived_vrrp[58092]: VRRP_Instance(VI_1) removing protocol VIPs.
Nov 14 13:58:12 h1 Keepalived_healthcheckers[58091]: Netlink reflector reports IP 10.1.253.11 removed
```

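- haproxy1 again holds VIP1, and haproxy2 holds VIP2

Test results

- The Discuz! X site proxied by haproxy is reachable through either VIP1 or VIP2

- User-uploaded attachments are served by the varnish or nginx servers

Summary

HAProxy is a dedicated, high-performance reverse proxy. It proxies application-layer (HTTP) traffic and, with mode tcp, transport-layer traffic, so it can front web servers, dynamic application engines and databases. It health-checks backend hosts so that failed ones are taken out of rotation, and its built-in stats page gives a convenient view of frontend and backend status and can take backend servers offline or bring them back with a few clicks.

As explained above, URL rewriting can be done on the HAProxy proxy, the Varnish cache or the Nginx hosts; to keep a larger pool of backend hosts manageable, it is usually done on the HAProxy or Varnish server.

HAProxy's single-process, event-driven model lets it handle large numbers of concurrent requests; it stores connection sessions in elastic binary trees, which makes them efficient to manage, and its backend scheduling algorithms allow fine-grained control.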
