Notes on an Alibaba Cloud K8s Deployment: Testing Storage

Posted Sicc1107



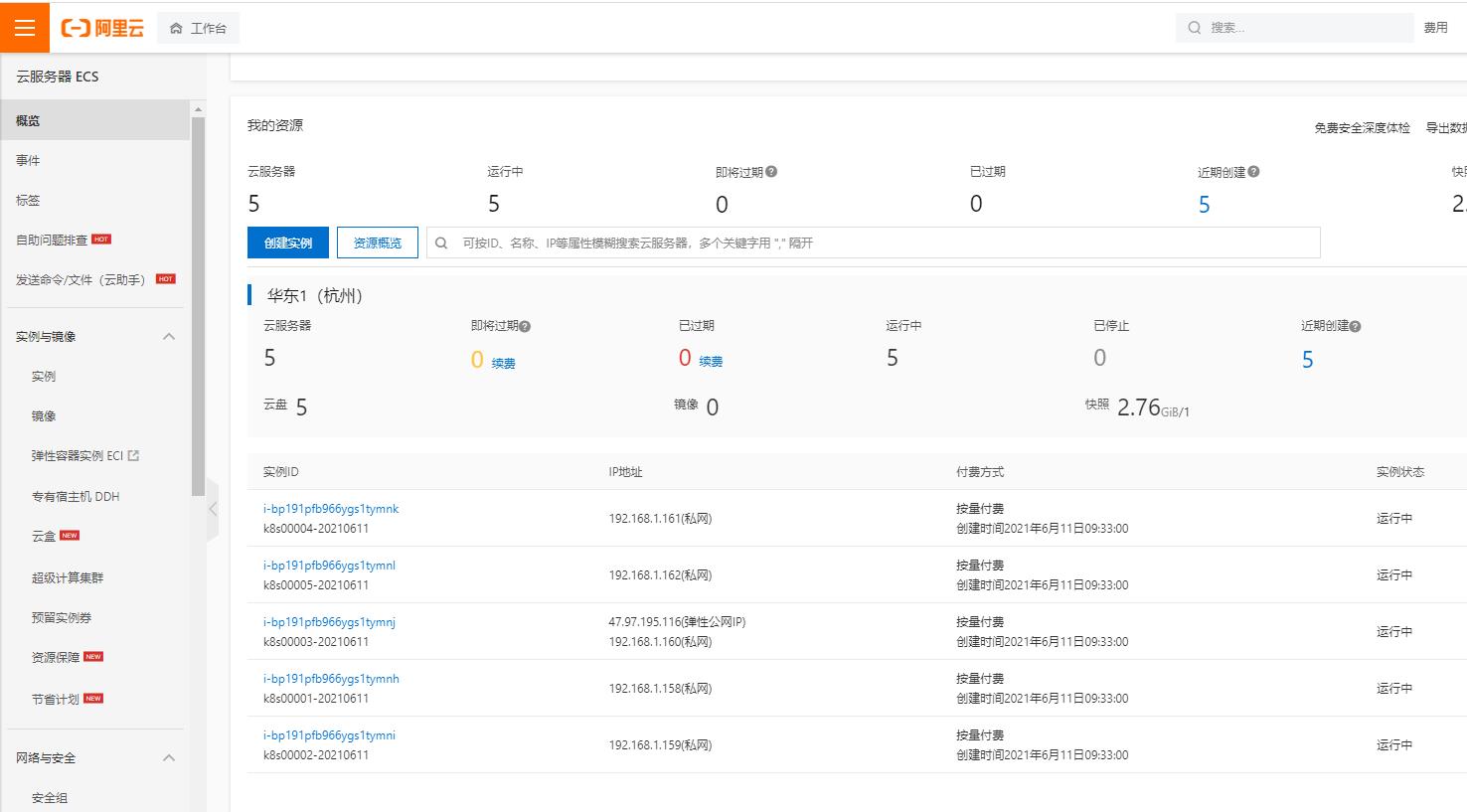

Alibaba Cloud resource preparation

Servers

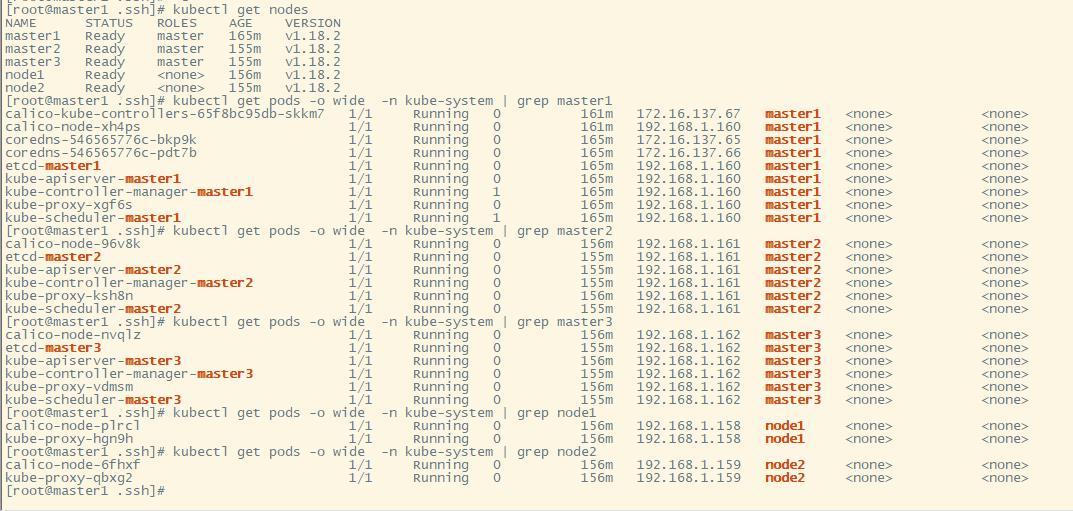

| IP | Role | Resources |
|---|---|---|
| 192.168.1.160 | master1 | 2 cores / 4 GB |
| 192.168.1.161 | master2 | 2 cores / 4 GB |
| 192.168.1.162 | master3 | 2 cores / 4 GB |
| 192.168.1.159 | node2 | 2 cores / 4 GB |
| 192.168.1.158 | node1 | 2 cores / 4 GB |
| 192.168.1.158 | haproxy (same host as node1) | |
| 192.168.1.159 | NFS server (same host as node2) | |
| 47.97.195.116 | Elastic IP (EIP), bound to 192.168.1.160 | |

ecs

密钥对

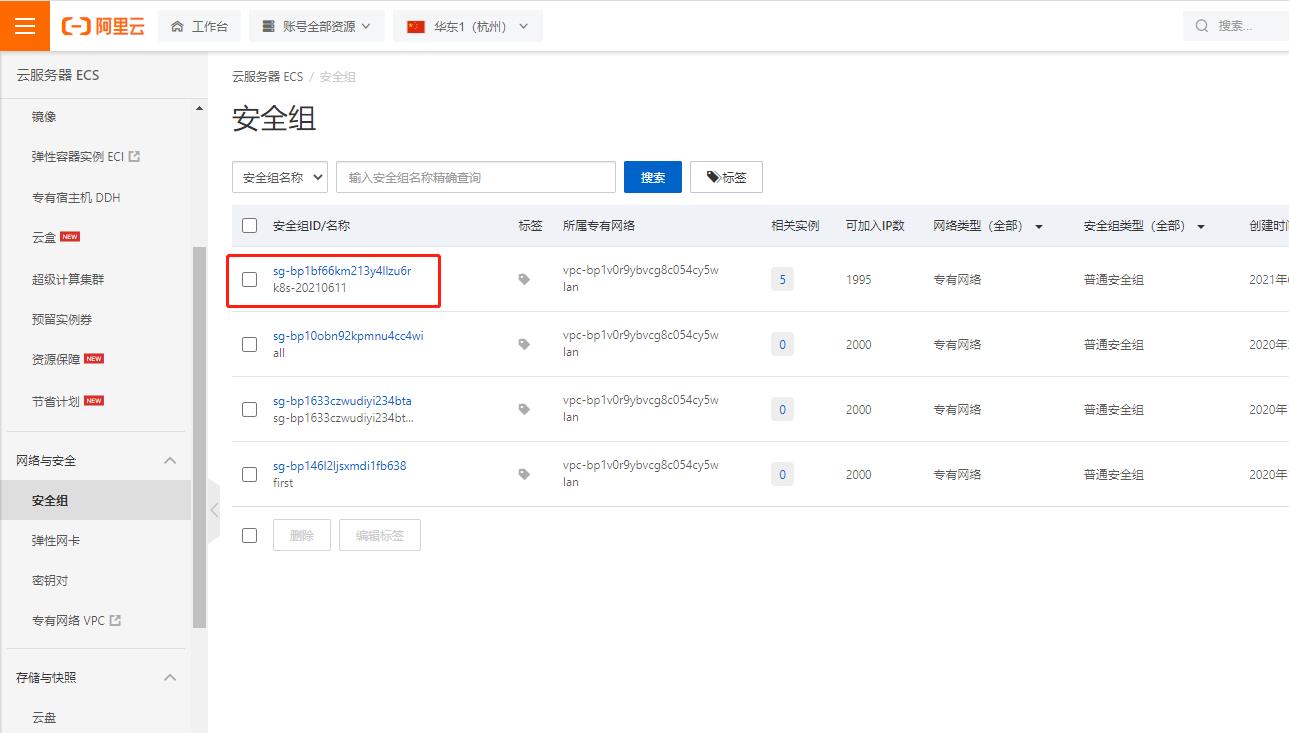

安全组

弹性公网IP

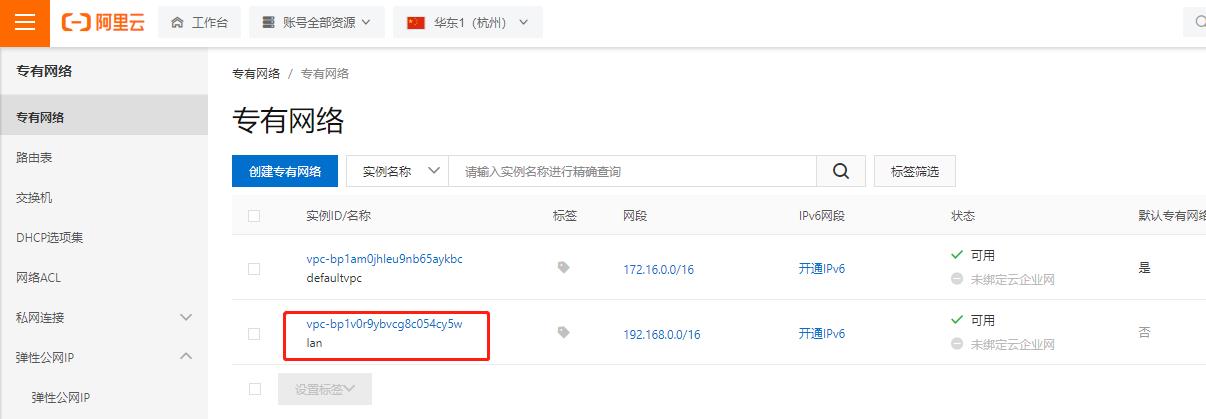

专有网络

交换机

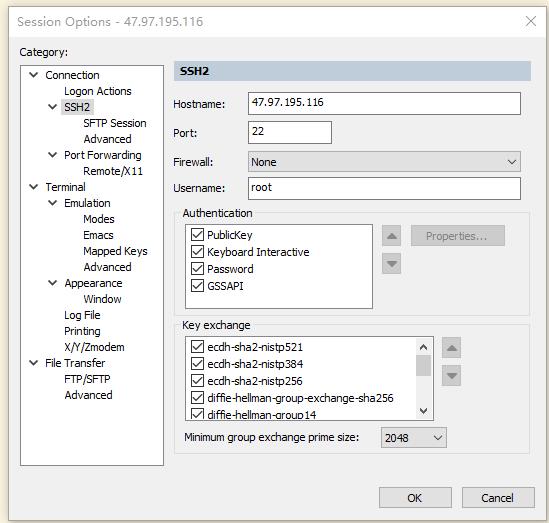

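Connection setup

1 Set a login password on the server bound to the Elastic IP.

2 Open SSH port 22 in the security group.

3 Connect to the Elastic IP server with SecureCRT.

4 Inside the VPC, connect to the other servers with the key pair.

Upload the key pair file sicc.pem to /root/.ssh/ on 192.168.1.160.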

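```bash
chmod 600 /root/.ssh/sicc.pem   # ssh refuses to use a private key that other users can read

vi /root/.bashrc                # append these aliases:
alias go161='ssh -i /root/.ssh/sicc.pem root@192.168.1.161'
alias go162='ssh -i /root/.ssh/sicc.pem root@192.168.1.162'
alias go158='ssh -i /root/.ssh/sicc.pem root@192.168.1.158'
alias go159='ssh -i /root/.ssh/sicc.pem root@192.168.1.159'

source /root/.bashrc            # load the aliases into the current shell
```

You can now hop from 192.168.1.160 to any other server with go161, go162, go158 or go159.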

K8s cluster installation

1 Server initialization

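```bash
# Make sure every machine has the correct time zone and time; add a cron job that syncs with Alibaba Cloud's NTP server
crontab -e
# add this line in the editor:
*/30 * * * * /usr/sbin/ntpdate -u ntp.cloud.aliyuncs.com;clock -w

# Set the hostname on each machine
hostnamectl set-hostname <hostname>

# On every machine, map all hostnames to their IPs; 158 runs haproxy as a layer-4 load balancer for the apiservers
cat << EOF >> /etc/hosts
192.168.1.160 master1
192.168.1.161 master2
192.168.1.162 master3
192.168.1.159 node2
192.168.1.158 node1
192.168.1.158 apiserver-haproxy
EOF
```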

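```bash
# On every machine, set the kernel parameters.
# Load br_netfilter first, otherwise the net.bridge.* keys do not exist yet and sysctl reports an error
modprobe br_netfilter
cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system

# Disable swap on every machine (Alibaba Cloud ECS instances have no swap by default)
swapoff -a
sed -i.bak '/ swap /s/^/#/' /etc/fstab

# Stop firewalld and set SELinux to permissive on every machine (both are already off on Alibaba Cloud ECS)
systemctl stop firewalld
systemctl disable firewalld
setenforce 0
sed -i 's/SELINUX=enforcing/SELINUX=permissive/g' /etc/selinux/config
```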
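Before moving on, the settings above can be spot-checked from /proc and /sys. The Python below is a minimal sketch of such a check. It is not kubeadm's own preflight, which runs automatically during kubeadm init. The helper names are hypothetical, and the file reader is injectable so the logic can be tried without a real node.

```python
from pathlib import Path
from typing import Callable, Dict


def read_file(path: str) -> str:
    """Return the stripped contents of path, or "" if it is missing or unreadable."""
    try:
        return Path(path).read_text().strip()
    except OSError:
        return ""


def preflight(read: Callable[[str], str] = read_file) -> Dict[str, bool]:
    """Hypothetical node check for the sysctl, swap and SELinux settings made above."""
    swaps = read("/proc/swaps").splitlines()
    return {
        "bridge-nf-call-iptables": read("/proc/sys/net/bridge/bridge-nf-call-iptables") == "1",
        "bridge-nf-call-ip6tables": read("/proc/sys/net/bridge/bridge-nf-call-ip6tables") == "1",
        # /proc/swaps holds only its header line when no swap device is active
        "swap off": len(swaps) <= 1,
        # the file is absent when SELinux is disabled; "0" means permissive
        "selinux not enforcing": read("/sys/fs/selinux/enforce") != "1",
    }


if __name__ == "__main__":
    for check, ok in preflight().items():
        print(f"{check:26} {'OK' if ok else 'FAIL'}")
```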

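```bash
# On every machine, add Alibaba Cloud's Kubernetes mirror and Docker's official yum repo
cat << EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
yum install -y yum-utils
yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

# Install a specific Docker version on every machine, then start it now and at boot
yum install -y docker-ce-19.03.8-3 docker-ce-cli-19.03.8-3 containerd.io
systemctl enable --now docker

# Install specific versions of kubeadm, kubelet and kubectl on every machine.
# --disableexcludes=kubernetes ignores any exclude= entries defined for the kubernetes repo,
# so the pinned packages are not filtered out
yum install -y kubelet-1.18.2 kubeadm-1.18.2 kubectl-1.18.2 --disableexcludes=kubernetes
systemctl enable --now kubelet

# kubeadm: the command that bootstraps the cluster
# kubelet: runs on every node and starts pods and containers
# kubectl: the command-line tool for talking to the cluster
```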

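2 Installing the haproxy load balancer on 192.168.1.158

```bash
yum -y install haproxy
# Edit the config file and append the following at the end
vim /etc/haproxy/haproxy.cfg
```

```
listen k8s_master
    bind 192.168.1.158:6443
    mode tcp                 # TCP (layer-4) mode
    maxconn 2000
    balance roundrobin
    timeout server 20000     # replaces the deprecated "srvtimeout 20000"
    server master1 192.168.1.160:6443 check port 6443 inter 5000 rise 3 fall 3 weight 3
    server master2 192.168.1.161:6443 check port 6443 inter 5000 rise 3 fall 3 weight 3
    server master3 192.168.1.162:6443 check port 6443 inter 5000 rise 3 fall 3 weight 3
```

Then start haproxy and enable it at boot with systemctl enable --now haproxy.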
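Each server line above works like this. Haproxy probes port 6443 every 5000 ms (inter). It marks a master down after 3 consecutive failed checks (fall) and back up after 3 consecutive successes (rise). Because all weights are equal, roundrobin simply rotates through the masters that are up. The Python below is a simplified model of that behaviour, not haproxy's implementation. It assumes every server starts as up, and the class and function names are hypothetical.

```python
from dataclasses import dataclass
from itertools import cycle
from typing import List


@dataclass
class Server:
    """One `server` line: up/down state driven by rise/fall counters (simplified model)."""
    name: str
    rise: int = 3
    fall: int = 3
    up: bool = True      # assumption of this sketch: every server starts as up
    streak: int = 0      # consecutive check results that contradict the current state

    def record_check(self, ok: bool) -> None:
        if ok == self.up:
            self.streak = 0              # result agrees with the current state
            return
        self.streak += 1
        needed = self.fall if self.up else self.rise
        if self.streak >= needed:
            self.up = ok                 # flip after `fall` failures or `rise` successes
            self.streak = 0


def roundrobin(servers: List[Server], picks: int) -> List[str]:
    """With equal weights (weight 3 on every line), rotate over the servers that are up."""
    healthy = [s.name for s in servers if s.up]
    if not healthy:
        return []
    rotation = cycle(healthy)
    return [next(rotation) for _ in range(picks)]


masters = [Server("master1"), Server("master2"), Server("master3")]
for _ in range(3):                       # three failed checks in a row (roughly 15 s at inter 5000)
    masters[1].record_check(False)
print(roundrobin(masters, 4))            # ['master1', 'master3', 'master1', 'master3']
```

Until master2 passes three checks in a row again, new connections only go to master1 and master3.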

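3 Configuring the K8s master nodes

```bash
# Run kubeadm init on master1
kubeadm init --kubernetes-version 1.18.2 --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers --control-plane-endpoint apiserver-haproxy:6443 --upload-certs

# --image-repository: by default kubeadm pulls images from k8s.gcr.io, which is unreachable from mainland China, so the Alibaba Cloud mirror is used instead
# --control-plane-endpoint: the hostname (mapped to the load balancer in /etc/hosts) and port shared by all control-plane nodes
# --upload-certs: upload the certificates shared between the masters to the cluster
```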

Output:

```text
Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join apiserver-haproxy:6443 --token i7ffha.cbp9wse6jhy4uz2q \
    --discovery-token-ca-cert-hash sha256:1f084d1ac878308635f1dbe8676bac33fe3df6d52fa212834787a0bc71f1db6d \
    --control-plane --certificate-key e6d08e338ee5e0178a85c01067e223d2a00b5ac0e452bca58561976cf2187dd5

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join apiserver-haproxy:6443 --token i7ffha.cbp9wse6jhy4uz2q \
    --discovery-token-ca-cert-hash sha256:1f084d1ac878308635f1dbe8676bac33fe3df6d52fa212834787a0bc71f1db6d
```

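```bash
# Following the output of step 3, run on master1:
mkdir -p $HOME/.kube
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config

# Install the Calico network plugin (it is highly available as well).
# Note: Calico's default IP pool is 192.168.0.0/16, which overlaps this VPC's 192.168.1.0/24 hosts;
# a non-overlapping pool (CALICO_IPV4POOL_CIDR in calico.yaml, matching kubeadm init --pod-network-cidr) is safer
wget https://docs.projectcalico.org/v3.14/manifests/calico.yaml
kubectl apply -f calico.yaml

# Wait about 10 minutes, then check on master1 that all pods are Running before continuing
kubectl get pods -A -o wide

# If initialization went wrong, reset and run kubeadm init again
kubeadm reset
rm -rf $HOME/.kube/config
```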

```bash
# On a master, check that the API server answers through the load balancer (haproxy must already be configured)
curl -k https://apiserver-haproxy:6443/version
```

```json
{
  "major": "1",
  "minor": "18",
  "gitVersion": "v1.18.2",
  "gitCommit": "52c56ce7a8272c798dbc29846288d7cd9fbae032",
  "gitTreeState": "clean",
  "buildDate": "2020-04-16T11:48:36Z",
  "goVersion": "go1.13.9",
  "compiler": "gc",
  "platform": "linux/amd64"
}
```

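```bash
# The kubeadm init output above already contains the join commands for the other masters and the worker nodes.
# Run them only after every service on master1 is ready.
# The bootstrap token expires after 24 hours by default, and the uploaded certificates used by --certificate-key
# are deleted after 2 hours; after that the printed commands stop working and must be regenerated:
# kubeadm init phase upload-certs --upload-certs   # re-upload the certificates and print a new certificate key
# kubeadm token create --print-join-command        # create a new token and print a worker join command

# Within 2 hours of initializing master1, run on master2 and master3:
kubeadm join apiserver-haproxy:6443 --token i7ffha.cbp9wse6jhy4uz2q \
  --discovery-token-ca-cert-hash sha256:1f084d1ac878308635f1dbe8676bac33fe3df6d52fa212834787a0bc71f1db6d \
  --control-plane --certificate-key e6d08e338ee5e0178a85c01067e223d2a00b5ac0e452bca58561976cf2187dd5

# Within 24 hours (the token lifetime), run on node1 and node2:
kubeadm join apiserver-haproxy:6443 --token i7ffha.cbp9wse6jhy4uz2q \
  --discovery-token-ca-cert-hash sha256:1f084d1ac878308635f1dbe8676bac33fe3df6d52fa212834787a0bc71f1db6d
```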
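The printed join commands depend on two separate deadlines. Every join needs the bootstrap token, which lasts 24 hours by default. An extra master also needs the uploaded certificates, which kubeadm deletes after 2 hours. The sketch below models which command still works unchanged. The init timestamp is made up, and it assumes the token TTL was not changed with --token-ttl.

```python
from datetime import datetime, timedelta
from typing import Dict

TOKEN_TTL = timedelta(hours=24)          # kubeadm's default bootstrap-token TTL
UPLOADED_CERTS_TTL = timedelta(hours=2)  # "uploaded-certs will be deleted in two hours"


def join_commands_valid(init_time: datetime, now: datetime) -> Dict[str, bool]:
    """Which printed `kubeadm join` command still works unchanged at `now`."""
    elapsed = now - init_time
    token_ok = elapsed < TOKEN_TTL
    return {
        "worker": token_ok,                                          # needs only the token
        "control-plane": token_ok and elapsed < UPLOADED_CERTS_TTL,  # also needs the certificate key
    }


init = datetime(2020, 5, 1, 10, 0)       # hypothetical time at which kubeadm init ran
print(join_commands_valid(init, init + timedelta(hours=3)))  # {'worker': True, 'control-plane': False}
```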

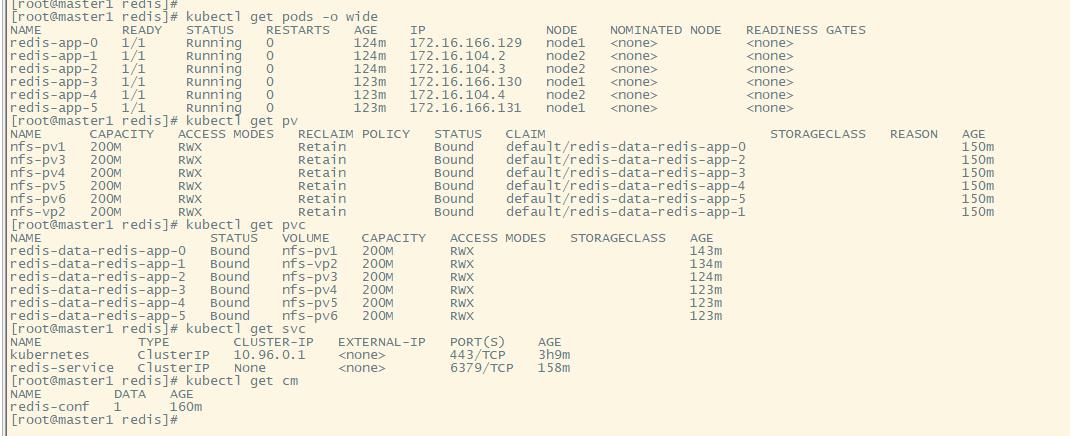

Deploying the Redis cluster

Deploying NFS

```bash
# On the NFS server (192.168.1.159, node2), install the services
yum -y install nfs-utils
yum -y install rpcbind

# Create /etc/exports to define the shared paths
cat > /etc/exports << EOF
/home/k8s/redis/pv1 192.168.1.0/24(rw,sync,no_root_squash)
/home/k8s/redis/pv2 192.168.1.0/24(rw,sync,no_root_squash)
/home/k8s/redis/pv3 192.168.1.0/24(rw,sync,no_root_squash)
/home/k8s/redis/pv4 192.168.1.0/24(rw,sync,no_root_squash)
/home/k8s/redis/pv5 192.168.1.0/24(rw,sync,no_root_squash)
/home/k8s/redis/pv6 192.168.1.0/24(rw,sync,no_root_squash)
EOF

# Create the matching directories (brace expansion creates pv1 to pv6)
mkdir -p /home/k8s/redis/pv{1..6}

# Start the rpcbind and NFS services
systemctl restart rpcbind
systemctl restart nfs
systemctl enable nfs
```
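The six export lines follow one pattern, so they can be generated instead of typed. Keeping the root path in one place also keeps /etc/exports in step with the pv1 to pv6 directories. Below is a minimal Python sketch; the constant and function names are hypothetical.

```python
from typing import List

EXPORT_ROOT = "/home/k8s/redis"
CLIENTS = "192.168.1.0/24"
OPTIONS = "rw,sync,no_root_squash"


def exports_lines(count: int = 6) -> List[str]:
    # No space between the client and "(options)": with a space, the options would
    # apply to all hosts and the 192.168.1.0/24 clients would get the default options.
    return [f"{EXPORT_ROOT}/pv{i} {CLIENTS}({OPTIONS})" for i in range(1, count + 1)]


if __name__ == "__main__":
    print("\n".join(exports_lines()))
```

On a running NFS server, exportfs -ra re-reads /etc/exports after an edit, and exportfs -v lists what is currently exported.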

Install the NFS client on node1 and node2, otherwise they cannot mount the volumes:

```bash
yum -y install nfs-utils
```

Create the PVs

```bash
kubectl create -f pv.yaml
cat pv.yaml
```

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv1
spec:
  capacity:
    storage: 200M
  accessModes:
    - ReadWriteMany
  nfs:
    server: 192.168.1.159
    path: "/home/k8s/redis/pv1"
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv2
spec:
  capacity:
    storage: 200M
  accessModes:
    - ReadWriteMany
  nfs:
    server: 192.168.1.159
    path: "/home/k8s/redis/pv2"
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv3
spec:
  capacity:
    storage: 200M
  accessModes:
    - ReadWriteMany
  nfs:
    server: 192.168.1.159
    path: "/home/k8s/redis/pv3"
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv4
spec:
  capacity:
    storage: 200M
  accessModes:
    - ReadWriteMany
  nfs:
    server: 192.168.1.159
    path: "/home/k8s/redis/pv4"
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv5
spec:
  capacity:
    storage: 200M
  accessModes:
    - ReadWriteMany
  nfs:
    server: 192.168.1.159
    path: "/home/k8s/redis/pv5"
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv6
spec:
  capacity:
    storage: 200M
  accessModes:
    - ReadWriteMany
  nfs:
    server: 192.168.1.159
    path: "/home/k8s/redis/pv6"
```
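The six PV manifests differ only in the index in the name and the path. A small generator built on Python's string.Template avoids copy-paste slips. It is sketched below with a hypothetical function name, and its output is meant to be redirected into pv.yaml (for example python3 gen_pv.py > pv.yaml, where the script name is also hypothetical).

```python
from string import Template

# Hypothetical generator for pv.yaml: one NFS PersistentVolume per export.
PV_TEMPLATE = Template("""\
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv$i
spec:
  capacity:
    storage: 200M
  accessModes:
    - ReadWriteMany
  nfs:
    server: 192.168.1.159
    path: "/home/k8s/redis/pv$i"
""")


def render_pvs(count: int = 6) -> str:
    """Render `count` PV documents separated by YAML '---' lines."""
    docs = [PV_TEMPLATE.substitute(i=i) for i in range(1, count + 1)]
    return "---\n".join(docs)


if __name__ == "__main__":
    print(render_pvs(), end="")
```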

Create the ConfigMap

```bash
kubectl create configmap redis-conf --from-file=redis.conf
kubectl describe cm redis-conf
cat redis.conf
```

```
appendonly yes
cluster-enabled yes
cluster-config-file /var/lib/redis/nodes.conf
cluster-node-timeout 5000
dir /var/lib/redis
port 6379
```
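redis.conf points two settings at /var/lib/redis, which is the mountPath of the redis-data volume in the StatefulSet below. The first is dir, where the append-only file is written. The second is cluster-config-file (nodes.conf), where each node records its cluster ID and its view of the cluster. If either path fell outside that mount, a restarted pod would lose it. The hypothetical helper below checks this, with the mount path copied by hand from redis.yaml.

```python
import posixpath
from typing import Dict

REDIS_CONF = """\
appendonly yes
cluster-enabled yes
cluster-config-file /var/lib/redis/nodes.conf
cluster-node-timeout 5000
dir /var/lib/redis
port 6379
"""

DATA_MOUNT = "/var/lib/redis"   # mountPath of the redis-data volume in redis.yaml


def parse_conf(text: str) -> Dict[str, str]:
    """Parse simple 'key value' lines, skipping blanks and comments."""
    conf: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition(" ")
        conf[key] = value.strip()
    return conf


def on_persistent_volume(path: str, mount: str = DATA_MOUNT) -> bool:
    """True if path is the mount point itself or lies underneath it."""
    path = posixpath.normpath(path)
    return path == mount or path.startswith(mount.rstrip("/") + "/")


conf = parse_conf(REDIS_CONF)
for key in ("dir", "cluster-config-file"):
    print(key, on_persistent_volume(conf[key]))
```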

Create the headless Service

```bash
kubectl create -f headless-service.yaml
cat headless-service.yaml
```

```yaml
apiVersion: v1
kind: Service
metadata:
  name: redis-service
  labels:
    app: redis
spec:
  ports:
    - name: redis-port
      port: 6379
  clusterIP: None
  selector:
    app: redis
    appCluster: redis-cluster
```

Create the Redis cluster nodes

```bash
kubectl create -f redis.yaml
cat redis.yaml
```

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: redis-app
spec:
  serviceName: "redis-service"
  replicas: 6
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
        appCluster: redis-cluster
    spec:
      terminationGracePeriodSeconds: 20
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: app
                      operator: In
                      values:
                        - redis
                topologyKey: kubernetes.io/hostname
      containers:
        - name: redis
          image: redis:latest
          command:
            - "redis-server"
          args:
            - "/etc/redis/redis.conf"
            - "--protected-mode"
            - "no"
          resources:
            requests:
              cpu: "100m"
              memory: "100Mi"
          ports:
            - name: redis
              containerPort: 6379
              protocol: "TCP"
            - name: cluster
              containerPort: 16379
              protocol: "TCP"
          volumeMounts:
            - name: "redis-conf"
              mountPath: "/etc/redis"
            - name: "redis-data"
              mountPath: "/var/lib/redis"
      volumes:
        - name: "redis-conf"
          configMap:
            name: "redis-conf"
            items:
              - key: "redis.conf"
                path: "redis.conf"
  volumeClaimTemplates:
    - metadata:
        name: redis-data
      spec:
        accessModes: [ "ReadWriteMany" ]
        resources:
          requests:
            storage: 200M
```
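Because serviceName points at the headless redis-service, each pod gets a stable name from redis-app-0 to redis-app-5. Each pod also gets a DNS record of the form pod.service.namespace.svc.cluster-domain, which survives rescheduling, unlike the pod IP. The sketch below just spells those names out. It assumes the default namespace (the manifests set none) and the default cluster.local domain, and the function name is hypothetical.

```python
from typing import List


def pod_dns_names(statefulset: str, service: str, replicas: int,
                  namespace: str = "default",
                  cluster_domain: str = "cluster.local") -> List[str]:
    """Stable DNS names of StatefulSet pods behind a headless Service."""
    return [f"{statefulset}-{i}.{service}.{namespace}.svc.{cluster_domain}"
            for i in range(replicas)]


for name in pod_dns_names("redis-app", "redis-service", 6):
    print(name)
```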

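Questions

1 Why does static PV storage work here? Redis is deployed as a StatefulSet, so a PVC is created automatically for each pod, and each PVC is then bound automatically to a PV. Kubernetes matches the PVs against the spec in volumeClaimTemplates (access mode, requested size and storage class).

2 For stateful workloads it is better to use a StorageClass for dynamic provisioning, so that a PV is created automatically for each pod.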
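The matching in question 1 can be modelled roughly as follows. A free PV qualifies if it has the same storage class as the claim, offers every requested access mode, and has at least the requested capacity. Among the candidates, the smallest one is chosen. The Python below is a simplified sketch that ignores label selectors, volumeMode and node affinity, and all names in it are hypothetical. With six equal PVs, which claim lands on which PV is not guaranteed in a real cluster; the sketch just takes them in list order.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional

UNITS = {"M": 10**6, "G": 10**9, "Mi": 2**20, "Gi": 2**30}


def to_bytes(q: str) -> int:
    """Parse the few Kubernetes quantity suffixes used in this post."""
    for suffix in ("Mi", "Gi", "M", "G"):        # check two-letter suffixes first
        if q.endswith(suffix):
            return int(q[: -len(suffix)]) * UNITS[suffix]
    return int(q)


@dataclass
class PV:
    name: str
    capacity: str
    modes: FrozenSet[str]
    storage_class: str = ""
    claim: Optional[str] = None                  # name of the bound PVC, if any


@dataclass
class PVC:
    name: str
    request: str
    modes: FrozenSet[str]
    storage_class: str = ""


def bind(claim: PVC, pvs: List[PV]) -> Optional[PV]:
    """Bind to the smallest free PV with the same class, all requested modes and enough space."""
    candidates = [
        pv for pv in pvs
        if pv.claim is None
        and pv.storage_class == claim.storage_class
        and claim.modes <= pv.modes
        and to_bytes(pv.capacity) >= to_bytes(claim.request)
    ]
    if not candidates:
        return None                              # the PVC stays Pending
    best = min(candidates, key=lambda pv: to_bytes(pv.capacity))
    best.claim = claim.name
    return best


RWX = frozenset({"ReadWriteMany"})
pvs = [PV(f"nfs-pv{i}", "200M", RWX) for i in range(1, 7)]
for ordinal in range(6):
    pvc = PVC(f"redis-data-redis-app-{ordinal}", "200M", RWX)
    pv = bind(pvc, pvs)
    print(pvc.name, "->", pv.name if pv else "Pending")
```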

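Static PVs

With static provisioning you create the PVs by hand (a PV is a cluster-scoped resource) and then create a PVC (a namespaced resource); Kubernetes binds the PVC to a PV that satisfies its requirements.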

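The accessModes field specifies how the PV can be mounted:

- ReadWriteOnce (RWO): the volume can be mounted read-write by a single node (several pods on that node can still share it);

- ReadOnlyMany (ROX): the volume can be mounted on many nodes and used read-only by many pods;

- ReadWriteMany (RWX): the volume can be mounted read-write on many nodes and used by many pods at the same time.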

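A newly created PV is in the Available phase. Its ReclaimPolicy field sets what happens to the PV once its claim is released. For manually created PVs the default is Retain: the data is kept after use and must be cleaned up by hand. Dynamically provisioned PVs inherit the StorageClass's reclaimPolicy, which defaults to Delete. The other two policies are:

- Recycle: the data in the PV is scrubbed so that the PV can be reused (deprecated, and only supported by NFS and hostPath);

- Delete: the PV and its backing storage are deleted; this policy is common with cloud storage services such as AWS EBS.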

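Kubernetes binds a PVC to a PV that satisfies its requirements. In the example below, the PVC sets storageClassName to manual, so it only matches PVs whose storageClassName is also manual; as soon as a suitable PV is found, the PVC is bound to it.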

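A PV is in one of five phases:

- Pending: the PV has not yet been fully created in the backend storage system;

- Available: the PV is free and not yet bound to any PVC;

- Bound: the PV is bound to a PVC;

- Released: the bound PVC has been deleted, but the resource has not been reclaimed yet;

- Failed: automatic reclamation failed.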

A PVC is in one of three phases:

- Pending: not yet bound to any PV;

- Bound: bound to a PV;

- Lost: the bound PV has been lost.
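The reclaim policy and the phases above fit together as follows when the PVC of a Bound PV is deleted. The Python below is a simplified model of that transition, not controller code. It uses None to mean the PV object itself is gone, and the function name is hypothetical.

```python
from enum import Enum
from typing import Optional


class Phase(Enum):
    PENDING = "Pending"
    AVAILABLE = "Available"
    BOUND = "Bound"
    RELEASED = "Released"
    FAILED = "Failed"


def after_claim_deleted(policy: str, reclaim_ok: bool = True) -> Optional[Phase]:
    """Phase of a Bound PV after its PVC is deleted (simplified; None = PV object removed)."""
    if policy == "Retain":
        # data kept; the PV cannot be claimed again until an admin cleans it up
        return Phase.RELEASED
    if policy == "Recycle":
        # basic scrub of the volume, after which the PV can be claimed again
        return Phase.AVAILABLE if reclaim_ok else Phase.FAILED
    if policy == "Delete":
        # the PV and its backing storage are removed
        return None if reclaim_ok else Phase.FAILED
    raise ValueError(f"unknown reclaim policy: {policy}")


for policy in ("Retain", "Recycle", "Delete"):
    print(policy, after_claim_deleted(policy))
```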

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: task-pv-volume    # name of the PV
  labels:                 # labels on the PV
    type: local
spec:
  storageClassName: manual
  capacity:               # capacity of the PV
    storage: 10Gi
  accessModes:            # access modes of the PV
    - ReadWriteOnce
  hostPath:               # this PV uses the hostPath type; other plugins can be attached through CSI
    path: "/mnt/data"
```

task-pvc.yaml

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: task-pv-claim
  namespace: lab
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 3Gi
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: task-demo
  namespace: lab
  labels:
    app: task-demo
spec:
  containers:
    - image: nginx
      name: task-demo
      ports:
        - name: web
          containerPort: 80
      volumeMounts:
        - mountPath: '/usr/share/nginx/html'
          name: task-pv
  volumes:
    - name: task-pv
      persistentVolumeClaim:
        claimName: task-pv-claim    # must match the PVC's metadata.name
```

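Dynamic PVs

A dynamically provisioned PV needs some parameters to be created. These are described by a StorageClass object, and a cluster can define many StorageClasses.

The annotation storageclass.kubernetes.io/is-default-class marks the default StorageClass, so a new PVC that does not set storageClassName automatically gets the default class. The provisioner field is required; it names the volume plugin that creates the PVs.

First a StorageClass is defined. When a PVC that uses it is created (typically referenced by a Pod), Kubernetes calls the plugin named by the StorageClass's provisioner to create a PV. Once the PV is created, it is bound to the PVC and mounted into the Pod.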

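Using dynamic PVCs in a StatefulSet

In a StatefulSet, each pod stores different volume data, and each pod must stay bound to its own volume. A single PV and PVC referenced from the pod template cannot express this, so volumeClaimTemplates is used instead.

With this template, a separate PVC is generated for each pod and bound to a PV. Each PVC name is the template name followed by the pod name, so it carries the pod's ordinal: for pod redis-app-0 the claim is redis-data-redis-app-0.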

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: fast-rbd-sc
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: kubernetes.io/rbd   # required: the volume plugin that creates the PV's physical resource
parameters:                      # plugin-specific parameters
  monitors: 10.16.153.105:6789
  adminId: kube
  adminSecretName: ceph-secret
  adminSecretNamespace: kube-system
  pool: kube
  userId: kube
  userSecretName: ceph-secret-user
  userSecretNamespace: default
  fsType: ext4
  imageFormat: "2"
  imageFeatures: "layering"
```
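The default-class annotation only affects PVCs that omit storageClassName. An explicit empty string means "no class" and binds only to class-less static PVs. The sketch below models that rule, assuming the DefaultStorageClass admission plugin is enabled (it is on by default). The function name is hypothetical. This rule matters for the Redis StatefulSet above: its volumeClaimTemplates omit storageClassName, so if fast-rbd-sc were installed as the default, new redis-data claims would get that class and would no longer bind to the class-less NFS PVs.

```python
from typing import Dict, List, Optional

DEFAULT_ANNOTATION = "storageclass.kubernetes.io/is-default-class"


def effective_class(requested: Optional[str], classes: List[Dict]) -> str:
    """Storage class a new PVC ends up with (simplified model of the default-class rule).

    requested=None -> storageClassName omitted from the PVC
    requested=""   -> explicitly "no class": binds only to PVs without a class
    """
    if requested is not None:
        return requested
    defaults = [c["name"] for c in classes
                if c.get("annotations", {}).get(DEFAULT_ANNOTATION) == "true"]
    if len(defaults) > 1:
        # behaviour with several defaults differs between Kubernetes versions; the sketch refuses
        raise ValueError(f"more than one default StorageClass: {defaults}")
    return defaults[0] if defaults else ""   # "": no class, so only class-less static PVs match


classes = [{"name": "fast-rbd-sc", "annotations": {DEFAULT_ANNOTATION: "true"}}]
print(repr(effective_class(None, classes)))   # 'fast-rbd-sc'
print(repr(effective_class("", classes)))     # ''
print(repr(effective_class(None, [])))        # ''
```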

References

https://blog.51cto.com/leejia/2495558#h7

https://blog.csdn.net/zhutongcloud/article/details/90768390
