Analyzing anonymous page (anonymous_page) mappings

Posted Loopers


This post continues the analysis of the dump captured in the previous malloc article (探秘malloc是如何申请内存的, "how malloc requests memory").

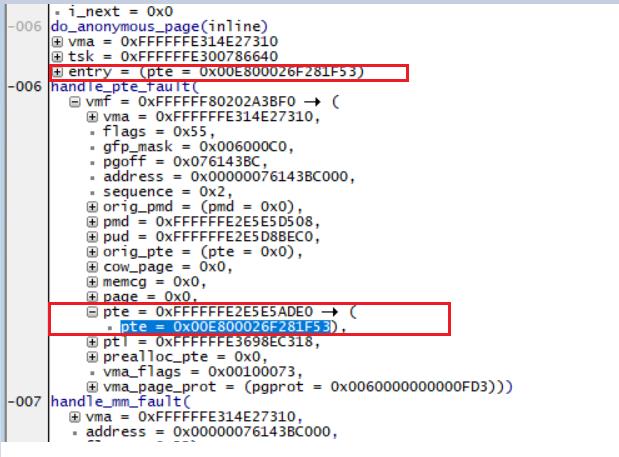

-006|do_anonymous_page(inline)

| vma = 0xFFFFFFE314E27310

| tsk = 0xFFFFFFE300786640

| entry = (pte = 0x00E800026F281F53)

-006|handle_pte_fault(

| vmf = 0xFFFFFF80202A3BF0)

-007|handle_mm_fault(

| vma = 0xFFFFFFE314E27310,

| address = 0x00000076143BC000,

| flags = 0x55)

-008|do_page_fault(

| addr = 0x00000076143BC008,

| esr = 0x92000047,

| regs = 0xFFFFFF80202A3EC0)

| vma = 0xFFFFFFE314E27310

| mm_flags = 0x55

| vm_flags = 0x2

| major = 0x0

| tsk = 0xFFFFFFE300786640

| mm = 0xFFFFFFE2EBB33440

-009|test_ti_thread_flag(inline)

-009|do_translation_fault(

| addr = 0x00000076143BC008,

| esr = 0x92000047,

| regs = 0xFFFFFF80202A3EC0)

-010|do_mem_abort(

| addr = 0x00000076143BC008,

| esr = 0x92000047,

| regs = 0xFFFFFF80202A3EC0)

-011|el0_da(asm)

-->|exception

Now let's walk through the code.
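Before stepping into do_anonymous_page, it helps to decode two values from the dump: esr = 0x92000047 and flags = 0x55. The sketch below is only an illustration. The ESR field positions follow the ARMv8-A ESR_ELx layout, and the FAULT_FLAG_* values are assumed to match a 4.x-era include/linux/mm.h; the helper names are made up for this example.

```c
#include <assert.h>
#include <stdint.h>

/* ESR_ELx layout (ARMv8-A): EC in bits [31:26], ISS in bits [24:0]. */
#define ESR_EC_DABT_LOW 0x24u /* data abort taken from a lower exception level */
#define DFSC_TRANS_L3   0x07u /* translation fault, level 3 */

/* Fault flags; values assumed from a 4.x-era include/linux/mm.h. */
#define FAULT_FLAG_WRITE       0x01u
#define FAULT_FLAG_ALLOW_RETRY 0x04u
#define FAULT_FLAG_KILLABLE    0x10u
#define FAULT_FLAG_USER        0x40u

/* Hypothetical helpers that extract single fields from an ESR value. */
unsigned esr_ec(uint32_t esr)   { return (esr >> 26) & 0x3Fu; }
unsigned esr_dfsc(uint32_t esr) { return esr & 0x3Fu; }
unsigned esr_wnr(uint32_t esr)  { return (esr >> 6) & 0x1u; } /* 1 = write */

/* Checks that the values from the dump decode as described in the text. */
void check_dump_values(void)
{
    const uint32_t esr = 0x92000047u;
    const unsigned flags = 0x55u;

    assert(esr_ec(esr) == ESR_EC_DABT_LOW); /* data abort from EL0 */
    assert(esr_dfsc(esr) == DFSC_TRANS_L3); /* missing level-3 (PTE) entry */
    assert(esr_wnr(esr) == 1);              /* caused by a write */
    assert(flags == (FAULT_FLAG_WRITE | FAULT_FLAG_ALLOW_RETRY |
                     FAULT_FLAG_KILLABLE | FAULT_FLAG_USER));
}
```

Read this way, the fault is a user-space write to an address whose PTE is still empty, which is why the walk-through below ends up on the write path rather than the zero-page path.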

static vm_fault_t do_anonymous_page(struct vm_fault *vmf)

struct vm_area_struct *vma = vmf->vma;

struct mem_cgroup *memcg;

struct page *page;

vm_fault_t ret = 0;

pte_t entry;

/* File mapping without ->vm_ops ? */

if (vmf->vma_flags & VM_SHARED)

return VM_FAULT_SIGBUS;

if (pte_alloc(vma->vm_mm, vmf->pmd, vmf->address))

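return VM_FAULT_OOM;

- If the VMA is shared (VM_SHARED) yet ends up on this path, it is a file mapping that provides no vma->vm_ops, so the fault fails with VM_FAULT_SIGBUS.

- If the PTE table does not exist yet, pte_alloc allocates one and links it into the PMD entry; if that allocation fails, the fault returns VM_FAULT_OOM.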

/* Use the zero-page for reads */

if (!(vmf->flags & FAULT_FLAG_WRITE) &&

!mm_forbids_zeropage(vma->vm_mm)) {

entry = pte_mkspecial(pfn_pte(my_zero_pfn(vmf->address),

vmf->vma_page_prot));

if (!pte_map_lock(vmf))

return VM_FAULT_RETRY;

if (!pte_none(*vmf->pte))

goto unlock;

ret = check_stable_address_space(vma->vm_mm);

if (ret)

goto unlock;

/*

* Don't call the userfaultfd during the speculative path.

* We already checked for the VMA to not be managed through

* userfaultfd, but it may be set in our back once we have lock

* the pte. In such a case we can ignore it this time.

*/

if (vmf->flags & FAULT_FLAG_SPECULATIVE)

goto setpte;

/* Deliver the page fault to userland, check inside PT lock */

if (userfaultfd_missing(vma)) {

pte_unmap_unlock(vmf->pte, vmf->ptl);

return handle_userfault(vmf, VM_UFFD_MISSING);

}

goto setpte;

}

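- If the fault was not caused by a write (so it was a read) and the mm does not forbid the zero page, the address is mapped to the single, globally shared zero page. This is an efficiency measure: no physical page has to be allocated until the first write.

- pte_mkspecial builds a special page table entry that maps the dedicated zero page, which is exactly one page in size.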

#define my_zero_pfn(addr) page_to_pfn(ZERO_PAGE(addr))

/*

* ZERO_PAGE is a global shared page that is always zero: used

* for zero-mapped memory areas etc..

*/

extern unsigned long empty_zero_page[PAGE_SIZE / sizeof(unsigned long)];

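#define ZERO_PAGE(vaddr) phys_to_page(__pa_symbol(empty_zero_page))

- pte_map_lock: uses the pmd and the address to locate the matching entry in the PTE table, and takes the page table lock.

- !pte_none(*vmf->pte): the page table entry is not empty. On a first access it should still be empty, so a non-empty entry most likely means another thread sharing this mm handled the same fault first; jump to unlock.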
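The read and write paths can be triggered from user space. The following is a minimal sketch, assuming a Linux system with MAP_ANONYMOUS; the function name is made up for this example. A program cannot observe which physical page backs the mapping, only that the first read returns zero and that a later write sticks.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <stddef.h>
#include <sys/mman.h>
#include <unistd.h>

/*
 * Maps one private anonymous page, reads it before writing (read fault),
 * then writes to it (write fault on the same page).
 * Returns the byte seen by the first read, -1 if mmap failed,
 * or -2 if the page did not behave as expected after the write.
 */
int read_then_write_anon_page(void)
{
    long page_size = sysconf(_SC_PAGESIZE);
    assert(page_size > 8);

    unsigned char *p = mmap(NULL, (size_t)page_size, PROT_READ | PROT_WRITE,
                            MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED)
        return -1;

    volatile unsigned char *vp = p;
    int first = vp[0];   /* read fault: the kernel may map the zero page here */
    vp[8] = 0x5A;        /* write fault: a private page is needed from now on */
    int ok = (vp[8] == 0x5A) && (vp[0] == 0);

    munmap(p, (size_t)page_size);
    return ok ? first : -2;
}
```

The write at offset 8 mirrors the dump, where the faulting address 0x00000076143BC008 is 8 bytes into the page.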

/* Allocate our own private page. */

if (unlikely(anon_vma_prepare(vma)))

goto oom;

page = alloc_zeroed_user_highpage_movable(vma, vmf->address);

if (!page)

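goto oom;

- anon_vma_prepare attaches an anon_vma to the VMA, allocating one if needed; if the VMA already has one, this step is skipped.

- alloc_zeroed_user_highpage_movable is where the physical page is actually allocated (zero-filled), preferring highmem.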

static inline struct page *

alloc_zeroed_user_highpage_movable(struct vm_area_struct *vma,

unsigned long vaddr)

{

#ifndef CONFIG_CMA

return __alloc_zeroed_user_highpage(__GFP_MOVABLE, vma, vaddr);

#else

return __alloc_zeroed_user_highpage(__GFP_MOVABLE|__GFP_CMA, vma,

vaddr);

#endif

}

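- Without CONFIG_CMA, the page is allocated from the movable area of high memory.

- With CONFIG_CMA, the page is allocated from either the movable area of high memory or the CMA region.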

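entry = mk_pte(page, vmf->vma_page_prot);

if (vmf->vma_flags & VM_WRITE)

entry = pte_mkwrite(pte_mkdirty(entry));

if (!pte_map_lock(vmf)) {

ret = VM_FAULT_RETRY;

goto release;

}

if (!pte_none(*vmf->pte))

goto unlock_and_release;

- This is essentially the same as the read path, except that the read path points the entry at the zero page, while here the entry is built from the physical page that was just allocated.

- If the VMA is writable (VM_WRITE), the entry also gets the dirty flag (PTE_DIRTY) and the write flag (PTE_WRITE).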
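How the entry value comes together can be modelled in a few lines. This is a simplified user-space sketch, not the kernel's code: the bit positions are the arm64 ones as I understand them (PTE_RDONLY = bit 7, PTE_WRITE = bit 51, PTE_DIRTY = bit 55), the model_* names are invented, and the base protection value 0x0060000000000FD3 is an assumption chosen so that the result can be compared with the entry value in the dump.

```c
#include <assert.h>
#include <stdint.h>

#define PAGE_SHIFT    12
#define PTE_RDONLY    (1ULL << 7)
#define PTE_WRITE     (1ULL << 51) /* shares its bit with PTE_DBM */
#define PTE_DIRTY     (1ULL << 55) /* software dirty bit */
#define PTE_ADDR_MASK 0x0000FFFFFFFFF000ULL

/* Model of mk_pte/pfn_pte: page frame number plus protection bits. */
uint64_t model_mk_pte(uint64_t pfn, uint64_t prot)
{
    assert((prot & PTE_ADDR_MASK) == 0); /* prot must not overlap the address */
    return (pfn << PAGE_SHIFT) | prot;
}

/* Model of pte_mkdirty: set the software dirty bit. */
uint64_t model_pte_mkdirty(uint64_t pte)
{
    pte |= PTE_DIRTY;
    if (pte & PTE_WRITE)
        pte &= ~PTE_RDONLY;
    return pte;
}

/* Model of pte_mkwrite: mark writable and drop the read-only bit. */
uint64_t model_pte_mkwrite(uint64_t pte)
{
    pte |= PTE_WRITE;
    pte &= ~PTE_RDONLY;
    return pte;
}

/* Mirrors the two statements at the top of the write path. */
uint64_t model_build_anon_pte(uint64_t pfn, uint64_t prot, int vma_writable)
{
    uint64_t entry = model_mk_pte(pfn, prot);
    if (vma_writable)
        entry = model_pte_mkwrite(model_pte_mkdirty(entry));
    return entry;
}
```

With pfn 0x26F281 and the assumed base protection, model_build_anon_pte(0x26F281, 0x0060000000000FD3, 1) yields 0x00E800026F281F53, the same value the dump shows for entry.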

inc_mm_counter_fast(vma->vm_mm, MM_ANONPAGES);

__page_add_new_anon_rmap(page, vma, vmf->address, false);

mem_cgroup_commit_charge(page, memcg, false, false);

__lru_cache_add_active_or_unevictable(page, vmf->vma_flags);

setpte:

set_pte_at(vma->vm_mm, vmf->address, vmf->pte, entry);

/* No need to invalidate - it was non-present before */

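update_mmu_cache(vma, vmf->address, vmf->pte);

- inc_mm_counter_fast increments the mm's count of anonymous pages (MM_ANONPAGES).

- __page_add_new_anon_rmap sets up the reverse mapping for the new page.

- __lru_cache_add_active_or_unevictable adds the page to an LRU list, which page reclaim uses later.

- set_pte_at writes the page table entry; the value stored in the PTE is entry.

- update_mmu_cache updates the MMU cache. On arm64 it is an empty function; the actual work happens in set_pte_at.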

/*

* PTE bits configuration in the presence of hardware Dirty Bit Management

* (PTE_WRITE == PTE_DBM):

*

* Dirty Writable | PTE_RDONLY PTE_WRITE PTE_DIRTY (sw)

* 0 0 | 1 0 0

* 0 1 | 1 1 0

* 1 0 | 1 0 1

* 1 1 | 0 1 x

*

* When hardware DBM is not present, the software PTE_DIRTY bit is updated via

* the page fault mechanism. Checking the dirty status of a pte becomes:

*

* PTE_DIRTY || (PTE_WRITE && !PTE_RDONLY)

*/

static inline void set_pte_at(struct mm_struct *mm, unsigned long addr,

pte_t *ptep, pte_t pte)

{

pte_t old_pte;

if (pte_present(pte) && pte_user_exec(pte) && !pte_special(pte))

__sync_icache_dcache(pte);

/*

* If the existing pte is valid, check for potential race with

* hardware updates of the pte (ptep_set_access_flags safely changes

* valid ptes without going through an invalid entry).

*/

old_pte = READ_ONCE(*ptep);

if (IS_ENABLED(CONFIG_DEBUG_VM) && pte_valid(old_pte) && pte_valid(pte) &&

(mm == current->active_mm || atomic_read(&mm->mm_users) > 1)) {

VM_WARN_ONCE(!pte_young(pte),

"%s: racy access flag clearing: 0x%016llx -> 0x%016llx",

__func__, pte_val(old_pte), pte_val(pte));

VM_WARN_ONCE(pte_write(old_pte) && !pte_dirty(pte),

"%s: racy dirty state clearing: 0x%016llx -> 0x%016llx",

__func__, pte_val(old_pte), pte_val(pte));

}

set_pte(ptep, pte);

}

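- If the pte is present, user-executable, and not a special pte, the instruction and data caches are synchronized.

- set_pte is then called to store entry as the content of the pte.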
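The dirty rule from the comment table and the icache condition in set_pte_at can be checked against the PTE value from the dump. This is a simplified sketch with invented model_* names; the bit positions are the arm64 ones as I understand them, and pte_present is reduced to the valid bit for brevity.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define PTE_VALID   (1ULL << 0)
#define PTE_RDONLY  (1ULL << 7)
#define PTE_WRITE   (1ULL << 51) /* == PTE_DBM */
#define PTE_UXN     (1ULL << 54) /* user execute-never */
#define PTE_DIRTY   (1ULL << 55) /* software dirty bit */
#define PTE_SPECIAL (1ULL << 56)

/* Dirty rule from the comment: PTE_DIRTY || (PTE_WRITE && !PTE_RDONLY). */
bool model_pte_dirty(uint64_t pte)
{
    return (pte & PTE_DIRTY) || ((pte & PTE_WRITE) && !(pte & PTE_RDONLY));
}

/* Condition under which set_pte_at would call __sync_icache_dcache. */
bool model_needs_icache_sync(uint64_t pte)
{
    bool present = (pte & PTE_VALID) != 0; /* simplification of pte_present */
    bool user_exec = !(pte & PTE_UXN);
    bool special = (pte & PTE_SPECIAL) != 0;
    return present && user_exec && !special;
}

/* Walks the four rows of the Dirty/Writable table in the comment. */
void model_check_dbm_table(void)
{
    assert(!model_pte_dirty(PTE_RDONLY));             /* clean, read-only */
    assert(!model_pte_dirty(PTE_RDONLY | PTE_WRITE)); /* clean, writable  */
    assert(model_pte_dirty(PTE_RDONLY | PTE_DIRTY));  /* dirty, read-only */
    assert(model_pte_dirty(PTE_WRITE));               /* dirty, writable  */
}
```

For the dump's entry 0x00E800026F281F53, model_pte_dirty returns true and model_needs_icache_sync returns false, because the UXN bit is set on this data page.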

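So which physical address does 0x00000076143BC000 actually map to? Let's work it out. Below, rd(x) denotes the entry read from address x, and the low attribute bits of each table entry (the trailing 0x003) are masked off before it is used as the base of the next table.

pgd_index = (0x00000076143BC000 >> 30) & (0x200 - 1) = 0x1D8

pgd entry address = 0xFFFFFFE2E5D8B000 + 0x1D8 * 8 = 0xFFFFFFE2E5D8BEC0, rd(0xFFFFFFE2E5D8BEC0) = 0xE5E5D003

pmd_index = (0x00000076143BC000 >> 21) & 0x1FF = 0xA1

pmd entry address = 0xE5E5D000 + 0xA1 * 8 = 0xE5E5D508, rd(C:0xE5E5D508) = 0xE5E5A003

pte_index = (0x00000076143BC000 >> 12) & (0x200 - 1) = 0x1BC

pte entry address = 0xE5E5A000 + 0x1BC * 8 = 0xE5E5ADE0, rd(0xE5E5ADE0) = 0xE800026F281F53

pfn = (0xE800026F281F53 >> 12) & 0xFFFFFFFFF = 0x26F281

phy = (0x26F281 << 12) + offset = 0x26F281000 + 0x000 = 0x26F281000

The PTE value read back here, 0xE800026F281F53, is the same value that the dump shows for entry in do_anonymous_page.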
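The same arithmetic can be written as a small C sketch. It assumes what the shifts above imply: 4 KB pages and three levels with 512 entries of 8 bytes each. The struct and function names are made up for this example, and the table contents still have to be read from the dump by hand.

```c
#include <assert.h>
#include <stdint.h>

#define PAGE_SHIFT     12
#define PMD_SHIFT      21
#define PGDIR_SHIFT    30
#define PTRS_PER_TABLE 512ULL /* 0x200 entries per table */

struct va_split {
    unsigned pgd_index;
    unsigned pmd_index;
    unsigned pte_index;
    unsigned offset;
};

/* Splits a virtual address into its three table indices and page offset. */
struct va_split split_va(uint64_t va)
{
    struct va_split s;
    s.pgd_index = (unsigned)((va >> PGDIR_SHIFT) & (PTRS_PER_TABLE - 1));
    s.pmd_index = (unsigned)((va >> PMD_SHIFT) & (PTRS_PER_TABLE - 1));
    s.pte_index = (unsigned)((va >> PAGE_SHIFT) & (PTRS_PER_TABLE - 1));
    s.offset    = (unsigned)(va & ((1ULL << PAGE_SHIFT) - 1));
    return s;
}

/* Address of entry `index` in a table; low attribute bits are masked off. */
uint64_t entry_addr(uint64_t table, unsigned index)
{
    assert(index < PTRS_PER_TABLE);
    return (table & ~0xFFFULL) + (uint64_t)index * 8;
}

/* Physical address from a PTE value, using the 36-bit pfn mask from above. */
uint64_t pte_to_phys(uint64_t pte, unsigned offset)
{
    uint64_t pfn = (pte >> PAGE_SHIFT) & 0xFFFFFFFFFULL;
    return (pfn << PAGE_SHIFT) + offset;
}
```

For example, split_va(0x00000076143BC000) gives indices 0x1D8, 0xA1 and 0x1BC, and pte_to_phys(0xE800026F281F53, 0) gives 0x26F281000. For the actual faulting address 0x00000076143BC008 the offset is 8, so the byte being written lives at 0x26F281008.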
