Animal Recognition with Xception (TensorFlow)

Posted csp_


Preface: This article was compiled by the editors of 小常识网 (cha138.com). It covers animal recognition with Xception (TensorFlow), and we hope you find it useful.

Event page: CSDN 21-Day Learning Challenge


1. Task Overview

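The dataset is organized as follows:

data

├── cat (folder containing 1000 images)

├── chook (folder containing 1000 images)

├── dog (folder containing 1000 images)

└── horse (folder containing 1000 images)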
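Before loading the images, it can be worth confirming that every class folder really holds the expected number of files. Below is a minimal standard-library sketch, assuming the `data/<class>/<image>` layout shown above; `count_images_per_class` is a hypothetical helper, not part of the original code.

```python
import pathlib


def count_images_per_class(data_dir):
    """Hypothetical helper: return {class_name: file_count} for each subfolder of data_dir."""
    root = pathlib.Path(data_dir)
    counts = {}
    # Visit class folders in sorted order so the result is deterministic
    for class_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        # Count only regular files directly inside the class folder
        counts[class_dir.name] = sum(1 for f in class_dir.iterdir() if f.is_file())
    return counts
```

For this dataset, `count_images_per_class("./data")` would be expected to report 1000 files for each of the four classes.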

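The data must be split into a training set (train) and a validation set (val). The model is trained on train so that, given an image from val, it can identify which of the four animals (cat, chook, dog, or horse) the image shows.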

2. Data Processing

2.1. Data Preprocessing

Configure the GPU environment for training:

```python
import tensorflow as tf

gpus = tf.config.list_physical_devices("GPU")

if gpus:
    tf.config.experimental.set_memory_growth(gpus[0], True)  # allocate GPU memory on demand
    tf.config.set_visible_devices([gpus[0]], "GPU")          # use only the first GPU

# Print the GPU list to confirm a GPU is available
print(gpus)
```

Output:

[PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')]

Load the image data:

```python
import os
import pathlib

import matplotlib.pyplot as plt
import numpy as np
import PIL
import tensorflow as tf

# Allow Chinese characters in plots
plt.rcParams['font.sans-serif'] = ['SimHei']  # display Chinese labels correctly
plt.rcParams['axes.unicode_minus'] = False    # display the minus sign correctly

# Set random seeds so that results are as reproducible as possible
np.random.seed(1)
tf.random.set_seed(1)

data_dir = pathlib.Path("./data")

# Count every file inside the class subfolders (data/<class>/<file>)
image_count = len(list(data_dir.glob('*/*')))
print("Total number of images:", image_count)
```

Output:

Total number of images: 4000

Next, set the parameters and use image_dataset_from_directory to load the images from disk into a tf.data.Dataset.

Function signature:

```python
tf.keras.preprocessing.image_dataset_from_directory(
    directory,
    labels="inferred",
    label_mode="int",
    class_names=None,
    color_mode="rgb",
    batch_size=32,
    image_size=(256, 256),
    shuffle=True,
    seed=None,
    validation_split=None,
    subset=None,
    interpolation="bilinear",
    follow_links=False,
)
```

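Official documentation: tf.keras.utils.image_dataset_from_directory (the preferred name in recent TensorFlow versions; tf.keras.preprocessing.image_dataset_from_directory is an older alias, and newer releases may accept additional parameters beyond the signature above).

Code: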
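With the default `label_mode="int"`, each label is a single class index, which pairs with a sparse categorical cross-entropy loss; `label_mode="categorical"` yields one-hot vectors instead, for use with categorical cross-entropy. A small NumPy sketch of the two encodings (the label values here are made up for illustration):

```python
import numpy as np

class_names = ['cat', 'chook', 'dog', 'horse']

# label_mode="int": one integer class index per image
int_labels = np.array([0, 2, 3, 1])

# label_mode="categorical": the same labels as one-hot vectors
one_hot_labels = np.eye(len(class_names), dtype=np.float32)[int_labels]

print(int_labels.shape)      # (4,)
print(one_hot_labels.shape)  # (4, 4)
print(one_hot_labels[1])     # one-hot row for index 2, i.e. 'dog'
```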

```python
batch_size = 4
img_height = 299
img_width = 299

train_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.2,
    subset="training",
    seed=12,
    image_size=(img_height, img_width),
    batch_size=batch_size)
```

Output:

Found 4000 files belonging to 4 classes.

Using 3200 files for training.

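Configure the validation set in the same way, using the same seed and validation_split so that the training and validation subsets do not overlap: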

```python
val_ds = tf.keras.preprocessing.image_dataset_from_directory(
    data_dir,
    validation_split=0.2,
    subset="validation",
    seed=12,
    image_size=(img_height, img_width),
    batch_size=batch_size)
```

Output:

Found 4000 files belonging to 4 classes.

Using 800 files for validation.
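Conceptually, a seeded split works by shuffling the full file list with the given seed and then cutting it in two, so two calls with identical `seed` and `validation_split` produce disjoint subsets that together cover every file (here 4000 × 0.2 = 800 validation files and 3200 training files). The pure-Python sketch below is a simplified model of that idea, not Keras's actual implementation; `split_files` and the file names are hypothetical.

```python
import random


def split_files(files, validation_split, seed, subset):
    """Simplified model of a seeded train/validation split (not Keras's code)."""
    files = list(files)
    random.Random(seed).shuffle(files)  # same seed -> same order on every call
    num_val = int(validation_split * len(files))
    if subset == "training":
        return files[:len(files) - num_val]
    if subset == "validation":
        return files[len(files) - num_val:]
    raise ValueError("subset must be 'training' or 'validation'")


files = [f"img_{i}.jpg" for i in range(4000)]
train = split_files(files, 0.2, seed=12, subset="training")
val = split_files(files, 0.2, seed=12, subset="validation")
print(len(train), len(val))        # 3200 800
print(len(set(train) & set(val)))  # 0, i.e. no overlap
```

If the two calls used different seeds, each would shuffle differently and the subsets could overlap, which would leak training images into validation.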

The dataset labels can be printed through class_names; they correspond to the directory names in alphabetical order.

```python
class_names = train_ds.class_names
print(class_names)
```

Output:

['cat', 'chook', 'dog', 'horse']
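Because the class names are the sorted subdirectory names, the integer label of an image is simply the position of its folder name in that sorted list. A quick sketch (the folder list is written out by hand here rather than read from disk):

```python
# Folder names in an arbitrary on-disk order
folders = ['horse', 'dog', 'cat', 'chook']

class_names = sorted(folders)  # alphabetical order
label_of = {name: idx for idx, name in enumerate(class_names)}

print(class_names)      # ['cat', 'chook', 'dog', 'horse']
print(label_of['dog'])  # 2
print(class_names[3])   # horse
```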

Check the shapes of one batch:

```python
for image_batch, labels_batch in train_ds:
    print(image_batch.shape)
    print(labels_batch.shape)
    break
```

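Output:

(4, 299, 299, 3)

(4,)

That is, each batch holds 4 RGB images of 299×299 pixels, laid out as (batch, height, width, channels), together with 4 integer labels.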

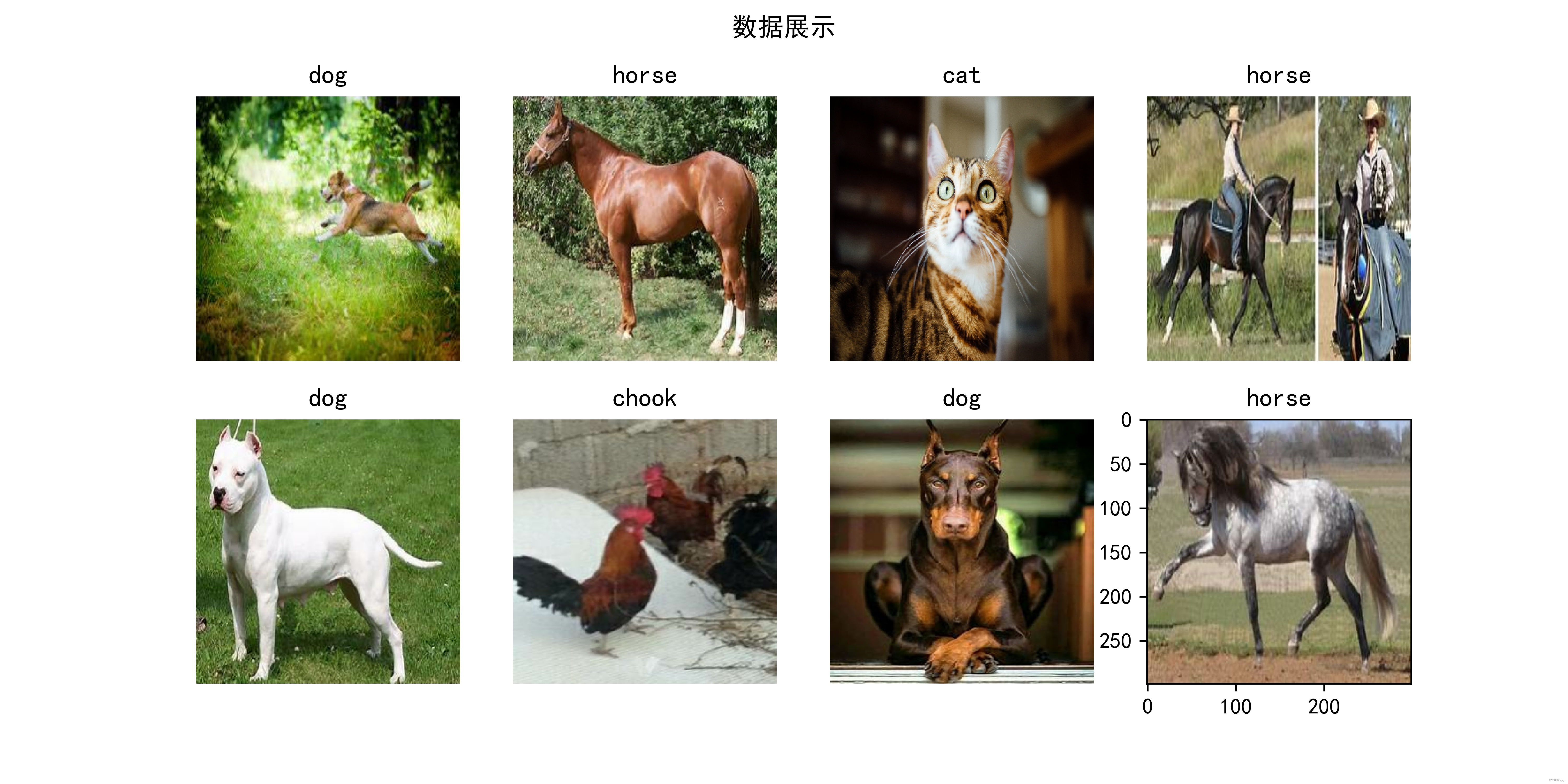

2.2. Data Visualization

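```python
plt.figure(figsize=(10, 5))  # figure width 10, height 5
plt.suptitle("Data preview")

num = -1
# Show the first 4 images of each of the first 2 batches in a 2 x 4 grid
for images, labels in train_ds.take(2):
    for i in range(4):
        num = num + 1
        ax = plt.subplot(2, 4, num + 1)
        plt.imshow(images[i].numpy().astype("uint8"))
        plt.title(class_names[labels[i]])
        plt.axis("off")

plt.savefig('pic1.jpg', dpi=600)  # save the whole figure once, at the specified resolution
plt.show()
```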


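2.3. Dataset Configuration

shuffle(): shuffles the data; for details, see "Understanding buffer_size in the dataset shuffle method"

prefetch(): prepares upcoming batches while the current one is being processed, overlapping data loading with training to speed things up; for details, see "Better performance with the tf.data API"

cache(): caches the dataset in memory after the first pass, which speeds up later epochs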
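To see why `buffer_size` matters, here is a simplified pure-Python model of a buffered shuffle (an illustration of the idea, not tf.data's source): it fills a buffer with `buffer_size` elements, emits a random one, and refills that slot with the next input element. With `buffer_size=1` nothing is shuffled, and a small buffer only mixes nearby elements. Note that train_ds is already batched, so `shuffle(1000)` reorders whole batches of 4 images; the training set has 3200 / 4 = 800 batches, so a buffer of 1000 covers all of them. `buffered_shuffle` is a hypothetical name.

```python
import random


def buffered_shuffle(elements, buffer_size, seed=0):
    """Simplified model of a buffered shuffle (not tf.data's implementation)."""
    rng = random.Random(seed)
    it = iter(elements)
    buffer = []
    # Fill the buffer with the first buffer_size elements
    for x in it:
        buffer.append(x)
        if len(buffer) == buffer_size:
            break
    # Emit a random buffered element, then put the next input in its slot
    for x in it:
        idx = rng.randrange(len(buffer))
        yield buffer[idx]
        buffer[idx] = x
    # Drain the remaining buffered elements in random order
    rng.shuffle(buffer)
    yield from buffer


data = list(range(10))
print(list(buffered_shuffle(data, buffer_size=1)) == data)   # True: order unchanged
print(sorted(buffered_shuffle(data, buffer_size=4)) == data)  # True: a permutation
```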

```python
AUTOTUNE = tf.data.AUTOTUNE

train_ds = (
    train_ds.cache()
    .shuffle(1000)
    # .map(train_preprocessing)  # a preprocessing function can be applied here
    # .batch(batch_size)         # not needed: batch_size was already set in image_dataset_from_directory
    .prefetch(buffer_size=AUTOTUNE)
)

val_ds = (
    val_ds.cache()
    .shuffle(1000)
    # .map(val_preprocessing)    # a preprocessing function can be applied here
    # .batch(batch_size)         # not needed: batch_size was already set in image_dataset_from_directory
    .prefetch(buffer_size=AUTOTUNE)
)
```
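The pipeline leaves a hook for `.map(train_preprocessing)`, but that function is not defined here, and as written the pipeline feeds raw pixel values in [0, 255] to the network. Below is a minimal sketch of such a function, assuming you want the [-1, 1] scaling that Keras's `tf.keras.applications.xception.preprocess_input` applies for Xception; `train_preprocessing` is the hypothetical name from the comment above, and the tiny synthetic batch only stands in for a real `train_ds` element.

```python
import tensorflow as tf

AUTOTUNE = tf.data.AUTOTUNE


def train_preprocessing(image, label):
    """Hypothetical preprocessing: scale pixels from [0, 255] to [-1, 1]."""
    image = tf.cast(image, tf.float32) / 127.5 - 1.0
    return image, label


# Tiny synthetic batch shaped like a train_ds element: (batch, height, width, channels)
images = tf.constant([[[[0.0, 127.5, 255.0]]]])  # shape (1, 1, 1, 3)
labels = tf.constant([2])
ds = tf.data.Dataset.from_tensors((images, labels)).map(
    train_preprocessing, num_parallel_calls=AUTOTUNE)

for x, y in ds:
    print(x.numpy().ravel())  # values -1, 0, 1
```

The validation set would need the same scaling. Placing the `.map()` before `.cache()` caches the scaled images, while placing it after recomputes the scaling every epoch.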

3. Network Design

3.1. A Brief Introduction to Xception

For more details, see: Zhihu

Paper: Xception: Deep Learning with Depthwise Separable Convolutions

Reference code: https://github.com/keras-team/keras-applications/blob/master/keras_applications/xception.py

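Xception was proposed by Google in October 2016, after Google's MobileNet v1 and before MobileNet v2. It draws on the design ideas of ResNet, Inception, and MobileNet v1: taking Inception v3 as its template, it replaces the convolutions in the basic Inception module with depthwise separable convolutions and adds residual connections.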
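The saving from a depthwise separable convolution is easy to quantify. Ignoring biases (the model code uses `use_bias=False`), a regular k×k convolution from C_in to C_out channels has k×k×C_in×C_out weights, while the separable version has k×k×C_in depthwise weights plus C_in×C_out pointwise (1×1) weights. A small worked example for the 728-channel convolutions of the middle flow; the helper names are illustrative only:

```python
def conv_params(k, c_in, c_out):
    # Regular convolution: one k x k x c_in filter per output channel
    return k * k * c_in * c_out


def separable_conv_params(k, c_in, c_out):
    # Depthwise: one k x k filter per input channel; pointwise: 1 x 1 conv from c_in to c_out
    return k * k * c_in + c_in * c_out


regular = conv_params(3, 728, 728)
separable = separable_conv_params(3, 728, 728)
print(regular)                        # 4769856
print(separable)                      # 536536
print(round(regular / separable, 1))  # 8.9
```

So each middle-flow layer needs roughly 9 times fewer weights than a regular 3×3 convolution of the same width.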

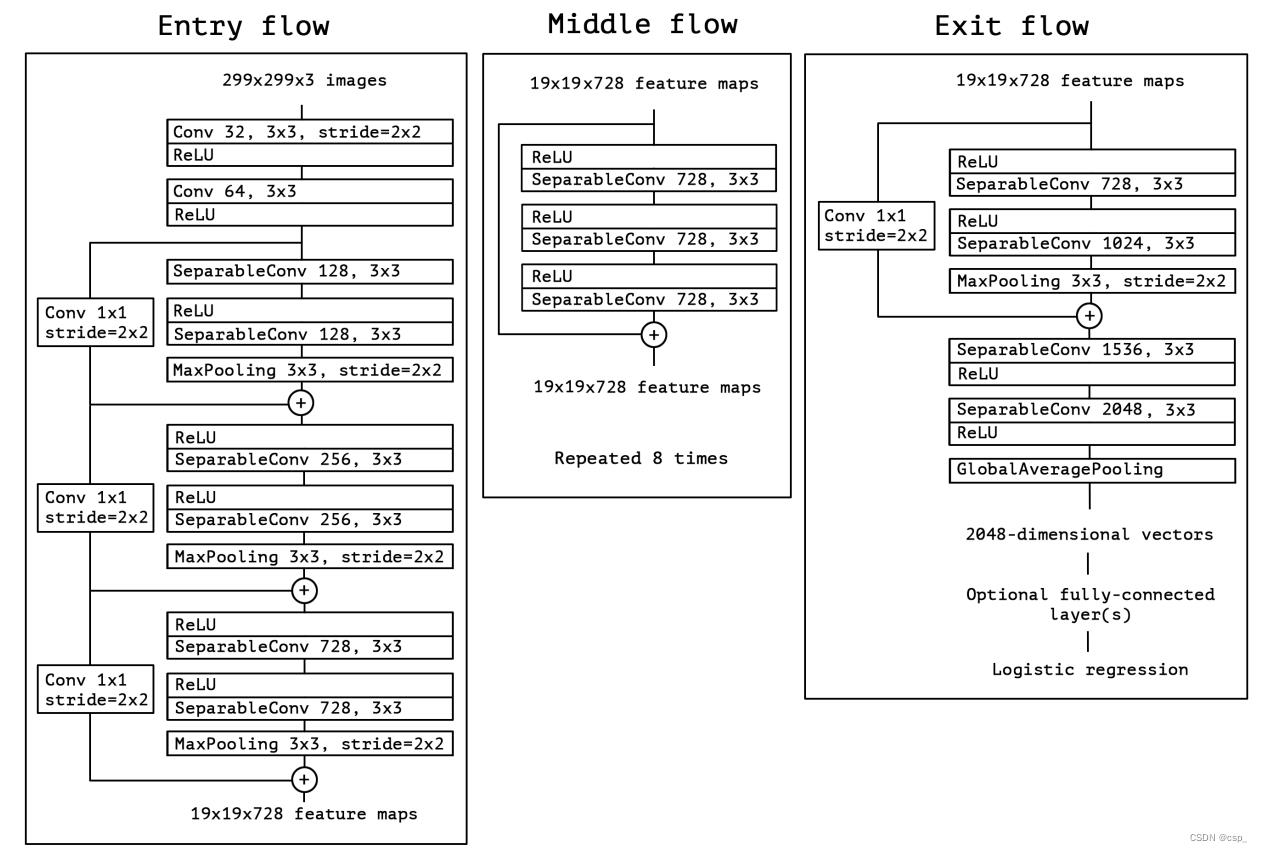

Xception's structure is based on ResNet, and the whole network is divided into three parts: the Entry, Middle, and Exit flows.

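The Entry flow repeatedly downsamples to reduce the spatial dimensions. The Middle flow, made up of 8 repeated blocks, keeps learning correlations and refining the features. Finally, the Exit flow aggregates and organizes the features and passes them to a fully connected (FC) layer for the output. Every regular and separable convolution is followed by batch normalization (BN), although the figure does not show this.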

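The overall flow of the network is shown in the architecture figure. Xception uses 36 convolutional layers as the basis for feature extraction. These 36 layers are organized into 14 modules, and every module except the first and the last has a residual connection around it.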

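In short, the Xception architecture is a linear stack of depthwise separable convolution layers with residual connections. This makes it easy to modify: it can be defined in only 30 to 40 lines of code.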
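The counts of 36 layers and 14 modules can be checked by tallying the main-path convolutions per block in a Keras-style implementation like the one in this article: two regular convolutions in block1, two separable convolutions in each of block2 to block4, three in each of the eight middle-flow blocks, and two each in block13 and block14. The 1×1 convolutions on the residual branches are not counted.

```python
# Main-path conv layers per module (residual-branch 1x1 convs excluded)
convs_per_block = {"block1": 2}
convs_per_block.update({f"block{i}": 2 for i in range(2, 5)})   # rest of the entry flow
convs_per_block.update({f"block{i}": 3 for i in range(5, 13)})  # middle flow, 8 blocks
convs_per_block.update({"block13": 2, "block14": 2})            # exit flow

# Every module except the first and the last has a residual connection
with_residual = [b for b in convs_per_block if b not in ("block1", "block14")]

print(len(convs_per_block))           # 14
print(sum(convs_per_block.values()))  # 36
print(len(with_residual))             # 12
```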

3.2. Building the Network Model

```python
#====================================#
# Xception network definition
#====================================#
from tensorflow.keras.preprocessing import image
from tensorflow.keras.models import Model
from tensorflow.keras import layers
from tensorflow.keras.layers import Dense, Input, BatchNormalization, Activation, Conv2D, SeparableConv2D, MaxPooling2D
from tensorflow.keras.layers import GlobalAveragePooling2D, GlobalMaxPooling2D
from tensorflow.keras import backend as K
from tensorflow.keras.applications.imagenet_utils import decode_predictions


def Xception(input_shape=[299, 299, 3], classes=1000):
    img_input = Input(shape=input_shape)

    #=================#
    # Entry flow
    #=================#
    # block1
    # 299,299,3 -> 147,147,64
    x = Conv2D(32, (3, 3), strides=(2, 2), use_bias=False, name='block1_conv1')(img_input)
    x = BatchNormalization(name='block1_conv1_bn')(x)
    x = Activation('relu', name='block1_conv1_act')(x)
    x = Conv2D(64, (3, 3), use_bias=False, name='block1_conv2')(x)
    x = BatchNormalization(name='block1_conv2_bn')(x)
    x = Activation('relu', name='block1_conv2_act')(x)

    # block2
    # 147,147,64 -> 74,74,128
    # Residual branch: strided 1x1 conv so its shape matches the pooled main branch
    residual = Conv2D(128, (1, 1), strides=(2, 2), padding='same', use_bias=False)(x)
    residual = BatchNormalization()(residual)

    x = SeparableConv2D(128, (3, 3), padding='same', use_bias=False, name='block2_sepconv1')(x)
    x = BatchNormalization(name='block2_sepconv1_bn')(x)
    x = Activation('relu', name='block2_sepconv2_act')(x)
    x = SeparableConv2D(128, (3, 3), padding='same', use_bias=False, name='block2_sepconv2')(x)
    x = BatchNormalization(name='block2_sepconv2_bn')(x)

    x = MaxPooling2D((3, 3), strides=(2, 2), padding='same', name='block2_pool')(x)
    x = layers.add([x, residual])

    # block3
    # 74,74,128 -> 37,37,256
    residual = Conv2D(256, (1, 1), strides=(2, 2), padding='same', use_bias=False)(x)
    residual = BatchNormalization()(residual)

    x = Activation('relu', name='block3_sepconv1_act')(x)
    x = SeparableConv2D(256, (3, 3), padding='same', use_bias=False, name='block3_sepconv1')(x)
    x = BatchNormalization(name='block3_sepconv1_bn')(x)
    x = Activation('relu', name='block3_sepconv2_act')(x)
    x = SeparableConv2D(256, (3, 3), padding='same', use_bias=False, name='block3_sepconv2')(x)
    x = BatchNormalization(name='block3_sepconv2_bn')(x)

    x = MaxPooling2D((3, 3), strides=(2, 2), padding='same', name='block3_pool')(x)
    x = layers.add([x, residual])

    # block4
    # 37,37,256 -> 19,19,728
    residual = Conv2D(728, (1, 1), strides=(2, 2), padding='same', use_bias=False)(x)
    residual = BatchNormalization()(residual)

    x = Activation('relu', name='block4_sepconv1_act')(x)
    x = SeparableConv2D(728, (3, 3), padding='same', use_bias=False, name='block4_sepconv1')(x)
    x = BatchNormalization(name='block4_sepconv1_bn')(x)
    x = Activation('relu', name='block4_sepconv2_act')(x)
    x = SeparableConv2D(728, (3, 3), padding='same', use_bias=False, name='block4_sepconv2')(x)
    x = BatchNormalization(name='block4_sepconv2_bn')(x)

    x = MaxPooling2D((3, 3), strides=(2, 2), padding='same', name='block4_pool')(x)
    x = layers.add([x, residual])

    #=================#
    # Middle flow
    #=================#
    # block5 -- block12
    # 19,19,728 -> 19,19,728
    for i in range(8):
        residual = x  # identity shortcut: shapes already match
        prefix = 'block' + str(i + 5)

        x = Activation('relu', name=prefix + '_sepconv1_act')(x)
        x = SeparableConv2D(728, (3, 3), padding='same', use_bias=False, name=prefix + '_sepconv1')(x)
        x = BatchNormalization(name=prefix + '_sepconv1_bn')(x)
        x = Activation('relu', name=prefix + '_sepconv2_act')(x)
        x = SeparableConv2D(728, (3, 3), padding='same', use_bias=False, name=prefix + '_sepconv2')(x)
        x = BatchNormalization(name=prefix + '_sepconv2_bn')(x)
        x = Activation('relu', name=prefix + '_sepconv3_act')(x)
        x = SeparableConv2D(728, (3, 3), padding='same', use_bias=False, name=prefix + '_sepconv3')(x)
        x = BatchNormalization(name=prefix + '_sepconv3_bn')(x)

        x = layers.add([x, residual])

    #=================#
    # Exit flow
    #=================#
    # block13
    # 19,19,728 -> 10,10,1024
    residual = Conv2D(1024, (1, 1), strides=(2, 2),
                      padding='same', use_bias=False)(x)
    residual = BatchNormalization()(residual)

    x = Activation('relu', name='block13_sepconv1_act')(x)
    x = SeparableConv2D(728, (3, 3), padding='same', use_bias=False, name='block13_sepconv1')(x)
    x = BatchNormalization(name='block13_sepconv1_bn')(x)
    x = Activation('relu', name='block13_sepconv2_act')(x)
    x = SeparableConv2D(1024, (3, 3), padding='same', use_bias=False, name='block13_sepconv2')(x)
    x = BatchNormalization(name='block13_sepconv2_bn')(x)

    x = MaxPooling2D((3, 3), strides=(2, 2), padding='same', name='block13_pool')(x)
    x = layers.add([x, residual])

    # block14
    # 10,10,1024 -> 10,10,2048
    x = SeparableConv2D(1536, (3, 3), padding='same', use_bias=False, name='block14_sepconv1')(x)
    x = BatchNormalization(name='block14_sepconv1_bn')(x)
    x = Activation('relu', name='block14_sepconv1_act')(x)

    x = SeparableConv2D(2048, (3, 3), padding='same', use_bias=False, name='block14_sepconv2')(x)
    x = BatchNormalization(name='block14_sepconv2_bn')(x)
    x = Activation('relu', name='block14_sepconv2_act')(x)

    # Global average pooling followed by a softmax classifier
    x = GlobalAveragePooling2D(name='avg_pool')(x)
    x = Dense(classes, activation='softmax', name='predictions')(x)

    inputs = img_input
    model = Model(inputs, x, name='xception')
    return model
```
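The spatial sizes after each downsampling stage follow from Keras's output-size rules (no dilation): with `padding='valid'`, the default for Conv2D, the output size is floor((n − k) / s) + 1, and with `padding='same'` it is ceil(n / s). Tracing a 299×299 input gives 147 after block1 (not 149: the second 3×3 convolution uses 'valid' padding and trims two more pixels), then 74, 37, 19 and 10 after the stride-2 poolings of block2, block3, block4 and block13. A small pure-Python trace; `out_size` is an illustrative helper:

```python
import math


def out_size(n, k, s, padding):
    """Spatial output size of a Keras conv/pooling layer without dilation."""
    if padding == "valid":
        return (n - k) // s + 1
    if padding == "same":
        return math.ceil(n / s)
    raise ValueError(padding)


n = 299
n = out_size(n, 3, 2, "valid")  # block1_conv1: 299 -> 149
n = out_size(n, 3, 1, "valid")  # block1_conv2: 149 -> 147
sizes = [n]
for block in ("block2", "block3", "block4", "block13"):
    n = out_size(n, 3, 2, "same")  # 3x3 max pooling, stride 2, 'same' padding
    sizes.append(n)

print(sizes)  # [147, 74, 37, 19, 10]
```

The strided 1×1 residual convolutions also use 'same' padding, so their outputs match the pooled main branch at every stage.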

Print the model summary: