02 Notes on a Netty memory leak

Posted 蓝风9


Preface

Recently I ran into the following problem on a JT809 project.

At first, while the other side was sending us data for only a few vehicles, the system ran stably.

Then we onboarded a large number of additional vehicles, the service hit an OutOfDirectMemoryError, and we restarted it as a stopgap.

About a week later (7 or 8 days), the service hit OutOfDirectMemoryError again.

The error looked roughly like this:

Exception in thread "main" io.netty.util.internal.OutOfDirectMemoryError: failed to allocate 16777216 byte(s) of direct memory (used: 16777216, max: 32440320)

This made it clear that direct memory was leaking. The service's heap limit is 8G and its available native memory limit is also 8G. The leak was roughly 1G per day.

Heh, I happen to have a little time lately, so let's dig into this problem.

This also leads into a few related topics.

The code and runtime screenshots below are based on JDK 8.

Test case

VM options: "-Xint -Xmx32M -XX:+UseSerialGC -XX:+PrintGCDetails -Dio.netty.allocator.numDirectArenas=2"

package com.hx.test12;

import io.netty.buffer.ByteBuf;
import io.netty.buffer.PooledByteBufAllocator;

/**
 * Test26NativeMemoryGc
 *
 * @author Jerry.X.He <970655147@qq.com>
 * @version 1.0
 * @date 2021-11-30 20:34
 */
public class Test26NativeMemoryGc {

    // Test26NativeMemoryGc
    // -Xint -Xmx32M -XX:+UseSerialGC -XX:+PrintGCDetails -Dio.netty.allocator.numDirectArenas=2
    public static void main(String[] args) throws Exception {
        int _1M = 1 * 1024 * 1024;
        // a pooled 1M buffer filled with 0x01
        ByteBuf buffer = PooledByteBufAllocator.DEFAULT.buffer(_1M, _1M);
        for (int i = 0; i < _1M; i++) {
            buffer.writeByte(0x01);
        }

        for (int i = 0; i < 30; i++) {
            // every copy() allocates a new 1M buffer from the same allocator
            ByteBuf ref = buffer.copy();
            System.out.println("allocate - " + i);
            // ref.release();
        }

        System.out.println("end");
        System.in.read();
    }
}

The log is as follows:

[GC (Allocation Failure) [DefNew: 8704K->1088K(9792K), 0.0039713 secs] 8704K->2105K(31680K), 0.0039972 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

[GC (Allocation Failure) [DefNew: 9792K->623K(9792K), 0.0028818 secs] 10809K->2705K(31680K), 0.0029044 secs] [Times: user=0.01 sys=0.00, real=0.00 secs]

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/Users/jerry/.m2/repository/org/apache/logging/log4j/log4j-slf4j-impl/2.10.0/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/Users/jerry/.m2/repository/ch/qos/logback/logback-classic/1.2.3/logback-classic-1.2.3.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/Users/jerry/.m2/repository/org/slf4j/slf4j-log4j12/1.7.16/slf4j-log4j12-1.7.16.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

[GC (Allocation Failure) [DefNew: 9327K->582K(9792K), 0.0012796 secs] 11409K->2838K(31680K), 0.0012940 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

[GC (Allocation Failure) [DefNew: 9286K->886K(9792K), 0.0014551 secs] 11542K->3395K(31680K), 0.0014722 secs] [Times: user=0.01 sys=0.00, real=0.00 secs]

[GC (Allocation Failure) [DefNew: 9590K->830K(9792K), 0.0008231 secs] 12099K->3541K(31680K), 0.0008401 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

[GC (Allocation Failure) [DefNew: 9534K->853K(9792K), 0.0015186 secs] 12245K->3622K(31680K), 0.0015356 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

[GC (Allocation Failure) [DefNew: 9557K->60K(9792K), 0.0019172 secs] 12326K->3388K(31680K), 0.0019374 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

[GC (Allocation Failure) [DefNew: 8764K->651K(9792K), 0.0011036 secs] 12092K->3978K(31680K), 0.0011191 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

[GC (Allocation Failure) [DefNew: 9355K->505K(9792K), 0.0012746 secs] 12682K->3956K(31680K), 0.0012907 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

[GC (Allocation Failure) [DefNew: 9209K->1088K(9792K), 0.0022060 secs] 12660K->5087K(31680K), 0.0022299 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

[GC (Allocation Failure) [DefNew: 9792K->783K(9792K), 0.0029064 secs] 13791K->5674K(31680K), 0.0029232 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

[GC (Allocation Failure) [DefNew: 9487K->801K(9792K), 0.0024921 secs] 14378K->6152K(31680K), 0.0025077 secs] [Times: user=0.01 sys=0.00, real=0.00 secs]

[GC (Allocation Failure) [DefNew: 9505K->423K(9792K), 0.0019411 secs] 14856K->6240K(31680K), 0.0019574 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

allocate - 0

allocate - 1

allocate - 2

allocate - 3

allocate - 4

allocate - 5

allocate - 6

allocate - 7

allocate - 8

allocate - 9

allocate - 10

allocate - 11

allocate - 12

allocate - 13

allocate - 14

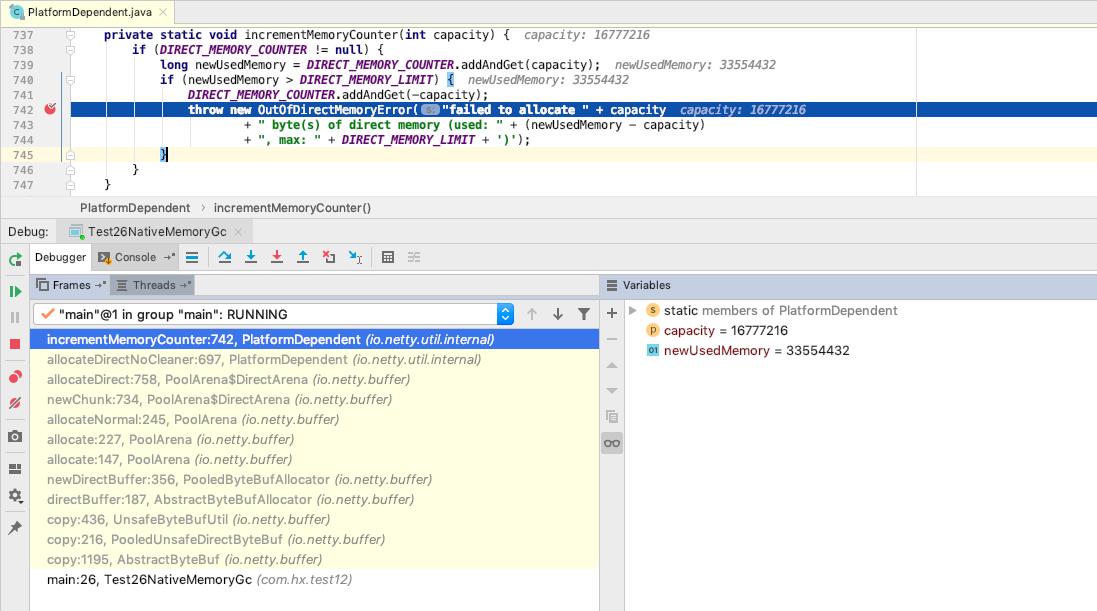

Exception in thread "main" io.netty.util.internal.OutOfDirectMemoryError: failed to allocate 16777216 byte(s) of direct memory (used: 16777216, max: 32440320)

at io.netty.util.internal.PlatformDependent.incrementMemoryCounter(PlatformDependent.java:742)

at io.netty.util.internal.PlatformDependent.allocateDirectNoCleaner(PlatformDependent.java:697)

at io.netty.buffer.PoolArena$DirectArena.allocateDirect(PoolArena.java:758)

at io.netty.buffer.PoolArena$DirectArena.newChunk(PoolArena.java:734)

at io.netty.buffer.PoolArena.allocateNormal(PoolArena.java:245)

at io.netty.buffer.PoolArena.allocate(PoolArena.java:227)

at io.netty.buffer.PoolArena.allocate(PoolArena.java:147)

at io.netty.buffer.PooledByteBufAllocator.newDirectBuffer(PooledByteBufAllocator.java:356)

at io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:187)

at io.netty.buffer.UnsafeByteBufUtil.copy(UnsafeByteBufUtil.java:436)

at io.netty.buffer.PooledUnsafeDirectByteBuf.copy(PooledUnsafeDirectByteBuf.java:216)

at io.netty.buffer.AbstractByteBuf.copy(AbstractByteBuf.java:1195)

at com.hx.test12.Test26NativeMemoryGc.main(Test26NativeMemoryGc.java:26)

Heap

def new generation total 9792K, used 6444K [0x00000007be000000, 0x00000007beaa0000, 0x00000007beaa0000)

eden space 8704K, 69% used [0x00000007be000000, 0x00000007be5e1218, 0x00000007be880000)

from space 1088K, 38% used [0x00000007be990000, 0x00000007be9f9ec8, 0x00000007beaa0000)

to space 1088K, 0% used [0x00000007be880000, 0x00000007be880000, 0x00000007be990000)

tenured generation total 21888K, used 5816K [0x00000007beaa0000, 0x00000007c0000000, 0x00000007c0000000)

the space 21888K, 26% used [0x00000007beaa0000, 0x00000007bf04e338, 0x00000007bf04e400, 0x00000007c0000000)

Metaspace used 14048K, capacity 14246K, committed 14464K, reserved 1062912K

class space used 1805K, capacity 1906K, committed 1920K, reserved 1048576K

Analysis of the problem

The reason for setting io.netty.allocator.numDirectArenas above is to mirror the production configuration, where PooledByteBufAllocator.DEFAULT allocates memory from a "native memory pool".

The buffer it creates is of type io.netty.buffer.PooledUnsafeDirectByteBuf.

Calling copy to duplicate the ByteBuf also allocates through PooledByteBufAllocator.DEFAULT.

The exception is thrown in PlatformDependent. It keeps a logical counter, DIRECT_MEMORY_COUNTER, of the direct memory in use. The limit check is also purely logical, similar to the limit java.nio.ByteBuffer applies.
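The following is a minimal sketch of such a counter in the spirit of PlatformDependent's incrementMemoryCounter / decrementMemoryCounter, to make the "logical" limit concrete. It is a simplified model and not Netty's code. The class name LogicalDirectMemoryCounter is hypothetical, and it throws IllegalStateException instead of Netty's OutOfDirectMemoryError so that it runs on the JDK alone. The numbers in main (a 16 MiB chunk and a 32440320-byte limit) come from the error message above.

```java
import java.util.concurrent.atomic.AtomicLong;

/** Hypothetical, simplified model of a purely logical direct-memory limit (not Netty's code). */
class LogicalDirectMemoryCounter {
    private final AtomicLong used = new AtomicLong();
    private final long max;

    LogicalDirectMemoryCounter(long max) {
        this.max = max;
    }

    /** Reserves capacity bytes before any native memory would be requested; fails past the limit. */
    void increment(int capacity) {
        long newUsed = used.addAndGet(capacity);
        if (newUsed > max) {
            used.addAndGet(-capacity); // roll back the failed reservation
            throw new IllegalStateException("failed to allocate " + capacity
                    + " byte(s) of direct memory (used: " + (newUsed - capacity)
                    + ", max: " + max + ")");
        }
    }

    /** Must run when the memory is freed; if it never runs, 'used' only grows. */
    void decrement(int capacity) {
        used.addAndGet(-capacity);
    }

    long used() {
        return used.get();
    }

    public static void main(String[] args) {
        int chunkSize = 16 * 1024 * 1024;  // 16 MiB, the size in the error message above
        LogicalDirectMemoryCounter counter = new LogicalDirectMemoryCounter(32440320L); // "max" from the log
        counter.increment(chunkSize);       // first chunk: 16777216 <= 32440320
        try {
            counter.increment(chunkSize);   // second chunk: 33554432 > 32440320
        } catch (IllegalStateException e) {
            // prints: failed to allocate 16777216 byte(s) of direct memory (used: 16777216, max: 32440320)
            System.out.println(e.getMessage());
        }
    }
}
```

Only the counter is checked, so the error can fire even when the operating system still has free memory. If decrement is never called, the counter only grows, and that is exactly the effect of a missing release.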

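That covers where space is acquired. Where is it given back? PlatformDependent also has decrementMemoryCounter. Searching for its callers leads to PoolArena.destroyChunk and UnpooledUnsafeNoCleanerDirectByteBuf.release, and both reach it through PlatformDependent.freeDirectNoCleaner.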

This is why ByteBuf has a release method that must be called manually, especially for these XXXDirectByteBuf types.

Uncommenting ref.release() in the test case and running it again, everything works normally:

[GC (Allocation Failure) [DefNew: 8704K->1088K(9792K), 0.0031623 secs] 8704K->2105K(31680K), 0.0032014 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

[GC (Allocation Failure) [DefNew: 9792K->623K(9792K), 0.0023611 secs] 10809K->2705K(31680K), 0.0023772 secs] [Times: user=0.00 sys=0.00, real=0.01 secs]

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/Users/jerry/.m2/repository/org/apache/logging/log4j/log4j-slf4j-impl/2.10.0/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/Users/jerry/.m2/repository/ch/qos/logback/logback-classic/1.2.3/logback-classic-1.2.3.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/Users/jerry/.m2/repository/org/slf4j/slf4j-log4j12/1.7.16/slf4j-log4j12-1.7.16.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

[GC (Allocation Failure) [DefNew: 9327K->582K(9792K), 0.0011898 secs] 11409K->2838K(31680K), 0.0012042 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

[GC (Allocation Failure) [DefNew: 9286K->886K(9792K), 0.0015809 secs] 11542K->3395K(31680K), 0.0015982 secs] [Times: user=0.01 sys=0.00, real=0.00 secs]

[GC (Allocation Failure) [DefNew: 9590K->830K(9792K), 0.0010269 secs] 12099K->3541K(31680K), 0.0010457 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

[GC (Allocation Failure) [DefNew: 9534K->853K(9792K), 0.0021730 secs] 12245K->3621K(31680K), 0.0021947 secs] [Times: user=0.01 sys=0.00, real=0.01 secs]

[GC (Allocation Failure) [DefNew: 9557K->60K(9792K), 0.0017718 secs] 12325K->3388K(31680K), 0.0017913 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

[GC (Allocation Failure) [DefNew: 8764K->651K(9792K), 0.0012783 secs] 12092K->3979K(31680K), 0.0012942 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

[GC (Allocation Failure) [DefNew: 9355K->505K(9792K), 0.0012316 secs] 12683K->3956K(31680K), 0.0012499 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

[GC (Allocation Failure) [DefNew: 9209K->1088K(9792K), 0.0019973 secs] 12660K->5087K(31680K), 0.0020173 secs] [Times: user=0.00 sys=0.00, real=0.00 secs]

[GC (Allocation Failure) [DefNew: 9792K->786K(9792K), 0.0036759 secs] 13791K->5678K(31680K), 0.0036953 secs] [Times: user=0.00 sys=0.00, real=0.01 secs]

[GC (Allocation Failure) [DefNew: 9490K->802K(9792K), 0.0024656 secs] 14382K->6153K(31680K), 0.0024804 secs] [Times: user=0.00 sys=0.00, real=0.01 secs]

[GC (Allocation Failure) [DefNew: 9506K->423K(9792K), 0.0016908 secs] 14857K->6241K(31680K), 0.0017091 secs] [Times: user=0.00 sys=0.00, real=0.01 secs]

allocate - 0

allocate - 1

allocate - 2

allocate - 3

allocate - 4

allocate - 5

allocate - 6

allocate - 7

allocate - 8

allocate - 9

allocate - 10

allocate - 11

allocate - 12

allocate - 13

allocate - 14

allocate - 15

allocate - 16

allocate - 17

allocate - 18

allocate - 19

allocate - 20

allocate - 21

allocate - 22

allocate - 23

allocate - 24

allocate - 25

allocate - 26

allocate - 27

allocate - 28

allocate - 29

end

Heap

def new generation total 9792K, used 6668K [0x00000007be000000, 0x00000007beaa0000, 0x00000007beaa0000)

eden space 8704K, 71% used [0x00000007be000000, 0x00000007be6194a0, 0x00000007be880000)

from space 1088K, 38% used [0x00000007be990000, 0x00000007be9f9ec8, 0x00000007beaa0000)

to space 1088K, 0% used [0x00000007be880000, 0x00000007be880000, 0x00000007be990000)

tenured generation total 21888K, used 5817K [0x00000007beaa0000, 0x00000007c0000000, 0x00000007c0000000)

the space 21888K, 26% used [0x00000007beaa0000, 0x00000007bf04e668, 0x00000007bf04e800, 0x00000007c0000000)

Metaspace used 14046K, capacity 14214K, committed 14464K, reserved 1062912K

class space used 1805K, capacity 1874K, committed 1920K, reserved 1048576K

So in Netty, remember to release every ByteBuf that your own business code creates.

"Creating a ByteBuf" covers many operations, such as XXXByteBufAllocator.buffer, XXXByteBufAllocator.heapBuffer, XXXByteBufAllocator.directBuffer, buffer.copy, buffer.retainedSlice, and so on.

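Leave alone the ByteBufs that Netty manages and hands to business code, such as the cumulated input buffer that ByteToMessageDecoder passes to decode(). Netty itself takes care of their creation, use, and release.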
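The rule "release what you create" can be illustrated without Netty. The sketch below uses a hypothetical ToyRefCountedBuf class, which is not Netty's ByteBuf. It models the reference-counting contract: a new buffer starts at refCnt 1, copy() creates a brand-new buffer that the caller owns, and memory is handed back only when refCnt reaches 0. Releasing each copy in a finally block keeps the outstanding-bytes accounting flat, which is the same fix that was applied to the test case.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicLong;

/** Hypothetical toy reference-counted buffer; not Netty's ByteBuf. */
class ToyRefCountedBuf {
    // Stands in for the allocator's accounting of memory handed out and not yet returned.
    static final AtomicLong OUTSTANDING_BYTES = new AtomicLong();

    private final AtomicInteger refCnt = new AtomicInteger(1); // a new buffer starts at refCnt == 1
    private final byte[] data;

    ToyRefCountedBuf(int capacity) {
        data = new byte[capacity];
        OUTSTANDING_BYTES.addAndGet(capacity);
    }

    /** Like copy(): allocates a brand-new buffer that the caller now owns. */
    ToyRefCountedBuf copy() {
        ToyRefCountedBuf c = new ToyRefCountedBuf(data.length);
        System.arraycopy(data, 0, c.data, 0, data.length);
        return c;
    }

    /** Decrements refCnt; returns true when it reaches 0 and the memory is handed back. */
    boolean release() {
        int n = refCnt.decrementAndGet();
        if (n < 0) {
            refCnt.incrementAndGet();
            throw new IllegalStateException("already released");
        }
        if (n == 0) {
            OUTSTANDING_BYTES.addAndGet(-data.length);
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        ToyRefCountedBuf source = new ToyRefCountedBuf(1024 * 1024);
        for (int i = 0; i < 30; i++) {
            ToyRefCountedBuf ref = source.copy();
            try {
                // ... use ref ...
            } finally {
                ref.release(); // the fix: every copy is handed back
            }
        }
        System.out.println(OUTSTANDING_BYTES.get()); // 1048576: only the source buffer remains
        source.release();
        System.out.println(OUTSTANDING_BYTES.get()); // 0
    }
}
```

Real Netty buffers follow the same pattern: call ref.release() (or ReferenceCountUtil.release(ref)) in a finally block once the copy is no longer needed.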

Next, let's look at ByteBuf in more detail.

Why 7 or 8 days?

Let's roughly estimate how much memory leaks across the whole pipeline.

The service mainly receives vehicle position reports. Other messages are ignored here.

There are roughly 2,800,000 requests per day. Assume each vehicle position report is about 200 bytes.

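The places in the pipeline that create a new ByteBuf are:

1. delimiter-based (head/tail marker) frame decoding makes a copy

2. handling channel state makes a copy

3. a message that contains escape characters makes a copy

4. decryption makes a copy

Of these, 3 happens only occasionally, and 4 does not apply because there is no decryption step. The daily cost is therefore roughly 2800000 * 200 * 2 = 1120M bytes.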

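The direct memory limit is 8G, and 8G at about 1.12G per day lasts roughly 7 days. That fits the observed 7 to 8 days fairly well.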
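The estimate can be written out as a small calculation. The 200-byte message size and the 2 leaked copies per message are the author's assumptions from above. "8G" could mean 8 × 10^9 bytes or 8 GiB, so both readings are computed. Either way, the result lands between 7 and 8 days.

```java
class LeakEstimate {
    /** Bytes leaked per day: every message leaves copiesPerMessage unreleased copies behind. */
    static long leakedBytesPerDay(long requestsPerDay, long bytesPerMessage, long copiesPerMessage) {
        return requestsPerDay * bytesPerMessage * copiesPerMessage;
    }

    /** Days until a direct-memory limit is exhausted at a constant leak rate. */
    static double daysUntilExhausted(long limitBytes, long leakedPerDay) {
        return (double) limitBytes / leakedPerDay;
    }

    public static void main(String[] args) {
        long perDay = leakedBytesPerDay(2_800_000L, 200L, 2L); // copies 1 and 2 from the list above
        System.out.println(perDay);                                              // 1120000000
        System.out.println(daysUntilExhausted(8_000_000_000L, perDay));          // 8 x 10^9 bytes: about 7.1
        System.out.println(daysUntilExhausted(8L * 1024 * 1024 * 1024, perDay)); // 8 GiB: about 7.7
    }
}
```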


ByteBuf dimensions in Netty

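ByteBufs in Netty vary along roughly these dimensions:

1. Direct/Heap: whether the space is allocated from native memory or from the heap

2. Pooled/Unpooled: whether the allocation is backed by a memory pool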

The commonly used ByteBuf types in Netty are roughly classified below according to these two dimensions.

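Some of the log output below comes from the following test case.

"-Dio.netty.allocator.numDirectArenas=2 -Dio.netty.allocator.numHeapArenas=2" controls whether the memory pool is used. With only -Xmx32M, Netty's default arena count would likely work out to 0, which would mean no pooling.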

"-Dio.netty.maxDirectMemory=0" controls PlatformDependent.USE_DIRECT_BUFFER_NO_CLEANER, which decides whether InstrumentedUnpooledUnsafeNoCleanerDirectByteBuf is constructed. A value of 0 turns the no-cleaner variant off.

Apart from that, getting the desired PlatformDependent.hasUnsafe value takes a few tricks.

package com.hx.test12;

import io.netty.buffer.ByteBuf;
import io.netty.buffer.PooledByteBufAllocator;
import io.netty.buffer.UnpooledByteBufAllocator;

/**
 * Test26NativeMemoryGc
 *
 * @author Jerry.X.He <970655147@qq.com>
 * @version 1.0
 * @date 2021-11-30 20:34
 */
public class Test26NativeMemoryGc03 {

    // Test26NativeMemoryGc
    // -Xint -Xmx32M -XX:+UseSerialGC -XX:+PrintGCDetails -Dio.netty.allocator.numDirectArenas=2 -Dio.netty.allocator.numHeapArenas=2
    // -Xint -Xmx32M -XX:+UseSerialGC -Dio.netty.maxDirectMemory=0
    public static void main(String[] args) throws Exception {
        int _1M = 1 * 1024 * 1024;
        // enable exactly one of the four allocations below
        // ByteBuf buffer = UnpooledByteBufAllocator.DEFAULT.directBuffer(_1M, _1M);
        ByteBuf buffer = UnpooledByteBufAllocator.DEFAULT.heapBuffer(_1M, _1M);
        // ByteBuf buffer = PooledByteBufAllocator.DEFAULT.directBuffer(_1M, _1M);
        // ByteBuf buffer = PooledByteBufAllocator.DEFAULT.heapBuffer(_1M, _1M);
        for (int i = 0; i < buffer.capacity(); i++) {
            buffer.writeByte(0x01);
        }

        // List<Object> refList = new ArrayList<>();
        for (int i = 0; ; i++) {
            // each copy() allocates a new 1M buffer that is never released
            ByteBuf ref = buffer.copy();
            // refList.add(ref);
            if (i % 10 == 0) {
                System.out.println("allocate - " + i);
            }
            // the original calls the author's helper Tools.sleep(2); assumed here to mean 2 ms
            Thread.sleep(2);
            // ref.release();
        }
    }
}

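Memory allocated through the Unsafe APIs has to be freed manually.

java.nio.ByteBuffer does not: when the ByteBuffer object is garbage-collected (for example at a full GC), the VM frees its native memory.

This discussion is based on JDK 8.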
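The following JDK-only sketch shows the contrast. It assumes JDK 8 style reflective access to sun.misc.Unsafe, which is an unsupported internal API, and newer JDKs may print warnings when it is used. Memory obtained from Unsafe.allocateMemory stays allocated until freeMemory is called. A buffer from ByteBuffer.allocateDirect has no explicit free, and its Cleaner releases the native memory after the buffer object becomes unreachable and is collected.

```java
import java.lang.reflect.Field;
import java.nio.ByteBuffer;
import sun.misc.Unsafe;

class NativeMemoryDemo {
    // Reflective access to sun.misc.Unsafe, as commonly done on JDK 8; unsupported internal API.
    static Unsafe unsafe() {
        try {
            Field f = Unsafe.class.getDeclaredField("theUnsafe");
            f.setAccessible(true);
            return (Unsafe) f.get(null);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("sun.misc.Unsafe not available", e);
        }
    }

    // Unsafe memory: nothing reclaims it automatically; only freeMemory gives it back.
    static byte unsafeRoundTrip(byte value) {
        Unsafe u = unsafe();
        long address = u.allocateMemory(1024);
        try {
            u.putByte(address, value);
            return u.getByte(address);
        } finally {
            u.freeMemory(address); // without this call the 1 KiB block would leak
        }
    }

    // Direct ByteBuffer: no explicit free; when the buffer object becomes unreachable and is
    // collected, its Cleaner releases the native memory.
    static byte byteBufferRoundTrip(byte value) {
        ByteBuffer buf = ByteBuffer.allocateDirect(1024);
        buf.put(0, value);
        return buf.get(0);
    }

    public static void main(String[] args) {
        System.out.println(unsafeRoundTrip((byte) 1));     // 1
        System.out.println(byteBufferRoundTrip((byte) 2)); // 2
    }
}
```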

PooledDirectByteBuf / PooledUnsafeDirectByteBuf

The two differ only in how they access memory: one uses the Java API, the other uses Unsafe.

Both get their space from a DirectArena, which can be thought of as a "native memory pool".

A DirectArena's memory comes from Unsafe or from java.nio.ByteBuffer.

UnpooledDirectByteBuf / UnpooledUnsafeDirectByteBuf / UnpooledUnsafeNoCleanerDirectByteBuf

UnpooledDirectByteBuf: memory comes from java.nio.ByteBuffer and is accessed through java.nio.ByteBuffer.

UnpooledUnsafeDirectByteBuf extends UnpooledDirectByteBuf: memory comes from java.nio.ByteBuffer and is accessed through Unsafe.

UnpooledUnsafeNoCleanerDirectByteBuf extends UnpooledUnsafeDirectByteBuf: memory comes from Unsafe and is accessed through Unsafe.

PooledHeapByteBuf / PooledUnsafeHeapByteBuf

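The two differ only in how they access memory: one uses the Java API, the other uses Unsafe.

Both get their space from a HeapArena, which can be thought of as a "heap memory pool".

A HeapArena's memory comes from new byte[].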

UnpooledHeapByteBuf / UnpooledUnsafeHeapByteBuf

The two differ only in how they access memory: one uses the Java API, the other uses Unsafe.

Both allocate their space with new byte[].

PooledXXXDirectByteBuf / PooledXXXHeapByteBuf

For PooledXXXDirectByteBuf / PooledXXXHeapByteBuf, memory is controlled by DirectArena / HeapArena. The corresponding release only notifies the owning PoolArena, which updates its usage bookkeeping logically.

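The PoolArena maintains the Chunks it has obtained. If the memory it manages is not enough for a new allocation, it requests a new Chunk. If a Chunk's usage drops below the configured minUsage, it frees that Chunk.

Releasing a PooledXXXDirectByteBuf / PooledXXXHeapByteBuf is itself a logical memory operation.

A PoolArena adding or freeing a Chunk is a physical memory operation.

These two kinds of ByteBuf must be released after use. Otherwise memory leaks, just as in this case.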
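The split between logical release and physical chunk management can be illustrated with a heavily simplified, hypothetical ToyArena. It carves fixed 1 MiB slots out of 16 MiB chunks and gives a chunk back only when nothing in it is used. This is a stand-in for Netty's minUsage-based chunk lists, not its real algorithm. When the test case's loop is replayed without release, it fails on the 16th copy, which matches the log above stopping after "allocate - 14". With release, every copy reuses the first chunk.

```java
import java.util.ArrayList;
import java.util.List;

class ToyArena {
    /** One physically allocated chunk; usedSlots is the logical bookkeeping inside it. */
    static final class Chunk {
        int usedSlots;
    }

    private final long chunkSize, slotSize, maxBytes;
    private final List<Chunk> chunks = new ArrayList<>();
    private long physicalBytes; // what a logical counter such as DIRECT_MEMORY_COUNTER would see

    ToyArena(long chunkSize, long slotSize, long maxBytes) {
        this.chunkSize = chunkSize;
        this.slotSize = slotSize;
        this.maxBytes = maxBytes;
    }

    Chunk allocate() {
        long slotsPerChunk = chunkSize / slotSize;
        for (Chunk c : chunks) {
            if (c.usedSlots < slotsPerChunk) { // logical: carve the slot out of an existing chunk
                c.usedSlots++;
                return c;
            }
        }
        if (physicalBytes + chunkSize > maxBytes) {
            throw new IllegalStateException("failed to allocate " + chunkSize
                    + " byte(s) (used: " + physicalBytes + ", max: " + maxBytes + ")");
        }
        physicalBytes += chunkSize;            // physical: a brand-new chunk
        Chunk c = new Chunk();
        c.usedSlots = 1;
        chunks.add(c);
        return c;
    }

    void release(Chunk c) {
        c.usedSlots--;                         // logical: bookkeeping only
        if (c.usedSlots == 0) {                // physical: give back a completely unused chunk
            chunks.remove(c);
            physicalBytes -= chunkSize;
        }
    }

    /** Replays the test case's loop; returns how many of the 30 copies succeeded. */
    static int copyLoop(boolean releaseCopies) {
        long mib = 1024L * 1024L;
        ToyArena arena = new ToyArena(16 * mib, mib, 32440320L);
        arena.allocate();                      // the 1 MiB source buffer, kept for the whole run
        int done = 0;
        try {
            for (int i = 0; i < 30; i++) {
                Chunk ref = arena.allocate();  // buffer.copy()
                if (releaseCopies) {
                    arena.release(ref);        // ref.release()
                }
                done++;
            }
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
        return done;
    }

    public static void main(String[] args) {
        System.out.println(copyLoop(false)); // 15
        System.out.println(copyLoop(true));  // 30
    }
}
```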

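1. What happens if a PooledUnsafeDirectByteBuf is not released

The DirectArena sees that space is never given back while the business code keeps asking for more. It keeps requesting new Chunks until PlatformDependent's logical limit is hit, which throws: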

Exception in thread "main" io.netty.util.internal.OutOfDirectMemoryError: failed to allocate 16777216 byte(s) of direct memory (used: 16777216, max: 32440320)

at io.netty.util.internal.PlatformDependent.incrementMemoryCounter(PlatformDependent.java:742)

at io.netty.util.internal.PlatformDependent.allocateDirectNoCleaner(PlatformDependent.java:697)

at io.netty.buffer.PoolArena$DirectArena.allocateDirect(PoolArena.java:758)

at io.netty.buffer.PoolArena$DirectArena.newChunk(PoolArena.java:734)

at io.netty.buffer.PoolArena.allocateNormal(PoolArena.java:245)

at io.netty.buffer.PoolArena.allocate(PoolArena.java:227)

at io.netty.buffer.PoolArena.allocate(PoolArena.java:147)

at io.netty.buffer.PooledByteBufAllocator.newDirectBuffer(PooledByteBufAllocator.java:356)

at io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:187)

at io.netty.buffer.UnsafeByteBufUtil.copy(UnsafeByteBufUtil.java:436)

at io.netty.buffer.PooledUnsafeDirectByteBuf.copy(PooledUnsafeDirectByteBuf.java:216)

2. What happens if a PooledDirectByteBuf is not released

The DirectArena behind PooledDirectByteBuf gets its space from java.nio.ByteBuffer, where java.nio.Bits enforces a logical limit. That limit throws:

Exception in thread "main" java.lang.OutOfMemoryError: Direct buffer memory

at java.nio.Bits.reserveMemory(Bits.java:694)

at java.nio.DirectByteBuffer.<init>(DirectByteBuffer.java:123)

at java.nio.ByteBuffer.allocateDirect(ByteBuffer.java:311)

at io.netty.buffer.PoolArena$DirectArena.allocateDirect(PoolArena.java:758)

at io.netty.buffer.PoolArena$DirectArena.newChunk(PoolArena.java:734)

at io.netty.buffer.PoolArena.allocateNormal(PoolArena.java:245)

at io.netty.buffer.PoolArena.allocate(PoolArena.java:227)

at io.netty.buffer.PoolArena.allocate(PoolArena.java:147)

at io.netty.buffer.PooledByteBufAllocator.newDirectBuffer(PooledByteBufAllocator.java:356)

at io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:187)

at io.netty.buffer.PooledDirectByteBuf.copy(PooledDirectByteBuf.java:285)

3. What happens if a PooledUnsafeHeapByteBuf is not released

PooledUnsafeHeapByteBuf extends PooledHeapByteBuf.

The HeapArena behind PooledUnsafeHeapByteBuf gets its space from the heap. Total heap usage is bounded, so it throws:

Exception in thread "main" java.lang.OutOfMemoryError: Java heap space

at io.netty.util.internal.PlatformDependent.allocateUninitializedArray(PlatformDependent.java:281)

at io.netty.buffer.PoolArena$HeapArena.newByteArray(PoolArena.java:665)

at io.netty.buffer.PoolArena$HeapArena.newChunk(PoolArena.java:675)

at io.netty.buffer.PoolArena.allocateNormal(PoolArena.java:245)

at io.netty.buffer.PoolArena.allocate(PoolArena.java:227)

at io.netty.buffer.PoolArena.allocate(PoolArena.java:147)

at io.netty.buffer.PooledByteBufAllocator.newHeapBuffer(PooledByteBufAllocator.java:339)

at io.netty.buffer.AbstractByteBufAllocator.heapBuffer(AbstractByteBufAllocator.java:168)

at io.netty.buffer.PooledHeapByteBuf.copy(PooledHeapByteBuf.java:214)

4. What happens if a PooledHeapByteBuf is not released

The HeapArena behind PooledHeapByteBuf gets its space from the heap. Total heap usage is bounded, so it throws:

Exception in thread "main" java.lang.OutOfMemoryError: Java heap space

at io.netty.util.internal.PlatformDependent.allocateUninitializedArray(PlatformDependent.java:281)

at io.netty.buffer.PoolArena$HeapArena.newByteArray(PoolArena.java:665)

at io.netty.buffer.PoolArena$HeapArena.newChunk(PoolArena.java:675)

at io.netty.buffer.PoolArena.allocateNormal(PoolArena.java:245)

at io.netty.buffer.PoolArena.allocate(PoolArena.java:227)

at io.netty.buffer.PoolArena.allocate(PoolArena.java:147)

at io.netty.buffer.PooledByteBufAllocator.newHeapBuffer(PooledByteBufAllocator.java:339)

at io.netty.buffer.AbstractByteBufAllocator.heapBuffer(AbstractByteBufAllocator.java:168)

at io.netty.buffer.PooledHeapByteBuf.copy(PooledHeapByteBuf.java:214)

UnpooledDirectByteBuf / UnpooledUnsafeDirectByteBuf / UnpooledUnsafeNoCleanerDirectByteBuf

For UnpooledDirectByteBuf / UnpooledUnsafeDirectByteBuf / UnpooledUnsafeNoCleanerDirectByteBuf, memory is allocated on demand: exactly as much as is needed is physically allocated right away.

The first two get their space from java.nio.ByteBuffer. The last one gets it from Unsafe.

So the space of the first two can be reclaimed at a full GC, while the last one must be released manually.

Here is the UnpooledDirectByteBuf log output. Note that there is no OOM.

allocate - 0

... (some log output omitted)

allocate - 180

[16:13:53.920] ERROR io.netty.util.ResourceLeakDetector 320 reportTracedLeak - LEAK: ByteBuf.release() was not called before it's garbage-collected. See https://netty.io/wiki/reference-counted-objects.html for more information.

Recent access records:

Created at:

io.netty.buffer.UnpooledByteBufAllocator.newDirectBuffer(UnpooledByteBufAllocator.java:96)

io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:187)

io.netty.buffer.UnpooledDirectByteBuf.copy(UnpooledDirectByteBuf.java:613)

io.netty.buffer.AbstractByteBuf.copy(AbstractByteBuf.java:1195)

com.hx.test12.Test26NativeMemoryGc03.main(Test26NativeMemoryGc03.java:32)

... (some log output omitted)

allocate - 49380

allocate - 49390

allocate - 49400

allocate - 49410

allocate - 49420

allocate - 49430

allocate - 49440

allocate - 49450

allocate - 49460

allocate - 49470

allocate - 49480

allocate - 49490

allocate - 49500

allocate - 49510

allocate - 49520

allocate - 49530

allocate - 49540

allocate - 49550

allocate - 49560

allocate - 49570

allocate - 49580

allocate - 49590

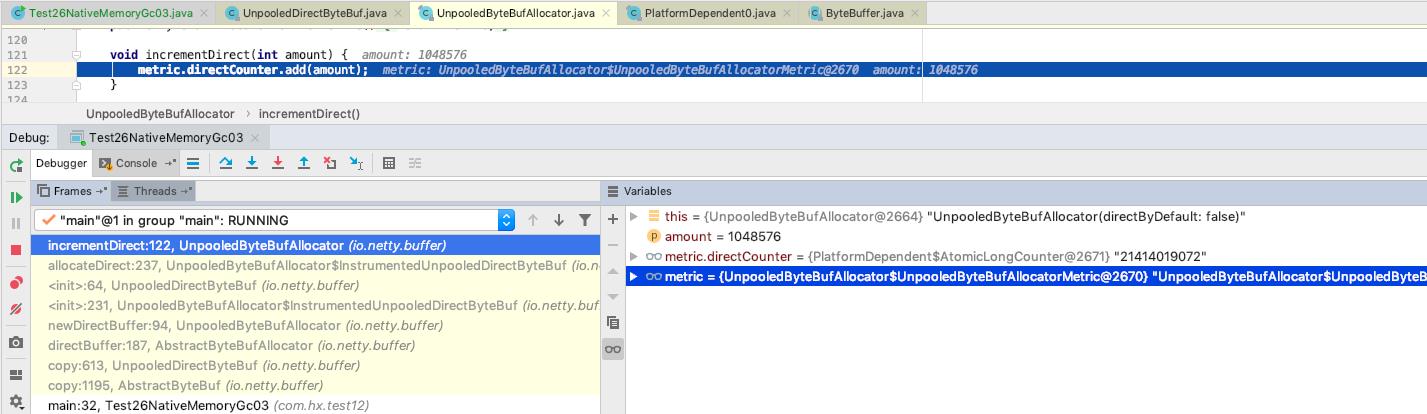

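allocate - 49600

This has a side effect, though. GC reclaims the space, but the related metrics are wrong because the allocator's usage counters are only decremented when release() runs.

Here is the UnpooledUnsafeDirectByteBuf log output. Again, there is no OOM.

The side effect is the same as above.

allocate - 0

... (some log output omitted)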

allocate - 220

[16:17:09.780] ERROR io.netty.util.ResourceLeakDetector 320 reportTracedLeak - LEAK: ByteBuf.release() was not called before it's garbage-collected. See https://netty.io/wiki/reference-counted-objects.html for more information.

Recent access records:

Created at:

io.netty.buffer.UnpooledByteBufAllocator.newDirectBuffer(UnpooledByteBufAllocator.java:96)

io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:187)

io.netty.buffer.UnsafeByteBufUtil.copy(UnsafeByteBufUtil.java:436)

io.netty.buffer.UnpooledUnsafeDirectByteBuf.copy(UnpooledUnsafeDirectByteBuf.java:284)

io.netty.buffer.AbstractByteBuf.copy(AbstractByteBuf.java:1195)

com.hx.test12.Test26NativeMemoryGc03.main(Test26NativeMemoryGc03.java:32)

... (some log output omitted)

allocate - 10600

allocate - 10610

allocate - 10620

allocate - 10630

allocate - 10640

allocate - 10650

allocate - 10660

allocate - 10670

allocate - 10680

allocate - 10690

allocate - 10700

allocate - 10710

allocate - 10720

allocate - 10730

allocate - 10740

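allocate - 10750

Here is the UnpooledUnsafeNoCleanerDirectByteBuf log output.

At this point 31457280 bytes (30 MiB) have been allocated piecemeal: the 1 MiB source buffer plus 29 copies. The next 1 MiB request, the copy at i = 29, would exceed the limit, so PlatformDependent's logical check throws: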

allocate - 0

allocate - 10

allocate - 20

Exception in thread "main" io.netty.util.internal.OutOfDirectMemoryError: failed to allocate 1048576 byte(s) of direct memory (used: 31457280, max: 32440320)

at io.netty.util.internal.PlatformDependent.incrementMemoryCounter(PlatformDependent.java:742)

at io.netty.util.internal.PlatformDependent.allocateDirectNoCleaner(PlatformDependent.java:697)

at io.netty.buffer.UnpooledUnsafeNoCleanerDirectByteBuf.allocateDirect(UnpooledUnsafeNoCleanerDirectByteBuf.java:30)

at io.netty.buffer.UnpooledByteBufAllocator$InstrumentedUnpooledUnsafeNoCleanerDirectByteBuf.allocateDirect(UnpooledByteBufAllocator.java:186)

at io.netty.buffer.UnpooledDirectByteBuf.<init>(UnpooledDirectByteBuf.java:64)

at io.netty.buffer.UnpooledUnsafeDirectByteBuf.<init>(UnpooledUnsafeDirectByteBuf.java:41)

at io.netty.buffer.UnpooledUnsafeNoCleanerDirectByteBuf.<init>(UnpooledUnsafeNoCleanerDirectByteBuf.java:25)

at io.netty.buffer.UnpooledByteBufAllocator$InstrumentedUnpooledUnsafeNoCleanerDirectByteBuf.<init>(UnpooledByteBufAllocator.java:181)

at io.netty.buffer.UnpooledByteBufAllocator.newDirectBuffer(UnpooledByteBufAllocator.java:91)

at io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:187)

at io.netty.buffer.UnsafeByteBufUtil.copy(UnsafeByteBufUtil.java:436)

at io.netty.buffer.UnpooledUnsafeDirectByteBuf.copy(UnpooledUnsafeDirectByteBuf.java:284)

at io.netty.buffer.AbstractByteBuf.copy(AbstractByteBuf.java:1195)

at com.hx.test12.Test26NativeMemoryGc03.main(Test26NativeMemoryGc03.java:32)

UnpooledHeapByteBuf / UnpooledUnsafeHeapByteBuf

For UnpooledHeapByteBuf / UnpooledUnsafeHeapByteBuf, memory is allocated on demand: exactly as much as is needed is physically allocated right away.

Both get their space from the heap.

So their space can be reclaimed by GC. The side effect is the same as for UnpooledDirectByteBuf above.

Here is the UnpooledHeapByteBuf log output. There is no OOM.

The side effect is the same as for UnpooledDirectByteBuf above.

allocate - 0

... (some log output omitted)

allocate - 37400

allocate - 37410

allocate - 37420

allocate - 37430

allocate - 37440

allocate - 37450

allocate - 37460

allocate - 37470

allocate - 37480

allocate - 37490

allocate - 37500

allocate - 37510

allocate - 37520

allocate - 37530

allocate - 37540

allocate - 37550

allocate - 37560

allocate - 37570

allocate - 37580

Here is the UnpooledUnsafeHeapByteBuf log output. There is no OOM.

The side effect is the same as for UnpooledDirectByteBuf above.

allocate - 0

... (some log output omitted)

allocate - 38800

allocate - 38810

allocate - 38820

allocate - 38830

allocate - 38840

allocate - 38850

allocate - 38860

allocate - 38870

allocate - 38880

allocate - 38890

allocate - 38900

allocate - 38910

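allocate - 38920

ByteBufs whose memory comes from java.nio.ByteBuffer or the heap can be reclaimed by the VM once no strong reference to them remains, but this still leaves some side effects behind.

More importantly, space allocated through Unsafe or from a memory pool has to be released manually.

So in Netty, remember to release every ByteBuf that your own business code creates.

"Creating a ByteBuf" covers many operations, such as XXXByteBufAllocator.buffer, XXXByteBufAllocator.heapBuffer, XXXByteBufAllocator.directBuffer, buffer.copy, buffer.retainedSlice, and so on.

Leave alone the ByteBufs that Netty manages and hands to business code, such as the cumulated input buffer that ByteToMessageDecoder passes to decode(). Netty itself takes care of their creation, use, and release.

Netty's own ResourceLeakDetector

A log like the following appeared above. Netty warns that a ByteBuf has leaked, and it even prints the stack trace where the object was created.

allocate - 0

... (some log output omitted)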

allocate - 220

[16:17:09.780] ERROR io.netty.util.ResourceLeakDetector 320 reportTracedLeak - LEAK: ByteBuf.release() was not called before it's garbage-collected. See https://netty.io/wiki/reference-counted-objects.html for more information.

Recent access records:

Created at:

io.netty.buffer.UnpooledByteBufAllocator.newDirectBuffer(UnpooledByteBufAllocator.java:96)

io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:187)

io.netty.buffer.UnsafeByteBufUtil.copy(UnsafeByteBufUtil.java:436)

io.netty.buffer.UnpooledUnsafeDirectByteBuf.copy(UnpooledUnsafeDirectByteBuf.java:284)

io.netty.buffer.AbstractByteBuf.copy(AbstractByteBuf.java:1195)

com.hx.test12.Test26NativeMemoryGc03.main(Test26NativeMemoryGc03.java:32)

... (some log output omitted)

allocate - 10600

allocate - 10610

allocate - 10620

allocate - 10630

allocate - 10640

allocate - 10650

allocate - 10660

allocate - 10670

allocate - 10680

allocate - 10690

allocate - 10700

allocate - 10710

allocate - 10720

allocate - 10730

allocate - 10740

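allocate - 10750

How does this work?

For a directBuffer / heapBuffer created by PooledByteBufAllocator, Netty tries to create a ResourceLeak helper object and wraps the buffer in an XXXLeakAwareByteBuf.

For UnpooledByteBufAllocator, whether a directBuffer is wrapped depends on its disableLeakDetector setting, and a heapBuffer is not wrapped at all.

This ResourceLeak is a WeakReference whose referent is the ByteBuf that was created.

That leads to two cases:

1. Suppose the ByteBuf is garbage-collected without release having been called first. (Calling release is what clears the ResourceLeak's referent.) In that case, after GC the ResourceLeak is enqueued into the referenceQueue it was registered with when it was created.

2. Suppose release was called before the ByteBuf was garbage-collected. The referent has then already been cleared, so after GC the VM does not add the ResourceLeak to the referenceQueue.

Netty then reports leaks according to its policy. By default (the SIMPLE level), only about 1 in 128 buffers is sampled for tracking in the first place, so only leaks of sampled buffers get reported.

Netty's ResourceLeakDetector is built on this mechanism.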
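This mechanism can be modeled with the JDK alone. The sketch below is a hypothetical ToyLeakDetector, not Netty's ResourceLeakDetector. Each tracked object gets a WeakReference registered with a ReferenceQueue, and close() plays the role of release() by clearing that reference. Any tracker that still shows up in the queue after GC belongs to an object that was collected without being released. Sampling and stack-trace recording are left out. Because System.gc() is only a request, the demo retries a few times.

```java
import java.lang.ref.Reference;
import java.lang.ref.ReferenceQueue;
import java.lang.ref.WeakReference;
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

class ToyLeakDetector {
    /** Weakly references the tracked resource; enqueued by the GC only if never cleared. */
    static final class Tracker extends WeakReference<Object> {
        final String createdAt;       // Netty records a stack trace here; a label is enough for the sketch
        private final Set<Tracker> live;

        Tracker(Object resource, String createdAt, ReferenceQueue<Object> queue, Set<Tracker> live) {
            super(resource, queue);
            this.createdAt = createdAt;
            this.live = live;
        }

        /** Plays the role of release(): a cleared reference is never enqueued. */
        void close() {
            clear();
            live.remove(this);
        }
    }

    private final ReferenceQueue<Object> queue = new ReferenceQueue<>();
    // Trackers are held strongly here; otherwise a tracker could be collected before its referent.
    private final Set<Tracker> live = ConcurrentHashMap.newKeySet();

    Tracker track(Object resource, String createdAt) {
        Tracker t = new Tracker(resource, createdAt, queue, live);
        live.add(t);
        return t;
    }

    /** Drains the queue; every tracker still marked live was collected without close(): a leak. */
    List<String> pollLeaks() {
        List<String> leaks = new ArrayList<>();
        Reference<?> r;
        while ((r = queue.poll()) != null) {
            Tracker t = (Tracker) r;
            if (live.remove(t)) {
                leaks.add(t.createdAt);
            }
        }
        return leaks;
    }

    private static void allocateTwo(ToyLeakDetector detector) {
        detector.track(new byte[1024], "released-buffer").close(); // released properly
        detector.track(new byte[1024], "leaked-buffer");           // never released
    }

    /** Leaves both buffers unreachable, then requests GC until the leak is reported. */
    static List<String> demo() {
        ToyLeakDetector detector = new ToyLeakDetector();
        allocateTwo(detector);
        List<String> leaks = new ArrayList<>();
        for (int attempt = 0; attempt < 50 && leaks.isEmpty(); attempt++) {
            System.gc(); // only a request; the JVM may defer or ignore it
            try {
                Thread.sleep(20); // give the reference handler thread time to enqueue
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
            leaks.addAll(detector.pollLeaks());
        }
        return leaks;
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```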

The end.
