Linux Kernel Source Code Analysis: Memory

Posted 为了维护世界和平_


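I. Memory management subsystem

The memory management subsystem can be divided into three layers: user space, kernel space, and hardware.

1. User space:

Applications allocate memory with malloc() and release it with free(). malloc()/free() are interfaces provided by ptmalloc, the memory allocator in glibc. ptmalloc uses the brk or mmap system call to request memory from the kernel in units of pages, then splits that memory into small blocks and hands them out to the application.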
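As a rough illustration of the two paths described above, the following user-space sketch allocates a small block through malloc() and a whole page directly from the kernel through an anonymous mmap(). The helper names (page_alloc_anon, round_up_to_page, demo_user_alloc) are hypothetical and are not part of glibc; the sketch does not reproduce how ptmalloc works internally.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/* Hypothetical helper: round len up to a whole number of pages. */
static size_t round_up_to_page(size_t len)
{
    size_t page = (size_t)sysconf(_SC_PAGESIZE);
    return (len + page - 1) / page * page;
}

/* Hypothetical helper: ask the kernel for len bytes with an anonymous
 * private mapping. Returns NULL on failure. */
static void *page_alloc_anon(size_t len)
{
    void *p = mmap(NULL, len, PROT_READ | PROT_WRITE,
                   MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    return p == MAP_FAILED ? NULL : p;
}

/* Exercise both paths. Returns 0 on success, -1 on failure. */
static int demo_user_alloc(void)
{
    /* Small request: served by the allocator from memory it already
     * obtained from the kernel (or obtains on demand). */
    char *small = malloc(64);
    if (!small)
        return -1;
    strcpy(small, "from malloc");

    /* Page-sized request made directly to the kernel. */
    size_t len = round_up_to_page(1);
    char *pages = page_alloc_anon(len);
    if (!pages) {
        free(small);
        return -1;
    }

    /* Anonymous mappings start out zero-filled. */
    int zero_filled = (pages[0] == 0 && pages[len - 1] == 0);

    munmap(pages, len);
    free(small);
    return zero_filled ? 0 : -1;
}
```

Calling demo_user_alloc() from a program's main() and checking for a return value of 0 is enough to exercise both paths.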

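2. Kernel space:

Virtual memory management allocates virtual pages from a process's virtual address space: sys_brk grows or shrinks the heap, sys_mmap allocates virtual pages in the memory-mapping region, and sys_munmap releases virtual pages. The page allocator is responsible for allocating physical pages; the allocator used is the buddy allocator.

Kernel space also provides extensions. The non-contiguous page allocator offers vmalloc to allocate memory and vfree to release it. When memory is fragmented, a request for physically contiguous pages is unlikely to succeed, so the kernel can instead allocate non-contiguous physical pages and map them to contiguous virtual pages. The virtual addresses are contiguous while the physical addresses are not.

The memory control group (memory cgroup) controls the memory resources available to processes. When memory is fragmented and no run of contiguous physical pages can be found, memory compaction obtains contiguous physical pages by migrating pages. When memory runs low, page reclaim is responsible for reclaiming physical pages.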
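The difference between a contiguous request and a vmalloc-style request can be sketched with a toy frame bitmap. This is a user-space model only: NR_FRAMES, find_contiguous and alloc_scattered are hypothetical names, and the real buddy allocator and vmalloc are far more involved.

```c
#include <assert.h>
#include <stddef.h>

#define NR_FRAMES 16   /* assumed size of the toy physical memory */

/* Look for n physically contiguous free frames.
 * Returns the index of the first frame of the run, or -1 if none exists. */
static int find_contiguous(const int *used, size_t n)
{
    size_t run = 0;

    assert(n > 0);
    for (size_t i = 0; i < NR_FRAMES; i++) {
        run = used[i] ? 0 : run + 1;
        if (run == n)
            return (int)(i + 1 - n);
    }
    return -1;
}

/* vmalloc-like request: take any n free frames, contiguous or not.
 * frames[k] receives the frame backing virtual page k, so the virtual
 * range is contiguous even when the frames are scattered.
 * Returns 0 on success, -1 if fewer than n frames are free. */
static int alloc_scattered(int *used, size_t n, int *frames)
{
    size_t got = 0;

    for (size_t i = 0; i < NR_FRAMES && got < n; i++)
        if (!used[i])
            frames[got++] = (int)i;
    if (got < n)
        return -1;
    for (size_t k = 0; k < n; k++)
        used[frames[k]] = 1;
    return 0;
}
```

With every other frame in use, a two-frame contiguous request fails while a four-page scattered request still succeeds, which is the situation the paragraph above describes.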

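3. Hardware:

The MMU contains a page table cache, the translation lookaside buffer (TLB), which holds recently used page table mappings so that translating a virtual address to a physical address does not require a lookup in the in-memory page tables every time. To bridge the mismatch between processor speed and memory speed, caches sit between the processor and memory. The level-1 cache is split into a data cache and an instruction cache. The level-2 cache sits between the level-1 cache and memory and smooths out the speed difference between them.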
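The role of the page table cache can be sketched with a toy model: a small direct-mapped TLB in front of a flat page table. All names and sizes here (toy_mmu, TLB_ENTRIES, 4 KiB pages, a single-level table) are assumptions for illustration; real hardware uses multi-level tables and associative TLBs.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_SHIFT  12   /* assumed 4 KiB pages */
#define NUM_PAGES   64   /* size of the toy page table */
#define TLB_ENTRIES 8    /* toy direct-mapped TLB */

struct tlb_entry {
    int valid;
    uint64_t vpn;   /* virtual page number */
    uint64_t pfn;   /* physical frame number */
};

struct toy_mmu {
    uint64_t page_table[NUM_PAGES];   /* vpn -> pfn, lives in "memory" */
    struct tlb_entry tlb[TLB_ENTRIES];
    unsigned long hits, misses;
};

static void toy_mmu_init(struct toy_mmu *m)
{
    for (size_t i = 0; i < NUM_PAGES; i++)
        m->page_table[i] = 1000 + i;   /* arbitrary mapping */
    for (size_t i = 0; i < TLB_ENTRIES; i++)
        m->tlb[i].valid = 0;
    m->hits = m->misses = 0;
}

/* Translate a virtual address. On a TLB miss, read the page table
 * and cache the mapping so the next access to the page is a hit. */
static uint64_t toy_translate(struct toy_mmu *m, uint64_t vaddr)
{
    uint64_t vpn = vaddr >> PAGE_SHIFT;
    uint64_t off = vaddr & ((UINT64_C(1) << PAGE_SHIFT) - 1);
    struct tlb_entry *e;

    assert(vpn < NUM_PAGES);
    e = &m->tlb[vpn % TLB_ENTRIES];
    if (e->valid && e->vpn == vpn) {
        m->hits++;
    } else {
        m->misses++;
        e->valid = 1;
        e->vpn = vpn;
        e->pfn = m->page_table[vpn];
    }
    return (e->pfn << PAGE_SHIFT) | off;
}
```

Two accesses to the same page cost one page table read and one TLB hit; an access to a page that maps to the same TLB slot evicts the earlier entry.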

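II. Layout of the user virtual address space

A process's user virtual address space starts at address 0 and has length TASK_SIZE, which each processor architecture defines for itself.

The ARM64 architecture defines the macro TASK_SIZE as follows:

32-bit user space programs: TASK_SIZE is TASK_SIZE_32, i.e. 0x100000000 (4 GB).

64-bit user space programs: TASK_SIZE is TASK_SIZE_64.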
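As a quick arithmetic check of these sizes, the sketch below computes the length of a user address space from the number of virtual address bits, assuming the size is 1 shifted left by that bit count (as the arm64 TASK_SIZE_64 definition does). The helper names are hypothetical, and the 39-bit and 48-bit figures are common arm64 configurations used here only as examples.

```c
#include <assert.h>
#include <stdint.h>

/* Length in bytes of a user address space with va_bits address bits. */
static uint64_t task_size_for_va_bits(unsigned int va_bits)
{
    assert(va_bits > 0 && va_bits < 64);
    return UINT64_C(1) << va_bits;
}

/* A user address is valid only if it lies in [0, task_size). */
static int user_addr_ok(uint64_t addr, uint64_t task_size)
{
    return addr < task_size;
}
```

With 32 bits this gives 0x100000000 (4 GB), matching TASK_SIZE_32; with 39 bits it gives 512 GB, and with 48 bits 256 TB.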

The definitions in the source code are as follows:

arch/arm64/include/asm/processor.h

#define TASK_SIZE_64 (UL(1) << vabits_actual)

#define TASK_SIZE_32 UL(0x100000000)

A process's virtual address space is described mainly by two data structures: mm_struct at the top level, and vm_area_struct. Each process has exactly one mm_struct.

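mm_struct describes the entire virtual address space of a process.

vm_area_struct describes one range of the virtual address space (called a virtual memory area, or VMA).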

III. The mm_struct structure

struct mm_struct {

struct {

struct vm_area_struct *mmap; /* list of VMAs (linked list of virtual memory areas) */

struct rb_root mm_rb; /* red-black tree of virtual memory areas */

u64 vmacache_seqnum; /* per-thread vmacache */

#ifdef CONFIG_MMU

/* find a range in the memory-mapping region that is not yet mapped */

unsigned long (*get_unmapped_area) (struct file *filp,

unsigned long addr, unsigned long len,

unsigned long pgoff, unsigned long flags);

#endif

unsigned long mmap_base; /* base of mmap area (start address of the memory-mapping region) */

unsigned long mmap_legacy_base; /* base of mmap area in bottom-up allocations */

#ifdef CONFIG_HAVE_ARCH_COMPAT_MMAP_BASES

/* Base adresses for compatible mmap() */

unsigned long mmap_compat_base;

unsigned long mmap_compat_legacy_base;

#endif

unsigned long task_size; /* size of task vm space (length of the user virtual address space) */

unsigned long highest_vm_end; /* highest vma end address */

pgd_t * pgd; /* points to the page global directory, i.e. the first-level page table */

#ifdef CONFIG_MEMBARRIER

/**

* @membarrier_state: Flags controlling membarrier behavior.

*

* This field is close to @pgd to hopefully fit in the same

* cache-line, which needs to be touched by switch_mm().

*/

atomic_t membarrier_state;

#endif

/**

* @mm_users: The number of users including userspace.

*

* Use mmget()/mmget_not_zero()/mmput() to modify. When this

* drops to 0 (i.e. when the task exits and there are no other

* temporary reference holders), we also release a reference on

* @mm_count (which may then free the &struct mm_struct if

* @mm_count also drops to 0).

*/

atomic_t mm_users; /* number of processes sharing this user virtual address space, i.e. the number of processes in the thread group */

/**

* @mm_count: The number of references to &struct mm_struct

* (@mm_users count as 1).

*

* Use mmgrab()/mmdrop() to modify. When this drops to 0, the

* &struct mm_struct is freed.

*/

atomic_t mm_count; /* reference count of the memory descriptor */

#ifdef CONFIG_MMU

atomic_long_t pgtables_bytes; /* PTE page table pages */

#endif

int map_count; /* number of VMAs */

spinlock_t page_table_lock; /* Protects page tables and some

* counters

*/

struct rw_semaphore mmap_sem;

struct list_head mmlist; /* List of maybe swapped mm's. These

* are globally strung together off

* init_mm.mmlist, and are protected

* by mmlist_lock

*/

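unsigned long hiwater_rss; /* High-watermark of RSS usage (maximum number of page frames the process has owned) */

unsigned long hiwater_vm; /* High-water virtual memory usage (maximum number of pages in the process's memory areas) */

unsigned long total_vm; /* Total pages mapped (size of the process address space) */

unsigned long locked_vm; /* Pages that have PG_mlocked set (pages that are locked and cannot be swapped out) */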

atomic64_t pinned_vm; /* Refcount permanently increased */

unsigned long data_vm; /* VM_WRITE & ~VM_SHARED & ~VM_STACK */

unsigned long exec_vm; /* VM_EXEC & ~VM_WRITE & ~VM_STACK */

unsigned long stack_vm; /* VM_STACK */

unsigned long def_flags;

spinlock_t arg_lock; /* protect the below fields */

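/* start and end addresses of the code segment, start and end addresses of the data segment */

unsigned long start_code, end_code, start_data, end_data;

/* start and end addresses of the heap, start address of the stack */

unsigned long start_brk, brk, start_stack;

/* start and end addresses of the argument strings, start and end addresses of the environment variables */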

unsigned long arg_start, arg_end, env_start, env_end;

unsigned long saved_auxv[AT_VECTOR_SIZE]; /* for /proc/PID/auxv */

/*

* Special counters, in some configurations protected by the

* page_table_lock, in other configurations by being atomic.

*/

struct mm_rss_stat rss_stat;

struct linux_binfmt *binfmt;

/* Architecture-specific MM context */

/* memory management context specific to the processor architecture */

mm_context_t context;

unsigned long flags; /* Must use atomic bitops to access */

struct core_state *core_state; /* coredumping support */

#ifdef CONFIG_AIO

spinlock_t ioctx_lock;

struct kioctx_table __rcu *ioctx_table;

#endif

/* store ref to file /proc/<pid>/exe symlink points to */

struct file __rcu *exe_file;

...

/*

* The mm_cpumask needs to be at the end of mm_struct, because it

* is dynamically sized based on nr_cpu_ids.

*/

unsigned long cpu_bitmap[];

};

IV. The vm_area_struct structure

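This structure defines a VMM memory area. There is one of these per VM area per task. A VM area is any part of the process virtual memory space that has a special rule for the page-fault handlers (for example a shared library or the executable area).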

struct vm_area_struct {

/* The first cache line has the info for VMA tree walking. */

/* start and end addresses of this virtual memory area */

unsigned long vm_start; /* Our start address within vm_mm. */

unsigned long vm_end; /* The first byte after our end address

within vm_mm. */

/* linked list of VM areas per task, sorted by address */

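struct vm_area_struct *vm_next, *vm_prev; /* linked-list linkage */

/* Organizing the VMAs only as a linked list would make lookups slow. To solve this, a red-black tree is used: */

/* each process's mm_struct holds a red-black tree, and every VMA is inserted into it as a node to speed up lookups. */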

struct rb_node vm_rb;

/*

* Largest free memory gap in bytes to the left of this VMA.

* Either between this VMA and vma->vm_prev, or between one of the

* VMAs below us in the VMA rbtree and its ->vm_prev. This helps

* get_unmapped_area find a free area of the right size.

*/

unsigned long rb_subtree_gap;

/* Second cache line starts here. */

struct mm_struct *vm_mm; /* The address space we belong to. */

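/* To support finding which virtual memory areas map a given range of a file, every virtual memory area that maps the file is added to that file's address space structure. */

struct {

struct rb_node rb;

unsigned long rb_subtree_last;

} shared;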

/*

* A file's MAP_PRIVATE vma can be in both i_mmap tree and anon_vma

* list, after a COW of one of the file pages. A MAP_SHARED vma

* can only be in the i_mmap tree. An anonymous MAP_PRIVATE, stack

* or brk vma (with NULL file) can only be in an anon_vma list.

*/

struct list_head anon_vma_chain; /* Serialized by mmap_sem &

* page_table_lock */

struct anon_vma *anon_vma; /* Serialized by page_table_lock */

/* Function pointers to deal with this struct. The members are as follows: */

//void (*open)(struct vm_area_struct * area);

//void (*close)(struct vm_area_struct * area);

//int (*split)(struct vm_area_struct * area, unsigned long addr);

//int (*mremap)(struct vm_area_struct * area);

//vm_fault_t (*fault)(struct vm_fault *vmf);

//vm_fault_t (*huge_fault)(struct vm_fault *vmf,

// enum page_entry_size pe_size);

/* operations on this memory area */

const struct vm_operations_struct *vm_ops;

/* Information about our backing store: */

unsigned long vm_pgoff; /* File offset: Offset (within vm_file) in PAGE_SIZE

units */

struct file * vm_file; /* File we map to (can be NULL); it is a null pointer for a private anonymous mapping. */

void * vm_private_data; /* was vm_pte (shared mem); points to the private data of the memory area */

/*

* Access permissions of this VMA.

* See vmf_insert_mixed_prot() for discussion.

*/

pgprot_t vm_page_prot;

unsigned long vm_flags; /* Flags, see mm.h. */

...

} __randomize_layout;

V. Relationship between the structures

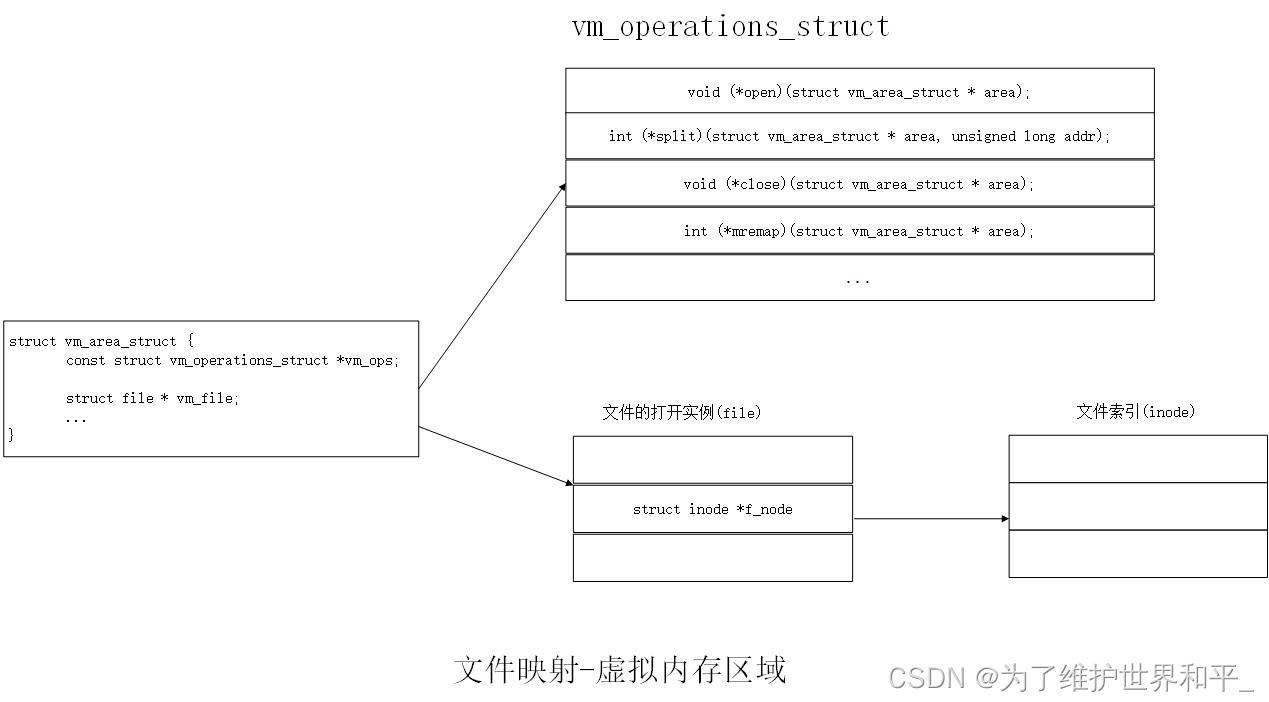
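The relationship between the two structures can also be sketched in user space: one descriptor per address space holds an address-sorted list of areas, and a lookup walks that list. The toy_mm and toy_vma types below are simplified stand-ins with hypothetical names, not the kernel structures, and the red-black tree is left out. toy_find_vma follows the same convention as the kernel's find_vma(): it returns the first area whose end lies above the address, which may or may not contain the address.

```c
#include <assert.h>
#include <stddef.h>

/* Simplified stand-in for vm_area_struct. */
struct toy_vma {
    unsigned long vm_start;    /* first address inside the area */
    unsigned long vm_end;      /* first address after the area */
    struct toy_vma *vm_next;   /* next area, sorted by address */
};

/* Simplified stand-in for mm_struct. */
struct toy_mm {
    struct toy_vma *mmap;      /* head of the sorted VMA list */
    int map_count;             /* number of VMAs */
};

/* Insert vma, keeping the list sorted by address.
 * Returns 0 on success, -1 if it overlaps an existing area. */
static int toy_insert_vma(struct toy_mm *mm, struct toy_vma *vma)
{
    struct toy_vma **link = &mm->mmap;

    assert(vma->vm_start < vma->vm_end);
    /* Skip every area that ends at or before the new area's start. */
    while (*link && (*link)->vm_end <= vma->vm_start)
        link = &(*link)->vm_next;
    if (*link && (*link)->vm_start < vma->vm_end)
        return -1;
    vma->vm_next = *link;
    *link = vma;
    mm->map_count++;
    return 0;
}

/* Return the first area with addr < vm_end, or NULL if there is none. */
static struct toy_vma *toy_find_vma(struct toy_mm *mm, unsigned long addr)
{
    for (struct toy_vma *v = mm->mmap; v; v = v->vm_next)
        if (addr < v->vm_end)
            return v;
    return NULL;
}
```

The linear walk is what the red-black tree in the real mm_struct avoids: with many areas, a tree lookup takes logarithmic rather than linear time.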

VI. File mappings

const struct vm_operations_struct *vm_ops;

/* Function pointers to deal with this struct. The members are as follows: */

void (*open)(struct vm_area_struct * area); // open is called when a virtual memory area is created

void (*close)(struct vm_area_struct * area);

int (*split)(struct vm_area_struct * area, unsigned long addr);

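int (*mremap)(struct vm_area_struct * area); // called when the memory area is moved

vm_fault_t (*fault)(struct vm_fault *vmf); // when a virtual page of a file mapping is accessed and is not yet mapped to a physical page, a page fault is raised and the fault handler calls fault to read the file's data into the file's page cache

// similar to fault; the difference is that huge_fault handles file mappings that use transparent huge pages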

vm_fault_t (*huge_fault)(struct vm_fault *vmf, enum page_entry_size pe_size);

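// when a virtual page of a file mapping is read and is not yet mapped to a physical page, a page fault is raised; besides handling the page being accessed, the fault handler calls map_pages to also map nearby pages of the file that are already in the page cache (fault-around)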

void (*map_pages)(struct vm_fault *vmf,

pgoff_t start_pgoff, pgoff_t end_pgoff);

unsigned long (*pagesize)(struct vm_area_struct * area);

/* notification that a previously read-only page is about to become

* writable, if an error is returned it will cause a SIGBUS */

vm_fault_t (*page_mkwrite)(struct vm_fault *vmf);

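// page_mkwrite: on the first write to a read-only page of a shared file mapping, a page fault is raised and the fault handler

// calls page_mkwrite to notify the file system that the page is about to become writable, so that the file system can check whether the write is allowed or wait for the page to reach a suitable state; a first write to a private file mapping is instead handled by copy-on-write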

/* same as page_mkwrite when using VM_PFNMAP|VM_MIXEDMAP */

vm_fault_t (*pfn_mkwrite)(struct vm_fault *vmf);

/* called by access_process_vm when get_user_pages() fails, typically

* for use by special VMAs that can switch between memory and hardware

*/

int (*access)(struct vm_area_struct *vma, unsigned long addr,

void *buf, int len, int write);
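How a fault handler dispatches through such an operations table can be sketched in user space. Everything below is a toy model under stated assumptions: the toy_ types, the return codes and toy_handle_fault are hypothetical names, only a shared file mapping is modelled, and areas without a fault method are simply rejected rather than treated as anonymous memory.

```c
#include <assert.h>
#include <stddef.h>

struct toy_vma;

/* Simplified stand-in for struct vm_fault. */
struct toy_fault {
    struct toy_vma *vma;
    unsigned long address;
    int write;                 /* non-zero for a write access */
};

/* Simplified stand-in for struct vm_operations_struct. */
struct toy_vm_ops {
    int (*fault)(struct toy_fault *vmf);
    int (*page_mkwrite)(struct toy_fault *vmf);
};

struct toy_vma {
    unsigned long vm_start, vm_end;
    const struct toy_vm_ops *vm_ops;
    int faults_seen;           /* bookkeeping for the demo only */
    int mkwrite_seen;
};

enum { TOY_FAULT_OK = 0, TOY_FAULT_SIGBUS = 1, TOY_FAULT_SIGSEGV = 2 };

/* Pretend to read the file page into the page cache. */
static int toy_file_fault(struct toy_fault *vmf)
{
    vmf->vma->faults_seen++;
    return TOY_FAULT_OK;
}

/* Pretend the file system approves making the page writable. */
static int toy_file_mkwrite(struct toy_fault *vmf)
{
    vmf->vma->mkwrite_seen++;
    return TOY_FAULT_OK;
}

static const struct toy_vm_ops toy_file_vm_ops = {
    .fault = toy_file_fault,
    .page_mkwrite = toy_file_mkwrite,
};

/* Toy fault handler for a shared file mapping. */
static int toy_handle_fault(struct toy_vma *vma, unsigned long addr, int write)
{
    struct toy_fault vmf = { vma, addr, write };
    int ret;

    if (addr < vma->vm_start || addr >= vma->vm_end)
        return TOY_FAULT_SIGSEGV;          /* address outside the area */
    if (!vma->vm_ops || !vma->vm_ops->fault)
        return TOY_FAULT_SIGBUS;           /* toy simplification */
    ret = vma->vm_ops->fault(&vmf);        /* bring the page in */
    if (ret != TOY_FAULT_OK)
        return ret;
    if (write && vma->vm_ops->page_mkwrite)
        ret = vma->vm_ops->page_mkwrite(&vmf);   /* notify before write */
    return ret;
}
```

The design point is that the generic handler never needs to know which file system backs the area; it only calls whatever methods the area's vm_ops table provides.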

Reference link

https://ke.qq.com/course/4032547/12394914843035683?flowToken=1042700
