【Spark】Spark Development Errors: A Summary

Posted by 魏晓蕾


【Error 1】

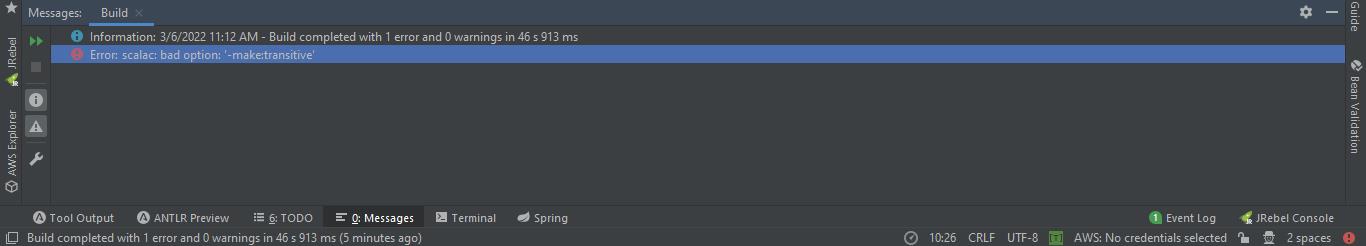

Compiling Scala source files fails with the error above.

【Cause】

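Starting with version 2.11, Scala removed the deprecated `-make` option (https://groups.google.com/forum/#!msg/scala-tools/9xLQO263sjg/ThXw-uqKCG4J). The IDEA Scala plugin had not been updated to match, so the error above appears once a project is upgraded to Scala 2.11.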

【Solution】

Edit `H:\IDEAWorkspace\GuPaoStudySparkCoreDemo\.idea\scala_compiler.xml` and comment out the line `<parameter value="-make:transitive" />`. No other change is needed.

## scala_compiler.xml

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project version="4">
  <component name="ScalaCompilerConfiguration">
    <profile name="Maven 1" modules="GuPaoStudySparkCoreDemo">
      <parameters>
        <!-- <parameter value="-make:transitive" /> -->
        <parameter value="-dependencyfile" />
        <parameter value="H:\IDEAWorkspace\GuPaoStudySparkCoreDemo\target/.scala_dependencies" />
      </parameters>
    </profile>
  </component>
</project>
```
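If several projects need the same fix, the edit can be scripted. The sketch below is a hypothetical helper; `DisableMakeOption` is not part of IDEA or any Scala tool. It uses only the Java/Scala standard library. It wraps any still-active `-make:transitive` parameter line in an XML comment and leaves every other line untouched. It assumes the parameter sits alone on its own line, as in the file above. Running it while the project is closed in IDEA is probably safest, so the IDE does not overwrite the change.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.{Files, Paths}

// Hypothetical helper: comments out the -make:transitive option in an
// IDEA scala_compiler.xml. Assumes the parameter is on its own line.
object DisableMakeOption {
  // The exact parameter element that Scala 2.11+ compilers reject.
  private val MakeParam = """<parameter value="-make:transitive" />"""

  /** Wraps every still-active -make:transitive parameter in an XML comment. */
  def disable(xml: String): String =
    xml.split("\n", -1).map { line =>
      // trim also drops a trailing '\r', so CRLF files are matched too;
      // replace() keeps the original indentation and line ending.
      if (line.trim == MakeParam) line.replace(MakeParam, s"<!-- $MakeParam -->")
      else line
    }.mkString("\n")

  def main(args: Array[String]): Unit = {
    // args(0): path to the project's .idea/scala_compiler.xml
    val path = Paths.get(args(0))
    val original = new String(Files.readAllBytes(path), StandardCharsets.UTF_8)
    val patched = disable(original)
    if (patched != original) {
      Files.write(path, patched.getBytes(StandardCharsets.UTF_8))
      println(s"Commented out -make:transitive in $path")
    } else {
      println("No active -make:transitive parameter found")
    }
  }
}
```

The helper is idempotent: a line that is already commented out no longer matches `MakeParam`, so running it twice changes nothing.

The profile name `Maven 1` suggests that IDEA generated this file from the project's pom. If the scala-maven-plugin `<args>` in pom.xml still list `-make:transitive`, a Maven reimport will most likely restore the option. The scala-archetype-simple template, for example, includes it. Removing it from the pom as well avoids this, as in the pom under Error 2.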

【Error 2】

【Cause】The dependencies declared in the Scala project's pom.xml were incorrect.
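The original does not say which dependency was wrong. One frequent cause, assumed here only for illustration, is mixing artifacts built for different Scala binary versions. Every `_2.11` suffix in the pom below has to agree with `scala.version` (2.11.8). The hypothetical `ScalaSuffixCheck` helper below is a minimal sketch of that check over a list of artifact IDs. Artifacts without a `_X.Y` suffix, such as `druid`, are ignored.

```scala
// Hypothetical helper: flags artifactIds whose Scala binary suffix (_X.Y)
// does not match the project's scala.version.
object ScalaSuffixCheck {
  // Matches IDs ending in a Scala binary-version suffix such as "_2.11".
  // Scala regex extractors must match the whole string.
  private val Suffix = """.*_(\d+\.\d+)""".r

  /** "2.11.8" -> "2.11" */
  def binaryVersion(scalaVersion: String): String =
    scalaVersion.split('.').take(2).mkString(".")

  /** Returns the suffixed artifactIds that disagree with scalaVersion. */
  def mismatched(scalaVersion: String, artifactIds: Seq[String]): Seq[String] = {
    val expected = binaryVersion(scalaVersion)
    artifactIds.collect { case id @ Suffix(v) if v != expected => id }
  }

  def main(args: Array[String]): Unit = {
    // Illustrative list: the last Spark entry is a deliberately wrong example.
    val ids = Seq("spark-core_2.11", "spark-sql_2.11", "scalatest_2.11", "spark-mllib_2.12", "druid")
    val bad = mismatched("2.11.8", ids)
    if (bad.isEmpty) println("All Scala-suffixed artifacts match")
    else println(s"Suffix mismatch: ${bad.mkString(", ")}")
  }
}
```

Running `main` prints `Suffix mismatch: spark-mllib_2.12`. With the artifact IDs from the pom in this article, which all end in `_2.11`, the result would be empty.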

【Solution】Use the following pom.xml:

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">
  <modelVersion>4.0.0</modelVersion>
  <groupId>org.example</groupId>
  <artifactId>GuPaoStudySparkCoreDemo</artifactId>
  <version>1.0-SNAPSHOT</version>
  <name>${project.artifactId}</name>
  <description>My wonderful scala app</description>
  <inceptionYear>2010</inceptionYear>

  <properties>
    <maven.compiler.source>1.8</maven.compiler.source>
    <maven.compiler.target>1.8</maven.compiler.target>
    <encoding>UTF-8</encoding>
    <scala.version>2.11.8</scala.version>
  </properties>

  <repositories>
    <repository>
      <id>nexus-aliyun</id>
      <url>http://maven.aliyun.com/nexus/content/groups/public</url>
    </repository>
  </repositories>

  <!--
  <repositories>
    <repository>
      <id>scala-tools.org</id>
      <name>Scala-Tools Maven2 Repository</name>
      <url>http://scala-tools.org/repo-releases</url>
    </repository>
  </repositories>
  <pluginRepositories>
    <pluginRepository>
      <id>scala-tools.org</id>
      <name>Scala-Tools Maven2 Repository</name>
      <url>http://scala-tools.org/repo-releases</url>
    </pluginRepository>
  </pluginRepositories>
  -->

  <dependencies>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-core_2.11</artifactId>
      <version>2.4.0</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-streaming_2.11</artifactId>
      <version>2.4.0</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-yarn_2.11</artifactId>
      <version>2.4.0</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-mllib-local_2.11</artifactId>
      <version>2.4.0</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-mllib_2.11</artifactId>
      <version>2.4.0</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-sql_2.11</artifactId>
      <version>2.4.0</version>
    </dependency>
    <dependency>
      <groupId>org.antlr</groupId>
      <artifactId>antlr4-runtime</artifactId>
      <version>4.9.3</version>
    </dependency>
    <dependency>
      <groupId>org.slf4j</groupId>
      <artifactId>slf4j-api</artifactId>
      <version>1.7.5</version>
    </dependency>
    <dependency>
      <groupId>org.scala-lang</groupId>
      <artifactId>scala-library</artifactId>
      <version>${scala.version}</version>
    </dependency>
    <dependency>
      <groupId>org.eclipse.jetty</groupId>
      <artifactId>jetty-util</artifactId>
      <version>9.4.33.v20201020</version>
    </dependency>
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>4.8.1</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.scalatest</groupId>
      <artifactId>scalatest_2.11</artifactId>
      <version>3.0.5</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.springframework</groupId>
      <artifactId>spring-context</artifactId>
      <version>4.3.11.RELEASE</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-hive-thriftserver_2.11</artifactId>
      <version>2.4.0</version>
      <scope>provided</scope>
    </dependency>
    <dependency>
      <groupId>org.mybatis</groupId>
      <artifactId>mybatis</artifactId>
      <version>3.5.1</version>
    </dependency>
    <dependency>
      <groupId>com.redislabs</groupId>
      <artifactId>spark-redis</artifactId>
      <version>2.4.0</version>
    </dependency>
    <dependency>
      <groupId>com.alibaba</groupId>
      <artifactId>druid</artifactId>
      <version>1.1.20</version>
    </dependency>
  </dependencies>

  <build>
    <sourceDirectory>src/main/scala</sourceDirectory>
    <testSourceDirectory>src/test/scala</testSourceDirectory>
    <plugins>
      <plugin>
        <groupId>net.alchim31.maven</groupId>
        <artifactId>scala-maven-plugin</artifactId>
        <version>3.3.1</version>
        <executions>
          <execution>
            <goals>
              <goal>compile</goal>
              <goal>testCompile</goal>
            </goals>
            <configuration>
              <args>
                <arg>-feature</arg>
                <arg>-deprecation</arg>
                <arg>-dependencyfile</arg>
                <arg>${project.build.directory}/.scala_dependencies</arg>
              </args>
            </configuration>
          </execution>
        </executions>
      </plugin>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-surefire-plugin</artifactId>
        <version>2.18.1</version>
        <configuration>
          <useFile>false</useFile>
          <disableXmlReport>true</disableXmlReport>
          <!-- If you have classpath issue like NoDefClassError,... -->
          <!-- useManifestOnlyJar>false</useManifestOnlyJar -->
          <includes>
            <include>**/*Test.*</include>
            <include>**/*Suite.*</include>
          </includes>
        </configuration>
      </plugin>
      <plugin>
        <groupId>org.codehaus.mojo</groupId>
        <artifactId>exec-maven-plugin</artifactId>
        <version>1.5.0</version>
        <executions>
          <execution>
            <id>run-local</id>
            <goals>
              <goal>exec</goal>
            </goals>
            <configuration>
              <executable>spark-submit</executable>
              <arguments>
                <argument>--master</argument>
                <argument>local</argument>
                <argument>${project.build.directory}/${project.artifactId}-${project.version}-uber.jar</argument>
              </arguments>
            </configuration>
          </execution>
          <execution>
            <id>run-yarn</id>
            <goals>
              <goal>exec</goal>
            </goals>
            <configuration>
              <environmentVariables>
                <HADOOP_CONF_DIR>${basedir}/spark-remote/conf</HADOOP_CONF_DIR>
              </environmentVariables>
              <executable>spark-submit</executable>
              <arguments>
                <argument>--master</argument>
                <argument>yarn</argument>
                <argument>${project.build.directory}/${project.artifactId}-${project.version}-uber.jar</argument>
              </arguments>
            </configuration>
          </execution>
        </executions>
      </plugin>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-shade-plugin</artifactId>
        <version>3.1.0</version>
        <executions>
          <execution>
            <phase>package</phase>
            <goals>
              <goal>shade</goal>
            </goals>
            <configuration>
              <filters>
                <filter>
                  <artifact>*:*</artifact>
                  <excludes>
                    <exclude>META-INF/*.SF</exclude>
                    <exclude>META-INF/*.DSA</exclude>
                    <exclude>META-INF/*.RSA</exclude>
                  </excludes>
                </filter>
              </filters>
              <transformers>
                <transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">
                  <manifestEntries>
                    <Main-Class>net.martinprobson.spark.spark_example.SparkTest</Main-Class>
                  </manifestEntries>
                </transformer>
              </transformers>
              <artifactSet>
                <excludes>
                  <exclude>javax.servlet:*</exclude>
                  <exclude>org.apache.hadoop:*</exclude>
                  <exclude>org.apache.maven.plugins:*</exclude>
                  <exclude>org.apache.spark:*</exclude>
                  <exclude>org.apache.avro:*</exclude>
                  <exclude>org.apache.parquet:*</exclude>
                  <exclude>org.scala-lang:*</exclude>
                </excludes>
              </artifactSet>
              <finalName>${project.artifactId}-${project.version}-uber</finalName>
            </configuration>
          </execution>
        </executions>
      </plugin>
    </plugins>
  </build>
</project>
```
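With this pom, `mvn package` makes the shade plugin build `target/GuPaoStudySparkCoreDemo-1.0-SNAPSHOT-uber.jar`. Its manifest `Main-Class` is `net.martinprobson.spark.spark_example.SparkTest`, a name the pom appears to carry over from an example project; change it to your own entry point if needed. The sketch below is a hypothetical minimal class with that name. It serves only as a smoke test that the Spark dependencies resolve and run. It sums 1..n on an RDD and checks the result against the closed-form value n(n+1)/2.

```scala
package net.martinprobson.spark.spark_example

import org.apache.spark.sql.SparkSession

// Hypothetical smoke test matching the Main-Class in the shade configuration.
object SparkTest {
  /** Closed-form sum 1 + 2 + ... + n, used to check the distributed result. */
  def expectedSum(n: Int): Long = n.toLong * (n + 1) / 2

  def main(args: Array[String]): Unit = {
    // No master is hard-coded: spark-submit (--master local / yarn) supplies it.
    val spark = SparkSession.builder().appName("SparkTest").getOrCreate()
    try {
      val n = 1000
      val total = spark.sparkContext.parallelize(1 to n).map(_.toLong).reduce(_ + _)
      println(s"Spark ${spark.version}, Scala ${scala.util.Properties.versionNumberString}: sum = $total")
      require(total == expectedSum(n), s"unexpected sum $total")
    } finally {
      spark.stop()
    }
  }
}
```

Usage sketch:

1. Run `mvn package`.
2. Run `mvn exec:exec@run-local`. The `goal@executionId` syntax needs Maven 3.3.1 or later.

This passes the uber jar to `spark-submit --master local`, so `spark-submit` must be on the `PATH`. Because the master is not set in code, running `main` directly from IDEA will fail unless `-Dspark.master=local[*]` is added to the run configuration's VM options. Spark picks up `spark.*` JVM system properties when it builds its configuration.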