Zookeeper--08---Implementing distributed locks with zk: examples

Posted by 高高for 循环





Implementing distributed locks with zk

1. Types of locks in zk:

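- Read lock: everyone can read. Precondition for taking a read lock: no write lock is ahead of it.

- Write lock: only the holder of the write lock can write. Precondition for taking a write lock: no lock of any kind is ahead of it.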

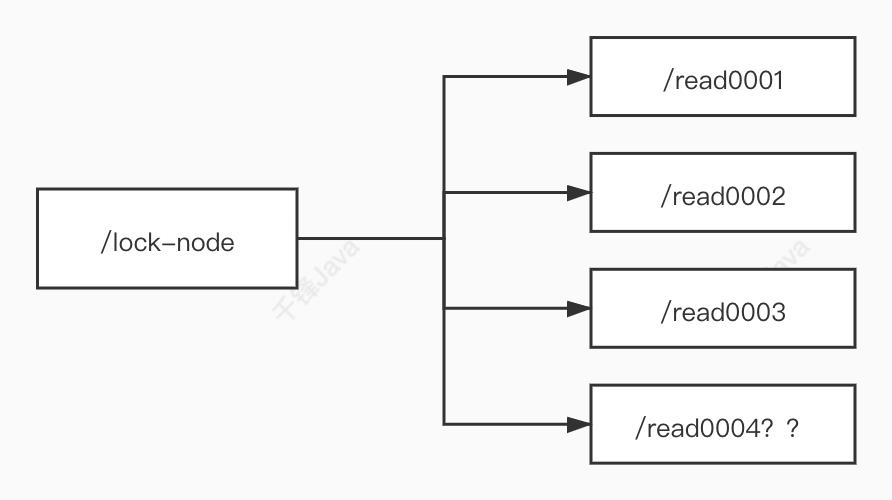

2. How to take a read lock in zk

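1. Create an ephemeral sequential node whose data is "read", marking it as a read lock.

2. Get all nodes in zk whose sequence number is smaller than yours.

3. Check whether any of those smaller nodes is a write lock:

- If there is one, acquisition fails. Set a watch on the smallest node and block. ZooKeeper's watch mechanism notifies the current node when the smallest node changes, and the flow then repeats from step 2.

- If there is none (every node ahead is a read lock), the read lock is acquired.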
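The read-lock rule (no write lock may sit ahead of the new node) can be sketched as a pure function. It assumes the children of the lock node have already been sorted by sequence number and that their data ("read" or "write") has been loaded into a list. The class and method names are hypothetical. On failure it returns the smallest node as the one to watch, matching the naive scheme described here.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: decides a read-lock attempt from the data of the lock znodes.
final class ReadLockCheck {

    /**
     * @param dataInOrder data of every child ("read" or "write"), sorted by sequence number
     * @param self        index of our own node in that list
     * @return -1 if the read lock is acquired, otherwise the index of the node to watch
     */
    static int readLockWatchIndex(List<String> dataInOrder, int self) {
        for (int i = 0; i < self; i++) {
            if ("write".equals(dataInOrder.get(i))) {
                // A write lock is ahead of us: fail and watch the smallest node
                return 0;
            }
        }
        // Only read locks (or nothing) ahead of us: lock acquired
        return -1;
    }

    public static void main(String[] args) {
        // Two readers ahead: the third reader gets the lock at once
        System.out.println(readLockWatchIndex(Arrays.asList("read", "read", "read"), 2));  // -1
        // A writer ahead: the reader must wait and watch node 0
        System.out.println(readLockWatchIndex(Arrays.asList("read", "write", "read"), 2)); // 0
    }
}
```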

3. How to take a write lock in zk

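1. Create an ephemeral sequential node whose data is "write", marking it as a write lock.

2. Get all child nodes in zk.

3. Check whether your node is the smallest one:

- If it is, the write lock is acquired.

- If it is not, some lock is still ahead of you, so acquisition fails. Watch the smallest node, and when it changes, go back to step 2.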
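Here is a sketch of the write-lock check on the child names returned by getChildren. ZooKeeper appends a 10-digit, zero-padded counter to sequential node names. The sketch orders children by that numeric suffix, so it stays correct even if the lock type were encoded as a name prefix (the hypothetical "read-"/"write-" names below) instead of in the node data. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Hypothetical helper: write-lock check that orders children by their sequence suffix.
final class WriteLockCheck {

    // Sequential node names end in a 10-digit, zero-padded counter
    static long sequenceOf(String name) {
        return Long.parseLong(name.substring(name.length() - 10));
    }

    /** @return null if self is the smallest node (write lock acquired), else the smallest node to watch */
    static String writeLockWatch(List<String> children, String self) {
        List<String> sorted = new ArrayList<>(children);
        sorted.sort(Comparator.comparingLong(WriteLockCheck::sequenceOf));
        String smallest = sorted.get(0);
        return smallest.equals(self) ? null : smallest;
    }

    public static void main(String[] args) {
        // Mixed prefixes: a plain string sort would wrongly put "read-..." first
        List<String> children = Arrays.asList("write-0000000004", "read-0000000005", "write-0000000003");
        System.out.println(writeLockWatch(children, "write-0000000003")); // null
        System.out.println(writeLockWatch(children, "write-0000000004")); // write-0000000003
    }
}
```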

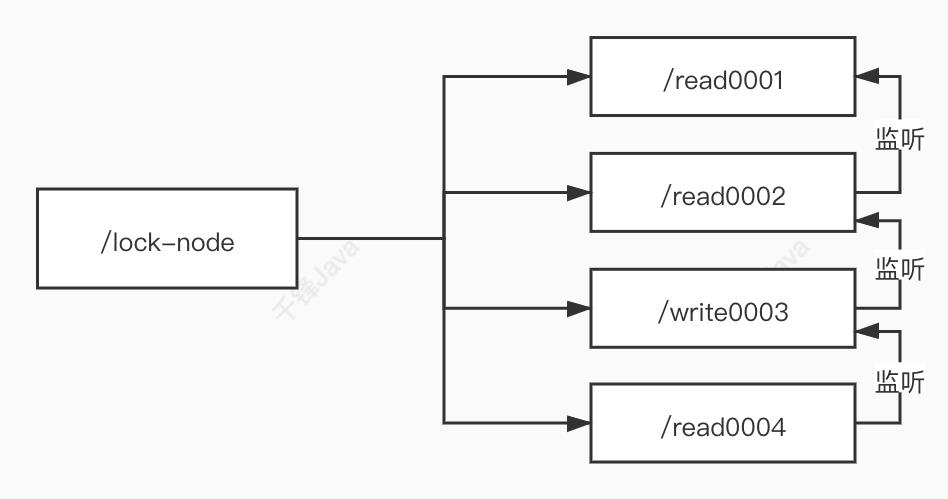

4. Herd effect

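With the locking scheme above, every node change fires the watch events of all the other waiting nodes. That puts heavy pressure on zk and is known as the herd effect.

The fix is chained watching: each node watches only the node it is actually waiting on, instead of every waiter watching the same node.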
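One way to chain the watches follows the shared-lock recipe in the ZooKeeper documentation. A write request watches only the node immediately before it. A read request watches only the closest write node before it. Each deletion then notifies only the waiters directly behind that node. The sketch below only computes the watch target. The list model and names are hypothetical.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: computes the node a lock request should watch under chained watching.
final class ChainedWatch {

    /**
     * @param dataInOrder data of every child ("read" or "write"), sorted by sequence number
     * @param self        index of our own node
     * @return -1 if the lock is acquired, otherwise the index of the single node to watch
     */
    static int watchTarget(List<String> dataInOrder, int self) {
        if ("write".equals(dataInOrder.get(self))) {
            // Write lock: any node ahead blocks us, so watch the immediate predecessor
            return self == 0 ? -1 : self - 1;
        }
        // Read lock: only write locks block us, so watch the closest write ahead
        for (int i = self - 1; i >= 0; i--) {
            if ("write".equals(dataInOrder.get(i))) {
                return i;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        List<String> queue = Arrays.asList("read", "write", "read", "read", "write");
        for (int i = 0; i < queue.size(); i++) {
            System.out.println(i + " " + queue.get(i) + " -> " + watchTarget(queue, i));
        }
    }
}
```

For the queue in main, node 0 holds the lock (-1). The writer at 1 watches 0, the readers at 2 and 3 both watch the writer at 1, and the writer at 4 watches only 3. When a watched node disappears, the client should re-run the check rather than assume it now holds the lock, because the deleted node may simply have given up.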

5. Read-write lock with Curator

```java
import org.apache.curator.framework.CuratorFramework;
import org.apache.curator.framework.recipes.locks.InterProcessLock;
import org.apache.curator.framework.recipes.locks.InterProcessReadWriteLock;
import org.junit.jupiter.api.Test;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;

@SpringBootTest
public class TestReadWriteLock {

    // CuratorFramework bean provided by the Spring configuration
    @Autowired
    private CuratorFramework client;

    @Test
    void testGetReadLock() throws Exception {
        // Read-write lock on the znode /lock1
        InterProcessReadWriteLock interProcessReadWriteLock = new InterProcessReadWriteLock(client, "/lock1");
        // Get the read-lock object
        InterProcessLock interProcessLock = interProcessReadWriteLock.readLock();
        System.out.println("Waiting for the read lock!");
        // Acquire the lock (blocks until it is granted)
        interProcessLock.acquire();
        try {
            for (int i = 1; i <= 100; i++) {
                Thread.sleep(3000);
                System.out.println(i);
            }
        } finally {
            // Release the lock even if the loop throws
            interProcessLock.release();
            System.out.println("Lock released!");
        }
    }

    @Test
    void testGetWriteLock() throws Exception {
        // Read-write lock on the same znode /lock1
        InterProcessReadWriteLock interProcessReadWriteLock = new InterProcessReadWriteLock(client, "/lock1");
        // Get the write-lock object
        InterProcessLock interProcessLock = interProcessReadWriteLock.writeLock();
        System.out.println("Waiting for the write lock!");
        // Acquire the lock (blocks while any read or write lock is held)
        interProcessLock.acquire();
        try {
            for (int i = 1; i <= 100; i++) {
                Thread.sleep(3000);
                System.out.println(i);
            }
        } finally {
            // Release the lock even if the loop throws
            interProcessLock.release();
            System.out.println("Lock released!");
        }
    }
}
```
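For comparison, the JDK's java.util.concurrent.locks.ReentrantReadWriteLock applies the same compatibility rules inside one JVM, but only between threads of that process. This sketch is not part of the original test. While one thread holds a read lock, a second reader gets in and a writer does not. The class name is hypothetical.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical single-JVM demo of read/write lock compatibility.
final class LocalReadWriteDemo {

    /** Returns {secondReaderGotLock, writerGotLock} while the calling thread holds a read lock. */
    static boolean[] run() {
        ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
        AtomicBoolean readerOk = new AtomicBoolean();
        AtomicBoolean writerOk = new AtomicBoolean();

        rw.readLock().lock(); // this thread holds a read lock
        try {
            Thread reader = new Thread(() -> {
                // Another reader: compatible with the held read lock
                if (rw.readLock().tryLock()) {
                    readerOk.set(true);
                    rw.readLock().unlock();
                }
            });
            Thread writer = new Thread(() -> {
                // A writer: refused while another thread holds a read lock
                if (rw.writeLock().tryLock()) {
                    writerOk.set(true);
                    rw.writeLock().unlock();
                }
            });
            reader.start();
            reader.join();
            writer.start();
            writer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            rw.readLock().unlock();
        }
        return new boolean[] {readerOk.get(), writerOk.get()};
    }

    public static void main(String[] args) {
        boolean[] r = run();
        System.out.println("second reader: " + r[0] + ", writer: " + r[1]); // second reader: true, writer: false
    }
}
```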

Distributed lock example

Case analysis

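- For example, when "process 1" uses the resource, it first acquires the lock and holds the resource exclusively, so no other process can access it.

- When "process 1" is done with the resource, it releases the lock so another process can acquire it.

- This lock mechanism ensures that multiple processes in a distributed system access the critical resource in order. A lock used this way in a distributed environment is called a distributed lock.

- On receiving a request, the client creates an ephemeral sequential node under /locks.

- It checks whether its node is the smallest one under /locks. If so, it holds the lock. If not, it watches the node immediately before its own.

- After acquiring the lock and finishing its business logic, it deletes its node to release the lock. The next node is then notified and repeats the previous check.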
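The acquire-or-watch decision can be isolated as a small function. Given the children of /locks and our own node name, it returns null when we hold the lock, and otherwise the one node immediately before ours that we should watch. This is a sketch; the class and method names are hypothetical. Because sequential names share a prefix and end in a zero-padded counter, a plain string sort gives creation order.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical helper for the exclusive lock: which node (if any) should we watch?
final class PredecessorCheck {

    /** @return null if self is the smallest child (lock held), else the child just before self */
    static String predecessor(List<String> children, String self) {
        List<String> sorted = new ArrayList<>(children);
        Collections.sort(sorted); // same prefix + zero-padded suffix: string order = creation order
        int index = sorted.indexOf(self);
        if (index < 0) {
            throw new IllegalStateException("own node missing: " + self);
        }
        return index == 0 ? null : sorted.get(index - 1);
    }

    public static void main(String[] args) {
        List<String> children = Arrays.asList("seq-0000000003", "seq-0000000001", "seq-0000000002");
        System.out.println(predecessor(children, "seq-0000000001")); // null (lock acquired)
        System.out.println(predecessor(children, "seq-0000000003")); // seq-0000000002
    }
}
```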

Dependency:

```xml
<dependency>
    <groupId>org.apache.zookeeper</groupId>
    <artifactId>zookeeper</artifactId>
    <version>3.5.7</version>
</dependency>
```

Distributed lock implementation

- Get a connection

- Lock via zk

- Unlock via zk

```java
import org.apache.zookeeper.*;
import org.apache.zookeeper.data.Stat;

import java.io.IOException;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.CountDownLatch;

public class DistributedLock {

    private final String connectString = "hadoop102:2181,hadoop103:2181,hadoop104:2181";
    private final int sessionTimeout = 2000;
    private final ZooKeeper zk;

    private final CountDownLatch connectLatch = new CountDownLatch(1);
    // Recreated before each wait, so the lock can wait more than once
    private volatile CountDownLatch waitLatch = new CountDownLatch(1);
    // Path of the node we are currently waiting on
    private volatile String waitPath;
    // Full path of the node this client created, e.g. /locks/seq-0000000003
    private String currentMode;

    public DistributedLock() throws IOException, InterruptedException, KeeperException {
        // Get a connection
        zk = new ZooKeeper(connectString, sessionTimeout, new Watcher() {
            @Override
            public void process(WatchedEvent watchedEvent) {
                // Release connectLatch once connected to zk
                if (watchedEvent.getState() == Event.KeeperState.SyncConnected) {
                    connectLatch.countDown();
                }
                // Release waitLatch when the watched predecessor is deleted
                if (watchedEvent.getType() == Event.EventType.NodeDeleted
                        && watchedEvent.getPath().equals(waitPath)) {
                    waitLatch.countDown();
                }
            }
        });
        // Continue only after the connection is established
        connectLatch.await();
        // Create the persistent root node /locks if it does not exist
        Stat stat = zk.exists("/locks", false);
        if (stat == null) {
            zk.create("/locks", "locks".getBytes(), ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT);
        }
    }

    // Lock via zk
    public void zklock() {
        try {
            // Create an ephemeral sequential node
            currentMode = zk.create("/locks/" + "seq-", null, ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.EPHEMERAL_SEQUENTIAL);
            // Wait a moment so the output is easier to follow
            Thread.sleep(10);
            // Node name without the parent path, e.g. seq-0000000003
            String thisNode = currentMode.substring("/locks/".length());
            while (true) {
                List<String> children = zk.getChildren("/locks", false);
                Collections.sort(children);
                int index = children.indexOf(thisNode);
                if (index == -1) {
                    System.out.println("Data error: own node not found");
                    return;
                } else if (index == 0) {
                    // Ours is the smallest node: lock acquired
                    return;
                }
                // Watch only the node immediately before ours
                waitLatch = new CountDownLatch(1);
                waitPath = "/locks/" + children.get(index - 1);
                // exists() sets the watch and reports whether the predecessor is already gone
                if (zk.exists(waitPath, true) != null) {
                    waitLatch.await();
                }
                // Predecessor deleted: loop and re-check our position
            }
        } catch (KeeperException | InterruptedException e) {
            e.printStackTrace();
        }
    }

    // Unlock via zk
    public void unZkLock() {
        try {
            // Delete our node (version -1 matches any version)
            zk.delete(this.currentMode, -1);
        } catch (InterruptedException | KeeperException e) {
            e.printStackTrace();
        }
    }
}
```

Test

```java
import org.apache.zookeeper.KeeperException;

import java.io.IOException;

public class DistributedLockTest {

    public static void main(String[] args) throws InterruptedException, IOException, KeeperException {
        // Two clients, each with its own ZooKeeper session
        final DistributedLock lock1 = new DistributedLock();
        final DistributedLock lock2 = new DistributedLock();

        new Thread(new Runnable() {
            @Override
            public void run() {
                try {
                    lock1.zklock();
                    System.out.println("Thread 1 started and acquired the lock");
                    Thread.sleep(5 * 1000);
                    lock1.unZkLock();
                    System.out.println("Thread 1 released the lock");
                } catch (InterruptedException e) {
                    e.printStackTrace();
                }
            }
        }).start();

        new Thread(new Runnable() {
            @Override
            public void run() {
                try {
                    lock2.zklock();
                    System.out.println("Thread 2 started and acquired the lock");
                    Thread.sleep(5 * 1000);
                    lock2.unZkLock();
                    System.out.println("Thread 2 released the lock");
                } catch (InterruptedException e) {
                    e.printStackTrace();
                }
            }
        }).start();
    }
}
```

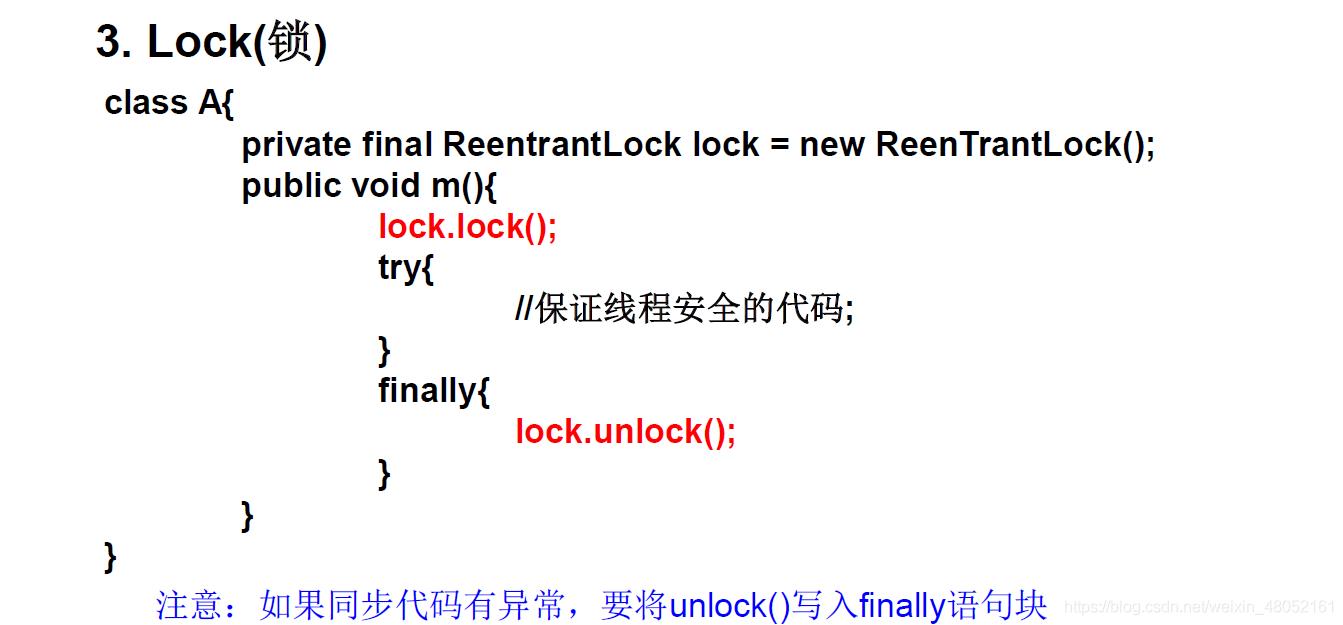

Comparison with ReentrantLock in single-process mode
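Inside a single JVM, the mutual exclusion that DistributedLock provides across processes only needs java.util.concurrent.locks.ReentrantLock. The sketch below uses a hypothetical shared counter as the critical resource and the usual lock / try / finally-unlock pattern. It only works for threads that share the same lock object, which is why separate processes need ZooKeeper instead.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical single-JVM counterpart of the zk lock test.
final class LocalLockDemo {

    private static final ReentrantLock LOCK = new ReentrantLock();
    private static int counter = 0; // hypothetical shared resource, guarded by LOCK

    static void incrementMany(int times) {
        for (int i = 0; i < times; i++) {
            LOCK.lock();
            try {
                counter++; // critical section
            } finally {
                LOCK.unlock();
            }
        }
    }

    static int runTwoThreads(int perThread) {
        counter = 0;
        Thread t1 = new Thread(() -> incrementMany(perThread));
        Thread t2 = new Thread(() -> incrementMany(perThread));
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counter;
    }

    public static void main(String[] args) {
        System.out.println(runTwoThreads(100_000)); // 200000
    }
}
```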

Distributed lock example with the Curator framework

Dependencies

```xml
<dependency>
    <groupId>org.apache.curator</groupId>
    <artifactId>curator-framework</artifactId>
    <version>4.3.0</version>
</dependency>
<dependency>
    <groupId>org.apache.curator</groupId>
    <artifactId>curator-recipes</artifactId>
    <version>4.3.0</version>
</dependency>
<dependency>
    <groupId>org.apache.curator</groupId>
    <artifactId>curator-client</artifactId>
    <version>4.3.0</version>
</dependency>
```

Get a client connection

```java
private static CuratorFramework getCuratorFramework() {
    // Retry with exponential backoff: base sleep 3000 ms, at most 3 retries
    ExponentialBackoffRetry policy = new ExponentialBackoffRetry(3000, 3);
    CuratorFramework client = CuratorFrameworkFactory.builder()
            .connectString("hadoop102:2181,hadoop103:2181,hadoop104:2181")
            .connectionTimeoutMs(2000)
            .sessionTimeoutMs(2000)
            .retryPolicy(policy)
            .build();
    // Start the client
    client.start();
    System.out.println("zookeeper client started");
    return client;
}
```
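The two arguments of ExponentialBackoffRetry are the base sleep time and the maximum number of retries. The sketch below is a simplified model of randomized exponential backoff, written as an assumption about the general idea rather than a copy of Curator's code (check the Curator source for its exact formula). Each retry sleeps the base time multiplied by a random factor whose upper bound roughly doubles per retry. The class and method names are hypothetical.

```java
import java.util.Random;

// Simplified model of randomized exponential backoff (an assumption, not Curator's code).
final class BackoffSketch {

    /**
     * Sleep before retry number retryCount (0-based): baseSleepMs times a random
     * multiplier in [1, 2^(retryCount+1) - 1], so the upper bound roughly doubles per retry.
     */
    static long sleepMs(long baseSleepMs, int retryCount, Random random) {
        int multiplier = Math.max(1, random.nextInt(1 << (retryCount + 1)));
        return baseSleepMs * multiplier;
    }

    public static void main(String[] args) {
        Random random = new Random(42);
        // Same parameters as getCuratorFramework(): base 3000 ms, at most 3 retries
        for (int retry = 0; retry < 3; retry++) {
            System.out.println("retry " + retry + ": sleep " + sleepMs(3000, retry, random) + " ms");
        }
    }
}
```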

Test case

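```java
import org.apache.curator.framework.CuratorFramework;
import org.apache.curator.framework.CuratorFrameworkFactory;
import org.apache.curator.framework.recipes.locks.InterProcessMutex;
import org.apache.curator.retry.ExponentialBackoffRetry;

public class CuratorLockTest {

    public static void main(String[] args) {
        // Create distributed lock 1 (with its own client)
        InterProcessMutex lock1 = new InterProcessMutex(getCuratorFramework(), "/locks");
        // Create distributed lock 2 (with its own client)
        InterProcessMutex lock2 = new InterProcessMutex(getCuratorFramework(), "/locks");

        new Thread(new Runnable() {
            @Override
            public void run() {
                try {
                    lock1.acquire();
                    System.out.println("Thread 1 acquired the lock");
                    // InterProcessMutex is reentrant: the same thread can acquire it again
                    lock1.acquire();
                    System.out.println("Thread 1 acquired the lock again");
                    Thread.sleep(5 * 1000);
                    // Each acquire() needs a matching release()
                    lock1.release();
                    System.out.println("Thread 1 released the lock");
                    lock1.release();
                    System.out.println("Thread 1 released the lock again");
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        }).start();

        new Thread(new Runnable() {
            @Override
            public void run() {
                try {
                    lock2.acquire();
                    System.out.println("Thread 2 acquired the lock");
                    lock2.acquire();
                    System.out.println("Thread 2 acquired the lock again");
                    Thread.sleep(5 * 1000);
                    lock2.release();
                    System.out.println("Thread 2 released the lock");
                    lock2.release();
                    System.out.println("Thread 2 released the lock again");
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        }).start();
    }

    // Place the getCuratorFramework() method shown above here
    // (it uses the CuratorFrameworkFactory and ExponentialBackoffRetry imports).
}
```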
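InterProcessMutex is reentrant. The thread that holds it may call acquire() again, and it must call release() once per acquire() before another client can get the lock, which is why each thread above releases twice. The in-memory sketch below only models that hold-count bookkeeping with a hypothetical owner/count pair. It is not how Curator stores the lock in ZooKeeper.

```java
// Hypothetical in-memory model of reentrant hold counting (not Curator's implementation).
final class ReentrantHoldModel {

    private Thread owner;
    private int holds;

    /** Returns true if the calling thread now holds the lock. */
    synchronized boolean tryAcquire() {
        Thread me = Thread.currentThread();
        if (owner == null) {
            owner = me;
            holds = 1;
            return true;
        }
        if (owner == me) {
            holds++; // re-entry by the owner just bumps the count
            return true;
        }
        return false; // held by another thread
    }

    synchronized void release() {
        if (owner != Thread.currentThread()) {
            throw new IllegalMonitorStateException("not the owner");
        }
        if (--holds == 0) {
            owner = null; // fully released only after the last release()
        }
    }

    synchronized boolean isFree() {
        return owner == null;
    }

    public static void main(String[] args) {
        ReentrantHoldModel lock = new ReentrantHoldModel();
        lock.tryAcquire();
        lock.tryAcquire();
        lock.release();
        System.out.println("free after one release: " + lock.isFree());  // false
        lock.release();
        System.out.println("free after two releases: " + lock.isFree()); // true
    }
}
```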