Help needed: Error: could not open 'D:\JAVA\lib\i386\


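Answer A: I don't know whether you have solved this yet, but I'll answer anyway for anyone else who runs into the same problem.

It's actually simple: your folder name is almost certainly wrong, and that is what causes this error.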

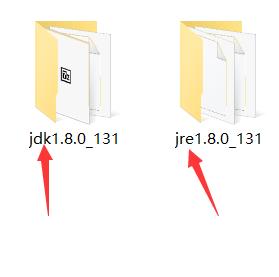

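See the image:

This is the correct name.

When the JDK installation finishes, the installer prompts you to install the JRE as well. At that point you may have changed the JRE folder name to match the JDK folder, or to some other name. The system then cannot find what java.exe needs and reports Error: could not open 'D:\JAVA\lib\i386\jvm.cfg' (meaning the file at that path cannot be opened), even though javac.exe still works.

Feel free to ask again if anything is unclear.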
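To confirm the diagnosis above, one option is to look for jvm.cfg under the JRE folder's lib directory. This is only a rough sketch: the helper name `find_jvm_cfg` is made up for illustration, and you would pass your own install folder (for example the `D:\JAVA` folder from the error message).

```python
from pathlib import Path


def find_jvm_cfg(jre_home):
    """Return every jvm.cfg found under <jre_home>/lib, as a sorted list of paths."""
    lib_dir = Path(jre_home) / "lib"
    if not lib_dir.is_dir():
        # No lib folder at all: the JRE folder is missing or misnamed.
        return []
    # jvm.cfg normally sits in an architecture subfolder such as lib/i386.
    return sorted(lib_dir.rglob("jvm.cfg"))
```

An empty result for the folder that java.exe is looking in suggests the JRE was installed under a different name.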

hiveserver2 connection fails with: Error: Could not open client transport with JDBC Uri: jdbc:hive2://hadoop01:10000:

Connecting to hiveserver2 fails with the following error: Error: Could not open client transport with JDBC Uri: jdbc:hive2://hadoop01:10000: java.net.ConnectException: Connection refused (Connection refused) (state=08S01,code=0)

1. Check whether the hiveserver2 service and HiveMetaStore are running

[root@hadoop01 ~]# ps -ef |grep -i metastore

root 20607 1 0 Mar06 ? 00:01:19 /root/servers/jdk1.8.0/bin/java -Xmx256m -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/root/servers/hadoop-2.8.5/logs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/root/servers/hadoop-2.8.5 -Dhadoop.id.str=root -Dhadoop.root.logger=INFO,console -Djava.library.path=/root/servers/hadoop-2.8.5/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Dproc_metastore -Dlog4j.configurationFile=hive-log4j2.properties -Djava.util.logging.config.file=/root/servers/hive-apache-2.3.6/conf/parquet-logging.properties -Dhadoop.security.logger=INFO,NullAppender org.apache.hadoop.util.RunJar /root/servers/hive-apache-2.3.6/lib/hive-metastore-2.3.6.jar org.apache.hadoop.hive.metastore.HiveMetaStore

root 111660 111580 0 05:40 pts/2 00:00:00 grep --color=auto -i metastore

[root@hadoop01 ~]# ps -ef |grep -i hiveserver2

root 20729 1 0 Mar06 ? 00:06:36 /root/servers/jdk1.8.0/bin/java -Xmx256m -Djava.net.preferIPv4Stack=true -Dhadoop.log.dir=/root/servers/hadoop-2.8.5/logs -Dhadoop.log.file=hadoop.log -Dhadoop.home.dir=/root/servers/hadoop-2.8.5 -Dhadoop.id.str=root -Dhadoop.root.logger=INFO,console -Djava.library.path=/root/servers/hadoop-2.8.5/lib/native -Dhadoop.policy.file=hadoop-policy.xml -Djava.net.preferIPv4Stack=true -Dproc_hiveserver2 -Dlog4j.configurationFile=hive-log4j2.properties -Djava.util.logging.config.file=/root/servers/hive-apache-2.3.6/conf/parquet-logging.properties -Dhadoop.security.logger=INFO,NullAppender org.apache.hadoop.util.RunJar /root/servers/hive-apache-2.3.6/lib/hive-service-2.3.6.jar org.apache.hive.service.server.HiveServer2

root 111762 111580 0 05:40 pts/2 00:00:00 grep --color=auto -i hiveserver2
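A process showing up in ps does not by itself prove that the port is accepting connections yet, so it can help to test the port directly. The sketch below is an assumption-laden helper, not part of Hive: `can_connect` is a made-up name, and the host and port to pass in (hadoop01 and 10000) come from the error message above.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds, False otherwise."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and name-resolution failures.
        return False
```

Calling `can_connect("hadoop01", 10000)` from the machine where beeline runs should return True once hiveserver2 is listening. If it returns False while the process is running, the service may still be starting up, or may be bound to a different host or port.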

2. Check whether Hadoop safe mode is off

[root@hadoop01 ~]# hdfs dfsadmin -safemode get

Safe mode is OFF # this means everything is normal

If the output is: Safe mode is ON, see https://www.cnblogs.com/-xiaoyu-/p/11399287.html for how to handle it

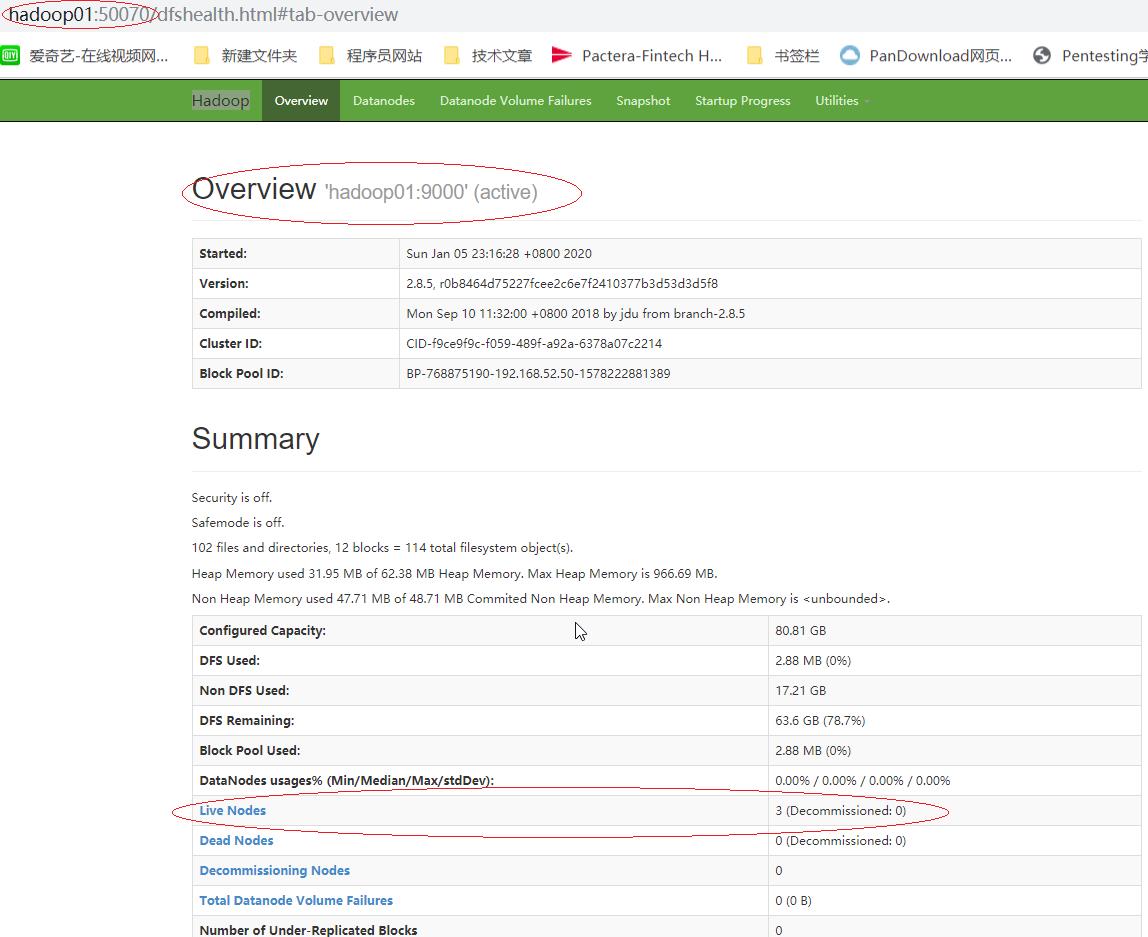
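If you want to script this check, the command's output can be interpreted with a few lines of Python. This is a sketch under the assumption that the output contains the "Safe mode is OFF" / "Safe mode is ON" text shown above; the function names are made up for illustration, and `query_safe_mode` needs the hdfs command on PATH.

```python
import subprocess


def safe_mode_is_off(report):
    """Interpret the text printed by `hdfs dfsadmin -safemode get`."""
    return "safe mode is off" in report.lower()


def query_safe_mode():
    """Run the command from step 2 and return its stdout."""
    result = subprocess.run(
        ["hdfs", "dfsadmin", "-safemode", "get"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout
```

On a cluster node, `safe_mode_is_off(query_safe_mode())` returning False means the NameNode is still in safe mode.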

3. Open http://hadoop01:50070/ in a browser to check whether the Hadoop cluster has started normally

4. Check whether the MySQL service is running

[root@hadoop01 ~]# service mysqld status

Redirecting to /bin/systemctl status mysqld.service

● mysqld.service - MySQL 8.0 database server

Loaded: loaded (/usr/lib/systemd/system/mysqld.service; disabled; vendor preset: disabled)

Active: active (running) since Sun 2020-01-05 23:30:18 CST; 8min ago

Process: 5463 ExecStartPost=/usr/libexec/mysql-check-upgrade (code=exited, status=0/SUCCESS)

Process: 5381 ExecStartPre=/usr/libexec/mysql-prepare-db-dir mysqld.service (code=exited, status=0/SUCCESS)

Process: 5357 ExecStartPre=/usr/libexec/mysql-check-socket (code=exited, status=0/SUCCESS)

Main PID: 5418 (mysqld)

Status: "Server is operational"

Tasks: 46 (limit: 17813)

Memory: 512.5M

CGroup: /system.slice/mysqld.service

└─5418 /usr/libexec/mysqld --basedir=/usr

Jan 05 23:29:55 hadoop01 systemd[1]: Starting MySQL 8.0 database server...

Jan 05 23:30:18 hadoop01 systemd[1]: Started MySQL 8.0 database server.

Active: active (running) since Sun 2020-01-05 23:30:18 CST; 8min ago means MySQL started normally

If it is not running, start it with: service mysqld start

Note:

Be sure to connect to the MySQL server with a MySQL client tool on your local machine and confirm that the connection works (this is only a check).

If you cannot connect, read on:

Configure MySQL so that the root user with its password can log in from any host.

1. Log in to mysql

[root@hadoop102 mysql-libs]# mysql -uroot -p000000

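2. Show the databases

mysql>show databases;

3. Switch to the mysql database

mysql>use mysql;

4. List all tables in the mysql database

mysql>show tables;

5. Show the structure of the user table

mysql>desc user;

6. Query the user table (on MySQL 5.7 and later the Password column no longer exists; select authentication_string instead)

mysql>select User, Host, Password from user;

7. Update the user table and set the Host column to %. The % means any host may connect; otherwise only the local machine can connect

mysql>update user set host='%' where host='localhost';

8. Delete the root user's other host entries

mysql>delete from user where Host='hadoop102';

mysql>delete from user where Host='127.0.0.1';

mysql>delete from user where Host='::1';

9. Flush privileges

mysql>flush privileges; # be sure to flush privileges, otherwise the change may not take effect

10. Quit

mysql>quit;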

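Check whether the driver from mysql-connector-java-5.1.27.tar.gz (that is, the JAR extracted from it) has already been placed under: /root/servers/hive-apache-2.3.6/lib

<value>jdbc:mysql://hadoop01:3306/hive?createDatabaseIfNotExist=true</value>

# Check whether MySQL contains the hive database named above. If the database does not exist in MySQL, see step 7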

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| hive |

| information_schema |

| mysql |

| performance_schema |

| sys |

+--------------------+

5 rows in set (0.01 sec)

The hive after 3306 is the name of the metastore database. You can choose your own name, for example:

<value>jdbc:mysql://hadoop01:3306/metastore?createDatabaseIfNotExist=true</value>
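To see exactly which host, port, and database name such a URL points at, it can be split apart with the standard library. This is just an illustrative sketch; `parse_mysql_jdbc_url` is a made-up helper, and falling back to 3306 when the URL has no port is an assumption based on MySQL's default port.

```python
from urllib.parse import urlsplit


def parse_mysql_jdbc_url(jdbc_url):
    """Split a jdbc:mysql:// URL into (host, port, database)."""
    prefix = "jdbc:"
    if not jdbc_url.startswith(prefix):
        raise ValueError("not a JDBC URL: " + jdbc_url)
    parts = urlsplit(jdbc_url[len(prefix):])
    if parts.scheme != "mysql":
        raise ValueError("not a MySQL JDBC URL: " + jdbc_url)
    database = parts.path.lstrip("/")
    # Assumption: use MySQL's default port when the URL omits one.
    return parts.hostname, parts.port or 3306, database


print(parse_mysql_jdbc_url(
    "jdbc:mysql://hadoop01:3306/hive?createDatabaseIfNotExist=true"
))
# prints ('hadoop01', 3306, 'hive')
```

The third element is the database that should appear in the output of show databases.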

5. Check whether the Hadoop configuration file core-site.xml contains the following settings

<property>

<name>hadoop.proxyuser.root.hosts</name> <!-- root is the current Linux user; mine is root -->

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

If your Linux user has a different name, for example xiaoyu,

then the configuration looks like this:

<property>

<name>hadoop.proxyuser.xiaoyu.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.xiaoyu.groups</name>

<value>*</value>

</property>
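Since only the user name changes between the two variants above, the pair of properties can be generated for any user. This is a small sketch for illustration only: `proxyuser_properties` is a made-up helper, and it writes each property on a single line rather than in the spaced-out layout shown above.

```python
import xml.etree.ElementTree as ET


def proxyuser_properties(user):
    """Build the two hadoop.proxyuser.<user>.* <property> elements as XML text."""
    blocks = []
    for suffix in ("hosts", "groups"):
        prop = ET.Element("property")
        ET.SubElement(prop, "name").text = f"hadoop.proxyuser.{user}.{suffix}"
        # "*" allows every host / every group, as in the configuration above.
        ET.SubElement(prop, "value").text = "*"
        blocks.append(ET.tostring(prop, encoding="unicode"))
    return "\n".join(blocks)


print(proxyuser_properties("xiaoyu"))
```

The printed elements go inside the `<configuration>` element of core-site.xml.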

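6. Other problems

# HDFS file permission problems (this setting turns off HDFS permission checking; dfs.permissions is the older name of dfs.permissions.enabled)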

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

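7. org.apache.hadoop.hive.metastore.HiveMetaException: Failed to get schema version.

If you see this error, initialize the metastore schema:

schematool -dbType mysql -initSchema

8. One last thing: don't download the wrong package

Apache hive-2.3.6 download address: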

http://mirror.bit.edu.cn/apache/hive/hive-2.3.6/

Index of /apache/hive/hive-2.3.6

Icon Name Last modified Size Description

[DIR] Parent Directory -

[ ] apache-hive-2.3.6-bin.tar.gz 23-Aug-2019 02:53 221M (download this one)

[ ] apache-hive-2.3.6-src.tar.gz 23-Aug-2019 02:53 20M

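9. Important

Everything has been checked and it still fails!

Use jps to list the processes running on every machine and stop them all, then reboot the machines, and then:

Start zookeeper (if you use it)

Start the hadoop cluster

Start the mysql service

Start hiveserver2

Connect with beeline

The configuration files follow. They are for reference only; go by your own actual configuration.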

hive-site.xml

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://hadoop01:3306/hive?createDatabaseIfNotExist=true</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>12345678</value>

</property>

<property>

<name>hive.cli.print.current.db</name>

<value>true</value>

</property>

<property>

<name>hive.cli.print.header</name>

<value>true</value>

</property>

<property>

<name>hive.server2.thrift.bind.host</name>

<value>hadoop01</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

<property>

<name>datanucleus.schema.autoCreateAll</name>

<value>true</value>

</property>

<!--

<property>

<name>hive.metastore.uris</name>

<value>thrift://node03.hadoop.com:9083</value>

</property>

-->

</configuration>
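A quick way to double-check a file like this is to load it and confirm that the four connection settings are present and non-empty. The sketch below makes some assumptions: `load_properties` and `missing_keys` are made-up helpers, the `SAMPLE` text is a shortened stand-in for your real hive-site.xml, and the required-key list is simply the four javax.jdo.option.* names used above.

```python
import xml.etree.ElementTree as ET

# Shortened stand-in for hive-site.xml; read your real file instead.
SAMPLE = """<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://hadoop01:3306/hive?createDatabaseIfNotExist=true</value>
  </property>
  <property>
    <name>hive.server2.thrift.bind.host</name>
    <value>hadoop01</value>
  </property>
</configuration>"""

REQUIRED = [
    "javax.jdo.option.ConnectionURL",
    "javax.jdo.option.ConnectionDriverName",
    "javax.jdo.option.ConnectionUserName",
    "javax.jdo.option.ConnectionPassword",
]


def load_properties(xml_text):
    """Return a dict of name -> value for every <property> in the file."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        if name is not None:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def missing_keys(props, required):
    """List the required keys that are absent or empty."""
    return [key for key in required if not props.get(key)]


print(missing_keys(load_properties(SAMPLE), REQUIRED))
```

With the shortened sample, the last three keys are reported as missing; with a complete file the list should be empty.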

core-site.xml

<configuration>

<!-- Address of the NameNode in HDFS -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop01:9000</value>

</property>

<!-- Directory where Hadoop stores files generated at runtime -->

<property>

<name>hadoop.tmp.dir</name>

<value>/root/servers/hadoop-2.8.5/data/tmp</value>

</property>

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

</configuration>

hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<!-- Host of the Hadoop secondary NameNode: the third machine -->

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>hadoop03:50090</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

</configuration>

mapred-site.xml

<configuration>

<!-- Run MapReduce on YARN -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<!-- Job history server address: the third machine -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>hadoop03:10020</value>

</property>

<!-- Job history server web UI address -->

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>hadoop03:19888</value>

</property>

</configuration>

yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<!-- How reducers fetch data -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- Address of the YARN ResourceManager: the second machine -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>hadoop02</value>

</property>

<!-- Enable log aggregation -->

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<!-- Keep logs for 7 days -->

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>604800</value>

</property>

</configuration>

Original source: https://www.cnblogs.com/-xiaoyu-/p/12158984.html
